Development and Evaluation of a Multiaxial Modular Ground Robot for Estimating Soybean Phenotypic Traits Using an RGB-Depth Sensor

Abstract

1. Introduction

- Modularity: Most ground robots developed for agricultural research have restricted functionality due to their chassis designs [16]. To solve this problem and enable operation across different agricultural applications and a wide range of crops, the robot's chassis needed easily adjustable dimensions and replaceable parts to ensure modularity.

- Payload capacity: To facilitate the incorporation of various sensors, manipulators, and tanks required for precision agriculture applications, including phenotyping, spot spraying, and harvesting, a significant payload capacity was necessary.

- Environmental considerations: Gonzalez-de-Santos et al. [20] recommended the use of lighter vehicles on the farm to reduce the effect of soil compaction on soil microorganisms. To this end, the robot’s chassis material needed to be lightweight, sturdy, and rigid.

- Material cost: Recent surveys highlighted economic and financial issues as a major barrier to the adoption of ground robot technology by farmers [21,22]. To promote adoption while ensuring cost-effectiveness and efficiency, materials and components were selected to keep cost low without compromising performance.

- Safety: Ensuring the safety of both humans and crops on the farm is crucial for the successful integration of ground robots. To achieve this, safety features had to be built into the vehicle's navigation algorithm.

2. Materials and Methods

2.1. Phenotyping System Overview

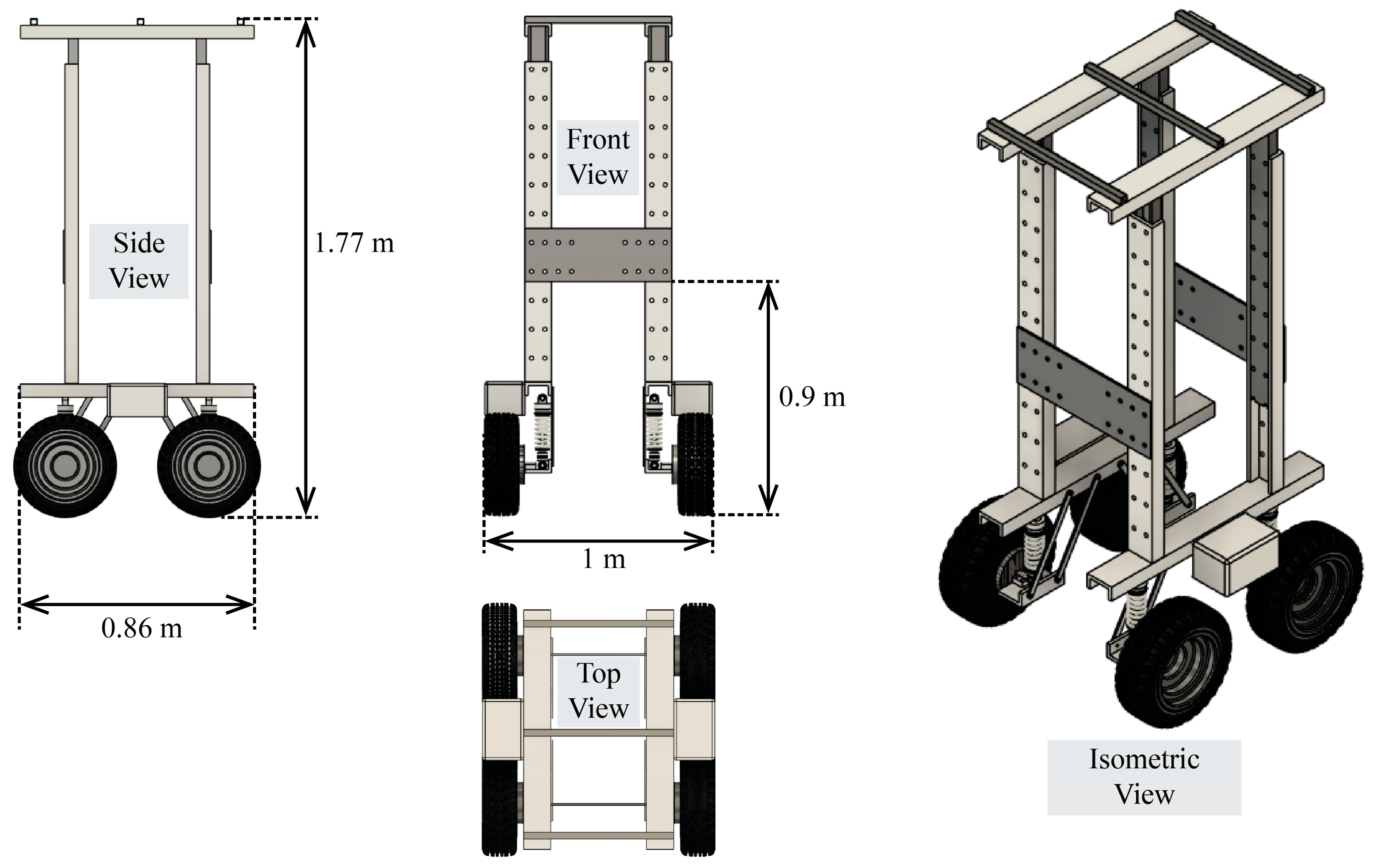

2.2. Platform Design

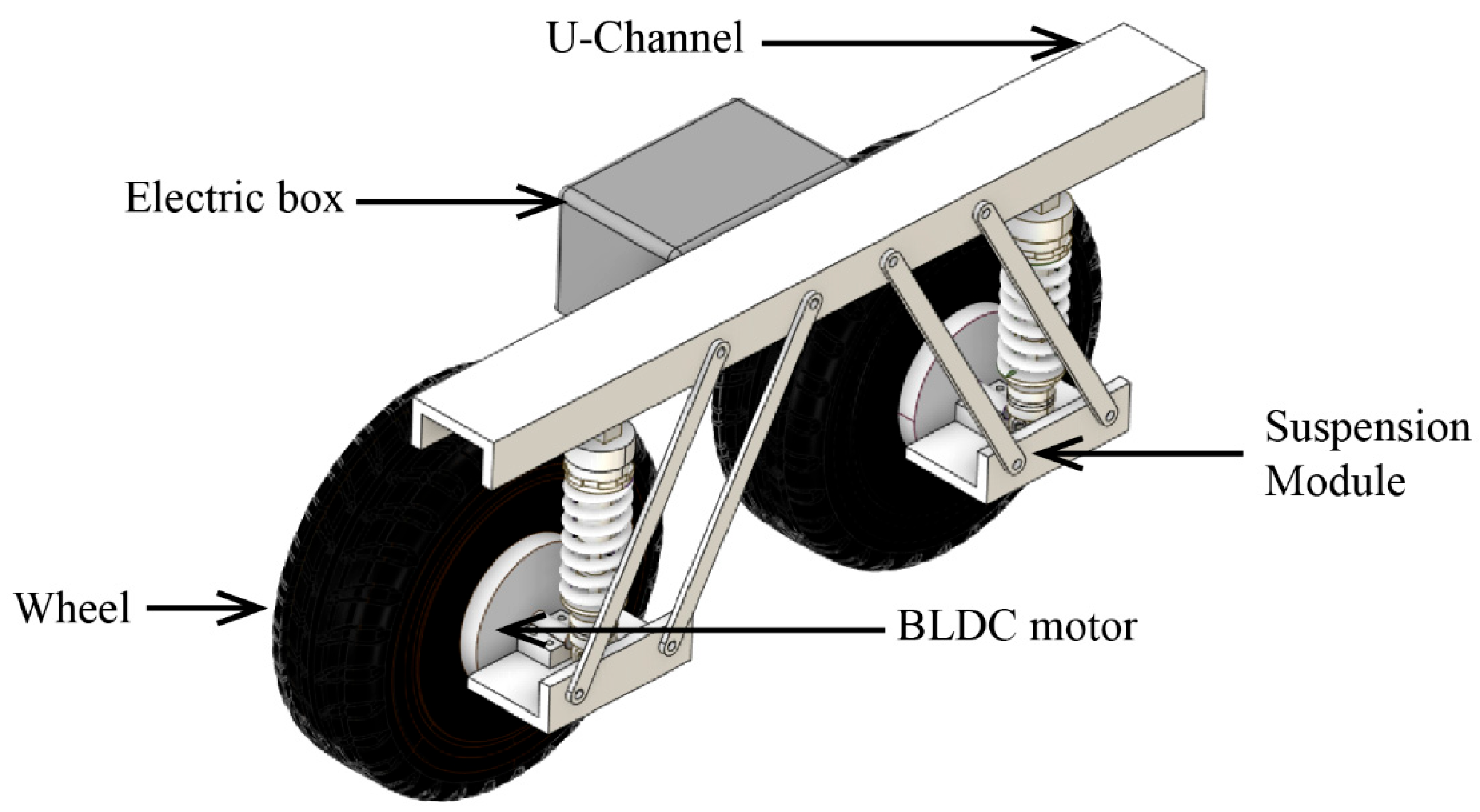

2.2.1. Independent Suspension Module

2.2.2. Drivetrain

2.3. Evaluation of the ModagRobot in Soybean Phenotyping

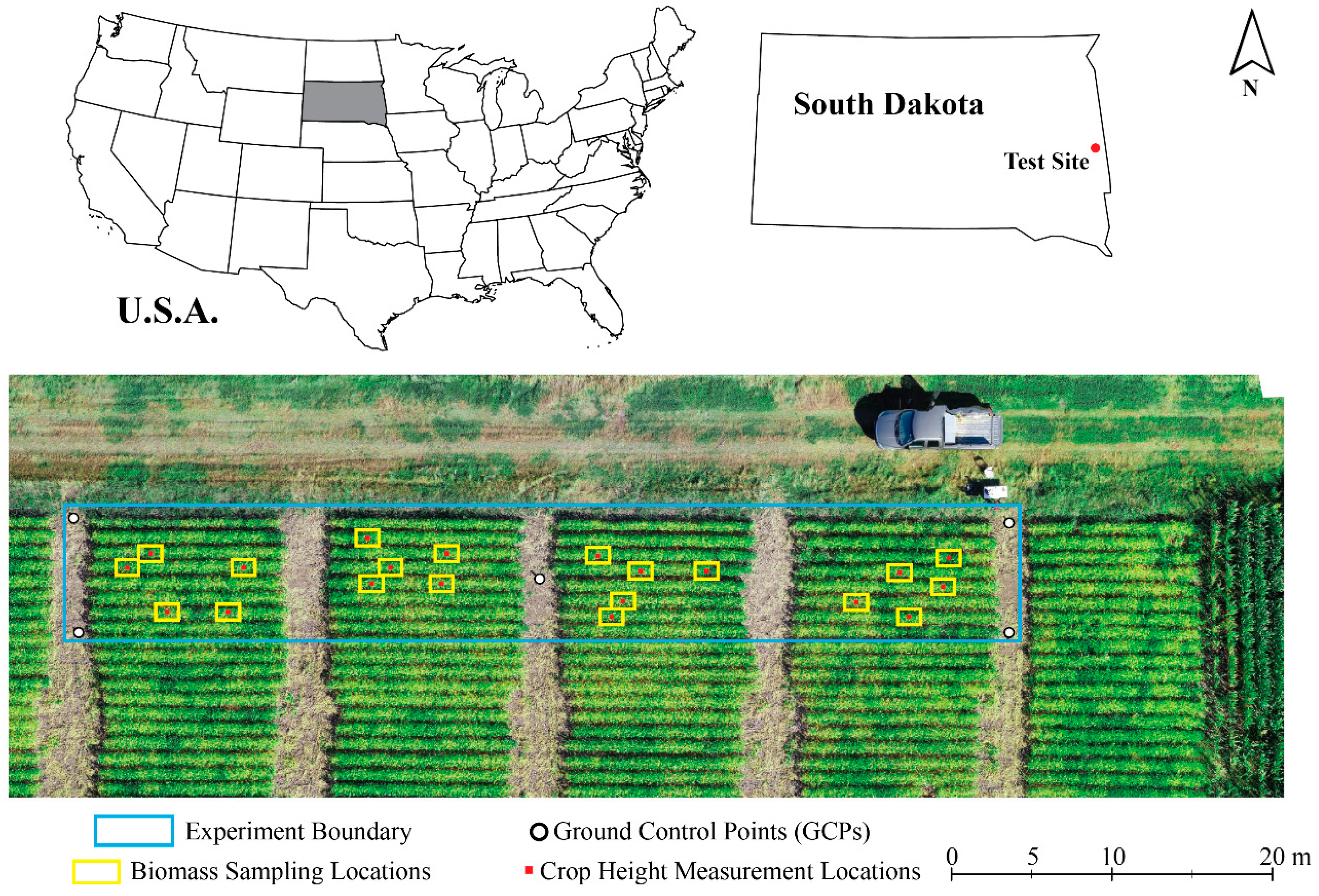

2.3.1. Study Area and Data

Test Site and Experimental Setup

Ground Truth Data Acquisition

ModagRobot Data Acquisition

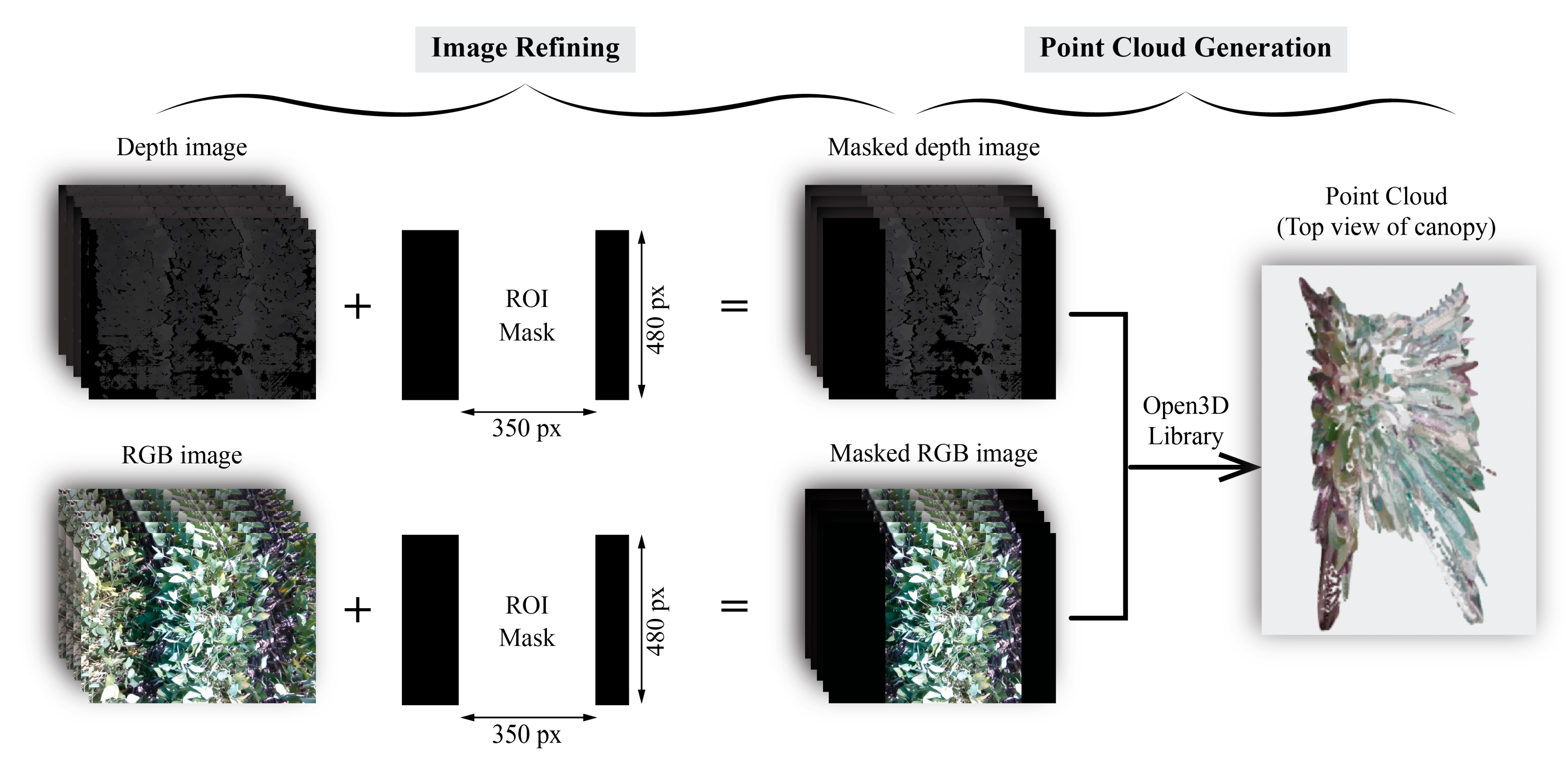

Data Preprocessing and Point Cloud Generation

2.3.2. Phenotypic Trait Extraction

Canopy Height Extraction

Canopy Ground Coverage Area

Soybean Aboveground Biomass Estimation

2.3.3. Model Evaluation

3. Results and Discussion

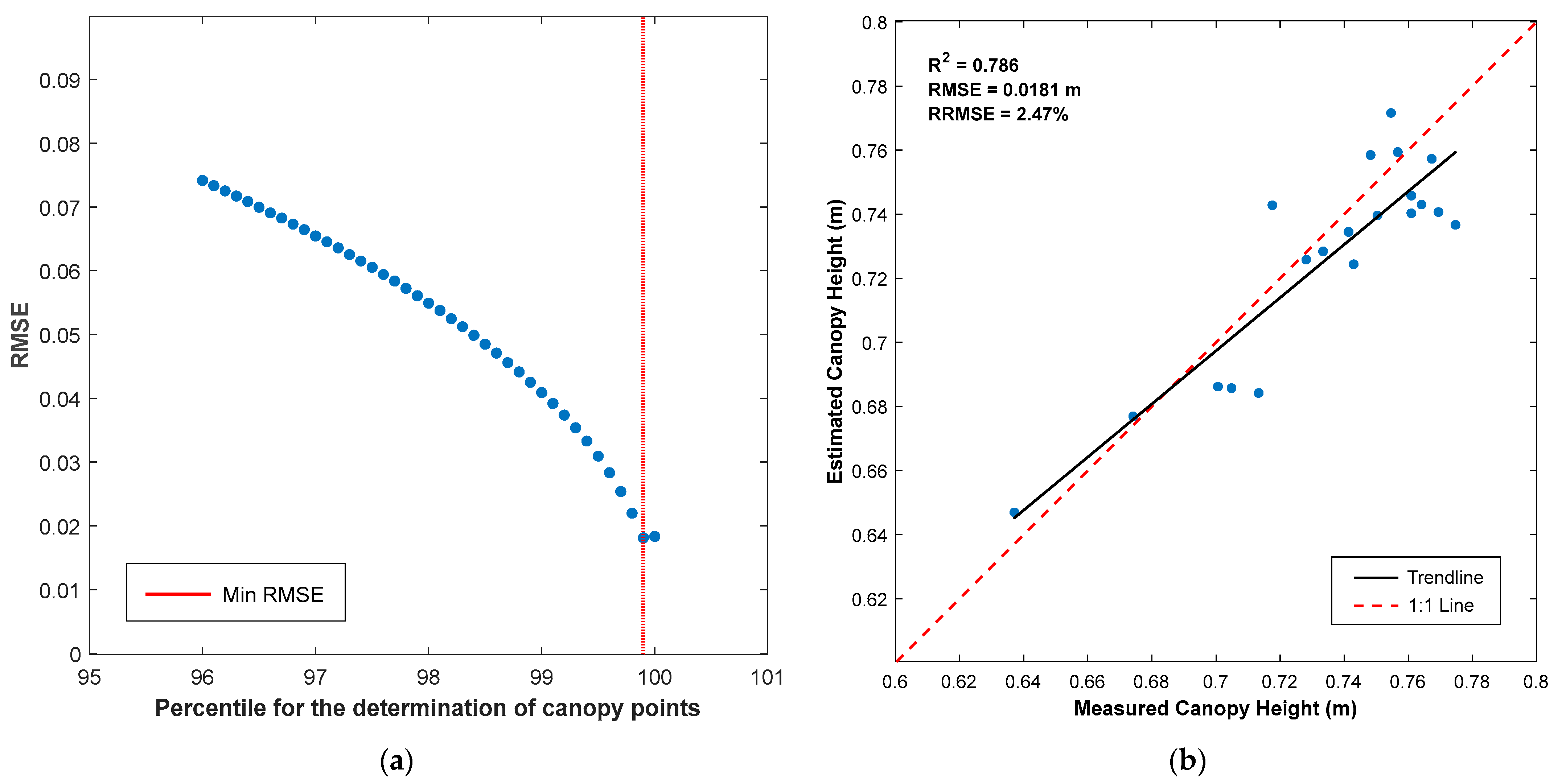

3.1. Canopy Height

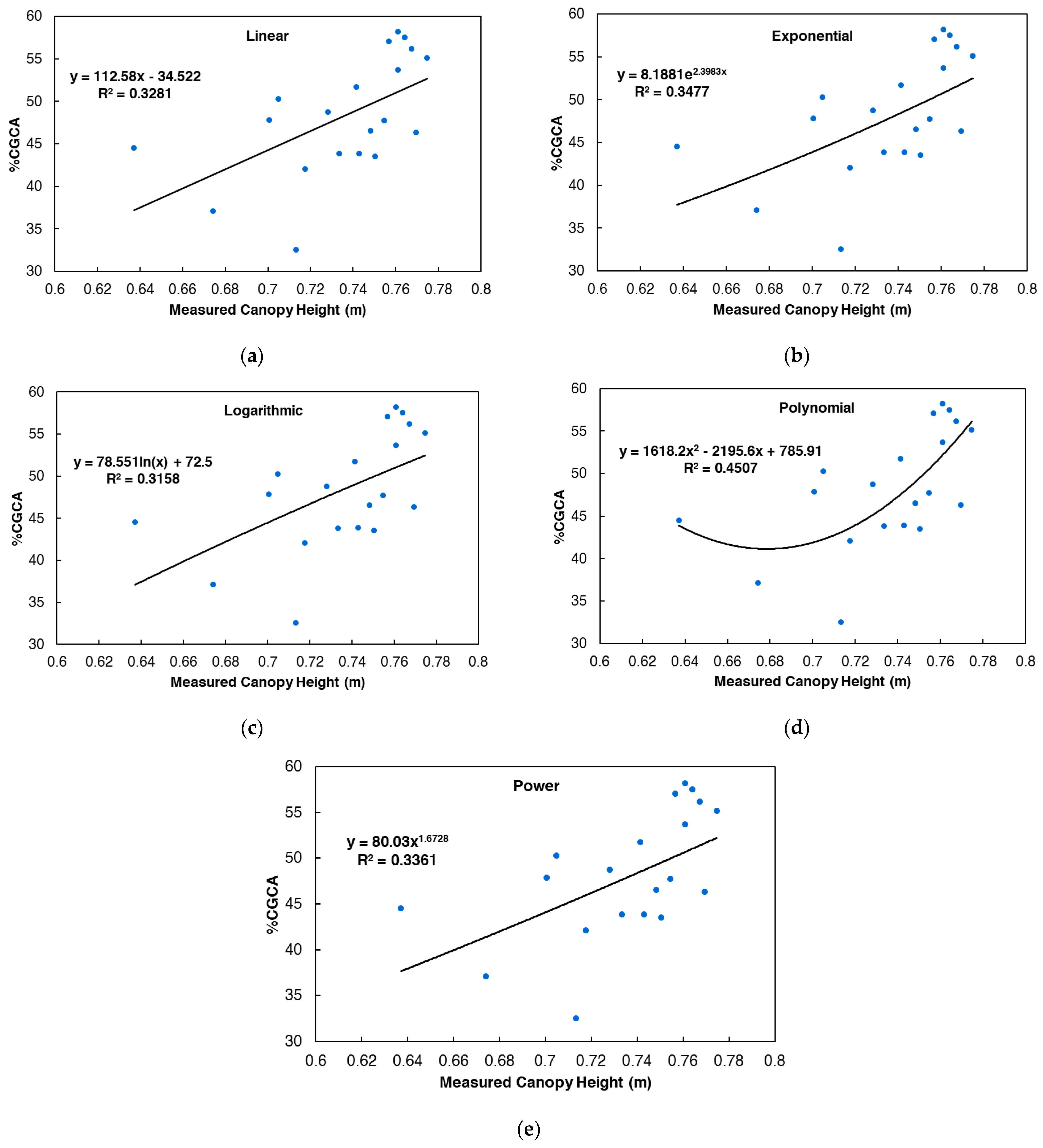

3.2. Relationship Between Canopy Ground Coverage Area and Canopy Height

3.3. Aboveground Biomass Estimation

3.3.1. Optimization of Correction Factor k for Biomass Estimation Using 3D Profile Index

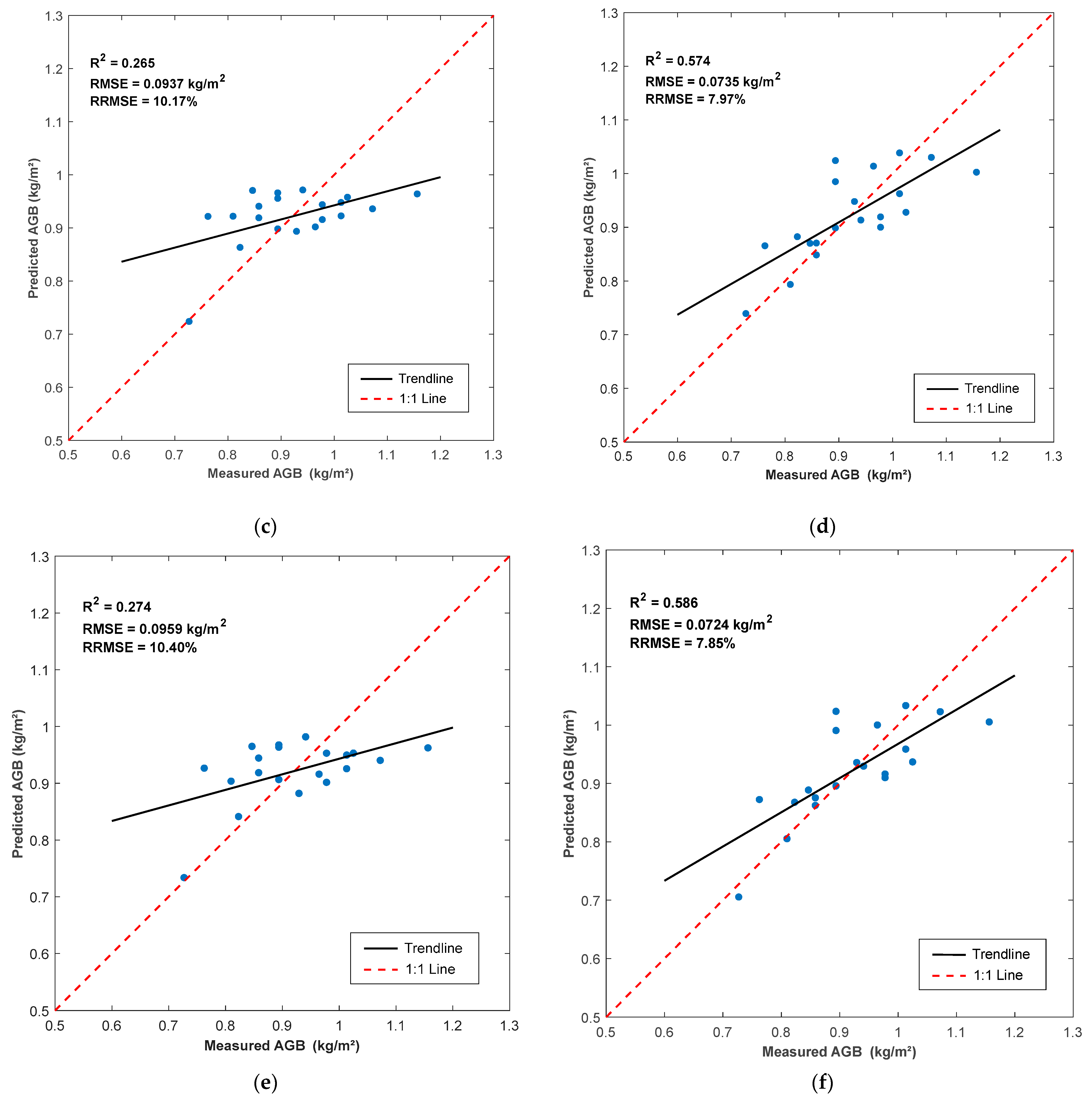

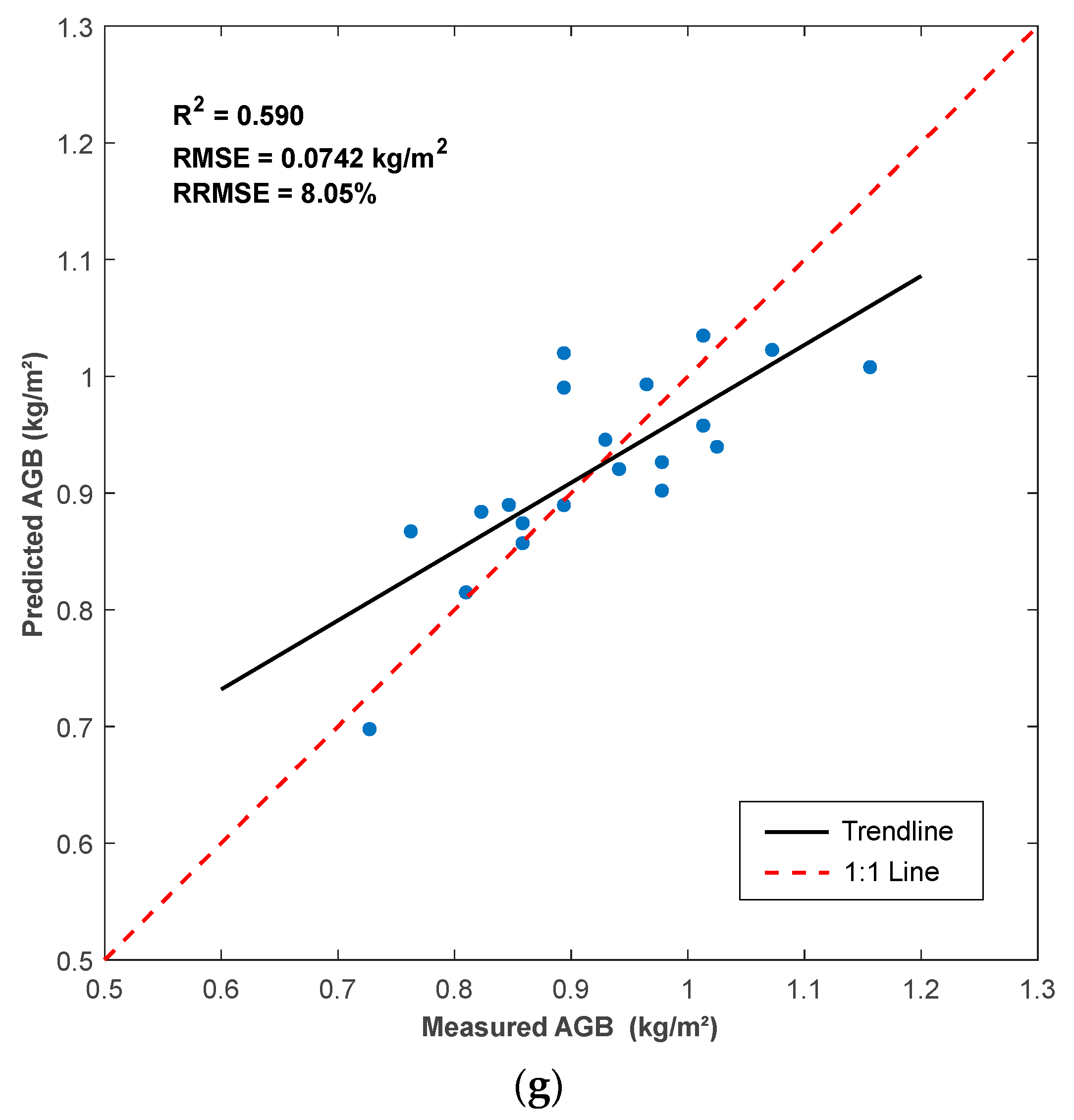

3.3.2. Aboveground Biomass Estimation Using Extracted Phenotypic Traits

3.4. Limitations

3.5. Practical Applications

4. Conclusions

Future Studies

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. USDA. Benefits and Evolution of Precision Agriculture; USDA: Washington, DC, USA, 2020. Available online: https://www.ars.usda.gov/oc/utm/benefits-and-evolution-of-precision-agriculture/ (accessed on 5 March 2025).

2. Javaid, M.; Haleem, A.; Singh, R.P.; Suman, R. Enhancing Smart Farming through the Applications of Agriculture 4.0 Technologies. Int. J. Intell. Netw. 2022, 3, 150–164.

3. Cobb, J.N.; DeClerck, G.; Greenberg, A.; Clark, R.; McCouch, S. Next-Generation Phenotyping: Requirements and Strategies for Enhancing Our Understanding of Genotype-Phenotype Relationships and Its Relevance to Crop Improvement. In Theoretical and Applied Genetics; Springer: Berlin/Heidelberg, Germany, 2013; pp. 867–887.

4. Awika, H.O.; Bedre, R.; Yeom, J.; Marconi, T.G.; Enciso, J.; Mandadi, K.K.; Jung, J.; Avila, C.A. Developing Growth-Associated Molecular Markers Via High-Throughput Phenotyping in Spinach. Plant Genome 2019, 12, 190027.

5. Xiao, Q.; Bai, X.; Zhang, C.; He, Y. Advanced High-Throughput Plant Phenotyping Techniques for Genome-Wide Association Studies: A Review. J. Adv. Res. 2022, 35, 215–230.

6. Chen, D.; Neumann, K.; Friedel, S.; Kilian, B.; Chen, M.; Altmann, T.; Klukas, C. Dissecting the Phenotypic Components of Crop Plant Growth and Drought Responses Based on High-Throughput Image Analysis. Plant Cell 2014, 26, 4636–4655.

7. Liang, Y.; Urano, D.; Liao, K.-L.; Hedrick, T.L.; Gao, Y.; Jones, A.M. A Nondestructive Method to Estimate the Chlorophyll Content of Arabidopsis Seedlings. Plant Methods 2017, 13, 26.

8. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.W.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation Index Weighted Canopy Volume Model (CVMVI) for Soybean Biomass Estimation from Unmanned Aerial System-Based RGB Imagery. ISPRS J. Photogramm. Remote Sens. 2019, 151, 27–41.

9. Jimenez-Berni, J.A.; Deery, D.M.; Rozas-Larraondo, P.; Condon, A.T.G.; Rebetzke, G.J.; James, R.A.; Bovill, W.D.; Furbank, R.T.; Sirault, X.R.R. High Throughput Determination of Plant Height, Ground Cover, and above-Ground Biomass in Wheat with LiDAR. Front. Plant Sci. 2018, 9, 237.

10. Qingchun, F.; Wang, X.; Wang, G.; Li, Z. Design and Test of Tomatoes Harvesting Robot. In Proceedings of the 2015 IEEE International Conference on Information and Automation, Lijiang, China, 8–10 August 2015; IEEE: Piscataway, NJ, USA, 2015.

11. Mueller-Sim, T.; Jenkins, M.; Abel, J.; Kantor, G. The Robotanist: A Ground-Based Agricultural Robot for High-Throughput Crop Phenotyping. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 3634–3639.

12. Young, S.N.; Kayacan, E.; Peschel, J.M. Design and Field Evaluation of a Ground Robot for High-Throughput Phenotyping of Energy Sorghum. Precis. Agric. 2019, 20, 697–722.

13. Bangert, W.; Kielhorn, A.; Rahe, F.; Albert, A.; Biber, P.; Grzonka, S.; Hänsel, M.; Haug, S.; Michaels, A.; Mentrup, D.; et al. Field-Robot-Based Agriculture: “RemoteFarming.1” and “BoniRob-Apps”. VDI-Berichte 2013, 2193, 439–446.

14. Bawden, O.; Kulk, J.; Russell, R.; McCool, C.; English, A.; Dayoub, F.; Lehnert, C.; Perez, T. Robot for Weed Species Plant-specific Management. J. Field Robot. 2017, 34, 1179–1199.

15. Nielsen, S.H.; Jensen, K.; Bøgild, A.; Jørgensen, O.J.; Jacobsen, N.J.; Jaeger, C.; Lund, D.; Jørgensen, R.N. A Low Cost, Modular Robotics Tool Carrier for Precision Agriculture Research. In Proceedings of the 11th International Conference on Precision Agriculture, Indianapolis, IN, USA, 15–18 July 2012; International Society of Precision Agriculture: Monticello, IL, USA, 2012; Volume 16.

16. Xu, R.; Li, C. A Modular Agricultural Robotic System (MARS) for Precision Farming: Concept and Implementation. J. Field Robot. 2022, 39, 387–409.

17. Murman, J.N. Flex-Ro: A Robotic High Throughput Field Phenotyping System. Master’s Thesis, University of Nebraska-Lincoln, Lincoln, NE, USA, 2019. Available online: https://digitalcommons.unl.edu/biosysengdiss/99 (accessed on 5 March 2025).

18. Grimstad, L.; From, P.J. The Thorvald II Agricultural Robotic System. Robotics 2017, 6, 24.

19. Guri, D.; Lee, M.; Kroemer, O.; Kantor, G. Hefty: A Modular Reconfigurable Robot for Advancing Robot Manipulation in Agriculture. arXiv 2024, arXiv:2402.18710.

20. Gonzalez-de-Santos, P.; Fernandez, R.; Sepúlveda, D.; Navas, E.; Armada, M. Unmanned Ground Vehicles for Smart Farms. In Agronomy Climate Change & Food Security; IntechOpen: London, UK, 2020.

21. Dibbern, T.; Romani, L.A.S.; Massruhá, S.M.F.S. Main Drivers and Barriers to the Adoption of Digital Agriculture Technologies. Smart Agric. Technol. 2024, 8, 100459.

22. Schimmelpfennig, D. Farm Profits and Adoption of Precision Agriculture; United States Department of Agriculture: Washington, DC, USA, 2016. Available online: www.ers.usda.gov/sites/default/files/_laserfiche/publications/80326/ERR-217.pdf?v=88942 (accessed on 5 March 2025).

23. Cai, S.; Gou, W.; Wen, W.; Lu, X.; Fan, J.; Guo, X. Design and Development of a Low-Cost UGV 3D Phenotyping Platform with Integrated LiDAR and Electric Slide Rail. Plants 2023, 12, 483.

24. Song, P.; Li, Z.; Yang, M.; Shao, Y.; Pu, Z.; Yang, W.; Zhai, R. Dynamic Detection of Three-Dimensional Crop Phenotypes Based on a Consumer-Grade RGB-D Camera. Front. Plant Sci. 2023, 14, 1097725.

25. Kross, A.; McNairn, H.; Lapen, D.; Sunohara, M.; Champagne, C. Assessment of RapidEye Vegetation Indices for Estimation of Leaf Area Index and Biomass in Corn and Soybean Crops. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 235–248.

26. Mandal, D.; Kumar, V.; McNairn, H.; Bhattacharya, A.; Rao, Y.S. Joint Estimation of Plant Area Index (PAI) and Wet Biomass in Wheat and Soybean from C-Band Polarimetric SAR Data. Int. J. Appl. Earth Obs. Geoinf. 2019, 79, 24–34.

27. Okada, M.; Barras, C.; Toda, Y.; Hamazaki, K.; Yamasaki, Y.; Takahashi, H.; Takanashi, H.; Tsuda, M.; Hirai, Y.; Tsujimoto, H.; et al. High-Throughput Phenotyping of Soybean Biomass: Conventional Trait Estimation and Novel Latent Feature Extraction Using UAV Remote Sensing and Deep Learning Models. Plant Phenomics 2024, 6, 0244.

28. Kemeshi, J.; Gummi, S.R.; Chang, Y. R2B2 Project: Design and Construction of a Low-Cost and Efficient Semi-Autonomous UGV for Row Crop Monitoring. In Proceedings of the 16th International Conference on Precision Agriculture, Manhattan, KS, USA, 21–24 July 2024; International Society of Precision Agriculture: Manhattan, KS, USA, 2024.

29. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color Indices for Weed Identification Under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.

30. Nielsen, D.C.; Miceli-Garcia, J.J.; Lyon, D.J. Canopy Cover and Leaf Area Index Relationships for Wheat, Triticale, and Corn. Agron. J. 2012, 104, 1569–1573.

31. Parker, G.G. Tamm Review: Leaf Area Index (LAI) Is Both a Determinant and a Consequence of Important Processes in Vegetation Canopies. For. Ecol. Manag. 2020, 477, 118496.

32. Yuan, Y.; Wang, X.; Yin, F.; Zhan, J. Examination of the Quantitative Relationship between Vegetation Canopy Height and LAI. Adv. Meteorol. 2013, 2013, 964323.

33. Ma, X.; Wei, B.; Guan, H.; Yu, S. A Method of Calculating Phenotypic Traits for Soybean Canopies Based on Three-Dimensional Point Cloud. Ecol. Inf. 2022, 68, 101524.

34. Wang, C.; Nie, S.; Xi, X.; Luo, S.; Sun, X. Estimating the Biomass of Maize with Hyperspectral and LiDAR Data. Remote Sens. 2017, 9, 11.

| Vehicle Specifications | Value | Unit |
|---|---|---|
| Vehicle mass | 64 | kg |
| Payload capacity | 60 | kg |
| Rated speed | 2 | m/s |
| Maximum speed | 4 | m/s |
| Width | 0.584–1 | m |
| Length | 0.86 | m |
| Ground clearance | 0.9–1.77 | m |
| Height | 1.77–2.38 | m |
| Operating time | 8 | h |
| Charge time | 4.25 | h |
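The specification table supports a couple of back-of-the-envelope figures: the payload capacity is about 0.94 of the robot's own mass (60/64), and driving at the 2 m/s rated speed for the full 8 h operating time would cover at most 57.6 km. The minimal Python sketch below reproduces these numbers; the constant and function names are hypothetical, and the distance is an idealized upper bound that assumes continuous driving at rated speed, which a real phenotyping run (with turns and stops for data capture) will generally not achieve.

```python
# Figures transcribed from the vehicle specification table (SI units).
VEHICLE_MASS_KG = 64.0
PAYLOAD_CAPACITY_KG = 60.0
RATED_SPEED_M_S = 2.0
OPERATING_TIME_H = 8.0


def payload_to_mass_ratio(payload_kg: float, mass_kg: float) -> float:
    """Payload capacity expressed as a fraction of the robot's own mass."""
    return payload_kg / mass_kg


def max_distance_per_charge_km(speed_m_s: float, operating_h: float) -> float:
    """Idealized upper bound on distance covered on one charge.

    Assumes (hypothetically) continuous driving at a constant speed for the
    whole operating time; turns, stops, and terrain losses are ignored.
    """
    seconds = operating_h * 3600.0
    return speed_m_s * seconds / 1000.0


if __name__ == "__main__":
    ratio = payload_to_mass_ratio(PAYLOAD_CAPACITY_KG, VEHICLE_MASS_KG)
    distance = max_distance_per_charge_km(RATED_SPEED_M_S, OPERATING_TIME_H)
    print(f"Payload-to-mass ratio: {ratio:.3f}")
    print(f"Upper-bound distance per charge: {distance:.1f} km")
```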

| Material | Quantity | Unit Price (USD) | Total Price (USD) |
|---|---|---|---|
| DC hub motor | 4 | 44 | 176 |
| Wheel | 4 | 73 | 292 |
| Motor controller | 4 | 19 | 76 |
| Microcontrollers | 2 | 25 | 50 |
| Bluetooth module | 2 | 11 | 22 |
| Batteries | 2 | 45 | 90 |
| Shock absorber | 4 | 40 | 160 |
| Frame (aluminum) | N/A | N/A | 400 |
| Electric box (IP65) | 2 | 13 | 26 |
| Total cost | | | 1292 |
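As a consistency check on the cost table, each line total can be recomputed as quantity × unit price and summed. The minimal Python sketch below does this; the names `BILL_OF_MATERIALS`, `line_totals`, and `total_cost` are hypothetical, and the aluminum frame, whose quantity is listed as N/A, is entered as a single lump-sum item of USD 400.

```python
# Bill of materials transcribed from the cost table (prices in USD).
# Each entry is (component, quantity, unit price). The aluminum frame has no
# per-unit quantity in the table, so it is entered as one lump-sum item.
BILL_OF_MATERIALS = [
    ("DC hub motor", 4, 44),
    ("Wheel", 4, 73),
    ("Motor controller", 4, 19),
    ("Microcontrollers", 2, 25),
    ("Bluetooth module", 2, 11),
    ("Batteries", 2, 45),
    ("Shock absorber", 4, 40),
    ("Frame (aluminum)", 1, 400),  # lump sum; quantity listed as N/A
    ("Electric box (IP65)", 2, 13),
]


def line_totals(items):
    """Return {component: quantity * unit_price} for every row."""
    return {name: qty * price for name, qty, price in items}


def total_cost(items):
    """Sum all line totals to obtain the platform cost."""
    return sum(line_totals(items).values())


if __name__ == "__main__":
    for name, subtotal in line_totals(BILL_OF_MATERIALS).items():
        print(f"{name:<22} {subtotal:>6}")
    print(f"{'Total cost':<22} {total_cost(BILL_OF_MATERIALS):>6}")
```

Recomputing the rows this way reproduces the USD 1292 total and keeps the breakdown easy to update if a component is swapped for a different part.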

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kemeshi, J.; Chang, Y.; Yadav, P.K.; Maimaitijiang, M.; Reicks, G. Development and Evaluation of a Multiaxial Modular Ground Robot for Estimating Soybean Phenotypic Traits Using an RGB-Depth Sensor. AgriEngineering 2025, 7, 76. https://doi.org/10.3390/agriengineering7030076

Kemeshi J, Chang Y, Yadav PK, Maimaitijiang M, Reicks G. Development and Evaluation of a Multiaxial Modular Ground Robot for Estimating Soybean Phenotypic Traits Using an RGB-Depth Sensor. AgriEngineering. 2025; 7(3):76. https://doi.org/10.3390/agriengineering7030076

Chicago/Turabian Style: Kemeshi, James, Young Chang, Pappu Kumar Yadav, Maitiniyazi Maimaitijiang, and Graig Reicks. 2025. "Development and Evaluation of a Multiaxial Modular Ground Robot for Estimating Soybean Phenotypic Traits Using an RGB-Depth Sensor" AgriEngineering 7, no. 3: 76. https://doi.org/10.3390/agriengineering7030076

APA Style: Kemeshi, J., Chang, Y., Yadav, P. K., Maimaitijiang, M., & Reicks, G. (2025). Development and Evaluation of a Multiaxial Modular Ground Robot for Estimating Soybean Phenotypic Traits Using an RGB-Depth Sensor. AgriEngineering, 7(3), 76. https://doi.org/10.3390/agriengineering7030076