Abstract

In recent years, artificial intelligence (AI) has seen remarkable advancements, stretching the limits of what is possible and opening up new frontiers. This comparative review investigates the evolving landscape of AI advancements, providing a thorough exploration of innovative techniques that have shaped the field. Beginning with the fundamentals of AI, including traditional machine learning and the transition to data-driven approaches, the narrative progresses through core AI techniques such as reinforcement learning, generative adversarial networks, transfer learning, and neuroevolution. The significance of explainable AI (XAI) is emphasized in this review, which also explores the intersection of quantum computing and AI. The review delves into the potential transformative effects of quantum technologies on AI advancements and highlights the challenges associated with their integration. Ethical considerations in AI, including discussions on bias, fairness, transparency, and regulatory frameworks, are also addressed. This review aims to contribute to a deeper understanding of the rapidly evolving field of AI. Reinforcement learning, generative adversarial networks, and transfer learning lead AI research, with a growing emphasis on transparency. Neuroevolution and quantum AI, though less studied, show potential for future developments.

1. Introduction

Since the advent of computers that required human manipulation in the 1950s, researchers have been focusing on enhancing computers’ capacity for independent learning. This development ushers in a new era for business, society, and computer science. In a sense, computers have advanced to the point where they can now complete brand-new tasks independently. To adapt to and learn from people, future artificial intelligence (AI) will interact with them using their language, gestures, and emotions. Due to the popularity and interconnectivity of various intelligent terminals, people will no longer only live in actual physical space, but will also continue to exist within the digital virtualized network. In this cyberspace, the lines between people and machines will already be blurred [,].

AI is the intelligence exhibited by machines, in contrast to the natural intelligence of humans and animals. Human and animal intelligence display consciousness and emotions, whereas machine intelligence does not []. In 1950, Alan Turing popularized the idea that computers might one day think similarly to humans []. Having grown for more than 60 years, AI has evolved into an interdisciplinary field that combines several natural and social science disciplines [,,]. There is growing scholarly interest in the possibility that machine learning and AI could replace people, take over occupations, and alter how organizations run []. The underlying assumption is that, given specific restrictions on information processing, AI may produce results that are more accurate, more efficient, and of higher quality than those produced by human specialists [,].

Devices that can perform mental functions such as learning and problem-solving in a manner comparable to human thinking are usually referred to as AI [,]. Artificial agents are defined as systems that observe their environment and take actions to improve their chances of achieving their objectives. The term AI is also applied to sophisticated machines that can successfully understand human speech []. The core objectives of AI research include knowledge representation, reasoning, planning, learning, and processing, as well as the ability to manipulate objects [,]. These aims are pursued using a variety of strategies, including computational intelligence and statistical modeling. In addition to having an impact on computer science, AI also draws researchers from linguistics, mathematics, and engineering [,,].

Exploring new AI frontiers is crucial because they develop technology, tackle new problems, boost performance, speed up research, and have positive societal and economic effects. By conducting a thorough literature review, introducing cutting-edge AI techniques such as reinforcement learning, generative adversarial networks, transfer learning, neuroevolution, explainable AI (XAI), and quantum AI with real-world applications, addressing ethical concerns, and outlining future directions, this paper significantly advances the field. By doing this, the article supports creativity, promotes ethical AI adoption, and stimulates additional research, ultimately advancing AI and its advantageous effects on a variety of industries as well as society at large. It helps people make informed judgments about technology adoption, ethics, and the future of AI by giving historical context, multidisciplinary ideas, and a glimpse into cutting-edge AI techniques. The evaluation helps grasp AI’s disruptive potential and difficulties, enabling ethical and beneficial integration across sectors and society.

2. Evolution of AI Techniques

Over time, there have been notable advancements in the field of AI techniques. The field has gone through several stages of evolution and revolution, which have increased impact and given rise to new technologies. AI’s history began in the 1940s, about the time that electronic computers were first introduced []. The area of AI was officially founded in 1955, when the phrase “AI” was first used in a workshop proposal []. AI has developed over time, moving from theoretical ideas to machine learning, expert systems, machine logic, and artificial neural networks [,,].

Interesting patterns of knowledge inflows and trends in AI research themes were found in a study by Dwivedi et al. The study presented in [] focuses on the evolution of AI research in technological forecasting and social change (TF&SC). By balancing evolution and revolution in research, the field of AI in education (AIED) has also undergone both refinement and audacious thinking []. AI development has been marked by important turning points, breakthroughs, and periods of stagnation known as “AI winters” []. Training computation in the field has grown exponentially, which has resulted in the development of increasingly powerful AI systems [].

2.1. Emergence of Deep Learning and Its Impact

Deep learning has a significant influence that is still being felt today, changing the way intelligent systems function and opening up new possibilities for AI applications. Deep learning will likely become more significant as research into it advances, greatly influencing the potential and capabilities of intelligent computers across a range of fields. The amalgamation of historical turning points, technological breakthroughs, and a wide range of applications defines deep learning as a pillar in the continuous AI story.

AI has been greatly impacted by the advent of deep learning, which has revolutionized machine learning techniques. Several factors came together in the early 2010s to catapult deep learning—a class of machine learning algorithms that gradually extracts higher-level features from raw input—into the public eye [,]. Hardware advancements have been crucial in that they have made it possible to train massive deep neural networks with the processing power required, especially in the case of GPUs and specialized accelerators [,,]. Deep learning has a wide range of applications, including natural language processing, computer vision, and medical diagnostics []. Deep learning has drawn praise and criticism alike, and its significant influence is still being felt today, shaping intelligent system functioning and broadening the scope of AI applications. Novel studies in the field of deep learning are constantly emerging as a result of the remarkable advancements in hardware technologies as well as the unpredictable growth in data acquisition capabilities []. The significance of deep learning research is expected to grow as it advances, potentially influencing the potential and capabilities of intelligent computers across multiple domains.

2.2. Transition from Rule-Based Systems to Data-Driven Approaches

Rule-based systems, which depended on explicit programming of predetermined rules to control system behavior, were prevalent in the early phases of AI development []. Although these systems were quick and simple to construct, their reliance on hardcoded rules and inference limited their capacity to handle the complexity and unpredictability of real-world data. Outside their area of expertise, they were rarely accurate. Rule-based systems were far easier to comprehend, adjust, and maintain than machine learning systems. However, they encountered difficulties when solving problems with a large number of variables, where it was hard for humans to come up with a comprehensive set of rules [,,].

The development of data-driven techniques became apparent as AI advanced and changed the game. This paradigm change made it possible for AI systems to use data for learning and adaptation [], and it was fueled by developments in machine learning, particularly supervised learning. These systems may generalize patterns from enormous datasets, enabling adaptability in the face of varied and dynamic settings, as opposed to being restricted by strict rules [].

Data-driven methods were demonstrated by deep learning, a form of machine learning that uses multi-layered neural networks. Notwithstanding their benefits, certain obstacles still exist, such as the requirement for representative datasets [], worries over bias, and problems with interpretability []. However, the transition from rule-based to data-driven methodologies has unquestionably transformed the AI environment, unleashing hitherto unrealized potential and shaping the course of continuing research and development.

3. Core AI Techniques

The capabilities of AI have substantially increased as a result of the extraordinary changes it has undergone in recent years. This section will examine the cutting-edge developments in AI, exposing a wide range of ground-breaking methods that have the potential to transform a wide range of fields and applications.

AI research and development have advanced quickly over the years, resulting in the introduction of cutting-edge methods that push the limits of what AI is capable of. These techniques can transform entire industries, resolve difficult issues, and open up new research directions. While classic AI approaches have been effective in solving particular problems, they frequently have trouble adapting, scaling, and dealing with novel scenarios. The exploration of newer approaches has been driven by the need for AI systems that complement human capabilities by learning from experience, generalizing information, and performing tasks effectively. Emerging AI techniques are characterized by their capacity to let machines learn from data, emulate human reasoning, and improve over time. These methods accomplish this by utilizing sophisticated algorithms, robust computational resources, and, occasionally, inspiration from nature [,,]. For example, reinforcement learning enables robots to acquire knowledge through trial and error, thereby facilitating intricate operations such as object manipulation and tool use. Machine learning more broadly, by analyzing massive datasets, recognizing patterns, and generating forecasts, enables robots to improve their performance gradually [,,].

3.1. Reinforcement Learning

Reinforcement learning is a learning framework in which an agent senses the state of an environment, interacts with it, and improves a policy with respect to a given objective []. It emerged at the nexus of concepts from cognitive science, neuroscience, and AI, and many behaviorist principles were adapted to form the notions used in computational reinforcement learning algorithms. Reinforcement learning can serve as a general-purpose decision-making framework whenever an artificial agent finds itself in a position where it has a choice of actions. Robot control is one area where reinforcement learning has been used [,].

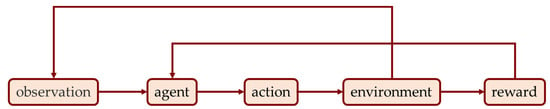

The reinforcement learning process involves several steps, as shown in Figure 1. In this iterative cycle, an agent interacts with its environment by receiving observations that represent the system’s current state. Equipped with a policy, the agent then selects an action based on these observations. Applying the selected action to the environment generates a reward signal and moves the environment to a new state. The agent receives both the reward and the updated observation, which are essential for learning and refining its policy. This cyclical interaction persists as the agent seeks to maximize cumulative reward over time, and it eventually teaches the agent a good strategy for navigating and making decisions in the given environment.

Figure 1.

Reinforcement learning process.

Reinforcement learning refers to the practice of maximizing rewards through a sequence of actions in an environment. Learning which behaviors optimize these rewards is part of the process. The agent needs to learn on its own through trial and error to obtain maximal reward, which resembles natural learning []. Machine learning is commonly divided into supervised, unsupervised, and semi-supervised categories. Reinforcement learning is distinct from both supervised and unsupervised learning. The goal of supervised learning is to map each input to the corresponding output by learning rules from labeled data, so that every training example comes with the desired answer. Depending on whether the target value is continuous or discrete, a regression or classification model is used. Unsupervised learning, by contrast, requires the agent to find the hidden structure in unlabeled data [] and can be used when labeled data are insufficient or unavailable. In reinforcement learning, however, the agent has an initial point and an endpoint, and to get there it needs to choose the best course of action by interacting with the environment. Agents are rewarded for finding the solution and are not rewarded otherwise, so they need to explore their surroundings to collect the most reward []. The problem is formulated as a Markov decision process (MDP), and the solution can be model-free (for example Q-learning, SARSA, or policy-based methods) or model-based. In this approach, the agent engages with the environment, updates its policy based on the rewards it receives, and is thereby trained to perform better [,].
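The interaction cycle and the Q-learning update described above can be illustrated with a minimal sketch. The five-state corridor, the reward of 1 at the goal, and all hyperparameter values are assumptions chosen for illustration; they do not come from the studies cited in this review.

```python
import random

N_STATES = 5            # hypothetical corridor: states 0..4
GOAL = N_STATES - 1     # reaching state 4 ends the episode
ACTIONS = (-1, +1)      # action 0 moves left, action 1 moves right


def step(state, action_index):
    """Environment: apply the action, return (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action_index], 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def greedy_action(q_row, rng):
    """Pick a highest-valued action, breaking ties at random."""
    best = max(q_row)
    return rng.choice([i for i, v in enumerate(q_row) if v == best])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon, otherwise exploit.
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))
            else:
                action = greedy_action(q[state], rng)
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best next value.
            future = 0.0 if done else gamma * max(q[next_state])
            q[state][action] += alpha * (reward + future - q[state][action])
            state = next_state
    return q


q_table = train()
# Greedy policy for the non-terminal states 0..3 (1 means "move right").
policy = [max(range(len(ACTIONS)), key=lambda a: q_table[s][a])
          for s in range(GOAL)]
print(policy)
```

No transition model is learned or used here, which is what makes the method model-free: the agent only stores action values and updates them from sampled transitions.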

The utilization of reinforcement learning in the domains of robotics, gaming, marketing, and automated vehicles was examined by Wei et al. []. Their primary area of interest was Monte Carlo-based reinforcement learning control in the context of unmanned aerial vehicle systems. Wang et al. [] investigated the use of a Monte Carlo tree search-based self-play framework to learn to traverse graphs. Maoudj and Hentout [] introduced a novel method for mobile robot path planning that is optimal, utilising the Q-learning algorithm. Intayoad et al. [] developed personalized online learning recommendation systems by employing reinforcement learning based on contextual bandits.

3.2. Generative Adversarial Networks

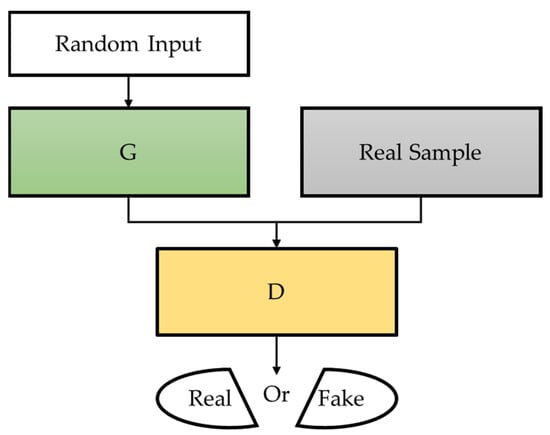

Generative adversarial networks, at the time a new class of generative model, were put forth by Goodfellow et al. [] in 2014. They are made up of two neural networks, the discriminator (D) and the generator (G), which engage in a process that is both competitive and cooperative. While the discriminator seeks to distinguish between real and synthetic data, the generator is tasked with creating synthetic data samples that resemble real data. Through this competitive training, the two networks learn from one another, and the generator produces increasingly realistic data until an equilibrium is reached at which the discriminator can no longer tell the difference between generated and genuine data. Figure 2 shows the process of generative adversarial networks.

Figure 2.

Process of generative adversarial networks.
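The competition between D and G is usually written as a minimax game over the value function V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], which the discriminator tries to maximize and the generator tries to minimize. The sketch below only evaluates this quantity on hand-picked discriminator outputs; the numbers are illustrative assumptions, and no networks are trained.

```python
import math


def gan_value(d_real, d_fake):
    """Empirical estimate of V(D, G).

    d_real: discriminator outputs D(x) on real samples, each in (0, 1)
    d_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1)
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A discriminator that separates real from generated samples easily.
confident = gan_value(d_real=[0.9, 0.95, 0.85], d_fake=[0.1, 0.05, 0.2])

# A discriminator that cannot tell them apart outputs 0.5 everywhere.
fooled = gan_value(d_real=[0.5, 0.5, 0.5], d_fake=[0.5, 0.5, 0.5])

print(confident, fooled, -math.log(4))
```

When the discriminator outputs 0.5 for every sample, the value equals -log 4, which corresponds to the equilibrium described above where generated and genuine data are indistinguishable.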

Generative adversarial networks are used in many different fields, such as image synthesis, style transfer, and image-to-image translation. Additionally, they have demonstrated potential in the areas of data augmentation, drug discovery, and building lifelike virtual environments for AI training. Despite their effectiveness, generative adversarial networks still encounter problems, including instability during training and mode collapse, which results in a lack of diversity in the generated samples. Additionally, ethical considerations arise from their potential abuse in the production of deepfakes and deceptive information [].

3.3. Transfer Learning

In many applications, it is expensive or impractical to re-collect the training data needed to update the models. Transfer learning, or knowledge transfer between task domains, is necessary in such circumstances. By transferring useful parameters, transfer learning helps a classifier trained in one domain to learn in another []. “What to transfer”, “how to transfer”, and “when to transfer” are the three key questions in transfer learning []. The question “what to transfer” asks which information should be transferred across domains or tasks. Some knowledge is specific to particular tasks and domains and may not be useful elsewhere, whereas other knowledge is shared across several domains and could improve performance in the target domain or task. Once the portion of knowledge worth transferring has been identified, learning algorithms are needed to transfer it, so “how to transfer” becomes the next issue. The final issue, “when to transfer”, asks under what conditions transfer should occur. The majority of existing transfer learning research focuses on “what to transfer” and “how to transfer”, implicitly presuming that the source domain and the target domain are related [].

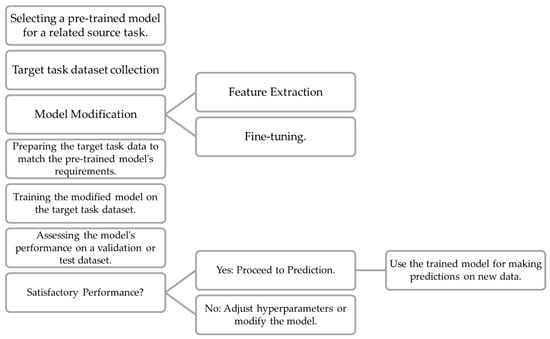

The process typically involves choosing a base model trained on a source task and then fine-tuning it with a smaller, task-specific dataset for the target task. Through this adaptation, the model can preserve its general knowledge while adapting its learned representations to the specifics of the current task. In the transfer learning process (Figure 3), a pre-trained model is chosen based on a related source task, and a target task dataset is collected and pre-processed accordingly. The model is then modified either through feature extraction, where new layers are added while the pre-trained layers are kept frozen, or through fine-tuning, where some pre-trained layers are unfrozen. The modified model is trained on the target task dataset, and its performance is evaluated on a separate dataset. If the performance is satisfactory, the trained model can be used for making predictions on new data. If not, adjustments such as hyperparameter tuning or model modifications can be made to improve performance. Once the desired performance is achieved, the transfer learning process is complete, and the model is ready for practical use in the target task.

Figure 3.

Transfer learning: from source task to target task.
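The feature-extraction variant of this process can be sketched in a few lines. The "pre-trained" weights below are hard-coded stand-ins for a layer learned on a source task, and the four-point target dataset, learning rate, and epoch count are likewise assumptions made for illustration. Only the newly added output layer is trained; the pre-trained layer stays frozen.

```python
import math

# Hypothetical pre-trained layer (assumed to come from a source task).
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Frozen layer: its weights are never updated on the target task."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, head_w, head_b):
    f = extract_features(x)
    return sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)


def train_head(data, epochs=200, lr=0.5):
    """Train only the new output layer with batch gradient descent."""
    head_w, head_b = [0.0, 0.0], 0.0
    losses = []
    n = len(data)
    for _ in range(epochs):
        total, grad_w, grad_b = 0.0, [0.0, 0.0], 0.0
        for x, y in data:
            f = extract_features(x)
            p = predict(x, head_w, head_b)
            # Binary cross-entropy loss and its gradient for the head.
            total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
            for j in range(len(head_w)):
                grad_w[j] += (p - y) * f[j]
            grad_b += p - y
        head_w = [w - lr * g / n for w, g in zip(head_w, grad_w)]
        head_b -= lr * grad_b / n
        losses.append(total / n)
    return head_w, head_b, losses


# Small, task-specific target dataset: (input, label).
target_data = [([2.0, 0.0], 1), ([1.5, 0.5], 1),
               ([0.0, 2.0], 0), ([0.5, 1.5], 0)]
head_w, head_b, losses = train_head(target_data)
print(losses[0], losses[-1])
```

Fine-tuning would differ only in that some entries of `PRETRAINED_W` would also receive gradient updates, typically with a smaller learning rate.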

3.4. Neuroevolution

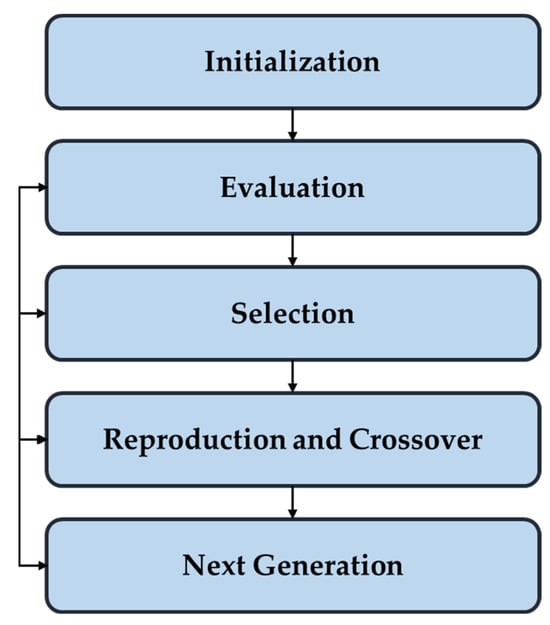

Neuroevolution is the branch of AI that creates artificial neural networks (ANNs) using evolutionary methods, that is, it uses evolutionary algorithms to train and design the networks. Although it is related to deep learning, neuroevolution is not the same as deep learning at its core. It draws inspiration from the evolution of biological nervous systems in nature []. The process, shown in Figure 4, begins with initializing a population of neural networks with random parameters, representing potential solutions. These networks are then evaluated based on predefined metrics, which determine their fitness for the given task. The top-performing networks are selected to proceed, mimicking natural selection. Through reproduction and crossover, new offspring with random changes and combined traits are generated. This process iterates for multiple generations, continuously refining and improving the neural networks’ performance.

Figure 4.

Process of neuroevolution.
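The loop of initialization, evaluation, selection, and reproduction can be sketched as follows. The fixed 2-2-1 network, the XOR task, the Gaussian mutation used for the "random changes", and all parameter values are assumptions for illustration; practical neuroevolution methods often evolve the network topology as well as the weights.

```python
import math
import random

XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
N_WEIGHTS = 9  # 2-2-1 network: 6 hidden parameters + 3 output parameters


def forward(w, x):
    """Evaluate the small fixed-topology network on input x."""
    h0 = math.tanh(w[0] * x[0] + w[1] * x[1] + w[2])
    h1 = math.tanh(w[3] * x[0] + w[4] * x[1] + w[5])
    z = w[6] * h0 + w[7] * h1 + w[8]
    return 0.5 * (1.0 + math.tanh(z / 2.0))  # numerically stable sigmoid


def fitness(w):
    """Higher is better: negative squared error on the task."""
    return -sum((forward(w, x) - y) ** 2 for x, y in XOR)


def crossover(a, b, rng):
    """Combine traits: take each weight from one of the two parents."""
    return [ai if rng.random() < 0.5 else bi for ai, bi in zip(a, b)]


def mutate(w, rng, sigma=0.5):
    """Random changes: add Gaussian noise to every weight."""
    return [wi + rng.gauss(0.0, sigma) for wi in w]


def evolve(pop_size=30, generations=50, elite=5, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(N_WEIGHTS)]
           for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)       # evaluation
        history.append(fitness(pop[0]))
        parents = pop[:elite]                     # selection
        children = [mutate(crossover(rng.choice(parents),
                                     rng.choice(parents), rng), rng)
                    for _ in range(pop_size - elite)]
        pop = parents + children                  # elites survive unchanged
    return max(pop, key=fitness), history


best, history = evolve()
print(history[0], history[-1])
```

Because the selected parents are carried over unchanged, the best fitness recorded per generation never decreases, although nothing guarantees that the task is solved within a fixed number of generations.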

The capacity of neuroevolution to examine a wider variety of network designs and hyperparameters than conventional techniques is one of its main advantages. Because of this, it is especially well suited for complicated situations where the ideal network structure might not be obvious or simple to build by human experts. Success in a variety of fields, including robotics, gaming, optimization problems, and control systems, has been demonstrated via neuroevolution []. Additionally, it has the potential to build neural networks using fewer computer resources, which makes it appealing for applications in contexts with limited resources [].

Neuroevolution, like many AI techniques, is not without its difficulties and limitations. The evolutionary process can be computationally expensive, and it may take several generations to find the best solution. Another crucial issue that researchers need to carefully handle is balancing exploration and exploitation to prevent early convergence []. Despite these difficulties, neuroevolution represents an innovative strategy in AI research, offering a potent substitute for conventional training techniques [].

4. Explainable AI

Given the widespread use of intricate deep learning architectures, it is vital to pay attention to their inner workings and to gain an understanding of their results. This is one of XAI’s main driving forces []. The need for more robust AI systems in business, enterprise computing, and essential industries is the main driver of XAI’s rapid expansion []. XAI tackles the issue of “black-box” AI models, which are intricate and challenging for people to understand. Systems whose decisions are difficult to understand are difficult to trust, especially in domains such as healthcare or self-driving cars, where moral and fairness concerns inevitably surface. The field of XAI [], which is devoted to understanding and interpreting the behavior of AI systems, was revived as a result of the need for reliable, equitable, robust, high-performing models for real-world applications. In the years before its revival, the scientific community had lost interest, as most research concentrated on the predictive power of algorithms rather than on the reasoning behind their predictions.

Techniques for XAI take both model-specific and model-agnostic stances, accommodating multiple AI model architectures. With the help of these techniques, users can obtain an understanding of the information, features, and intermediate judgments that go into the final prediction or decision. As a result, XAI assists in locating potential biases, weaknesses, and opportunities for improvement, increasing the transparency of AI systems and helping them adhere to legal standards. XAI is crucial in the healthcare industry, where it helps physicians make better decisions by clarifying the logic behind AI-assisted diagnosis. Similarly, in finance, XAI explains credit scoring or investment advice, which increases transparency for clients and fosters trust in AI-powered services [,,,,].
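One widely used model-neutral (often called model-agnostic) technique is permutation feature importance: a feature matters to the extent that scrambling its values degrades the model's predictions. The sketch below assumes a hypothetical black-box `model` and a tiny hand-made dataset; it rotates each column by one position instead of shuffling it randomly so that the result is deterministic.

```python
def model(x):
    """Hypothetical black box: relies on x[0], slightly on x[1], ignores x[2]."""
    return 3.0 * x[0] + 0.5 * x[1]


def mse(rows, targets):
    """Mean squared error of the model on the given rows."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, feature):
    """Increase in error after scrambling one feature column.

    The column is rotated by one position (a fixed permutation) so the
    example is reproducible; a random shuffle is the usual choice.
    """
    column = [r[feature] for r in rows]
    rotated = column[1:] + column[:1]
    perturbed = [r[:feature] + [v] + r[feature + 1:]
                 for r, v in zip(rows, rotated)]
    return mse(perturbed, targets) - mse(rows, targets)


rows = [[1.0, 4.0, 7.0], [2.0, 1.0, 3.0], [3.0, 3.0, 9.0], [4.0, 2.0, 1.0]]
targets = [model(r) for r in rows]  # labels the model fits perfectly

importances = [permutation_importance(rows, targets, j) for j in range(3)]
print(importances)
```

The technique only calls the model's prediction function, so it applies to any architecture; the ignored third feature receives an importance of zero, which is the kind of insight that helps users see what drives a prediction.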

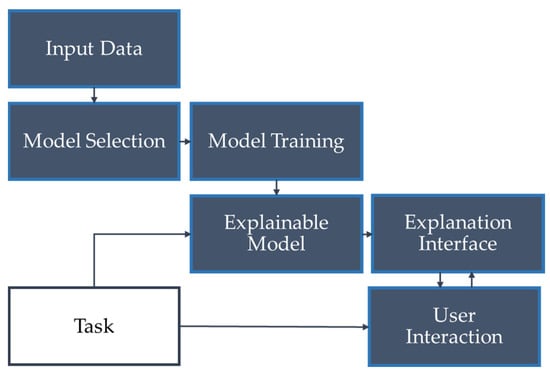

XAI is a process that starts with gathering input data, followed by selecting an appropriate AI model and training it on the data. Once trained, the model is capable of making predictions while providing explanations for its decisions. Users can interact with the system to explore these explanations, gaining insights into the factors influencing the model’s predictions (Figure 5). Finding the ideal balance between model complexity and interpretability is still difficult, despite advances in XAI. Researchers are always trying to find better ways to balance performance with explainability. The incorporation of XAI will be essential as AI develops to ensure that AI systems are not just effective, but also reliable and intelligible.

Figure 5.

Process of XAI.

5. Quantum AI

The cutting-edge field of quantum AI, which combines AI and quantum computing, has the potential to fundamentally alter how humans handle complicated problems and process data. In contrast to classical computing, which uses bits to encode data as 0s and 1s, quantum computing makes use of quantum bits, or qubits, which can exist in a combination of states at once through superposition and can be correlated with one another through entanglement [,].
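Superposition and entanglement can be made concrete with a small state-vector calculation on a classical computer. The sketch below uses real amplitudes only and two standard gates, the Hadamard gate and the controlled-NOT (CNOT) gate; the function names are illustrative and do not refer to any quantum software library.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (a, b)."""
    a, b = state
    s = 1.0 / math.sqrt(2.0)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Measurement probabilities are the squared amplitude magnitudes."""
    return [abs(amp) ** 2 for amp in state]


def bell_state():
    """Start in |00>, apply H to the first qubit, then CNOT."""
    h0, h1 = hadamard((1.0, 0.0))
    # Amplitudes ordered as |00>, |01>, |10>, |11>.
    state = [h0, 0.0, h1, 0.0]
    # CNOT with the first qubit as control swaps |10> and |11>.
    state[2], state[3] = state[3], state[2]
    return state


# Superposition: one qubit, equal chance of measuring 0 or 1.
plus = hadamard((1.0, 0.0))
print(probabilities(plus))

# Entanglement: only the outcomes 00 and 11 can occur.
print(probabilities(bell_state()))
```

In the entangled state, measuring one qubit determines the outcome of the other, which is a correlation that two independent classical bits cannot reproduce.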

In quantum AI, researchers use quantum physics to carry out computations that are practically infeasible for conventional computers. Certain tasks, such as factorization, optimization, and searching through enormous databases, could be dramatically sped up using quantum algorithms. Shor’s algorithm, which can factor large numbers exponentially faster than the best known classical algorithm, is one of the most impressive examples. Given that many encryption techniques currently used in secure communications would be vulnerable to a quantum computer executing Shor’s algorithm, this capability has important implications for cryptography [].

The promise of quantum AI also includes improved machine learning methods. Quantum machine learning tries to increase the effectiveness and precision of various AI activities by utilizing quantum algorithms [,]. To address challenging classification and regression issues, quantum support vector machines and quantum neural networks, for instance, are being investigated. Quantum AI also offers special benefits in data processing and analysis. Quantum data compression techniques make it feasible to store and retrieve information more effectively, handling massive amounts of data with less processing effort.

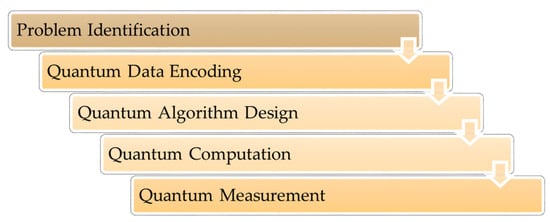

Quantum AI has certain difficulties, though. Achieving stable, error-tolerant quantum computation remains an open technical challenge. Decoherence and environmental disturbances are two factors that can lead to computing errors in quantum systems. To solve these problems and increase the dependability of quantum calculations, researchers are currently developing error correction methods. As quantum AI develops, ethical issues also become more important. As quantum computers become more powerful, care needs to be taken to use them ethically and responsibly. Similar to classical AI, quantum AI should be designed with careful consideration of potential biases and unforeseen consequences. Figure 6 illustrates the quantum AI process. The first step is to identify the problem and prepare the classical data for quantum processing. To solve the problem, a quantum algorithm is designed and run on a quantum computer. Following measurement, classical data are obtained and analyzed using classical AI methods. To determine the quantum advantage, the findings are benchmarked against classical AI techniques.

Figure 6.

Process of Quantum AI.

6. Literature Comparison

In their research projects, several authors have emphasized cutting-edge AI methodologies. To assess the significance of cutting-edge AI approaches in various topic categories, this section will look into the statistics of articles in this field. Here, the search was conducted on 27 July 2023, and the results were based on the “Scopus” database (between 2013 and 2023).

Based on a search, Table 1 gives an examination of cutting-edge AI approaches in several issue categories. With 28,412 documents, mostly in the field of computer science and frequently presented as conference papers, reinforcement learning stands out as the technique that has been the subject of the greatest research among those that have been identified. This shows how prevalent it is at academic conferences and how pertinent it is to the field of AI research. Another well-known method is the generative adversarial network, which has 8186 documents and a significant number of published articles, mostly in the field of computer science. The widespread use of generative adversarial networks and their contributions to the generation of synthetic data, picture synthesis, and other innovative applications can be seen in their popularity. With 11,633 documents, transfer learning also stands out as a prominent area and is heavily covered in the field of computer science through articles. The large number of articles reflects both the importance of transfer learning in practical applications and the curiosity that it inspires among academics.

Table 1.

Emerging AI techniques: reviewing the current state.

Although these three methods dominate the field of AI study, it is important to note that explainability in AI has also received considerable attention, as shown by its 1479 documents. The concentration of studies shows a rising emphasis on improving the transparency and interpretability of AI systems, a critical component for applications in sensitive fields. Neuroevolution and quantum AI have, however, received comparatively little research. With 338 documents, mostly conference papers, neuroevolution remains a specialized field. Quantum AI is still in its infancy, as can be seen from the mere 8 documents retrieved, most of which are conference papers. Both neuroevolution and quantum AI have room for expansion and could end up taking center stage as the field of AI develops and grows.
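To put the document counts quoted above in proportion, the short calculation below expresses each technique as a share of the combined total. It uses only the six counts stated in the text; treating their sum as a denominator is a simplifying assumption, since a single document may be indexed under more than one technique.

```python
# Document counts as reported in the text (Scopus, 2013 to 2023).
counts = {
    "reinforcement learning": 28412,
    "transfer learning": 11633,
    "generative adversarial networks": 8186,
    "explainable AI": 1479,
    "neuroevolution": 338,
    "quantum AI": 8,
}

# Assumption: the categories are treated as non-overlapping.
total = sum(counts.values())
shares = {name: 100.0 * n / total for name, n in counts.items()}

for name, n in sorted(counts.items(), key=lambda item: -item[1]):
    print(f"{name:32s}{n:>7d}{shares[name]:8.2f}%")
```

Under this assumption, reinforcement learning alone accounts for more than half of the retrieved documents, while neuroevolution and quantum AI together stay below one percent.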

A summary of the strengths and weaknesses of these AI methods, namely reinforcement learning, generative adversarial networks, transfer learning, neuroevolution, XAI, and quantum AI, is provided in Table 2. Despite needing a lot of computing power and tuning, reinforcement learning works well in dynamic and uncertain situations. Generative adversarial networks are excellent at producing high-quality data; however, they can experience mode collapse and training instability. Although there are benefits to knowledge transfer, transfer learning depends on task and domain similarity and requires careful fine-tuning. Neuroevolution makes it possible to evolve neural network structures; however, it can be computationally expensive and prone to premature convergence. XAI improves transparency, but it may oversimplify models and have only a narrow range of applications. Although it faces technological limitations and calls for specialized knowledge, quantum AI shows promise for some applications. Knowing these advantages and disadvantages enables academics and practitioners to choose the best AI approach for their applications.

Table 2.

Strengths and weaknesses of emerging AI techniques.

To summarize, the examination of emerging AI methods demonstrates the widespread use of reinforcement learning, transfer learning, and generative adversarial networks in computer science. It also highlights the increasing significance of XAI and the possibilities for advancing neuroevolution and quantum AI. Moreover, the extensive implementation of AI approaches across many businesses highlights the profound influence of AI on various sectors.

7. Ethical Considerations and Future Prospects

It is critical to recognize and solve the enormous problems and ethical issues that come along with these developments as the boundaries of AI are continually pushed and novel methodologies are created. Though AI can transform many industries and enhance human life, it also comes with special risks and moral quandaries that need to be carefully considered.

7.1. Ethical Concerns Related to AI Advancements

Any scientific advancement inevitably raises ethical questions, especially one that moves so swiftly. Data scientists and other academics (such as social scientists and historians) have recently begun to raise more concerns about the potential ethical problems of AI and the exploitation of personal data [,].

One of the main concerns is the persistence of bias and unjust treatment within AI systems, which is rooted in the biases prevalent in the enormous datasets they learn from. These biases may surface in automated decision-making procedures and could result in discrimination in hiring, lending, and criminal justice, among other areas. Tackling bias in AI systems is crucial to protecting fairness and to avoid reinforcing societal stereotypes [,]. Privacy issues also arise from the enormous amount of individual data that AI needs to perform at its best []. To preserve user privacy and prevent unlawful use of sensitive information, it is essential to ensure data protection and informed consent [].
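As an illustration of how such bias can be quantified, the following minimal sketch computes two commonly used group-fairness measures, the demographic parity difference and the disparate impact ratio, from a list of automated decisions. The function names, the group labels "A" and "B", and the hiring data are hypothetical and serve only to make the idea concrete; they are not drawn from any study cited in this review.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the fraction of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(decisions, groups):
    """Lowest selection rate divided by the highest (1 = parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical hiring decisions (1 = offer, 0 = reject) for two groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_difference(decisions, groups)
ratio = disparate_impact_ratio(decisions, groups)
print(rates, gap, ratio)
```

In this toy data set, group A is selected four times out of five and group B once out of five, so both measures indicate a large disparity. Measures of this kind capture only one notion of fairness and would normally be considered alongside others.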

As AI algorithms become more complex and their decision-making processes more opaque, transparency and accountability pose additional ethical problems. This “black box” issue can breed mistrust and make AI decisions harder to understand. To foster confidence and enable appropriate scrutiny of AI systems, accountability mechanisms need to be established and transparency needs to be promoted. Furthermore, AI’s growing autonomy raises concerns about responsibility. Determining responsibility in cases of AI-related accidents or undesirable outcomes requires a careful balance between ethical and legal frameworks. As AI grows more independent, accountability for its actions and proper oversight become critical considerations [].

Beyond these issues, there are anxieties about job loss and economic inequality as automation and AI change various industries. The potential disruption to labor markets may leave many workers unemployed or underemployed, which calls for preemptive efforts to address the economic repercussions and guarantee a just transition to an AI-driven future. The ethical implications also extend to AI’s environmental impact: its heavy reliance on computational resources significantly increases the technology’s carbon footprint. It is essential to address these environmental effects and develop sustainable AI methods to prevent further environmental harm [].

7.2. Mitigating Potential Risks and Unintended Consequences

As the field of AI continues to progress and unveil novel methodologies, it is crucial to address the potential hazards and unintended consequences connected with these breakthroughs. Although AI has the potential to transform businesses and enhance human lives, it also presents substantial problems and ethical issues that need to be managed early on. Robust testing and validation, which expose models to a variety of datasets to uncover biases and weaknesses, are essential to ensuring the responsible and secure deployment of AI systems. Employing XAI techniques can provide human-understandable explanations for AI decisions, boosting confidence and revealing potential biases. To avoid prejudice and respect human values, developers need to adhere to ethical frameworks and rules while also working to reduce bias and promote fairness.
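To make the idea of a human-understandable explanation concrete, the sketch below implements permutation importance, one simple model-agnostic technique: each input feature is shuffled in turn, and the resulting drop in accuracy indicates how strongly the model relies on that feature. This is an illustrative example under stated assumptions rather than a method prescribed by this review; the toy model, the synthetic data, and all function names are hypothetical.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model predicts the given label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_features, repeats=20, seed=0):
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild every row with feature j replaced by a shuffled value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


# Hypothetical "black box": it secretly uses only feature 0.
def toy_model(row):
    return 1 if row[0] > 0.5 else 0


data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random()) for _ in range(200)]
labels = [toy_model(r) for r in rows]

importances = permutation_importance(toy_model, rows, labels, n_features=2)
print(importances)
```

Because the toy model ignores feature 1, shuffling that feature leaves accuracy unchanged and its importance is zero, whereas shuffling feature 0 degrades accuracy substantially. Applied to a real model, the same procedure can reveal reliance on a sensitive attribute or on a proxy for one.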

Secure data management, human-in-the-loop solutions, and regular audits are likewise essential: they protect sensitive information, allow people to intervene in unclear situations, and help guarantee regulatory compliance. Placing a strong emphasis on continual learning and adaptation helps AI models remain accurate and up to date over time. Making AI capabilities, limitations, and risks more widely known to the public promotes responsible use and well-informed decision-making. By embracing these ideas and taking a proactive stance, we can harness AI’s potential while reducing risks and creating an environment in which it is a force for good and societal advancement. Building a future that embraces AI’s advantages while avoiding unanticipated negative effects requires responsible AI development, deployment, and governance.

7.3. Future Advancements

AI advancements have created intriguing new possibilities and potential applications across numerous industries. AI’s capacity to evaluate large amounts of patient data in the healthcare and medical fields holds promise for personalized medicine, customizing therapies to particular patients, and speeding up drug discovery. Additionally, AI-driven medical imaging improves diagnostic precision and helps identify diseases earlier. The advancement of AI benefits autonomous vehicles and transportation, enabling safer self-driving automobiles and streamlining logistics for quicker and more effective delivery services.

AI can support environmental conservation by improving the climate models used to understand and mitigate climate change and by assisting in the protection of species. In education, AI provides individualized learning experiences, content adapted to student needs, and intelligent tutoring systems that offer on-the-fly support. In finance, AI can evaluate market trends for algorithmic trading and detect fraud to safeguard clients and financial institutions.

While content creation and curation benefit from AI algorithms’ ability to recognize audience preferences, AI-generated art, music, and literature push the frontiers of creativity. To ensure equity, accountability, and transparency in the use of AI, ethical issues need to be addressed as these new frontiers develop. Responsible AI development and collaboration will enable this ground-breaking technology to realize its full potential and transform industries, enhance lives, and create new opportunities.

8. Conclusions

The investigation of new frontiers in AI has uncovered a wealth of cutting-edge methods that have enormous potential to change the world. From traditional machine learning algorithms to the transformative power of deep learning, the exploration moved through core AI techniques, highlighting the role of reinforcement learning, the impact of generative adversarial networks, the adaptability of transfer learning, and the optimization capabilities of neuroevolution. The imperative of XAI underscored the need for transparency in AI systems, while the intersection of quantum computing and AI signaled a frontier of unprecedented computational power. These techniques’ impressive capabilities have reshaped the limits of what was once thought achievable in AI. While classic AI approaches have shown promise in several areas, there is still room for improvement on the new frontiers.

A thorough analysis of the ethical implications linked to these revolutionary technologies is crucial in light of the significant progress made in AI. It is critical to maintain a balanced perspective while pursuing progress, recognizing the potential repercussions that may be obscured by the allure of novelty. AI’s enhanced problem-solving capabilities and increased efficacy are accompanied by the imminent danger of biased algorithms. The principal issue at hand pertains to the vulnerability of AI systems to perpetuate biases that may be inherent in the training data, thus contributing to societal inequities. Immediate action is required to ensure that algorithms adhere to the tenets of equity and inclusiveness.

The intricacy of advanced AI models presents difficulties, especially in industries such as finance or healthcare, where accountability is of the utmost importance. These models need to be interpretable so that the reasoning behind their decisions can be explained. In research, achieving a balance between complexity and transparency remains a formidable challenge. In addition, the convergence of AI and quantum computing brings about an unparalleled expansion of computational capabilities, which not only presents opportunities for revolutionary advancements but also gives rise to ethical dilemmas. The potential for quantum algorithms to compromise currently secure encryption methods has heightened privacy and security concerns, as it exposes sensitive data to risk.

As a result of AI’s disruptive influence on the socioeconomic landscape, employment dynamics are undergoing a paradigm shift. Given the potential obsolescence of specific professions and the emergence of new roles that demand distinct skill sets, it is critical to adopt a strategic framework for workforce development so that the advantages resulting from AI are distributed equitably. The escalation of privacy concerns caused by AI systems processing immense quantities of personal data has sparked investigations into surveillance, ethical implementation, and the protection of individual rights. Faced with these obstacles, ethics in AI development becomes a practical necessity. Conducting high-quality studies that address these issues is as important as allocating financial resources toward technological progress. Fostering a culture of accountability helps ensure that AI innovation has a positive societal impact.

Success criteria no longer exclusively depend on technological expertise as AI progresses. Achieving success in this undertaking requires a thorough assessment of the ethical ramifications, reduction of personal biases, the establishment of transparent systems, and a dedication to guaranteeing inclusive advantages. The long-lasting influence of AI on human progress is contingent not only on its functionalities but also on its ethical assimilation into society. A more profound comprehension of the intricate obstacles and prospective advantages of AI enables sustainable and human-centric incorporation of technology into the continuous fabric of human progress.

Author Contributions

Conceptualization, H.T.; methodology, H.T.; validation, M.M. and H.T.; formal analysis, H.T. and M.M.; resources, H.T.; data curation, M.M.; writing—original draft preparation, M.M. and H.T.; writing—review and editing, M.M.; visualization, M.M.; supervision, H.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

Hamed Taherdoost is employed by the company, Quark Minded Technology Inc. Mitra Madanchian is employed by the company, Hamta Business Corporation. All authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Russell, S. Artificial Intelligence: A Modern Approach, eBook, Global Edition; Pearson Education, Limited: London, UK, 2016. [Google Scholar]

- Doshi-Velez, F.; Kim, B. Towards a rigorous science of interpretable machine learning. arXiv 2017, arXiv:1702.08608. [Google Scholar]

- McCarthy, J.; Minsky, M.L.; Rochester, N.; Shannon, C.E. A proposal for the Dartmouth summer research project on artificial intelligence, 31 August 1955. AI Mag. 2006, 27, 12. [Google Scholar]

- Turing, A. Computing machinery and intelligence. Mind 1950, 59, 433–460. [Google Scholar] [CrossRef]

- Tan, K.-H.; Lim, B.P. The artificial intelligence renaissance: Deep learning and the road to human-level machine intelligence. APSIPA Trans. Signal Inf. Process. 2018, 7, e6. [Google Scholar] [CrossRef]

- Mata, J.; de Miguel, I.; Duran, R.J.; Merayo, N.; Singh, S.K.; Jukan, A.; Chamania, M. Artificial intelligence (AI) methods in optical networks: A comprehensive survey. Opt. Switch. Netw. 2018, 28, 43–57. [Google Scholar] [CrossRef]

- Došilović, F.K.; Brčić, M.; Hlupić, N. Explainable artificial intelligence: A survey. In Proceedings of the 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 21–25 May 2018; pp. 210–215. [Google Scholar]

- Von Krogh, G. Artificial intelligence in organizations: New opportunities for phenomenon-based theorizing. Acad. Manag. Discov. 2018, 4, 404–409. [Google Scholar] [CrossRef]

- Bughin, J.; Hazan, E.; Lund, S.; Dahlström, P.; Wiesinger, A.; Subramaniam, A. Skill shift: Automation and the future of the workforce. McKinsey Glob. Inst. 2018, 1, 3–84. [Google Scholar]

- Agrawal, A.; Gans, J.S.; Goldfarb, A. Exploring the impact of artificial intelligence: Prediction versus judgment. Inf. Econ. Policy 2019, 47, 1–6. [Google Scholar] [CrossRef]

- Legg, S.; Hutter, M. A collection of definitions of intelligence. Front. Artif. Intell. Appl. 2007, 157, 17. [Google Scholar]

- Taherdoost, H. An overview of trends in information systems: Emerging technologies that transform the information technology industry. Cloud Comput. Data Sci. 2023, 4, 1–16. [Google Scholar] [CrossRef]

- Russell, S.J. Artificial Intelligence a Modern Approach; Pearson Education, Inc.: London, UK, 2010; Available online: https://cse.sc.edu/~mgv/csce580sp15/Newell_Issues1983.pdf (accessed on 17 December 2023).

- Kolata, G. How Can Computers Get Common Sense? Two of the founders of the field of artificial intelligence disagree on how to make a thinking machine. Science 1982, 217, 1237–1238. [Google Scholar] [CrossRef] [PubMed]

- Taherdoost, H.; Madanchian, M. Artificial Intelligence and Knowledge Management: Impacts, Benefits, and Implementation. Computers 2023, 12, 72. [Google Scholar] [CrossRef]

- Kurzweil, R. The singularity is near. In Ethics and Emerging Technologies; Springer: Berlin/Heidelberg, Germany, 2005; pp. 393–406. [Google Scholar]

- Simeone, O. Machine Learning for Engineers; Cambridge University Press: Cambridge, UK, 2022. [Google Scholar]

- McClarren, R.G. Machine Learning for Engineers; Springer: Berlin, Germany, 2021. [Google Scholar]

- Newell, A. Intellectual issues in the history of artificial intelligence. Artif. Intell. Crit. Concepts 1982, 25–70. [Google Scholar]

- Kaynak, O. The Golden Age of Artificial Intelligence: Inaugural Editorial; Springer: Berlin/Heidelberg, Germany, 2021; Volume 1, pp. 1–7. [Google Scholar]

- Duan, Y.; Edwards, J.S.; Dwivedi, Y.K. Artificial intelligence for decision making in the era of Big Data—Evolution, challenges and research agenda. Int. J. Inf. Manag. 2019, 48, 63–71. [Google Scholar] [CrossRef]

- Mijwil, M.M.; Abttan, R.A. Artificial intelligence: A survey on evolution and future trends. Asian J. Appl. Sci. 2021, 9, 87–93. [Google Scholar] [CrossRef]

- Pannu, A. Artificial intelligence and its application in different areas. Artif. Intell. 2015, 4, 79–84. [Google Scholar]

- Dwivedi, Y.K.; Sharma, A.; Rana, N.P.; Giannakis, M.; Goel, P.; Dutot, V. Evolution of artificial intelligence research in Technological Forecasting and Social Change: Research topics, trends, and future directions. Technol. Forecast. Soc. Chang. 2023, 192, 122579. [Google Scholar] [CrossRef]

- Roll, I.; Wylie, R. Evolution and revolution in artificial intelligence in education. Int. J. Artif. Intell. Educ. 2016, 26, 582–599. [Google Scholar] [CrossRef]

- Atkinson, P. ‘The Robots are Coming!’ Perennial problems with technological progress. Des. J. 2017, 20, S4120–S4131. [Google Scholar] [CrossRef] [Green Version]

- Desislavov, R.; Martínez-Plumed, F.; Hernández-Orallo, J. Trends in AI inference energy consumption: Beyond the performance-vs-parameter laws of deep learning. Sustain. Comput. Inform. Syst. 2023, 38, 100857. [Google Scholar] [CrossRef]

- Silvano, C.; Ielmini, D.; Ferrandi, F.; Fiorin, L.; Curzel, S.; Benini, L.; Conti, F.; Garofalo, A.; Zambelli, C.; Calore, E. A survey on deep learning hardware accelerators for heterogeneous HPC platforms. arXiv 2023, arXiv:2306.15552. [Google Scholar]

- Roddick, T. Learning Birds-Eye View Representations for Autonomous Driving; University of Cambridge: Cambridge, UK, 2021. [Google Scholar]

- Nabavinejad, S.M.; Baharloo, M.; Chen, K.-C.; Palesi, M.; Kogel, T.; Ebrahimi, M. An overview of efficient interconnection networks for deep neural network accelerators. IEEE J. Emerg. Sel. Top. Circuits Syst. 2020, 10, 268–282. [Google Scholar] [CrossRef]

- Wang, E.; Davis, J.J.; Zhao, R.; Ng, H.-C.; Niu, X.; Luk, W.; Cheung, P.Y.; Constantinides, G.A. Deep neural network approximation for custom hardware: Where we’ve been, where we’re going. ACM Comput. Surv. 2019, 52, 1–39. [Google Scholar] [CrossRef]

- Reuther, A.; Michaleas, P.; Jones, M.; Gadepally, V.; Samsi, S.; Kepner, J. Survey and benchmarking of machine learning accelerators. In Proceedings of the 2019 IEEE High Performance Extreme Computing Conference (HPEC), Westin Hotel, Waltham, MA, USA, 24–26 September 2019; pp. 1–9. [Google Scholar]

- Dong, S.; Wang, P.; Abbas, K. A survey on deep learning and its applications. Comput. Sci. Rev. 2021, 40, 100379. [Google Scholar] [CrossRef]

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53. [Google Scholar] [CrossRef] [PubMed]

- Subramanian, B.; Maurya, M.; Puranik, V.G.; Kumar, A.S. Toward Artificial General Intelligence: Deep Learning, Neural Networks, Generative AI; De Gruyter: Berlin, Germany, 2023. [Google Scholar]

- Liu, H.; Gegov, A.; Stahl, F. Categorization and construction of rule based systems. In Proceedings of the Engineering Applications of Neural Networks: 15th International Conference, EANN 2014, Proceedings 15, Sofia, Bulgaria, 5–7 September 2014; pp. 183–194. [Google Scholar]

- Alty, J.; Guida, G. The use of rule-based system technology for the design of man-machine systems. IFAC Proc. Vol. 1985, 18, 21–41. [Google Scholar] [CrossRef]

- Vilone, G.; Longo, L. A quantitative evaluation of global, rule-based explanations of post-hoc, model agnostic methods. Front. Artif. Intell. 2021, 4, 717899. [Google Scholar] [CrossRef]

- Tufail, S.; Riggs, H.; Tariq, M.; Sarwat, A.I. Advancements and Challenges in Machine Learning: A Comprehensive Review of Models, Libraries, Applications, and Algorithms. Electronics 2023, 12, 1789. [Google Scholar] [CrossRef]

- Zhang, X.-Y.; Liu, C.-L.; Suen, C.Y. Towards robust pattern recognition: A review. Proc. IEEE 2020, 108, 894–922. [Google Scholar] [CrossRef]

- Fink, O.; Wang, Q.; Svensen, M.; Dersin, P.; Lee, W.-J.; Ducoffe, M. Potential, challenges and future directions for deep learning in prognostics and health management applications. Eng. Appl. Artif. Intell. 2020, 92, 103678. [Google Scholar] [CrossRef]

- Ching, T.; Himmelstein, D.S.; Beaulieu-Jones, B.K.; Kalinin, A.A.; Do, B.T.; Way, G.P.; Ferrero, E.; Agapow, P.-M.; Zietz, M.; Hoffman, M.M. Opportunities and obstacles for deep learning in biology and medicine. J. R. Soc. Interface 2018, 15, 20170387. [Google Scholar] [CrossRef] [PubMed]

- Hermann, E.; Hermann, G. Artificial intelligence in research and development for sustainability: The centrality of explicability and research data management. AI Ethics 2022, 2, 29–33. [Google Scholar] [CrossRef]

- Collins, C.; Dennehy, D.; Conboy, K.; Mikalef, P. Artificial intelligence in information systems research: A systematic literature review and research agenda. Int. J. Inf. Manag. 2021, 60, 102383. [Google Scholar] [CrossRef]

- National Academies of Sciences, Engineering, and Medicine (NASEM). Machine Learning and Artificial Intelligence to Advance Earth System Science: Opportunities and Challenges: Proceedings of a Workshop; The National Academies Press: Washington, DC, USA, 2022. [Google Scholar]

- Soori, M.; Arezoo, B.; Dastres, R. Artificial intelligence, machine learning and deep learning in advanced robotics, A review. Cogn. Robot. 2023, 3, 54–70. [Google Scholar] [CrossRef]

- Sivamayil, K.; Rajasekar, E.; Aljafari, B.; Nikolovski, S.; Vairavasundaram, S.; Vairavasundaram, I. A systematic study on reinforcement learning based applications. Energies 2023, 16, 1512. [Google Scholar] [CrossRef]

- Botvinick, M.; Ritter, S.; Wang, J.X.; Kurth-Nelson, Z.; Blundell, C.; Hassabis, D. Reinforcement learning, fast and slow. Trends Cogn. Sci. 2019, 23, 408–422. [Google Scholar] [CrossRef] [PubMed]

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018. [Google Scholar]

- Peters, J.; Schaal, S. Reinforcement learning of motor skills with policy gradients. Neural Netw. 2008, 21, 682–697. [Google Scholar] [CrossRef]

- Endo, G.; Morimoto, J.; Matsubara, T.; Nakanishi, J.; Cheng, G. Learning CPG-based biped locomotion with a policy gradient method: Application to a humanoid robot. Int. J. Robot. Res. 2008, 27, 213–228. [Google Scholar] [CrossRef]

- Yi, F.; Fu, W.; Liang, H. Model-based reinforcement learning: A survey. arXiv 2018, arXiv:2006.16712v4. [Google Scholar]

- Kumar, D.P.; Amgoth, T.; Annavarapu, C.S.R. Machine learning algorithms for wireless sensor networks: A survey. Inf. Fusion 2019, 49, 1–25. [Google Scholar] [CrossRef]

- Ravishankar, N.; Vijayakumar, M. Reinforcement learning algorithms: Survey and classification. Indian J. Sci. Technol. 2017, 10, 1–8. [Google Scholar] [CrossRef]

- Van Otterlo, M.; Wiering, M. Reinforcement learning and Markov decision processes. In Reinforcement Learning: State-of-the-Art; Springer: Berlin, Germany, 2012; pp. 3–42. [Google Scholar]

- Tanveer, J.; Haider, A.; Ali, R.; Kim, A. Reinforcement Learning-Based Optimization for Drone Mobility in 5G and Beyond Ultra-Dense Networks. Comput. Mater. Contin. 2021, 68, 3807–3823. [Google Scholar] [CrossRef]

- Wei, Q.; Yang, Z.; Su, H.; Wang, L. Monte Carlo-based reinforcement learning control for unmanned aerial vehicle systems. Neurocomputing 2022, 507, 282–291. [Google Scholar] [CrossRef]

- Wang, Q.; Hao, Y.; Cao, J. Learning to traverse over graphs with a Monte Carlo tree search-based self-play framework. Eng. Appl. Artif. Intell. 2021, 105, 104422. [Google Scholar] [CrossRef]

- Maoudj, A.; Hentout, A. Optimal path planning approach based on Q-learning algorithm for mobile robots. Appl. Soft Comput. 2020, 97, 106796. [Google Scholar] [CrossRef]

- Intayoad, W.; Kamyod, C.; Temdee, P. Reinforcement learning based on contextual bandits for personalized online learning recommendation systems. Wirel. Pers. Commun. 2020, 115, 2917–2932. [Google Scholar] [CrossRef]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27. Available online: https://papers.nips.cc/paper_files/paper/2014/hash/5ca3e9b122f61f8f06494c97b1afccf3-Abstract.html (accessed on 17 December 2023).

- Jin, L.; Tan, F.; Jiang, S. Generative adversarial network technologies and applications in computer vision. Comput. Intell. Neurosci. 2020. [Google Scholar] [CrossRef]

- Gu, Y.; Ge, Z.; Bonnington, C.P.; Zhou, J. Progressive transfer learning and adversarial domain adaptation for cross-domain skin disease classification. IEEE J. Biomed. Health Inform. 2019, 24, 1379–1393. [Google Scholar] [CrossRef]

- Muller, B.; Al-Sahaf, H.; Xue, B.; Zhang, M. Transfer learning: A building block selection mechanism in genetic programming for symbolic regression. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, Prague, Czech Republic, 13–17 July 2019; pp. 350–351. [Google Scholar]

- Agarwal, N.; Sondhi, A.; Chopra, K.; Singh, G. Transfer learning: Survey and classification. Smart Innovations in Communication and Computational Sciences: Proceedings of ICSICCS 2020. Adv. Intell. Syst. Comput. 2021, 1168, 145–155. [Google Scholar]

- Khan, R.M. A Review Study on Neuro Evolution. Int. J. Sci. Res. Eng. Trends 2021, 7, 181–185. [Google Scholar]

- Galván, E.; Mooney, P. Neuroevolution in deep neural networks: Current trends and future challenges. IEEE Trans. Artif. Intell. 2021, 2, 476–493. [Google Scholar] [CrossRef]

- Pontes-Filho, S.; Olsen, K.; Yazidi, A.; Riegler, M.A.; Halvorsen, P.; Nichele, S. Towards the Neuroevolution of Low-level artificial general intelligence. Front. Robot. AI 2022, 9, 1007547. [Google Scholar] [CrossRef] [PubMed]

- Doran, D.; Schulz, S.; Besold, T.R. What does explainable AI really mean? A new conceptualization of perspectives. arXiv 2017, arXiv:1710.00794. [Google Scholar]

- Gunning, D.; Aha, D. DARPA’s explainable artificial intelligence (XAI) program. AI Mag. 2019, 40, 44–58. [Google Scholar]

- Chaddad, A.; Peng, J.; Xu, J.; Bouridane, A. Survey of explainable AI techniques in healthcare. Sensors 2023, 23, 634. [Google Scholar] [CrossRef] [PubMed]

- Loh, H.W.; Ooi, C.P.; Seoni, S.; Barua, P.D.; Molinari, F.; Acharya, U.R. Application of explainable artificial intelligence for healthcare: A systematic review of the last decade (2011–2022). Comput. Methods Programs Biomed. 2022, 107161. [Google Scholar] [CrossRef]

- Sadeghi, Z.; Alizadehsani, R.; Cifci, M.A.; Kausar, S.; Rehman, R.; Mahanta, P.; Bora, P.K.; Almasri, A.; Alkhawaldeh, R.S.; Hussain, S. A Brief Review of Explainable Artificial Intelligence in Healthcare. arXiv 2023, arXiv:2304.01543. [Google Scholar]

- Band, S.S.; Yarahmadi, A.; Hsu, C.-C.; Biyari, M.; Sookhak, M.; Ameri, R.; Dehzangi, I.; Chronopoulos, A.T.; Liang, H.-W. Application of explainable artificial intelligence in medical health: A systematic review of interpretability methods. Inform. Med. Unlocked 2023, 226, 101286. [Google Scholar] [CrossRef]

- Di Martino, F.; Delmastro, F. Explainable AI for clinical and remote health applications: A survey on tabular and time series data. Artif. Intell. Rev. 2023, 56, 5261–5315. [Google Scholar] [CrossRef]

- Glisic, S.G.; Lorenzo, B. Artificial Intelligence and Quantum Computing for Advanced Wireless Networks; John Wiley & Sons: Hoboken, NJ, USA, 2022. [Google Scholar]

- Marella, S.T.; Parisa, H.S.K. Introduction to quantum computing. Quantum Comput. Commun. 2020. [Google Scholar] [CrossRef]

- Sgarbas, K.N. The road to quantum artificial intelligence. arXiv 2007, arXiv:0705.3360. [Google Scholar]

- Zeguendry, A.; Jarir, Z.; Quafafou, M. Quantum machine learning: A review and case studies. Entropy 2023, 25, 287. [Google Scholar] [CrossRef] [PubMed]

- Krenn, M.; Landgraf, J.; Foesel, T.; Marquardt, F. Artificial intelligence and machine learning for quantum technologies. Phys. Rev. A 2023, 107, 010101. [Google Scholar] [CrossRef]

- Kaelbling, L.P.; Littman, M.L.; Moore, A.W. Reinforcement learning: A survey. J. Artif. Intell. Res. 1996, 4, 237–285. [Google Scholar] [CrossRef]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533. [Google Scholar] [CrossRef] [PubMed]

- Levine, S.; Pastor, P.; Krizhevsky, A.; Ibarz, J.; Quillen, D. Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection. Int. J. Robot. Res. 2018, 37, 421–436. [Google Scholar] [CrossRef]

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434. [Google Scholar]

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134. [Google Scholar]

- Brock, A.; Donahue, J.; Simonyan, K. Large scale GAN training for high fidelity natural image synthesis. arXiv 2018, arXiv:1809.11096. [Google Scholar]

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359. [Google Scholar] [CrossRef]

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? Adv. Neural Inf. Process. Syst. 2014, 27, 3320–3328. [Google Scholar]

- Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A survey on deep transfer learning. In Proceedings of the Artificial Neural Networks and Machine Learning–ICANN 2018: 27th International Conference on Artificial Neural Networks, Proceedings, Part III 27, Rhodes, Greece, 4–7 October 2018; pp. 270–279. [Google Scholar]

- Oquab, M.; Bottou, L.; Laptev, I.; Sivic, J. Learning and transferring mid-level image representations using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1717–1724. [Google Scholar]

- Stanley, K.O.; Miikkulainen, R. Evolving neural networks through augmenting topologies. Evol. Comput. 2002, 10, 99–127. [Google Scholar] [CrossRef] [PubMed]

- Real, E.; Moore, S.; Selle, A.; Saxena, S.; Suematsu, Y.L.; Tan, J.; Le, Q.V.; Kurakin, A. Large-scale evolution of image classifiers. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 2902–2911. [Google Scholar]

- Clune, J.; Stanley, K.O.; Pennock, R.T.; Ofria, C. On the performance of indirect encoding across the continuum of regularity. IEEE Trans. Evol. Comput. 2011, 15, 346–367. [Google Scholar] [CrossRef]

- Mouret, J.-B.; Clune, J. Illuminating search spaces by mapping elites. arXiv 2015, arXiv:1504.04909. [Google Scholar]

- Lipton, Z.C. The mythos of model interpretability: In machine learning, the concept of interpretability is both important and slippery. Queue 2018, 16, 31–57. [Google Scholar] [CrossRef]

- Caruana, R.; Lou, Y.; Gehrke, J.; Koch, P.; Sturm, M.; Elhadad, N. Intelligible models for healthcare: Predicting pneumonia risk and hospital 30-day readmission. In Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Sydney, Australia, 10–13 August 2015; pp. 1721–1730. [Google Scholar]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144. [Google Scholar]

- Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2018, 2, 79. [Google Scholar] [CrossRef]

- McArdle, S.; Endo, S.; Aspuru-Guzik, A.; Benjamin, S.C.; Yuan, X. Quantum computational chemistry. Rev. Mod. Phys. 2020, 92, 015003. [Google Scholar] [CrossRef]

- Childs, A.M.; Gosset, D.; Webb, Z. Universal computation by multiparticle quantum walk. Science 2013, 339, 791–794. [Google Scholar] [CrossRef]

- Kandala, A.; Mezzacapo, A.; Temme, K.; Takita, M.; Brink, M.; Chow, J.M.; Gambetta, J.M. Hardware-efficient variational quantum eigensolver for small molecules and quantum magnets. Nature 2017, 549, 242–246. [Google Scholar] [CrossRef]

- Jobin, A.; Ienca, M.; Vayena, E. The global landscape of AI ethics guidelines. Nat. Mach. Intell. 2019, 1, 389–399. [Google Scholar] [CrossRef]

- Etzioni, A.; Etzioni, O. Incorporating ethics into artificial intelligence. J. Ethics 2017, 21, 403–418. [Google Scholar] [CrossRef]

- Belenguer, L. AI bias: Exploring discriminatory algorithmic decision-making models and the application of possible machine-centric solutions adapted from the pharmaceutical industry. AI Ethics 2022, 2, 771–787. [Google Scholar] [CrossRef] [PubMed]

- Fletcher, R.R.; Nakeshimana, A.; Olubeko, O. Addressing fairness, bias, and appropriate use of artificial intelligence and machine learning in global health. Front. Artif. Intell. 2021, 3, 561802. [Google Scholar] [CrossRef] [PubMed]

- Bartneck, C.; Lütge, C.; Wagner, A.; Welsh, S. Privacy issues of AI. Introd. Ethics Robot. AI 2021, 61–70. [Google Scholar] [CrossRef]

- Naik, N.; Hameed, B.; Shetty, D.K.; Swain, D.; Shah, M.; Paul, R.; Aggarwal, K.; Ibrahim, S.; Patil, V.; Smriti, K. Legal and ethical consideration in artificial intelligence in healthcare: Who takes responsibility? Front. Surg. 2022, 9, 266. [Google Scholar] [CrossRef]

- Chen, Z. Ethics and discrimination in artificial intelligence-enabled recruitment practices. Humanit. Soc. Sci. Commun. 2023, 10, 567. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).