The Detection and Counting of Olive Tree Fruits Using Deep Learning Models in Tacna, Perú

Abstract

1. Introduction

2. Literature Review

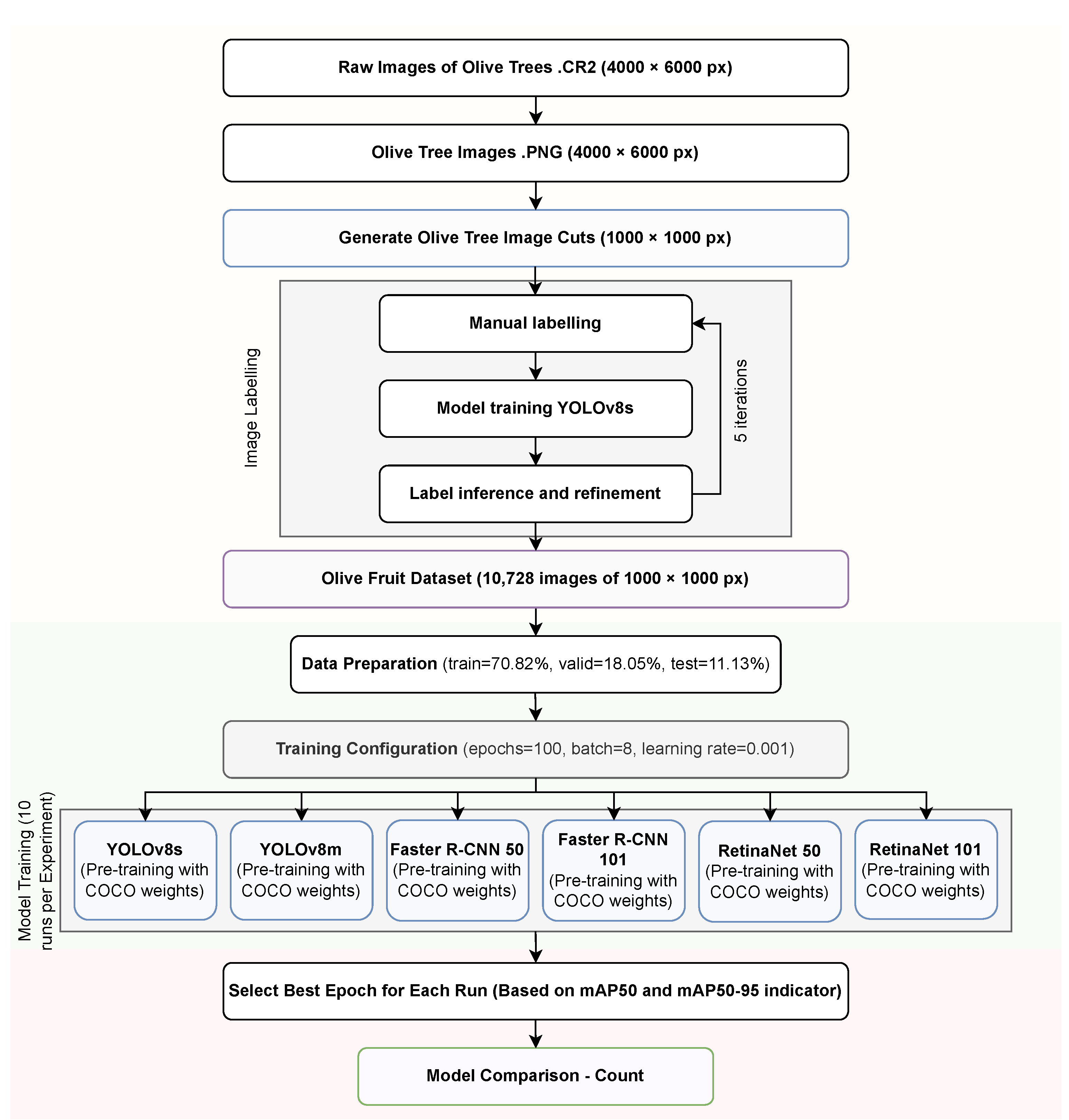

3. Materials and Methods

3.1. Detection Architectures
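
All three detector families compared here produce several overlapping candidate boxes per fruit and rely on non-maximum suppression (NMS) to keep one box per object. This matters directly for counting, because surviving duplicates inflate the count. The following is a minimal greedy NMS sketch in NumPy. The function name `nms` and the default IoU threshold of 0.5 are illustrative assumptions, not the settings used in this study.

```python
import numpy as np

def nms(boxes, scores, iou_thr=0.5):
    """Greedy non-maximum suppression.

    boxes: (N, 4) array of (x1, y1, x2, y2) with x2 > x1 and y2 > y1.
    scores: (N,) confidence scores.
    Returns the indices of the kept boxes, highest score first.
    """
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    x1, y1, x2, y2 = boxes.T
    areas = (x2 - x1) * (y2 - y1)
    order = np.argsort(-scores, kind="stable")  # best candidate first
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Overlap between the current best box and every remaining candidate
        ix1 = np.maximum(x1[i], x1[rest])
        iy1 = np.maximum(y1[i], y1[rest])
        ix2 = np.minimum(x2[i], x2[rest])
        iy2 = np.minimum(y2[i], y2[rest])
        inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
        iou = inter / (areas[i] + areas[rest] - inter)
        # Discard candidates that overlap the kept box too much
        order = rest[iou < iou_thr]
    return keep

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

The second box overlaps the first with IoU 81/119 ≈ 0.68 and is suppressed as a duplicate; the third, disjoint box survives.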

3.1.1. YOLOv8
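
YOLOv8 uses an anchor-free head: at each grid point it predicts the distances from that point to the left, top, right and bottom sides of the box, each as a discrete probability distribution trained with Distribution Focal Loss. The expected value of each distribution gives a distance in grid units, which is then scaled by the feature-map stride. The NumPy sketch below decodes a single prediction; the function names are hypothetical and `reg_max = 16` bins is assumed to match the Ultralytics default.

```python
import numpy as np

def dfl_expectation(logits):
    """logits: (4, reg_max) raw scores for the left, top, right, bottom sides.
    Returns the expected distance of each side in grid units."""
    logits = np.asarray(logits, dtype=float)
    z = logits - logits.max(axis=1, keepdims=True)  # numerically stable softmax
    p = np.exp(z)
    p /= p.sum(axis=1, keepdims=True)
    bins = np.arange(logits.shape[1])  # 0, 1, ..., reg_max - 1
    return p @ bins

def ltrb_to_xyxy(anchor_xy, ltrb, stride):
    """Convert distances measured from an anchor point into an (x1, y1, x2, y2) box in pixels."""
    ax, ay = anchor_xy
    left, top, right, bottom = ltrb
    return np.array([ax - left, ay - top, ax + right, ay + bottom]) * stride

reg_max = 16
logits = np.zeros((4, reg_max))
logits[:, 3] = 100.0  # a very confident "distance = 3 cells" on every side
dist = dfl_expectation(logits)
box = ltrb_to_xyxy((10.5, 20.5), dist, stride=8)
print(box)
```

With a flat (uninformative) distribution the expectation falls to the middle of the bin range, which is why the head must learn peaked distributions to localise small fruits precisely.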

3.1.2. Faster R-CNN
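
Faster R-CNN's region proposal network scores a fixed set of reference boxes (anchors) centred at every feature-map location. The original paper uses three scales (128, 256 and 512 px) and three aspect ratios (1:2, 1:1 and 2:1), giving nine anchors per location. The sketch below generates them under that single-feature-map design; FPN-based Detectron2 configurations instead assign one anchor size per pyramid level, and the half-cell centre offset used here is an assumption. Function names are hypothetical.

```python
import numpy as np

def base_anchors(scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Anchors centred at (0, 0) as (x1, y1, x2, y2); ratio = height / width.
    Width and height are chosen so that every anchor of scale s has area s**2."""
    anchors = []
    for s in scales:
        for r in ratios:
            w = s / np.sqrt(r)
            h = s * np.sqrt(r)
            anchors.append([-w / 2, -h / 2, w / 2, h / 2])
    return np.array(anchors)

def grid_anchors(feat_h, feat_w, stride, base):
    """Replicate the base anchors at every feature-map cell, in image coordinates."""
    ys, xs = np.meshgrid(np.arange(feat_h), np.arange(feat_w), indexing="ij")
    cx = (xs.ravel() + 0.5) * stride  # assumption: anchors sit at cell centres
    cy = (ys.ravel() + 0.5) * stride
    shifts = np.stack([cx, cy, cx, cy], axis=1)  # (H*W, 4)
    return (shifts[:, None, :] + base[None, :, :]).reshape(-1, 4)

base = base_anchors()
anchors = grid_anchors(feat_h=2, feat_w=3, stride=16, base=base)
print(base.shape, anchors.shape)  # (9, 4) (54, 4)
```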

3.1.3. RetinaNet
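
RetinaNet is a one-stage detector whose key ingredient is the focal loss, FL(p_t) = −α_t (1 − p_t)^γ log(p_t), which down-weights the many easy background anchors so that training concentrates on hard examples. A NumPy sketch for binary labels, using the α = 0.25 and γ = 2 values reported by Lin et al. (the function name is hypothetical):

```python
import numpy as np

def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-7):
    """Binary focal loss per example.

    p: predicted probability of the positive class (olive fruit).
    y: 1 for foreground, 0 for background.
    """
    p = np.clip(np.asarray(p, dtype=float), eps, 1 - eps)
    y = np.asarray(y)
    p_t = np.where(y == 1, p, 1 - p)              # probability assigned to the true class
    alpha_t = np.where(y == 1, alpha, 1 - alpha)  # class-balancing weight
    return -alpha_t * (1 - p_t) ** gamma * np.log(p_t)

# An easy background anchor versus a hard one: the easy one contributes almost nothing.
easy, hard = focal_loss([0.01, 0.6], [0, 0])
print(easy, hard)
```

Setting γ = 0 recovers an α-weighted cross-entropy, which makes the effect of the modulating factor (1 − p_t)^γ easy to isolate.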

3.2. Tacna Olive Dataset

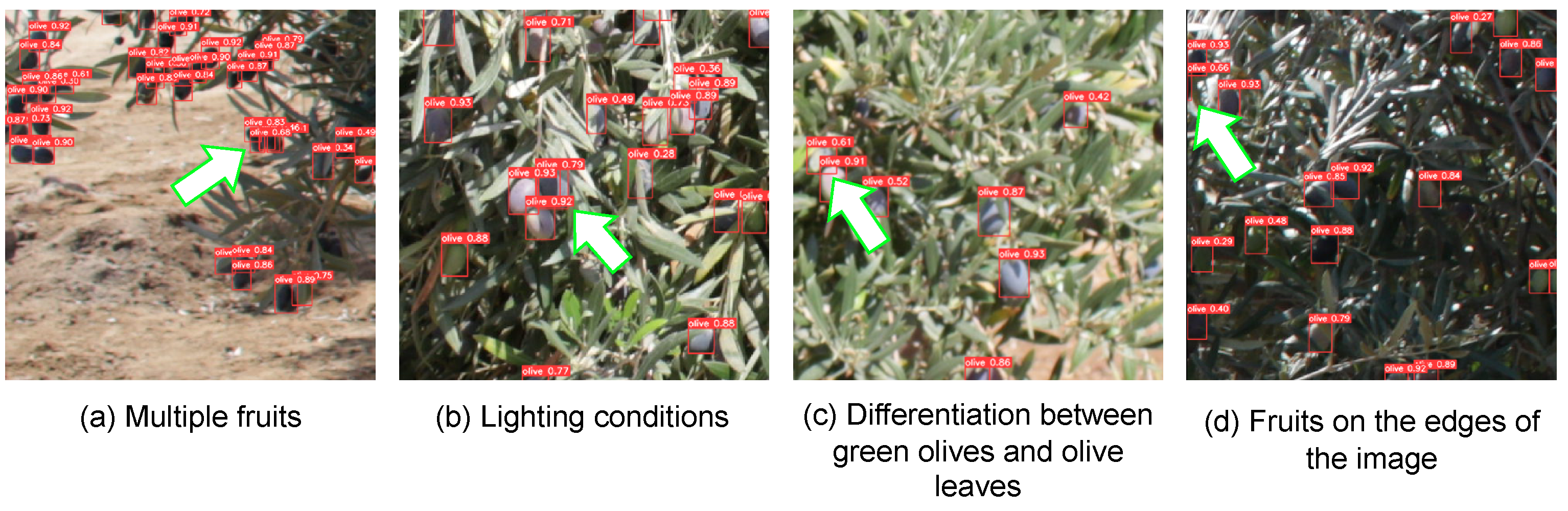

3.2.1. Data Acquisition

3.2.2. Data Generation
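
The figures in the dataset table are consistent with cutting each 6000 × 4000 px photograph into a non-overlapping 6 × 4 grid of 1000 × 1000 px crops: 503 × 24 = 12,072, which also equals 8549 + 2179 + 1344. Whether the crops overlap, and how border pixels were handled, is not stated here, so the following NumPy sketch (hypothetical function `tile_image`) assumes a plain non-overlapping grid.

```python
import numpy as np

def tile_image(img, tile=1000):
    """Split an image into non-overlapping tile x tile crops.

    Returns a list of ((x_offset, y_offset), crop) in row-major order.
    Any remainder narrower than `tile` at the right or bottom edge is dropped.
    """
    h, w = img.shape[:2]
    tiles = []
    for y in range(0, h - tile + 1, tile):
        for x in range(0, w - tile + 1, tile):
            tiles.append(((x, y), img[y:y + tile, x:x + tile]))
    return tiles

photo = np.zeros((4000, 6000), dtype=np.uint8)  # stand-in for one 6000 x 4000 px photo
crops = tile_image(photo)
print(len(crops), 503 * len(crops))  # 24 12072
print(8549 + 2179 + 1344)            # 12072
```

Keeping the crop offsets makes it possible to map crop-level detections back to full-image coordinates later.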

3.2.3. Data Labeling
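
The annotation tool and export format are not described in this section. As an assumption, the sketch below shows the two box conventions the compared frameworks typically consume: Ultralytics YOLO label files store one `class x_center y_center width height` line per box, normalised to [0, 1], while COCO-style JSON, commonly used with Detectron2, stores absolute `[x, y, width, height]` in pixels. The helper names and the class id 0 for the olive fruit are hypothetical.

```python
def xyxy_to_yolo(box, img_w, img_h):
    """(x1, y1, x2, y2) in pixels -> normalised (x_center, y_center, width, height)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2 / img_w, (y1 + y2) / 2 / img_h,
            (x2 - x1) / img_w, (y2 - y1) / img_h)

def yolo_to_coco(yolo_box, img_w, img_h):
    """Normalised (x_center, y_center, width, height) -> COCO [x, y, width, height] in pixels."""
    xc, yc, w, h = yolo_box
    bw, bh = w * img_w, h * img_h
    return [xc * img_w - bw / 2, yc * img_h - bh / 2, bw, bh]

def yolo_label_line(box, img_w, img_h, class_id=0):
    """One line of a YOLO .txt label file; class 0 = olive fruit (assumed)."""
    xc, yc, w, h = xyxy_to_yolo(box, img_w, img_h)
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"

# A 40 x 60 px olive inside a 1000 x 1000 px crop
line = yolo_label_line((100, 200, 140, 260), 1000, 1000)
print(line)  # 0 0.120000 0.230000 0.040000 0.060000
```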

3.3. Data Processing

3.3.1. Training

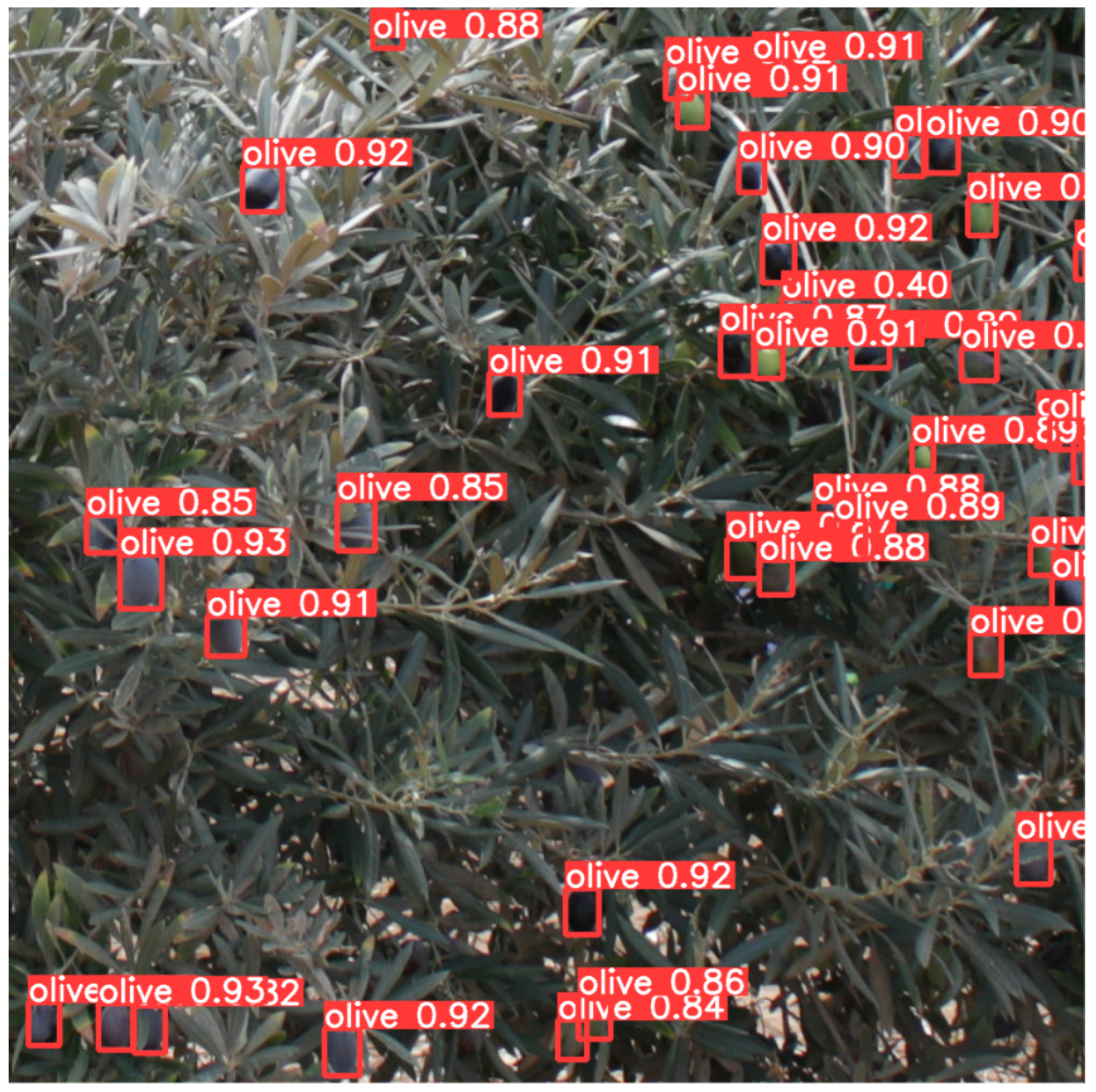

3.3.2. Counting
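
How detections are aggregated into a count per tree is not detailed in this section. One plausible scheme, sketched below under that assumption, keeps boxes whose confidence meets a threshold and tallies them per group key (for example, a tree or image identifier). The function name, the `(group_id, confidence)` input format and the 0.25 threshold are hypothetical; groups with no retained detection are simply absent from the result.

```python
from collections import defaultdict

def count_fruits(detections, conf_thresh=0.25):
    """detections: iterable of (group_id, confidence) pairs, one per predicted box."""
    counts = defaultdict(int)
    for group_id, conf in detections:
        if conf >= conf_thresh:  # ignore low-confidence boxes
            counts[group_id] += 1
    return dict(counts)

dets = [("tree_01", 0.91), ("tree_01", 0.12), ("tree_02", 0.55)]
print(count_fruits(dets))  # {'tree_01': 1, 'tree_02': 1}
```

The threshold trades missed fruits against false positives, so it directly affects the RMSE of the resulting counts.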

3.4. Evaluation Metrics

3.4.1. Intersection over Union (IoU)
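
IoU is the area of overlap between a predicted box A and a ground-truth box B divided by the area of their union, IoU = |A ∩ B| / |A ∪ B|. A minimal implementation for axis-aligned boxes given as `(x1, y1, x2, y2)` pixel coordinates (the coordinate convention is an assumption):

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

print(iou((0, 0, 10, 10), (5, 5, 15, 15)))  # 25 / 175, about 0.142857
```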

3.4.2. Mean Average Precision (mAP)
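
AP summarises the precision-recall curve obtained by ranking detections by confidence, and mAP is the mean of AP over classes. The sketch below follows COCO-style 101-point interpolation: precision is first made monotonically non-increasing, then sampled at recall 0, 0.01, ..., 1. The toolkits used in this study may differ in detail (for example, trapezoidal integration instead of point sampling), so treat it as an illustration rather than the exact evaluator. Inputs are hypothetical: one confidence score and one true-positive flag per detection, plus the number of ground-truth boxes (assumed greater than zero).

```python
import numpy as np

def average_precision(scores, is_tp, num_gt):
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    fp = 1.0 - tp
    tp_cum, fp_cum = np.cumsum(tp), np.cumsum(fp)
    recall = tp_cum / num_gt
    precision = tp_cum / (tp_cum + fp_cum)
    # Precision envelope: best precision achievable at this recall or higher
    precision = np.maximum.accumulate(precision[::-1])[::-1]
    recall_points = np.linspace(0.0, 1.0, 101)
    total = 0.0
    for r in recall_points:
        idx = np.searchsorted(recall, r, side="left")  # first rank reaching recall r
        total += precision[idx] if idx < len(precision) else 0.0
    return total / len(recall_points)

print(average_precision([0.9, 0.8], [1, 1], num_gt=2))  # 1.0
print(average_precision([0.9, 0.8], [0, 1], num_gt=1))  # 0.5
```

In the second call the highest-scoring box is a false positive, so precision never exceeds 0.5 and AP drops accordingly.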

3.4.3. mAP50
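
mAP50 counts a prediction as a true positive when its IoU with a still-unmatched ground-truth box is at least 0.50. Assuming a single olive-fruit class, mAP50 reduces to the AP of that class at this threshold. The greedy matching sketch below (hypothetical names; highest-confidence predictions claim ground-truth boxes first, in the spirit of the COCO protocol) produces the true-positive flags that an AP routine consumes.

```python
def box_iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def match_detections(pred_boxes, scores, gt_boxes, iou_thr=0.5):
    """Return a 0/1 true-positive flag for each prediction, in input order."""
    order = sorted(range(len(pred_boxes)), key=lambda i: -scores[i])
    matched = set()
    is_tp = [0] * len(pred_boxes)
    for i in order:
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gt_boxes):
            if j in matched:
                continue  # each ground-truth box can be matched only once
            overlap = box_iou(pred_boxes[i], gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
            is_tp[i] = 1
    return is_tp

gt = [(0, 0, 10, 10)]
preds = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
print(match_detections(preds, [0.9, 0.8, 0.7], gt))  # [1, 0, 0]
```

The near-duplicate second box is a false positive because the only ground-truth box has already been claimed.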

3.4.4. mAP50-95
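
mAP50-95, the primary COCO metric, averages AP over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, so it rewards tight localisation rather than mere overlap. The sketch assumes a caller-supplied function `ap_at(threshold)` (hypothetical) that returns AP at a given IoU threshold.

```python
import numpy as np

IOU_THRESHOLDS = np.linspace(0.50, 0.95, 10)  # 0.50, 0.55, ..., 0.95

def map50_95(ap_at):
    """Mean of AP evaluated at each IoU threshold."""
    return float(np.mean([ap_at(t) for t in IOU_THRESHOLDS]))

# Toy illustration: AP that degrades linearly as the IoU requirement tightens
print(map50_95(lambda t: 1.0 - t))  # about 0.275
```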

3.4.5. Root Mean Square Error (RMSE)
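
RMSE = sqrt((1/n) Σ (ŷ_i − y_i)²) measures the typical counting error in the same units as the counts, while R² = 1 − Σ (y_i − ŷ_i)² / Σ (y_i − ȳ)² compares the model with always predicting the mean count. The R² values in the counting table are assumed to follow this coefficient-of-determination definition, since it is the common definition that can become negative, which happens whenever the predictions are worse than the mean. A NumPy sketch with hypothetical toy counts:

```python
import numpy as np

def rmse(y_true, y_pred):
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_pred - y_true) ** 2)))

def r2(y_true, y_pred):
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)         # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # spread around the mean
    return float(1.0 - ss_res / ss_tot)

counts = [1, 2, 3]  # toy ground-truth counts, not data from this study
print(r2(counts, [1, 2, 3]), r2(counts, [2, 2, 2]), r2(counts, [3, 2, 1]))  # 1.0 0.0 -3.0
```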

4. Results

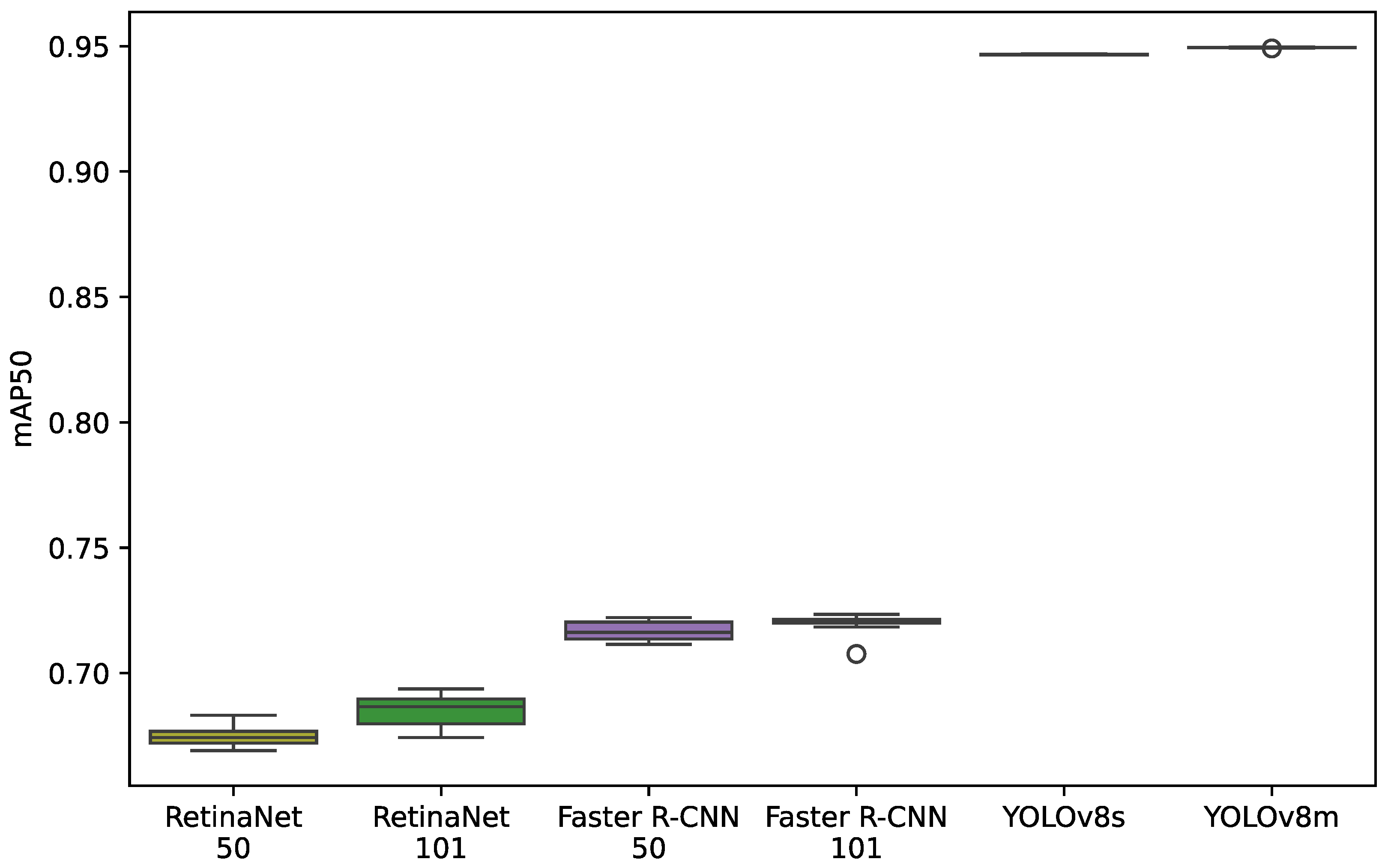

4.1. Model Results for the mAP50 Indicator

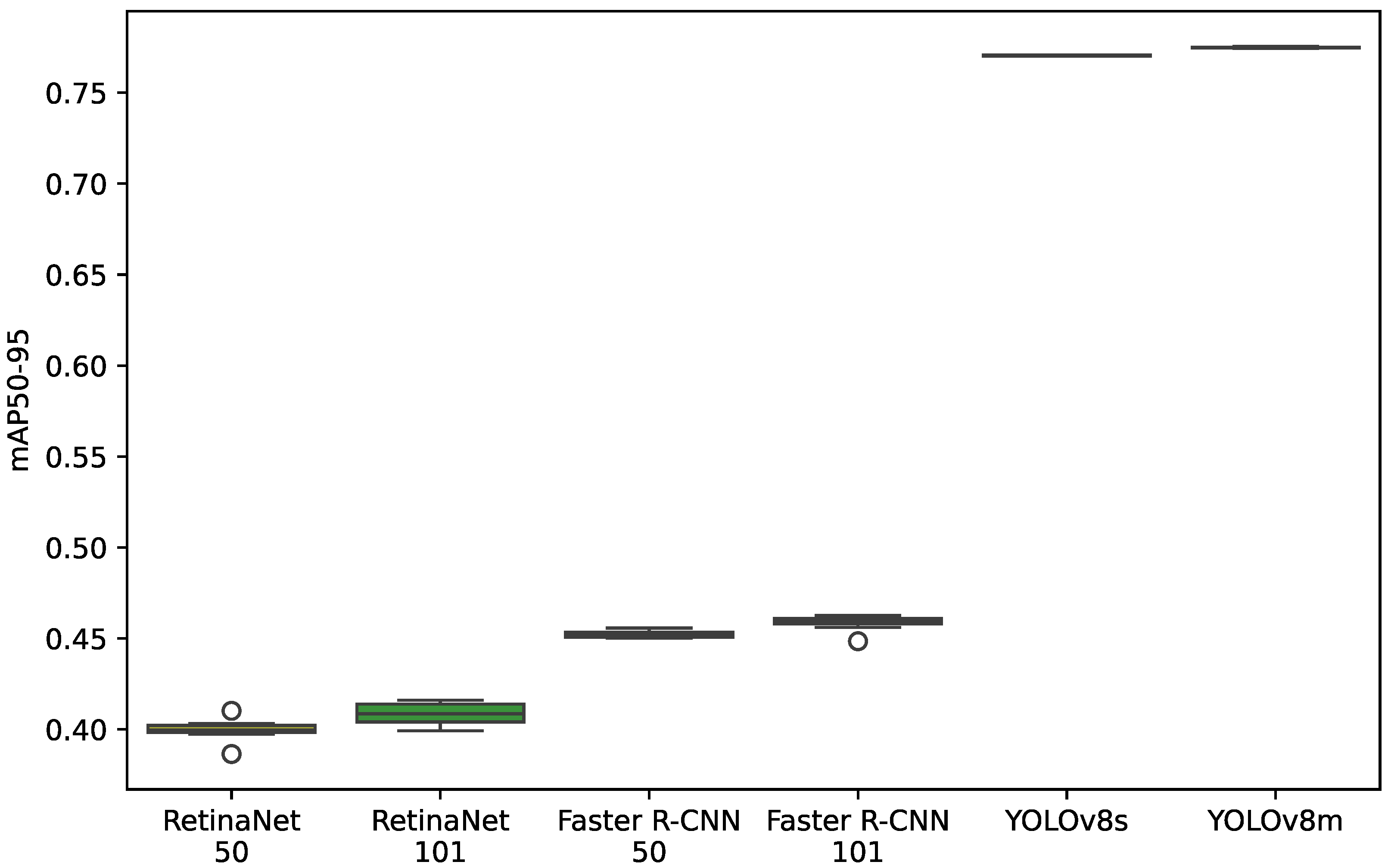

4.2. Model Results for the mAP50-95 Indicator

4.3. Model Results for the mAP50 Indicator on the Box Plot

4.4. Model Results for the mAP50-95 Indicator on the Box Plot

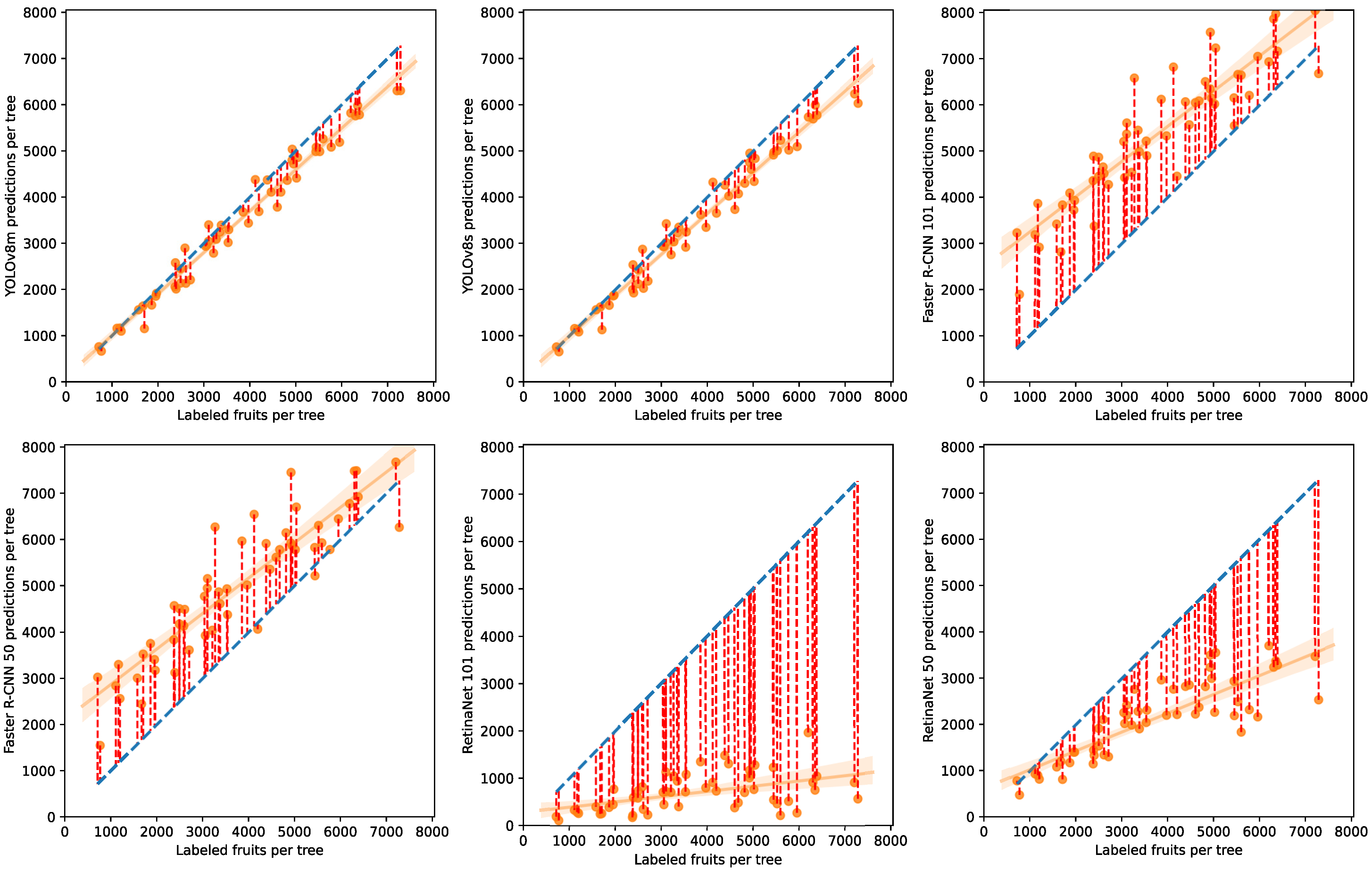

4.5. Model Comparison

5. Discussion

5.1. mAP50 Indicator

5.2. mAP50-95 Indicator

5.3. Olive Fruit Count

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Casanova Núñez Melgar, D.P. Guía Técnica del Cultivo de Olivo en la Región Tacna [Technical Guide to Olive Cultivation in the Tacna Region]; Instituto Nacional de Innovación Agraria: Lima, Peru, 2022.

- Dawson, D. El Niño Diezma la Cosecha de Aceituna Peruana [El Niño Decimates the Peruvian Olive Harvest]. Available online: https://es.oliveoiltimes.com/production/el-nino-decimates-peruvian-olive-harvest/127929 (accessed on 13 October 2024).

- Dirección Regional de Agricultura Tacna. Región Tacna: Serie Histórica Producción Agropecuaria 2014–2023 [Tacna Region: Historical Series of Agricultural Production, 2014–2023]. Available online: https://www.agritacna.gob.pe/direcciones/estadistica-agraria (accessed on 17 December 2024).

- de Freitas Cunha, R.L.; Silva, B. Estimating crop yields with remote sensing and deep learning. In Proceedings of the 2020 IEEE Latin American GRSS & ISPRS Remote Sensing Conference (LAGIRS), Santiago, Chile, 22–26 March 2020; pp. 273–278.

- Lin, Y.; Huang, Z.; Liang, Y.; Liu, Y.; Jiang, W. AG-YOLO: A Rapid Citrus Fruit Detection Algorithm with Global Context Fusion. Agriculture 2024, 14, 114.

- Li, G.; Huang, X.; Ai, J.; Yi, Z.; Xie, W. Lemon-YOLO: An efficient object detection method for lemons in the natural environment. IET Image Process. 2021, 15, 1998–2009.

- Hu, J.; Fan, C.; Wang, Z.; Ruan, J.; Wu, S. Fruit detection and counting in apple orchards based on improved Yolov7 and multi-Object tracking methods. Sensors 2023, 23, 5903.

- Yang, W.; Ma, X.; An, H. Blueberry Ripeness Detection Model Based on Enhanced Detail Feature and Content-Aware Reassembly. Agronomy 2023, 13, 1613.

- Kim, E.; Hong, S.J.; Kim, S.Y.; Lee, C.H.; Kim, S.; Kim, H.J.; Kim, G. CNN-based object detection and growth estimation of plum fruit (Prunus mume) using RGB and depth imaging techniques. Sci. Rep. 2022, 12, 20796.

- Wu, M.; Yun, L.; Xue, C.; Chen, Z.; Xia, Y. Walnut Recognition Method for UAV Remote Sensing Images. Agriculture 2024, 14, 646.

- Mu, Y.; Chen, T.S.; Ninomiya, S.; Guo, W. Intact detection of highly occluded immature tomatoes on plants using deep learning techniques. Sensors 2020, 20, 2984.

- Peng, J.; Ouyang, C.; Peng, H.; Hu, W.; Wang, Y.; Jiang, P. MultiFuseYOLO: Redefining Wine Grape Variety Recognition through Multisource Information Fusion. Sensors 2024, 24, 2953.

- Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

- Ulutas, E.G.; ALTIN, C. Kiwi Fruit Detection with Deep Learning Methods. J. Adv. Nat. Sci. Eng. Res. 2023, 7, 39–45.

- Kang, H.; Chen, C. Fruit detection, segmentation and 3D visualisation of environments in apple orchards. Comput. Electron. Agric. 2020, 171, 105302.

- Ponce, J.M.; Aquino, A.; Millán, B.; Andújar, J.M. Olive-fruit mass and size estimation using image analysis and feature modeling. Sensors 2018, 18, 2930.

- Ponce, J.M.; Aquino, A.; Millan, B.; Andújar, J.M. Automatic Counting and Individual Size and Mass Estimation of Olive-Fruits Through Computer Vision Techniques. IEEE Access 2019, 7, 59451–59465.

- Ortenzi, L.; Figorilli, S.; Costa, C.; Pallottino, F.; Violino, S.; Pagano, M.; Imperi, G.; Manganiello, R.; Lanza, B.; Antonucci, F. A machine vision rapid method to determine the ripeness degree of olive lots. Sensors 2021, 21, 2940.

- Bellocchio, E.; Ciarfuglia, T.A.; Costante, G.; Valigi, P. Weakly supervised fruit counting for yield estimation using spatial consistency. IEEE Robot. Autom. Lett. 2019, 4, 2348–2355.

- Murillo-Bracamontes, E.A.; Martinez-Rosas, M.E.; Miranda-Velasco, M.M.; Martinez-Reyes, H.L.; Martinez-Sandoval, J.R.; Cervantes-de Avila, H. Implementation of Hough transform for fruit image segmentation. Procedia Eng. 2012, 35, 230–239.

- Lin, G.; Tang, Y.; Zou, X.; Cheng, J.; Xiong, J. Fruit detection in natural environment using partial shape matching and probabilistic Hough transform. Precis. Agric. 2020, 21, 160–177.

- Sidehabi, S.W.; Suyuti, A.; Areni, I.S.; Nurtanio, I. Classification on passion fruit’s ripeness using K-means clustering and artificial neural network. In Proceedings of the 2018 International Conference on Information and Communications Technology (ICOIACT), Yogyakarta, Indonesia, 6–7 March 2018; pp. 304–309.

- Yu, Y.; Velastin, S.A.; Yin, F. Automatic grading of apples based on multi-features and weighted K-means clustering algorithm. Inf. Process. Agric. 2020, 7, 556–565.

- Bhargava, A.; Bansal, A. Fruits and vegetables quality evaluation using computer vision: A review. J. King Saud Univ. Comput. Inf. Sci. 2021, 33, 243–257.

- Vibhute, A.; Bodhe, S.K. Applications of image processing in agriculture: A survey. Int. J. Comput. Appl. 2012, 52, 34–40.

- Jafar, A.; Bibi, N.; Naqvi, R.A.; Sadeghi-Niaraki, A.; Jeong, D. Revolutionizing agriculture with artificial intelligence: Plant disease detection methods, applications, and their limitations. Front. Plant Sci. 2024, 15, 1356260.

- Mesías-Ruiz, G.A.; Peña, J.M.; de Castro, A.I.; Borra-Serrano, I.; Dorado, J. Cognitive Computing Advancements: Improving Precision Crop Protection through UAV Imagery for Targeted Weed Monitoring. Remote Sens. 2024, 16, 3026.

- Zhu, X.; Chen, F.; Zhang, X.; Zheng, Y.; Peng, X.; Chen, C. Detection the maturity of multi-cultivar olive fruit in orchard environments based on Olive-EfficientDet. Sci. Hortic. 2024, 324, 112607.

- Wang, W.; Shi, Y.; Liu, W.; Che, Z. An Unstructured Orchard Grape Detection Method Utilizing YOLOv5s. Agriculture 2024, 14, 262.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Fu, L.; Feng, Y.; Majeed, Y.; Zhang, X.; Zhang, J.; Karkee, M.; Zhang, Q. Kiwifruit detection in field images using Faster R-CNN with ZFNet. IFAC-PapersOnLine 2018, 51, 45–50.

- Zhou, B.; Wu, K.; Chen, M. Detection of Gannan Navel Orange Ripeness in Natural Environment Based on YOLOv5-NMM. Agronomy 2024, 14, 910.

- Jia, W.; Tian, Y.; Luo, R.; Zhang, Z.; Lian, J.; Zheng, Y. Detection and segmentation of overlapped fruits based on optimized mask R-CNN application in apple harvesting robot. Comput. Electron. Agric. 2020, 172, 105380.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Sozzi, M.; Cantalamessa, S.; Cogato, A.; Kayad, A.; Marinello, F. Automatic bunch detection in white grape varieties using YOLOv3, YOLOv4, and YOLOv5 deep learning algorithms. Agronomy 2022, 12, 319.

- Kuznetsova, A.; Maleva, T.; Soloviev, V. Using YOLOv3 algorithm with pre- and post-processing for apple detection in fruit-harvesting robot. Agronomy 2020, 10, 1016.

- Yang, G.; Wang, J.; Nie, Z.; Yang, H.; Yu, S. A lightweight YOLOv8 tomato detection algorithm combining feature enhancement and attention. Agronomy 2023, 13, 1824.

- Ma, L.; Zhao, L.; Wang, Z.; Zhang, J.; Chen, G. Detection and counting of small target apples under complicated environments by using improved YOLOv7-tiny. Agronomy 2023, 13, 1419.

- Xiao, B.; Nguyen, M.; Yan, W.Q. Fruit ripeness identification using YOLOv8 model. Multimed. Tools Appl. 2024, 83, 28039–28056.

- Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO. Available online: https://github.com/ultralytics/ultralytics (accessed on 13 October 2024).

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Chincholi, F.; Koestler, H. Detectron2 for lesion detection in diabetic retinopathy. Algorithms 2023, 16, 147.

- Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.Y.; Girshick, R. Detectron2. Available online: https://github.com/facebookresearch/detectron2 (accessed on 14 October 2024).

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Santos, A.; Marcato Junior, J.; de Andrade Silva, J.; Pereira, R.; Matos, D.; Menezes, G.; Higa, L.; Eltner, A.; Ramos, A.P.; Osco, L.; et al. Storm-drain and manhole detection using the RetinaNet method. Sensors 2020, 20, 4450.

- COCO Dataset. Detection Evaluation. Available online: https://cocodataset.org/#detection-eval (accessed on 13 October 2024).

- Padilla, R.; Netto, S.L.; Da Silva, E.A. A survey on performance metrics for object-detection algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niterói, Brazil, 1–3 July 2020; pp. 237–242.

- Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-time flying object detection with YOLOv8. arXiv 2023, arXiv:2305.09972.

- Chai, T.; Draxler, R.R. Root mean square error (RMSE) or mean absolute error (MAE)? Arguments against avoiding RMSE in the literature. Geosci. Model Dev. 2014, 7, 1247–1250.

- Aljaafreh, A.; Elzagzoug, E.Y.; Abukhait, J.; Soliman, A.H.; Alja’Afreh, S.S.; Sivanathan, A.; Hughes, J. A Real-Time Olive Fruit Detection for Harvesting Robot Based on YOLO Algorithms. Acta Technol. Agric. 2023, 26, 121–132.

| Model | Number of Parameters | COCO mAP50-95 |
|---|---|---|
| YOLOv8s | 11.2 M | 44.9 |
| YOLOv8m | 25.9 M | 50.2 |
| Faster R-CNN 50 | 42 M | 40.2 |
| Faster R-CNN 101 | 105 M | 43.0 |
| RetinaNet 50 | 38 M | 38.7 |
| RetinaNet 101 | 57 M | 40.4 |

| Number of Trees | Olive Tree Images | Image Crops | Image Size (px) | Image Crop Size (px) | Training Crops | Validation Crops | Test Crops |
|---|---|---|---|---|---|---|---|
| 62 | 503 | 12,072 | 6000 × 4000 | 1000 × 1000 | 8549 | 2179 | 1344 |

| Set | Metric | Statistic | YOLOv8m | YOLOv8s | Faster R-CNN 101 | Faster R-CNN 50 | RetinaNet 101 | RetinaNet 50 |
|---|---|---|---|---|---|---|---|---|
| Validation Set | mAP50 | Min ↑ | 0.94908 | 0.94642 | 0.70764 | 0.71145 | 0.67431 | 0.66907 |
| | | Max ↑ | 0.94960 | 0.94685 | 0.72343 | 0.72217 | 0.69367 | 0.68317 |
| | | Median ↑ | 0.94943 | 0.94656 | 0.72044 | 0.71628 | 0.68663 | 0.67432 |
| | | Mean ↑ | 0.94941 | 0.94660 | 0.71950 | 0.71662 | 0.68499 | 0.67460 |
| | | IQR ↓ | 0.00014 | 0.00017 | 0.00143 | 0.00672 | 0.00991 | 0.00475 |
| | mAP50-95 | Min ↑ | 0.77443 | 0.77001 | 0.44847 | 0.45016 | 0.39926 | 0.38647 |
| | | Max ↑ | 0.77533 | 0.77070 | 0.46274 | 0.45571 | 0.41604 | 0.41020 |
| | | Median ↑ | 0.77485 | 0.77030 | 0.45975 | 0.45217 | 0.40858 | 0.39963 |
| | | Mean ↑ | 0.77486 | 0.77035 | 0.45874 | 0.45238 | 0.40840 | 0.39980 |
| | | IQR ↓ | 0.00026 | 0.00050 | 0.00284 | 0.00270 | 0.00983 | 0.00374 |
| Test Set | mAP50 | Min ↑ | 0.98052 | 0.97302 | 0.74823 | 0.70900 | 0.65702 | 0.67012 |
| | | Max ↑ | 0.98273 | 0.97396 | 0.77196 | 0.74358 | 0.74416 | 0.71648 |
| | | Median ↑ | 0.98127 | 0.97321 | 0.75698 | 0.73403 | 0.72824 | 0.71083 |
| | | Mean ↑ | 0.98142 | 0.97328 | 0.75822 | 0.73230 | 0.72033 | 0.70649 |
| | | IQR ↓ | 0.00027 | 0.00027 | 0.00764 | 0.01577 | 0.00845 | 0.00843 |
| | mAP50-95 | Min ↑ | 0.95183 | 0.89984 | 0.47001 | 0.46071 | 0.35718 | 0.23806 |
| | | Max ↑ | 0.96060 | 0.90068 | 0.51599 | 0.49783 | 0.44312 | 0.42318 |
| | | Median ↑ | 0.95319 | 0.90021 | 0.49278 | 0.48431 | 0.41572 | 0.37883 |
| | | Mean ↑ | 0.95365 | 0.90023 | 0.49370 | 0.48224 | 0.40890 | 0.37242 |
| | | IQR ↓ | 0.00171 | 0.00029 | 0.01553 | 0.00940 | 0.04628 | 0.05260 |
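
The Min, Max, Median, Mean and IQR rows in the preceding table summarise a set of repeated runs for each model; the ANOVA degrees of freedom (5 and 54) are consistent with ten runs per model. The sketch below computes the same five statistics for one model. The quartile method is an assumption: NumPy's default linear interpolation can differ slightly from the hinges used by some box-plot software. The function name and the example values are hypothetical.

```python
import numpy as np

def run_summary(values):
    """Summary statistics of one model's scores over repeated runs."""
    v = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(v, [25, 75])  # NumPy default: linear interpolation
    return {
        "min": float(v.min()),
        "max": float(v.max()),
        "median": float(np.median(v)),
        "mean": float(v.mean()),
        "iqr": float(q3 - q1),  # lower is better: runs agree more closely
    }

print(run_summary([1, 2, 3, 4, 5]))
```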

| Model | Average Training Time (h) | Average Inference Time (ms) |
|---|---|---|
| YOLOv8m | 8.640 | 13.22 |
| YOLOv8s | 5.014 | 7.18 |
| Faster R-CNN 101 | 4.896 | 87.04 |
| Faster R-CNN 50 | 2.221 | 43.60 |
| RetinaNet 101 | 2.640 | 52.29 |
| RetinaNet 50 | 2.200 | 42.51 |

| Model | RMSE | R² |
|---|---|---|
| YOLOv8m | 402.46 | 0.94 |
| YOLOv8s | 452.98 | 0.93 |
| Faster R-CNN 101 | 1758.15 | −0.06 |
| Faster R-CNN 50 | 1435.63 | 0.29 |
| RetinaNet 101 | 3417.34 | −3.02 |
| RetinaNet 50 | 1942.45 | −0.30 |

| Indicator | Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|---|
| mAP50 | Between groups | 0.842 | 5 | 0.168 | 10,640.937 | 0.000 |
| | Within groups | 0.001 | 54 | 0.000 | | |
| | Total | 0.843 | 59 | | | |
| mAP50-95 | Between groups | 1.594 | 5 | 0.319 | 19,747.337 | 0.000 |
| | Within groups | 0.001 | 54 | 0.000 | | |
| | Total | 1.595 | 59 | | | |
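
The ANOVA compares the six models; its degrees of freedom (5 between groups, 54 within) correspond to six groups and 60 observations in total. Each mean square is a sum of squares divided by its degrees of freedom (for example, 0.842 / 5 ≈ 0.168), and F is the ratio of the between-group to the within-group mean square. The from-scratch one-way ANOVA below is a sketch with a hypothetical function name and toy data; `scipy.stats.f_oneway` returns the same F statistic together with its p-value.

```python
import numpy as np

def one_way_anova(groups):
    """Sums of squares, degrees of freedom, mean squares and F for a one-way ANOVA."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    all_values = np.concatenate(groups)
    grand_mean = all_values.mean()
    ss_between = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return {
        "ss_between": float(ss_between), "ss_within": float(ss_within),
        "df_between": df_between, "df_within": df_within,
        "ms_between": float(ms_between), "ms_within": float(ms_within),
        "F": float(ms_between / ms_within),
    }

print(one_way_anova([[1, 2, 3], [4, 5, 6]]))  # toy data: F = 13.5
```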

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Osco-Mamani, E.; Santana-Carbajal, O.; Chaparro-Cruz, I.; Ochoa-Donoso, D.; Alcazar-Alay, S. The Detection and Counting of Olive Tree Fruits Using Deep Learning Models in Tacna, Perú. AI 2025, 6, 25. https://doi.org/10.3390/ai6020025

Osco-Mamani E, Santana-Carbajal O, Chaparro-Cruz I, Ochoa-Donoso D, Alcazar-Alay S. The Detection and Counting of Olive Tree Fruits Using Deep Learning Models in Tacna, Perú. AI. 2025; 6(2):25. https://doi.org/10.3390/ai6020025

Chicago/Turabian Style: Osco-Mamani, Erbert, Oliver Santana-Carbajal, Israel Chaparro-Cruz, Daniel Ochoa-Donoso, and Sylvia Alcazar-Alay. 2025. "The Detection and Counting of Olive Tree Fruits Using Deep Learning Models in Tacna, Perú" AI 6, no. 2: 25. https://doi.org/10.3390/ai6020025

APA Style: Osco-Mamani, E., Santana-Carbajal, O., Chaparro-Cruz, I., Ochoa-Donoso, D., & Alcazar-Alay, S. (2025). The Detection and Counting of Olive Tree Fruits Using Deep Learning Models in Tacna, Perú. AI, 6(2), 25. https://doi.org/10.3390/ai6020025