Artificial Intelligence in Ovarian Cancer: A Systematic Review and Meta-Analysis of Predictive AI Models in Genomics, Radiomics, and Immunotherapy

Abstract

1. Introduction

2. Materials and Methods

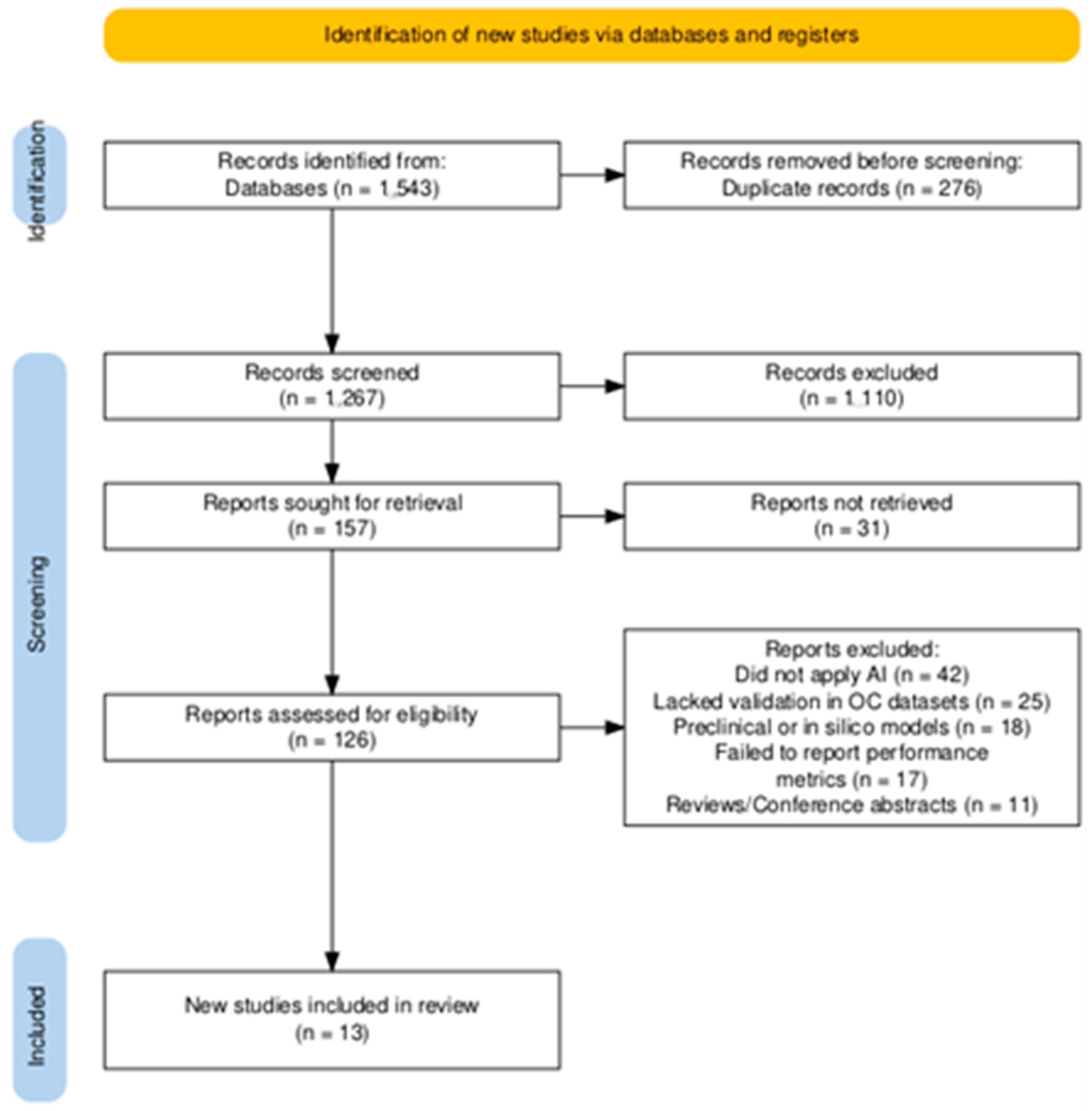

2.1. Search Strategy and Study Selection

2.2. Eligibility Criteria

2.3. Data Extraction and Synthesis

2.4. Meta-Analysis and Statistical Analysis
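
The reference list cites the DerSimonian–Laird random-effects method [12,13], and the analysis software cited is R [15]. The Python below is a minimal, independent sketch of that estimator, not the authors' code. It assumes each study contributes an AUC with a standard error and that AUCs are pooled on the logit scale. The logit transformation, the function names, and the example inputs are all illustrative assumptions; none of them are choices or data taken from this review. Cochran's Q and I² are the usual heterogeneity summaries reported alongside this estimator.

```python
import math


def logit(p):
    """Map a proportion in (0, 1), such as an AUC, to the log-odds scale."""
    return math.log(p / (1.0 - p))


def inv_logit(x):
    """Map a log-odds value back to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dersimonian_laird(effects, variances):
    """DerSimonian-Laird random-effects pooling.

    effects   -- per-study estimates on an additive scale (here: logit AUC)
    variances -- per-study within-study variances (squared standard errors)
    Returns (pooled effect, its SE, tau^2, Cochran's Q, I^2 in percent).
    """
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need >= 2 studies and one variance per study")
    # Inverse-variance (fixed-effect) weights and pooled mean
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    # Method-of-moments between-study variance, truncated at zero
    c = sum_w - sum(wi * wi for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)
    # Random-effects weights add tau^2 to every within-study variance
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    # I^2: share of total variability attributed to between-study heterogeneity
    i2 = 100.0 * max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, se, tau2, q, i2


if __name__ == "__main__":
    # Hypothetical AUCs and standard errors, for illustration only
    aucs = [0.70, 0.81, 0.78]
    auc_ses = [0.04, 0.03, 0.05]
    # Delta method: SE(logit AUC) is approximately SE(AUC) / (AUC * (1 - AUC))
    effects = [logit(a) for a in aucs]
    variances = [(s / (a * (1.0 - a))) ** 2 for a, s in zip(aucs, auc_ses)]
    pooled, se, tau2, q, i2 = dersimonian_laird(effects, variances)
    lo, hi = inv_logit(pooled - 1.96 * se), inv_logit(pooled + 1.96 * se)
    print(f"Pooled AUC {inv_logit(pooled):.3f} "
          f"(95% CI {lo:.3f} to {hi:.3f}); Q = {q:.2f}, I^2 = {i2:.1f}%")
```

Pooling on the logit scale keeps the back-transformed interval inside (0, 1). Pooling raw AUCs is a simpler alternative. An actual analysis would more typically use an established package, for example R's `metafor::rma(..., method = "DL")`.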

2.5. Risk of Bias Assessment

2.6. Publication Bias Assessment
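
Egger's regression test [18], which appears in the reference list, is a common way to assess publication bias. Below is a minimal sketch of it in plain Python, not the authors' implementation. It regresses each study's standardized effect (effect / SE) on its precision (1 / SE) and reports the intercept together with the intercept's standard error and t statistic. The function name and the example inputs are hypothetical. The two-sided p-value is deliberately left to a t-distribution routine, for example SciPy's `scipy.stats.t.sf`.

```python
import math


def egger_test(effects, std_errors):
    """Egger's regression test for funnel-plot asymmetry.

    Fits standardized effect (effect / SE) against precision (1 / SE) by
    ordinary least squares. An intercept far from zero suggests small-study
    effects, one possible sign of publication bias.
    Returns (intercept, SE of intercept, t statistic, degrees of freedom).
    """
    k = len(effects)
    if k < 3 or k != len(std_errors):
        raise ValueError("need >= 3 studies and one SE per study")
    x = [1.0 / s for s in std_errors]                  # precision
    z = [y / s for y, s in zip(effects, std_errors)]   # standardized effect
    x_bar, z_bar = sum(x) / k, sum(z) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("all SEs are equal, so the regression is undefined")
    sxz = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z))
    slope = sxz / sxx
    intercept = z_bar - slope * x_bar
    df = k - 2
    # Residual variance and the usual OLS standard error of the intercept
    ssr = sum((zi - intercept - slope * xi) ** 2 for xi, zi in zip(x, z))
    s2 = ssr / df
    se_intercept = math.sqrt(s2 * (1.0 / k + x_bar ** 2 / sxx))
    t = intercept / se_intercept if se_intercept > 0 else float("nan")
    return intercept, se_intercept, t, df


if __name__ == "__main__":
    # Hypothetical effect sizes and standard errors, for illustration only
    b0, se0, t, df = egger_test([1.0, 1.5, 0.5, 0.625], [1.0, 0.5, 0.25, 0.125])
    # Two-sided p-value, e.g. 2 * scipy.stats.t.sf(abs(t), df)
    print(f"intercept {b0:.3f} (SE {se0:.3f}), t = {t:.3f} on {df} df")
```

Cochrane guidance generally advises against funnel-plot asymmetry tests when there are fewer than 10 studies. The study summary table in the Results lists four or five studies per domain, so any such test at that scale has little power and should be interpreted cautiously.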

2.7. Assessment of Evidence Certainty

3. Results

3.1. Main Findings

3.1.1. AI in Genomic and Molecular Profiling

3.1.2. AI in Imaging and Radiomics for Predicting Response to Therapy

3.1.3. AI for Immunotherapy and Novel Targeted Treatments in Ovarian Cancer

3.1.4. Risk of Bias Assessment

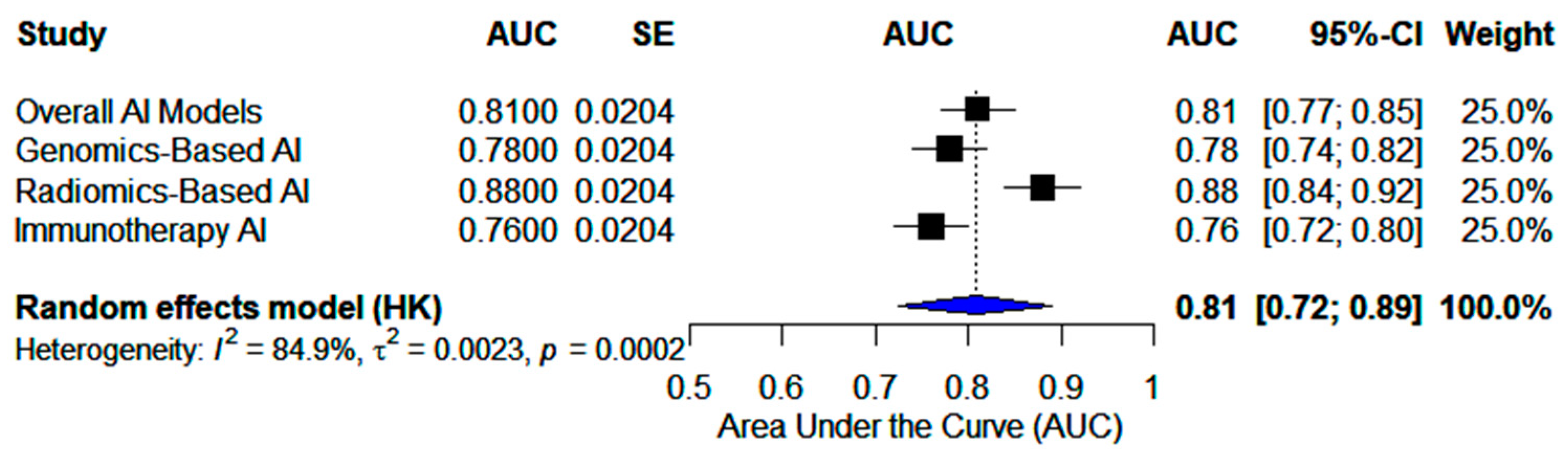

3.2. Meta-Analyses

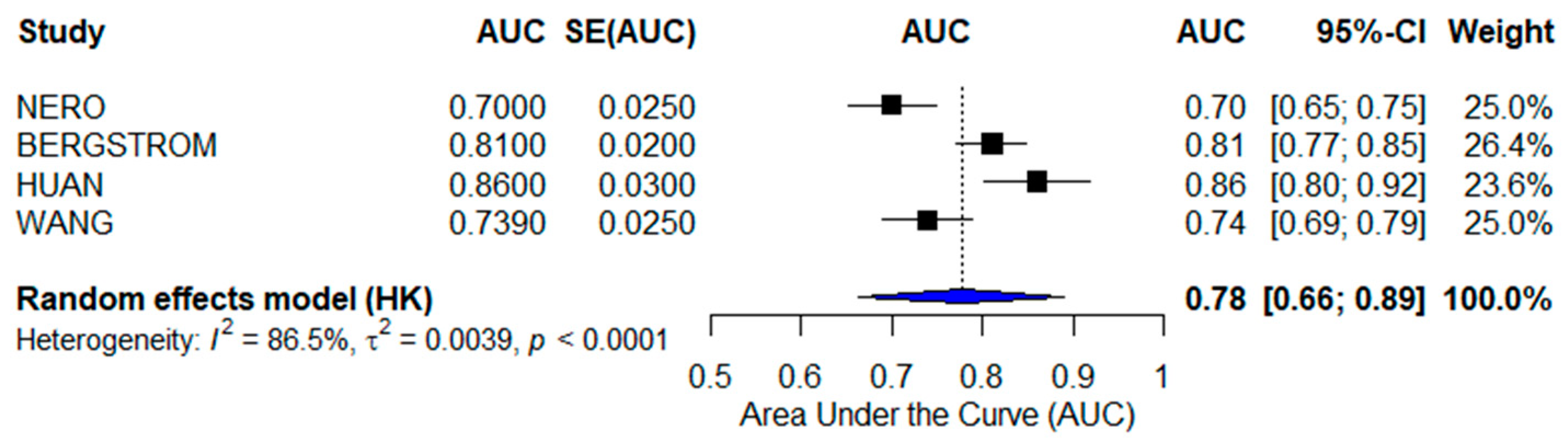

3.2.1. Genomics-Based AI Models

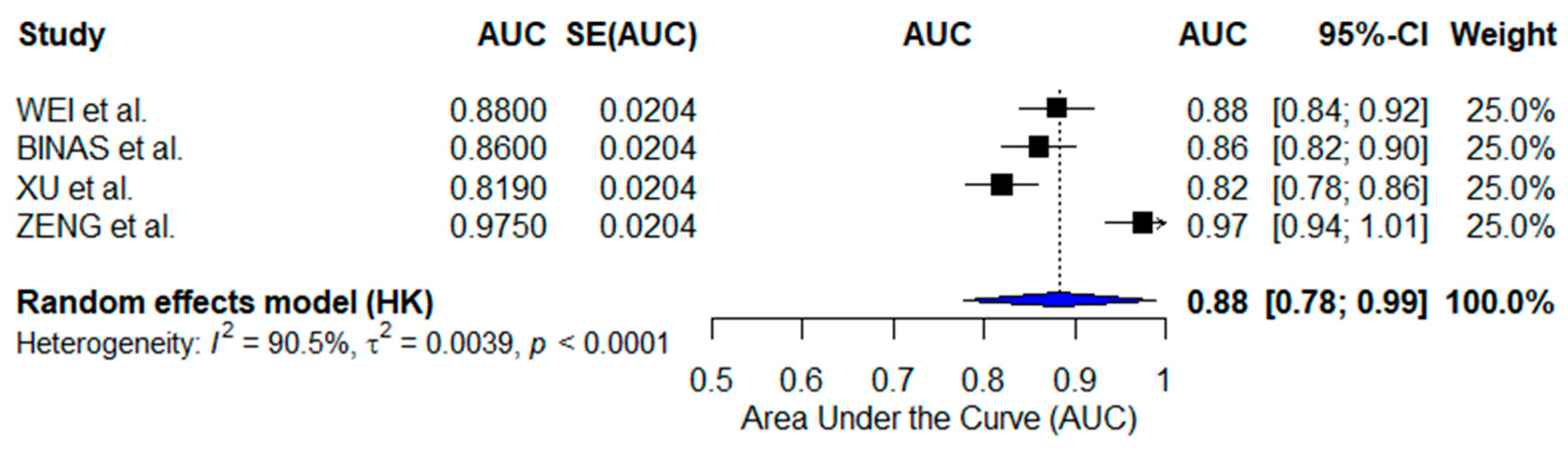

3.2.2. Radiomics-Based AI Models

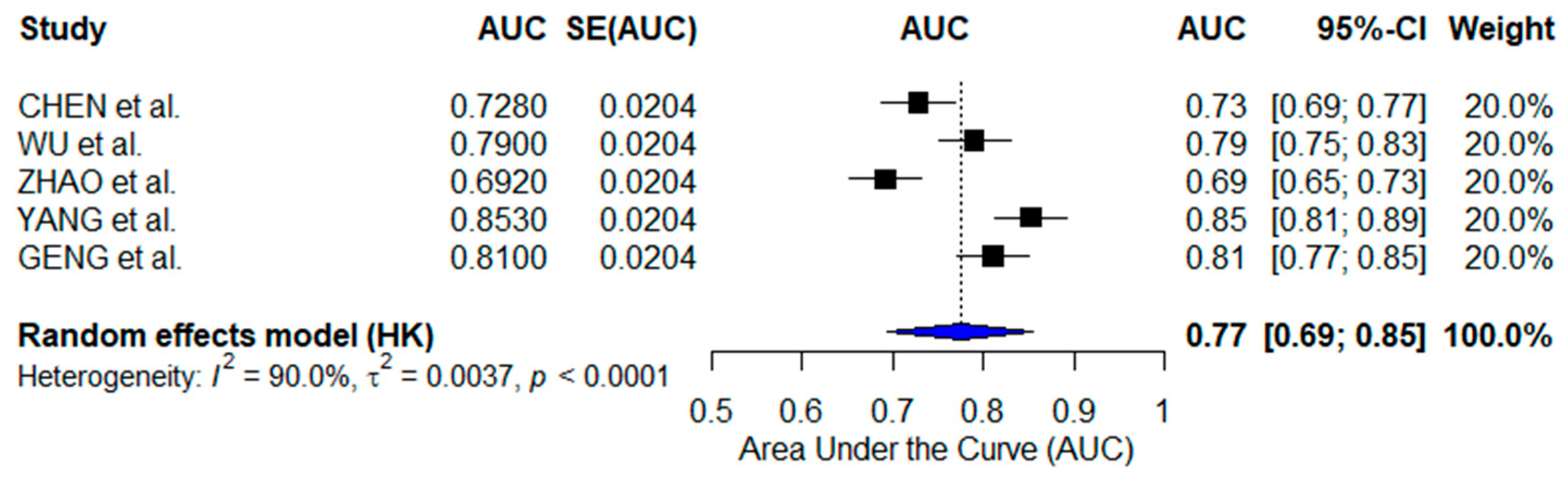

3.2.3. Immunotherapy-Focused AI Models

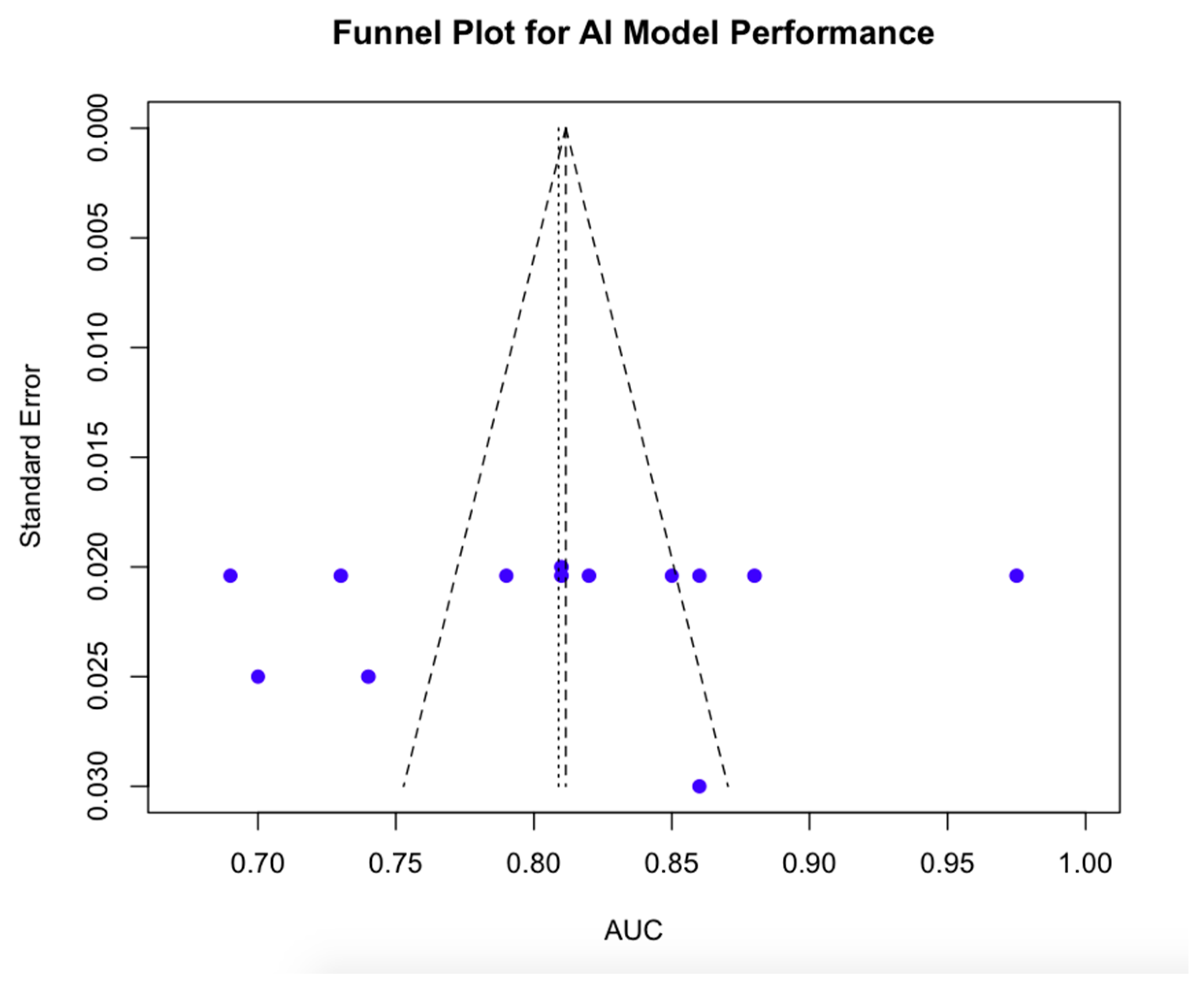

3.2.4. Publication Bias Findings

3.2.5. Evidence Certainty Assessment

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Webb, P.M.; Jordan, S.J. Global epidemiology of epithelial ovarian cancer. Nat. Rev. Clin. Oncol. 2024, 21, 389–400.

2. Siegel, R.L.; Miller, K.D.; Jemal, A. Cancer statistics, 2020. CA Cancer J. Clin. 2020, 70, 7–30.

3. Gao, Y.; Zhou, N.; Liu, J. Ovarian Cancer Diagnosis and Prognosis Based on Cell-Free DNA Methylation. Cancer Control 2024, 31, 10732748241255548.

4. Lin, A.; Xue, F.; Pan, C.; Li, L. Integrative prognostic modeling of ovarian cancer: Incorporating genetic, clinical, and immunological markers. Discov. Oncol. 2025, 16, 115.

5. Bajwa, J.; Munir, U.; Nori, A.; Williams, B. Artificial intelligence in healthcare: Transforming the practice of medicine. Future Healthc. J. 2021, 8, e188–e194.

6. Fanizzi, A.; Arezzo, F.; Cormio, G.; Comes, M.C.; Cazzato, G.; Boldrini, L.; Bove, S.; Bollino, M.; Kardhashi, A.; Silvestris, E.; et al. An Explainable Machine Learning Model to Solid Adnexal Masses Diagnosis Based on Clinical Data and Qualitative Ultrasound Indicators. Cancer Med. 2024, 13, e7425.

7. Duwe, G.; Mercier, D.; Wiesmann, C.; Kauth, V.; Moench, K.; Junker, M.; Neumann, C.C.M.; Haferkamp, A.; Dengel, A.; Höfner, T. Challenges and perspectives in the use of artificial intelligence to support treatment recommendations in clinical oncology. Cancer Med. 2024, 13, e7398.

8. Mysona, D.P.; Kapp, D.S.; Rohatgi, A.; Lee, D.; Mann, A.K.; Tran, P.; Tran, L.; She, J.X.; Chan, J.K. Applying Artificial Intelligence to Gynecologic Oncology: A Review. Obstet. Gynecol. Surv. 2021, 76, 292–301.

9. Erdemoglu, E.; Serel, T.A.; Karacan, E.; Köksal, O.K.; Turan, İ.; Öztürk, V.; Bozkurt, K.K. Artificial intelligence for prediction of endometrial intraepithelial neoplasia and endometrial cancer risks in pre- and postmenopausal women. AJOG Glob. Rep. 2023, 3, 100154.

10. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

11. Schardt, C.; Adams, M.B.; Owens, T.; Keitz, S.; Fontelo, P. Utilization of the PICO framework to improve searching PubMed for clinical questions. BMC Med. Inform. Decis. Mak. 2007, 7, 16.

12. DerSimonian, R.; Kacker, R. Random-effects model for meta-analysis of clinical trials: An update. Contemp. Clin. Trials 2007, 28, 105–114.

13. DerSimonian, R.; Laird, N. Meta-analysis in clinical trials. Control. Clin. Trials 1986, 7, 177–188.

14. Cochran, W.G. The comparison of percentages in matched samples. Biometrika 1950, 37, 256–266.

15. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021; Available online: https://www.R-project.org/ (accessed on 9 March 2025).

16. Collins, G.S.; Dhiman, P.; Andaur Navarro, C.L.; Ma, J.; Hooft, L.; Reitsma, J.B.; Logullo, P.; Beam, A.L.; Peng, L.; Van Calster, B.; et al. Protocol for development of a reporting guideline (TRIPOD-AI) and risk of bias tool (PROBAST-AI) for diagnostic and prognostic prediction model studies based on artificial intelligence. BMJ Open 2021, 11, e048008.

17. Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.; Sterne, J.A.; Bossuyt, P.M. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536.

18. Egger, M.; Davey Smith, G.; Schneider, M.; Minder, C. Bias in meta-analysis detected by a simple, graphical test. BMJ 1997, 315, 629–634.

19. Guyatt, G.H.; Ebrahim, S.; Alonso-Coello, P.; Johnston, B.C.; Mathioudakis, A.G.; Briel, M.; Mustafa, R.A.; Sun, X.; Walter, S.D.; Heels-Ansdell, D.; et al. GRADE guidelines 17: Assessing the risk of bias associated with missing participant outcome data in a body of evidence. J. Clin. Epidemiol. 2017, 87, 14–22.

20. Nero, C.; Boldrini, L.; Lenkowicz, J.; Giudice, M.T.; Piermattei, A.; Inzani, F.; Pasciuto, T.; Minucci, A.; Fagotti, A.; Zannoni, G.; et al. Deep-Learning to Predict BRCA Mutation and Survival from Digital H&E Slides of Epithelial Ovarian Cancer. Int. J. Mol. Sci. 2022, 23, 11326.

21. Bergstrom, E.N.; Abbasi, A.; Díaz-Gay, M.; Galland, L.; Ladoire, S.; Lippman, S.M.; Alexandrov, L.B. Deep Learning Artificial Intelligence Predicts Homologous Recombination Deficiency and Platinum Response From Histologic Slides. J. Clin. Oncol. 2024, 42, 3550–3560.

22. Wang, L.; Chen, X.; Song, L.; Zou, H. Machine Learning Developed a Programmed Cell Death Signature for Predicting Prognosis, Ecosystem, and Drug Sensitivity in Ovarian Cancer. Anal. Cell Pathol. 2023, 2023, 7365503.

23. Huan, Q.; Cheng, S.; Ma, H.F.; Zhao, M.; Chen, Y.; Yuan, X. Machine learning-derived identification of prognostic signature for improving prognosis and drug response in patients with ovarian cancer. J. Cell. Mol. Med. 2024, 28, e18021.

24. Wei, W.; Liu, Z.; Rong, Y.; Zhou, B.; Bai, Y.; Wei, W.; Wang, S.; Wang, M.; Guo, Y.; Tian, J. A Computed Tomography-Based Radiomic Prognostic Marker of Advanced High-Grade Serous Ovarian Cancer Recurrence: A Multicenter Study. Front. Oncol. 2019, 9, 255.

25. Binas, D.A.; Tzanakakis, P.; Economopoulos, T.L.; Konidari, M.; Bourgioti, C.; Moulopoulos, L.A.; Matsopoulos, G.K. A Novel Approach for Estimating Ovarian Cancer Tissue Heterogeneity through the Application of Image Processing Techniques and Artificial Intelligence. Cancers 2023, 15, 1058.

26. Xu, S.; Zhu, C.; Wu, M.; Gu, S.; Wu, Y.; Cheng, S.; Wang, C.; Zhang, Y.; Zhang, W.; Shen, W.; et al. Artificial intelligence algorithm for preoperative prediction of FIGO stage in ovarian cancer based on clinical features integrated 18F-FDG PET/CT metabolic and radiomics features. J. Cancer Res. Clin. Oncol. 2025, 151, 87.

27. Zeng, S.; Wang, X.L.; Yang, H. Radiomics and radiogenomics: Extracting more information from medical images for the diagnosis and prognostic prediction of ovarian cancer. Mil. Med. Res. 2024, 11, 77.

28. Chen, R.; Zheng, Y.; Fei, C.; Ye, J.; Fei, H. Machine learning developed a CD8+ exhausted T cells signature for predicting prognosis, immune infiltration and drug sensitivity in ovarian cancer. Sci. Rep. 2024, 14, 5794.

29. Wu, Q.; Tian, R.; He, X.; Liu, J.; Ou, C.; Li, Y.; Fu, X. Machine learning-based integration develops an immune-related risk model for predicting prognosis of high-grade serous ovarian cancer and providing therapeutic strategies. Front. Immunol. 2023, 14, 1164408.

30. Zhao, B.; Pei, L. A macrophage related signature for predicting prognosis and drug sensitivity in ovarian cancer based on integrative machine learning. BMC Med. Genom. 2023, 16, 230.

31. Yang, Z.; Zhou, D.; Huang, J. Identifying Explainable Machine Learning Models and a Novel SFRP2+ Fibroblast Signature as Predictors for Precision Medicine in Ovarian Cancer. Int. J. Mol. Sci. 2023, 24, 16942.

32. Geng, T.; Zheng, M.; Wang, Y.; Reseland, J.E.; Samara, A. An artificial intelligence prediction model based on extracellular matrix proteins for the prognostic prediction and immunotherapeutic evaluation of ovarian serous adenocarcinoma. Front. Mol. Biosci. 2023, 10, 1200354.

33. Wang, Y.; Lin, W.; Zhuang, X.; Wang, X.; He, Y.; Li, L.; Lyu, G. Advances in artificial intelligence for the diagnosis and treatment of ovarian cancer (Review). Oncol. Rep. 2024, 51, 46.

34. Xu, H.L.; Gong, T.T.; Liu, F.H.; Chen, H.Y.; Xiao, Q.; Hou, Y.; Huang, Y.; Sun, H.Z.; Shi, Y.; Gao, S.; et al. Artificial Intelligence Performance in Image-Based Ovarian Cancer Identification: A Systematic Review and Meta-Analysis. EClinicalMedicine 2022, 53, 101662.

35. Huang, M.L.; Ren, J.; Jin, Z.Y.; Liu, X.Y.; He, Y.L.; Li, Y.; Xue, H.D. A Systematic Review and Meta-Analysis of CT and MRI Radiomics in Ovarian Cancer: Methodological Issues and Clinical Utility. Insights Imaging 2023, 14, 117.

36. O’Sullivan, N.J.; Temperley, H.C.; Horan, M.T.; Kamran, W.; Corr, A.; O’Gorman, C.; Saadeh, F.; Meaney, J.M.; Kelly, M.E. Role of Radiomics as a Predictor of Disease Recurrence in Ovarian Cancer: A Systematic Review. Abdom. Radiol. 2024, 49, 3540–3547.

37. Hatamikia, S.; Nougaret, S.; Panico, C.; Avesani, G.; Nero, C.; Boldrini, L.; Sala, E.; Woitek, R. Ovarian Cancer beyond Imaging: Integration of AI and Multiomics Biomarkers. Eur. Radiol. Exp. 2023, 7, 50.

38. Asadi, F.; Rahimi, M.; Ramezanghorbani, N.; Almasi, S. Comparing the Effectiveness of Artificial Intelligence Models in Predicting Ovarian Cancer Survival: A Systematic Review. Cancer Rep. 2025, 8, e70138.

39. Pecchi, A.; Bozzola, C.; Beretta, C.; Besutti, G.; Toss, A.; Cortesi, L.; Balboni, E.; Nocetti, L.; Ligabue, G.; Torricelli, P. DCE-MRI Radiomic Analysis in Triple Negative Ductal Invasive Breast Cancer. Comparison between BRCA and Not BRCA Mutated Patients: Preliminary Results. Magn. Reson. Imaging 2024, 113, 110214.

40. Azadinejad, H.; Farhadi Rad, M.; Shariftabrizi, A.; Rahmim, A.; Abdollahi, H. Optimizing Cancer Treatment: Exploring the Role of AI in Radioimmunotherapy. Diagnostics 2025, 15, 397.

41. Mirza, Z.; Ansari, M.S.; Iqbal, M.S.; Ahmad, N.; Alganmi, N.; Banjar, H.; Al-Qahtani, M.H.; Karim, S. Identification of Novel Diagnostic and Prognostic Gene Signature Biomarkers for Breast Cancer Using Artificial Intelligence and Machine Learning Assisted Transcriptomics Analysis. Cancers 2023, 15, 3237.

42. Gandhi, Z.; Gurram, P.; Amgai, B.; Lekkala, S.P.; Lokhandwala, A.; Manne, S.; Mohammed, A.; Koshiya, H.; Dewaswala, N.; Desai, R.; et al. Artificial Intelligence and Lung Cancer: Impact on Improving Patient Outcomes. Cancers 2023, 15, 5236.

43. Pandya, P.H.; Jannu, A.J.; Bijangi-Vishehsaraei, K.; Dobrota, E.; Bailey, B.J.; Barghi, F.; Shannon, H.E.; Riyahi, N.; Damayanti, N.P.; Young, C.; et al. Integrative Multi-OMICs Identifies Therapeutic Response Biomarkers and Confirms Fidelity of Clinically Annotated, Serially Passaged Patient-Derived Xenografts Established from Primary and Metastatic Pediatric and AYA Solid Tumors. Cancers 2022, 15, 259.

44. Zhuang, S.; Chen, T.; Li, Y.; Wang, Y.; Ai, L.; Geng, Y.; Zou, M.; Liu, K.; Xu, H.; Wang, L.; et al. A transcriptional signature detects homologous recombination deficiency in pancreatic cancer at the individual level. Mol. Ther. Nucleic Acids 2021, 26, 1014–1026.

45. Gorski, J.W.; Ueland, F.R.; Kolesar, J.M. CCNE1 Amplification as a Predictive Biomarker of Chemotherapy Resistance in Epithelial Ovarian Cancer. Diagnostics 2020, 10, 279.

46. Jardim, D.L.; Goodman, A.; de Melo Gagliato, D.; Kurzrock, R. The Challenges of Tumor Mutational Burden as an Immunotherapy Biomarker. Cancer Cell 2021, 39, 154–173.

47. Cui, C.; Xu, C.; Yang, W.; Chi, Z.; Sheng, X.; Si, L.; Xie, Y.; Yu, J.; Wang, S.; Yu, R.; et al. Ratio of the interferon-γ signature to the immunosuppression signature predicts anti-PD-1 therapy response in melanoma. NPJ Genom. Med. 2021, 6, 7.

48. Lu, G.; Fei, B. Medical Hyperspectral Imaging: A Review. J. Biomed. Opt. 2014, 19, 010901.

49. Mahajan, A.; Sahu, A.; Ashtekar, R.; Kulkarni, T.; Shukla, S.; Agarwal, U.; Bhattacharya, K. Glioma radiogenomics and artificial intelligence: Road to precision cancer medicine. Clin. Radiol. 2023, 78, 137–149.

50. Kohan, A.; Hinzpeter, R.; Kulanthaivelu, R.; Mirshahvalad, S.A.; Avery, L.; Tsao, M.; Li, Q.; Ortega, C.; Metser, U.; Hope, A.; et al. Contrast Enhanced CT Radiogenomics in a Retrospective NSCLC Cohort: Models, Attempted Validation of a Published Model and the Relevance of the Clinical Context. Acad. Radiol. 2024, 31, 2953–2961.

51. Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M.; et al. The Cancer Imaging Archive (TCIA): Maintaining and operating a public information repository. J. Digit. Imaging 2013, 26, 1045–1057.

52. Abgrall, G.; Holder, A.L.; Chelly Dagdia, Z.; Zeitouni, K.; Monnet, X. Should AI models be explainable to clinicians? Crit. Care 2024, 28, 301.

53. Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?” Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’16), San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

54. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

55. Frenel, J.S.; Bossard, C.; Rynkiewicz, J.; Thomas, F.; Salhi, Y.; Salhi, S.; Chetritt, J. Artificial intelligence to predict homologous recombination deficiency in ovarian cancer from whole-slide histopathological images. J. Clin. Oncol. 2024, 42, 5578.

56. Shah, P.D.; Wethington, S.L.; Pagan, C.; Latif, N.; Tanyi, J.; Martin, L.P.; Morgan, M.; Burger, R.A.; Haggerty, A.; Zarrin, H.; et al. Combination ATR and PARP Inhibitor (CAPRI): A phase 2 study of ceralasertib plus olaparib in patients with recurrent, platinum-resistant epithelial ovarian cancer. Gynecol. Oncol. 2021, 163, 246–253.

57. Ginghina, O.; Hudita, A.; Zamfir, M.; Spanu, A.; Mardare, M.; Bondoc, I.; Buburuzan, L.; Georgescu, S.E.; Costache, M.; Negrei, C.; et al. Liquid Biopsy and Artificial Intelligence as Tools to Detect Signatures of Colorectal Malignancies: A Modern Approach in Patient’s Stratification. Front. Oncol. 2022, 12, 856575.

| Element | Description |
|---|---|
| Population | Patients diagnosed with ovarian cancer and undergoing treatment with chemotherapy (CHT), poly(ADP-ribose) polymerase inhibitors (PARPis), or immune checkpoint inhibitors (ICIs) |
| Intervention | AI-based models applied to therapy-response prediction, including genomics-based, radiomics-based, and immunotherapy-focused models |
| Comparator | Standard clinical or molecular predictors, including traditional biomarker-based testing (homologous recombination deficiency (HRD) status, BRCA mutations), clinician-based radiologic assessment, and conventional histopathologic scoring methods |
| Outcomes | Predictive performance of AI models, assessed using the area under the receiver operating characteristic curve (AUC), sensitivity, specificity, and hazard ratios (HRs) for progression-free survival (PFS) and overall survival (OS); secondary outcomes included model generalizability, external validation, and clinical applicability |
| Study Design | Retrospective and prospective cohort studies, observational studies, and randomized controlled trials (RCTs) that employed AI for therapy-response prediction |
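
The Outcomes row above names AUC, sensitivity, and specificity as the primary performance measures. The sketch below shows how these are obtained from a model's output. It assumes binary labels (1 = responder, 0 = non-responder) and a continuous model score. Under those assumptions, the empirical AUC equals the Mann–Whitney probability that a randomly chosen positive case scores higher than a randomly chosen negative one. Sensitivity and specificity then follow once a decision threshold is chosen. The function names, the threshold convention (score ≥ threshold is called positive), and the toy data are hypothetical.

```python
def auc_mann_whitney(scores, labels):
    """Empirical ROC AUC via the Mann-Whitney statistic.

    Equals the probability that a randomly chosen positive case (label 1)
    scores higher than a randomly chosen negative case (label 0); ties
    count as one half.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def sensitivity_specificity(scores, labels, threshold):
    """Sensitivity and specificity when score >= threshold is called positive."""
    if 1 not in labels or 0 not in labels:
        raise ValueError("need at least one positive and one negative case")
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


if __name__ == "__main__":
    # Hypothetical model scores and responder labels (1 = responder)
    scores = [0.91, 0.85, 0.72, 0.64, 0.55, 0.41, 0.33, 0.20]
    labels = [1, 1, 0, 1, 0, 1, 0, 0]
    print("AUC:", auc_mann_whitney(scores, labels))
    print("Sensitivity, specificity at 0.5:",
          sensitivity_specificity(scores, labels, 0.5))
```

The pairwise loop is quadratic in cohort size, which keeps the definition transparent. A rank-based formula, or a library routine such as scikit-learn's `roc_auc_score`, gives the same value more efficiently.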

| Study | AI Model (Type) | Dataset Used | AUC | Outcome Assessed |
|---|---|---|---|---|
| **AI in Genomic and Molecular Profiling** | | | | |
| Nero et al. [20] | Weakly Supervised AI (Deep Learning) | TCGA | 0.700 | BRCA status prediction |
| Bergstrom et al. [21] | DeepHRD (Deep Learning) | TCGA + external cohorts | 0.810 | HRD prediction |
| Wang et al. [22] | ML Prognostic Signature (Traditional ML) | Multi-center cohorts | 0.739–0.820 (OS, 2–5 yrs) | Survival prediction, drug response |
| Huan et al. [23] | MLDPS Prognostic AI (Traditional ML) | Multi-cohort OV datasets | 0.859–0.795 (OS, 1–5 yrs) | Prognosis, drug response prediction |
| **AI in Imaging and Radiomics for Therapy Prediction** | | | | |
| Wei et al. [24] | CT-Based Radiomics (Traditional ML) | Multi-center CT datasets | 0.880 | Recurrence prediction |
| Binas et al. [25] | MRI-Based AI (Traditional ML) | Multi-center MRI datasets | 0.860 | Tumor heterogeneity assessment |
| Xu et al. [26] | PET/CT-Based AI (Traditional ML) | Clinical PET/CT scans | 0.819 | FIGO stage prediction |
| Zeng et al. [27] | Radiomics & Radiogenomics (Deep Learning) | Multi-center imaging & genomics | 0.975 | Diagnosis, prognosis, therapy response |
| **AI for Immunotherapy and Novel Targeted Treatments** | | | | |
| Chen et al. [28] | CD8+ Tex Prognostic Signature (Traditional ML) | TCGA, GSE datasets | 0.728–0.783 | ICI response prediction |
| Wu et al. [29] | Immune Risk Model (Traditional ML) | TCGA, GEO datasets | 0.790 | ICI response, TME profiling |
| Zhao et al. [30] | Macrophage-Related Signature, MRS (Traditional ML) | TCGA, GEO datasets | 0.692–0.774 | Prognosis, drug sensitivity |
| Yang et al. [31] | SFRP2+ Fibroblast Signature (Deep Learning) | TCGA, GEO datasets | 0.853 | ICI response, TP53 mutation |
| Geng et al. [32] | ECM-Based AI (Deep Learning) | TCGA-Pancancer | 0.810 | Immunotherapy response prediction |

| AI Model | Risk of Bias | Inconsistency | Indirectness | Imprecision | Publication Bias | Certainty of Evidence |
|---|---|---|---|---|---|---|
| Genomics-Based AI | ● | ● | ● | ● | ● | ● |
| Radiomics-Based AI | ● | ● | ● | ● | ● | ● |
| Immunotherapy AI | ● | ● | ● | ● | ● | ● |
| Overall AI Models | ● | ● | ● | ● | ● | ● |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Maiorano, M.F.P.; Cormio, G.; Loizzi, V.; Maiorano, B.A. Artificial Intelligence in Ovarian Cancer: A Systematic Review and Meta-Analysis of Predictive AI Models in Genomics, Radiomics, and Immunotherapy. AI 2025, 6, 84. https://doi.org/10.3390/ai6040084
