Abstract

A central focus of this study was the methodology used to evaluate both humans and AI platforms, particularly in terms of their competitiveness and the implications of six key challenges to society resulting from the development and increasing use of artificial intelligence (AI) technologies. The list of challenges was compiled by consulting various online sources and cross-referencing with academics from 15 countries across Europe and the USA. Professors, scientific researchers, and PhD students were invited to independently and remotely evaluate the challenges. Rather than contributing another discussion based solely on social arguments, this paper seeks to provide a logical evaluation framework, moving beyond qualitative discourse by incorporating numerical values. The pairwise comparison of AI challenges was conducted by two groups of participants using the multicriteria decision-making model known as the analytic hierarchy process (AHP). Thirty-eight humans performed pairwise comparisons of the six challenges listed in a distributed questionnaire. The same procedure was carried out by four AI platforms, namely ChatGPT, Gemini (BardAI), Perplexity, and DedaAI, which responded to the same requests as the human participants. The results from both groups were aggregated and compared, revealing differences in how the two groups prioritize the societal impact of AI challenges. Both groups agreed on the highest importance of data privacy and security, as well as the lowest importance of social and cultural resistance, specifically the clash of AI with existing cultural norms and societal values.

1. Introduction and Literature Review

The history of artificial intelligence (AI) spans decades, evolving from theoretical concepts to practical applications shaping the modern world. AI is a branch of computer science focused on creating systems that perform tasks requiring human intelligence, such as learning, reasoning, problem-solving, understanding language, perceiving the environment, and decision-making. AI is categorized into narrow AI (specific tasks) and general AI (theoretical human-like intelligence across domains).

In short, AI simulates human intelligence, enabling machines to perform tasks like learning, reasoning, problem-solving, and decision-making. Its rapid advancements make it integral to modern life, with applications in healthcare, transportation, education, finance, retail, manufacturing, customer service, cybersecurity, and IoT integration [1].

The modern history of AI is usually traced to 1950, when Alan Turing proposed the Turing Test to assess machine intelligence, building on his earlier concept of the Turing machine. The term ‘Artificial Intelligence’ was coined in 1956, establishing AI as a field of computer science. The following two decades saw AI growth through programs solving mathematical problems and early machine learning algorithms, setting the stage for modern neural networks.

The 1970s–1980s are often seen as an ‘Overpromises’ and ‘AI Winter’ era due to setbacks from computer power limitations and declining funding and interest. However, the 1980s–1990s saw the rise of rule-based expert systems and advancements in computer power, statistics, and machine learning, setting the foundation for more adaptive AI and accelerating progress.

The modern era of AI (2000s–present) is marked by the rise of big data, or data-driven AI. Advances in computing power and algorithms like back-propagation and convolutional neural networks have enabled AI to revolutionize technologies such as voice assistants, recommendation systems, self-driving cars, and medical diagnostics. A key issue remains AI’s ethical and societal impact, with discussions centered on ethics, bias, jobs, privacy, and society.

AI has evolved from theory to a transformative force, driving technological advancement and shaping how we interact with the world. The modern era is characterized by rapid growth in AI capabilities and applications, fueled by computing power, data availability, and algorithmic innovation. The explosion of data from social media, e-commerce, IoT, and industrial equipment has provided the “fuel” for AI model training, with companies like Google, Facebook, and Amazon enabling breakthroughs in AI for computers and mobile devices.

Milestones like advances in computer power, cloud platforms, and deep learning have revolutionized AI. Deep neural networks, especially convolutional and recurrent neural networks, have transformed fields like computer vision, natural language processing, and speech recognition. Applications in natural language processing now include chatbots, machine translation, sentiment analysis, and OpenAI’s GPT-3 for text generation. Generative adversarial networks (GANs) have enabled hyper-realistic content creation in gaming, deepfakes, and style transfer.

Modern AI applications are widespread in healthcare, autonomous vehicles, finance, retail, e-commerce, robotics, education, and content creation. In content creation, AI tools like Jasper, Grammarly, and Canva help with video editing, article writing, and other creative tasks. However, contemporary AI developments also present ethical and societal challenges. Bias and fairness issues arise as AI models can inherit biases from training data, leading to unfair outcomes in areas like hiring, policing, and lending. Additionally, the vast amounts of personal data used to train AI models raise concerns about surveillance and misuse, while automation may displace jobs in sectors like manufacturing, customer service, and transportation. Misinformation, particularly through deepfakes, is another major social concern, challenging trust and authenticity.

Efforts are underway to design AI systems that are transparent, accountable, and aligned with human values. A key challenge is fostering human–AI collaboration, shifting the focus from replacing humans to augmenting their capabilities.

In summary, the modern era of AI is defined by remarkable progress, offering transformative opportunities and challenges that continue to influence society, industries, and human interaction with technology. The literature reveals a wealth of information on AI advancements and extensive discussions on its impact, particularly in technology, society, and ethics. Numerous online platforms, discussions, and conferences are dedicated to exploring AI’s influence on our civilization. The following research reports highlight ongoing developments and achievements in AI, introducing the research presented in this paper.

Kingsley Ofosu-Ampong [2] presented an analysis of 85 peer-reviewed articles from 2020 to 2023 concerning artificial intelligence (AI) in information systems and innovation. A review of the current issues and stock of knowledge in the AI literature, research methodology, frameworks, level of analysis, and conceptual approaches aimed to identify research gaps that can guide future investigations. The findings show that the extant literature is skewed toward the prevalence of technological issues and highlights the relatively lower focus on other themes, such as the contextual knowledge co-creation issues, conceptualization, and application domains. While there have been increasing technological issues with AI, the three identified areas of security concern are data security, model security, and network security. One of the author’s conclusions is that computational capabilities lead to increasingly intricate decision-making challenges and point to the significance of organizational learning in dealing with AI’s potential such as autonomy and learnability.

In their editorial, Ajomi and Karimi [3] analyze the global challenges and impacts of AI, particularly in medicine. They begin by discussing IBM Watson, an AI tool that can deliver diagnoses and analyze data more quickly than traditional methods. The authors argue that machine learning algorithms in AI can process vast amounts of data faster, enabling more accurate and targeted treatments. This could, in turn, lower medical costs throughout the healthcare system, such as by streamlining hospital revenue systems, organizing medical records, speeding up test results, and improving doctor and healthcare provider workflows, thus granting them more autonomy. AI’s role in enhancing patient–doctor interactions is also highlighted, with AI supporting healthcare professionals in spending more time with patients. Additionally, AI’s impact on surgeries is discussed, where it helps guide procedures using multidimensional imagery. The editorial mentions that some countries are implementing regulations and legislation to address AI’s potential risks, including banning ChatGPT in certain areas. Furthermore, social and cultural concerns about AI, particularly regarding privacy, are raised in the context of OECD countries and beyond. The authors suggest that governments, the private sector, and other stakeholders should collaborate to establish guidelines that ensure the safe use of AI. Countries like Italy and the U.S. are proposing policies to regulate AI and prevent misinformation and bias. In conclusion, the authors emphasize the disruptive potential of AI and warn that it could hinder global cooperation and sustainability. They argue that the need for coordinated global action on AI regulation is urgent, stating that our future has arrived, and the time for collaboration is now.

Chapter 5 of the 2021 AI Index report [4] addresses the efforts to tackle the ethical issues that have emerged alongside the growing use of AI applications. It explores the recent surge in documents outlining AI principles and frameworks, as well as the media’s coverage of AI-related ethical concerns. The chapter also reviews ethics-related research presented at AI conferences and the types of ethics courses being offered by computer science departments worldwide. The AI Index team was surprised by the lack of data on this topic. While several groups are producing qualitative or normative outputs in the field of AI ethics, there is a general absence of benchmarks that could measure or assess the relationship between societal discussions about technology and the development of the technology itself. One example of AI-related technical performance data is facial recognition, particularly regarding bias. The report notes that finding ways to generate more quantitative data in this area remains a challenge for the research community, though it is an important area to focus on. Policymakers are acutely aware of ethical concerns surrounding AI, but they are more comfortable managing issues they can measure. Therefore, translating qualitative arguments into quantitative data is seen as a crucial step forward. The chapter highlights two key points: (1) the number of papers with ethics-related keywords in their titles submitted to AI conferences has increased since 2015, although the average number of such papers at major AI conferences remains relatively low, and (2) the five most discussed ethical AI issues in 2020 were: the release of the European Commission’s white paper on AI, the dismissal of ethics researcher Timnit Gebru by Google, the formation of an AI ethics committee by the United Nations, the Vatican’s AI ethics plan, and IBM’s exit from the facial-recognition business.

In his position paper, Ghallab [5] discusses the requirements and challenges for responsible AI concerning two interdependent objectives: (1) promoting research and development efforts aimed at socially beneficial applications, and (2) addressing and mitigating the human and social risks posed by AI systems. These objectives encompass technical, legal, and social challenges. Regarding the second objective, Ghallab points out that AI scientists are generally part of a highly enthusiastic and positive community that supports social and humanistic values. Most AI publications highlight the potential positive impacts of their work, but few delve into the risks inherent in AI development. Every AI advancement carries specific risks that need to be studied and addressed. He identifies three general categories of risks common to many AI applications: (1) the safety of critical AI systems, (2) the security and privacy of individual users, and (3) social risks. These issues are interconnected, and many of them are not unique to AI alone. Concerning social risks, Ghallab notes that the social acceptability of AI is a much broader concern than individual acceptance. Social acceptability requires considering long-term effects, including potential impacts on future generations; addressing issues like social cohesion, employment, resource sharing, inclusion, and social recognition; integrating human rights imperatives as well as the historical, social, cultural, and ethical values of communities; and accounting for global constraints related to the environment or international relations. The paper also briefly covers other risks, such as biases, behavioral manipulation, and impacts on democracy, the economy, employment, and military applications. In conclusion, Ghallab emphasizes that the growing effectiveness of AI must be matched by a corresponding sense of social responsibility. 
While the technical and organizational challenges are significant, the AI research community must confront and address them.

In discussing the role of artificial intelligence in enhancing human capabilities as a key component of next-generation human-machine collaboration technologies, the authors Raftopoulos and Hamari [6] highlight the limited understanding of how organizations can harness this potential to create sustainable business value. Through a literature review of interdisciplinary research on the challenges and opportunities in adopting human–AI collaboration for value creation, the authors identify five key research areas that are central to integrating and aligning the socio-technical challenges of augmented collaboration. These areas include: strategic positioning, human engagement, organizational evolution, technology development, and intelligence building. The findings are synthesized into an integrated model that emphasizes the importance of organizations developing the necessary internal micro-foundations to systematically manage augmented systems.

In Adere’s [7] analysis of the impact of artificial intelligence on society, the author asserts that AI has quickly become an integral part of daily life, transforming various industries and creating new opportunities. However, as AI continues to expand, it also raises significant concerns regarding its societal effects and the potential consequences of its widespread adoption. This research paper provides a thorough overview of AI’s impact on various aspects of society, including the economy, education, healthcare, employment, and ethics. The paper reviews existing research and data to examine the economic, social, and ethical implications of AI, along with the challenges that come with its implementation. The literature review reveals that while AI holds significant potential to benefit society, it also presents challenges and risks that must be addressed. The paper examines the effects of AI across various sectors, including healthcare, education, and employment. In healthcare, AI has the potential to enhance patient outcomes and provide more efficient and effective services. In education, it can transform learning by offering personalized and adaptive experiences. However, AI’s role in the workplace raises concerns about job displacement and the risk of increasing economic inequality. Additionally, the paper explores the ethical considerations of AI, emphasizing the need for responsible development and deployment. This includes creating ethical frameworks and guidelines to tackle issues such as bias, privacy, and transparency. Ultimately, this paper provides a thorough overview of AI’s impact on society, identifies key trends and challenges, and proposes solutions to address these issues. It aims to guide policymakers, technology experts, and the general public toward the responsible and equitable deployment of AI.

In their paper [8], Wong and Tan present a detailed analysis of how AI-assisted programming and large language models (LLMs) can enhance software development by leveraging human feedback. They explore the use of AI tools to incorporate this feedback into reinforcement learning processes, supported by crowd-sourced computation, to improve text-to-code generation. The authors introduce a Bayesian optimization framework that facilitates AI alignment in code generation by distributing the workload of feedback collection, emphasizing the importance of high-quality human input. They conclude that this framework can be adapted for a wide range of domain-specific languages, thus aligning LLM capabilities more effectively with human intentions in AI-assisted programming.

The discussion of the optimization aspects of their method is based on the view that AI language models can be represented as log-linear models, where features encode historical context up to a certain length. The parameters of these models are optimized using maximum likelihood estimation. According to the authors, this approach scales linearly with respect to both the corpus size and the number of features. They also reference other researchers (e.g., [9,10,11]) who suggest that this optimization method provides a robust alternative to sequential updates, which can sometimes cause model drift and lead to suboptimal confidence estimates.

2. Materials and Methods

2.1. Methodological Framework

At the start of this research, the prompt “Define in a few words what is ChatGPT” was entered at the website http://chatGPT.com [12]. The response, received almost instantly, was: “ChatGPT is an AI language model developed by OpenAI. It’s designed to generate human-like text based on the input it receives. The model can engage in conversations, answer questions, provide explanations, write creative content, assist with problem-solving, and more. It processes vast amounts of text data and learns patterns in language to generate coherent and contextually relevant responses. ChatGPT is used in various applications, including customer support, content creation, language translation, and as a tool for learning and personal assistance”.

Next, ChatGPT was asked to list 10 major challenges to human society impacting the development and implementation of AI technologies. The generated answers, with brief explanations, are presented in the left column of Table 1 (Run 1). An additional note mentioned that these challenges require interdisciplinary approaches and international collaboration to ensure AI technologies are developed and implemented responsibly and equitably.

Table 1.

Chat with the ChatGPT AI platform about the 10 most important AI challenges to human society that impact the development and implementation of AI technologies.

Following this, ChatGPT was asked to list these 10 challenges in order of importance, from highest to lowest. The answers, listed in the right column of Table 1 (Run 2), reflect a balance between immediate risks and long-term implications for AI technology and society.

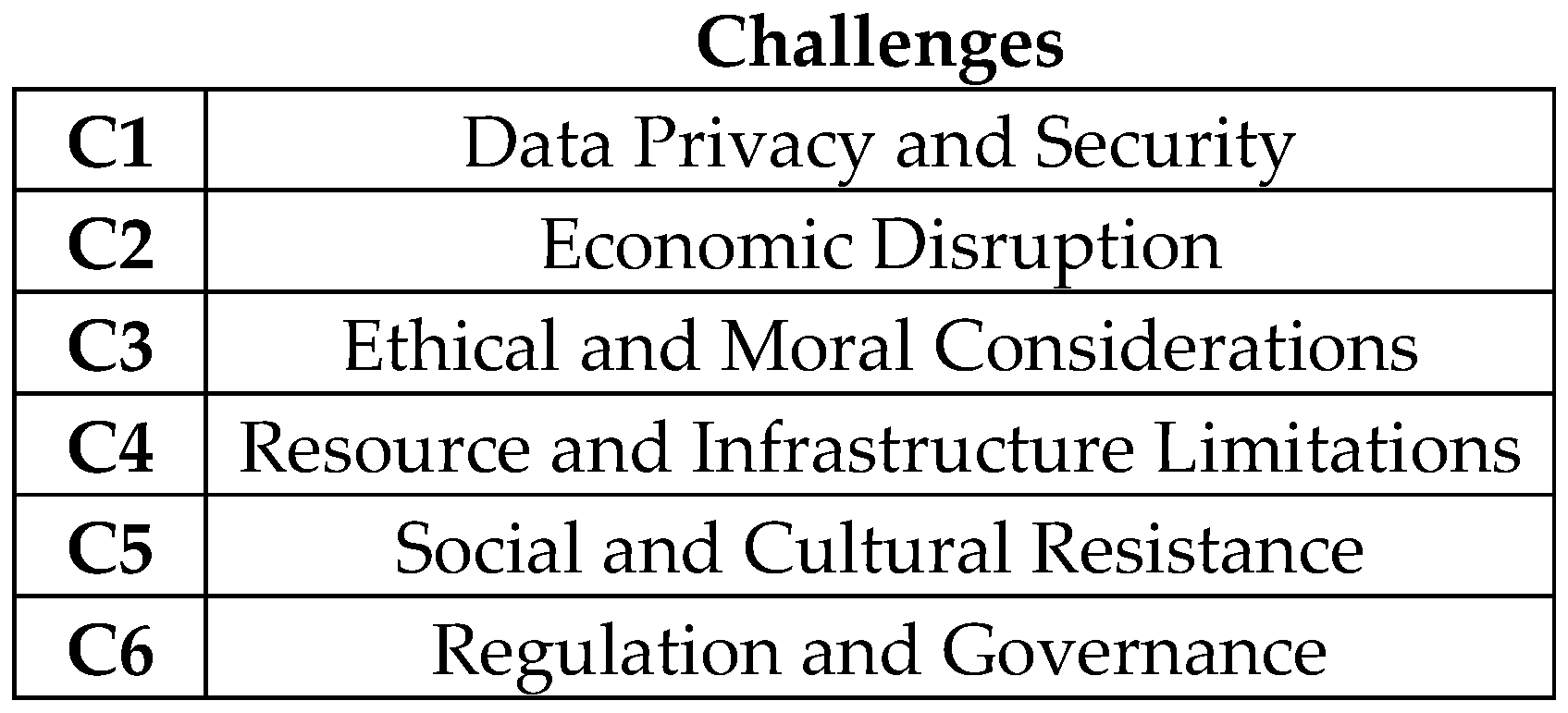

Between August and November 2024, an in-depth study was conducted to examine the societal implications of various challenges arising from the development and increasing use of artificial intelligence (AI) technologies. A list of six key challenges was compiled by reviewing answers generated by ChatGPT, cross-referencing online sources, conference proceedings, journal articles, and consulting academics from several European countries and the USA. The selected challenges, presented in Figure 1, are listed in no particular order, without any preference or ranking. Brief descriptions of each challenge are as follows:

Figure 1.

Major challenges to human society that impact the development and implementation of AI technologies.

- Data Privacy and Security: AI systems rely heavily on large datasets, often containing personal information. Protecting this data from breaches and misuse is critical, and there is ongoing debate over how to balance innovation with privacy rights.

- Economic Disruption: AI has the potential to disrupt labor markets by automating jobs, leading to unemployment and economic inequality. The challenge lies in managing this disruption and ensuring a fair distribution of the benefits AI can bring.

- Ethical and Moral Considerations: AI technologies raise complex ethical issues, such as bias, privacy concerns, and the potential for AI to be used in ways that may harm individuals or society. Determining how to design and deploy AI responsibly is a major challenge.

- Resource and Infrastructure Limitations: The development and deployment of AI require significant computational resources and infrastructure. In some regions, limited access to these resources can hinder AI progress and exacerbate global inequalities.

- Social and Cultural Resistance: AI can sometimes clash with existing cultural norms and societal values. Resistance to AI adoption can arise from fear of the unknown, distrust in technology, or concerns over loss of human agency.

- Regulation and Governance: Governments and regulatory bodies are struggling to keep up with the rapid pace of AI development. Establishing effective regulations that promote innovation while preventing misuse or unintended consequences is a major challenge.

A total of 43 professors, scientific researchers, and PhD candidates, referred to here as a group of “Humans”, participated in a distance-based and independent evaluation of challenges, labeled as C1 through C6 in Figure 1.

The participants were instructed on how to compare the challenges in a pairwise manner using the 9-point scale outlined in Table 2, a common tool in the decision-making method known as the analytic hierarchy process (AHP), developed by Saaty [13].

Table 2.

Saaty’s 9-point scale.

A similar procedure was conducted with a group of four AI platforms, representing a non-human group of individuals. The comparisons received from this contrasting group (‘non-human individuals’) underwent the same computational procedure, and the results were then compared with those obtained for humans.

The computational procedure involved the individual application of the eigenvector method (Saaty, 1980) [13] to each matrix to derive the priority vector, which represents the weights of challenges C1–C6 for each human and non-human entity. These individual preference vectors were then aggregated into two group vectors: one represents the human-related group and the other represents the non-human-related group. The final step of the analysis was to compare these two vectors, evaluate the preferences, and draw conclusions. One key conclusion focused on potential differences in how humans perceive the importance of challenges, as suggested by AI-related sources, compared to how AI platforms view these challenges, often based on their own identification and/or information from closely related online sources.

2.2. Pairwise Comparisons

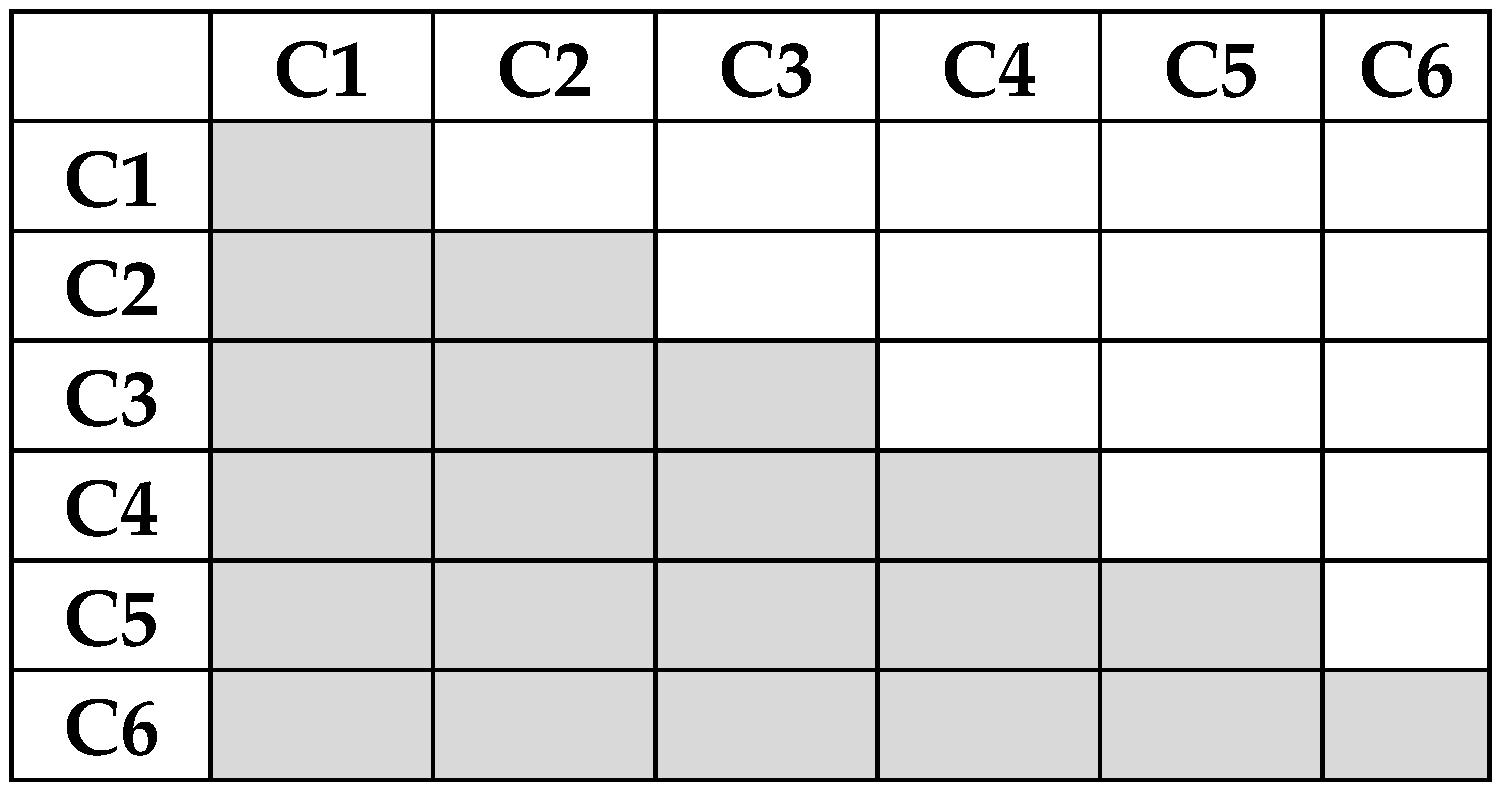

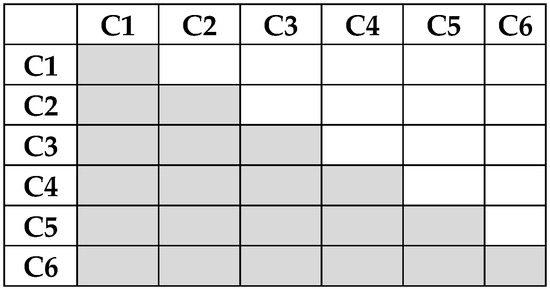

Each individual, human and non-human, compared the challenges against one another and filled in the empty cells in the upper triangle of the square matrix A(aij), given in Figure 2.

Figure 2.

Comparison matrix for AI challenges.

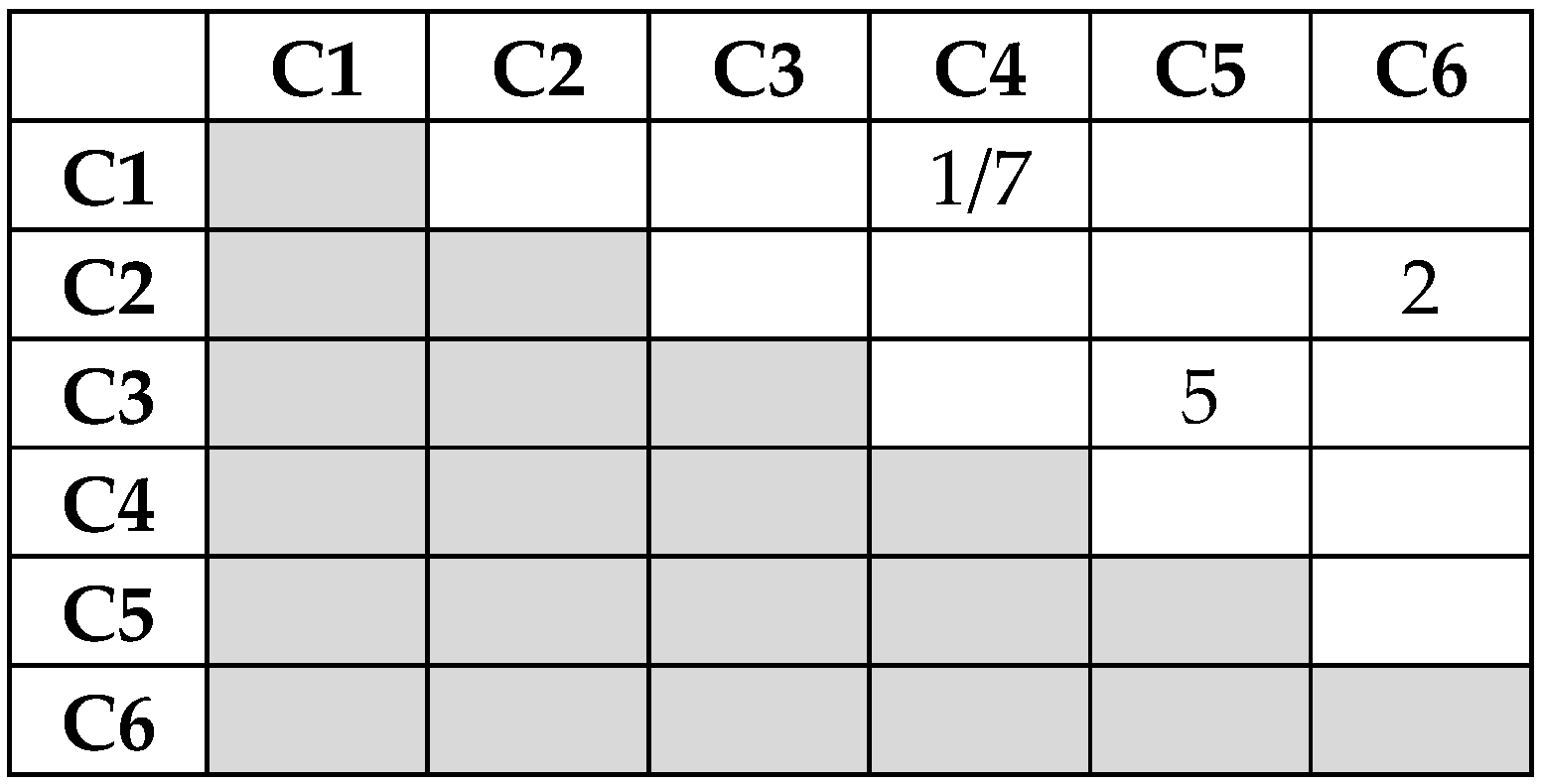

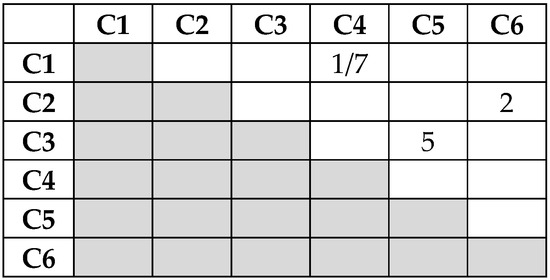

The following explanation was provided to humans on how to fill the matrix (see Figure 3): If an individual considers that challenge C3 is strongly more important than challenge C5, the number ‘5’ should be inserted into the cell (C3,C5). If C4 is very strongly more important than C1, then ‘1/7’ should be inserted in the cell (C1,C4). If an individual considers that challenge C2 is slightly more important than challenge C6, then ‘2’ should be inserted into the cell (C2,C6), etc.

Figure 3.

Explanation to individuals of how to fill a 6 × 6 comparison matrix using Saaty’s 9-point scale given in Table 2.

According to individual preferences, only cells in the upper triangle had to be filled with numbers from the 9-point scale (or their reciprocals). This means that each individual had to make 6 × 5/2 = 15 judgments on the mutual importance of challenges. All values on the main diagonal are ‘1’, and each cell in the lower triangle is filled automatically with the reciprocal of the value in the symmetric cell of the upper triangle.
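The filling rule can be sketched in a few lines of Python. This is only an illustration, not the procedure used in the study: the function name `build_comparison_matrix` and the sample respondent are hypothetical, and only the three judgments quoted in the explanation above (5, 1/7, and 2) come from the text, with all other pairs set to ‘1’ for the sake of the example.

```python
from fractions import Fraction


def build_comparison_matrix(upper, n=6):
    """Return the full n x n reciprocal matrix from upper-triangle judgments.

    `upper` maps 1-based index pairs (i, j), i < j, to a value from the
    9-point scale (an integer 1..9 or a reciprocal such as Fraction(1, 7)).
    """
    expected = n * (n - 1) // 2  # 15 judgments for six challenges
    if len(upper) != expected:
        raise ValueError(f"expected {expected} judgments, got {len(upper)}")
    # Start with all ones; the main diagonal keeps the value 1.
    matrix = [[Fraction(1) for _ in range(n)] for _ in range(n)]
    for (i, j), value in upper.items():
        if not 1 <= i < j <= n:
            raise ValueError(f"({i}, {j}) is not an upper-triangle cell")
        judgment = Fraction(value)
        matrix[i - 1][j - 1] = judgment      # cell filled in by the respondent
        matrix[j - 1][i - 1] = 1 / judgment  # reciprocal inserted automatically
    return matrix


# Hypothetical respondent: all pairs judged "equal" except the three
# judgments used in the explanation given to participants.
upper = {(i, j): 1 for i in range(1, 7) for j in range(i + 1, 7)}
upper[(3, 5)] = 5                # C3 strongly more important than C5
upper[(1, 4)] = Fraction(1, 7)   # C4 very strongly more important than C1
upper[(2, 6)] = 2                # C2 slightly more important than C6

A = build_comparison_matrix(upper)
print(A[4][2], A[3][0], A[5][1])  # prints: 1/5 7 1/2
```

Exact fractions are used here so that the reciprocal property (each cell times its mirror cell equals 1) holds without rounding error.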

2.3. Participants in the Study

2.3.1. Human Individuals

A total of 52 questionnaires were sent to randomly selected individuals from an extended email list curated by the author. Most of the recipients are academic colleagues from 15 countries across Europe and the USA. Their expertise spans various fields, including engineering, economics, human sciences, and communication and telecommunication technologies. All of them actively use internet resources and have some familiarity with AI. Many already engage with AI platforms such as ChatGPT, Perplexity, Gemini (Bard AI), and others in their daily activities. However, this aspect was not discussed before or during the experiment. Each individual received the same brief email with a Word document containing the questionnaire.

Eight individuals either did not respond or indicated that they were not qualified to provide answers. The remaining 44 completed questionnaires were collected for analysis. Due to significant inconsistencies in the responses from six individuals, the final analysis was based on the remaining 38 completed questionnaires.

2.3.2. AI ‘Individuals’

In addition to human participants, four AI platforms were also questioned in the same manner as the human respondents: ChatGPT [12], Perplexity [14], Gemini (BARD.AI) [15], and DedaAI [16]. The latter is currently in its final stage of development and is expected to be launched soon.

2.4. Prioritizing Judgments in Group AHP Manner

2.4.1. Prioritization Methods

If the analytic hierarchy process (AHP) is adopted to validate the relative importance of decision elements (here AI challenges) within a group context, various algorithms can be employed to accomplish this task. These algorithms focus on estimating priorities (weights summing to 1) from pairwise comparison matrices obtained from the members of the group. The techniques used to derive priorities from the matrix can be broadly classified into two categories: matrix-based and optimization-based approaches.

A prominent matrix-based technique is the eigenvector method (EV) proposed by Saaty in [13]. Saaty alone [13,17] and Vargas [18,19] demonstrated that the principal eigenvector of the comparison matrix serves as the desired priority vector, regardless of whether the decision maker’s (DM) judgments are consistent or inconsistent. This method is employed here to derive the priority vectors of individuals; at the same time, it provides an assessment of the consistency of individual judgments and allows the related consistency values to be monitored for the group.

Other methods for deriving priorities from comparison matrices, also used in this study as a control mechanism, rely on optimization approaches. In these methods, the priority derivation problem is formulated as minimizing a specified objective function that quantifies the deviations between an ‘ideal’ solution and the actual solution, subject to certain constraints [20]. The four optimization-based methods used in this study are:

- Logarithmic Least Squares Method (LLS): Provides an explicit solution by minimizing a logarithmic objective function subject to multiplicative constraints [21].

- Weighted Least Squares Method (WLS): Utilizes a modified Euclidean norm as the objective function to minimize deviations [22].

- Fuzzy Preference Programming Method (FPP): Introduced by Mikhailov [23], this method uses a geometrical representation of the prioritization problem, reducing it to a fuzzy programming problem solvable as a standard linear program.

- Cosine Maximization Method (CMM): Developed by Kou and Lin [24], this recent approach maximizes the sum of the cosines of the angles between the priority vector and each column vector of the comparison matrix.

Each of these methods offers unique strengths and is suited to specific scenarios depending on the characteristics of the comparison matrix and the decision-making context.
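Of the four methods listed, LLS is the one described as having an explicit solution. A well-known form of that solution is the normalized vector of row geometric means, which the short sketch below computes. The function name `lls_priorities` and the sample matrix are hypothetical, and the code is an illustration rather than the implementation used in the study.

```python
import math


def lls_priorities(A):
    """Priority vector as normalized geometric means of the rows of A."""
    n = len(A)
    # Geometric mean of each row: (a_i1 * a_i2 * ... * a_in) ** (1/n)
    row_means = [math.prod(float(x) for x in row) ** (1.0 / n) for row in A]
    total = sum(row_means)
    # Normalize so that the weights sum to 1
    return [g / total for g in row_means]


# Hypothetical, fully consistent 3 x 3 matrix built from weights 4:2:1
A = [
    [1.0, 2.0, 4.0],
    [0.5, 1.0, 2.0],
    [0.25, 0.5, 1.0],
]
w = lls_priorities(A)
print([round(x, 4) for x in w])
```

For a fully consistent matrix such as this one, the result coincides with the weights used to build the matrix; for inconsistent matrices the different prioritization methods generally give slightly different vectors, which is why they can serve as a cross-check.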

As noted by several authors, deriving a priority vector from a pairwise comparison matrix is a critical step in the analytic hierarchy process (AHP). In our study, however, we did not employ a complete AHP framework (e.g., goal-criteria-alternatives). Instead, we implemented an AHP-inspired procedure in which both the participating humans and the AI platforms prioritized the elements of a single matrix. Because no criteria existed, the conditions for order preservation across criteria did not apply to the priority vectors derived in our study; this issue arises only in complete AHP. Tu et al. [25] discuss it in detail, asserting that priority vectors obtained from existing methods often violate the order preservation condition and that this violation propagates through the entire AHP process (unlike in our study). To address this, they propose the Minimal Number of Violations and Deviations Method (MNVDM), a model designed to minimize violations of the order preservation conditions when deriving a priority vector. The model’s feasibility and efficiency are demonstrated through numerical examples and Monte Carlo simulations, making this work valuable for future applications of complete AHP.

2.4.2. Prioritization by the Eigenvector Method

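The mathematical representation of prioritization in a group context begins with the assumption that each individual constructs a square pairwise comparison matrix A by comparing n decision elements:

$$A = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{bmatrix}, \qquad a_{ii} = 1, \quad a_{ji} = 1/a_{ij}. \tag{1}$$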

The pairwise comparison principle is applied using Saaty’s scale, which provides 9 levels of preferences or 17 levels when incorporating both parts of the scale, linear (1–9) and nonlinear (1/9–1/2), as shown in Table 2.

The prioritization of elements in matrix (1) by the eigenvector method produces the weight vector $w = (w_1, \ldots, w_n)^T$ by solving the following linear system:

$$A w = \lambda w, \qquad e^T w = 1. \tag{2}$$

In relation (2), λ represents the principal eigenvalue of the comparison matrix A, and e is the unit vector e = (1, 1, …, 1) of dimension n.

If an individual’s judgments are completely consistent, the transitive rule $a_{ij} = a_{ik} a_{kj}$ holds for every i, j, and k from the set {1, 2, …, n}. In this case, the principal eigenvalue λ = n. For inconsistent judgments, λ > n, where the deviation of λ from n indicates the degree of inconsistency. The maximum eigenvalue (λmax) and the corresponding priority vector for an inconsistent matrix can be estimated by repeatedly squaring the comparison matrix A. After each squaring, the row sums of the resulting matrix are normalized to give an approximation of the priority vector w. The process continues until the difference between two successive approximations of w is less than a predefined threshold.

This iterative approach ensures that the priority vector accurately reflects the relative importance of decision elements while addressing consistency in the judgments provided by individuals.
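The repeated-squaring procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function name `priority_vector`, the tolerance, and the sample matrices are hypothetical; the matrix is rescaled after each squaring only to avoid numerical overflow (which does not change the normalized row sums); and λmax is estimated as the mean of the ratios (Aw)_i / w_i, one common approximation.

```python
def priority_vector(A, tol=1e-10, max_iter=50):
    """Estimate the principal eigenvector and eigenvalue by repeated squaring."""
    n = len(A)
    original = [[float(x) for x in row] for row in A]
    power = [row[:] for row in original]
    previous = None
    for _ in range(max_iter):
        # Square the current matrix
        power = [[sum(power[i][k] * power[k][j] for k in range(n))
                  for j in range(n)] for i in range(n)]
        # Rescale so all entries sum to 1 (prevents overflow); the row sums
        # of the rescaled matrix are then the normalized row sums
        total = sum(map(sum, power))
        power = [[x / total for x in row] for row in power]
        weights = [sum(row) for row in power]
        # Stop when two successive approximations are close enough
        if previous is not None and max(
                abs(a - b) for a, b in zip(weights, previous)) < tol:
            break
        previous = weights
    # Estimate lambda_max as the average of (A w)_i / w_i
    Aw = [sum(original[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(Aw[i] / weights[i] for i in range(n)) / n
    return weights, lambda_max


# Hypothetical consistent matrix (weights 4:2:1): lambda_max should equal n = 3
consistent = [[1, 2, 4], [1 / 2, 1, 2], [1 / 4, 1 / 2, 1]]
w, lam = priority_vector(consistent)

# Hypothetical inconsistent matrix: lambda_max should exceed n = 3
inconsistent = [[1, 2, 1 / 3], [1 / 2, 1, 2], [3, 1 / 2, 1]]
w2, lam2 = priority_vector(inconsistent)
print(round(lam, 6), lam2 > 3)
```

Because the exponent doubles at every step, a handful of squarings is normally enough for a 6 × 6 matrix, so `max_iter` acts only as a safety limit.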

To summarize: (1) The comparison matrix A is a square matrix in which each element aij represents the pairwise comparison of elements i and j. (2) The principal eigenvalue λmax is the largest eigenvalue of A; its deviation from n indicates the degree of inconsistency in the judgment matrix. (3) The weight vector $w = (w_1, \ldots, w_n)$ is the eigenvector corresponding to λmax, normalized so that the sum of its elements equals 1.

2.4.3. Consistency Measures

To assess the consistency of pairwise comparisons and the quality of the results in AHP, the Consistency Ratio (CR) and Euclidean Distance (ED) are commonly used measures of judgment quality.

The Consistency Ratio (CR) is computed using the Consistency Index (CI), calculated by relation (3),

$$CI = \frac{\lambda_{max} - n}{n - 1}, \tag{3}$$

and the Random Index (RI) presented in [13] for various matrix orders. The Consistency Ratio (CR) is obtained by relation (4):

$$CR = \frac{CI}{RI}. \tag{4}$$

The tolerance value for the CR is 0.10. If the CR is less than 0.10, the judgment process is considered sufficiently consistent. In some cases, judgments are still accepted even if the CR is somewhat greater than 0.10; otherwise, the evaluations may need to be adjusted until a sufficiently small value of CR is achieved.
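
A minimal sketch of the CI and CR computation is given below. The Random Index values are those commonly quoted from Saaty's table and are an assumption of this sketch (the study takes its RI values from [13]); the function names are hypothetical.

```python
# Random Index values as commonly quoted from Saaty's table (assumed here).
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def consistency_index(lambda_max, n):
    """CI = (lambda_max - n) / (n - 1)."""
    return (lambda_max - n) / (n - 1)


def consistency_ratio(lambda_max, n):
    """CR = CI / RI for a comparison matrix of order n (3 <= n <= 9)."""
    return consistency_index(lambda_max, n) / RANDOM_INDEX[n]


def is_acceptable(cr, tolerance=0.10):
    """True when CR is below the tolerance value."""
    return cr < tolerance
```

With six compared elements, as in this study, `consistency_ratio(lambda_max, 6)` divides the CI by the RI assumed for order 6.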

The Euclidean Distance (ED) [26], also referred to as the total deviation, represents the distance measured between all elements in a comparison matrix (1) and the related ratios of the weights of the derived priority vector:

$$ED = \left[ \sum_{i=1}^{n} \sum_{j=1}^{n} \left( a_{ij} - \frac{w_i}{w_j} \right)^{2} \right]^{1/2} \tag{5}$$

This consistency measure is a universal error measure and is therefore invariant to the prioritization method (including the eigenvector method) used for deriving vector w [27].
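
The total deviation can be sketched in a few lines. This is an illustration of the description above (the distance between each matrix entry and the corresponding ratio of weights); the function name is hypothetical, and the weights are assumed to be strictly positive.

```python
import math


def euclidean_distance(a, w):
    """Total deviation between entries a[i][j] and the ratios w[i] / w[j]."""
    n = len(a)
    return math.sqrt(sum((a[i][j] - w[i] / w[j]) ** 2
                         for i in range(n) for j in range(n)))
```

Because the function takes any weight vector `w`, it can be applied regardless of the prioritization method that produced `w`.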

2.4.4. Aggregation of Individual Priorities

When a group consists of M individuals, each member creates a pairwise comparison matrix of type (1). From each matrix, a weight vector w can be extracted using one of the mentioned methods (here, the eigenvector method). These weights provide cardinal information about the relative importance of the compared elements, and the ranks of the elements, determined from these weights, provide ordinal information.

In group applications of AHP, the most commonly used aggregation procedure is AIP (Aggregation of Individual Priorities), that is, the aggregation of individually obtained w vectors. Another option is AIJ (Aggregation of Individual Judgments), in which the individual judgments are aggregated at each entry $a_{ij}$ of matrix A before prioritization is carried out. More details on AIP and AIJ can be found in [28,29].

In this study, the priority vectors obtained from individuals are aggregated geometrically to obtain the group priority vector shown by Equation (6).

$$w_i^{G} = \prod_{j=1}^{M} \left( w_i^{(j)} \right)^{1/M} \tag{6}$$

In Equation (6), M represents the number of members in the group, $w_i^{(j)}$ represents the priority of the i-th decision element (here, AI challenge) for the j-th member, and $w_i^{G}$ represents the aggregated group weight. A final normalization of the priorities $w_i^{G}$ is required so that they sum to 1.
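
The geometric aggregation with final normalization can be sketched as follows. The function name is hypothetical, and all individual weights are assumed to be strictly positive.

```python
import math


def aggregate_priorities(vectors):
    """Geometric mean of individual priority vectors, normalized to sum to 1."""
    m = len(vectors)       # number of group members (M)
    n = len(vectors[0])    # number of decision elements
    geo = [math.prod(v[i] for v in vectors) ** (1.0 / m) for i in range(n)]
    total = sum(geo)
    return [g / total for g in geo]  # final normalization


# Two members, two elements: geometric means are sqrt(0.4) and sqrt(0.1),
# which normalize to 2/3 and 1/3.
group = aggregate_priorities([[0.5, 0.5], [0.8, 0.2]])
```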

Once the group vector $w^{G} = (w_1^{G}, \ldots, w_n^{G})$ is derived, it can be used as the reference vector for the members of the group to compute deviations of individual vectors from the group vector. The term ‘conformity’ or ‘compatibility’ (CO) is commonly used for these individual deviations [13,27]. They are also known as Manhattan distances and are computed by Equation (7):

$$CO = \sum_{i=1}^{n} \left| w_i^{G} - w_i \right| \tag{7}$$

In Equation (7), the superscript G represents the reference priority vector obtained by aggregation. CO indicates the overall similarity between the individual priority vector and the reference group vector. This performance indicator only applies after all computations in AHP are concluded, which is different from using consistency indicators CR and ED.
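
A minimal sketch of the conformity computation is given below. It assumes the plain Manhattan distance (a sum of absolute differences with no further scaling); whether the study applies any additional normalization is not stated here, so that choice, like the function names, is an assumption.

```python
def conformity(w, w_group):
    """Manhattan distance between an individual vector and the group vector."""
    if len(w) != len(w_group):
        raise ValueError("vectors must have the same length")
    return sum(abs(x - g) for x, g in zip(w, w_group))


def average_conformity(vectors, w_group):
    """Mean conformity of all individual vectors with the group vector."""
    return sum(conformity(v, w_group) for v in vectors) / len(vectors)
```

Smaller values indicate that an individual's priorities lie closer to the group reference vector.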

3. Results

3.1. Humans

The applied eigenvector (EV) prioritization method showed that, out of 44 humans, 6 demonstrated unacceptable inconsistency (CR far above the tolerance value of 0.100). The remaining 38 priority vectors were included in the aggregation process to obtain the group priority vector for humans. Individual priority vectors w for the evaluated AI challenges are shown in Table 3, and the group vector is replicated for comparison purposes in Table 4.

Table 3. Priority vectors and consistency/conformity indicators by human individuals.

Table 4. Group weights of AI challenges obtained for humans.

Based on group results in Table 3 and Table 4, humans believe that Data Privacy and Security (Challenge 1) is a critical AI challenge for society, probably due to many interconnected reasons, including the protection of personal data, exposure to possible cyber attacks, and surveillance and control. Other reasons include bias and discrimination in manipulating data, and the level of regulatory and legal compliance. In summary, data privacy and security are important challenges in the development and deployment of AI because they are directly tied to protecting individual rights. Safeguarding privacy and security is crucial for AI to be a force for good in society, rather than a risk to personal freedoms and security. Building societal trust in AI technologies and systems is closely related to data ownership and consent and it seems that humans participating in this study find that this aspect of AI is more important than the other challenges.

The second-ranked AI challenge is Ethical and Moral Considerations. It is ranked as very important because it raises fundamental questions about how technology should serve humanity. As AI continues to integrate into every aspect of society, the decisions made now and in the future regarding ethical design, regulation, and oversight will shape the future of AI and its impact on human lives. Societal trust in AI will depend heavily on how well these ethical and moral concerns are addressed, ensuring that AI is not only effective technology but also fair, accountable, and aligned with human values.

The first two ranked challenges bear almost half of the total weight of all six challenges (48.5%). The next two ranked challenges are Regulation and Governance and Economic Disruption, with nearly equal weights of 0.171 and 0.166 and a combined share of 33.7%. Social and Cultural Resistance (with a weight of 0.093) and Resource and Infrastructure Limitations (0.085) received the least concern from the human participants, together accounting for 17.8% of the total weight.
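
As a quick arithmetic check using only the weights quoted above (the individual weights of the two top-ranked challenges are not restated in this paragraph, so their combined share is obtained here as the remainder of a normalized vector):

```latex
\begin{aligned}
0.171 + 0.166 &= 0.337 \quad (33.7\%) \\
0.093 + 0.085 &= 0.178 \quad (17.8\%) \\
1 - 0.337 - 0.178 &= 0.485 \quad (48.5\%)
\end{aligned}
```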

The average consistency parameter CR for the group was found to be 0.093, which is below the tolerance limit of 0.100 and therefore considered satisfactory. The number of individuals with inconsistency below the limit of 0.100 was 24 (63%), most of them with very good values in the range of 0.018–0.069.

Minimum inconsistency (full consistency!) was obtained by individual 11 (0.000), who judged that all AI challenges are equally important and explained the response as follows: ‘All the cells are filled with one. In my opinion, this is a co-evolution with many necessary conditions to keep it in a safe operating space. The aspect left unmanaged will accumulate the momentum for disruption. From this perspective, all aspects are equally important, as all have the potential to accumulate the critical threat. So in the end our management approach will indirectly formulate the threat regardless of our intentions’.

Out of 38 individuals, 14 exceeded the tolerance limit of 0.100, most of them only slightly. The exceptions were individuals 7 and 37, whose inconsistency was notably higher, with CR values of 0.260 and 0.259, respectively.

The average Euclidean Distance (ED) for the group was 7.045, which closely aligns with the CR values and is considered quite satisfactory given the size (6) of the comparison matrix.

The average conformity (CO) of individual vectors with the geometrically averaged vector for the group was 0.04, which is excellent and consistently confirms the competency and quality of the group of 38 individuals in evaluating AI challenges.

3.2. AI Platforms (Non-Humans)

The same question (an empty matrix with the same explanation as for humans) was forwarded to four selected AI platforms: ChatGPT, Gemini, Perplexity, and DedaAI. Responses were collected and used to prioritize the six challenges in the same way as for humans. Priority vectors (weights) are presented in Table 5, together with the consistency parameters CR and ED. The last column presents the conformity of the priority vectors computed for the AI platforms with the averaged group vector given in the last row of the table.

Table 5. Priority vectors and consistency/conformity indicators by ‘AI individuals’.

Table 6 replicates weights of challenges obtained by AI platforms for easy comparison with weights obtained by humans and shown in Table 4.

Table 6. Group weights of AI challenges obtained for AI platforms.

The first two ranked challenges, Data Privacy and Security (w1) and Economic Disruption (w2), bear 67% of the total weight of all six challenges. The next two ranked challenges are Ethical and Moral Considerations (w3) and Resource and Infrastructure Limitations (w4), with a combined share of 21.1%. The remaining 11.9% belongs to Social and Cultural Resistance (w5) and Regulation and Governance (w6).

The average consistency parameter CR for the group was found to be 0.061, which is below the tolerance limit of 0.100 and therefore considered satisfactory. Of the four AI platforms (non-human individuals), only Gemini was slightly inconsistent (0.123 against the tolerance threshold of 0.100), while the other three had very good CR values below the threshold (ChatGPT: 0.0354, Perplexity: 0.044, and DedaAI: 0.041).

The average Euclidean Distance (ED) for the AI group was 5.906, which closely aligns with the CR values and is considered satisfactory, especially given the size (6) of the comparison matrix.

The average conformity (CO) of individual vectors with the geometrically averaged vector for this group was 0.037. This consistently supports the ‘competency and quality of the group’ of four non-human individuals in evaluating AI challenges, further affirming the reliability and effectiveness of the AI platforms in these evaluations.

4. Discussion

As AI increasingly influences decision-making across various sectors, our findings on AI governance, policy, and the real-world application of generative AI offer valuable insights into integrating AI-driven systems into society and developing ethical frameworks. Our study highlights a significant difference in how humans and AI prioritize challenges: humans ranked governance as third, while AI platforms placed it last. This suggests that AI governance frameworks must account for the fact that AI systems may assess or prioritize issues differently than humans. For example, transparency in AI decision-making is vital because AI may prioritize issues based on data patterns or algorithms that do not align with human ethical values, leading to risks in areas like healthcare, criminal justice, and finance. Thus, governance structures must ensure AI systems are transparent and auditable, allowing their decision-making processes to be understood, explained, and questioned.

As our study shows differences between AI and human prioritization, it could be crucial to create policies that support human oversight of AI systems. Rather than relying solely on AI for decision-making, policies could encourage hybrid models where human intuition and judgment complement AI assessments. A collaborative human–AI approach might help mitigate risks from over-reliance on AI and preserve essential human oversight, ensuring that values like fairness, accountability, and ethics are upheld. Finally, because AI may prioritize challenges in ways that do not reflect the diversity of human perspectives, or that exacerbate biases, safeguards should ensure that AI systems do not perpetuate existing inequities. With regard to inherent bias and fairness, continuous monitoring of AI systems is required, as well as recalibration of AI models to align with societal values.

The intersection of the analytic hierarchy process (AHP) and modern AI-driven optimization techniques, as explored by Wong and Tan [8], opens intriguing possibilities in decision-making and problem-solving. AHP, a structured method for comparing and prioritizing multiple criteria, aligns well with AI-driven optimization techniques like Bayesian optimization. While AHP excels in dealing with human judgment and preferences, AI techniques such as reinforcement learning and large language models can enhance this by processing vast amounts of data and feedback more efficiently. Integrating human feedback into AI models, as Wong and Tan suggest, can guide optimization processes in complex systems. This hybrid approach has the potential to refine the decision-making framework in software development, balancing both human insight and AI’s computational power to achieve more precise, context-sensitive outcomes.

Our study revealed differences in how humans and AI platforms prioritize the challenges brought by AI. More detailed future studies, involving a larger number of participants (both humans and AI platforms) and more AI challenges, could yield further findings about AI’s impact on society, AI governance, policy decisions, and real-world applications of generative AI. As AI becomes increasingly integrated into decision-making frameworks, understanding how AI-driven rankings compare to human intuition could be crucial for developing robust, ethical, and effective AI systems. Exploring these aspects would help both researchers and policymakers grasp the importance of robust AI frameworks and the need for appropriate societal responses to AI’s challenges.

5. Conclusions

Today, we live in a world where artificial intelligence (AI) tools can write essays, tackle complex questions, and engage in conversations that feel almost human. AI is an umbrella term that encompasses technologies such as machine learning, deep learning, natural language processing, and robotic process automation (RPA), each with its unique strengths and applications. These technologies contribute to AI’s broad impact by enabling the creation of images, graphics, music, and even the design of new concepts. AI platforms assist with tasks like efficient translation and text rewriting to improve style and correct grammar. Key breakthroughs in AI include generative AI (tools that create images, designs, and art from simple text prompts), speech and language models (such as Google Assistant or Amazon Alexa), and computer vision systems that interpret images or videos. AI also powers self-driving cars, facial recognition, and medical imaging, helping detect diseases like cancer with precision levels that often surpass those of human doctors.

This study aimed to assess the significance of six selected challenges inherent in current and anticipated trends in AI development and its societal impact. While the approach has specific limitations, it seeks to provide one possible framework for evaluating the emerging influence of AI on our lives.

Six main challenges were selected for evaluation using the analytic hierarchy process (AHP), specifically its pairwise comparison and prioritization procedures. Two groups of participants, 38 humans and four AI platforms, took part in a three-month-long experiment organized by the author of this paper. A comparison of the results revealed that both groups agreed on the top priority AI challenge: Data Privacy and Security (C1). However, while humans ranked Ethical and Moral Considerations (C3) as the second most important challenge, the AI platforms identified Economic Disruption (C2) as the second priority. This difference likely stems from AI’s potential to disrupt labor markets through automation, leading to unemployment and economic inequality. Addressing this challenge involves managing economic disruption and ensuring a fair distribution of AI’s benefits.

On the other hand, humans’ ranking of Ethical and Moral Considerations (C3) as second in importance aligns with their concerns about AI, including issues related to bias, fairness, transparency in AI decision-making, and the risks of surveillance, privacy violations, and misuse.

The evaluation also revealed that AI platforms ranked Ethical and Moral Considerations (C3) as third in importance, while humans ranked Regulation and Governance (C6) in third place. Regulation and Governance is a crucial issue involving regulatory and legal frameworks. Without clear laws and accountability, AI risks could lead to harm or exploitation.

The research presented in this paper was inherently limited for several key reasons. First, the number and nature of the challenges analyzed could be considered limited and potentially arbitrary. However, it is important to note that the human participants in the study did not raise any objections to the selection of challenges and appeared to accept the choices without issue. A central focus of this study was the methodology used to evaluate both humans and AI platforms, particularly in terms of their competitiveness. Rather than contributing another discussion based solely on social arguments, this paper aims to provide a logical framework for evaluation. It moves beyond purely qualitative discourse by incorporating numerical values, using the pairwise comparison procedure within the multicriteria decision-making model known as the analytic hierarchy process (AHP).

Looking ahead, future research could expand on this work by providing a more comprehensive exploration of the problem, potentially incorporating a larger sample of human participants and a wider range of AI platforms. In this study, AI platforms simply answered questions without “seeing” each other’s responses, much like the humans involved. In future research, it could be interesting to allow both humans and AI to compare or evaluate each other’s opinions, and even explore AI-to-AI interaction. This could open up new avenues for studying how different systems—human or machine—might influence each other’s decision-making processes and potentially enhance the overall evaluation process. Additionally, a more refined evaluation methodology would enhance this study, possibly drawing from social choice theory, including both preferential methods like the Borda Count, as well as non-preferential approaches such as Approval Voting. This approach could provide deeper insights into the evaluation process and offer a more robust foundation for comparing human and robotic performance.

Funding

Ministry of Science, Technological Development and Innovation of Serbia, Grant No. 451-03-47/2024-01/200117.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and ethical approval was obtained from the Faculty of Agriculture IRB committee under number 1000/0102-664/4 (date of approval 7 June 2016).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The author declares no conflicts of interest.

References

- How AI Is Impacting Society And Shaping The Future. Last Updated 20 June 2024. Available online: https://www.geeksforgeeks.org/how-ai-is-impacting-society-and-shaping-the-future/ (accessed on 27 June 2024).

- Kingsley, O.-A. Artificial intelligence research: A review on dominant themes, methods, frameworks and future research directions. Telemat. Inform. Rep. 2024, 14, 100127.

- Ajami, R.A.; Karimi, H. Artificial Intelligence: Opportunities and Challenges. J. Asia-Pac. Bus. 2023, 24, 73–75.

- AI Index Report 2021 // Chapter 5. Available online: https://wp.oecd.ai/app/uploads/2021/03/2021-AI-Index-Report-_Chapter-5.pdf (accessed on 27 June 2024).

- Ghallab, M. Responsible AI: Requirements and challenges. AI Perspect. 2019, 1, 3.

- Raftopoulos, M.; Hamariu, J. Human-AI collaboration in organisations: A literature review on enabling value creation. In Proceedings of the European Conference on Information Systems (ECIS), Kristiansand, Norway, 11–16 June 2023.

- Adere, Y.B. The impact of artificial intelligence on society. International Research Journal of Engineering IT & Scientific Research 2023, 5, 3120–3125.

- Wong, M.F.; Tan, C.W. Aligning Crowd-Sourced Human Feedback for Reinforcement Learning on Code Generation by Large Language Models. IEEE Trans. Big Data 2024, 1–12.

- Chen, S.F.; Rosenfeld, R. A survey of smoothing techniques for ME models. IEEE Trans. Speech Audio Process. 2000, 8, 37–50.

- Martins, A.; Smith, N.; Figueiredo, M.; Aguiar, P. Structured sparsity in structured prediction. In Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing, Edinburgh, UK, 27–31 July 2011; Association for Computational Linguistics: Stroudsburg, PA, USA, 2011; pp. 1500–1511.

- Nelakanti, A.K.; Archambeau, C.; Mairal, J.; Bach, F.; Bouchard, G. Structured penalties for log-linear language models. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; Association for Computational Linguistics: Stroudsburg, PA, USA, 2013; pp. 233–243.

- Available online: http://chatGPT.com (accessed on 17 July 2024).

- Saaty, T.L. Analytic Hierarchy Process; McGraw-Hill: New York, NY, USA, 1980.

- Available online: https://www.perplexity.ai/ (accessed on 12 August 2024).

- Available online: https://gemini.google.com/ (accessed on 12 August 2024).

- Available online: https://dedaai.com/ (accessed on 12 August 2024).

- Saaty, T.L. Eigenvector and logarithmic least squares. Eur. J. Oper. Res. 1990, 48, 156–160.

- Saaty, T.L.; Vargas, L.G. Inconsistency and rank preservation. J. Math. Psychol. 1984, 28, 205–214.

- Saaty, T.L.; Vargas, L.G. Comparison of eigenvalue, logarithmic least squares and least squares methods in estimating ratios. Math. Model. 1984, 5, 309–324.

- Srdjevic, B. Combining different prioritization methods in the analytic hierarchy process synthesis. Comput. Oper. Res. 2005, 32, 1897–1919.

- Crawford, G.; Williams, C. A note on the analysis of subjective judgment matrices. J. Math. Psychol. 1985, 29, 387–405.

- Chu, A.; Kalaba, R.; Spingarn, K. A comparison of two methods for determining the weights of belonging to fuzzy sets. J. Optim. Theory Appl. 1979, 27, 531–541.

- Mikhailov, L. A fuzzy programming method for deriving priorities in the analytic hierarchy process. J. Oper. Res. Soc. 2000, 51, 341–349.

- Kou, G.; Lin, C. A cosine maximization method for the priority vector derivation in AHP. Eur. J. Oper. Res. 2014, 235, 225–232.

- Tu, J.; Zhibin, W.; Li, Y.; Xiang, C. Optimization Models to Meet the Conditions of Order Preservation in the Analytic Hierarchy Process. Available online: https://arxiv.org/abs/2411.02227 (accessed on 17 July 2024).

- Takeda, E.; Cogger, K.; Yu, P.L. Estimating criterion weights using eigenvectors: A comparative study. Eur. J. Oper. Res. 1987, 29, 360–369.

- Srđević, B.; Srđević, Z. Prioritisation in the analytic hierarchy process for real and generated comparison matrices. Expert Syst. Appl. 2023, 225, 120015.

- Forman, E.; Peniwati, K. Aggregating individual judgments and priorities with the analytic hierarchy process. Eur. J. Oper. Res. 1998, 108, 165–169.

- Srđević, B. Aggregation of priorities in group applications of the analytic hierarchy process in water management. Vodoprivreda 2024, 56, 37–48. (In Serbian)

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).