Interactive Agent-Based Simulation for Experimentation: A Case Study with Cooperative Game Theory

Abstract

1. Introduction

2. Background

2.1. Cooperative Game Theory

2.2. Agent-Based Modeling

2.3. Game Theory and Agent-Based Modeling

Game Theory and Agent-Based Modeling in Human Subject Experimentation

2.4. Glove Game

3. Agent-Based Model and Simulation

3.1. ODD Description of the Model Used in the Experiment to Study Human Decision-Making of Coalition Formation

3.1.1. Purpose and Patterns

3.1.2. Entities, State Variables, and Scales

- Scales

3.1.3. Process Overview and Scheduling

3.1.4. Design Concepts

- Basic principles

- Emergence

- Adaptation

- Objectives

- Learning

- Prediction

- Sensing

- Interaction

- Stochasticity

- Collectives

- Observation

3.1.5. Initialization

3.1.6. Input Data

3.1.7. Sub-Models

- Graphical User Interface

- Algorithm Steps

4. Methodology

4.1. Experimental Method

Correlational Research

4.2. Prototype

4.3. Recruitment Approach

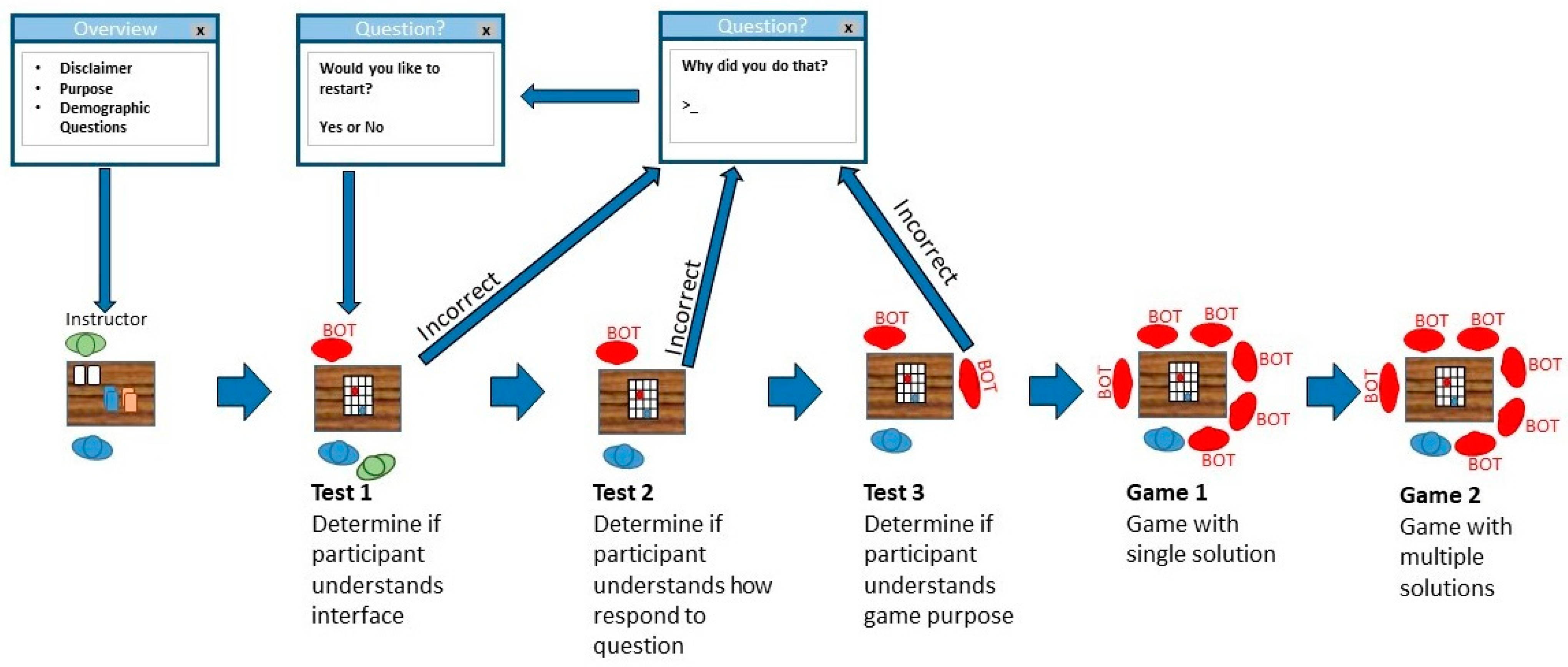

4.4. Experimental Protocol

4.5. Data Collection

- What is your expertise in game theory? “None”, “Low”, “Medium”, “High”, “Never heard of it”, “Prefer not to answer”.

5. Results

5.1. Descriptive Statistics

5.2. Game Theory Experiences Impact

- Does the experience affect the outcome of being a core coalition?

- Does the experience affect the final payoff of the human player?

- Do those with the experience receive, on average, a higher payoff than those that do not?

5.3. Implications of the Findings

6. Limitations and Discussion

6.1. Methodology

6.2. Experiment Protocol

6.2.1. Carryover Effect

6.2.2. Participants

6.2.3. Glove Game

6.3. Statistical Tests

6.3.1. Sample Size

6.3.2. Causality

6.3.3. Power Analysis

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Category | Population |
|---|---|
| Experienced in game theory | 8 |
| Not experienced in game theory | 23 |

| Category | Percentage |
|---|---|
| The human’s final coalition is a member of the core | 42% |
| The human’s final coalition is not a member of the core | 58% |

| Category | Percentage |
|---|---|
| The human’s final payoff is core payoff or higher | 60% |
| The human’s final payoff is less than the core payoff | 40% |

| | Core Coalition Membership | Otherwise |
|---|---|---|
| Game theory experience | 7 | 9 |
| No experience | 19 | 27 |

| | Core Payoff or Greater | Less Than Core Payoff |
|---|---|---|
| Game theory experience | 11 | 5 |
| No experience | 26 | 20 |
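
One way to probe whether game theory experience is associated with the outcomes in the two contingency tables above is a Pearson chi-square test of independence. The sketch below is an illustration only. The choice of test, the absence of a continuity correction, and the helper name `chi_square_2x2` are assumptions, not the procedure reported by the authors. The counts are copied from the tables.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table.

    Hypothetical helper for illustration; no continuity correction is applied.
    Returns the statistic and the p-value for 1 degree of freedom.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)

    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected

    # With 1 degree of freedom the statistic is a squared standard normal,
    # so the upper-tail probability equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Rows: game theory experience, no experience.
core_membership = [[7, 9], [19, 27]]   # columns: core coalition, otherwise
core_payoff = [[11, 5], [26, 20]]      # columns: core payoff or greater, less

for label, table in [
    ("core coalition membership", core_membership),
    ("core payoff or greater", core_payoff),
]:
    chi2, p = chi_square_2x2(table)
    print(f"{label}: chi2 = {chi2:.3f}, p = {p:.3f}")
```

A large p-value here would only indicate that the counts give no evidence of an association. With cell counts this small, an exact test may be a more appropriate choice.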

| Experience Characteristics | Game | Final Payoff: Correlation | Correlation p-Value | Final Payoff: T-Test of Sample Means | T-Test p-Value |
|---|---|---|---|---|---|
| Game Theory Experience | Single | 0.08 | 0.69 | (1.69, 1.65) | 0.32 |
| Game Theory Experience | Multiple | 0.04 | 0.82 | (1.53, 1.51) | 0.43 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Collins, A.J.; Etemadidavan, S. Interactive Agent-Based Simulation for Experimentation: A Case Study with Cooperative Game Theory. Modelling 2021, 2, 425-447. https://doi.org/10.3390/modelling2040023
