Leveraging Multimodal Large Language Models (MLLMs) for Enhanced Object Detection and Scene Understanding in Thermal Images for Autonomous Driving Systems

Abstract

:1. Introduction

2. Literature Review

3. Methodology

3.1. Visual Reasoning and Scene Understanding

3.2. Proposed MLLM Object Detection Framework Using IR-RGB Combination

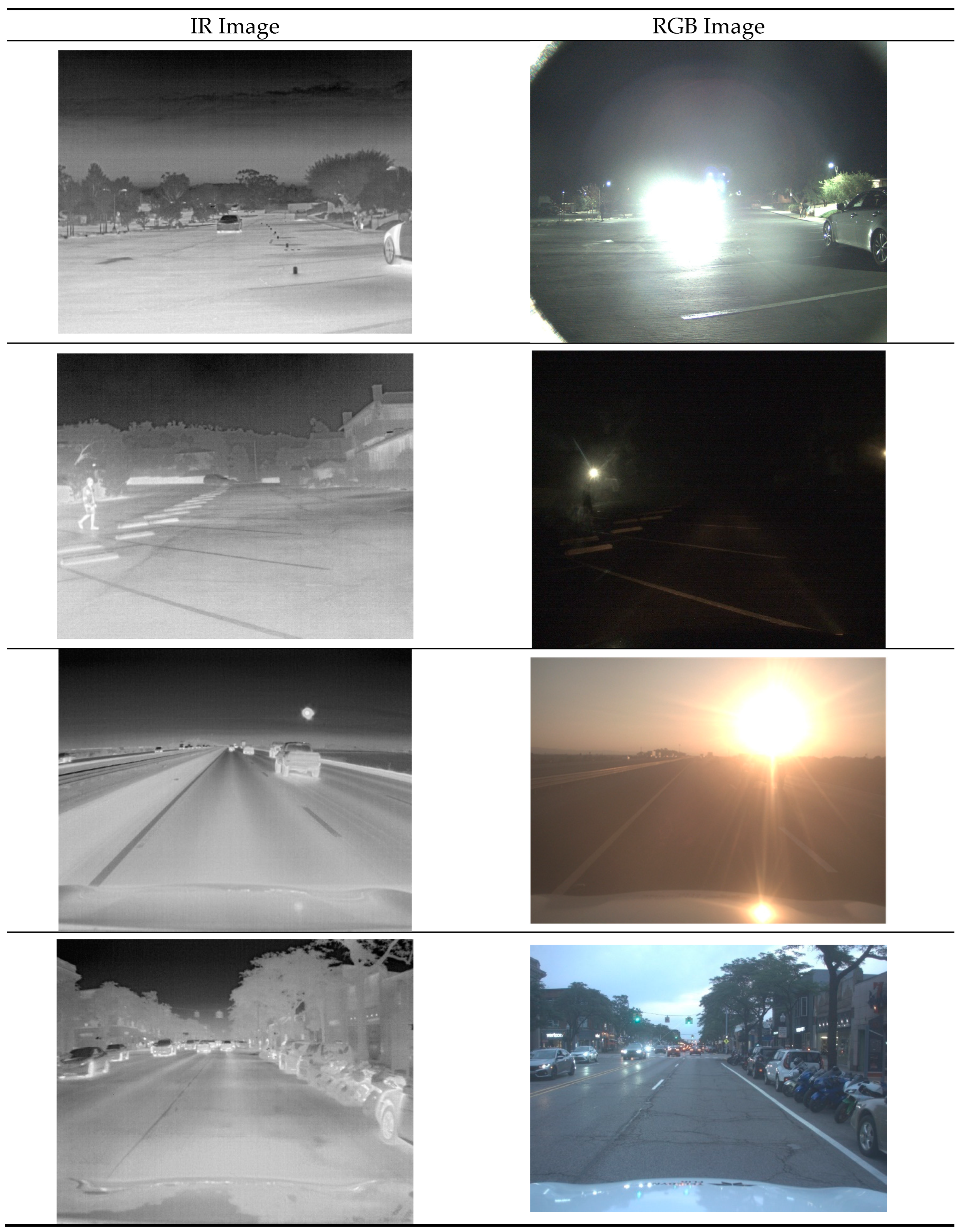

3.3. Dataset

4. Analysis and Results

4.1. Visual Reasoning and Scene Understanding Results

4.1.1. RQ1: Generalized Understanding

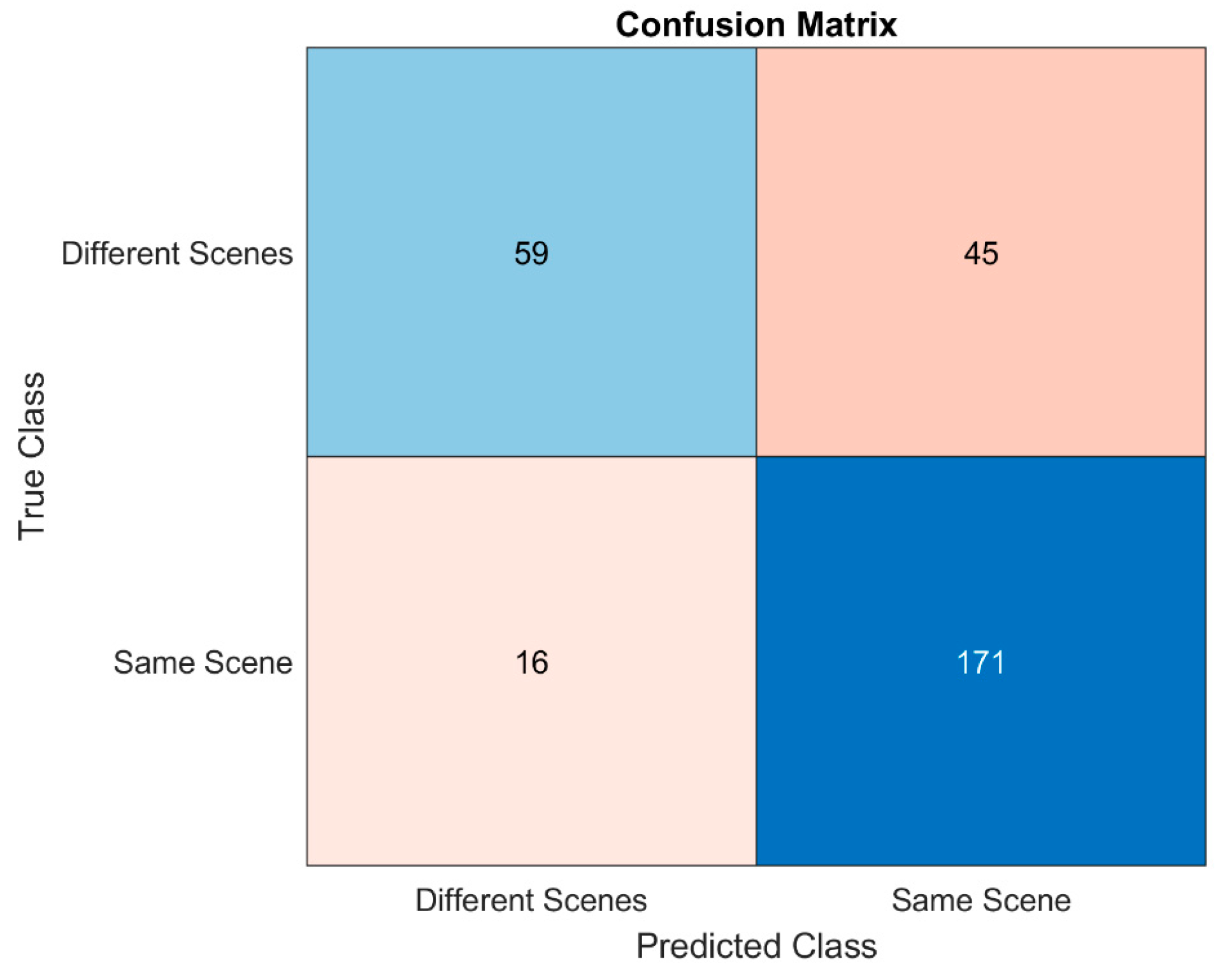

4.1.2. RQ2: Discerning Same Scene Images

4.1.3. Evaluation Results

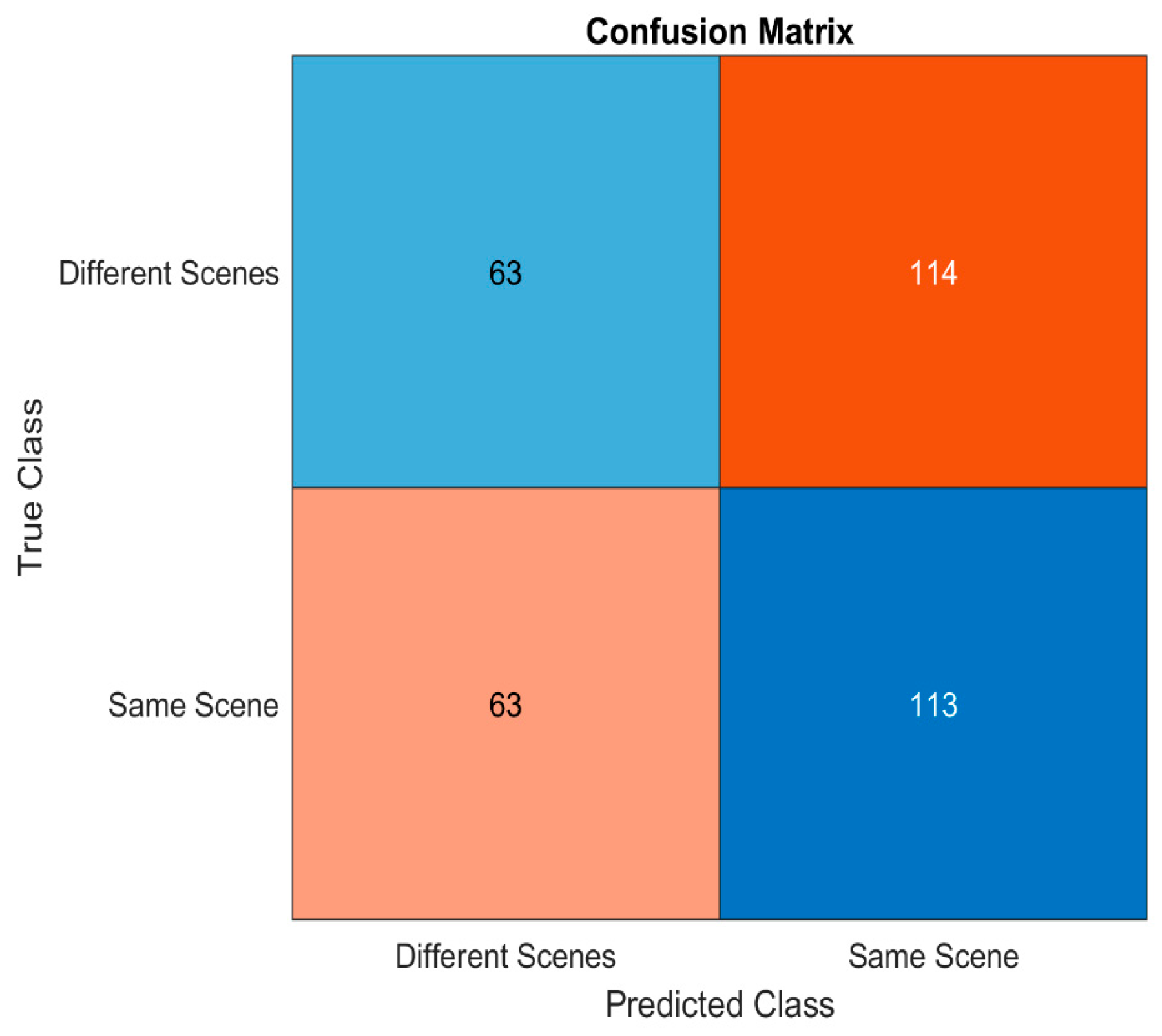

4.2. MLLM Framework of IR-RGB

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Arnay, R.; Acosta, L.; Sigut, M.; Toledo, J. Asphalted road temperature variations due to wind turbine cast shadows. Sensors 2009, 9, 8863–8883. [Google Scholar] [CrossRef] [PubMed]

- Ligocki, A.; Jelinek, A.; Zalud, L.; Rahtu, E. Fully automated dcnn-based thermal images annotation using neural network pretrained on rgb data. Sensors 2021, 21, 1552. [Google Scholar] [CrossRef] [PubMed]

- Ashqar, H.I.; Jaber, A.; Alhadidi, T.I.; Elhenawy, M. Advancing Object Detection in Transportation with Multimodal Large Language Models (MLLMs): A Comprehensive Review and Empirical Testing. arXiv 2024, arXiv:2409.18286. [Google Scholar] [CrossRef]

- Skladchykov, I.O.; Momot, A.S.; Galagan, R.M.; Bohdan, H.A.; Trotsiuk, K.M. Application of YOLOX deep learning model for automated object detection on thermograms. Inf. Extr. Process. 2022, 2022, 69–77. [Google Scholar] [CrossRef]

- Zhang, C.; Okafuji, Y.; Wada, T. Reliability evaluation of visualization performance of convolutional neural network models for automated driving. Int. J. Automot. Eng. 2021, 12, 41–47. [Google Scholar] [CrossRef]

- Hassouna, A.A.A.; Ismail, M.B.; Alqahtani, A.; Alqahtani, N.; Hassan, A.S.; Ashqar, H.I.; AlSobeh, A.M.; Hassan, A.A.; Elhenawy, M.A. Generic and Extendable Framework for Benchmarking and Assessing the Change Detection Models. Preprints 2024, 2024031106. [Google Scholar] [CrossRef]

- Muthalagu, R.; Bolimera, A.S.; Duseja, D.; Fernandes, S. Object and Lane detection technique for autonomous car using machine learning approach. Transp. Telecommun. J. 2021, 22, 383–391. [Google Scholar] [CrossRef]

- Wang, L.; Sun, P.; Xie, M.; Ma, S.; Li, B.; Shi, Y.; Su, Q. Advanced driver-assistance system (ADAS) for intelligent transportation based on the recognition of traffic cones. Adv. Civ. Eng. 2020, 2020, 8883639. [Google Scholar] [CrossRef]

- Lewis, A. Multimodal Large Language Models for Inclusive Collaboration Learning Tasks. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: Student Research Workshop, Seattle, DC, USA, 10–15 July 2022. [Google Scholar] [CrossRef]

- Radwan, A.; Amarneh, M.; Alawneh, H.; Ashqar, H.I.; AlSobeh, A.; Magableh, A.A.A.R. Predictive analytics in mental health leveraging llm embeddings and machine learning models for social media analysis. Int. J. Web Serv. Res. (IJWSR) 2024, 21, 1–22. [Google Scholar] [CrossRef]

- Wang, S.; Zhao, Z.; Ouyang, X.; Wang, Q.; Shen, D. ChatCAD: Interactive computer-aided diagnosis on medical image using large language models. arXiv 2023, arXiv:2302.07257. [Google Scholar] [CrossRef]

- Gao, P. Research on Grid Inspection Technology Based on General Knowledge Enhanced Multimodal Large Language Models. In Proceedings of the Twelfth International Symposium on Multispectral Image Processing and Pattern Recognition (MIPPR2023), Wuhan, China, 10–12 November 2023. [Google Scholar] [CrossRef]

- Jaradat, S.; Alhadidi, T.I.; Ashqar, H.I.; Hossain, A.; Elhenawy, M. Exploring Traffic Crash Narratives in Jordan Using Text Mining Analytics. arXiv 2024, arXiv:2406.09438. [Google Scholar]

- Tami, M.; Ashqar, H.I.; Elhenawy, M. Automated Question Generation for Science Tests in Arabic Language Using NLP Techniques. arXiv 2024, arXiv:2406.08520. [Google Scholar]

- Alhadidi, T.; Jaber, A.; Jaradat, S.; Ashqar, H.I.; Elhenawy, M. Object Detection using Oriented Window Learning Vi-sion Transformer: Roadway Assets Recognition. arXiv 2024, arXiv:2406.10712. [Google Scholar]

- Ren, Y.; Chen, Y.; Liu, S.; Wang, B.; Yu, H.; Cui, Z. TPLLM: A traffic prediction framework based on pretrained large language models. arXiv 2024, arXiv:2403.02221. [Google Scholar]

- Zhou, X.; Liu, M.; Zagar, B.L.; Yurtsever, E.; Knoll, A.C. Vision language models in autonomous driving and intelligent transportation systems. arXiv 2023, arXiv:2310.14414. [Google Scholar]

- Zhang, Z.; Sun, Y.; Wang, Z.; Nie, Y.; Ma, X.; Sun, P.; Li, R. Large language models for mobility in transportation systems: A survey on forecasting tasks. arXiv 2024, arXiv:2405.02357. [Google Scholar]

- Cui, C.; Ma, Y.; Cao, X.; Ye, W.; Wang, Z. Receive, reason, and react: Drive as you say, with large language models in autonomous vehicles. IEEE Intell. Transp. Syst. Mag. 2024, 16, 81–94. [Google Scholar] [CrossRef]

- Sha, H.; Mu, Y.; Jiang, Y.; Chen, L.; Xu, C.; Luo, P.; Li, S.E.; Tomizuka, M.; Zhan, W.; Ding, M. Languagempc: Large language models as decision makers for autonomous driving. arXiv 2023, arXiv:2310.03026. [Google Scholar]

- Voronin, V.; Zhdanova, M.; Gapon, N.; Alepko, A.; Zelensky, A.A.; Semenishchev, E.A. Deep Visible and Thermal Image Fusion for Enhancement Visibility for Surveillance Application. In Proceedings of the SPIE Security + Defence, Berlin, Germany, 5–8 September 2022. [Google Scholar] [CrossRef]

- Chen, W.; Hu, H.; Chen, X.; Verga, P.; Cohen, W.W. MuRAG: Multimodal Retrieval-Augmented Generator for Open Question Answering Over Images and Text. arXiv 2022, arXiv:2210.02928. [Google Scholar] [CrossRef]

- Li, G.; Wang, Y.; Li, Z.; Zhang, X.; Zeng, D. RGB-T Semantic Segmentation with Location, Activation, and Sharpening. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 1223–1235. [Google Scholar] [CrossRef]

- Morales, J.; Vázquez-Martín, R.; Mandow, A.; Morilla-Cabello, D.; García-Cerezo, A. The UMA-SAR Dataset: Multimodal Data Collection from a Ground Vehicle During Outdoor Disaster Response Training Exercises. Int. J. Rob. Res. 2021, 40, 835–847. [Google Scholar] [CrossRef]

- Kütük, Z.; Algan, G. Semantic Segmentation for Thermal Images: A Comparative Survey. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, New Orleans, LA, USA, 19–20 June 2022. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. arXiv 2014, arXiv:1411.4038. [Google Scholar] [CrossRef]

- Chen, X.; Zhang, T.; Wang, Y.; Wang, Y.; Zhao, H. Futr3d: A unified sensor fusion framework for 3d detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–23 June 2023; pp. 172–181. [Google Scholar]

- Selvia, N.; Ashour, K.; Mohamed, R.; Essam, H.; Emad, D.; Elhenawy, M.; Ashqar, H.I.; Hassan, A.A.; Alhadidi, T.I. Advancing roadway sign detection with yolo models and transfer learning. In Proceedings of the IEEE 3rd International Conference on Computing and Machine Intelligence (ICMI), Mt Pleasant, MI, USA, 13–14 April 2024; pp. 1–4. [Google Scholar]

- Xu, Z.; Zhang, Y.; Xie, E.; Zhao, Z.; Guo, Y.; Wong, K.-Y.K.; Li, Z.; Zhao, H. Drivegpt4: Interpretable end-to-end autonomous driving via large language model. IEEE Robot. Autom. Lett. 2024, 9, 8186–8193. [Google Scholar] [CrossRef]

- Jaradat, S.; Nayak, R.; Paz, A.; Ashqar, H.I.; Elhenawy, M. Multitask Learning for Crash Analysis: A Fine-Tuned LLM Framework Using Twitter Data. Smart Cities 2024, 7, 2422–2465. [Google Scholar] [CrossRef]

- Elhenawy, M.; Abutahoun, A.; Alhadidi, T.I.; Jaber, A.; Ashqar, H.I.; Jaradat, S.; Abdelhay, A.; Glaser, S.; Rakotonirainy, A. Visual Reasoning and Multi-Agent Approach in Multimodal Large Language Models (MLLMs): Solving TSP and mTSP Combinatorial Challenges. Mach. Learn. Knowl. Extr. 2024, 6, 1894–1920. [Google Scholar] [CrossRef]

- Masri, S.; Ashqar, H.I.; Elhenawy, M. Leveraging Large Language Models (LLMs) for Traffic Management at Urban Intersections: The Case of Mixed Traffic Scenarios. arXiv 2024, arXiv:2408.00948. [Google Scholar]

- Tami, M.A.; Ashqar, H.I.; Elhenawy, M.; Glaser, S.; Rakotonirainy, A. Using Multimodal Large Language Models (MLLMs) for Automated Detection of Traffic Safety-Critical Events. Vehicles 2024, 6, 1571–1590. [Google Scholar] [CrossRef]

- Tan, H.; Bansal, M. Vokenization: Improving language understanding with contextualized, visual-grounded supervision. arXiv 2020, arXiv:2010.06775. [Google Scholar]

- Su, L.; Duan, N.; Cui, E.; Ji, L.; Wu, C.; Luo, H.; Liu, Y.; Zhong, M.; Bharti, T.; Sacheti, A. GEM: A general evaluation benchmark for multimodal tasks. arXiv 2021, arXiv:2106.09889. [Google Scholar]

- Brauers, J.; Schulte, N.; Aach, T. Multispectral filter-wheel cameras: Geometric distortion model and compensation algorithms. IEEE Trans. Image Process. 2008, 17, 2368–2380. [Google Scholar] [CrossRef]

- Yang, L.; Ma, R.; Zakhor, A. Drone object detection using rgb/ir fusion. arXiv 2022, arXiv:2201.03786. [Google Scholar] [CrossRef]

- Reithmeier, L.; Krauss, O.; Zwettler, A.G. Transfer Learning and Hyperparameter Optimization for Instance Segmentation with RGB-D Images in Reflective Elevator Environments. In Proceedings of the WSCG’2021–29. International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision’2021, Plzen, Czech Republic, 17–20 May 2021. [Google Scholar]

- Shinmura, F.; Deguchi, D.; Ide, I.; Murase, H.; Fujiyoshi, H. Estimation of Human Orientation using Coaxial RGB-Depth Images. In Proceedings of the 10th International Conference on Computer Vision Theory and Applications (VISAPP-2015), Berlin, Germany, 11–14 March 2015; pp. 113–120. [Google Scholar]

- Yamakawa, T.; Fukano, K.; Onodera, R.; Masuda, H. Refinement of colored mobile mapping data using intensity images. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 167–173. [Google Scholar] [CrossRef]

- Rosalina, N.H. An approach of securing data using combined cryptography and steganography. Int. J. Math. Sci. Comput. (IJMSC) 2020, 6, 1–9. [Google Scholar]

- Dale, R. GPT-3: What’s it good for? Nat. Lang. Eng. 2021, 27, 113–118. [Google Scholar] [CrossRef]

- Kenton, J.D.M.-W.C.; Toutanova, L.K. Bert: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the naacL-HLT, Minneapolis, MN, USA, 2–7 June 2019; p. 2. [Google Scholar]

- Liu, Y. Roberta: A robustly optimized bert pretraining approach. arXiv 2019, arXiv:1907.11692. [Google Scholar] [CrossRef]

- Sanderson, K. GPT-4 is here: What scientists think. Nature 2023, 615, 773. [Google Scholar] [CrossRef]

- Krišto, M.; Ivašić-Kos, M. Thermal imaging dataset for person detection. In Proceedings of the 2019 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 20–24 May 2019; pp. 1126–1131. [Google Scholar]

| Do the following/1—Describe the first image/2—describe the second image/3—compare the two descriptions then What is the probability that these two images were captured of the same scene? Please return a number between zero and one, where one means you are certain the two images depict the same scene. |

| Your role is systematically inspecting RGB image for ADAS and autonomous vehicles applications, analyze the provided RGB image. Your output should be formatted as a Python dictionary. Each key in the dictionary represents a category ID from the list of interest, and each corresponding value should be the frequency at which that category appears in the scene captured by the RGB image. The analysis must focus exclusively on the following categories:/Category Id 1: Person/Category Id 2: Bike/Category Id 3: Car (includes pickup trucks and vans)/Category Id 4: Motorcycle/Category Id 6: Bus/Category Id 7: Train/Category Id 8: Truck (specifically semi/freight trucks, not pickup trucks)/Category Id 10: Traffic light/Category Id 11: Fire hydrant/Category Id 12: Street sign/Category Id 17: Dog/Category Id 37: Skateboard/Category Id 73: Stroller (also known as a pram, a four-wheeled carriage for a child)/Category Id 77: Scooter/Category Id 79: Other vehicle (includes less common vehicles such as construction equipment and trailers)/Do not include descriptive text or explanations in your output. Only provide the dictionary with the category IDs and frequencies as values. The dictionary should look similar to this: {1: 0, 2: 1, 3: 3, …}. If a category is not present in the image, assign a frequency of 0 to it in the dictionary. |

| Your role is systematically inspecting thermal image for ADAS and autonomous vehicles applications, analyze the provided thermal image. Your output should be formatted as a Python dictionary. Each key in the dictionary represents a category ID from the list of interest, and each corresponding value should be the frequency at which that category appears in the scene captured by the RGB image. The analysis must focus exclusively on the following categories:/Category Id 1: Person/Category Id 2: Bike/Category Id 3: Car (includes pickup trucks and vans)/Category Id 4: Motorcycle/Category Id 6: Bus/Category Id 7: Train/Category Id 8: Truck (specifically semi/freight trucks, not pickup trucks)/Category Id 10: Traffic light/Category Id 11: Fire hydrant/Category Id 12: Street sign/Category Id 17: Dog/Category Id 37: Skateboard/Category Id 73: Stroller (also known as a pram, a four-wheeled carriage for a child)/Category Id 77: Scooter/Category Id 79: Other vehicle (includes less common vehicles such as construction equipment and trailers)/Do not include descriptive text or explanations in your output. Only provide the dictionary with the category IDs and frequencies as values. The dictionary should look similar to this: {1: 0, 2: 1, 3: 3, …}. If a category is not present in the image, assign a frequency of 0 to it in the dictionary. |

| Your role is systematically inspecting pair of thermal and RGB images for ADAS and autonomous vehicles applications, analyze the provided pair of images. Your output should be formatted as a Python dictionary. Each key in the dictionary represents a category ID from the list of interest, and each corresponding value should be the frequency at which that category appears in the scene captured by image pair. The analysis must focus exclusively on the following categories:/Category Id 1: Person/Category Id 2: Bike/Category Id 3: Car (includes pickup trucks and vans)/Category Id 4: Motorcycle/Category Id 6: Bus/Category Id 7: Train/Category Id 8: Truck (specifically semi/freight trucks, not pickup trucks)/Category Id 10: Traffic light/Category Id 11: Fire hydrant/Category Id 12: Street sign/Category Id 17: Dog/Category Id 37: Skateboard/Category Id 73: Stroller (also known as a pram, a four-wheeled carriage for a child)/Category Id 77: Scooter/Category Id 79: Other vehicle (includes less common vehicles such as construction equipment and trailers)/Do not include descriptive text or explanations in your output. Only provide the dictionary with the category IDs and frequencies as values. The dictionary should look similar to this: {1: 0, 2: 1, 3: 3, …}. If a category is not present in the image, assign a frequency of 0 to it in the dictionary. |

| Experiment 1: IR and RGB combined | Your role is systematically inspecting pair of thermal and RGB images for ADAS and autonomous vehicles applications, analyse the provided pair of images. Your output should be formatted as a Python dictionary. Each key in the dictionary represents a category ID from the list of interest, and each corresponding value should be the frequency at which that category appears in the scene captured by image pair. The analysis must focus exclusively on the following categories:/Category Id 1: Person/Category Id 2: Bike/Category Id 3: Car (includes pickup trucks and vans)/Category Id 4: Motorcycle/Category Id 6: Bus/Category Id 7: Train/Category Id 8: Truck (specifically semi/freight trucks, not pickup trucks)/Category Id 10: Traffic light/Category Id 11: Fire hydrant/Category Id 12: Street sign/Category Id 17: Dog/Category Id 37: Skateboard/Category Id 73: Stroller (also known as a pram, a four-wheeled carriage for a child)/Category Id 77: Scooter/Category Id 79: Other vehicle (includes less common vehicles such as construction equipment and trailers)/Do not include descriptive text or explanations in your output. Only provide the dictionary with the category IDs and frequencies as values. The dictionary should look similar to this: {1: 0, 2: 1, 3: 3, …}. If a category is not present in the image, assign a frequency of 0 to it in the dictionary. |

| Experiment 2: RGB given IR | def generate_prompt(detected_objects): category_descriptions = { 1: “Person”, 2: “Bike”, 3: “Car (includes pickup trucks and vans)”, 4: “Motorcycle”, 6: “Bus”, 7: “Train”, 8: “Truck (specifically semi/freight trucks, not pickup trucks)”, 10: “Traffic light”, 11: “Fire hydrant”, 12: “Street sign”, 17: “Dog”, 37: “Skateboard”, 73: “Stroller (also known as a pram)”, 77: “Scooter”, 79: “Other vehicle (includes construction equipment and trailers)”} # Start building the prompt prompt = (“Your task is to systematically inspect RGB images for ADAS and autonomous vehicles applications,” “taking into account prior detections from corresponding IR images. Detected objects in the IR image are provided in the format” f“‘{detected_objects}’. Note that certain categories might appear exclusively in the IR images and not in the RGB counterparts, and vice versa.\n\n” “Your findings should be structured as a Python dictionary, where each key corresponds to a category ID of interest and each value indicates” “the frequency of its appearance in both RGB and IR scenes. Concentrate your analysis on the following categories:\n\n”) # Add category list for category_id, description in category_descriptions.items(): prompt += f“—Category Id {category_id}: {description}\n” # Finish the prompt prompt += (“\nDo not include descriptive text or explanations in your output. Provide only the dictionary with the category IDs and frequencies as values,” “such as ‘{1: 0, 2: 1, 3: 3, …}’. If a category is not present in either the RGB or IR image, assign a frequency of 0 to it in the dictionary.”) return prompt |

| Experiment 3: IR given RGB | def generate_prompt_IR_given_RGB(rgb_categories): # Descriptions of categories detected in the RGB image category_descriptions = { 1: “Person”, 2: “Bike”, 3: “Car (includes pickup trucks and vans)”, 4: “Motorcycle”, 6: “Bus”, 7: “Train”, 8: “Truck (specifically semi/freight trucks, not pickup trucks)”, 10: “Traffic light”, 11: “Fire hydrant”, 12: “Street sign”, 17: “Dog”, 37: “Skateboard”, 73: “Stroller (also known as a pram)”, 77: “Scooter”, 79: “Other vehicle (includes construction equipment and trailers)”} # Start building the prompt prompt = (“Your task is to systematically inspect IR images for ADAS and autonomous vehicles applications,” “using the context of previously detected objects in corresponding RGB images. Categories detected in the RGB images are provided” f“as ‘{rgb_categories}’. Be aware that some categories may appear in the RGB images but not in the IR images, and vice versa.\n\n” “Your findings should be structured as a Python dictionary. Each key in the dictionary represents a category ID from the list of interest,” “and each corresponding value should be the frequency at which that category appears in the scene captured by the IR image. Focus your analysis on the following categories:\n\n”) # Add category list for category_id, description in category_descriptions.items(): prompt += f“- Category Id {category_id}: {description}\n” # Finish the prompt prompt += (“\nDo not include descriptive text or explanations in your output. Provide only the dictionary with the category IDs and frequencies as values,” “such as ‘{1: 0, 2: 1, 3: 3, …}’. If a category is not present in the IR image, assign a frequency of 0 to it in the dictionary.”) return prompt |

| Evaluation Metrics | Gemini 1.0 Pro Vision | GPT4 Vision Preview |

|---|---|---|

| Precision (same scene) | 0.79 | 0.50 |

| Recall (same scene) | 0.91 | 0.64 |

| F1 Score (same scene) | 0.85 | 0.56 |

| Precision (different scene) | 0.79 | 0.50 |

| Recall (different scene) | 0.57 | 0.36 |

| F1 Score (different scene) | 0.66 | 0.42 |

| MLLM Model | Input Images | MLLM Answer |

|---|---|---|

| GPT4 |   | 1. The first image depicts a dimly lit scene, possibly at night, with heavy fog or mist that reduces visibility. A single person is visible and walks away from the viewpoint of a bright light source that appears to be a street lamp. The ground shows parking lot markings, and the overall atmosphere is quite eerie owing to low light and fog. 2. The second image shows an urban environment with clearer visibility, which is likely captured during the day or evening in a well-lit area. There are multiple vehicles, both parked and in motion, and the architecture suggests an overpass or a bridge structure. The scene is black and white, and reflections on wet ground indicate recent rain or water presence. Comparing the two descriptions, the scenes depicted in the images were quite different. The first image is characterized by a solitary figure in a foggy, dimly lit parking lot, whereas the second image is an urban setting with multiple vehicles and clearer conditions. The architectural elements, lighting conditions, and presence of multiple subjects in the second image contrast with the solitary figure and the atmospheric conditions of the first image. Given these observations, the probability that these two images were captured in the same scene is very low. The differences in lighting, weather conditions, environment, and activity levels suggest that they represent different scenes. Therefore, the probability was estimated to be close to 0. |

| Gemini |   | 1. The first image is a thermal image of a street at night. There are cars on the street, and people walk sidewalks. The image is blurry, and it is difficult to see many details. 2. The second image is a photograph of a parking lot at night. There is one person walking in the parking lot, and there are cars parked in the background. The image is also blurry, and it is difficult to see many details. 3. The two images are similar in that they are both thermal images of a street at night. However, there are also some differences between the two images. The first image is a wider shot, and it shows more of the street. The second image is a closer shot, and it shows more detail of the person walking. Overall, I would say that the probability that these two images were captured of the same scene is 0.5. |

| Gemini |   | 1. The first image is a thermal image of a highway at night. The image is in black and white, and shows the highway from a driver’s perspective. The highway is straight and there are cars driving in both directions. The image is clear and the details of the cars and the highway are visible. 2. The second image is a color image of a street intersection during the day. The image is in color and shows the intersection from a driver’s perspective. The street is straight and there are cars driving in both directions. The image is clear and the details of the cars and the street are visible. 3. The two images are similar in that they are both images of roads from a driver’s perspective. However, the images are different in that the first image is a thermal image and the second image is a color image. The first image is taken at night and the second image is taken during the day. The first image is of a highway and the second image is of a street intersection. Based on the differences between the two images, it is unlikely that they were captured of the same scene. I would give it a probability of 0.1. |

| MLLM Model | GPT4 Vision Preview | Gemini 1.0 Pro Vision | ||||||

|---|---|---|---|---|---|---|---|---|

| Evaluation Metrics per Category | TPR | FPR | MAE | MAPE | TPR | FPR | MAE | MAPE |

| Category ID 1: Person | 0.57 | 0 | 4.76 | 70.39 | 0.39 | 0 | 4.47 | 81.48 |

| Category ID 2: Bike | 0.31 | 0.01 | 1.89 | 78.40 | 0.41 | 0.02 | 1.37 | 66.53 |

| Category ID 3: Car (includes pickup trucks and vans) | 0.86 | 0.09 | 4.35 | 55.81 | 0.90 | 0.08 | 5.04 | 59.35 |

| Category ID 4: Motorcycle | 0.08 | 0 | 1.38 | 96.15 | 0.24 | 0.01 | 1.06 | 78.18 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ashqar, H.I.; Alhadidi, T.I.; Elhenawy, M.; Khanfar, N.O. Leveraging Multimodal Large Language Models (MLLMs) for Enhanced Object Detection and Scene Understanding in Thermal Images for Autonomous Driving Systems. Automation 2024, 5, 508-526. https://doi.org/10.3390/automation5040029

Ashqar HI, Alhadidi TI, Elhenawy M, Khanfar NO. Leveraging Multimodal Large Language Models (MLLMs) for Enhanced Object Detection and Scene Understanding in Thermal Images for Autonomous Driving Systems. Automation. 2024; 5(4):508-526. https://doi.org/10.3390/automation5040029

Chicago/Turabian StyleAshqar, Huthaifa I., Taqwa I. Alhadidi, Mohammed Elhenawy, and Nour O. Khanfar. 2024. "Leveraging Multimodal Large Language Models (MLLMs) for Enhanced Object Detection and Scene Understanding in Thermal Images for Autonomous Driving Systems" Automation 5, no. 4: 508-526. https://doi.org/10.3390/automation5040029

APA StyleAshqar, H. I., Alhadidi, T. I., Elhenawy, M., & Khanfar, N. O. (2024). Leveraging Multimodal Large Language Models (MLLMs) for Enhanced Object Detection and Scene Understanding in Thermal Images for Autonomous Driving Systems. Automation, 5(4), 508-526. https://doi.org/10.3390/automation5040029