GEB-YOLO: Optimized YOLOv7 Model for Surface Defect Detection on Aluminum Profiles †

Abstract

1. Introduction

2. Improved Surface Defect Detection Model

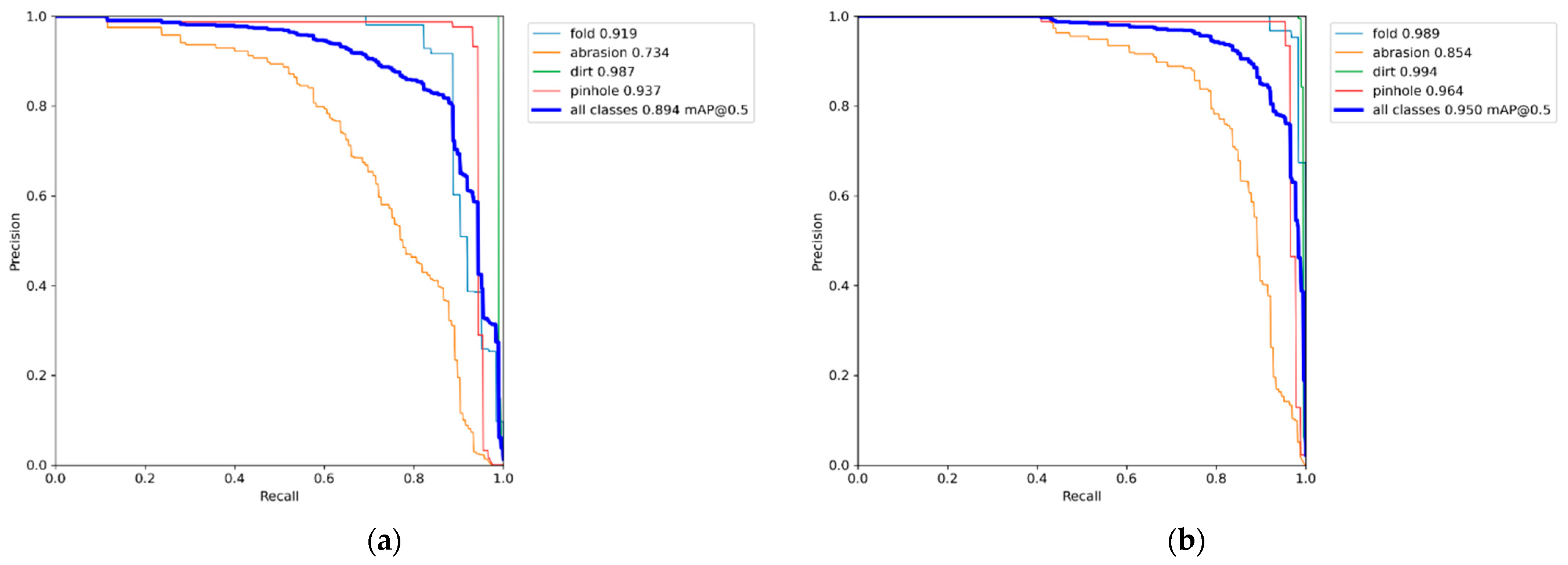

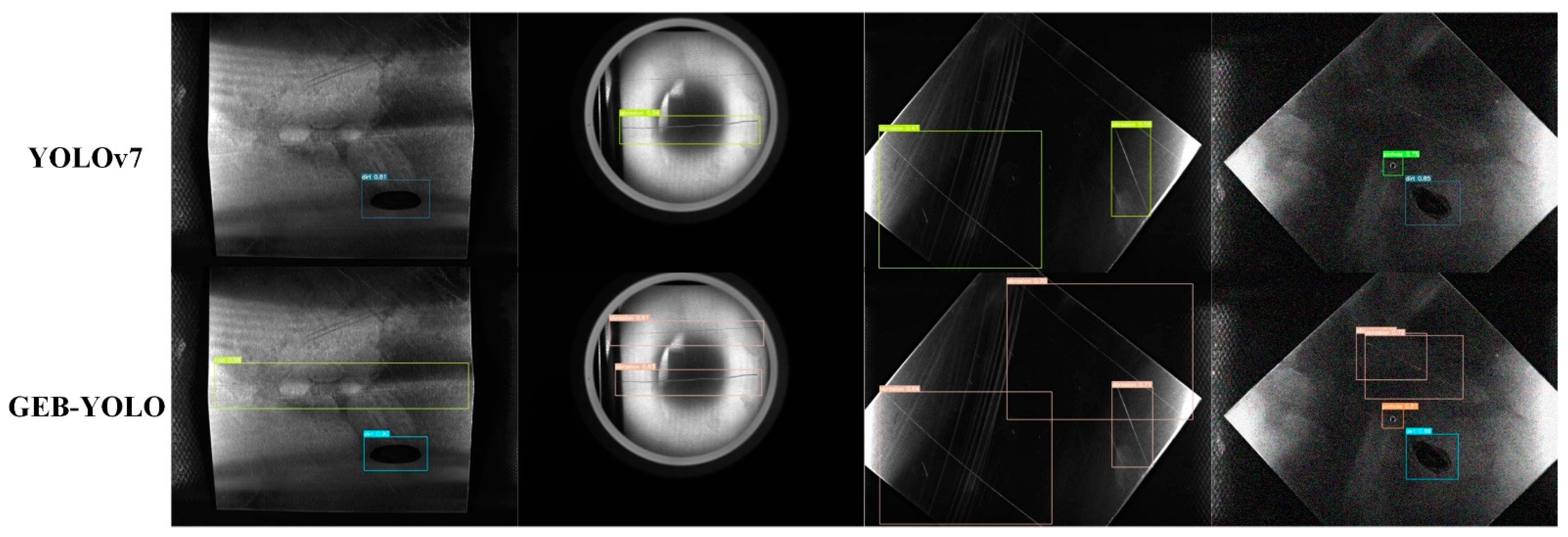

2.1. GEB-YOLO Model

2.2. YOLOv7 Model

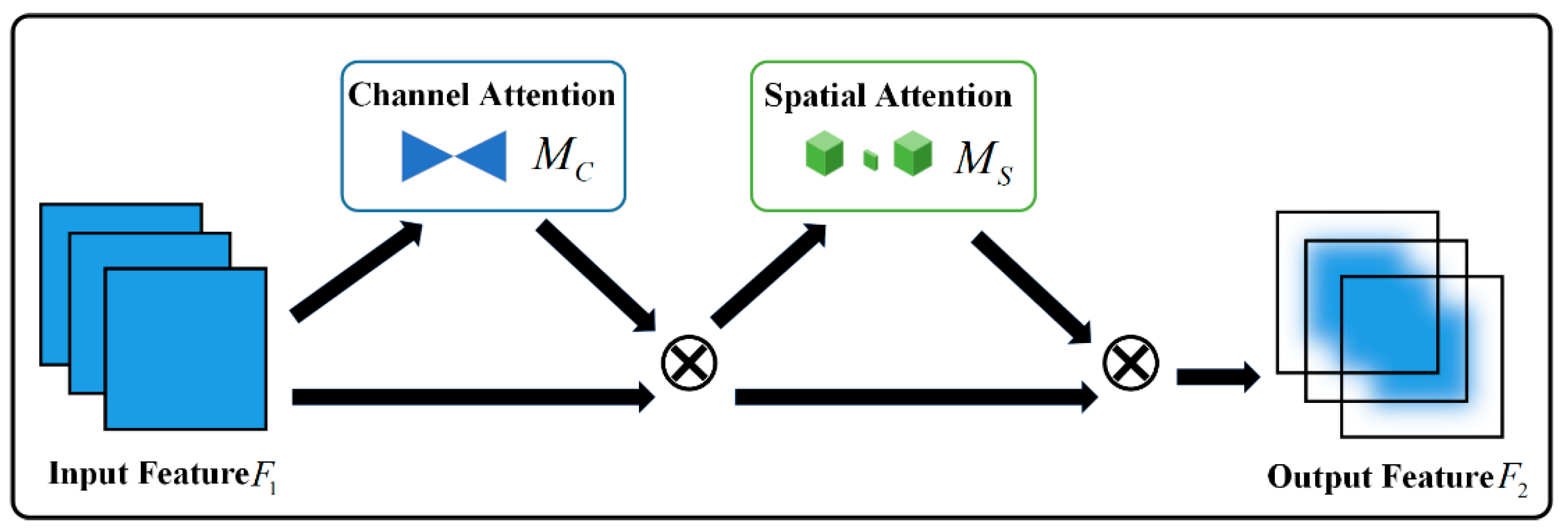

2.3. GAM Module

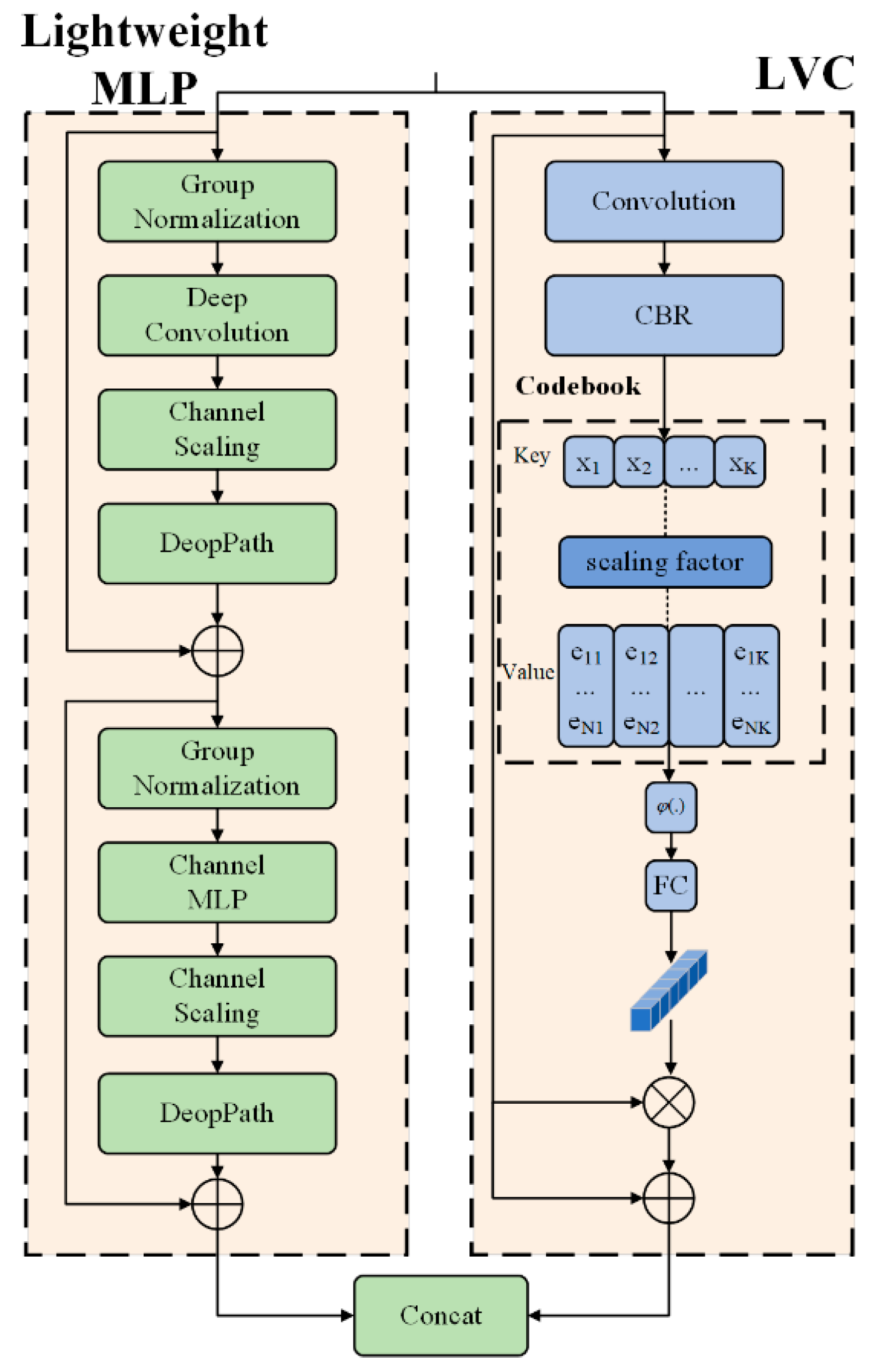

2.4. EVCBlock

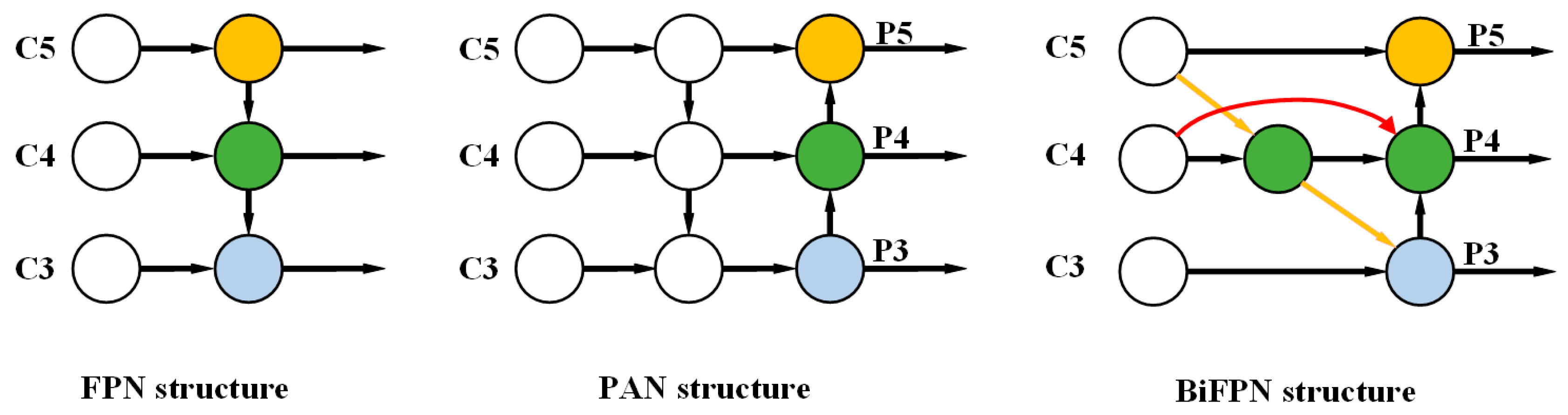

3. BiFPN

4. Experiment and Verification

4.1. Experimental Dataset and Environment

4.2. Evaluation Metrics
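
The ablation table reports mAP, precision, and recall. As a minimal sketch of how these quantities are conventionally computed for object detection, the snippet below uses the standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), AP as the area under the precision-recall curve, and mAP as the mean of per-class AP. The function names and the all-point interpolation scheme are assumptions made for illustration; the source does not state which interpolation or IoU threshold the authors used.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def average_precision(recalls, precisions):
    """Area under the precision-recall curve (all-point interpolation).

    `recalls` must be sorted in ascending order, with `precisions` aligned to it.
    The interpolation scheme is an assumption, not taken from the paper.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangles between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """mAP is the arithmetic mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)
```

For example, 8 true positives with 2 false positives and 2 missed defects give a precision and recall of 0.8 each.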

4.3. Network Training

4.4. Ablation Experiments

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



| Configuration | Version |
|---|---|
| Operating System | Windows 11 |
| CPU | AMD Ryzen 9 5900X 12-Core Processor, 3.70 GHz |
| GPU | NVIDIA GeForce RTX 3090 |
| PyTorch | 2.0.0 |
| CUDA | 11.7 |
| Python | 3.8 |
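
When reproducing the experiments, it can help to compare a local setup against the software versions in the configuration table above. The following is a minimal sketch, not part of the original work: `EXPECTED`, `collect_versions`, and `mismatches` are hypothetical names, and PyTorch is treated as optional so the script still runs where it is not installed.

```python
import platform
import sys

# Software versions copied from the configuration table above.
EXPECTED = {"python": "3.8", "torch": "2.0.0", "cuda": "11.7"}


def collect_versions():
    """Return the versions found on this machine; None means unavailable."""
    found = {
        "os": platform.platform(),
        "python": "{}.{}".format(*sys.version_info[:2]),
        "torch": None,
        "cuda": None,
    }
    try:
        import torch  # optional dependency

        # Drop local build suffixes such as "+cu117".
        found["torch"] = torch.__version__.split("+")[0]
        # torch.version.cuda is None on CPU-only builds.
        found["cuda"] = torch.version.cuda
    except ImportError:
        pass
    return found


def mismatches(found, expected=EXPECTED):
    """Map each differing key to a (found, expected) pair."""
    return {
        key: (found.get(key), want)
        for key, want in expected.items()
        if found.get(key) != want
    }


if __name__ == "__main__":
    for key, (have, want) in mismatches(collect_versions()).items():
        print(f"{key}: found {have}, table lists {want}")
```

A mismatch does not necessarily prevent training; it only flags where a local setup departs from the reported one.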

| No. | Modules enabled (GAM, EVCBlock, BiFPN) | mAP/% | Precision/% | Recall/% | FPS |
|---|---|---|---|---|---|
| 1 | | 89.41 | 90.84 | 83.66 | 60 |
| 2 | ✓ | 90.21 | 90.81 | 84.60 | 65 |
| 3 | ✓ | 89.78 | 89.62 | 86.22 | 61 |
| 4 | ✓ | 92.96 | 92.99 | 88.16 | 60 |
| 5 | ✓ ✓ | 90.77 | 91.48 | 87.56 | 67 |
| 6 | ✓ ✓ | 92.15 | 93.27 | 87.66 | 63 |
| 7 | ✓ ✓ | 94.15 | 95.18 | 91.09 | 67 |
| 8 | ✓ ✓ ✓ | 95.03 | 95.39 | 92.44 | 69 |
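
The ablation results are easier to compare as differences from the baseline in row 1. The sketch below copies the numbers from the table above and subtracts the baseline from every other row; `ABLATION` and `gains_over_baseline` are hypothetical names introduced for illustration.

```python
# (mAP %, precision %, recall %, FPS) keyed by experiment number,
# copied from the ablation table above.
ABLATION = {
    1: (89.41, 90.84, 83.66, 60),
    2: (90.21, 90.81, 84.60, 65),
    3: (89.78, 89.62, 86.22, 61),
    4: (92.96, 92.99, 88.16, 60),
    5: (90.77, 91.48, 87.56, 67),
    6: (92.15, 93.27, 87.66, 63),
    7: (94.15, 95.18, 91.09, 67),
    8: (95.03, 95.39, 92.44, 69),
}


def gains_over_baseline(results, baseline=1):
    """Return per-metric differences of each experiment from the baseline row."""
    base = results[baseline]
    return {
        exp: tuple(round(value - ref, 2) for value, ref in zip(values, base))
        for exp, values in results.items()
        if exp != baseline
    }


if __name__ == "__main__":
    for exp, delta in gains_over_baseline(ABLATION).items():
        print(exp, delta)
```

For row 8, where all three modules are enabled, the mAP gain over the baseline is 95.03 − 89.41 = 5.62 percentage points, and the frame rate rises from 60 to 69 FPS.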

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, Z.; Hu, J.; Xiao, X.; Xu, Y. GEB-YOLO: Optimized YOLOv7 Model for Surface Defect Detection on Aluminum Profiles. Eng. Proc. 2024, 75, 28. https://doi.org/10.3390/engproc2024075028
