Information Geometry

A topical collection in Entropy (ISSN 1099-4300). This collection belongs to the section "Multidisciplinary Applications".

Editor

Interests: probability theory; Bayesian inference; machine learning; information geometry; differential geometry; nuclear fusion; plasma physics; plasma turbulence; continuum mechanics; statistical mechanics


Topical Collection Information

Dear Colleagues,

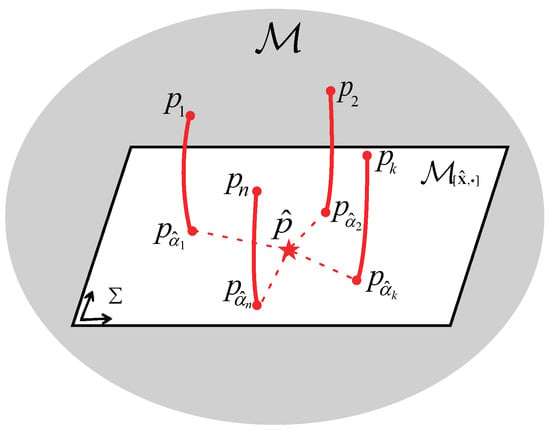

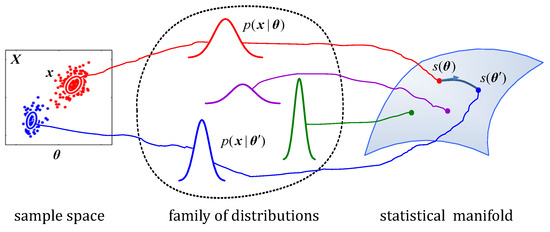

The mathematical field of information geometry originated from the observation that Fisher information can be used to define a Riemannian metric on manifolds of probability distributions. This led to a geometrical description of probability theory and statistics, allowing studies of the invariant properties of statistical manifolds. It was through the work of S.-I. Amari and others that it was later realized that the differential–geometric structure of a statistical manifold can be extended to families of dual affine connections and that such a structure can be derived from divergence functions.

Since then, information geometry has become a truly interdisciplinary field with applications in various domains. It enables a deeper and more intuitive understanding of the methods of statistical inference and machine learning, while providing a powerful framework for deriving new algorithms. As such, information geometry has many applications in optimization, signal and image processing, computer vision, neural networks, and other subfields of the information sciences. Furthermore, the methods of information geometry have been applied to a broad variety of topics in physics, mathematical finance, biology, and the neurosciences. In physics, there are many links with fields that have a natural probabilistic interpretation, including (nonextensive) statistical mechanics and quantum mechanics.

For this collection, we welcome submissions related to the foundations and applications of information geometry. We envisage contributions that aim at clarifying the connection of information geometry with both the information sciences and the physical sciences, so as to demonstrate the profound impact of the field in these disciplines. In addition, we hope to receive original papers illustrating the wide variety of applications of the methods of information geometry.

Prof. Dr. Geert Verdoolaege

Collection Editor

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Entropy is an international peer-reviewed open access monthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.