The Coronavirus Disease 2019 Spatial Care Path: Home, Community, and Emergency Diagnostic Portals

Abstract

1. Introduction

2. Methods and Materials

2.1. Emergency Use Authorizations

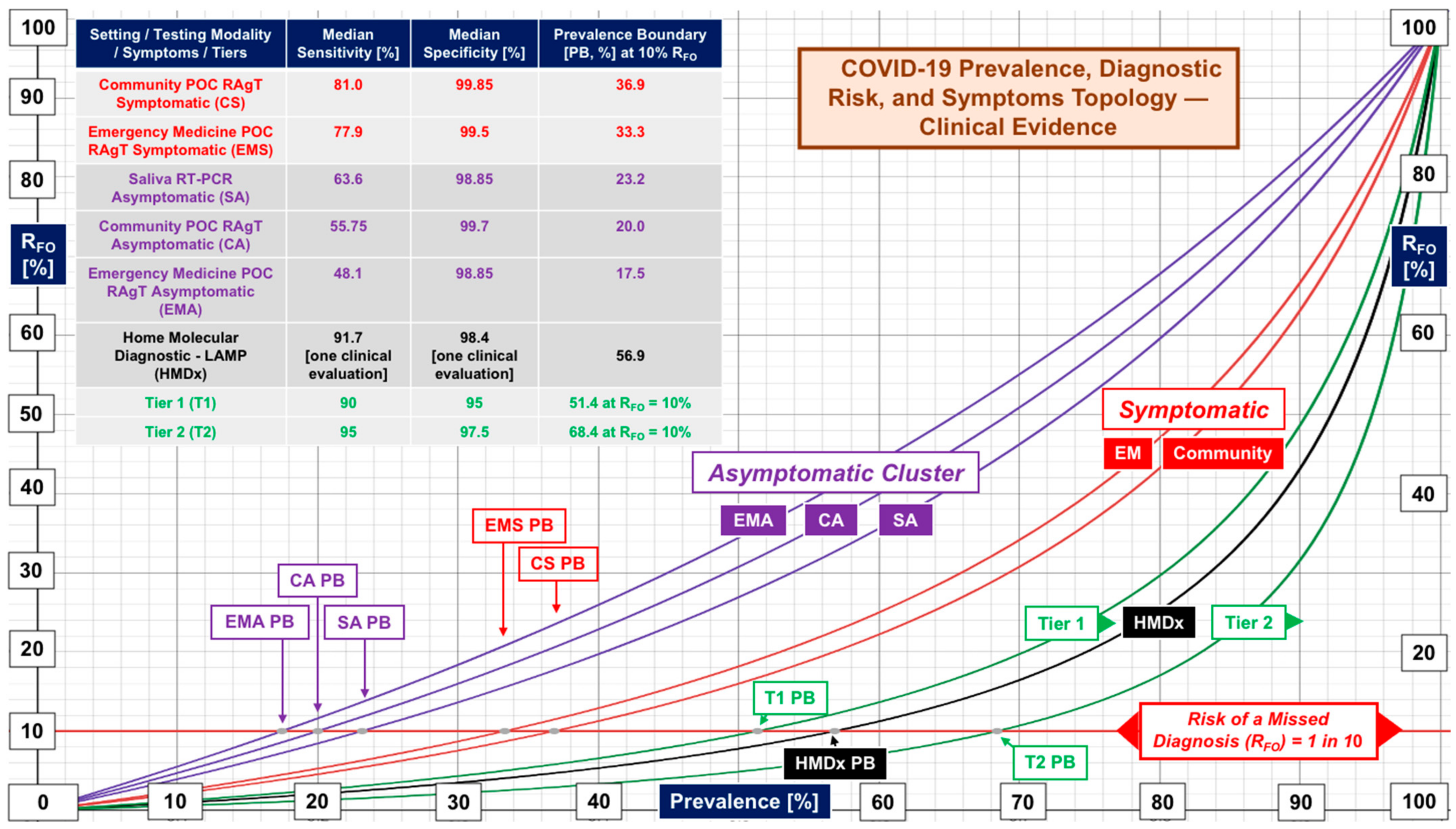

2.2. Clinical Evaluations

2.3. Sensitivity and Specificity Metrics

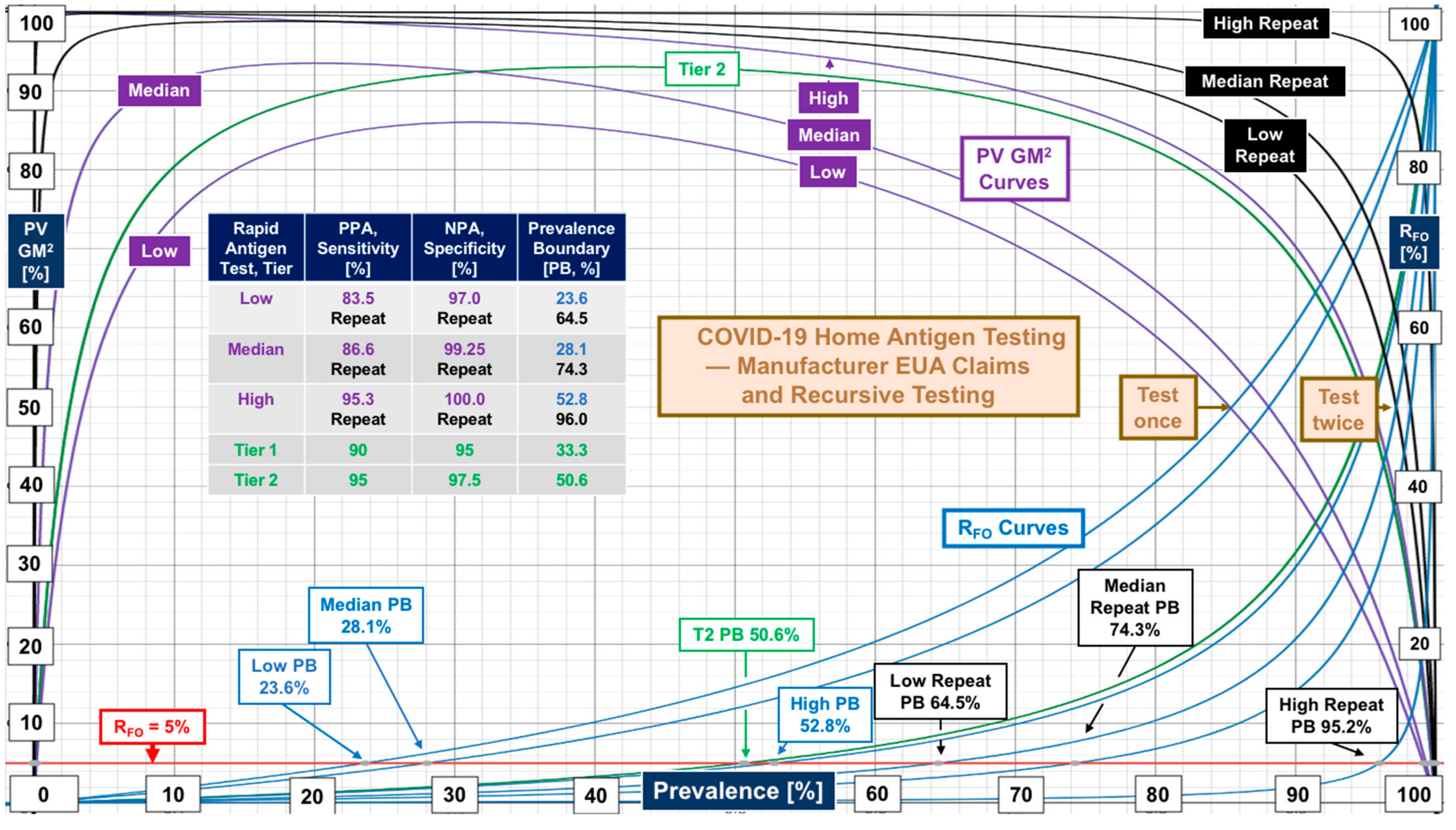

2.4. Bayesian Mathematics and Performance Tiers
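The Bayesian relationship between test performance and predictive value can be shown with a short calculation. The Python sketch below is an illustration, not the authors' implementation. It assumes that sensitivity, specificity, and prevalence (the pre-test probability) are given as fractions, and it applies Bayes' theorem to obtain the positive predictive value (PPV) and negative predictive value (NPV). The function name `predictive_values` and the 10% prevalence used in the example are hypothetical. The sensitivity and specificity inputs are the medians for asymptomatic subjects in the Community RAgTs rows of the summary table (55.75% and 99.70%).

```python
import math


def predictive_values(sensitivity: float, specificity: float, prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) from Bayes' theorem.

    Hypothetical helper for illustration. Sensitivity and specificity are fractions
    in [0, 1]; prevalence (the pre-test probability) must lie strictly between 0 and 1.
    """
    if not (0.0 <= sensitivity <= 1.0 and 0.0 <= specificity <= 1.0):
        raise ValueError("sensitivity and specificity must lie in [0, 1]")
    if not 0.0 < prevalence < 1.0:
        raise ValueError("prevalence must lie strictly between 0 and 1")

    # Expected fraction of the tested population in each cell of the 2 x 2 table.
    tp = sensitivity * prevalence                  # infected, test positive
    fp = (1.0 - specificity) * (1.0 - prevalence)  # uninfected, test positive
    fn = (1.0 - sensitivity) * prevalence          # infected, test negative
    tn = specificity * (1.0 - prevalence)          # uninfected, test negative

    # A predictive value is undefined (NaN) when no results of that sign can occur.
    ppv = tp / (tp + fp) if tp + fp > 0 else math.nan
    npv = tn / (tn + fn) if tn + fn > 0 else math.nan
    return ppv, npv


if __name__ == "__main__":
    # Medians for asymptomatic subjects tested with community RAgTs; 10% prevalence is hypothetical.
    ppv, npv = predictive_values(sensitivity=0.5575, specificity=0.9970, prevalence=0.10)
    print(f"PPV = {ppv:.3f}, NPV = {npv:.3f}")
```

With these inputs the script prints `PPV = 0.954, NPV = 0.953`. Holding sensitivity and specificity fixed, a higher assumed prevalence raises PPV and lowers NPV.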

2.5. Prevalence Boundaries
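One way to make a prevalence boundary concrete is to solve the NPV expression for the prevalence at which NPV falls to a chosen threshold. The sketch below assumes that reading of the term and uses an illustrative 95% NPV threshold. The threshold and the function name `npv_prevalence_boundary` are hypothetical, and the tiers defined in this paper may differ. The inputs are the Community RAgTs medians from the summary table for symptomatic subjects (81.0% sensitivity, 99.85% specificity) and asymptomatic subjects (55.75%, 99.70%).

```python
def npv_prevalence_boundary(sensitivity: float, specificity: float, npv_threshold: float) -> float:
    """Prevalence at which NPV falls to npv_threshold (hypothetical helper).

    Setting Sp(1 - p) / (Sp(1 - p) + (1 - Se)p) = T and solving for p gives
        p* = Sp(1 - T) / (Sp(1 - T) + T(1 - Se)).
    Because NPV does not increase as prevalence rises, NPV >= T for every prevalence p <= p*.
    """
    if not 0.0 < npv_threshold < 1.0:
        raise ValueError("npv_threshold must lie strictly between 0 and 1")
    if not (0.0 <= sensitivity <= 1.0 and 0.0 < specificity <= 1.0):
        raise ValueError("sensitivity must lie in [0, 1] and specificity in (0, 1]")
    numerator = specificity * (1.0 - npv_threshold)
    return numerator / (numerator + npv_threshold * (1.0 - sensitivity))


if __name__ == "__main__":
    # Community RAgT medians (sensitivity, specificity); the 95% NPV threshold is illustrative.
    for label, se, sp in (("symptomatic", 0.810, 0.9985), ("asymptomatic", 0.5575, 0.9970)):
        boundary = npv_prevalence_boundary(se, sp, npv_threshold=0.95)
        print(f"{label}: NPV >= 95% for prevalence up to {boundary:.1%}")
```

Under these assumptions the boundary is about 21.7% for symptomatic and 10.6% for asymptomatic subjects. The lower median sensitivity in asymptomatic testing roughly halves the prevalence range over which a negative result meets the threshold. A PPV boundary could be derived the same way from the PPV expression.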

2.6. Pattern Recognition

2.7. Recursion
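Recursion can be read as reapplying Bayes' theorem, with the post-test probability from one test serving as the pre-test probability (prevalence) for the next. Under that reading, the calculation for a series of negative results can be sketched as follows. The sketch also assumes that repeat tests err independently of one another, which may not hold in practice. The function name `probability_after_negatives` and the 10% starting prevalence are hypothetical. The sensitivity and specificity are the asymptomatic Community RAgTs medians from the summary table.

```python
def probability_after_negatives(sensitivity: float, specificity: float,
                                prevalence: float, n_negatives: int) -> float:
    """Post-test probability of infection after n consecutive negative results.

    Hypothetical helper. Each step applies Bayes' theorem with the previous post-test
    probability as the new pre-test probability, which assumes the repeat tests err
    independently of one another.
    """
    if not (0.0 <= sensitivity <= 1.0 and 0.0 < specificity <= 1.0):
        raise ValueError("sensitivity must lie in [0, 1] and specificity in (0, 1]")
    if not 0.0 <= prevalence < 1.0:
        raise ValueError("prevalence must lie in [0, 1)")
    if n_negatives < 0:
        raise ValueError("n_negatives must be non-negative")

    p = prevalence
    for _ in range(n_negatives):
        fn = (1.0 - sensitivity) * p  # infected, yet this test is negative
        tn = specificity * (1.0 - p)  # uninfected, and this test is negative
        p = fn / (fn + tn)            # P(infected | negative) = 1 - NPV
    return p


if __name__ == "__main__":
    # Asymptomatic community RAgT medians; the 10% starting prevalence is hypothetical.
    for n in range(3):
        p = probability_after_negatives(0.5575, 0.9970, 0.10, n)
        print(f"after {n} negative test(s): {p:.3f}")
```

With these inputs the script prints post-test probabilities of 0.100, 0.047, and 0.021 after zero, one, and two negative results. Each negative result lowers the probability of missed infection, but with diminishing absolute gains.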

3. Results

4. Discussion

4.1. Missed Diagnoses

4.2. Transparency

4.3. Public Health at Points of Need

4.4. Focus, Standardization, and Risk Management

5. Conclusions

Supplementary Materials

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kost, G.J. Home antigen test recall affects millions: Beware false positives, but also uncertainty and potential false negatives. Arch. Pathol. Lab. Med. 2022, 146, 403.

- Kost, G.J. The Coronavirus Disease 2019 Grand Challenge: Setting expectations and future directions for community and home testing. Arch. Pathol. Lab. Med. 2022; (published online ahead of print 25 March 2022).

- White House. Nationwide Test to Treat Initiative. National COVID-19 Preparedness Plan. Available online: https://www.whitehouse.gov/covidplan/#protect (accessed on 26 April 2022).

- Kost, G.J.; Ferguson, W.J.; Kost, L.E. Principles of point of care culture, the spatial care path, and enabling community and global resilience. J. Int. Fed. Clin. Chem. Lab. Med. 2014, 25, 4–23.

- Kost, G.J.; Ferguson, W.J.; Truong, A.-T.; Prom, D.; Hoe, J.; Banpavichit, A.; Kongpila, S. The Ebola Spatial Care Path™: Point-of-care lessons learned for stopping outbreaks. Clin. Lab. Int. 2015, 39, 6–14.

- Kost, G.J.; Ferguson, W.J.; Hoe, J.; Truong, A.T.; Banpavichit, A.; Kongpila, S. The Ebola Spatial Care Path™: Accelerating point-of-care diagnosis, decision making, and community resilience in outbreaks. Am. J. Disaster Med. 2015, 10, 121–143.

- Food and Drug Administration. Individual EUAs for Antigen Diagnostic Tests for SARS-CoV-2. Available online: https://www.fda.gov/medical-devices/coronavirus-disease-2019-covid-19-emergency-use-authorizations-medical-devices/in-vitro-diagnostics-euas-antigen-diagnostic-tests-sars-cov-2 (accessed on 26 April 2022).

- Donato, L.J.; Trivedi, V.A.; Stransky, A.M.; Misra, A.; Pritt, B.S.; Binnicker, M.J.; Karon, B.S. Evaluation of the Cue Health point-of-care COVID-19 (SARS-CoV-2 nucleic acid amplification) test at a community drive through collection center. Diagn. Microbiol. Infect. Dis. 2021, 100, 115307.

- Alghounaim, M.; Bastaki, H.; Essa, F.; Motlagh, H.; Al-Sabah, S. The performance of two rapid antigen tests during population-level screening for SARS-CoV-2 infection. Front. Med. 2021, 8, 797109.

- García-Fiñana, M.; Hughes, D.M.; Cheyne, C.P.; Burnside, G.; Stockbridge, M.; Fowler, T.A.; Buchan, I. Performance of the Innova SARS-CoV-2 antigen rapid lateral flow test in the Liverpool asymptomatic testing pilot: Population based cohort study. BMJ 2021, 374, n1637.

- Allan-Blitz, L.T.; Klausner, J.D. A real-world comparison of SARS-CoV-2 rapid antigen testing versus PCR testing in Florida. J. Clin. Microbiol. 2021, 59, e01107-21.

- Mungomklang, A.; Trichaisri, N.; Jirachewee, J.; Sukprasert, J.; Tulalamba, W.; Viprakasit, V. Limited sensitivity of a rapid SARS-CoV-2 antigen detection assay for surveillance of asymptomatic individuals in Thailand. Am. J. Trop. Med. Hyg. 2021, 105, 1505–1509.

- Jakobsen, K.K.; Jensen, J.S.; Todsen, T.; Kirkby, N.; Lippert, F.; Vangsted, A.M.; von Buchwald, C. Accuracy of anterior nasal swab rapid antigen tests compared with RT-PCR for massive SARS-CoV-2 screening in low prevalence population. Acta Path. Microbiol. Immunol. Scand. 2021, 130, 95–100.

- Prince-Guerra, J.L.; Almendares, O.; Nolen, L.D.; Gunn, J.K.; Dale, A.P.; Buono, S.A.; Bower, W.A. Evaluation of Abbott BinaxNOW rapid antigen test for SARS-CoV-2 infection at two community-based testing sites—Pima County, Arizona, November 3–17, 2020. MMWR 2021, 70, 100–105.

- Almendares, O.; Prince-Guerra, J.L.; Nolen, L.D.; Gunn, J.K.; Dale, A.P.; Buono, S.A. Performance characteristics of the Abbott BinaxNOW SARS-CoV-2 antigen test in comparison with real-time RT-PCR and viral culture in community testing sites during November 2020. J. Clin. Microbiol. 2022, 60, e01742-21.

- Stohr, J.J.J.M.; Zwart, V.F.; Goderski, G.; Meijer, A.; Nagel-Imming, C.R.S.; Kluytmans-van den Bergh, M.F.Q.; Pas, S.D.; van den Oetelaar, F.; Hellwich, M.; Gan, K.H.; et al. Self-testing for the detection of SARS-CoV-2 infection with rapid antigen tests for people with suspected COVID-19 in the community. Clin. Microbiol. Infect. 2021, 28, 695–700.

- Frediani, J.K.; Levy, J.M.; Rao, A.; Bassit, L.; Figueroa, J.; Vos, M.B. Multidisciplinary assessment of the Abbott BinaxNOW SARS-CoV-2 point-of-care antigen test in the context of emerging viral variants and self-administration. Sci. Rep. 2021, 11, 14604.

- Pollock, N.R.; Tran, K.; Jacobs, J.R.; Cranston, A.E.; Smith, S.; O’Kane, C.Y.; Smole, S.C. Performance and operational evaluation of the Access Bio CareStart Rapid Antigen Test in a high-throughput drive-through community testing site in Massachusetts. Open Forum Infect. Dis. 2021, 8, ofab243.

- Pilarowski, G.; Lebel, P.; Sunshine, S.; Liu, J.; Crawford, E.; Marquez, C.; DeRisi, J. Performance characteristics of a rapid severe acute respiratory syndrome coronavirus 2 antigen detection assay at a public plaza testing site in San Francisco. J. Infect. Dis. 2021, 223, 1139–1144.

- Boum, Y.; Fai, K.N.; Nikolay, B.; Mboringong, A.B.; Bebell, L.M.; Ndifon, M.; Mballa, G.A.E. Performance and operational feasibility of antigen and antibody rapid diagnostic tests for COVID-19 in symptomatic and asymptomatic patients in Cameroon: A clinical, prospective, diagnostic accuracy study. Lancet Infect. Dis. 2021, 21, 1089–1096.

- Dřevínek, P.; Hurych, J.; Kepka, Z.; Briksi, A.; Kulich, M.; Zajac, M.; Hubacek, P. The sensitivity of SARS-CoV-2 antigen tests in the view of large-scale testing. Epidemiol. Mikrobiol. Imunol. 2021, 70, 156–160.

- Pollreis, R.E.; Roscoe, C.; Phinney, R.J.; Malesha, S.S.; Burns, M.C.; Ceniseros, A.; Ball, C.L. Evaluation of the Abbott BinaxNOW COVID-19 test Ag Card for rapid detection of SARS-CoV-2 infection by a local public health district with a rural population. PLoS ONE 2021, 16, e0260862.

- Jakobsen, K.K.; Jensen, J.S.; Todsen, T.; Lippert, F.; Martel, C.J.M.; Klokker, M.; von Buchwald, C. Detection of SARS-CoV-2 infection by rapid antigen test in comparison with RT-PCR in a public setting. medRxiv 2021.

- Nalumansi, A.; Lutalo, T.; Kayiwa, J.; Watera, C.; Balinandi, S.; Kiconco, J.; Kaleebu, P. Field evaluation of the performance of a SARS-CoV-2 antigen rapid diagnostic test in Uganda using nasopharyngeal samples. Int. J. Infect. Dis. 2021, 104, 282–286.

- Fernandez-Montero, A.; Argemi, J.; Rodríguez, J.A.; Ariño, A.H.; Moreno-Galarraga, L. Validation of a rapid antigen test as a screening tool for SARS-CoV-2 infection in asymptomatic populations. Sensitivity, specificity and predictive values. EClinicalMedicine 2021, 37, 100954.

- Gremmels, H.; Winkel, B.M.F.; Schuurman, R.; Rosingh, A.; Rigter, N.A.; Rodriguez, O.; Hofstra, L.M. Real-life validation of the Panbio™ COVID-19 antigen rapid test (Abbott) in community-dwelling subjects with symptoms of potential SARS-CoV-2 infection. EClinicalMedicine 2021, 31, 100677.

- Jian, M.J.; Perng, C.L.; Chung, H.Y.; Chang, C.K.; Lin, J.C.; Yeh, K.M.; Shang, H.S. Clinical assessment of SARS-CoV-2 antigen rapid detection compared with RT-PCR assay for emerging variants at a high-throughput community testing site in Taiwan. Int. J. Infect. Dis. 2021, 115, 30–34.

- Shah, M.M.; Salvatore, P.P.; Ford, L.; Kamitani, E.; Whaley, M.J.; Mitchell, K.; Tate, J.E. Performance of repeat BinaxNOW severe acute respiratory syndrome Coronavirus 2 antigen testing in a community setting, Wisconsin, November 2020-December 2020. Clin. Infect. Dis. 2021, 73 (Suppl. 1), S54–S57.

- Pollock, N.R.; Jacobs, J.R.; Tran, K.; Cranston, A.E.; Smith, S.; O’Kane, C.Y.; Smole, S.C. Performance and implementation evaluation of the Abbott BinaxNOW Rapid Antigen Test in a high-throughput drive-through community testing site in Massachusetts. J. Clin. Microbiol. 2021, 59, e00083-21.

- Ford, L.; Whaley, M.J.; Shah, M.M.; Salvatore, P.P.; Segaloff, H.E.; Delaney, A.; Kirking, H.L. Antigen test performance among children and adults at a SARS-CoV-2 community testing site. J. Pediatr. Infect. Dis. Soc. 2021, 10, 1052–1061.

- Nsoga, M.T.N.; Kronig, I.; Perez Rodriguez, F.J.; Sattonnet-Roche, P.; Da Silva, D.; Helbling, J.; Eckerle, I. Diagnostic accuracy of Panbio rapid antigen tests on oropharyngeal swabs for detection of SARS-CoV-2. PLoS ONE 2021, 16, e0253321.

- Siddiqui, Z.K.; Chaudhary, M.; Robinson, M.L.; McCall, A.B.; Peralta, R.; Esteve, R.; Ficke, J.R. Implementation and accuracy of BinaxNOW Rapid Antigen COVID-19 Test in asymptomatic and symptomatic populations in a high-volume self-referred testing site. Microbiol. Spectr. 2021, 9, e0100821.

- Drain, P.; Sulaiman, R.; Hoppers, M.; Lindner, N.M.; Lawson, V.; Ellis, J.E. Performance of the LumiraDx microfluidic immunofluorescence point-of-care SARS-CoV-2 antigen test in asymptomatic adults and children. Am. J. Clin. Pathol. 2021, 157, 602–607.

- Shrestha, B.; Neupane, A.K.; Pant, S.; Shrestha, A.; Bastola, A.; Rajbhandari, B.; Singh, A. Sensitivity and specificity of lateral flow antigen test kits for COVID-19 in asymptomatic population of quarantine centre of Province 3. Kathmandu Univ. Med. J. 2020, 18, 36–39.

- Chiu, R.Y.T.; Kojima, N.; Mosley, G.L.; Cheng, K.K.; Pereira, D.Y.; Brobeck, M.; Klausner, J.D. Evaluation of the INDICAID COVID-19 rapid antigen test in symptomatic populations and asymptomatic community testing. Microbiol. Spectr. 2021, 9, e0034221.

- Stokes, W.; Berenger, B.M.; Portnoy, D.; Scott, B.; Szelewicki, J.; Singh, T.; Tipples, G. Clinical performance of the Abbott Panbio with nasopharyngeal, throat, and saliva swabs among symptomatic individuals with COVID-19. Eur. J. Clin. Microbiol. Infect. Dis. 2021, 40, 1721–1726.

- Agarwal, J.; Das, A.; Pandey, P.; Sen, M.; Garg, J. “David vs. Goliath”: A simple antigen detection test with potential to change diagnostic strategy for SARS-CoV-2. J. Infect. Dev. Ctries. 2021, 15, 904–909.

- Kernéis, S.; Elie, C.; Fourgeaud, J.; Choupeaux, L.; Delarue, S.M.; Alby, M.L.; Le Goff, J. Accuracy of antigen and nucleic acid amplification testing on saliva and nasopharyngeal samples for detection of SARS-CoV-2 in ambulatory care: A multicentric cohort study. medRxiv 2021.

- Van der Moeren, N.; Zwart, V.F.; Lodder, E.B.; Van den Bijllaardt, W.; Van Esch, H.R.; Stohr, J.J.; Kluytmans, J.A. Evaluation of the test accuracy of a SARS-CoV-2 rapid antigen test in symptomatic community dwelling individuals in the Netherlands. PLoS ONE 2021, 16, e0250886.

- Drain, P.K.; Ampajwala, M.; Chappel, C.; Gvozden, A.B.; Hoppers, M.; Wang, M.; Montano, M. A rapid, high-sensitivity SARS-CoV-2 nucleocapsid immunoassay to aid diagnosis of acute COVID-19 at the point of care: A clinical performance study. Infect. Dis. Ther. 2021, 10, 753–761.

- Van der Moeren, N.; Zwart, V.F.; Goderski, G.; Rijkers, G.T.; van den Bijllaardt, W.; Veenemans, J.; Stohr, J.J.J.M. Performance of the Diasorin SARS-CoV-2 antigen detection assay on the LIAISON XL. J. Clin. Virol. 2021, 141, 104909.

- Gili, A.; Paggi, R.; Russo, C.; Cenci, E.; Pietrella, D.; Graziani, A.; Mencacci, A. Evaluation of Lumipulse® G SARS-CoV-2 antigen assay automated test for detecting SARS-CoV-2 nucleocapsid protein (NP) in nasopharyngeal swabs for community and population screening. Int. J. Infect. Dis. 2021, 105, 391–396.

- Bianco, G.; Boattini, M.; Barbui, A.M.; Scozzari, G.; Riccardini, F.; Coggiola, M.; Costa, C. Evaluation of an antigen-based test for hospital point-of-care diagnosis of SARS-CoV-2 infection. J. Clin. Virol. 2021, 139, 104838.

- Burdino, E.; Cerutti, F.; Panero, F.; Allice, T.; Gregori, G.; Milia, M.G.; Ghisetti, V. SARS-CoV-2 microfluidic antigen point-of-care testing in Emergency Room patients during COVID-19 pandemic. J. Virol. Methods 2022, 299, 114337.

- Caruana, G.; Croxatto, A.; Kampouri, E.; Kritikos, A.; Opota, O.; Foerster, M.; Greub, G. Implementing SARS-CoV-2 rapid antigen testing in the emergency ward of a Swiss university hospital: The INCREASE study. Microorganisms 2021, 9, 798.

- Caruana, G.; Lebrun, L.L.; Aebischer, O.; Opota, O.; Urbano, L.; de Rham, M.; Greub, G. The dark side of SARS-CoV-2 rapid antigen testing: Screening asymptomatic patients. New Microbes New Infect. 2021, 42, 100899.

- Cento, V.; Renica, S.; Matarazzo, E.; Antonello, M.; Colagrossi, L.; Di Ruscio, F.; S. Co. Va Study Group. Frontline screening for SARS-CoV-2 infection at emergency department admission by third generation rapid antigen test: Can we spare RT-qPCR? Viruses 2021, 13, 818.

- Cerutti, F.; Burdino, E.; Milia, M.G.; Allice, T.; Gregori, G.; Bruzzone, B.; Ghisetti, V. Urgent need of rapid tests for SARS CoV-2 antigen detection: Evaluation of the SD-Biosensor antigen test for SARS-CoV-2. J. Clin. Virol. 2020, 132, 104654.

- Ciotti, M.; Maurici, M.; Pieri, M.; Andreoni, M.; Bernardini, S. Performance of a rapid antigen test in the diagnosis of SARS-CoV-2 infection. J. Med. Virol. 2021, 93, 2988–2991.

- Holzner, C.; Pabst, D.; Anastasiou, O.E.; Dittmer, U.; Manegold, R.K.; Risse, J.; Falk, M. SARS-CoV-2 rapid antigen test: Fast-safe or dangerous? An analysis in the emergency department of an university hospital. J. Med. Virol. 2021, 93, 5323–5327.

- Koeleman, J.G.M.; Brand, H.; de Man, S.J.; Ong, D.S.Y. Clinical evaluation of rapid point-of-care antigen tests for diagnosis of SARS-CoV-2 infection. Eur. J. Clin. Microbiol. Infect. Dis. 2021, 40, 1975–1981.

- Leixner, G.; Voill-Glaniger, A.; Bonner, E.; Kreil, A.; Zadnikar, R.; Viveiros, A. Evaluation of the AMMP SARS-CoV-2 rapid antigen test in a hospital setting. Int. J. Infect. Dis. 2021, 108, 353–356.

- Leli, C.; Di Matteo, L.; Gotta, F.; Cornaglia, E.; Vay, D.; Megna, I.; Rocchetti, A. Performance of a SARS-CoV-2 antigen rapid immunoassay in patients admitted to the emergency department. Int. J. Infect. Dis. 2021, 110, 135–140.

- Linares, M.; Perez-Tanoira, R.; Carrero, A.; Romanyk, J.; Pérez-García, F.; Gómez-Herruz, P.; Cuadros, J. Panbio antigen rapid test is reliable to diagnose SARS-CoV-2 infection in the first 7 days after the onset of symptoms. J. Clin. Virol. 2021, 133, 104659.

- Loconsole, D.; Centrone, F.; Morcavallo, C.; Campanella, S.; Sallustio, A.; Casulli, D.; Chironna, M. The challenge of using an antigen test as a screening tool for SARS-CoV-2 infection in an emergency department: Experience of a tertiary care hospital in Southern Italy. BioMed Res. Int. 2021, 2021, 3893733.

- Masiá, M.; Fernández-González, M.; Sánchez, M.; Carvajal, M.; García, J.A.; Gonzalo-Jiménez, N.; Gutiérrez, F. Nasopharyngeal Panbio COVID-19 antigen test performed at point-of-care has a high sensitivity in symptomatic and asymptomatic patients with higher risk for transmission and older age. Open Forum Infect. Dis. 2021, 8, ofab059.

- Merrick, B.; Noronha, M.; Batra, R.; Douthwaite, S.; Nebbia, G.; Snell, L.B.; Harrison, H.L. Real-world deployment of lateral flow SARS-CoV-2 antigen detection in the emergency department to provide rapid, accurate and safe diagnosis of COVID-19. Infect. Prev. Pract. 2021, 3, 100186.

- Möckel, M.; Corman, V.M.; Stegemann, M.S.; Hofmann, J.; Stein, A.; Jones, T.C.; Somasundaram, R. SARS-CoV-2 antigen rapid immunoassay for diagnosis of COVID-19 in the emergency department. Biomarkers 2021, 26, 213–220.

- Oh, S.M.; Jeong, H.; Chang, E.; Choe, P.G.; Kang, C.K.; Park, W.B.; Kim, N.J. Clinical application of the standard Q COVID-19 Ag test for the detection of SARS-CoV-2 infection. J. Korean Med. Sci. 2021, 36, e101.

- Orsi, A.; Pennati, B.M.; Bruzzone, B.; Ricucci, V.; Ferone, D.; Barbera, P.; Icardi, G. On-field evaluation of a ultra-rapid fluorescence immunoassay as a frontline test for SARS-CoV-2 diagnostic. J. Virol. Methods 2021, 295, 114201.

- Osterman, A.; Baldauf, H.; Eletreby, M.; Wettengel, J.M.; Afridi, S.Q.; Fuchs, T.; Keppler, O.T. Evaluation of two rapid antigen tests to detect SARS-CoV-2 in a hospital setting. Med. Microbiol. Immunol. 2021, 210, 65–72.

- Thell, R.; Kallab, V.; Weinhappel, W.; Mueckstein, W.; Heschl, L.; Heschl, M.; Szell, M. Evaluation of a novel, rapid antigen detection test for the diagnosis of SARS-CoV-2. PLoS ONE 2021, 16, e0259527.

- Turcato, G.; Zaboli, A.; Pfeifer, N.; Ciccariello, L.; Sibilio, S.; Tezza, G.; Ausserhofer, D. Clinical application of a rapid antigen test for the detection of SARS-CoV-2 infection in symptomatic and asymptomatic patients evaluated in the emergency department: A preliminary report. J. Infect. 2021, 82, e14–e16.

- Turcato, G.; Zaboli, A.; Pfeifer, N.; Sibilio, S.; Tezza, G.; Bonora, A.; Ausserhofer, D. Rapid antigen test to identify COVID-19 infected patients with and without symptoms admitted to the Emergency Department. Am. J. Emerg. Med. 2022, 51, 92–97.

- Carbonell-Sahuquillo, S.; Lázaro-Carreño, M.I.; Camacho, J.; Barrés-Fernández, A.; Albert, E.; Torres, I.; Navarro, D. Evaluation of a rapid antigen detection test (Panbio™ COVID-19 Ag Rapid Test Device) as a point-of-care diagnostic tool for COVID-19 in a pediatric emergency department. J. Med. Virol. 2021, 93, 6803–6807.

- Denina, M.; Giannone, V.; Curtoni, A.; Zanotto, E.; Garazzino, S.; Urbino, A.F.; Bondone, C. Can we trust in SARS-CoV-2 rapid antigen testing? Preliminary results from a paediatric cohort in the emergency department. Ir. J. Med. Sci. 2021; Epub ahead of print.

- Gonzalez-Donapetry, P.; Garcia-Clemente, P.; Bloise, I.; García-Sánchez, C.; Sánchez Castellano, M.Á.; Romero, M.P.; García-Rodriguez, J. Think of the children. Rapid antigen test in pediatric population. Pediatr. Infect. Dis. J. 2021, 40, 385–388.

- Jung, C.; Levy, C.; Varon, E.; Biscardi, S.; Batard, C.; Wollner, A.; Cohen, R. Diagnostic accuracy of SARS-CoV-2 antigen detection test in children: A real-life study. Front. Pediatr. 2021, 9, 727.

- Lanari, M.; Biserni, G.B.; Pavoni, M.; Borgatti, E.C.; Leone, M.; Corsini, I.; Lazzarotto, T. Feasibility and effectiveness assessment of SARS-CoV-2 antigenic tests in mass screening of a pediatric population and correlation with the kinetics of viral loads. Viruses 2021, 13, 2071.

- Quentin, O.; Sylvie, P.; Olivier, M.; Julie, G.; Charlotte, T.; Nadine, A.; Aymeric, C. Prospective evaluation of the point-of-care use of a rapid antigenic SARS-CoV-2 immunochromatographic test in a pediatric emergency department. Clin. Microbiol. Infect. 2022, 28, 734.e1–734.e6.

- Reichert, F.; Enninger, A.; Plecko, T.; Zoller, W.G.; Paul, G. Pooled SARS-CoV-2 antigen tests in asymptomatic children and their caregivers: Screening for SARS-CoV-2 in a pediatric emergency department. Am. J. Infect. Control 2021, 49, 1242–1246.

- Villaverde, S.; Dominguez-Rodriguez, S.; Sabrido, G.; Pérez-Jorge, C.; Plata, M.; Romero, M.P.; Segura, E. Diagnostic accuracy of the Panbio severe acute respiratory syndrome coronavirus 2 antigen rapid test compared with reverse-transcriptase polymerase chain reaction testing of nasopharyngeal samples in the pediatric population. J. Pediatr. 2021, 232, 287–289.

- Nacher, M.; Mergeay-Fabre, M.; Blanchet, D.; Benois, O.; Pozl, T.; Mesphoule, P.; Demar, M. Diagnostic accuracy and acceptability of molecular diagnosis of COVID-19 on saliva samples relative to nasopharyngeal swabs in tropical hospital and extra-hospital contexts: The COVISAL study. PLoS ONE 2021, 16, e0257169.

- Nacher, M.; Mergeay-Fabre, M.; Blanchet, D.; Benoit, O.; Pozl, T.; Mesphoule, P.; Demar, M. Prospective comparison of saliva and nasopharyngeal swab sampling for mass screening for COVID-19. Front. Med. 2021, 8, 621160.

- Marx, G.E.; Biggerstaff, B.J.; Nawrocki, C.C.; Totten, S.E.; Travanty, E.A.; Burakoff, A.W. Detection of Severe Acute Respiratory Syndrome Coronavirus 2 on self-collected saliva or anterior nasal specimens compared with healthcare personnel-collected nasopharyngeal specimens. Clin. Infect. Dis. 2021, 73 (Suppl. 1), S65–S73.

- Bosworth, A.; Whalley, C.; Poxon, C.; Wanigasooriya, K.; Pickles, O.; Aldera, E.L.; Beggs, A.D. Rapid implementation and validation of a cold-chain free SARS-CoV-2 diagnostic testing workflow to support surge capacity. J. Clin. Virol. 2020, 128, 104469.

- Alkhateeb, K.J.; Cahill, M.N.; Ross, A.S.; Arnold, F.W.; Snyder, J.W. The reliability of saliva for the detection of SARS-CoV-2 in symptomatic and asymptomatic patients: Insights on the diagnostic performance and utility for COVID-19 screening. Diagn. Microbiol. Infect. Dis. 2021, 101, 115450.

- LeGoff, J.; Kerneis, S.; Elie, C.; Mercier-Delarue, S.; Gastli, N.; Choupeaux, L.; Delaugerre, C. Evaluation of a saliva molecular point of care for the detection of SARS-CoV-2 in ambulatory care. Sci. Rep. 2021, 11, 21126.

- Igloi, Z.; Velzing, J.; Huisman, R.; Geurtsvankessel, C.; Comvalius, A.; IJpelaar, J.; Molenkamp, R. Clinical evaluation of the SD Biosensor SARS-CoV-2 saliva antigen rapid test with symptomatic and asymptomatic, non-hospitalized patients. PLoS ONE 2021, 16, e0260894.

- Lopes, J.I.F.; da Costa Silva, C.A.; Cunha, R.G.; Soares, A.M.; Lopes, M.E.D.; da Conceição Neto, O.C.; Amado Leon, L.A. A large cohort study of SARS-CoV-2 detection in saliva: A non-invasive alternative diagnostic test for patients with bleeding disorders. Viruses 2021, 13, 2361.

- Fernández-González, M.; Agulló, V.; de la Rica, A.; Infante, A.; Carvajal, M.; García, J.A.; Gutiérrez, F. Performance of saliva specimens for the molecular detection of SARS-CoV-2 in the community setting: Does sample collection method matter? J. Clin. Microbiol. 2021, 59, e03033-20.

- Nagura-Ikeda, M.; Imai, K.; Tabata, S.; Miyoshi, K.; Murahara, N.; Mizuno, T.; Kato, Y. Clinical evaluation of self-collected saliva by quantitative reverse transcription-PCR (RT-qPCR), direct RT-qPCR, reverse transcription-Loop-Mediated Isothermal Amplification, and a rapid antigen test to diagnose COVID-19. J. Clin. Microbiol. 2020, 58, e01438-20.

- Babady, N.E.; McMillen, T.; Jani, K.; Viale, A.; Robilotti, E.V.; Aslam, A.; Kamboj, M. Performance of severe acute respiratory syndrome coronavirus 2 real-time RT-PCR tests on oral rinses and saliva samples. J. Mol. Diagn. 2021, 23, 3–9.

- Chau, V.V.; Lam, V.T.; Dung, N.T.; Yen, L.M.; Minh, N.N.Q.; Hung, L.M.; Van Tan, L. The natural history and transmission potential of asymptomatic severe acute respiratory syndrome coronavirus 2 Infection. Clin. Infect. Dis. 2020, 71, 2679–2687.

- Herrera, L.A.; Hidalgo-Miranda, A.; Reynoso-Noverón, N.; Meneses-García, A.A.; Mendoza-Vargas, A.; Reyes-Grajeda, J.P.; Escobar-Escamilla, N. Saliva is a reliable and accessible source for the detection of SARS-CoV-2. Int. J. Infect. Dis. 2021, 105, 83–90.

- Yokota, I.; Shane, P.Y.; Okada, K.; Unoki, Y.; Yang, Y.; Inao, T.; Teshima, T. Mass screening of asymptomatic persons for severe acute respiratory syndrome coronavirus 2 using saliva. Clin. Infect. Dis. 2021, 73, e559–e565.

- Kernéis, S.; Elie, C.; Fourgeaud, J.; Choupeaux, L.; Delarue, S.M.; Alby, M.L.; LeGoff, J. Accuracy of saliva and nasopharyngeal sampling for detection of SARS-CoV-2 in community screening: A multicentric cohort study. Eur. J. Clin. Microbiol. Infect. Dis. 2021, 40, 2379–2388.

- Vogels, C.B.F.; Watkins, A.E.; Harden, C.A.; Brackney, D.E.; Shafer, J.; Wang, J.; Grubaugh, N.D. SalivaDirect: A simplified and flexible platform to enhance SARS-CoV-2 testing capacity. Med 2021, 2, 263–289.

- Balaska, S.; Pilalas, D.; Takardaki, A.; Koutra, P.; Parasidou, E.; Gkeka, I.; Skoura, L. Evaluation of the Advanta Dx SARS-CoV-2 RT-PCR Assay, a high-throughput extraction-free diagnostic test for the detection of SARS-CoV-2 in saliva: A diagnostic accuracy study. Diagnostics 2021, 11, 1766.

- Rao, M.; Rashid, F.A.; Sabri, F.; Jamil, N.N.; Seradja, V.; Abdullah, N.A.; Ahmad, N. COVID-19 screening test by using random oropharyngeal saliva. J. Med. Virol. 2021, 93, 2461–2466.

- Kost, G.J. Designing and interpreting COVID-19 diagnostics: Mathematics, visual logistics, and low prevalence. Arch. Pathol. Lab. Med. 2020, 145, 291–307.

- Kost, G.J. The impact of increasing prevalence, false omissions, and diagnostic uncertainty on Coronavirus Disease 2019 (COVID-19) test performance. Arch. Pathol. Lab. Med. 2021, 145, 797–813.

- Kost, G.J. Diagnostic strategies for endemic Coronavirus disease 2019 (COVID-19): Rapid antigen tests, repeat testing, and prevalence boundaries. Arch. Pathol. Lab. Med. 2022, 146, 16–25.

- Cable, R.; Coleman, C.; Glatt, T.; Mhlanga, L.; Nyano, C.; Welte, A. Estimates of prevalence of anti-SARS-CoV-2 antibodies among blood donors in eight provinces of South Africa in November 2021. Res. Sq. 2022.

- Koller, G.; Morrell, A.P.; Galão, R.P.; Pickering, S.; MacMahon, E.; Johnson, J.; Addison, O. More than the eye can see: Shedding new light on SARS-CoV-2 lateral flow device-based immunoassays. ACS Appl. Mater. Interfaces 2021, 13, 25694–25700.

- Homza, M.; Zelena, H.; Janosek, J.; Tomaskova, H.; Jezo, E.; Kloudova, A.; Prymula, R. Covid-19 antigen testing: Better than we know? A test accuracy study. Infect. Dis. 2021, 53, 661–668.

- Wang, H.; Hogan, C.A.; Verghese, M.; Solis, D.; Sibai, M.; Huang, C.; Pinsky, B.A. Ultra-sensitive severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) antigen detection for the diagnosis of Coronavirus Disease 2019 (COVID-19) in upper respiratory samples. Clin. Infect. Dis. 2021, 73, 2326–2328.

- Bohn, M.K.; Lippi, G.; Horvath, A.R.; Erasmus, R.; Grimmler, M.; Gramegna, M.; Adeli, K. IFCC interim guidelines on rapid point-of-care antigen testing for SARS-CoV-2 detection in asymptomatic and symptomatic individuals. Clin. Chem. Lab. Med. 2021, 59, 1507–1515.

- Wang, H.; Paulson, K.R.; Pease, S.A.; Watson, S.; Comfort, H.; Zheng, P.; Murray, C.J. Estimating excess mortality due to the COVID-19 pandemic: A systematic analysis of COVID-19-related mortality, 2020–2021. Lancet 2022, 399, 1468.

- Kost, G.J.; Ferguson, W.; Truong, A.-T.; Hoe, J.; Prom, D.; Banpavichit, A.; Kongpila, S. Molecular detection and point-of-care testing in Ebola virus disease and other threats: A new global public health framework to stop outbreaks. Expert Rev. Mol. Diagn. 2015, 15, 1245–1259.

- Kost, G.J. Point-of-care testing for Ebola and other highly infectious threats: Principles, practice, and strategies for stopping outbreaks. In A Practical Guide to Global Point-Of-Care Testing; Shephard, M., Ed.; CSIRO (Commonwealth Scientific and Industrial Research Organization): Canberra, Australia, 2016; Chapter 24; pp. 291–305.

- Kost, G.J. Molecular and point-of-care diagnostics for Ebola and new threats: National POCT policy and guidelines will stop epidemics. Expert Rev. Mol. Diagn. 2018, 18, 657–673.

- Zadran, A.; Kost, G.J. Enabling rapid intervention and isolation for patients with highly infectious diseases. J. Hosp. Mgt. Health Policy 2019, 3, 1–7.

- Kost, G.J. Geospatial science and point-of-care testing: Creating solutions for population access, emergencies, outbreaks, and disasters. Front. Public Health 2020, 7, 329.

- Kost, G.J. Geospatial hotspots need point-of-care strategies to stop highly infectious outbreaks: Ebola and Coronavirus. Arch. Pathol. Lab. Med. 2020, 144, 1166–1190.

- CORONADx Consortium 2020–2023. Rapid, Portable, Affordable Testing for COVID-19. European Union Horizon 2020 Grant No. 101003562. Available online: https://coronadx-project.eu/project/ and https://coronadx-project.eu/wp-content/uploads/2021/05/CORONADXLeaflet.pdf (accessed on 26 April 2022).

- Ogao, E. Hospitals in Kenya have been injecting people with water instead of vaccine—The hospitals also charged people for the privilege. Vice World News. 8 June 2021. Available online: https://www.vice.com/en/article/qj8j4b/hospitals-in-kenya-have-been-injecting-people-with-water-instead-of-the-vaccine (accessed on 26 April 2022).

- Whyte, J.; Walensky, R.P. Coronavirus in context—Interview with CDC Director. Medscape Critical Care. 8 June 2021. Available online: https://www.medscape.com/viewarticle/952645?src=mkm_covid_update_210608_MSCPEDIT&uac=372400HG&impID=3429136&faf=1 (accessed on 26 April 2022).

- Kost, G.J.; Zadran, A.; Zadran, L.; Ventura, I. Point-of-care testing curriculum and accreditation for public health—Enabling preparedness, response, and higher standards of care at points of need. Front. Public Health 2019, 8, 385. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kost, G.J.; Zadran, A. Schools of public health should be accredited for, and teach the principles and practice of point-of-care testing. J. Appl. Lab. Med. 2019, 4, 278–283. [Google Scholar] [CrossRef] [PubMed]

- Kost, G.J. Public health education should include point-of-care testing: Lessons learned from the COVID-19 pandemic. J. Int. Fed. Clin. Chem. Lab. Med. 2021, 32, 311–327.

- Eng, M.; Zadran, A.; Kost, G.J. COVID-19 risk avoidance and management in limited-resource countries—Point-of-care strategies for Cambodia. Omnia Digital Health. June–July 2021, pp. 112–115. Available online: https://secure.viewer.zmags.com/publication/44e87ae6?page=115&nocache=1625391874149&fbclid=IwAR2gIObRf6ThX15vlBpqMRYSC83hiZysJ8uMxEae7Em16bhOrnfEJTgNWuo#/44e87ae6/115 (accessed on 26 April 2022).

| Clinical Space | PPA or Sensitivity, Median % [N, Range] | NPA or Specificity, Median % [N, Range] | Data Source |
|---|---|---|---|
| Home Self-Tests | | | Supplemental Table S1 |
| Rapid antigen tests | PPA 86.6 [12, 83.5–95.3] | NPA 99.25 [12, 97–100] | Manufacturer FDA EUA claim (not substantiated) |
| Isothermal (LAMP) molecular tests | PPA 91.7 [3, 90.9–97.4] | NPA 98.2 [3, 97.5–99.1] | Manufacturer FDA EUA claim (not substantiated) |
| Isothermal (LAMP) molecular test | Sensitivity 91.7 [1, CI not reported] | Specificity 98.4 [1, CI not reported] | One independent clinical evaluation, see Donato et al. [8] |
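The home self-test rows separate manufacturer claims, reported as positive and negative percent agreement (PPA, NPA), from the independent evaluation, reported as sensitivity and specificity. The arithmetic is identical; the difference is that PPA and NPA are measured against a comparator method rather than against a reference standard for true infection status. The minimal Python sketch below computes both agreement measures from a 2×2 table; the class name and the counts are hypothetical and do not come from the article or its supplements.

```python
from dataclasses import dataclass


@dataclass
class AgreementTable:
    """Hypothetical 2x2 counts of a candidate test versus a comparator method."""
    both_positive: int    # candidate +, comparator +
    candidate_only: int   # candidate +, comparator -
    comparator_only: int  # candidate -, comparator +
    both_negative: int    # candidate -, comparator -

    def ppa(self) -> float:
        """Positive percent agreement: % of comparator positives the candidate also calls positive."""
        return 100.0 * self.both_positive / (self.both_positive + self.comparator_only)

    def npa(self) -> float:
        """Negative percent agreement: % of comparator negatives the candidate also calls negative."""
        return 100.0 * self.both_negative / (self.both_negative + self.candidate_only)


# Hypothetical study: 100 comparator-positive and 400 comparator-negative swabs.
table = AgreementTable(both_positive=87, candidate_only=3, comparator_only=13, both_negative=397)
print(f"PPA {table.ppa():.1f}%, NPA {table.npa():.2f}%")
```

If the comparator itself misses or falsely reports infections, PPA and NPA can differ from the test's clinical sensitivity and specificity.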

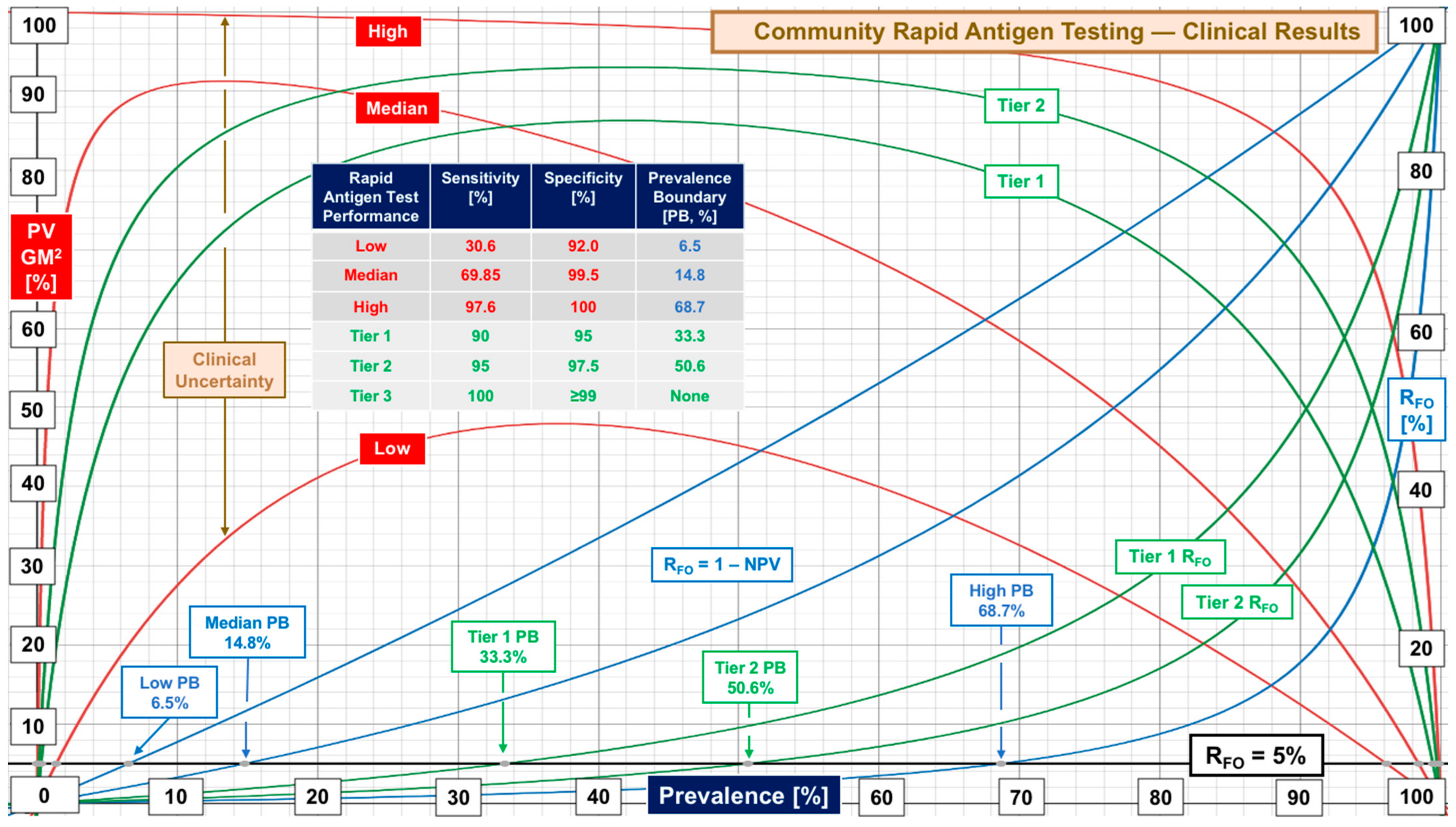

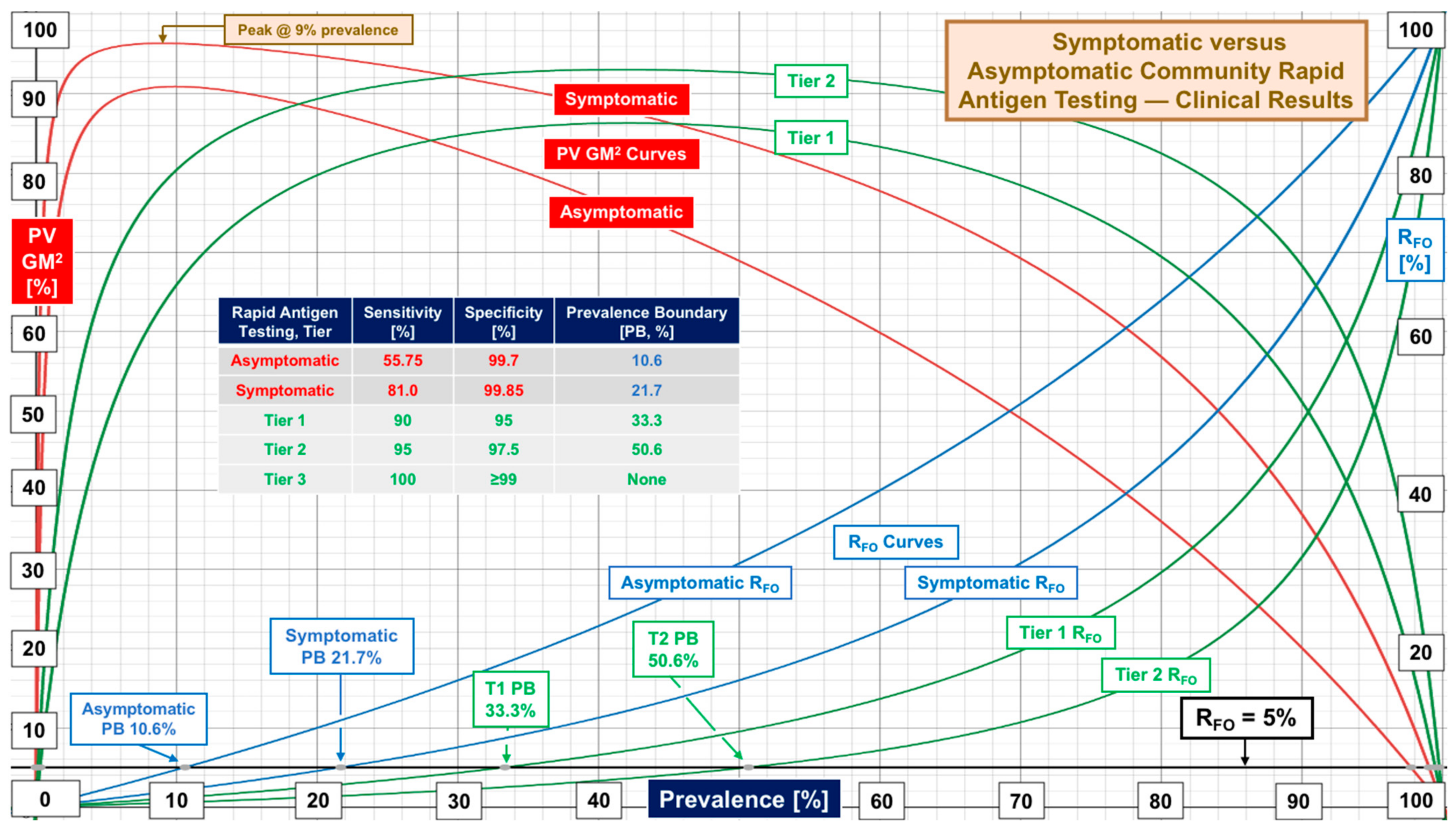

| Community RAgTs | Sensitivity, Median % [N, Range] | Specificity, Median % [N, Range] | Data Source (Supplemental Table S2) |
|---|---|---|---|
| Overall | 69.85 [24, 30.6–97.6] | 99.5 [24, 92–100] | Performance evaluations |
| Symptomatic | 81.0 [19, 47.7–96.5] | 99.85 [16, 85–100] | Symptomatic subjects |
| Asymptomatic | 55.75 [20, 37–88] | 99.70 [16, 97.8–100] | Asymptomatic subjects |
| Automated antigen tests, overall | 62.3 [3, 43.3–100] | 99.5 [3, 94.8–99.9] | Evaluations using automated laboratory instruments (small set) |
| Automated antigen tests, symptomatic | 73 [3, 68.5–88.9] | 100 [3, 100] | Symptomatic subjects in the automated-instrument evaluations |
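To make the gap between the symptomatic and asymptomatic medians concrete, the sketch below applies the standard 2×2 (Bayesian) expectations to the community RAgT medians. The function name, the cohort of 10,000 people, and the 5% prevalence are illustrative assumptions, not values reported in the article.

```python
def expected_outcomes(sens_pct: float, spec_pct: float, prevalence_pct: float, n: int = 10_000) -> dict:
    """Expected outcomes among n people tested; sensitivity, specificity, and prevalence in %."""
    sens, spec, prev = sens_pct / 100, spec_pct / 100, prevalence_pct / 100
    infected = n * prev
    uninfected = n - infected
    tp = sens * infected      # infected, test positive
    fn = infected - tp        # infected, test negative (missed diagnoses)
    tn = spec * uninfected    # uninfected, test negative
    fp = uninfected - tn      # uninfected, test positive
    return {
        "TP": tp,
        "FN": fn,
        "TN": tn,
        "FP": fp,
        "PPV %": 100 * tp / (tp + fp),
        "NPV %": 100 * tn / (tn + fn),
    }


# Community RAgT medians (%) from the table above; the 5% prevalence is hypothetical.
COMMUNITY_MEDIANS = [("Symptomatic", 81.0, 99.85), ("Asymptomatic", 55.75, 99.70)]

for label, sens, spec in COMMUNITY_MEDIANS:
    r = expected_outcomes(sens, spec, prevalence_pct=5.0)
    print(f"{label}: FN {r['FN']:.1f}, FP {r['FP']:.1f}, PPV {r['PPV %']:.1f}%, NPV {r['NPV %']:.2f}%")
```

At the same hypothetical prevalence, the asymptomatic median yields about 221 false negatives per 10,000 tested versus 95 for the symptomatic median, more than twice as many missed infections.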

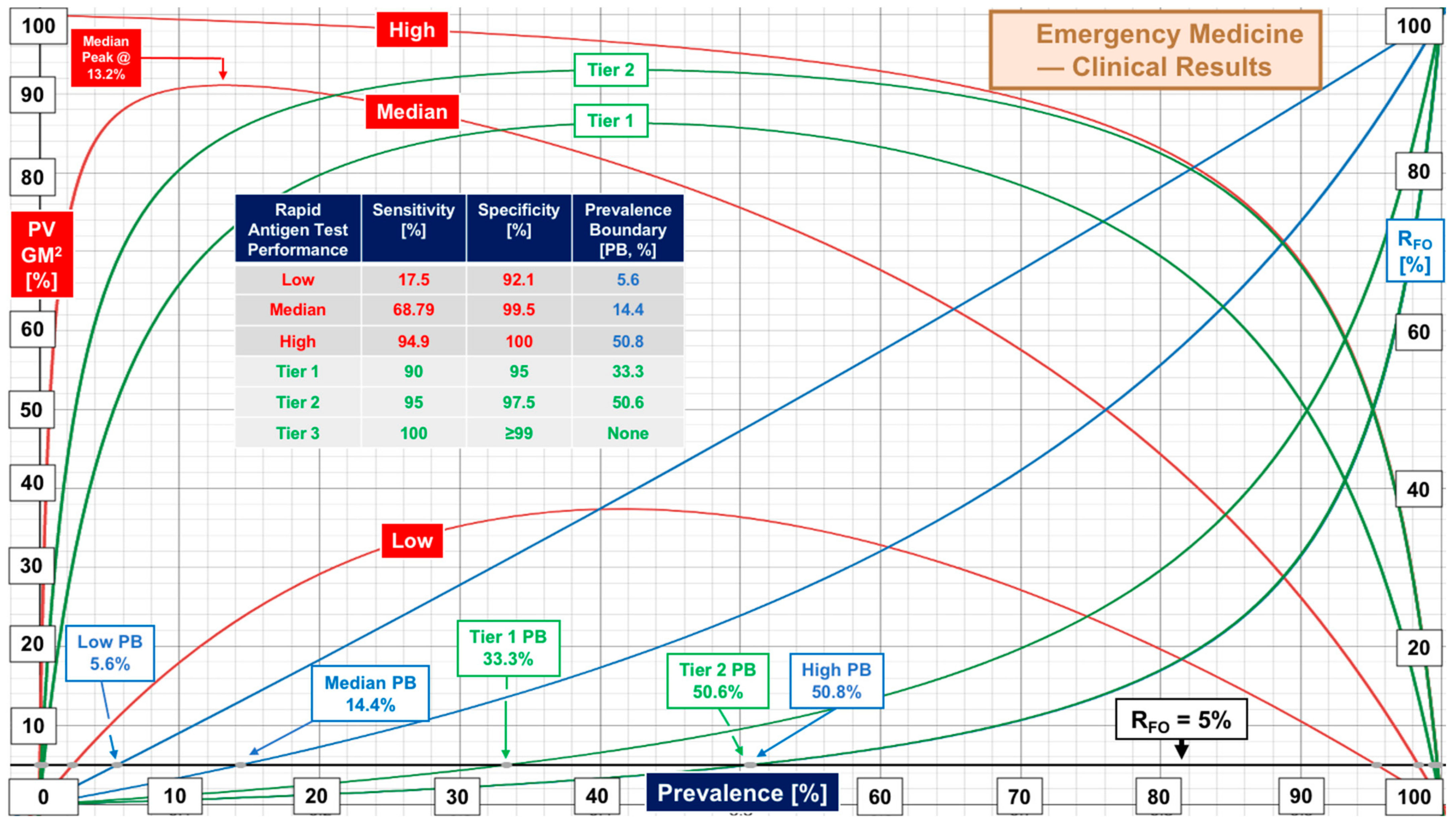

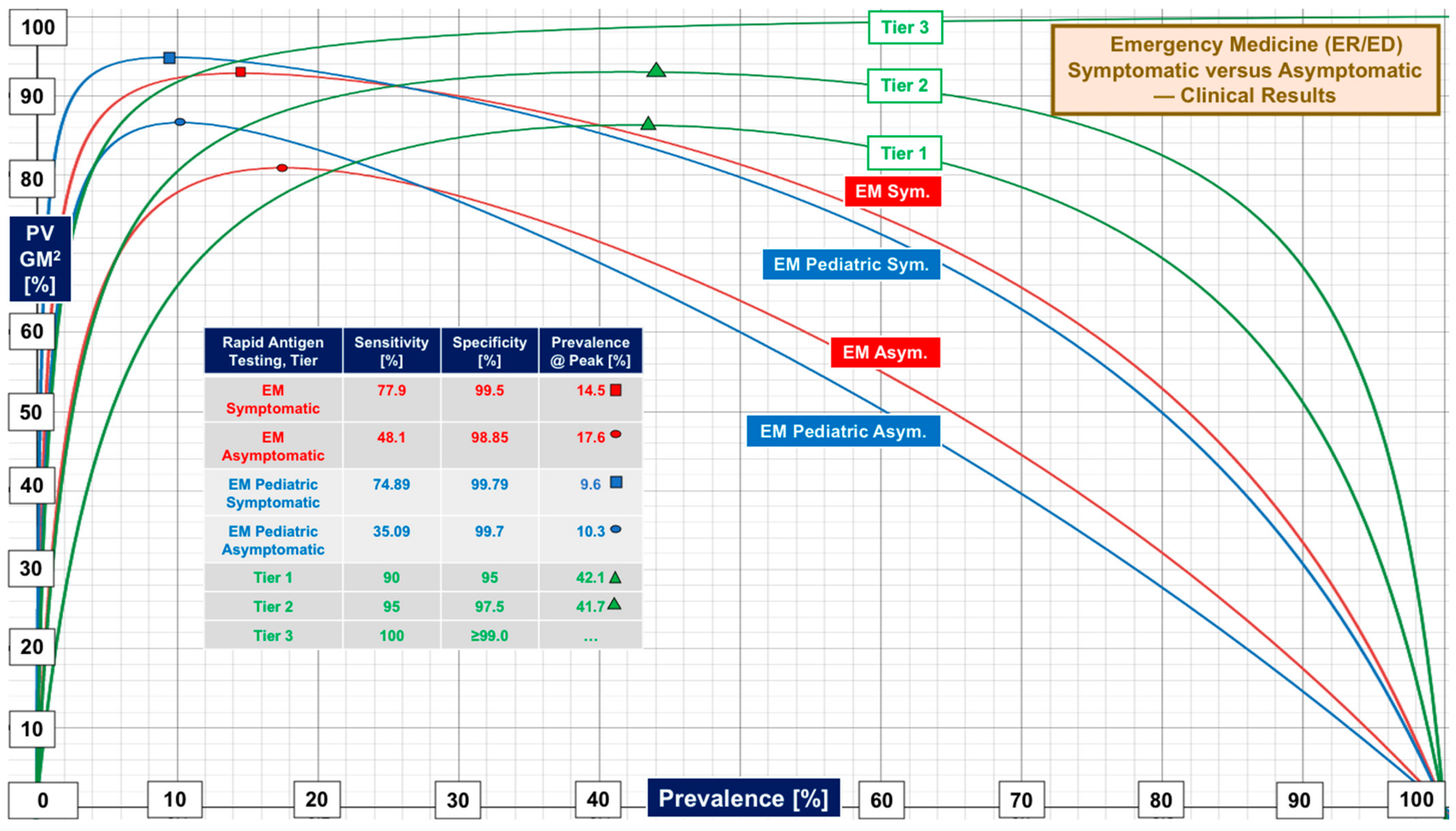

| Emergency Medicine RAgTs | Sensitivity, Median % [N, Range] | Specificity, Median % [N, Range] | Data Source (Supplemental Table S3) |
|---|---|---|---|
| EM overall | 68.79 [20, 17.5–94.9] | 99.5 [20, 92.1–100] | ER and ED evaluations |
| Symptomatic | 77.9 [15, 43.3–95.8] | 99.5 [14, 88.2–100] | Symptomatic EM subjects |
| Asymptomatic | 48.1 [11, 28.6–92.1] | 98.85 [10, 92.3–100] | Asymptomatic EM subjects |
| Pediatric EM | 71.3 [10, 42.9–94.1] | 99.55 [10, 91.9–100] | Pediatric ER/ED patients only |
| Pediatric, symptomatic | 74.89 [6, 45.4–87.9] | 99.79 [6, 98.5–100] | Symptomatic EM children |
| Pediatric, asymptomatic | 35.09 [2, 27.27–42.9] | 99.7 [2, 99.4–100] | Asymptomatic EM children |
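Each cell reports the median of per-study values, followed by the number of studies N and the range. Assuming the conventional definition, when N is even the median is the mean of the two middle values, so with N = 2 it is simply the midpoint of the range. The pediatric asymptomatic row can be checked this way: the sensitivity midpoint, 35.085, matches the reported 35.09 after rounding, and the specificity midpoint is 99.7. A small Python sketch using the standard library `statistics.median`; the helper names are hypothetical.

```python
import statistics


def summarize(values):
    """Return (median, N, min, max) for a list of per-study percentages."""
    if not values:
        raise ValueError("need at least one study value")
    return statistics.median(values), len(values), min(values), max(values)


def fmt(summary, digits=2):
    """Format a summary as 'median [N, min–max]', mirroring the table's notation."""
    med, n, lo, hi = summary
    return f"{med:.{digits}f} [{n}, {lo}–{hi}]"


# Pediatric asymptomatic EM row: only two studies, so the values are the range endpoints.
sens = summarize([27.27, 42.9])
spec = summarize([99.4, 100])
print("Sensitivity", fmt(sens))
print("Specificity", fmt(spec, digits=1))
```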

| Saliva Testing | Sensitivity, Median % [N, Range] | Specificity, Median % [N, Range] | Data Source (Supplemental Table S4) |
|---|---|---|---|
| Asymptomatic, molecular diagnostics | 63.6 [19, 16.8–95] | 98.85 [14, 95–100] | Community evaluations with strictly asymptomatic subjects |
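Because sensitivity alone fixes the fraction of infected people who test negative, the asymptomatic medians can be compared across settings without choosing a prevalence. The Python sketch below converts each asymptomatic sensitivity median from the community, emergency medicine, and saliva rows above into expected missed diagnoses per 1,000 infected people; the dictionary and function names are hypothetical.

```python
# Asymptomatic sensitivity medians (%) taken from the tables above.
ASYMPTOMATIC_SENSITIVITY = {
    "Community RAgTs": 55.75,
    "Emergency medicine RAgTs": 48.1,
    "Pediatric EM RAgTs": 35.09,
    "Saliva molecular diagnostics": 63.6,
}


def missed_per_1000_infected(sensitivity_pct: float) -> float:
    """Expected false negatives per 1,000 infected people tested: 1000 * (1 - sensitivity)."""
    return 1000 * (1 - sensitivity_pct / 100)


# List settings from lowest to highest sensitivity (most to fewest missed diagnoses).
for setting, sens in sorted(ASYMPTOMATIC_SENSITIVITY.items(), key=lambda kv: kv[1]):
    print(f"{setting:30s} misses about {missed_per_1000_infected(sens):.0f} of 1,000 infected")
```

The medians imply roughly 360 (saliva molecular) to 650 (pediatric EM, based on only two studies) missed diagnoses per 1,000 infected asymptomatic people; the wide ranges in the tables mean individual tests can perform considerably better or worse.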

| Tier | Performance Level | Sensitivity, % | Specificity, % | Prevalence Boundary, Target % (Actual), RFO 5% | Prevalence Boundary, Target % (Actual), RFO 10% | Prevalence Boundary, Target % (Actual), RFO 20% |
|---|---|---|---|---|---|---|
| 1 | Low | 90 | 95 | 33% (33.3) | 50% (51.4) | 70% (70.3) |
| 2 | Marginal | 95 | 97.5 | 50% (50.6) | 70% (68.4) | 85% (83.0) |
| 3 | High | 100 | ≥99 | No Boundary | No Boundary | No Boundary |
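The bracketed values can be reproduced under the assumption that RFO denotes the rate of false omission, FN/(FN + TN), that is, 1 - NPV. Writing s and c for sensitivity and specificity (as fractions), p for prevalence, and R for the RFO tolerance, RFO = (1 - s)p / [(1 - s)p + c(1 - p)]. Setting this equal to R and solving gives the prevalence boundary p* = Rc / [(1 - s)(1 - R) + Rc]. When s = 1 there are no false negatives, RFO is zero at every prevalence, and no boundary exists, consistent with Tier 3. A Python sketch under that assumption; the function names are hypothetical.

```python
from typing import Optional


def rate_of_false_omission(sens: float, spec: float, prev: float) -> float:
    """RFO = FN / (FN + TN) = 1 - NPV, with all inputs as fractions in [0, 1]."""
    fn = (1 - sens) * prev
    tn = spec * (1 - prev)
    return fn / (fn + tn)


def prevalence_boundary(sens: float, spec: float, rfo: float) -> Optional[float]:
    """Prevalence at which RFO reaches the tolerance `rfo`; None if it never does.

    Solves (1 - s)p / [(1 - s)p + c(1 - p)] = R for p:
        p* = R c / [(1 - s)(1 - R) + R c]
    """
    if sens >= 1.0:
        return None  # no false negatives, so RFO stays at 0 (Tier 3)
    return rfo * spec / ((1 - sens) * (1 - rfo) + rfo * spec)


# Tier sensitivity and specificity as fractions; Tier 3 uses the lower bound of "≥99".
TIERS = {1: (0.90, 0.95), 2: (0.95, 0.975), 3: (1.00, 0.99)}

for tier, (sens, spec) in TIERS.items():
    cells = []
    for rfo in (0.05, 0.10, 0.20):
        p = prevalence_boundary(sens, spec, rfo)
        cells.append("No Boundary" if p is None else f"{100 * p:.1f}")
    print(f"Tier {tier}: " + " | ".join(cells))
```

The sketch agrees with the bracketed values to within 0.1 percentage point; for Tier 1 at an RFO of 20% it gives 70.4 where the table lists 70.3. Because RFO rises with prevalence, the boundary marks the prevalence above which more than the tolerated fraction of negative results are expected to be false.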

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kost, G.J. The Coronavirus Disease 2019 Spatial Care Path: Home, Community, and Emergency Diagnostic Portals. Diagnostics 2022, 12, 1216. https://doi.org/10.3390/diagnostics12051216
