1. Introduction

The problem of source coding for computing considers the scenario where a decoder is interested in recovering a function of the message(s), other than the original message(s), that is (are) i.i.d. generated and independently encoded by the source(s). In rigorous terms:

Problem 1 (Source Coding for Computing). Given and . For each consider a discrete memoryless source

that randomly generates i.i.d. discrete data , where has a finite sample space and . For a discrete function

what is the largest region , such that, and , there exists an , such that for all , there exist s encoders

and one decoder

with , where and

The region is called the achievable coding rate region for computing g. A rate tuple is said to be achievable for computing g (or simply achievable) if and only if . A region is said to be achievable for computing g (or simply achievable) if and only if .

If g is an identity function, the computing problem, Problem 1, is known as the Slepian–Wolf (SW) source coding problem. is then the SW region [1], where is the complement of T in and is the random variable array . However, from [1] it is hard to draw conclusions regarding the structure (linear or not) of the encoders, as the corresponding mappings are chosen randomly among all feasible mappings. This limits the scope of their potential applications. As a consequence, linear coding over finite fields (LCoF), namely ’s are injectively mapped into some subsets of some finite fields and the ’s are chosen as linear mappings over these fields, is considered. It is shown that LCoF achieves the same encoding limit, the SW region [2,3]. Although it seems straightforward to study linear mappings over rings (non-field rings in particular), it has been neither proved nor disproved that linear encoding over non-field rings can be equally optimal.

For an arbitrary discrete function g, Problem 1 remains open in general, and obviously. Making use of Elias’ theorem on binary linear codes [2], Körner–Marton [4] shows that (“ ” is the modulo-two sum) contains the region This region is not contained in the SW region for certain distributions. In other words, . Combining the standard random coding technique and Elias’ result, [5] shows that can be strictly larger than the convex hull of the union . However, the functions considered in these works are relatively simple. With a polynomial approach, [6,7] generalize the result of Ahlswede–Han ([5], Theorem 10) to the scenario of g being arbitrary. Making use of the fact that a discrete function is essentially a polynomial function (see Definition 2) over some finite field, an achievable region is given for computing an arbitrary discrete function. Such a region contains and can be strictly larger (depending on the precise function and distribution under consideration) than the SW region. Conditions under which is strictly larger than the SW region are presented in [6,8] from different perspectives. The cases regarding Abelian group codes are covered in [9,10,11].
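As a numerical illustration of the gap between the two regions, the following sketch compares sum rates for a doubly symmetric binary source. It assumes the standard statement of the Körner–Marton result, namely that both encoders can operate at any rate above H(X1 ⊕ X2); the source model, the crossover probability and the function names are illustrative assumptions, not taken from the text above.

```python
from math import log2


def h(p):
    """Binary entropy function in bits."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * log2(p) - (1 - p) * log2(1 - p)


def sum_rates(p):
    """Sum rates for a doubly symmetric binary source (assumed model).

    X1 is uniform on {0, 1} and X2 = X1 xor Z, where Z is independent of X1
    and Pr{Z = 1} = p.
    """
    slepian_wolf = 1.0 + h(p)    # H(X1, X2): needed to recover both sources
    korner_marton = 2.0 * h(p)   # each encoder at rate H(X1 xor X2) = h(p)
    return slepian_wolf, korner_marton


if __name__ == "__main__":
    for p in (0.05, 0.1, 0.25, 0.5):
        sw, km = sum_rates(p)
        print(f"p={p}: SW sum rate {sw:.3f}, KM sum rate {km:.3f}")
```

For p = 0.1 the Körner–Marton sum rate is about 0.94 bits against about 1.47 bits for recovering both sources, while for p = 0.5 the two coincide.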

The present work proposes replacing the linear encoders over finite fields from Elias [2] and Csiszár [3] with linear encoders over finite rings in the case of the problems accounted for above. Achievability theorems related to linear coding over finite rings (LCoR) for SW data compression are presented, covering the results in [2,3] as special cases in the sense of characterizing the achievable region. In addition, it is proved that there always exists a sequence of linear encoders over some finite non-field rings that achieves the SW region for any SW scenario. Therefore, the issue of optimality of linear coding over finite non-field rings for data compression is closed with respect to existence. Furthermore, we also consider LCoR as an alternative technique for the general computing problem, Problem 1. Results from Körner–Marton [4], Ahlswede–Han ([5], Theorem 10) and [7] are generalized to corresponding ring versions for encoding (pseudo)nomographic functions (over rings). Since any discrete function with a finite domain admits a nomographic presentation, we conclude that our results universally apply for encoding all discrete functions of finite domains. Finally, it is shown that our ring approach dominates its field counterpart in terms of achieving better coding rates and reducing alphabet sizes of the encoders for encoding some discrete functions. The proof is done by taking advantage of the fact that the characteristic of a ring can be any positive integer while the characteristic of a field must be a prime. From this observation used in the proof, it is seen that there are actually infinitely many such functions.

2. Rings, Ideals and Linear Mappings

We start by introducing some fundamental algebraic concepts and related properties. Readers who are already familiar with this material may still choose to go through quickly to identify our notation.

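Definition 1. The tuple $[\mathfrak{R}, +, \cdot]$ is called a ring if the following criteria are met:

- 1. $[\mathfrak{R}, +]$ is an Abelian group;
- 2. There exists a multiplicative identity $1 \in \mathfrak{R}$, namely, $1 \cdot a = a \cdot 1 = a$, $\forall\, a \in \mathfrak{R}$;
- 3. $\forall\, a, b, c \in \mathfrak{R}$, $a \cdot b \in \mathfrak{R}$ and $(a \cdot b) \cdot c = a \cdot (b \cdot c)$;
- 4. $\forall\, a, b, c \in \mathfrak{R}$, $a \cdot (b + c) = (a \cdot b) + (a \cdot c)$ and $(b + c) \cdot a = (b \cdot a) + (c \cdot a)$.

We often write $\mathfrak{R}$ for $[\mathfrak{R}, +, \cdot]$ when the operations considered are known from the context. The operation “·” is usually written by juxtaposition, $ab$ for $a \cdot b$, for all $a, b \in \mathfrak{R}$.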

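A ring $\mathfrak{R}$ is said to be commutative if $ab = ba$, $\forall\, a, b \in \mathfrak{R}$. In Definition 1, the identity of the group $[\mathfrak{R}, +]$, denoted by 0, is called the zero. A ring $\mathfrak{R}$ is said to be finite if the cardinality $|\mathfrak{R}|$ is finite, and $|\mathfrak{R}|$ is called the order of $\mathfrak{R}$. The set $\mathbb{Z}_q$ of integers modulo q is a commutative finite ring with respect to the modular arithmetic. For any ring $\mathfrak{R}$, the set $\mathfrak{R}[X_1, X_2, \ldots, X_s]$ of all polynomials of s indeterminates over $\mathfrak{R}$ is an infinite ring.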

Definition 2. A polynomial function (polynomial and polynomial function are distinct concepts) of k variables over a finite ring is a function of the form , where and m and ’s are non-negative integers. The set of all the polynomial functions of k variables over ring is designated by .

Remark 1. Polynomial and polynomial function are sometimes only defined over a commutative ring [12,13]. It is a very delicate matter to define them over a non-commutative ring [14,15], due to the fact that and can become different objects. We choose to define “polynomial functions” with Formula (5) because those functions are within the scope of this paper’s interest.

Proposition 1. Given s rings , for any non-empty set , the Cartesian product (see [12]) forms a new ring with respect to the component-wise operations defined as follows: .

Remark 2. In Proposition 1, is called the direct product of . It can be easily seen that and are the zero and the multiplicative identity of , respectively.

Definition 3. A non-zero element a of a ring $\mathfrak{R}$ is said to be invertible if and only if there exists $b \in \mathfrak{R}$ such that $ab = ba = 1$. b is called the inverse of a, denoted by $a^{-1}$. An invertible element of a ring is called a unit.

Remark 3. It can be proved that the inverse of a unit is unique. By definition, the multiplicative identity is the inverse of itself.

Let $\mathfrak{R}^* = \mathfrak{R} \setminus \{0\}$. The ring $[\mathfrak{R}, +, \cdot]$ is a field if and only if $[\mathfrak{R}^*, \cdot]$ is an Abelian group. In other words, all non-zero elements of $\mathfrak{R}$ are invertible. All fields are commutative rings. $\mathbb{Z}_q$ is a field if and only if q is a prime. All finite fields of the same order are isomorphic to each other ([16], p. 549). This “unique” field of order q is denoted by $\mathbb{F}_q$. It is necessary that q is a power of a prime. More details regarding finite fields can be found in ([16], Chapter 14.3).

Theorem 1 (Wedderburn’s little theorem [12]). Let $\mathfrak{R}$ be a finite ring. $\mathfrak{R}$ is a field if and only if all non-zero elements of $\mathfrak{R}$ are invertible.

Remark 4. Wedderburn’s little theorem guarantees commutativity for a finite ring if all of its non-zero elements are invertible. Hence, a finite ring is either a field or at least one of its non-zero elements has no inverse. However, a finite commutative ring is not necessarily a field, e.g., $\mathbb{Z}_q$ is not a field if q is not a prime.
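Remark 4 can be checked numerically for small q. The sketch below (function names are illustrative, not from the paper) lists the units of the ring of integers modulo q and tests whether every non-zero element is invertible, which happens exactly when q is prime.

```python
from math import gcd


def units(q):
    """Return the invertible elements of the integers modulo q."""
    return [a for a in range(1, q) if gcd(a, q) == 1]


def is_field(q):
    """The integers modulo q form a field iff every non-zero element is a unit."""
    return q > 1 and len(units(q)) == q - 1


def inverse(a, q):
    """Multiplicative inverse of a unit a modulo q (requires Python 3.8+)."""
    return pow(a, -1, q)


if __name__ == "__main__":
    for q in (4, 5, 6, 7):
        print(q, units(q), is_field(q))
```

For q = 6 only 1 and 5 are units, so the ring is commutative and finite but not a field.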

Definition 4 ([16]). The characteristic of a finite ring is defined to be the smallest positive integer m, such that $\sum_{j=1}^{m} 1 = 0$, where 0 and 1 are the zero and the multiplicative identity of , respectively. The characteristic of is often denoted by .

Remark 5. Clearly, . For a finite field , is always the prime such that for some integer n ([12], Proposition 2.137).

Proposition 2. Let be a finite field. For any , if and only if m is the smallest positive integer such that .

Proof. Since , The statement is proved. ☐
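The characteristic can be computed directly from Definition 4 by adding the multiplicative identity to itself until the zero is reached. The following sketch does this for direct products of rings of integers modulo q_i; the choice of these rings and the function name are assumptions made for illustration.

```python
def characteristic(moduli):
    """Characteristic of the product ring Z_{q1} x ... x Z_{qs}.

    Repeatedly adds the multiplicative identity (1, ..., 1) to itself and
    returns the smallest m >= 1 for which the running sum equals zero.
    """
    one = tuple(1 % q for q in moduli)
    zero = tuple(0 for _ in moduli)
    total, m = one, 1
    while total != zero:
        # Component-wise addition, as in a direct product of rings.
        total = tuple((t + o) % q for t, o, q in zip(total, one, moduli))
        m += 1
    return m


if __name__ == "__main__":
    print(characteristic((6,)))      # integers modulo 6
    print(characteristic((4, 6)))    # product of Z_4 and Z_6
    print(characteristic((2, 2)))    # product of Z_2 with itself
```

The integers modulo 6 have characteristic 6 and the product of Z_4 and Z_6 has characteristic 12. Neither value is prime, so neither can occur as the characteristic of a field.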

Definition 5. A subset of a ring is said to be a left ideal of , denoted by , if and only if

- 1.

is a subgroup of ;

- 2.

and , .

If condition 2 is replaced by

- 3.

and , ,

then is called a right ideal of , denoted by . is a trivial left (right) ideal, usually denoted by 0.

The cardinality is called the order of a finite left (right) ideal .

Remark 6. Let be a non-empty set of elements of some ring . It is easy to verify that is a right ideal and is a left ideal. Furthermore, and if is a unit for some .

It is well-known that if , then is divided into disjoint cosets which are of equal size (cardinality). For any coset , , . The set of all cosets forms a left module over , denoted by . Similarly, becomes a right module over if [17]. Of course, can also be considered as a quotient group [12]. However, its structure is far richer than simply being a quotient group.

Proposition 3. Let () be a ring and . For any , (or ) if and only if and (or ), .

Proof. We prove for the case only, and the case follows from a similar argument. Let ( ) be the coordinate function assigning every element in its ith component. Then , where . Moreover, for any where for all feasible i, we have that where has the ith coordinate being 1 and others being 0. If , then by definition. Therefore, . Consequently, . Since is a homomorphism, we also have that for all feasible i. The other direction is easily verified by definition. ☐

Remark 7. It is worthwhile to point out that Proposition 3 does not hold for an infinite index set, namely, , where I is not finite.

For any , Proposition 3 states that any left (right) ideal of is a Cartesian product of some left (right) ideals of , . Let be a left (right) ideal of ring (). We define to be the left (right) ideal of .

Let be the transpose of a vector (or matrix) .

Definition 6. A mapping given as: where t stands for transposition and for all feasible i and j, is called a left linear mapping over ring . Similarly, defines a right linear mapping over ring . If , then f is called a left (right) linear function over . From now on, left linear mapping (function) or right linear mapping (function) are simply called linear mapping (function). This will not lead to any confusion since the intended use can usually be clearly distinguished from the context.

Remark 8. The mapping f in Definition 6 is called linear in accordance with the definition of linear mapping (function) over a field. In fact, the two structures have several similar properties. Moreover, (11) is equivalent to , where is an matrix over and for all feasible i and j. is named the coefficient matrix. It is easy to prove that a linear mapping is uniquely determined by its coefficient matrix, and vice versa. The linear mapping f is said to be trivial, denoted by 0, if is the zero matrix, i.e., for all feasible i and j.

It should be noted that an interesting approach to coding over an Abelian group was presented in [9,10,11]. However, we emphasize that even if group, field and ring are closely related algebraic structures, the definition of the group encoder in [11] and the linear encoder in [3] and in the present work are in general fundamentally different (although there is an overlap in special cases). To highlight in more detail the difference between linear encoding (this work and [3]) and encoding over a group, as in [11], which is a nonlinear operation in general, take the Abelian group , the field of order 4 and the matrix ring as examples.

By ([11], Example 2), the Abelian group encoder encodes the source based on a Slepian–Wolf like scheme. Namely, two binary linear encoders encode and separately as two binary sources. Therefore, the lengths of the codewords from encoding and can even be different, and the encoder is in general a highly nonlinear device.

On the other hand, the linear encoder over either or simply outputs a linear combination of the vector , namely for some matrix over or .

However, if one requires that the codewords from encoding and be of the same length in (1), then the output from encoding is the same as for some matrix over ring (a specific product ring whose multiplication is significantly different from those of or ). In other words, in this quite specific special case, the encoder becomes linear over a product ring of modulo integers, which is a sub-class of the completely general ring structures considered in this paper.

We also note that in some source network problems, linear codes appear superior to others [3]. For instance, for encoding the modulo-two sum of binary symmetric sources, linear coding over or achieves the optimal Körner–Marton region [4] (the case will be established in later sections), while coding over G achieves the sub-optimal Slepian–Wolf region ([11], p. 1509). To avoid any remaining confusion, we present in Appendix D additional details regarding the differences between linear coding, as in the present work and in [3], and coding over an Abelian group, as in [11].

Let be an matrix over ring and , . For the system of linear equations let be the set of all solutions, namely . It is obvious that if f is trivial, i.e., is the zero matrix. If is a field, then is a subspace of . We conclude this section with a lemma regarding the cardinalities of and in the following.

Lemma 1. For a finite ring and a linear function we have , where . In particular, if is invertible for some , then .

Proof. It is obvious that the image by definition. Moreover, , the pre-images satisfy and . Therefore, , i.e., . Moreover, if is a unit, then , thus, . ☐
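The counting argument in the proof can be verified by brute force over a small commutative ring. The sketch below assumes the ring of integers modulo q and a linear function f(x) = a_1 x_1 + ... + a_n x_n (the names are hypothetical); it counts the solutions of f(x) = 0 and collects the image of f, so that the product of the two sizes can be compared with the size of the whole domain.

```python
from itertools import product


def kernel_and_image(coeffs, q):
    """Brute-force f(x) = sum_i coeffs[i] * x[i] over the integers modulo q.

    Returns the number of solutions of f(x) = 0 and the set of values f takes.
    """
    n = len(coeffs)
    kernel_size = 0
    image = set()
    for x in product(range(q), repeat=n):
        value = sum(a * xi for a, xi in zip(coeffs, x)) % q
        image.add(value)
        if value == 0:
            kernel_size += 1
    return kernel_size, image


if __name__ == "__main__":
    q = 6
    for coeffs in ((2, 4), (2, 3), (1, 4), (3, 0)):
        kernel_size, image = kernel_and_image(coeffs, q)
        # Pre-images of distinct image points are disjoint and equally large,
        # so the two sizes multiply to the size of the domain.
        assert kernel_size * len(image) == q ** len(coeffs)
        print(coeffs, kernel_size, sorted(image))
```

When one coefficient is a unit, as in (1, 4) over the integers modulo 6, the image is the whole ring and the solution set has q^(n-1) elements.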

3. Linear Coding over Finite Rings

In this section, we will present a coding rate region achieved with LCoR for the SW source coding problem, i.e., g is an identity function in Problem 1. This region is exactly the SW region if all the rings considered are fields. However, being a field is not necessary, as seen in Section 5, where the issue of optimality is addressed.

Before proceeding, a subtlety needs to be cleared up. It is assumed that a source generates data taking values from a finite sample space , while does not necessarily admit any algebraic structure. We have to either assume that has a certain algebraic structure, for instance is a ring, or injectively map elements of into some algebraic structure. In our subsequent discussions, we assume that is mapped into a finite ring of order at least by some injection . Hence, can simply be treated as a subset for a fixed . When required, can also be selected to obtain desired outcomes.

To facilitate our discussion, the following notation is used. For , ( and resp.) is defined to be the Cartesian product where is a realization of . If , we denote the marginal of p with respect to by , i.e., , define the support For simplicity, is defined to be ( is implicitly assumed), and for any and . For any , let where and is a random variable with sample space .

Theorem 2. is achievable with linear coding over the finite rings . In exact terms, , there exists , for all , there exist linear encoders (left linear mappings to be more precise) () and a decoder ψ, such that , where , as long as

The following is a concrete example providing some insight into this theorem.

Example 1. Consider the single source scenario, where and , specified as follows.

| 0 | 1 | 2 | 3 | 4 | 5 |
| --- | --- | --- | --- | --- | --- |
| | | | | | |

Obviously, contains 3 non-trivial ideals , and , and and admit the distributions respectively. In addition, is a constant. Thus, by Theorem 2, rate is achievable if In other words, is achievable with linear coding over ring . Obviously, is just the region . Optimality is claimed.

Additionally, we would like to point out that some of the inequalities defining (22) are not active for specific scenarios. Two classes of these scenarios are discussed in the following theorems. The first, Theorem 3, is for scenarios where rings considered are product rings, while the second, Theorem 4, is for cases of lower triangular matrix rings (similarly, readers can consider usual matrix rings, which are often non-commutative, if interested).
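To make the objects in Example 1 concrete, the sketch below enumerates the ideals of the ring of integers modulo 6 (the symbols 0 to 5 in the table suggest this ring, which is an assumption here) and computes the distribution and entropy of the coset of a source symbol modulo each ideal. The probability vector is hypothetical, since the table entries are not reproduced above; it is not the distribution used in the example.

```python
from math import log2


def ideals(q):
    """All ideals of the integers modulo q: one ideal d*Z_q per divisor d of q."""
    return {d: sorted((d * k) % q for k in range(q // d))
            for d in range(1, q + 1) if q % d == 0}


def coset_distribution(p, ideal, q):
    """Distribution of the coset X + ideal when X has pmf p on {0, ..., q-1}."""
    dist = {}
    for x, px in enumerate(p):
        coset = frozenset((x + a) % q for a in ideal)
        dist[coset] = dist.get(coset, 0.0) + px
    return dist


def entropy(probs):
    """Shannon entropy in bits of an iterable of probabilities."""
    return -sum(v * log2(v) for v in probs if v > 0)


if __name__ == "__main__":
    q = 6
    p = [0.3, 0.1, 0.2, 0.15, 0.15, 0.1]  # hypothetical pmf, for illustration only
    print("H(X) =", round(entropy(p), 4))
    for d, ideal in ideals(q).items():
        if len(ideal) == 1:
            continue  # skip the trivial ideal {0}
        dist = coset_distribution(p, ideal, q)
        print("ideal", ideal, "cosets", len(dist),
              "entropy", round(entropy(dist.values()), 4))
```

The three non-trivial ideals found are {0, 3}, {0, 2, 4} and the ring itself. For the last one there is a single coset, so the corresponding coset variable is a constant with zero entropy.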

Theorem 3. Suppose () is a (finite) product ring of finite rings ’s, and the sample space satisfies for all feasible i and l. Given injections and let , where is defined as . We have that , where , is achievable with linear coding over . Moreover, .

Let be a finite ring and where m is a positive integer. It is easy to verify that is a ring with respect to matrix operations. Moreover, is a left ideal of if and only if Let be the set of all left ideals of the form

Theorem 4. Let () be a finite ring such that . For any injections , let , where is defined as . We have that , where , is achievable with linear coding over . Moreover, .

Remark 9. The difference between (22), (29) and (35) lies in their restrictions defining ’s, respectively, as highlighted in the proofs given in Section 4.

Remark 10. Without much effort, one can see that ( and , respectively) in Theorem 2 (Theorem 3 and Theorem 4, respectively) depends on Φ via random variables ’s whose distributions are determined by Φ.

For each , there exist distinct injections from to a ring of order at least . Let be the convex hull of a set . By a straightforward time sharing argument, we have that is achievable with linear coding over .

Remark 11. From Theorem 5, one will see that (22) and (36) are the same when all the rings are fields. Actually, both are identical to the SW region. However, (36) can be strictly larger than (22) (see Section 5), when not all the rings are fields. This implies that, in order to achieve the desired rate, a suitable injection is required. However, be reminded that taking the convex hull in (36) is not always needed for optimality as shown in Example 1. A more sophisticated elaboration on this issue is found in Section 5.

The rest of this section provides key supporting lemmata and concepts used to prove Theorems 2–4. The final proofs are presented in Section 4.

Lemma 2. Let be two distinct sequences, where is a finite ring, and assume that . If is a random linear mapping chosen uniformly at random, i.e., generate the coefficient matrix of f by independently choosing each entry of from uniformly at random, then , where .

Proof. Let , where is a random linear function. Then since the ’s are independent from each other. The statement follows from Lemma 1, which ensures that . ☐

Remark 12. In Lemma 2, if is a field and , then because every non-zero is a unit. Thus, .
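Lemma 2 and Remark 12 can be checked exhaustively for small parameters. The sketch below assumes the ring of integers modulo q, enumerates every k-by-n coefficient matrix, and computes the exact probability that two fixed sequences are mapped to the same output. It also computes the ideal generated by the entries of the difference of the two sequences, so that the two quantities can be compared. The helper names are illustrative.

```python
from fractions import Fraction
from itertools import product


def collision_probability(x, y, q, k):
    """Exact Pr{Ax = Ay} for a uniformly random k-by-n matrix A over Z_q."""
    n = len(x)
    z = [(xi - yi) % q for xi, yi in zip(x, y)]  # Ax = Ay iff Az = 0
    hits = total = 0
    for entries in product(range(q), repeat=k * n):
        rows = [entries[r * n:(r + 1) * n] for r in range(k)]
        if all(sum(a * zi for a, zi in zip(row, z)) % q == 0 for row in rows):
            hits += 1
        total += 1
    return Fraction(hits, total)


def generated_ideal(z, q):
    """Ideal of Z_q generated by the entries of z (all linear combinations)."""
    return {sum(a * zi for a, zi in zip(coeffs, z)) % q
            for coeffs in product(range(q), repeat=len(z))}


if __name__ == "__main__":
    q, k = 4, 2
    for x, y in (((0, 0), (2, 0)), ((1, 0), (0, 0)), ((3, 1), (1, 3))):
        z = [(a - b) % q for a, b in zip(x, y)]
        ideal = generated_ideal(z, q)
        prob = collision_probability(x, y, q, k)
        # Each row of A hits zero with probability 1/|ideal|, independently.
        assert prob == Fraction(1, len(ideal) ** k)
        print(x, y, sorted(ideal), prob)
```

For q = 4, k = 2 and a difference vector (2, 0), the generated ideal is {0, 2} and the collision probability is 1/4, whereas a difference containing a unit generates the whole ring and gives 1/16.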

Definition 7 ([18]). Let be a discrete random variable with sample space . The set of strongly ε-typical sequences of length n with respect to X is defined to be , where is the number of occurrences of x in the sequence . The notation is sometimes replaced by when the length n and the random variable X referred to are clear from the context.
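A typicality test of this kind is straightforward to implement. The sketch below uses one common per-symbol form of strong typicality, in which the empirical frequency of every symbol must lie within ε of its probability; this particular inequality is an assumption, since the exact condition of Definition 7 is not reproduced in the text above, and the function name is hypothetical.

```python
from collections import Counter


def is_strongly_typical(seq, pmf, eps):
    """Check |N(x; seq)/n - p(x)| <= eps for every symbol x (assumed variant).

    seq is a non-empty sequence of symbols and pmf maps each symbol of the
    sample space to its probability.
    """
    n = len(seq)
    if n == 0:
        raise ValueError("sequence must be non-empty")
    counts = Counter(seq)  # counts[x] is the number of occurrences of x
    if any(symbol not in pmf for symbol in counts):
        return False  # a symbol outside the sample space appeared
    return all(abs(counts[x] / n - px) <= eps for x, px in pmf.items())


if __name__ == "__main__":
    pmf = {0: 0.5, 1: 0.5}
    print(is_strongly_typical([0, 1, 0, 1], pmf, 0.1))
    print(is_strongly_typical([0, 0, 0, 1], pmf, 0.1))
```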

Now we conclude this section with the following lemma. It is a crucial part of our proofs of the achievability theorems. It generalizes the classic conditional typicality lemma ([19], Theorem 15.2.2), yet at the same time distinguishes our argument from the one for the field version.

Lemma 3. Let be a jointly random variable whose sample space is a finite ring . For any , there exists , such that, and , , where and is a random variable with sample space .

Proof. Define the mapping by Assume that , and let By definition, , where , Moreover, For fixed , the number of strongly ε-typical sequences such that is strongly ε-typical is strictly upper bounded by if n is large enough and is small. Therefore, ☐

Remark 13. We acknowledge an anonymous reviewer of our paper [20] for suggesting the proof for Lemma 3 given above. Our original proof was presented as a special case of a more general result in [21]. The techniques behind the two proofs are quite different; however, the full generality of our original proof is better appreciated in non-i.i.d. scenarios, as in [21].

Remark 14. Assume that , then is equivalent to .

5. Optimality

Obviously, Theorem 2 specializes to its field counterpart if all rings considered are fields, as summarized in the following theorem.

Theorem 5. Region (22) is the SW region if contains no proper non-trivial left ideal, equivalently, is a field, for all . As a consequence, region (36) is the SW region.

Proof. In Theorem 2, random variable admits a sample space of cardinality 1 for all , since the only non-trivial left ideal of is itself for all feasible i. Thus, . Consequently, which is the SW region . Therefore, region (36) is also the SW region.

If is a field, then obviously it has no proper non-trivial left (right) ideal. Conversely, , implies that , such that . Similarly, , such that . Moreover, . Hence, . b is the inverse of a. By Wedderburn’s little theorem, is a field. ☐

One important question to address is whether linear coding over finite non-field rings can be equally optimal for data compression. Hereby, we claim that, for any SW scenario, there always exist linear encoders over some finite non-field rings which achieve the data compression limit. Therefore, optimality of linear coding over finite non-field rings for data compression is established in the sense of existence.

5.1. Existence Theorem I: Single Source

For any single source scenario, the assertion that there always exists a finite ring , such that is in fact the SW region is equivalent to the existence of a finite ring and an injection , such that where .

Theorem 6. Let be a finite ring of order . If contains one and only one proper non-trivial left ideal and , then region (36) coincides with the SW region, i.e., there exists an injection , such that (59) holds.

Remark 15. Examples of such a non-field ring in the above theorem include ( is a ring with respect to matrix addition and multiplication) and , where p is any prime. For any single source scenario, one can always choose to be either or . Consequently, optimality is attained.

Proof of Theorem 6. Notice that the random variable depends on the injection , and so does its entropy . Obviously , since the sample space of the random variable contains only one element. Therefore, Consequently, (59) is equivalent to since . By Lemma A1, there exists an injection such that (62) holds if . The statement follows. ☐

Up to isomorphism, there are exactly 4 distinct rings of order for a given prime p. They include 3 non-field rings, , and , in addition to the field . It has been proved that, using linear encoders over the last three, optimality can always be achieved in the single source scenario. Actually, the same holds true for all multiple sources scenarios.
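The hypothesis of Theorem 6, a ring with exactly one proper non-trivial left ideal, is easy to test for rings of integers modulo q, where left ideals and two-sided ideals coincide because the ring is commutative. The sketch below is an illustration under that assumption (the function name is hypothetical); it lists the ideals other than the zero ideal and the ring itself.

```python
def proper_nontrivial_ideals(q):
    """Ideals of the integers modulo q other than {0} and the whole ring.

    Every ideal has the form d*Z_q for a divisor d of q; d = 1 gives the whole
    ring and d = q gives {0}, so only divisors strictly between are kept.
    """
    return [sorted((d * k) % q for k in range(q // d))
            for d in range(2, q) if q % d == 0]


if __name__ == "__main__":
    for p in (2, 3, 5):
        found = proper_nontrivial_ideals(p * p)
        # Integers modulo p^2: a single such ideal, of order p.
        assert len(found) == 1 and len(found[0]) == p
        print(p * p, found)
    print(6, proper_nontrivial_ideals(6))  # two such ideals
    print(7, proper_nontrivial_ideals(7))  # none, the ring is a field
```

The integers modulo p² therefore satisfy the ideal condition for every prime p tested, while the integers modulo 6 do not.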

5.2. Existence Theorem II: Multiple Sources

Theorem 7. Let be s finite rings with . If is isomorphic to either

- 1.

a field, i.e., contains no proper non-trivial left (right) ideal; or

- 2.

a ring containing one and only one proper non-trivial left ideal and ,

for all feasible i, then (36) coincides with the SW region .

Remark 16. It is obvious that Theorem 7 includes Theorem 6 as a special case. In fact, its proof resembles the one of Theorem 6. Examples of ’s include all finite fields, and , where p is a prime. However, Theorem 7 does not guarantee that all rates, except the vertices , in the polytope of the SW region are “directly” achievable for the multiple sources case. A time sharing scheme is required in our current proof. Nevertheless, all rates are “directly” achievable if ’s are fields or if . This is partially the reason that the two theorems are stated separately.

Remark 17. Theorem 7 also includes Theorem 5 as a special case. However, Theorem 5 admits a simpler proof compared to the one for Theorem 7.

Proof of Theorem 7. It suffices to prove that, for any satisfies for some set of injections , where . Let be the set of injections, where, if

- (i) is a field, is any injection;
- (ii) satisfies 2, is the injection such that when . The existence of is guaranteed by Lemma A1.

If , then for all and . As a consequence, for all . Thus, . ☐

By Theorems 5–7, we draw the conclusion that

Corollary 1. For any SW scenario, there always exists a sequence of linear encoders over some finite rings (fields or non-field rings) which achieves the data compression limit, the SW region.

In fact, LCoR can be optimal even for rings beyond those stated in the above theorems (see Example 1). We classify some of these scenarios in the remaining parts of this section.

5.3. Product Rings

Theorem 8. Let () be a set of finite rings of equal size, and for all feasible i. If the coding rate is achievable with linear encoders over (), then is achievable with linear encoders over .

Proof. By definition, is a convex combination of coding rates which are achieved by different linear encoding schemes over ( ), respectively. To be more precise, there exist and positive numbers with , such that . Moreover, there exist injections ( ), where , such that where , and is a random variable with sample space . To show that is achievable with linear encoders over , it suffices to prove that is achievable with linear encoders over for all feasible j. Let . For all and with ( ), we have where . By (72), it can be easily seen that Meanwhile, let , , (Note: for all .) and . It can be verified that ( ) is a function of , hence, . Consequently, which implies that by Theorem 3. We therefore conclude that is achievable with linear encoders over for all feasible j, so is . ☐

Obviously, in Theorem 8 are of the same size. Inductively, one can verify the following without any difficulty.

Theorem 9. Let be any finite index set, () be a set of finite rings of equal size, and for all feasible i. If the coding rate is achievable with linear encoders over (), then is achievable with linear encoders over .

Remark 18. There are delicate issues to the situation Theorem 9 (Theorem 8) illustrates. Let () be the set of all symbols generated by the ith source. The hypothesis of Theorem 9 (Theorem 8) implicitly implies the alphabet constraint for all feasible i and l.

Let be s finite rings each of which is isomorphic to either

- 1. a ring containing one and only one proper non-trivial left ideal whose order is , e.g., and (p is a prime); or
- 2. a ring of a finite product of finite field(s) and/or ring(s) satisfying 1), e.g., (p and ’s are prime) and ( and are non-negative, ’s are prime and ’s are powers of primes).

Theorems 7 and 9 ensure that linear encoders over ring are always optimal in any applicable (subject to the condition specified in the corresponding theorem) SW coding scenario. As a very special case, , where p is a prime, is always optimal in any (single source or multiple sources) scenario with alphabet size less than or equal to p. However, using a field or product rings is not necessary. As shown in Theorem 6, neither nor is (isomorphic to) a product of rings nor a field. It is also not required to have a restriction on the alphabet size (see Theorem 7), even for product rings (see Example 1 for a case of ).

5.4. Trivial Case: Uniform Distributions

The following theorem is trivial; however, we include it for completeness.

Theorem 10. Regardless of which set of rings is chosen, as long as for all feasible i, region (22) is the SW region if is a uniform distribution.

Proof. If p is uniform, then, for any and , is uniformly distributed on . Moreover, and are independent, so are and . Therefore, and . Consequently, Region (22) is the SW region. ☐

Remark 19. When p is uniform, it is obvious that the uncoded strategy (all encoders are one-to-one mappings) is optimal in the SW source coding problem. However, optimality stated in Theorem 10 does not come from deliberately fixing the linear encoding mappings, but generating them randomly.

So far, we have only shown that there exist linear encoders over finite non-field rings that are as good as their field counterparts. In the next section, Problem 1 is considered with an arbitrary g. It will be demonstrated that linear coding over finite non-field rings can strictly outperform its field counterpart for encoding some discrete functions, and there are infinitely many such functions.

6. Application: Source Coding for Computing

The problem of Source Coding for Computing, Problem 1, with an arbitrary g is addressed in this section. Some advantages of LCoR (compared to LCoF) will be demonstrated. We begin with establishing the following theorem which can be recognized as a generalization of Körner–Marton [4].

Theorem 11. Let be a finite ring, and , and ’s are functions mapping to . Then , where and .

Proof. By Theorem 2, , there exists a large enough n, an matrix and a decoder , such that , if . Let ( ) be the encoder of the ith source. Upon receiving from the ith source, the decoder claims that , where , is the function, namely , subject to computation. The probability of decoding error is Therefore, all , where , is achievable, i.e., . ☐

Corollary 2. In Theorem 11, let . We have if either of the following conditions holds:

- 1. is isomorphic to a finite field;
- 2. is isomorphic to a ring containing one and only one proper non-trivial left ideal with , and

Proof. If either (1) or (2) holds, then it is guaranteed that in Theorem 11. The statement follows. ☐

Remark 20. By Lemma A2, examples of non-field rings satisfying (2) in Corollary 2 include

- (1) with satisfying
- (2) with satisfying (89),

etc.

Interested readers can figure out even more explicit examples deduced from Lemma A1.

Remark 21. If is isomorphic to and is the modulo-two sum, then Corollary 2 recovers the theorem of Körner–Marton [4]. If instead is (isomorphic to) a field, it becomes a special case of ([7], Theorem III.1). Actually, almost all the results in [6,7] can be reproved in the setting of rings in a parallel fashion.

We claim that there are functions g for which LCoR outperforms LCoF; in fact, there are infinitely many such g’s. To prove this, some definitions are required for the mechanics of our argument.

Definition 8. Let and be two functions. If there exist bijections , , and , such that then and are said to be equivalent (via and ν).

Definition 9. Given function , and let . The restriction of g on is defined to be the function such that .

Lemma 4. Let and Ω be some finite sets. For any discrete function there always exist a finite ring (field) and a polynomial function such that for some injections () and .

Proof. There are several possible proofs of this lemma. One is provided in Appendix B. ☐

Remark 22. Up to equivalence, a function can be presented in many different formats. For example, the function defined on (with ordering ) can either be seen as on or be treated as the restriction of defined on to the domain .

Lemma 4 implies that any discrete function defined on a finite domain is equivalent to a restriction of some polynomial function over some finite ring (field). As a consequence, we can restrict Problem 1 to all polynomial functions. This polynomial approach offers valuable insight into the general problem, because the algebraic structure of a polynomial function is clearer than that of an arbitrary function. We often call in Lemma 4 a polynomial presentation of g. On the other hand, the given by (78) is named a nomographic function over (by terminology borrowed from [22]); it is said to be a nomographic presentation of g if g is equivalent to a restriction of it.

Lemma 5. Let and Ω be some finite sets. For any discrete function , there exists a nomographic function over some finite ring (field) such that for some injections () and .

Proof. There are several proofs of this lemma. One is provided in Appendix B. ☐
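A simple way to build a nomographic presentation over the ring Z_N, with N the product of the alphabet sizes, is mixed-radix encoding: each input is mapped to a multiple of a distinct weight, so the sum identifies the whole input tuple and an outer map can read off the function value. The sketch below assumes format (78) has the shape h(k1(x1) + ... + ks(xs)); it is not claimed to be the construction of Appendix B, it stores h as a lookup table rather than as a polynomial, and `nomographic_presentation` is a hypothetical helper name.

```python
from itertools import product

def nomographic_presentation(g, alphabets):
    """Return (N, ks, h) with g(x) = h[(sum_i ks[i][x_i]) mod N] over Z_N.

    g is a dict on the full product of the alphabets.
    ks[i] maps the i-th alphabet injectively into Z_N (mixed-radix digits).
    """
    N = 1
    ks = []
    for alphabet in alphabets:
        # The weight of coordinate i is the product of the previous sizes.
        ks.append({x: idx * N for idx, x in enumerate(alphabet)})
        N *= len(alphabet)
    h = {}
    for x in product(*alphabets):
        h[sum(k[xi] for k, xi in zip(ks, x)) % N] = g[x]
    return N, ks, h

# Toy function chosen for illustration: max on {0,1,2} x {0,1}.
alphabets = [[0, 1, 2], [0, 1]]
g = {x: max(x) for x in product(*alphabets)}
N, ks, h = nomographic_presentation(g, alphabets)

for x in g:
    total = sum(k[xi] for k, xi in zip(ks, x)) % N
    print(x, total, h[total], g[x])
```

This construction is wasteful in alphabet size, since N grows with the product of all alphabet sizes; the point of the later comparison is precisely that much smaller rings can suffice for specific functions.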

Lemma 5 advances Lemma 4 by claiming that a discrete function with a finite domain is always equivalent to a restriction of some nomographic function. From this, it is seen that Theorem 11 and Corollary 2 have presented a universal solution to Problem 1.

Given some finite ring , let of format (78) be a nomographic presentation of g. We say that the region given by (79) is achievable for computing g in the sense of Körner–Marton. From Theorem 13 given later, we know that might not be the largest achievable region one can obtain for computing g. However, still captures the ability of linear coding over when used for computing g. In other words, is the region purely achieved with linear coding over for computing g. On the other hand, regions from Theorem 13 are achieved by combining the linear coding and the standard random coding techniques. Therefore, it is reasonable to compare LCoR with LCoF in the sense of Körner–Marton.

We show that linear coding over finite rings, non-field rings in particular, strictly outperforms its field counterpart, LCoF, in the following example.

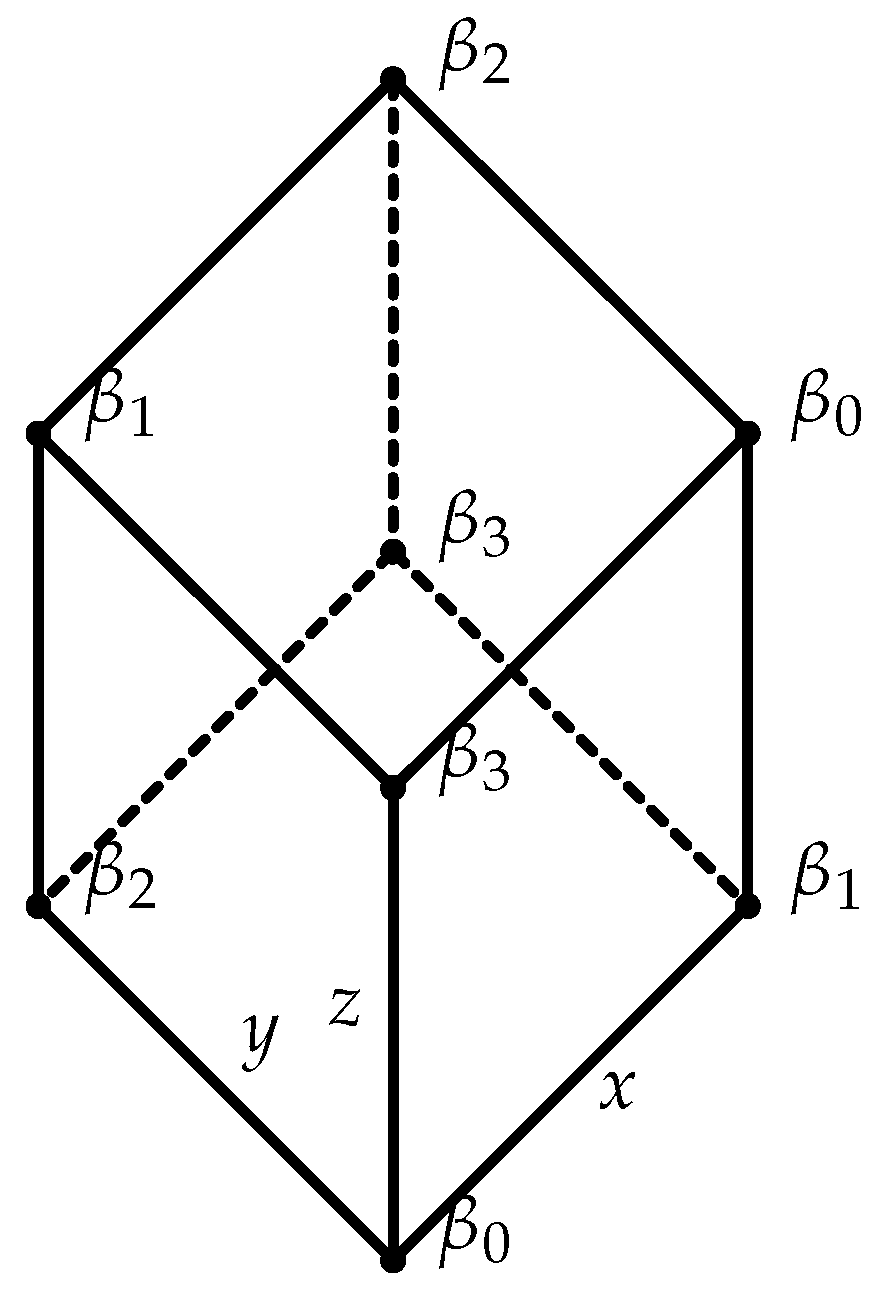

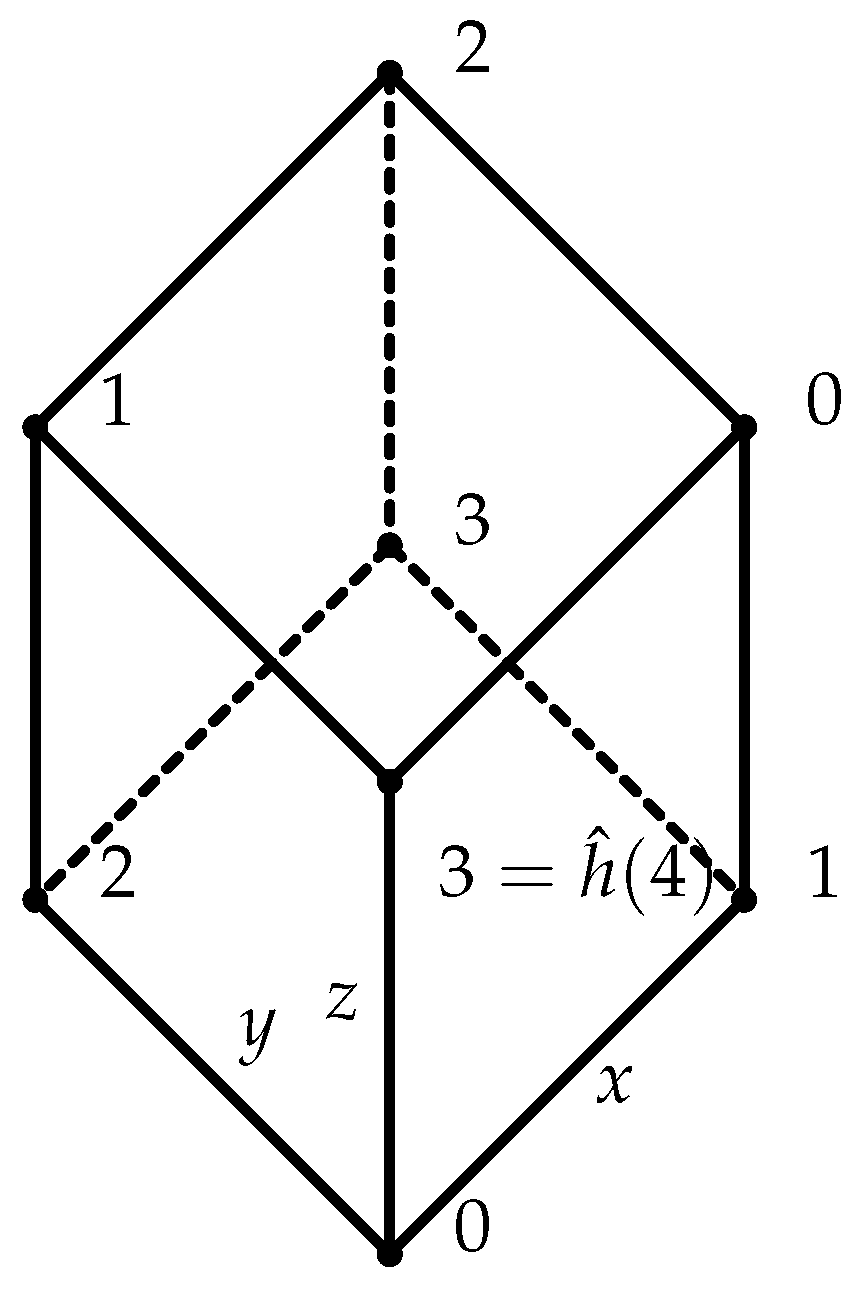

Example 2 ([23]). Let (Figure 1) be a function such that Define and by respectively. Obviously, g is equivalent to (Figure 2) via and ν. However, by Proposition 4, there exists no of format (78) so that g is equivalent to any restriction of . Although Lemma 5 ensures that there always exists a bigger field such that g admits a presentation of format (78), the size q must be strictly bigger than 4. For instance, let Then, g has presentation (Figure 3) via and defined (symbol-wise) by (95).

Proposition 4. There exists no polynomial function of format (78), such that a restriction of is equivalent to the function g defined by (94).

Proof. Suppose , where , are injections, and with for all feasible i. We claim that and h are both surjective, since In particular, h is bijective. Therefore, , i.e., g admits a presentation . A contradiction to Lemma A3. ☐
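The flavour of Proposition 4 can be checked by exhaustive search on a small stand-in. The sketch below rests on several assumptions, since the definition (94) is not reproduced here: it takes g to be the restriction of x + 2y + 3z over Z_4 to {0,1}³, assumes format (78) has the shape h(k1(x) + k2(y) + k3(z)), and models the four-element field by its additive group (bitwise XOR on two-bit integers), which is all that the inner sum uses. The helper `admits_nomographic` is hypothetical.

```python
from itertools import product

DOMAIN = list(product((0, 1), repeat=3))

# ASSUMPTION: stand-in for the function g of Example 2.
g = {(x, y, z): (x + 2 * y + 3 * z) % 4 for x, y, z in DOMAIN}

def admits_nomographic(g, elements, add):
    """True if some k1, k2, k3: {0,1} -> elements and some h satisfy
    g(x, y, z) = h(k1(x) + k2(y) + k3(z)), with '+' given by add."""
    maps = [dict(zip((0, 1), image)) for image in product(elements, repeat=2)]
    for k1, k2, k3 in product(maps, repeat=3):
        h, consistent = {}, True
        for x, y, z in DOMAIN:
            total = add(add(k1[x], k2[y]), k3[z])
            # h must be well defined: equal sums force equal values of g.
            if h.setdefault(total, g[(x, y, z)]) != g[(x, y, z)]:
                consistent = False
                break
        if consistent:
            return True
    return False

over_f4 = admits_nomographic(g, range(4), lambda a, b: a ^ b)        # additive group of F_4
over_z4 = admits_nomographic(g, range(4), lambda a, b: (a + b) % 4)  # ring Z_4
over_z5 = admits_nomographic(g, range(5), lambda a, b: (a + b) % 5)  # field Z_5
print(over_f4, over_z4, over_z5)
```

Under these assumptions the search finds no presentation over the four-element field, while presentations exist over the ring Z_4 and over the five-element field, in line with the alphabet sizes discussed in the text.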

As a consequence of Proposition 4, in the sense of Körner–Marton, in order to use LCoF to encode function g, the alphabet sizes of the three encoders need to be at least 5. However, LCoR offers a solution in which the alphabet sizes are 4, strictly smaller than with LCoF. Most importantly, the region achieved with linear coding over any finite field is always a subset of the one achieved with linear coding over . This is proved in the following proposition.

Proposition 5. Let g be the function defined by (94), be the sample space of and be the distribution of . If satisfying (89), then, in the sense of Körner–Marton, the region achieved with linear coding over contains the one, that is , obtained with linear coding over any finite field for computing g. Moreover, if is the whole domain of g, then .

Proof. Let be a polynomial presentation of g with format (78). By Corollary 2 and Remark 20, we have Assume that , where and are injections. Obviously, is a function of . Hence, On the other hand, . Therefore, and . In addition, we claim that , where , is not injective. Otherwise, , where , is bijective, hence, . A contradiction to Lemma A3. Consequently, . If , then (100) as well as (101) hold strictly, thus, . ☐

A more intuitive comparison (which is not as conclusive as Proposition 5) can be identified from the presentations of g given in Figure 2 and Figure 3. According to Corollary 2, linear encoders over field achieve The one achieved by linear encoders over ring is Clearly, , thus, contains . Furthermore, as long as is strictly larger than , since . To be specific, assuming that satisfies Table 1, we have

Based on Propositions 4 and 5, we conclude that LCoR dominates LCoF, in terms of achieving better coding rates with smaller alphabet sizes of the encoders for computing g. As a direct conclusion, we have:

Theorem 12. In the sense of Körner–Marton, LCoF is not optimal.

Remark 23. The key property underlying the proof of Proposition 5 is that the characteristic of a finite field must be a prime while the characteristic of a finite ring can be any positive integer larger than or equal to 2. This implies that it is possible to construct infinitely many discrete functions for which using LCoF always leads to a suboptimal achievable region compared to linear coding over finite non-field rings. Examples include for and prime (note: the characteristic of is which is not a prime). One can always find an explicit distribution of sources for which linear coding over strictly dominates linear coding over each and every finite field.

As mentioned, given by (79) is sometimes strictly smaller than . This was first shown by Ahlswede–Han [5] for the case of g being the modulo-two sum. Their approach combines the linear coding technique over a binary field with the standard random coding technique. In the following, we generalize the result of Ahlswede–Han ([5], Theorem 10) to the setting where g is arbitrary and, at the same time, LCoF is replaced by its generalized version, LCoR.

Consider function admitting where and ’s are functions mapping to . By Lemma 5, a discrete function with a finite domain is always equivalent to a restriction of some function of format (107). We call from (107) a pseudo nomographic function over ring .

Theorem 13. Let . If is of format (107), and satisfying where , ; , , ’s are discrete random variables such that and , , then .

Proof. The proof can be completed by applying the tricks from Lemmas 2 and 3 to the approach generalized from Ahlswede–Han ([5], Theorem 10). Details are found in Appendix C. ☐

Remark 24. The achievable region given by (108) always contains the SW region. Moreover, it is in general larger than the from (79). If is the modulo-two sum, namely and ’s are identity functions for all , then (108) reduces to the region of Ahlswede–Han ([5], Theorem 10).