Opposition-Based Adaptive Fireworks Algorithm

Abstract

1. Introduction

2. Opposition-Based Adaptive Fireworks Algorithm

2.1. Adaptive Fireworks Algorithm

| Algorithm 1 Generating Explosion Sparks |

| 1: for j = 1 to Si do |

| 2: for each dimension k = 1, 2, …, d do |

| 3: obtain r1 from U(0, 1) |

| 4: if r1 < 0.5 then |

| 5: obtain r from U(−1, 1) |

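| 6: set dimension k of spark j to (dimension k of firework i) + r × Ai |

| 7: if this value lies outside the search range of dimension k then |

| 8: obtain r again from U(0, 1) |

| 9: reset it to (lower bound) + r × (upper bound − lower bound) of dimension k |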

| 10: end if |

| 11: end if |

| 12: end for |

| 13: end for |

| 14: return the Si explosion sparks |
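The following NumPy sketch mirrors Algorithm 1 for a single firework. The exact expressions in lines 6 and 9 are not legible in this copy, so the displacement by r × Ai and the uniform re-draw of out-of-range coordinates are assumptions taken from the explosion operator of the enhanced fireworks algorithm (EFWA). The function and argument names (`explosion_sparks`, `lb`, `ub`) are hypothetical.

```python
import numpy as np


def explosion_sparks(firework, amplitude, n_sparks, lb, ub, rng):
    """Generate n_sparks explosion sparks around one firework (sketch of Algorithm 1)."""
    d = firework.size
    sparks = np.empty((n_sparks, d))
    for j in range(n_sparks):                          # line 1
        spark = firework.copy()
        for k in range(d):                             # line 2
            if rng.random() < 0.5:                     # lines 3-4: perturb with probability 0.5
                r = rng.uniform(-1.0, 1.0)             # line 5
                spark[k] = firework[k] + r * amplitude # assumed form of line 6
                if spark[k] < lb[k] or spark[k] > ub[k]:   # assumed condition of line 7
                    r = rng.uniform(0.0, 1.0)              # line 8
                    spark[k] = lb[k] + r * (ub[k] - lb[k]) # assumed mapping of line 9
        sparks[j] = spark
    return sparks


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    lb, ub = np.full(5, -10.0), np.full(5, 10.0)
    x = rng.uniform(lb, ub)
    print(explosion_sparks(x, amplitude=2.0, n_sparks=4, lb=lb, ub=ub, rng=rng))
```

Because each coordinate is perturbed only with probability 0.5, a spark typically differs from its firework in about half of the d dimensions.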

| Algorithm 2 Generating Gaussian Sparks |

| 1: for j = 1 to NG do |

| 2: Randomly choose i from 1, 2, ..., m |

| 3: obtain r from N(0, 1) |

| 4: for each dimension k = 1, 2, …, d do |

| 5: |

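| 6: if this value lies outside the search range of dimension k then |

| 7: obtain r′ from U(0, 1) |

| 8: reset it to (lower bound) + r′ × (upper bound − lower bound) of dimension k |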

| 9: end if |

| 10: end for |

| 11: end for |

| 12: return the NG Gaussian sparks |
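A comparable sketch of Algorithm 2. Line 5, the Gaussian update itself, is missing from this copy; the code assumes the EFWA-style rule that moves a randomly chosen firework toward the best firework, x_k + r × (best_k − x_k), with a single r drawn from N(0, 1) per spark as in line 3. The out-of-range repair uses a fresh uniform number so that the Gaussian coefficient is not overwritten for later dimensions. All names are hypothetical.

```python
import numpy as np


def gaussian_sparks(fireworks, best, n_gauss, lb, ub, rng):
    """Sketch of Algorithm 2 under an ASSUMED EFWA-style update in line 5."""
    m, d = fireworks.shape
    sparks = np.empty((n_gauss, d))
    for j in range(n_gauss):                     # line 1
        i = rng.integers(m)                      # line 2: pick a random firework
        r = rng.normal(0.0, 1.0)                 # line 3: one Gaussian coefficient per spark
        spark = fireworks[i].copy()
        for k in range(d):                       # line 4
            # assumed line 5: move toward the best firework by the factor r
            spark[k] = fireworks[i, k] + r * (best[k] - fireworks[i, k])
            if spark[k] < lb[k] or spark[k] > ub[k]:                       # line 6
                spark[k] = lb[k] + rng.uniform(0.0, 1.0) * (ub[k] - lb[k]) # lines 7-8
        sparks[j] = spark
    return sparks
```

Under this assumed rule, a firework that already sits at the best location produces Gaussian sparks at that same location.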

| Algorithm 3 Pseudo-Code of AFWA |

| 1: randomly initialize m fireworks |

| 2: evaluate their fitness |

| 3: repeat |

| 4: compute the amplitude Ai of each firework (except A*, the amplitude of the best firework) based on Equation (1) |

| 5: compute the number of sparks Si based on Equations (2) and (3) |

| 6: generate explosion sparks according to Algorithm 1 |

| 7: generate Gaussian sparks according to Algorithm 2 |

| 8: evaluate the fitness of all sparks |

| 9: update A* based on Equation (4) |

| 10: retain the best spark as a firework |

| 11: randomly select the other m − 1 fireworks |

| 12: until the termination condition is satisfied |

| 13: return the best fitness and its location |
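Steps 4 and 5 of Algorithm 3 depend on Equations (1)–(3), which are not reproduced in this section. The sketch below assumes they follow the amplitude and spark-number formulas of the original fireworks algorithm of Tan and Zhu for minimisation: Ai = Â·(f(xi) − ymin + ε)/(Σj (f(xj) − ymin) + ε), Si = Me·(ymax − f(xi) + ε)/(Σj (ymax − f(xj)) + ε), with Si then bounded to [round(a·Me), round(b·Me)]. The parameter values are illustrative placeholders, and A* from Equation (4) is not modelled.

```python
import numpy as np


def amplitudes_and_counts(fitness, a_hat=40.0, m_e=50, a=0.04, b=0.8):
    """ASSUMED FWA-style forms of Equations (1)-(3); smaller fitness is better."""
    fitness = np.asarray(fitness, dtype=float)
    eps = np.finfo(float).eps
    y_min, y_max = fitness.min(), fitness.max()
    # Equation (1)-style: worse fireworks receive larger amplitudes.
    amps = a_hat * (fitness - y_min + eps) / (np.sum(fitness - y_min) + eps)
    # Equation (2)-style: better fireworks receive more sparks.
    raw = m_e * (y_max - fitness + eps) / (np.sum(y_max - fitness) + eps)
    # Equation (3)-style bounds: clip each rounded count to [round(a*m_e), round(b*m_e)].
    counts = np.clip(np.round(raw), round(a * m_e), round(b * m_e)).astype(int)
    return amps, counts


if __name__ == "__main__":
    amps, counts = amplitudes_and_counts([1.0, 2.0, 3.0, 10.0])
    print(amps, counts)
```

Under these formulas the best firework gets an amplitude of essentially ε, which is the situation the separate amplitude A* of Equation (4) is meant to handle.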

2.2. Opposition-Based Learning

2.3. Opposition-Based Adaptive Fireworks Algorithm

2.3.1. Opposition-Based Population Initialization

| Algorithm 4 Opposition-Based Population Initialization |

| 1: randomly initialize the fireworks pop with a size of m |

| 2: calculate the quasi-opposite fireworks Qpop based on Equation (6) |

| 3: evaluate the fitness of all 2 × m fireworks |

| 4: return the m fittest individuals from {pop ∪ Qpop} as the initial fireworks |
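Algorithm 4 can be sketched as follows. Equation (6) is not reproduced in this section; the code assumes the quasi-opposite point of quasi-oppositional differential evolution (Rahnamayan et al.), drawn uniformly between the centre of the search range, (lb + ub)/2, and the opposite point lb + ub − x. The names `quasi_opposite` and `opposition_init` are hypothetical, and minimisation is assumed.

```python
import numpy as np


def quasi_opposite(pop, lo, hi, rng):
    """Quasi-opposite points: uniform between the centre (lo+hi)/2 and the opposite lo+hi-x."""
    centre = (lo + hi) / 2.0
    opposite = lo + hi - pop
    return centre + rng.random(pop.shape) * (opposite - centre)


def opposition_init(f, m, lb, ub, rng):
    """Sketch of Algorithm 4: keep the m fittest of pop and its quasi-opposite Qpop."""
    pop = lb + rng.random((m, lb.size)) * (ub - lb)   # line 1: random fireworks
    qpop = quasi_opposite(pop, lb, ub, rng)           # line 2: assumed Equation (6)
    union = np.vstack([pop, qpop])
    fitness = np.array([f(x) for x in union])         # line 3: 2 x m evaluations
    return union[np.argsort(fitness)[:m]]             # line 4: m fittest, best first


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    lb, ub = np.full(3, -10.0), np.full(3, 10.0)
    print(opposition_init(lambda x: float(np.sum(x ** 2)), 4, lb, ub, rng))
```

Because selection is made from the union, the initial population is never worse, in best fitness, than the purely random population it starts from.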

2.3.2. Opposition-Based Generation Jumping

| Algorithm 5 Opposition-Based Generation Jumping |

| 1: if rand(0, 1) < Jr then |

| 2: dynamically calculate the boundaries of the current m fireworks |

| 3: calculate the quasi-opposite fireworks Qpop based on Equation (6) |

| 4: evaluate the fitness of all 2 × m fireworks |

| 5: pop ← the m fittest individuals from {pop ∪ Qpop} |

| 6: end if |

| 7: return pop as the current fireworks |
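Generation jumping reuses the quasi-opposite construction, but with boundaries recomputed from the current population. In this sketch the per-dimension minimum and maximum of the m fireworks serve as those dynamic boundaries, which is an assumption in the spirit of quasi-oppositional differential evolution; `jr` stands for the jumping rate Jr, and the function names are hypothetical. When no jump occurs, the population is returned unchanged.

```python
import numpy as np


def quasi_opposite(pop, lo, hi, rng):
    """Uniform point between the centre (lo+hi)/2 and the opposite point lo+hi-x."""
    centre = (lo + hi) / 2.0
    return centre + rng.random(pop.shape) * ((lo + hi - pop) - centre)


def generation_jumping(f, pop, jr, rng):
    """Sketch of Algorithm 5 (minimisation)."""
    if rng.random() >= jr:
        return pop                                   # no jump in this generation
    lo, hi = pop.min(axis=0), pop.max(axis=0)        # line 2: dynamic boundaries
    qpop = quasi_opposite(pop, lo, hi, rng)          # line 3: assumed Equation (6)
    union = np.vstack([pop, qpop])
    fitness = np.array([f(x) for x in union])        # line 4: 2 x m evaluations
    return union[np.argsort(fitness)[: len(pop)]]    # line 5: keep the m fittest
```

Shrinking the boundaries to the current population keeps the jump inside the region the swarm already occupies, rather than sampling from the whole initial range.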

2.3.3. Opposition-Based Adaptive Fireworks Algorithm

| Algorithm 6 Pseudo-Code of OAFWA |

| 1: initialize the fireworks by opposition-based learning according to Algorithm 4 |

| 2: repeat |

| 3: compute the amplitude Ai of each firework (except A*, the amplitude of the best firework) based on Equation (1) |

| 4: compute the number of sparks Si based on Equations (2) and (3) |

| 5: generate explosion sparks according to Algorithm 1 |

| 6: generate Gaussian sparks according to Algorithm 2 |

| 7: evaluate the fitness of all sparks |

| 8: update A* based on Equation (4) |

| 9: retain the best spark as a firework |

| 10: randomly select the other m − 1 fireworks |

| 11: perform opposition-based generation jumping according to Algorithm 5 |

| 12: until the termination condition is satisfied |

| 13: return the best fitness and its location |
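Steps 9 and 10 of Algorithm 6 (steps 10 and 11 of Algorithm 3) amount to an elitist random selection: the best candidate always survives and the remaining m − 1 places are filled at random. A minimal sketch, assuming minimisation and a candidate pool made of the sparks of the current generation (possibly together with the current fireworks); `select_next_fireworks` is a hypothetical name.

```python
import numpy as np


def select_next_fireworks(candidates, fitness, m, rng):
    """Keep the best candidate, then draw the other m - 1 uniformly without replacement."""
    fitness = np.asarray(fitness)
    best = int(np.argmin(fitness))                            # step 9: retain the best
    others = np.delete(np.arange(len(candidates)), best)
    chosen = rng.choice(others, size=m - 1, replace=False)    # step 10: random m - 1
    return candidates[np.concatenate(([best], chosen))]


if __name__ == "__main__":
    rng = np.random.default_rng(5)
    cands = rng.uniform(-1, 1, size=(10, 2))
    print(select_next_fireworks(cands, np.sum(cands ** 2, axis=1), 3, rng))
```

Random selection is much cheaper than distance-based selection, since it needs no pairwise distances between candidates.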

3. Benchmark Functions and Implementation

3.1. Benchmark Functions

3.2. Success Criterion

3.3. Initialization

4. Simulation Studies and Discussions

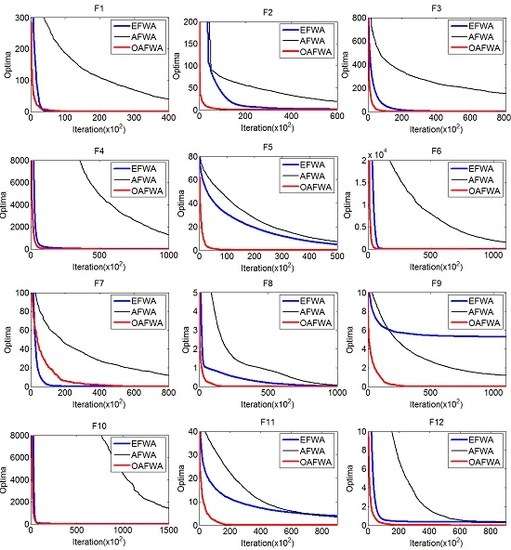

4.1. Comparison with FWA-Based Algorithms

4.1.1. Comparison with AFWA

4.1.2. Comparison with FWA-Based Algorithms

4.2. Comparison with Other Swarm Intelligence Algorithms

5. Conclusions

Acknowledgments

Conflicts of Interest

References

- Dorigo, M. Learning and Natural Algorithms. Ph.D. Thesis, Politecnico di Milano, Milan, Italy, 1992.

- Kennedy, J.; Eberhart, R.C. Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks, Piscataway, NJ, USA, 27 November–1 December 1995; pp. 1942–1948.

- Chih, M.C.; Yeh, L.L.; Li, F.C. Particle swarm optimization for the economic and economic statistical designs of the control chart. Appl. Soft Comput. 2011, 11, 5053–5067.

- Chih, M.C.; Lin, C.J.; Chern, M.S.; Ou, T.Y. Particle swarm optimization with time-varying acceleration coefficients for the multidimensional knapsack problem. Appl. Math. Model. 2014, 38, 1338–1350.

- Chih, M.C. Self-adaptive check and repair operator-based particle swarm optimization for the multidimensional knapsack problem. Appl. Soft Comput. 2015, 26, 378–389.

- Storn, R.; Price, K. Differential evolution – A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Yang, X.S. Firefly algorithms for multimodal optimization. In Proceedings of the 5th International Conference on Stochastic Algorithms: Foundation and Applications, Sapporo, Japan, 26–28 October 2009; Volume 5792, pp. 169–178.

- Yang, X.S. A new metaheuristic bat-inspired algorithm. In Nature Inspired Cooperative Strategies for Optimization (NICSO 2010); Gonzalez, J.R., Ed.; Springer: Berlin, Germany, 2010; pp. 65–74.

- Tan, Y.; Zhu, Y.C. Fireworks algorithm for optimization. In Advances in Swarm Intelligence; Springer: Berlin, Germany, 2010; pp. 355–364.

- Janecek, A.; Tan, Y. Using population based algorithms for initializing nonnegative matrix factorization. In Advances in Swarm Intelligence; Springer: Berlin, Germany, 2011; pp. 307–316.

- Gao, H.Y.; Diao, M. Cultural firework algorithm and its application for digital filters design. Int. J. Model. Identif. Control 2011, 4, 324–331.

- Wen, R.; Mi, G.Y.; Tan, Y. Parameter optimization of local-concentration model for spam detection by using fireworks algorithm. In Proceedings of the 4th International Conference on Swarm Intelligence, Harbin, China, 12–15 June 2013; pp. 439–450.

- Imran, A.M.; Kowsalya, M.; Kothari, D.P. A novel integration technique for optimal network reconfiguration and distributed generation placement in power distribution networks. Int. J. Electr. Power Energy Syst. 2014, 63, 461–472.

- Pholdee, N.; Bureerat, S. Comparative performance of meta-heuristic algorithms for mass minimisation of trusses with dynamic constraints. Adv. Eng. Softw. 2014, 75, 1–13.

- Li, H.; Bai, P.; Xue, J.; Zhu, J.; Zhang, H. Parameter estimation of chaotic systems using fireworks algorithm. In Advances in Swarm Intelligence; Springer: Berlin, Germany, 2015; pp. 457–467.

- Liu, Z.B.; Feng, Z.R.; Ke, L.J. Fireworks algorithm for the multi-satellite control. In Proceedings of the 2015 IEEE Congress on Evolutionary Computation, Sendai, Japan, 25–28 May 2015; pp. 1280–1286.

- Zheng, S.Q.; Janecek, A.; Tan, Y. Enhanced fireworks algorithm. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation, Cancun, Mexico, 20–23 June 2013; pp. 2069–2077.

- Li, J.Z.; Zheng, S.Q.; Tan, Y. Adaptive fireworks algorithm. In Proceedings of the 2014 IEEE Congress on Evolutionary Computation, Beijing, China, 6–11 July 2014; pp. 3214–3221.

- Tizhoosh, H.R. Opposition-Based Learning: A New Scheme for Machine Intelligence. In Proceedings of the 2005 International Conference on Computational Intelligence for Modeling, Control and Automation, Vienna, Austria, 28–30 November 2005; pp. 695–701.

- Rahnamayan, S.; Tizhoosh, H.R.; Salama, M.M. Opposition-based differential evolution algorithms. In Proceedings of the 2006 IEEE Congress on Evolutionary Computation, Vancouver, BC, Canada, 16–21 July 2006; pp. 7363–7370.

- Rahnamayan, S.; Tizhoosh, H.R.; Salama, M.M. Quasi-oppositional differential evolution. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; pp. 2229–2236.

- Wang, H.; Liu, Y.; Zeng, S.Y.; Li, H.; Li, C.H. Opposition-based particle swarm algorithm with Cauchy mutation. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; pp. 4750–4756.

- Malisia, A.R.; Tizhoosh, H.R. Applying opposition-based ideas to the ant colony system. In Proceedings of the 2007 IEEE Swarm Intelligence Symposium, Honolulu, HI, USA, 1–5 April 2007; pp. 182–189.

- Yu, S.H.; Zhu, S.L.; Ma, Y. Enhancing firefly algorithm using generalized opposition-based learning. Computing 2015, 97, 741–754.

- Zhao, J.; Lv, L.; Sun, H. Artificial bee colony using opposition-based learning. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2015; Volume 329, pp. 3–10.

- Ahandani, M.A.; Alavi-Rad, H. Opposition-based learning in shuffled frog leaping: An application for parameter identification. Inf. Sci. 2015, 291, 19–42.

- Mitic, M.; Miljkovic, Z. Chaotic fruit fly optimization algorithm. Knowl.-Based Syst. 2015, 89, 446–458.

- Derrac, J.; Garcia, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

- Brest, J.; Greiner, S.; Boskovic, B.; Mernik, M.; Zumer, V. Self-adapting control parameters in differential evolution: A comparative study on numerical benchmark problems. IEEE Trans. Evol. Comput. 2006, 10, 646–657.

- Zambrano-Bigiarini, M.; Clerc, M.; Rojas, R. Standard particle swarm optimization 2011 at CEC-2013: A baseline for future PSO improvements. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation (CEC), Cancun, Mexico, 20–23 June 2013; pp. 2337–2344.

- Yılmaz, S.; Küçüksille, E.U. A new modification approach on bat algorithm for solving optimization problems. Appl. Soft Comput. 2015, 28, 259–275.

- Yang, X.S. Nature-Inspired Metaheuristic Algorithms, 2nd ed.; Luniver Press: Frome, UK, 2010; pp. 81–96.

| Function | Dimension | Range |
|---|---|---|
| F1 | 40 | [−10, 10] |
| F2 | 40 | [−10, 10] |
| F3 | 40 | [−10, 10] |
| F4 | 40 | [−30, 30] |
| F5 | 40 | [−100, 100] |
| F6 | 40 | [−100, 100] |
| F7 | 40 | [−1, 1] |
| F8 | 40 | [−100, 100] |
| F9 | 40 | [−10, 10] |
| F10 | 40 | [−10, 10] |
| F11 | 40 | [−10, 10] |
| F12 | 40 | [−10, 10] |

| Func. | Best | Mean | Median | Worst | Std. Dev. | Time (s) |
|---|---|---|---|---|---|---|
| F1 | <1 × 10⁻³¹⁵ | 2.46 × 10⁻³⁴ | 1.91 × 10⁻³⁴ | 1.15 × 10⁻³³ | 2.14 × 10⁻³⁴ | 3.23 |
| F2 | <1 × 10⁻³¹⁵ | 8.21 × 10⁻¹⁷ | 3.26 × 10⁻²³ | 5.31 × 10⁻¹⁶ | 1.22 × 10⁻¹⁶ | 5.91 |
| F3 | <1 × 10⁻³¹⁵ | 3.56 × 10⁻³⁸ | 1.95 × 10⁻³⁸ | 2.74 × 10⁻³⁷ | 5.32 × 10⁻³⁸ | 30.56 |
| F4 | <1 × 10⁻³¹⁵ | 3.28 × 10⁻³⁸ | 2.52 × 10⁻⁴² | 1.94 × 10⁻³⁷ | 4.66 × 10⁻³⁸ | 7.63 |
| F5 | <1 × 10⁻³¹⁵ | 7.39 × 10⁻¹⁷ | 8.07 × 10⁻¹⁷ | 1.75 × 10⁻¹⁶ | 4.24 × 10⁻¹⁷ | 5.88 |
| F6 | <1 × 10⁻³¹⁵ | 7.17 × 10⁻⁴⁶ | 8.21 × 10⁻⁴⁷ | 3.18 × 10⁻⁴⁴ | 3.24 × 10⁻⁴⁵ | 5.54 |
| F7 | <1 × 10⁻³¹⁵ | 1.03 × 10⁻¹² | <1 × 10⁻³¹⁵ | 1.02 × 10⁻¹⁰ | 1.02 × 10⁻¹¹ | 4.62 |
| F8 | <1 × 10⁻³¹⁵ | <1 × 10⁻³¹⁵ | <1 × 10⁻³¹⁵ | <1 × 10⁻³¹⁵ | <1 × 10⁻³¹⁵ | 8.73 |
| F9 | 8.88 × 10⁻¹⁶ | 9.59 × 10⁻¹⁶ | 8.88 × 10⁻¹⁶ | 4.44 × 10⁻¹⁵ | 5.00 × 10⁻¹⁶ | 9.61 |
| F10 | <1 × 10⁻³¹⁵ | 2.00 × 10⁻⁴⁰ | <1 × 10⁻³¹⁵ | 2.15 × 10⁻³⁹ | 4.11 × 10⁻⁴⁰ | 23.63 |
| F11 | <1 × 10⁻³¹⁵ | 5.27 × 10⁻¹⁷ | 5.32 × 10⁻¹⁷ | 1.06 × 10⁻¹⁶ | 2.71 × 10⁻¹⁷ | 5.84 |
| F12 | <1 × 10⁻³¹⁵ | 1.32 × 10⁻³³ | 1.15 × 10⁻³³ | 5.11 × 10⁻³³ | 1.00 × 10⁻³³ | 7.40 |

| Func. | Best | Mean | Median | Worst | Std. Dev. | Time (s) |
|---|---|---|---|---|---|---|
| F1 | 1.36 × 10⁻⁷ | 64.4 | 63.4 | 1.57 × 10² | 36.6 | 3.23 |
| F2 | 1.25 × 10⁻³ | 1.07 | 2.43 × 10⁻¹ | 38.9 | 3.95 | 5.59 |
| F3 | 6.45 × 10¹ | 2.45 × 10² | 2.49 × 10² | 4.11 × 10² | 88.2 | 30.20 |
| F4 | 4.42 × 10³ | 1.48 × 10⁴ | 1.42 × 10⁴ | 2.54 × 10⁴ | 4.84 × 10³ | 7.50 |
| F5 | 31.0 | 43.7 | 44.2 | 52.2 | 4.86 | 5.69 |
| F6 | 1.06 × 10⁴ | 2.19 × 10⁴ | 2.19 × 10⁴ | 3.18 × 10⁴ | 3.69 × 10³ | 5.38 |
| F7 | 1.51 × 10⁻⁵ | 7.66 | 5.32 | 31.8 | 7.37 | 4.54 |
| F8 | 2.20 × 10⁻¹⁰ | 3.88 × 10⁻¹ | 9.86 × 10⁻³ | 4.68 | 7.42 × 10⁻¹ | 8.28 |
| F9 | 5.51 × 10⁻⁷ | 2.11 | 2.17 | 3.57 | 6.35 × 10⁻¹ | 9.39 |
| F10 | 7.40 × 10² | 6.75 × 10³ | 6.28 × 10³ | 2.29 × 10⁴ | 4.43 × 10³ | 23.35 |
| F11 | 2.11 × 10⁻¹ | 2.39 | 1.98 | 6.69 | 1.56 | 5.64 |
| F12 | 2.00 × 10⁻¹ | 5.58 × 10⁻¹ | 5.00 × 10⁻¹ | 1.10 | 1.85 × 10⁻¹ | 7.17 |

| Func. | Best (AFWA) | Best (OAFWA) | Accuracy Improved | Mean (AFWA) | Mean (OAFWA) | Accuracy Improved |
|---|---|---|---|---|---|---|
| F1 | 1.36 × 10⁻⁷ | <1 × 10⁻³¹⁵ | −308 | 64.4 | 2.46 × 10⁻³⁴ | −35 |
| F2 | 1.25 × 10⁻³ | <1 × 10⁻³¹⁵ | −312 | 1.07 | 8.21 × 10⁻¹⁷ | −17 |
| F3 | 64.5 | <1 × 10⁻³¹⁵ | −316 | 2.45 × 10² | 3.56 × 10⁻³⁸ | −40 |
| F4 | 4.42 × 10³ | <1 × 10⁻³¹⁵ | −318 | 1.48 × 10⁴ | 3.28 × 10⁻³⁸ | −42 |
| F5 | 31.0 | <1 × 10⁻³¹⁵ | −316 | 43.7 | 7.39 × 10⁻¹⁷ | −18 |
| F6 | 1.06 × 10⁴ | <1 × 10⁻³¹⁵ | −319 | 2.19 × 10⁴ | 7.17 × 10⁻⁴⁶ | −50 |
| F7 | 1.51 × 10⁻⁵ | <1 × 10⁻³¹⁵ | −310 | 7.66 | 1.03 × 10⁻¹² | −12 |
| F8 | 2.20 × 10⁻¹⁰ | <1 × 10⁻³¹⁵ | −305 | 3.88 × 10⁻¹ | <1 × 10⁻³¹⁵ | −314 |
| F9 | 5.51 × 10⁻⁷ | 8.88 × 10⁻¹⁶ | −9 | 2.11 | 9.59 × 10⁻¹⁶ | −16 |
| F10 | 7.40 × 10² | <1 × 10⁻³¹⁵ | −317 | 6.75 × 10³ | 2.00 × 10⁻⁴⁰ | −43 |
| F11 | 2.11 × 10⁻¹ | <1 × 10⁻³¹⁵ | −314 | 2.39 | 5.27 × 10⁻¹⁷ | −17 |
| F12 | 2.00 × 10⁻¹ | <1 × 10⁻³¹⁵ | −314 | 5.58 × 10⁻¹ | 1.32 × 10⁻³³ | −32 |
| Average | | | −288 | | | −53 |
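The "Accuracy Improved" entries appear to be the change in decimal order of magnitude from AFWA to OAFWA, i.e. the exponent of the leading digit of the OAFWA value minus that of the AFWA value (for F9 Best, 5.51 × 10⁻⁷ → 8.88 × 10⁻¹⁶ gives −16 − (−7) = −9), with "<1 × 10⁻³¹⁵" counted as 10⁻³¹⁵. This reading is inferred from the numbers rather than stated in the surviving text. The short check below uses `decimal.Decimal.adjusted()`, which returns that leading-digit exponent exactly and avoids floating-point trouble near 10⁻³¹⁵; the names `BEST`, `MEAN` and `order_change` are arbitrary.

```python
from decimal import Decimal

# (AFWA, OAFWA) pairs transcribed from the table; "<1 × 10⁻³¹⁵" is entered as 1e-315.
BEST = [("1.36e-7", "1e-315"), ("1.25e-3", "1e-315"), ("64.5", "1e-315"),
        ("4.42e3", "1e-315"), ("31.0", "1e-315"), ("1.06e4", "1e-315"),
        ("1.51e-5", "1e-315"), ("2.20e-10", "1e-315"), ("5.51e-7", "8.88e-16"),
        ("7.40e2", "1e-315"), ("2.11e-1", "1e-315"), ("2.00e-1", "1e-315")]
MEAN = [("64.4", "2.46e-34"), ("1.07", "8.21e-17"), ("2.45e2", "3.56e-38"),
        ("1.48e4", "3.28e-38"), ("43.7", "7.39e-17"), ("2.19e4", "7.17e-46"),
        ("7.66", "1.03e-12"), ("3.88e-1", "1e-315"), ("2.11", "9.59e-16"),
        ("6.75e3", "2.00e-40"), ("2.39", "5.27e-17"), ("5.58e-1", "1.32e-33")]


def order_change(afwa: str, oafwa: str) -> int:
    """Change in the exponent of the leading digit (negative means OAFWA is smaller)."""
    return Decimal(oafwa).adjusted() - Decimal(afwa).adjusted()


for name, pairs in (("Best", BEST), ("Mean", MEAN)):
    changes = [order_change(a, o) for a, o in pairs]
    print(name, changes, round(sum(changes) / len(changes)))
```

Running it reproduces every entry of both "Accuracy Improved" columns together with the averages −288 and −53.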

| | F1 | F2 | F3 | F4 | F5 | F6 | F7 | F8 | F9 | F10 | F11 | F12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| H | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| P | 4.14 × 10⁻¹⁰⁸ | 3.33 × 10⁻¹⁶⁵ | <1 × 10⁻³¹⁵ | 2.01 × 10⁻²³⁰ | 1.63 × 10⁻¹⁸¹ | 1.63 × 10⁻¹⁸¹ | 1.39 × 10⁻¹³² | 8.13 × 10⁻¹⁹⁸ | 1.01 × 10⁻²⁴⁶ | <1 × 10⁻³¹⁵ | 3.33 × 10⁻¹⁶⁵ | 2.01 × 10⁻²³⁰ |

| Func. | Best | Mean | Median | Worst | Std. Dev. | Time (s) |
|---|---|---|---|---|---|---|
| F1 | 1.66 × 10⁻³ | 2.63 × 10⁻³ | 2.63 × 10⁻³ | 3.44 × 10⁻³ | 3.83 × 10⁻⁴ | 4.34 |
| F2 | 2.07 × 10⁻¹ | 5.67 × 10⁻¹ | 2.75 × 10⁻¹ | 2.84 | 5.89 × 10⁻¹ | 8.88 |
| F3 | 7.52 × 10⁻³ | 1.44 × 10⁻² | 1.40 × 10⁻² | 2.77 × 10⁻² | 3.67 × 10⁻³ | 42.54 |
| F4 | 3.31 × 10⁻¹ | 5.22 × 10⁻¹ | 4.92 × 10⁻¹ | 8.91 × 10⁻¹ | 1.23 × 10⁻¹ | 13.55 |
| F5 | 1.62 × 10⁻¹ | 7.01 × 10⁻¹ | 2.18 × 10⁻¹ | 9.26 | 1.47 | 9.22 |
| F6 | 2.01 × 10⁻¹ | 3.28 × 10⁻¹ | 3.12 × 10⁻¹ | 4.75 × 10⁻¹ | 6.67 × 10⁻² | 9.11 |
| F7 | 3.22 × 10⁻³ | 5.04 × 10⁻³ | 5.07 × 10⁻³ | 6.87 × 10⁻³ | 6.73 × 10⁻⁴ | 6.46 |
| F8 | 6.01 × 10⁻³ | 1.63 × 10⁻² | 1.48 × 10⁻² | 4.91 × 10⁻² | 8.80 × 10⁻³ | 12.90 |
| F9 | 2.39 | 5.05 | 5.08 | 8.51 | 1.20 | 16.82 |
| F10 | 1.68 × 10⁻¹ | 2.69 × 10⁻¹ | 2.64 × 10⁻¹ | 4.57 × 10⁻¹ | 5.86 × 10⁻² | 37.31 |
| F11 | 9.73 × 10⁻¹ | 4.14 | 3.80 | 13.6 | 2.16 | 8.98 |
| F12 | 2.00 × 10⁻¹ | 3.15 × 10⁻¹ | 3.00 × 10⁻¹ | 4.00 × 10⁻¹ | 5.39 × 10⁻² | 13.46 |

| Func. | EFWA Mean Error | EFWA Sr | EFWA Rank | AFWA Mean Error | AFWA Sr | AFWA Rank | OAFWA Mean Error | OAFWA Sr | OAFWA Rank |
|---|---|---|---|---|---|---|---|---|---|
| F1 | 2.63 × 10⁻³ | 2 | 2 | 64.4 | 1 | 3 | 2.46 × 10⁻³⁴ | 100 | 1 |
| F2 | 5.67 × 10⁻¹ | 2 | 2 | 1.07 | 31 | 3 | 8.21 × 10⁻¹⁷ | 100 | 1 |
| F3 | 1.44 × 10⁻² | 0 | 2 | 2.45 × 10² | 6 | 3 | 3.56 × 10⁻³⁸ | 100 | 1 |
| F4 | 5.22 × 10⁻¹ | 0 | 2 | 1.48 × 10⁴ | 0 | 3 | 3.28 × 10⁻³⁸ | 100 | 1 |
| F5 | 7.01 × 10⁻¹ | 0 | 2 | 43.7 | 0 | 3 | 7.39 × 10⁻¹⁷ | 100 | 1 |
| F6 | 3.28 × 10⁻¹ | 0 | 2 | 2.19 × 10⁴ | 0 | 3 | 7.17 × 10⁻⁴⁶ | 100 | 1 |
| F7 | 5.04 × 10⁻³ | 100 | 2 | 7.66 | 0 | 3 | 1.03 × 10⁻¹² | 100 | 1 |
| F8 | 1.63 × 10⁻² | 0 | 2 | 3.88 × 10⁻¹ | 0 | 3 | <1 × 10⁻³¹⁵ | 100 | 1 |
| F9 | 5.05 | 0 | 3 | 2.11 | 0 | 2 | 9.59 × 10⁻¹⁶ | 100 | 1 |
| F10 | 2.69 × 10⁻¹ | 0 | 2 | 6.75 × 10³ | 0 | 3 | 2.00 × 10⁻⁴⁰ | 100 | 1 |
| F11 | 4.14 | 0 | 3 | 2.39 | 0 | 2 | 5.27 × 10⁻¹⁷ | 100 | 1 |
| F12 | 3.15 × 10⁻¹ | 0 | 2 | 5.58 × 10⁻¹ | 0 | 3 | 1.32 × 10⁻³³ | 100 | 1 |
| Average | | 8.7 | 2.17 | | 3.2 | 2.83 | | 100 | 1 |

| Algorithms | Parameters |
|---|---|
| BA | A = 0.95, r = 0.8, fmin = 0, f = 1.0 |
| DE | F = 0.5, CR = 0.9 |
| jDE | = 0.1, F ∈ [0.1, 1.0], CR ∈ [0, 1] |
| FA | = 0.9, = 0.25, = 1.0 |
| SPSO2011 | w = 0.7213, c1 = 1.1931, c2 = 1.1931 |

| Func. | BA | DE | jDE | FA | SPSO2011 | OAFWA | Iteration |
|---|---|---|---|---|---|---|---|
| F1 | 3.27 × 10⁻³ | 11.7 | 3.67 × 10⁻³⁵ | 6.45 × 10⁻⁷ | 8.15 × 10⁻²³ | 6.75 × 10⁻²³ | 65,000 |
| F2 | 30.4 | 3.37 | 3.49 × 10⁻³⁵ | 4.37 × 10⁻² | 6.27 | 2.02 × 10⁻¹⁶ | 100,000 |
| F3 | 2.10 × 10⁻² | 7.70 | 5.42 × 10⁻⁴ | 34.8 | 3.70 × 10⁻⁶ | 1.19 × 10⁻³⁸ | 190,000 |
| F4 | 1.53 × 10⁻¹ | 2.27 × 10³ | 5.48 × 10⁻⁷⁶ | 1.19 × 10⁻³ | 8.57 × 10⁻⁴ | 2.26 × 10⁻³⁸ | 140,000 |
| F5 | 32.3 | 40.5 | 27.1 | 2.28 × 10⁻² | 12.8 | 2.22 × 10⁻¹⁷ | 110,000 |
| F6 | 1.23 × 10⁵ | 3.80 × 10³ | 2.54 × 10⁻⁵⁸ | 7.37 × 10⁻⁵ | 9.85 × 10³ | 3.56 × 10⁻⁴² | 110,000 |
| F7 | 18.9 | 34.3 | 12.8 | 19.8 | 48.5 | <1 × 10⁻³¹⁵ | 80,000 |
| F8 | 1.22 | 1.07 | 9.30 × 10⁻³ | 1.78 × 10⁻³ | 8.13 × 10⁻³ | 2.41 × 10⁻¹⁶ | 120,000 |
| F9 | 6.64 | 4.48 | 5.29 × 10⁻² | 3.82 × 10⁻⁴ | 2.71 | 3.40 × 10⁻¹² | 150,000 |
| F10 | 4.82 × 10⁻¹ | 5.21 × 10³ | 2.06 × 10⁻⁴⁵ | 1.69 × 10⁻² | 3.23 × 10⁻³ | 1.17 × 10⁻⁴⁰ | 200,000 |
| F11 | 4.85 | 6.58 × 10⁻¹ | 5.66 × 10⁻¹⁶ | 8.21 × 10⁻¹ | 3.45 | 3.03 × 10⁻¹⁷ | 100,000 |
| F12 | 16.7 | 2.41 | 1.92 × 10⁻¹ | 2.58 × 10⁻¹ | 1.92 × 10⁻¹ | 6.38 × 10⁻³⁴ | 140,000 |

| Func. | BA | DE | jDE | FA | SPSO2011 | OAFWA | Iteration |
|---|---|---|---|---|---|---|---|
| F1 | 3.22 × 10⁻³ | 7.77 × 10⁻¹⁰ | 3.27 × 10⁻¹⁷ | 2.02 × 10⁻⁵ | 4.16 × 10⁻³¹ | 2.46 × 10⁻³⁴ | 65,000 |
| F2 | 7.15 | 1.28 × 10⁻¹⁵ | 1.60 × 10⁻¹⁵ | 1.51 × 10⁻¹ | 3.06 | 8.21 × 10⁻¹⁷ | 100,000 |
| F3 | 2.03 × 10⁻² | 3.83 × 10⁻⁵ | 7.91 × 10⁻² | 40.2 | 3.25 × 10⁻⁸ | 3.56 × 10⁻³⁸ | 190,000 |
| F4 | 1.23 × 10⁻¹ | 5.12 × 10⁻²² | 1.38 × 10⁻³⁷ | 1.29 × 10⁻¹ | 5.77 × 10⁻⁶ | 3.28 × 10⁻³⁸ | 140,000 |
| F5 | 25.1 | 23.9 | 7.27 | 9.04 × 10⁻² | 5.99 | 7.39 × 10⁻¹⁷ | 110,000 |
| F6 | 8.05 × 10⁴ | 2.21 × 10⁻⁶ | 2.07 × 10⁻²⁸ | 2.26 × 10⁻³ | 3.56 × 10³ | 7.17 × 10⁻⁴⁶ | 110,000 |
| F7 | 19.2 | 26.9 | 8.08 | 18.4 | 49.1 | 1.03 × 10⁻¹² | 80,000 |
| F8 | 5.25 × 10⁻¹ | 8.39 × 10⁻³ | 2.46 × 10⁻⁴ | 1.52 × 10⁻³ | 5.22 × 10⁻³ | <1 × 10⁻³¹⁵ | 120,000 |
| F9 | 5.53 | 5.39 × 10⁻¹ | 7.99 × 10⁻¹⁵ | 3.16 × 10⁻² | 1.54 | 9.59 × 10⁻¹⁶ | 150,000 |
| F10 | 4.62 × 10⁻¹ | 2.85 × 10⁻⁶ | 1.20 × 10⁻³⁰ | 8.71 × 10⁻² | 1.41 × 10⁻³ | 2.00 × 10⁻⁴⁰ | 200,000 |
| F11 | 3.78 | 2.56 × 10⁻¹⁵ | 1.01 × 10⁻³ | 9.77 × 10⁻¹ | 2.17 × 10⁻¹ | 5.27 × 10⁻¹⁷ | 100,000 |
| F12 | 11.1 | 1.59 × 10⁻¹ | 9.99 × 10⁻² | 2.28 × 10⁻¹ | 1.11 × 10⁻¹ | 1.32 × 10⁻³³ | 140,000 |

| Algorithm | F1 | F2 | F3 | F4 | F5 | F6 | F7 | F8 | F9 | F10 | F11 | F12 | Mean Rank |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BA | 5 | 6 | 4 | 5 | 5 | 6 | 3 | 6 | 6 | 5 | 6 | 5 | 5.17 |
| DE | 6 | 4 | 5 | 6 | 6 | 4 | 5 | 5 | 5 | 6 | 3 | 4 | 4.92 |
| jDE | 1 | 1 | 3 | 1 | 4 | 1 | 2 | 4 | 3 | 1 | 2 | 2 | 2.08 |
| FA | 4 | 3 | 6 | 4 | 2 | 3 | 4 | 2 | 2 | 4 | 4 | 3 | 3.42 |
| SPSO2011 | 3 | 5 | 2 | 3 | 3 | 5 | 6 | 3 | 4 | 3 | 5 | 2 | 3.67 |
| OAFWA | 2 | 2 | 1 | 2 | 1 | 2 | 1 | 1 | 1 | 2 | 1 | 1 | 1.42 |
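The last column is the arithmetic mean of each algorithm's twelve per-function ranks. A short check with the ranks transcribed from the table above (the dictionary name `RANKS` is arbitrary):

```python
# Per-function ranks (F1..F12) transcribed from the table above.
RANKS = {
    "BA":       [5, 6, 4, 5, 5, 6, 3, 6, 6, 5, 6, 5],
    "DE":       [6, 4, 5, 6, 6, 4, 5, 5, 5, 6, 3, 4],
    "jDE":      [1, 1, 3, 1, 4, 1, 2, 4, 3, 1, 2, 2],
    "FA":       [4, 3, 6, 4, 2, 3, 4, 2, 2, 4, 4, 3],
    "SPSO2011": [3, 5, 2, 3, 3, 5, 6, 3, 4, 3, 5, 2],
    "OAFWA":    [2, 2, 1, 2, 1, 2, 1, 1, 1, 2, 1, 1],
}


def mean_rank(ranks):
    """Arithmetic mean of the per-function ranks."""
    return sum(ranks) / len(ranks)


# Print the algorithms from best (lowest mean rank) to worst.
for alg, r in sorted(RANKS.items(), key=lambda kv: mean_rank(kv[1])):
    print(f"{alg:9s} {mean_rank(r):.2f}")
```

Lower is better, so the ordering printed by the loop runs from OAFWA to BA.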

| Algorithm | F1 | F2 | F3 | F4 | F5 | F6 | F7 | F8 | F9 | F10 | F11 | F12 | Mean Rank |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BA | 6 | 6 | 4 | 5 | 5 | 6 | 4 | 6 | 6 | 6 | 6 | 6 | 5.50 |
| DE | 4 | 2 | 3 | 3 | 6 | 3 | 5 | 5 | 4 | 3 | 2 | 4 | 3.67 |
| jDE | 3 | 3 | 5 | 2 | 4 | 2 | 2 | 2 | 2 | 2 | 3 | 2 | 2.67 |
| FA | 5 | 4 | 6 | 6 | 2 | 4 | 3 | 3 | 3 | 5 | 5 | 5 | 4.25 |
| SPSO2011 | 2 | 5 | 2 | 4 | 3 | 5 | 6 | 4 | 5 | 4 | 4 | 3 | 3.92 |
| OAFWA | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1.00 |

© 2016 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Gong, Chibing. Opposition-Based Adaptive Fireworks Algorithm. Algorithms 2016, 9(3), 43. https://doi.org/10.3390/a9030043