A Modified Cloud Particles Differential Evolution Algorithm for Real-Parameter Optimization

Abstract

1. Introduction

2. Background

2.1. Basic Differential Evolution Algorithm
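For reference alongside Sections 2.1.1–2.1.3, here is a minimal sketch of one generation of the classic DE/rand/1/bin scheme of Storn and Price. It covers mutation v = x_r1 + F·(x_r2 − x_r3), binomial crossover with a guaranteed index j_rand, and greedy one-to-one selection. It is a plain-Python illustration, not the authors' code. The function name `de_generation`, the defaults F = 0.5 and Cr = 0.9, and the sphere test function are illustrative choices.

```python
import random
from typing import Callable, List, Optional

Vector = List[float]


def de_generation(
    pop: List[Vector],
    fit: List[float],
    objective: Callable[[Vector], float],
    F: float = 0.5,
    Cr: float = 0.9,
    rng: Optional[random.Random] = None,
) -> None:
    """One generation of classic DE/rand/1/bin (minimization).

    Updates pop and fit in place. Needs at least 4 individuals so that three
    distinct donors, all different from the target, can be drawn.
    """
    rng = rng or random.Random()
    n, d = len(pop), len(pop[0])
    trials: List[Vector] = []
    for i in range(n):
        # Mutation: v = x_r1 + F * (x_r2 - x_r3), with r1, r2, r3 distinct and != i.
        r1, r2, r3 = rng.sample([j for j in range(n) if j != i], 3)
        v = [pop[r1][k] + F * (pop[r2][k] - pop[r3][k]) for k in range(d)]
        # Binomial crossover: take v[k] with probability Cr; index j_rand
        # guarantees that at least one component comes from the mutant.
        j_rand = rng.randrange(d)
        u = [v[k] if (rng.random() < Cr or k == j_rand) else pop[i][k] for k in range(d)]
        trials.append(u)
    # Greedy selection: each trial replaces its target if it is not worse.
    for i, u in enumerate(trials):
        fu = objective(u)
        if fu <= fit[i]:
            pop[i], fit[i] = u, fu


def sphere(x: Vector) -> float:
    return sum(t * t for t in x)


# Usage: 20 individuals on the 5-dimensional sphere function in [-5, 5]^5.
rng = random.Random(42)
pop = [[rng.uniform(-5.0, 5.0) for _ in range(5)] for _ in range(20)]
fit = [sphere(x) for x in pop]
initial_best = min(fit)
for _ in range(300):
    de_generation(pop, fit, sphere, rng=rng)
print(f"best fitness: {initial_best:.3g} -> {min(fit):.3g}")
```

All trials are built from the current population before any replacement, which matches the synchronous generation model. Note that Algorithm 1 later in this paper replaces only on strict improvement, f(u_i) < f(x_i), whereas this classic sketch accepts ties.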

2.1.1. Mutation

2.1.2. Crossover

2.1.3. Selection

2.2. Related Works

2.2.1. Adapting Control Parameters of Differential Evolution

2.2.2. Generation Strategy of Differential Evolution

2.2.3. Hybridized Versions of Differential Evolution

3. Modified Cloud Particles Differential Evolution Algorithm

3.1. The Proposed MCPDE

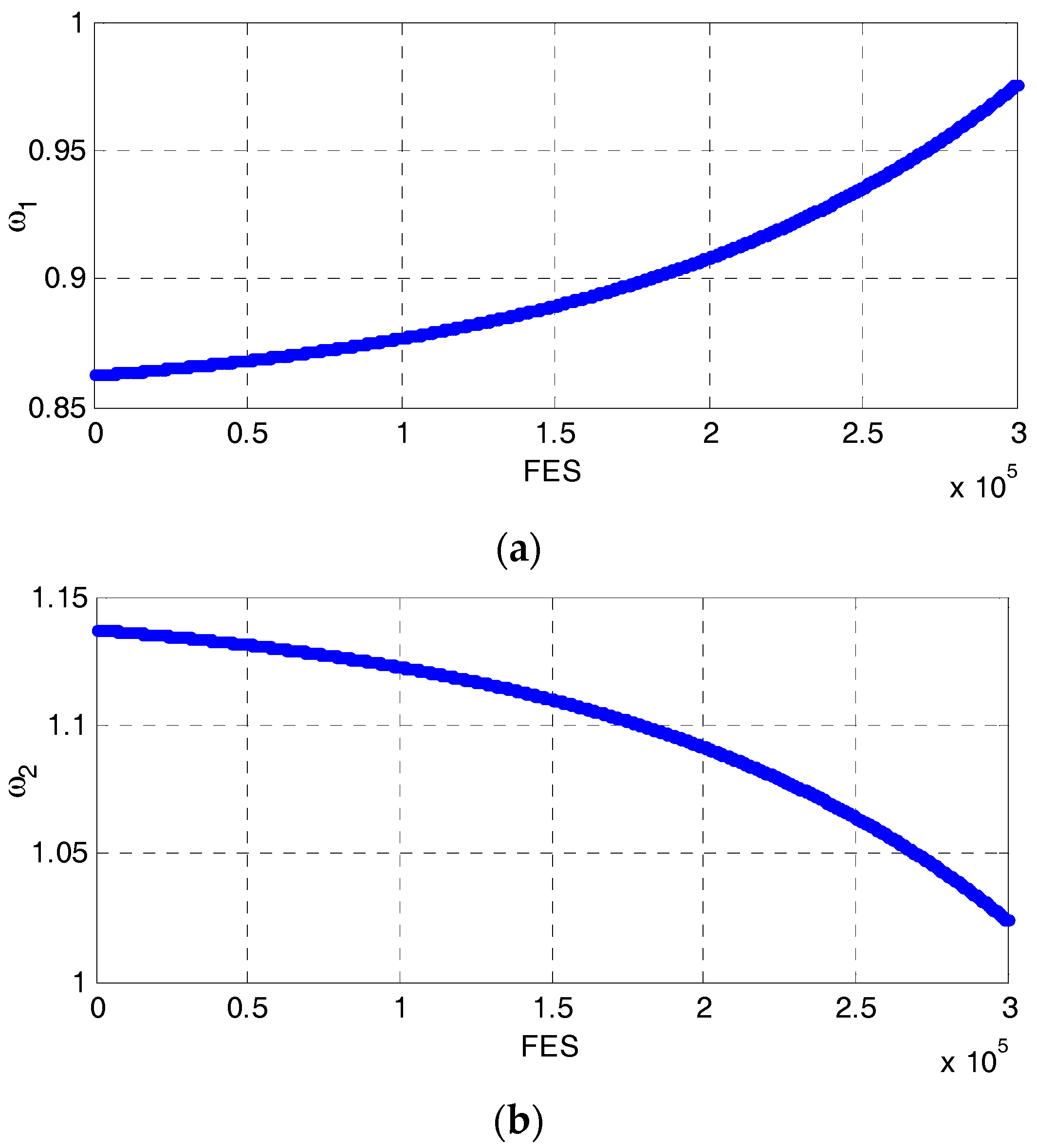

3.2. Control Parameters Assignments

3.3. Orthogonal Crossover
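Steps 7 and 8 of Algorithm 1 apply orthogonal crossover, defined by Equations (17)–(19), to a single randomly selected individual. Those equations are not reproduced in this section, so the following is only a simplified two-level illustration in the spirit of orthogonal crossover (see Wang, Cai and Zhang, Inf. Sci. 2012, in the reference list). The dimensions are split into three random contiguous groups. Each of the four rows of the orthogonal array L4(2^3) then decides, group by group, whether a candidate child inherits from the parent x or from the mutant v. The array, the grouping rule, and the names `L4`, `split_into_groups` and `orthogonal_crossover` are assumptions of this sketch. The paper's operator may use more levels, more factors, or a different way of forming the candidates.

```python
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]

# Two-level orthogonal array L4(2^3): 4 rows (candidate children), 3 columns
# (factors = dimension groups), levels 0 (take from x) and 1 (take from v).
# Any two columns contain each level pair (0,0), (0,1), (1,0), (1,1) exactly once.
L4: Tuple[Tuple[int, int, int], ...] = (
    (0, 0, 0),
    (0, 1, 1),
    (1, 0, 1),
    (1, 1, 0),
)


def split_into_groups(d: int, k: int, rng: random.Random) -> List[List[int]]:
    """Randomly partition indices 0..d-1 into k non-empty contiguous groups (needs d >= k)."""
    cuts = sorted(rng.sample(range(1, d), k - 1))
    bounds = [0, *cuts, d]
    return [list(range(bounds[g], bounds[g + 1])) for g in range(k)]


def orthogonal_crossover(
    x: Sequence[float],
    v: Sequence[float],
    objective: Callable[[Vector], float],
    rng: random.Random,
) -> Tuple[Vector, float]:
    """Evaluate the four L4-guided mixtures of x and v and return the best one."""
    groups = split_into_groups(len(x), len(L4[0]), rng)
    parents = (x, v)
    best: Vector = []
    best_f = float("inf")
    for row in L4:
        child = [0.0] * len(x)
        for group, level in zip(groups, row):
            for k in group:
                child[k] = parents[level][k]
        f_child = objective(child)
        if f_child < best_f:
            best, best_f = child, f_child
    return best, best_f


# Usage: under the sum objective the all-zero parent wins, because row (0, 0, 0)
# reproduces x and every other row copies at least two non-empty groups from v.
rng = random.Random(1)
x, v = [0.0] * 6, [1.0] * 6
best, best_f = orthogonal_crossover(x, v, sum, rng)
print(best, best_f)
```

The orthogonal array samples four well-spread combinations of the two parents instead of all 2^3 = 8. That is the usual motivation for orthogonal designs: fewer function evaluations while still covering every pair of factor levels.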

| Algorithm 1. MCPDE Algorithm | |
|---|---|
| 1: | Initialize D (number of dimensions), N (population size), …; archive $A = \emptyset$ |
| 2: | Initialize the population randomly |
| 3: | Generate the control parameters F (scaling factor) and Cr (crossover rate) according to Equations (9) and (10) |
| 4: | while the termination criteria are not met do |
| 5: | Randomly replace N/D inferior solutions by their opposite solutions according to Equation (8) |
| 6: | Generate new individuals according to Equations (5)–(7) |
| 7: | Randomly select an index i from {1, …, N} |
| 8: | Apply orthogonal crossover according to Equations (17)–(19) |
| 9: | for i = 1 to N do |
| 10: | if $f(u_i) < f(x_i)$ then |
| 11: | $x_i \rightarrow A$; $x_i = u_i$ |
| 12: | endif |
| 13: | endfor |
| 14: | Calculate N for the next generation according to Equations (14) and (15) |
| 15: | if $\lvert S_F \rvert \geq N$ then |
| 16: | Delete randomly selected elements from $S_F$ and $S_{Cr}$ so that each contains N elements |
| 17: | elseif ($\lvert S_F \rvert < N$ and $S_F \neq \emptyset$) then |
| 18: | Update F and Cr according to Equations (11)–(13) |
| 19: | elseif $S_F = \emptyset$ then |
| 20: | $F_{g+1} = F_g$; $Cr_{g+1} = Cr_g$ |
| 21: | endif |
| 22: | endwhile |
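Several steps of Algorithm 1 depend on equations that are not reproduced in this section. The following sketch illustrates three of them under explicitly stated assumptions and should not be read as the authors' implementation.

- Step 5 uses the standard opposition-based learning rule x̃ = a + b − x for a search box [a, b] (Rahnamayan et al.) in place of Equation (8), and it treats the worse half of the population as the "inferior" solutions.
- Steps 9–13 perform greedy selection with the archive as written. They also record the F and Cr values of successful trials in S_F and S_Cr; this JADE-style bookkeeping is an assumption inferred from steps 15–21.
- Step 14 uses the linear population size reduction of L-SHADE (Tanabe and Fukunaga) as a stand-in for Equations (14) and (15).

All function names are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def opposition_step(
    pop: List[Vector],
    fit: List[float],
    objective: Callable[[Vector], float],
    lower: Sequence[float],
    upper: Sequence[float],
    rng: random.Random,
) -> None:
    """Step 5 (assumed form): replace N // D randomly chosen inferior solutions
    by their opposites a + b - x. 'Inferior' is assumed to mean the worse half,
    and N/D is assumed to be rounded down by integer division."""
    n, d = len(pop), len(pop[0])
    worse_half = sorted(range(n), key=lambda i: fit[i])[n // 2:]
    for i in rng.sample(worse_half, min(n // d, len(worse_half))):
        pop[i] = [lower[k] + upper[k] - pop[i][k] for k in range(d)]
        fit[i] = objective(pop[i])


def select_with_archive(
    pop: List[Vector],
    fit: List[float],
    trials: List[Vector],
    trial_fit: List[float],
    archive: List[Vector],
    F: Sequence[float],
    Cr: Sequence[float],
) -> Tuple[List[float], List[float]]:
    """Steps 9-13: if f(u_i) < f(x_i), move x_i to archive A and set x_i = u_i.
    Returns (S_F, S_Cr), the parameters of the successful trials (assumed)."""
    s_f: List[float] = []
    s_cr: List[float] = []
    for i in range(len(pop)):
        if trial_fit[i] < fit[i]:
            archive.append(pop[i])                    # x_i -> A
            pop[i], fit[i] = trials[i], trial_fit[i]  # x_i = u_i
            s_f.append(F[i])
            s_cr.append(Cr[i])
    return s_f, s_cr


def next_population_size(n_init: int, n_min: int, nfe: int, max_nfe: int) -> int:
    """Step 14 stand-in: L-SHADE's linear reduction from n_init to n_min
    as the number of function evaluations nfe approaches max_nfe."""
    return round(n_init + (n_min - n_init) * nfe / max_nfe)


# Usage with toy data (minimization, search box [-1, 1]^2).
rng = random.Random(0)
pop = [[0.1, 0.1], [0.2, 0.2], [0.5, 0.5], [0.9, 0.9]]
fit = [sum(t * t for t in x) for x in pop]
opposition_step(pop, fit, lambda x: sum(t * t for t in x), [-1.0, -1.0], [1.0, 1.0], rng)

archive: List[Vector] = []
s_f, s_cr = select_with_archive(
    pop, fit,
    trials=[[0.0, 0.0], [0.3, 0.3], [0.0, 0.0], [1.0, 1.0]],
    trial_fit=[0.0, 0.18, 0.0, 2.0],
    archive=archive,
    F=[0.5, 0.6, 0.7, 0.8],
    Cr=[0.9, 0.8, 0.7, 0.6],
)
print(s_f, s_cr, len(archive), next_population_size(100, 4, 500, 1000))
```

The archive in this sketch is unbounded because Algorithm 1 does not state a size limit. For comparison, JADE keeps its archive at no more than N members by deleting randomly chosen entries.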

4. Experiments and Discussion

4.1. General Experimental Setting

The benchmark functions fall into three groups:

- unimodal problems f1–f5;

- basic multimodal problems f6–f20; and

- composition problems f21–f28.

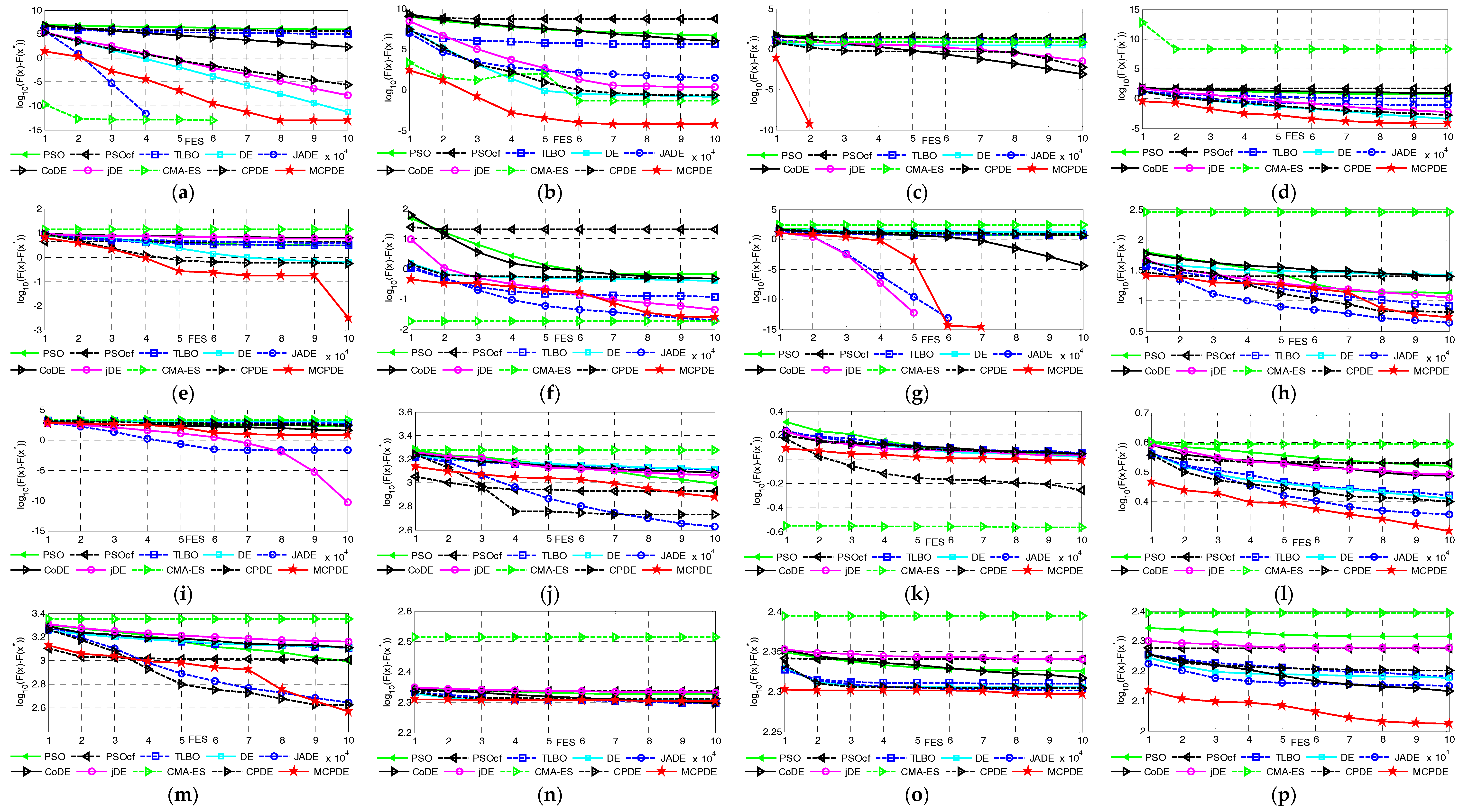

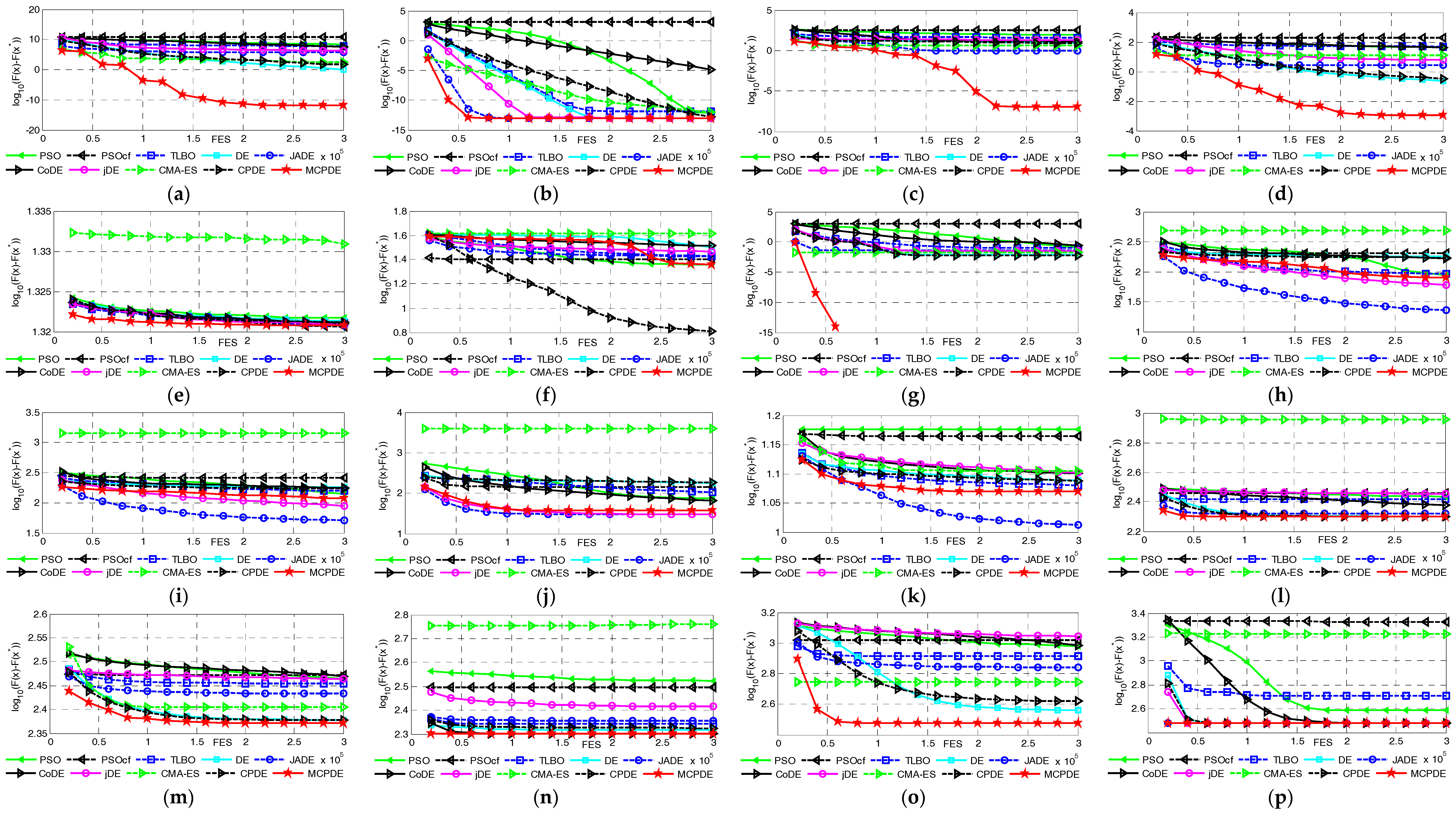

4.2. Comparison with Nine State-of-the-Art Intelligent Algorithms on 10 and 30 Dimensions

5. Conclusions

Acknowledgments

Conflicts of Interest

References

- Banzhaf, W.; Beslon, G.; Christensen, S.; Foster, J.A.; Képès, F.; Lefort, V.; Miller, J.F.; Radman, M.; Ramsden, J.J. From artificial evolution to computational evolution: A research agenda. Nat. Rev. Genet. 2006, 7, 729–735.

- Tian, G.D.; Ren, Y.P.; Zhou, M.C. Dual-Objective Scheduling of Rescue Vehicles to Distinguish Forest Fires via Differential Evolution and Particle Swarm Optimization Combined Algorithm. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3009–3021.

- Zaman, M.F.; Elsayed, S.M.; Ray, T.; Sarker, R.A. Evolutionary Algorithms for Dynamic Economic Dispatch Problems. IEEE Trans. Power Syst. 2016, 31, 1486–1495.

- Mininno, E.; Neri, F.; Cupertino, F.; Naso, D. Compact differential evolution. IEEE Trans. Evol. Comput. 2011, 15, 32–54.

- Das, S.; Abraham, A.; Konar, A. Automatic clustering using an improved differential evolution algorithm. IEEE Trans. Syst. Man Cybern. Part A 2008, 38, 218–236.

- Liu, B.; Zhang, Q.F.; Fernandez, F.V.; Gielen, G.G.E. An Efficient Evolutionary Algorithm for Chance-Constrained Bi-Objective Stochastic Optimization. IEEE Trans. Evol. Comput. 2013, 17, 786–796.

- Segura, C.; Coello, C.A.C.; Hernández-Díaz, A.G. Improving the vector generation strategy of Differential Evolution for large-scale optimization. Inf. Sci. 2015, 323, 106–129.

- Jara, E.C. Multi-Objective Optimization by Using Evolutionary Algorithms: The p-Optimality Criteria. IEEE Trans. Evol. Comput. 2014, 18, 167–179.

- Chen, S.-H.; Chen, S.-M.; Jian, W.-S. Fuzzy time series forecasting based on fuzzy logical relationships and similarity measures. Inf. Sci. 2016, 327, 272–287.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Holland, J.H. Adaptation in Natural and Artificial Systems; University of Michigan Press: Ann Arbor, MI, USA, 1975.

- Koza, J.R. Genetic Programming: On the Programming of Computers by Means of Natural Selection; MIT Press: Cambridge, MA, USA, 1992.

- Storn, R.; Price, K.V. Differential evolution – A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Hansen, N.; Ostermeier, A. Completely derandomized self-adaptation in evolution strategies. Evol. Comput. 2001, 9, 159–195.

- Simon, D. Biogeography-based optimization. IEEE Trans. Evol. Comput. 2008, 12, 702–713.

- Kirkpatrick, S.; Gelatt, C.D., Jr.; Vecchi, M.P. Optimization by Simulated Annealing. Science 1983, 220, 671–680.

- Černý, V. Thermodynamical approach to the traveling salesman problem: An efficient simulation algorithm. J. Optim. Theory Appl. 1985, 45, 41–51.

- Shi, Y.H. Brain Storm Optimization Algorithm. Adv. Swarm Intell. Ser. Lect. Notes Comput. Sci. 2011, 6728, 303–309.

- Lam, A.Y.S.; Li, V.O.K. Chemical-Reaction-Inspired Metaheuristic for Optimization. IEEE Trans. Evol. Comput. 2010, 14, 381–399.

- Mu, C.H.; Xie, J.; Liu, Y.; Chen, F.; Liu, Y.; Jiao, L.C. Memetic algorithm with simulated annealing strategy and tightness greedy optimization for community detection in networks. Appl. Soft Comput. 2015, 34, 485–501.

- Cheng, S.; Shi, Y.H.; Qin, Q.D.; Ting, T.O.; Bai, R.B. Maintaining Population Diversity in Brain Storm Optimization Algorithm. In Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014; pp. 3230–3237.

- Basturk, B.; Karaboga, D. An Artificial Bee Colony (ABC) Algorithm for Numeric Function Optimization. In Proceedings of the IEEE Swarm Intelligence Symposium, Indianapolis, IN, USA, 12–14 May 2006.

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching-learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput. Aided Des. 2011, 43, 303–315.

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching-learning-based optimization: An optimization method for continuous non-linear large scale problems. Inf. Sci. 2012, 183, 1–15.

- Pan, Q.K. An effective co-evolutionary artificial bee colony algorithm for steelmaking-continuous casting scheduling. Eur. J. Oper. Res. 2016, 250, 702–714.

- Delgado-Osuna, J.A.; Lozano, M.; García-Martínez, C. An alternative artificial bee colony algorithm with destructive–constructive neighbourhood operator for the problem of composing medical crews. Inf. Sci. 2016, 326, 215–226.

- Li, W.; Wang, L.; Yao, Q.Z.; Jiang, Q.Y.; Yu, L.; Wang, B.; Hei, X.H. Cloud Particles Differential Evolution Algorithm: A Novel Optimization Method for Global Numerical Optimization. Math. Probl. Eng. 2015, 2015, 497398.

- Qin, A.K.; Huang, V.L.; Suganthan, P.N. Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Trans. Evol. Comput. 2009, 13, 398–417.

- Zhang, J.; Sanderson, A.C. JADE: Adaptive Differential Evolution with Optional External Archive. IEEE Trans. Evol. Comput. 2009, 13, 945–957.

- Brest, J.; Greiner, S.; Boskovic, B.; Mernik, M.; Zumer, V. Self-Adapting Control Parameters in Differential Evolution: A Comparative Study on Numerical Benchmark Problems. IEEE Trans. Evol. Comput. 2006, 10, 646–657.

- Tanabe, R.; Fukunaga, A. Success-History Based Parameter Adaptation for Differential Evolution. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation, Cancun, Mexico, 20–23 June 2013; pp. 71–78.

- Ghosh, A.; Das, S.; Chowdhury, A.; Giri, R. An improved differential evolution algorithm with fitness-based adaptation of the control parameters. Inf. Sci. 2011, 181, 3749–3765.

- Fan, Q.Q.; Yan, X.F. Self-adaptive differential evolution algorithm with discrete mutation control parameters. Expert Syst. Appl. 2015, 42, 1551–1572.

- Lu, X.F.; Tang, K.; Sendhoff, B.; Yao, X. A new self-adaptation scheme for differential evolution. Neurocomputing 2014, 146, 2–16.

- Wang, Y.; Cai, Z.X.; Zhang, Q.F. Differential evolution with composite trial vector generation strategies and control parameters. IEEE Trans. Evol. Comput. 2011, 15, 55–66.

- Tanabe, R.; Fukunaga, A.S. Improving the Search Performance of SHADE Using Linear Population Size Reduction. In Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014; pp. 1658–1665.

- Das, S.; Abraham, A.; Chakraborty, U.K.; Konar, A. Differential evolution using a neighborhood-based mutation operator. IEEE Trans. Evol. Comput. 2009, 13, 526–553.

- Gong, W.Y.; Cai, Z.H.; Wang, Y. Repairing the crossover rate in adaptive differential evolution. Appl. Soft Comput. 2014, 15, 149–168.

- Rahnamayan, S.; Tizhoosh, H.R.; Salama, M.M.A. Opposition-based differential evolution. IEEE Trans. Evol. Comput. 2008, 12, 64–79.

- Zhou, Y.Z.; Li, X.Y.; Gao, L. A differential evolution algorithm with intersect mutation operator. Appl. Soft Comput. 2013, 13, 390–401.

- Epitropakis, M.G.; Tasoulis, D.K.; Pavlidis, N.G.; Plagianakos, V.P.; Vrahatis, M.N. Enhancing differential evolution utilizing proximity-based mutation operators. IEEE Trans. Evol. Comput. 2011, 15, 99–119.

- Zhu, W.; Tang, Y.; Fang, J.A.; Zhang, W.B. Adaptive population tuning scheme for differential evolution. Inf. Sci. 2013, 223, 164–191.

- Sun, J.; Zhang, Q.; Tsang, E.P. DE/EDA: A new evolutionary algorithm for global optimization. Inf. Sci. 2005, 169, 249–262.

- Piotrowski, A.P. Adaptive Memetic Differential Evolution with global and local neighborhood-based mutation operators. Inf. Sci. 2013, 241, 164–194.

- Zheng, Y.J.; Xu, X.L.; Ling, H.F.; Chen, S.Y. A hybrid fireworks optimization method with differential evolution operators. Neurocomputing 2015, 148, 75–82.

- Yildiz, A.R. A new hybrid differential evolution algorithm for the selection of optimal machining parameters in milling operations. Appl. Soft Comput. 2013, 13, 1561–1566.

- Yildiz, A.R. Hybrid Taguchi-differential evolution algorithm for optimization of multi-pass turning operations. Appl. Soft Comput. 2013, 13, 1433–1439.

- Xiang, W.L.; Ma, S.F.; An, M.Q. hABCDE: A hybrid evolutionary algorithm based on artificial bee colony algorithm and differential evolution. Appl. Math. Comput. 2014, 238, 370–386.

- Asafuddoula, M.; Ray, T.; Sarker, R. An adaptive hybrid differential evolution algorithm for single objective optimization. Appl. Math. Comput. 2014, 231, 601–618.

- Ponsich, A.; Coello Coello, C.A. A hybrid Differential Evolution–Tabu Search algorithm for the solution of Job-Shop Scheduling Problems. Appl. Soft Comput. 2013, 13, 462–474.

- Zhang, C.M.; Chen, J.; Xin, B. Distributed memetic differential evolution with the synergy of Lamarckian and Baldwinian learning. Appl. Soft Comput. 2013, 13, 2947–2959.

- Rahnamayan, S.; Tizhoosh, H.; Salama, M. Opposition versus randomness in soft computing techniques. Appl. Soft Comput. 2008, 8, 906–918.

- Cui, L.; Li, G.; Lin, Q.; Chen, J.; Lu, N. Adaptive differential evolution algorithm with novel mutation strategies in multiple sub-populations. Comput. Oper. Res. 2016, 67, 155–173.

- Yoon, H.; Moon, B.R. An empirical study on the synergy of multiple crossover operators. IEEE Trans. Evol. Comput. 2002, 6, 212–223.

- Wang, Y.; Cai, Z.X.; Zhang, Q.F. Enhancing the search ability of differential evolution through orthogonal crossover. Inf. Sci. 2012, 185, 153–177.

- Liang, J.J.; Qu, B.Y.; Suganthan, P.N.; Hernández-Díaz, A.G. Problem Definitions and Evaluation Criteria for the CEC 2013 Special Session on Real-Parameter Optimization; Technical Report 201212; Computational Intelligence Laboratory, Zhengzhou University: Zhengzhou, China; Nanyang Technological University: Singapore, January 2013.

- Suganthan, P.N.; Hansen, N.; Liang, J.J.; Deb, K.; Chen, Y.-P.; Auger, A.; Tiwari, S. Problem Definitions and Evaluation Criteria for the CEC2005 Special Session on Real-Parameter Optimization; Technical Report; Nanyang Technological University: Singapore, KanGAL Report Number 2005005; Kanpur Genetic Algorithms Laboratory: Kanpur, India, May 2005.

- Clerc, M.; Kennedy, J. The particle swarm-explosion, stability, and convergence in a multidimensional complex space. IEEE Trans. Evol. Comput. 2002, 6, 58–73.

| F | Metric | PSO | PSOcf | TLBO | DE | JADE | CoDE | jDE | CMA-ES | CPDE | MCPDE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| f1 | Fmean | 5.30 × 10⁻¹⁴ | 31.2 | 3.03 × 10⁻¹⁴ | 0 | 0 | 1.80 × 10⁻¹¹ | 0 | 0 | 0 | 0 |
| | SD | 9.78 × 10⁻¹⁴ | 171 | 7.86 × 10⁻¹⁴ | 0 | 0 | 7.98 × 10⁻¹² | 0 | 0 | 0 | 0 |
| | Max | 2.27 × 10⁻¹⁴ | 938 | 2.27 × 10⁻¹³ | 0 | 0 | 3.66 × 10⁻¹¹ | 0 | 0 | 0 | 0 |
| | Min | 0 | 0 | 0 | 0 | 0 | 5.45 × 10⁻¹² | 0 | 0 | 0 | 0 |
| | Compare/Rank | −/8 | −/10 | −/7 | ≈/1 | ≈/1 | −/9 | ≈/1 | ≈/1 | ≈/1 | \/1 |
| f2 | Fmean | 9.59 × 10⁵ | 5.00 × 10⁵ | 1.06 × 10⁵ | 5.98 × 10⁻¹² | 0 | 198 | 1.61 × 10⁻⁸ | 0 | 3.04 × 10⁻⁶ | 9.85 × 10⁻¹⁴ |
| | SD | 9.33 × 10⁵ | 5.85 × 10⁵ | 9.08 × 10⁴ | 4.39 × 10⁻¹² | 0 | 89 | 7.90 × 10⁻⁸ | 0 | 1.61 × 10⁻⁶ | 1.14 × 10⁻¹³ |
| | Max | 3.48 × 10⁶ | 2.36 × 10⁶ | 3.87 × 10⁵ | 2.18 × 10⁻¹¹ | 0 | 425 | 4.33 × 10⁻⁷ | 0 | 6.71 × 10⁻⁶ | 2.27 × 10⁻¹³ |
| | Min | 9.70 × 10⁴ | 3.02 × 10⁴ | 1.58 × 10⁴ | 1.13 × 10⁻¹² | 0 | 61.6 | 0 | 0 | 6.78 × 10⁻⁷ | 0 |
| | Compare/Rank | −/10 | −/9 | −/8 | −/4 | +/1 | −/7 | −/5 | +/1 | −/6 | \/3 |
| f3 | Fmean | 4.66 × 10⁶ | 4.99 × 10⁸ | 4.82 × 10⁵ | 0.135 | 26.8 | 1.05 × 10⁶ | 2.15 | 4.35 × 10⁻² | 0.194 | 6.34 × 10⁻⁵ |
| | SD | 1.39 × 10⁷ | 8.24 × 10⁸ | 1.98 × 10⁶ | 0.174 | 35.8 | 7.04 × 10⁵ | 3.70 | 0.238 | 0.288 | 4.82 × 10⁻⁵ |
| | Max | 7.39 × 10⁷ | 3.62 × 10⁹ | 1.07 × 10⁷ | 0.688 | 116 | 2.65 × 10⁶ | 15.1 | 1.30 | 1.15 | 1.42 × 10⁻⁴ |
| | Min | 4.67 × 10⁻³ | 8.16 × 10⁵ | 2.29 × 10⁻² | 1.06 × 10⁻⁹ | 0 | 9.83 × 10⁴ | 8.73 × 10⁻⁴ | 0 | 1.21 × 10⁻⁵ | 2.27 × 10⁻¹³ |
| | Compare/Rank | −/9 | −/10 | −/7 | −/3 | −/6 | −/8 | −/5 | −/2 | −/4 | \/1 |
| f4 | Fmean | 3.87 × 10³ | 2.05 × 10³ | 2.98 × 10³ | 7.57 × 10⁻¹⁴ | 320 | 9.83 × 10⁻¹ | 3.72 × 10⁻¹² | 0 | 0 | 0 |
| | SD | 3.40 × 10³ | 3.80 × 10³ | 1.23 × 10³ | 1.24 × 10⁻¹³ | 1.26 × 10³ | 4.45 × 10⁻¹ | 1.28 × 10⁻¹¹ | 0 | 0 | 0 |
| | Max | 1.85 × 10⁴ | 2.12 × 10⁴ | 6.90 × 10³ | 4.54 × 10⁻¹³ | 6.04 × 10³ | 1.89 | 6.91 × 10⁻¹¹ | 0 | 0 | 0 |
| | Min | 334 | 104 | 1.38 × 10³ | 0 | 0 | 2.61 × 10⁻¹ | 0 | 0 | 0 | 0 |
| | Compare/Rank | −/10 | −/8 | −/9 | −/4 | −/7 | −/6 | −/5 | ≈/1 | ≈/1 | \/1 |
| f5 | Fmean | 1.21 × 10⁻¹³ | 18.2 | 1.47 × 10⁻¹³ | 0 | 0 | 5.17 × 10⁻⁸ | 0 | 2.08 × 10⁻¹³ | 0 | 0 |
| | SD | 5.92 × 10⁻¹⁴ | 42.2 | 6.08 × 10⁻¹⁴ | 0 | 0 | 1.88 × 10⁻⁸ | 0 | 1.12 × 10⁻¹³ | 0 | 0 |
| | Max | 2.27 × 10⁻¹³ | 136 | 3.41 × 10⁻¹³ | 0 | 0 | 1.04 × 10⁻⁷ | 0 | 6.82 × 10⁻¹³ | 0 | 0 |
| | Min | 0 | 1.13 × 10⁻¹³ | 1.13 × 10⁻¹³ | 0 | 0 | 1.45 × 10⁻⁸ | 0 | 1.13 × 10⁻¹³ | 0 | 0 |
| | Compare/Rank | −/6 | −/10 | −/7 | ≈/1 | ≈/1 | −/9 | ≈/1 | −/8 | ≈/1 | \/1 |
| −/≈/+ | | 5/0/0 | 5/0/0 | 5/0/0 | 3/2/0 | 2/2/1 | 5/0/0 | 3/2/0 | 2/2/1 | 2/3/0 | \ |
| Avg-Rank | | 8.60 | 9.40 | 7.60 | 2.60 | 3.20 | 7.80 | 3.40 | 2.60 | 2.60 | 1.40 |
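The two summary rows of the table above (f1–f5) can be recomputed from its Compare/Rank rows. The −/≈/+ row counts how often each algorithm received each symbol; these presumably mean worse than, similar to, or better than MCPDE, which is marked "\" as the reference. Avg-Rank is the mean rank over the five functions. The short check below reproduces the printed summary rows. The dictionary layout and the `summarize` helper exist only for this illustration.

```python
from typing import Dict, List, Tuple

# Compare/Rank cells for f1-f5 from the table above, one string per algorithm.
COMPARE_RANK: Dict[str, str] = {
    "PSO": "−/8 −/10 −/9 −/10 −/6",
    "PSOcf": "−/10 −/9 −/10 −/8 −/10",
    "TLBO": "−/7 −/8 −/7 −/9 −/7",
    "DE": "≈/1 −/4 −/3 −/4 ≈/1",
    "JADE": "≈/1 +/1 −/6 −/7 ≈/1",
    "CoDE": "−/9 −/7 −/8 −/6 −/9",
    "jDE": "≈/1 −/5 −/5 −/5 ≈/1",
    "CMA-ES": "≈/1 +/1 −/2 ≈/1 −/8",
    "CPDE": "≈/1 −/6 −/4 ≈/1 ≈/1",
    "MCPDE": "\\/1 \\/3 \\/1 \\/1 \\/1",
}


def summarize(cells: str) -> Tuple[str, float]:
    """Return the (−/≈/+ tally, average rank) for one algorithm's column."""
    symbols: List[str] = []
    ranks: List[int] = []
    for cell in cells.split():
        symbol, rank = cell.split("/")
        symbols.append(symbol)
        ranks.append(int(rank))
    if set(symbols) == {"\\"}:
        tally = "\\"  # the reference algorithm is not compared with itself
    else:
        tally = "/".join(str(symbols.count(s)) for s in ("−", "≈", "+"))
    return tally, round(sum(ranks) / len(ranks), 2)


summaries = {name: summarize(cells) for name, cells in COMPARE_RANK.items()}
for name, (tally, avg_rank) in summaries.items():
    print(f"{name:<7} {tally:<6} {avg_rank:.2f}")
```

For example, MCPDE's ranks 1, 3, 1, 1, 1 sum to 7, giving an average rank of 7/5 = 1.40, which matches the table.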

| F | Metric | PSO | PSOcf | TLBO | DE | JADE | CoDE | jDE | CMA-ES | CPDE | MCPDE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| f1 | Fmean | 6.29 × 10⁻¹³ | 7.90 × 10³ | 1.11 × 10⁻¹² | 0 | 0 | 3.63 × 10⁻⁸ | 0 | 4.32 × 10⁻¹³ | 2.27 × 10⁻¹⁴ | 0 |
| | SD | 2.78 × 10⁻¹³ | 3.56 × 10³ | 6.49 × 10⁻¹³ | 0 | 0 | 1.18 × 10⁻⁸ | 0 | 1.92 × 10⁻¹³ | 6.99 × 10⁻¹⁴ | 0 |
| | Max | 1.81 × 10⁻¹² | 1.97 × 10⁴ | 3.63 × 10⁻¹² | 0 | 0 | 6.32 × 10⁻⁸ | 0 | 9.09 × 10⁻¹³ | 2.27 × 10⁻¹³ | 0 |
| | Min | 2.27 × 10⁻¹³ | 2.70 × 10³ | 4.54 × 10⁻¹³ | 0 | 0 | 1.26 × 10⁻⁸ | 0 | 2.27 × 10⁻¹³ | 0 | 0 |
| | Compare/Rank | −/7 | −/10 | −/8 | ≈/1 | ≈/1 | −/9 | ≈/1 | −/6 | ≈/1 | \/1 |
| f2 | Fmean | 1.51 × 10⁷ | 3.91 × 10⁷ | 1.23 × 10⁶ | 3.47 × 10⁵ | 6.25 × 10³ | 1.24 × 10⁵ | 2.26 × 10⁵ | 4.32 × 10⁻¹³ | 2.97 × 10⁵ | 344 |
| | SD | 1.12 × 10⁷ | 4.06 × 10⁷ | 5.38 × 10⁵ | 2.59 × 10⁵ | 6.93 × 10³ | 1.40 × 10⁵ | 1.59 × 10⁵ | 1.61 × 10⁻¹³ | 2.04 × 10⁵ | 252 |
| | Max | 4.32 × 10⁷ | 1.43 × 10⁸ | 2.31 × 10⁶ | 9.68 × 10⁵ | 3.43 × 10⁴ | 7.28 × 10⁵ | 7.46 × 10⁵ | 6.82 × 10⁻¹³ | 7.35 × 10⁵ | 951 |
| | Min | 7.68 × 10⁵ | 2.30 × 10⁶ | 2.41 × 10⁵ | 4.85 × 10⁴ | 545 | 2.42 × 10⁴ | 5.69 × 10⁴ | 2.27 × 10⁻¹³ | 8.34 × 10⁴ | 21.4 |
| | Compare/Rank | −/9 | −/10 | −/8 | −/7 | −/3 | −/4 | −/5 | +/1 | −/6 | \/2 |
| f3 | Fmean | 2.64 × 10⁸ | 5.51 × 10¹⁰ | 5.44 × 10⁷ | 1.22 | 6.46 × 10⁵ | 2.88 × 10⁷ | 1.53 × 10⁶ | 263 | 44.7 | 1.31 × 10⁻¹² |
| | SD | 5.76 × 10⁸ | 3.91 × 10¹⁰ | 8.74 × 10⁷ | 5.22 | 1.97 × 10⁶ | 1.40 × 10⁷ | 3.19 × 10⁶ | 1.01 × 10³ | 167 | 2.61 × 10⁻¹² |
| | Max | 2.89 × 10⁹ | 1.51 × 10¹¹ | 2.99 × 10⁸ | 28.5 | 9.62 × 10⁶ | 7.14 × 10⁷ | 1.28 × 10⁷ | 5.42 × 10³ | 753 | 1.11 × 10⁻¹¹ |
| | Min | 3.57 × 10⁶ | 5.18 × 10⁹ | 6.45 × 10⁵ | 2.14 × 10⁻⁷ | 7.50 × 10⁻¹² | 7.79 × 10⁶ | 2.83 × 10⁻¹ | 2.04 × 10⁻¹² | 1.52 × 10⁻² | 0 |
| | Compare/Rank | −/9 | −/10 | −/8 | −/2 | −/5 | −/7 | −/6 | −/4 | −/3 | \/1 |
| f4 | Fmean | 7.34 × 10³ | 4.68 × 10³ | 8.05 × 10³ | 1.32 × 10³ | 1.03 × 10⁴ | 17.8 | 4.90 | 3.94 × 10⁻¹³ | 776 | 3.91 × 10⁻³ |
| | SD | 2.77 × 10³ | 3.98 × 10³ | 2.60 × 10³ | 840 | 1.67 × 10⁴ | 14.2 | 4.37 | 1.57 × 10⁻¹³ | 303 | 5.19 × 10⁻³ |
| | Max | 1.60 × 10⁴ | 1.67 × 10⁴ | 1.46 × 10⁴ | 3.61 × 10³ | 5.57 × 10⁴ | 60.5 | 19.7 | 6.82 × 10⁻¹³ | 1.51 × 10³ | 1.55 × 10⁻² |
| | Min | 3.13 × 10³ | 1.03 × 10³ | 3.32 × 10³ | 302 | 5.03 × 10⁻⁸ | 3.17 | 4.61 × 10⁻¹ | 2.27 × 10⁻¹³ | 364 | 1.30 × 10⁻⁴ |
| | Compare/Rank | −/8 | −/7 | −/9 | −/6 | −/10 | −/4 | −/3 | +/1 | −/5 | \/2 |
| f5 | Fmean | 7.50 × 10⁻¹³ | 1.29 × 10³ | 1.44 × 10⁻¹² | 9.47 × 10⁻¹⁴ | 9.09 × 10⁻¹⁴ | 1.35 × 10⁻⁵ | 9.09 × 10⁻¹⁴ | 9.32 × 10⁻¹³ | 1.53 × 10⁻¹³ | 9.47 × 10⁻¹⁴ |
| | SD | 5.11 × 10⁻¹³ | 962 | 7.16 × 10⁻¹³ | 4.30 × 10⁻¹⁴ | 4.62 × 10⁻¹⁴ | 3.73 × 10⁻⁶ | 4.62 × 10⁻¹⁴ | 1.74 × 10⁻¹² | 5.56 × 10⁻¹⁴ | 4.30 × 10⁻¹⁴ |
| | Max | 2.27 × 10⁻¹² | 3.31 × 10³ | 4.32 × 10⁻¹² | 1.13 × 10⁻¹³ | 1.13 × 10⁻¹³ | 2.26 × 10⁻⁵ | 1.13 × 10⁻¹³ | 7.73 × 10⁻¹² | 2.27 × 10⁻¹³ | 1.13 × 10⁻¹³ |
| | Min | 3.41 × 10⁻¹³ | 191 | 6.82 × 10⁻¹³ | 0 | 0 | 7.04 × 10⁻⁶ | 0 | 2.27 × 10⁻¹³ | 1.13 × 10⁻¹³ | 0 |
| | Compare/Rank | −/6 | −/10 | −/8 | ≈/1 | ≈/1 | −/9 | ≈/1 | −/7 | −/5 | \/1 |
| −/≈/+ | | 5/0/0 | 5/0/0 | 5/0/0 | 3/2/0 | 3/2/0 | 5/0/0 | 3/2/0 | 3/0/2 | 4/1/0 | \ |
| Avg-Rank | | 7.80 | 9.40 | 8.20 | 3.40 | 4.00 | 6.60 | 3.20 | 3.80 | 4.00 | 1.40 |

| F | Metric | PSO | PSOcf | TLBO | DE | JADE | CoDE | jDE | CMA-ES | CPDE | MCPDE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| f6 | Fmean | 16.6 | 26.5 | 6.64 | 2.61 | 6.21 | 8.43 × 10⁻⁴ | 3.19 × 10⁻² | 7.01 | 5.67 × 10⁻³ | 0 |
| | SD | 24.9 | 27.3 | 4.57 | 4.41 | 4.80 | 2.93 × 10⁻⁴ | 4.26 × 10⁻² | 4.41 | 2.53 × 10⁻² | 0 |
| | Max | 96.8 | 90.8 | 9.81 | 9.81 | 9.81 | 1.42 × 10⁻³ | 2.04 × 10⁻¹ | 9.81 | 1.13 × 10⁻¹ | 0 |
| | Min | 2.06 × 10⁻¹ | 9.81 | 2.05 × 10⁻³ | 0 | 0 | 1.75 × 10⁻⁴ | 3.31 × 10⁻⁴ | 0 | 2.72 × 10⁻¹² | 0 |
| | Compare/Rank | −/9 | −/10 | −/7 | −/5 | −/6 | −/2 | −/4 | −/8 | −/3 | \/1 |
| f7 | Fmean | 5.56 | 46.3 | 1.07 | 3.43 × 10⁻⁴ | 7.97 × 10⁻² | 8.29 | 4.96 × 10⁻³ | 2.07 × 10⁸ | 1.48 × 10⁻³ | 7.58 × 10⁻⁵ |
| | SD | 5.33 | 26.4 | 3.17 | 2.50 × 10⁻⁴ | 1.17 × 10⁻¹ | 1.93 | 5.68 × 10⁻³ | 1.13 × 10⁹ | 1.45 × 10⁻³ | 1.00 × 10⁻⁴ |
| | Max | 20.5 | 117 | 17.1 | 9.58 × 10⁻⁴ | 4.81 × 10⁻¹ | 12.8 | 2.00 × 10⁻² | 6.21 × 10⁹ | 5.79 × 10⁻³ | 3.65 × 10⁻⁴ |
| | Min | 3.80 × 10⁻¹ | 8.06 | 1.90 × 10⁻⁴ | 2.98 × 10⁻⁵ | 2.04 × 10⁻⁸ | 4.30 | 9.58 × 10⁻⁵ | 1.09 | 3.73 × 10⁻⁴ | 6.56 × 10⁻⁸ |
| | Compare/Rank | −/7 | −/9 | −/6 | −/2 | −/5 | −/8 | −/4 | −/10 | −/3 | \/1 |
| f8 | Fmean | 20.3 | 20.3 | 20.3 | 20.3 | 20.3 | 20.3 | 20.3 | 20.3 | 20.3 | 20.3 |
| | SD | 8.88 × 10⁻² | 8.26 × 10⁻² | 5.93 × 10⁻² | 8.49 × 10⁻² | 8.25 × 10⁻² | 7.42 × 10⁻² | 6.74 × 10⁻² | 4.55 × 10⁻¹ | 7.43 × 10⁻² | 7.12 × 10⁻² |
| | Max | 20.5 | 20.4 | 20.4 | 20.4 | 20.5 | 20.4 | 20.4 | 21.6 | 20.4 | 20.4 |
| | Min | 20.1 | 20.1 | 20.2 | 20 | 20.1 | 20.1 | 20.1 | 20 | 20.2 | 20.1 |
| | Compare/Rank | ≈/1 | ≈/1 | ≈/1 | ≈/1 | ≈/1 | ≈/1 | ≈/1 | −/10 | ≈/1 | \/1 |
| f9 | Fmean | 3.11 | 4.21 | 2.95 | 6.20 × 10⁻¹ | 3.74 | 6.06 | 5.76 | 14.1 | 5.60 × 10⁻¹ | 1.66 × 10⁻¹ |
| | SD | 1.54 | 1.65 | 8.69 × 10⁻¹ | 7.40 × 10⁻¹ | 7.45 × 10⁻¹ | 6.31 × 10⁻¹ | 6.96 × 10⁻¹ | 3.72 | 6.41 × 10⁻¹ | 3.23 × 10⁻¹ |
| | Max | 6.99 | 7.01 | 4.35 | 2.24 | 4.98 | 7.04 | 7.04 | 20.3 | 2.39 | 9.78 × 10⁻¹ |
| | Min | 2.65 × 10⁻¹ | 8.70 × 10⁻¹ | 1.19 | 6.92 × 10⁻⁸ | 1.89 | 4.61 | 4.50 | 7.71 | 2.60 × 10⁻⁴ | 0 |
| | Compare/Rank | −/5 | −/7 | −/4 | −/3 | −/6 | −/9 | −/8 | −/10 | −/2 | \/1 |
| f10 | Fmean | 6.52 × 10⁻¹ | 20.7 | 1.16 × 10⁻¹ | 3.91 × 10⁻¹ | 1.95 × 10⁻² | 4.59 × 10⁻¹ | 4.47 × 10⁻² | 1.83 × 10⁻² | 4.82 × 10⁻¹ | 2.51 × 10⁻² |
| | SD | 4.56 × 10⁻¹ | 35.8 | 5.60 × 10⁻² | 1.45 × 10⁻¹ | 1.04 × 10⁻² | 5.96 × 10⁻² | 3.71 × 10⁻² | 3.20 × 10⁻² | 9.18 × 10⁻² | 1.08 × 10⁻² |
| | Max | 1.86 | 165 | 2.26 × 10⁻¹ | 5.57 × 10⁻¹ | 4.03 × 10⁻² | 5.57 × 10⁻¹ | 1.48 × 10⁻¹ | 1.75 × 10⁻¹ | 6.30 × 10⁻¹ | 4.18 × 10⁻² |
| | Min | 1.08 × 10⁻¹ | 1.72 × 10⁻² | 2.27 × 10⁻² | 1.72 × 10⁻² | 2.26 × 10⁻³ | 3.32 × 10⁻¹ | 2.56 × 10⁻⁹ | 0 | 3.10 × 10⁻¹ | 5.68 × 10⁻¹⁴ |
| | Compare/Rank | −/9 | −/10 | −/5 | −/6 | +/2 | −/7 | ≈/3 | +/1 | −/8 | \/3 |
| f11 | Fmean | 3.78 | 8.29 | 5.29 | 16.7 | 0 | 3.41 × 10⁻⁵ | 0 | 286 | 3.79 | 0 |
| | SD | 2.11 | 9.65 | 2.33 | 3.81 | 0 | 2.87 × 10⁻⁵ | 0 | 331 | 3.14 | 0 |
| | Max | 7.95 | 39.4 | 9.94 | 23.8 | 0 | 1.40 × 10⁻⁴ | 0 | 921 | 9.35 | 0 |
| | Min | 9.94 × 10⁻¹ | 0 | 1.99 | 9.08 | 0 | 3.73 × 10⁻⁶ | 0 | 3.97 | 2.43 × 10⁻⁸ | 0 |
| | Compare/Rank | −/5 | −/8 | −/7 | −/9 | ≈/1 | −/4 | ≈/1 | −/10 | −/6 | \/1 |
| f12 | Fmean | 13.5 | 25 | 8.18 | 26.8 | 4.38 | 25 | 11.4 | 284 | 6.57 | 5.36 |
| | SD | 5.23 | 12 | 3.64 | 4.25 | 1.22 | 5.15 | 3.22 | 327 | 4.06 | 1.75 |
| | Max | 22.8 | 54.1 | 14.8 | 35.5 | 7.07 | 33.4 | 19 | 1.37 × 10³ | 19.1 | 8.66 |
| | Min | 4.97 | 6.96 | 1.25 | 17.3 | 1.83 | 11 | 5.20 | 5.96 | 1.98 | 1.52 |
| | Compare/Rank | −/6 | −/7 | −/4 | −/9 | ≈/1 | −/7 | −/5 | −/10 | ≈/2 | \/2 |
| f13 | Fmean | 22.1 | 33.5 | 11.6 | 24.7 | 5.27 | 26.6 | 14.8 | 311 | 7.92 | 6.69 |
| | SD | 7.35 | 11.3 | 5.08 | 3.85 | 2.39 | 4.31 | 3.84 | 412 | 4.50 | 2.83 |
| | Max | 40.1 | 55.9 | 25.6 | 31.8 | 11.5 | 32.6 | 22.4 | 1.36 × 10³ | 16.5 | 13.5 |
| | Min | 7.22 | 3.45 | 3.55 | 16.5 | 2.45 | 13.7 | 6.73 | 12.6 | 2.03 | 1.98 |
| | Compare/Rank | −/6 | −/9 | −/4 | −/7 | +/1 | −/8 | −/5 | −/10 | ≈/2 | \/2 |
| f14 | Fmean | 226 | 236 | 598 | 995 | 2.28 × 10⁻² | 38.8 | 4.74 × 10⁻¹¹ | 1.80 × 10³ | 275 | 6.97 |
| | SD | 161 | 157 | 256 | 136 | 3.47 × 10⁻² | 7.97 | 1.83 × 10⁻¹⁰ | 423 | 115 | 4.94 |
| | Max | 605 | 667 | 1.09 × 10³ | 1.17 × 10³ | 1.24 × 10⁻¹ | 53.2 | 9.72 × 10⁻¹⁰ | 2.80 × 10³ | 506 | 15.1 |
| | Min | 3.47 | 3.60 | 32.3 | 472 | 0 | 19.3 | 0 | 993 | 72.3 | 6.24 × 10⁻² |
| | Compare/Rank | −/5 | −/6 | −/8 | −/9 | −/2 | −/4 | +/1 | −/10 | −/7 | \/3 |
| f15 | Fmean | 982 | 847 | 1.28 × 10³ | 1.31 × 10³ | 426 | 1.20 × 10³ | 1.15 × 10³ | 1.88 × 10³ | 535 | 760 |
| | SD | 345 | 231 | 188 | 155 | 109 | 141 | 151 | 438 | 195 | 142 |
| | Max | 1.55 × 10³ | 1.27 × 10³ | 1.56 × 10³ | 1.53 × 10³ | 653 | 1.46 × 10³ | 1.48 × 10³ | 2.75 × 10³ | 772 | 983 |
| | Min | 290 | 187 | 743 | 809 | 189 | 963 | 901 | 1.00 × 10³ | 113 | 479 |
| | Compare/Rank | −/5 | −/4 | −/8 | −/9 | +/1 | −/7 | −/6 | −/10 | +/2 | \/3 |
| f16 | Fmean | 1.09 | 5.56 × 10⁻¹ | 1.13 | 1.04 | 1.11 | 1.10 | 1.07 | 2.72 × 10⁻¹ | 1.10 | 9.92 × 10⁻¹ |
| | SD | 2.88 × 10⁻¹ | 1.80 × 10⁻¹ | 2.43 × 10⁻¹ | 2.39 × 10⁻¹ | 2.07 × 10⁻¹ | 2.12 × 10⁻¹ | 1.82 × 10⁻¹ | 2.55 × 10⁻¹ | 2.05 × 10⁻¹ | 1.04 × 10⁻¹ |
| | Max | 1.72 | 9.09 × 10⁻¹ | 1.60 | 1.50 | 1.45 | 1.54 | 1.39 | 1.23 | 1.41 | 1.14 |
| | Min | 4.49 × 10⁻¹ | 2.50 × 10⁻¹ | 6.79 × 10⁻¹ | 5.48 × 10⁻¹ | 6.63 × 10⁻¹ | 6.40 × 10⁻¹ | 7.12 × 10⁻¹ | 3.62 × 10⁻² | 5.14 × 10⁻¹ | 7.16 × 10⁻¹ |
| | Compare/Rank | −/6 | +/2 | −/10 | ≈/3 | −/9 | −/7 | −/5 | +/1 | −/7 | \/3 |
| f17 | Fmean | 14.2 | 13.3 | 24.7 | 27.7 | 10.1 | 11.4 | 10.1 | 956 | 28 | 10.3 |
| | SD | 4.68 | 1.46 | 3.31 | 3.28 | 1.44 × 10⁻¹⁴ | 5.07 × 10⁻¹ | 2.05 × 10⁻¹⁰ | 469 | 2.80 | 3.33 × 10⁻¹ |
| | Max | 21.7 | 17.9 | 34.4 | 35.7 | 10.1 | 12.3 | 10.1 | 1.58 × 10³ | 33.9 | 11.3 |
| | Min | 4.06 | 11 | 18.2 | 22.2 | 10.1 | 9.56 | 10.1 | 22.4 | 23.6 | 10.1 |
| | Compare/Rank | ≈/4 | ≈/4 | −/7 | −/8 | +/1 | +/4 | +/1 | −/10 | −/9 | \/3 |
| f18 | Fmean | 34.6 | 21.8 | 32.6 | 36.1 | 18.8 | 42.2 | 31.1 | 925 | 36.3 | 25.9 |
| | SD | 10.7 | 7.60 | 4.00 | 3.85 | 2.24 | 5.46 | 3.32 | 462 | 3.30 | 4.38 |
| | Max | 54.2 | 40.2 | 41.5 | 42.9 | 22.9 | 52.2 | 36.5 | 1.85 × 10³ | 42.6 | 32.7 |
| | Min | 5.60 | 7.21 | 25.6 | 26.9 | 15.4 | 31.8 | 23.6 | 15.1 | 30.8 | 15.6 |
| | Compare/Rank | −/6 | +/2 | −/5 | −/7 | +/1 | −/9 | −/4 | −/10 | −/8 | \/3 |
| f19 | Fmean | 6.33 × 10⁻¹ | 1.99 | 9.83 × 10⁻¹ | 2.17 | 3.37 × 10⁻¹ | 9.24 × 10⁻¹ | 4.00 × 10⁻¹ | 1.10 | 1.95 | 4.44 × 10⁻¹ |
| | SD | 1.78 × 10⁻¹ | 5.08 | 2.24 × 10⁻¹ | 3.47 × 10⁻¹ | 4.32 × 10⁻² | 1.49 × 10⁻¹ | 9.80 × 10⁻² | 4.74 × 10⁻¹ | 3.22 × 10⁻¹ | 1.05 × 10⁻¹ |
| | Max | 1.00 | 20.9 | 1.35 | 2.66 | 3.94 × 10⁻¹ | 1.24 | 5.74 × 10⁻¹ | 2.52 | 2.34 | 6.60 × 10⁻¹ |
| | Min | 2.99 × 10⁻¹ | 3.66 × 10⁻¹ | 5.86 × 10⁻¹ | 9.78 × 10⁻¹ | 2.08 × 10⁻¹ | 5.82 × 10⁻¹ | 1.48 × 10⁻¹ | 3.99 × 10⁻¹ | 1.25 | 2.39 × 10⁻¹ |
| | Compare/Rank | ≈/3 | −/9 | −/7 | −/10 | +/1 | −/6 | ≈/2 | −/7 | −/8 | \/2 |
| f20 | Fmean | 3.31 | 3.39 | 2.64 | 2.57 | 2.27 | 3.06 | 3.10 | 3.92 | 2.50 | 1.99 |
| | SD | 7.65 × 10⁻¹ | 4.09 × 10⁻¹ | 4.20 × 10⁻¹ | 2.61 × 10⁻¹ | 4.49 × 10⁻¹ | 2.37 × 10⁻¹ | 1.75 × 10⁻¹ | 4.20 × 10⁻¹ | 2.77 × 10⁻¹ | 1.87 × 10⁻¹ |
| | Max | 5.00 | 4.01 | 3.32 | 3.29 | 3.23 | 3.39 | 3.45 | 4.99 | 3.07 | 2.24 |
| | Min | 1.97 | 2.26 | 1.83 | 1.93 | 1.72 | 2.58 | 2.73 | 2.92 | 2.09 | 1.55 |
| | Compare/Rank | −/8 | −/9 | −/5 | −/4 | ≈/1 | −/6 | −/7 | −/10 | −/3 | \/1 |
| −/≈/+ | | 14/1/0 | 12/1/2 | 14/1/0 | 14/1/0 | 4/3/8 | 14/1/0 | 9/4/2 | 13/0/2 | 11/3/1 | \ |
| Avg-Rank | | 5.67 | 6.47 | 5.87 | 6.20 | 2.60 | 5.93 | 3.80 | 8.47 | 4.73 | 2.00 |

| F | PSO | PSOcf | TLBO | DE | JADE | CoDE | jDE | CMA-ES | CPDE | MCPDE | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| f6 | Fmean | 90.4 | 339 | 36.6 | 8.88 | 8.80 × 10−1 | 13.5 | 18.1 | 4.40 | 8.05 | 1.18 × 10−7 |

| SD | 41.2 | 252 | 28.2 | 6.12 | 4.82 | 2.51 | 8.43 × 10−1 | 10 | 4.39 | 1.72 × 10−7 | |

| Max | 150 | 1.03 × 103 | 80.1 | 26.4 | 26.4 | 26.4 | 20.5 | 26.4 | 26.4 | 5.58 × 10−7 | |

| Min | 6.90 | 50.4 | 3.35 | 4.18 × 10−2 | 0 | 11.9 | 16.4 | 1.13 × 10−13 | 5.63 | 1.34 × 10−10 | |

| Compare/Rank | −/9 | −/10 | −/8 | −/5 | −/2 | −/6 | −/7 | −/3 | −/4 | \/1 | |

| f7 | Fmean | 45.9 | 197 | 49.2 | 2.40 × 10−1 | 2.68 | 40.4 | 5.90 | 12.1 | 3.30 × 10−1 | 1.07 × 10−3 |

| SD | 18.4 | 61.5 | 18 | 5.52 × 10−1 | 2.58 | 6.67 | 5.11 | 6.38 | 5.47 × 10−1 | 7.68 × 10−4 | |

| Max | 97 | 324 | 84.9 | 2.84 | 12.4 | 55 | 17.7 | 27.9 | 2.20 | 2.99 × 10−3 | |

| Min | 17.7 | 80.2 | 25.7 | 2.39 × 10−3 | 1.09 × 10−1 | 30.3 | 4.15 × 10−1 | 1.85 | 6.64 × 10−3 | 5.42 × 10−5 | |

| Compare/Rank | −/8 | −/10 | −/9 | −/2 | −/4 | −/7 | −/5 | −/6 | −/3 | \/1 | |

| f8 | Fmean | 20.9 | 20.9 | 20.9 | 20.9 | 20.9 | 20.9 | 20.9 | 21.4 | 20.9 | 20.9 |

| SD | 5.97 × 10−2 | 6.20 × 10−2 | 4.74 × 10−2 | 4.61 × 10−2 | 1.13 × 10−1 | 5.74 × 10−2 | 5.75 × 10−2 | 8.31 × 10−2 | 5.46 × 10−2 | 5.16 × 10−2 | |

| Max | 21 | 21 | 21 | 21 | 21 | 21 | 21 | 21.6 | 21 | 20.9 | |

| Min | 20.7 | 20.6 | 20.8 | 20.8 | 20.4 | 20.7 | 20.8 | 21.2 | 20.8 | 20.7 | |

| Compare/Rank | ≈/1 | ≈/1 | ≈/1 | ≈/1 | ≈/1 | ≈/1 | ≈/1 | −/10 | ≈/1 | \/1 | |

| f9 | Fmean | 22.8 | 25.2 | 26.8 | 32 | 26.8 | 32.5 | 29.2 | 41 | 6.48 | 22.9 |

| SD | 3.85 | 4.00 | 4.37 | 11.1 | 1.75 | 1.46 | 1.83 | 10.1 | 2.28 | 3.96 | |

| Max | 2.90 × 10 | 32.8 | 37.2 | 40.1 | 29.7 | 34.2 | 34 | 54.9 | 11.2 | 28 | |

| Min | 15.4 | 18.5 | 16.6 | 9.49 | 23.5 | 29.1 | 25.2 | 19.8 | 2.86 | 15 | |

| Compare/Rank | ≈/2 | ≈/2 | −/6 | −/8 | −/5 | −/9 | −/7 | −/10 | −/1 | \/2 | |

| f10 | Fmean | 1.61 × 10−1 | 1.01 × 103 | 1.20 × 10−1 | 7.88 × 10−3 | 4.54 × 10−2 | 2.46 × 10−1 | 3.80 × 10−2 | 1.78 × 10−2 | 6.53 × 10−3 | 0 |

| SD | 9.72 × 10−2 | 6.21 × 102 | 7.75 × 10−2 | 6.85 × 10−3 | 2.61 × 10−2 | 1.76 × 10−1 | 2.03 × 10−2 | 1.29 × 10−2 | 4.81 × 10−3 | 0 | |

| Max | 4.60 × 10−1 | 2.44 × 103 | 3.40 × 10−1 | 2.95 × 10−2 | 1.03 × 10−1 | 5.98 × 10−1 | 8.86 × 10−2 | 5.66 × 10−2 | 1.47 × 10−2 | 0 | |

| Min | 2.46 × 10−2 | 2.13 × 102 | 2.21 × 10−2 | 0 | 0 | 2.79 × 10−2 | 7.39 × 10−3 | 5.68 × 10−14 | 5.68 × 10−14 | 0 | |

| Compare/Rank | −/8 | −/10 | −/7 | −/3 | −/6 | −/9 | −/5 | −/4 | −/2 | \/1 | |

| f11 | Fmean | 33.9 | 151 | 105 | 129 | 0 | 25.1 | 0 | 109 | 71 | 2.77 |

| SD | 8.52 | 44.1 | 26.6 | 25.8 | 0 | 2.15 | 0 | 337 | 13.4 | 1.69 | |

| Max | 58.7 | 261 | 190 | 176 | 0 | 28.8 | 0 | 1.89 × 103 | 104 | 7.59 | |

| Min | 18.9 | 74.7 | 67.6 | 73 | 0 | 19 | 0 | 26.8 | 48.4 | 5.68 × 10-14 | |

| Compare/Rank | −/5 | −/10 | −/7 | −/9 | +/1 | −/4 | +/1 | −/8 | −/6 | \/3 | |

| f12 | Fmean | 88.2 | 201 | 92.1 | 180 | 22.9 | 165 | 59.6 | 484 | 173 | 80.1 |

| SD | 37.7 | 91.2 | 24.1 | 9.38 | 3.34 | 12.1 | 8.38 | 828 | 7.87 | 22.2 | |

| Max | 227 | 421 | 147 | 196 | 29.8 | 190 | 70.6 | 2.65 × 103 | 191 | 113 | |

| Min | 41.7 | 78.3 | 43.7 | 156 | 25.7 | 141 | 34.3 | 25.8 | 161 | 44.1 | |

| Compare/Rank | ≈/3 | −/9 | ≈/3 | −/8 | +/1 | −/6 | +/2 | −/10 | −/7 | \/3 | |

| f13 | Fmean | 140 | 255 | 156 | 180 | 50.8 | 175 | 89.5 | 1.44 × 103 | 173 | 117 |

| SD | 32.8 | 50.4 | 32.2 | 11.3 | 13.5 | 14.7 | 18.3 | 1.41 × 103 | 8.86 | 21.9 | |

| Max | 186 | 378 | 224 | 198 | 76.5 | 201 | 131 | 5.06 × 103 | 188 | 140 | |

| Min | 83.4 | 179 | 76.7 | 146 | 17.9 | 129 | 58.8 | 79.3 | 155 | 65.7 | |

| Compare/Rank | −/4 | −/9 | −/5 | −/8 | +/1 | −/7 | +/2 | −/10 | −/6 | \/3 | |

| f14 | Fmean | 1.22 × 103 | 2.65 × 103 | 5.64 × 103 | 6.08 × 103 | 3.12 × 10−2 | 1.39 × 103 | 8.13 × 10−1 | 5.27 × 103 | 3.36 × 103 | 292 |

| SD | 317 | 656 | 1.21 × 103 | 549 | 2.49 × 10−2 | 154 | 2.11 | 690 | 644 | 118 | |

| Max | 1.84 × 103 | 3.79 × 103 | 7.11 × 103 | 6.87 × 103 | 1.04 × 10−1 | 1.70 × 103 | 8.89 | 7.44 × 103 | 4.43 × 103 | 592 | |

| Min | 634 | 1.56 × 103 | 1.71 × 103 | 4.43 × 103 | 1.81 × 10−12 | 1.08 × 103 | 5.07 × 10−9 | 4.07 × 103 | 1.94 × 103 | 91.8 | |

| Compare/Rank | +/4 | −/6 | −/9 | −/10 | +/1 | +/5 | +/2 | −/8 | −/7 | \/3 | |

| f15 | Fmean | 6.19 × 10^3 | 4.34 × 10^3 | 7.07 × 10^3 | 7.12 × 10^3 | 3.20 × 10^3 | 6.92 × 10^3 | 5.60 × 10^3 | 5.16 × 10^3 | 7.04 × 10^3 | 6.66 × 10^3 |

| SD | 1.25 × 10^3 | 784 | 331 | 216 | 347 | 369 | 392 | 798 | 288 | 391 | |

| Max | 7.92 × 10^3 | 6.65 × 10^3 | 7.62 × 10^3 | 7.47 × 10^3 | 3.70 × 10^3 | 7.43 × 10^3 | 6.57 × 10^3 | 6.69 × 10^3 | 7.37 × 10^3 | 7.19 × 10^3 | |

| Min | 3.37 × 10^3 | 3.07 × 10^3 | 6.18 × 10^3 | 6.67 × 10^3 | 2.37 × 10^3 | 6.13 × 10^3 | 4.39 × 10^3 | 3.79 × 10^3 | 6.37 × 10^3 | 6.08 × 10^3 | |

| Compare/Rank | ≈/5 | +/2 | −/9 | −/10 | +/1 | −/7 | +/4 | +/3 | −/8 | \/5 | |

| f16 | Fmean | 2.53 | 1.87 | 2.41 | 2.52 | 2.00 | 2.36 | 2.48 | 8.14 × 10^−2 | 2.50 | 1.92 |

| SD | 4.26 × 10^−1 | 4.64 × 10^−1 | 2.88 × 10^−1 | 3.76 × 10^−1 | 7.07 × 10^−1 | 2.49 × 10^−1 | 1.69 × 10^−1 | 5.62 × 10^−2 | 2.94 × 10^−1 | 1.69 × 10^−1 | |

| Max | 3.34 | 2.66 | 2.92 | 3.07 | 2.96 | 2.90 | 2.76 | 2.85 × 10^−1 | 3.01 | 2.06 | |

| Min | 1.46 | 7.24 × 10^−1 | 1.64 | 1.32 | 5.95 × 10^−1 | 1.78 | 2.13 | 1.99 × 10^−2 | 1.54 | 1.23 | |

| Compare/Rank | −/10 | ≈/2 | −/6 | −/9 | ≈/2 | −/5 | −/7 | +/1 | −/8 | \/2 | |

| f17 | Fmean | 74.6 | 142 | 106 | 180 | 30.4 | 65.1 | 30.4 | 3.88 × 10^3 | 182 | 37.5 |

| SD | 19 | 88.5 | 27.1 | 16.3 | 1.05 × 10^−14 | 3.62 | 1.70 × 10^−6 | 665 | 18.4 | 2.55 | |

| Max | 105 | 352 | 173 | 211 | 30.4 | 71.2 | 30.4 | 5.00 × 10^3 | 213 | 42.3 | |

| Min | 35.7 | 58.9 | 69.8 | 151 | 30.4 | 56.7 | 30.4 | 2.54 × 10^3 | 146 | 33.6 | |

| Compare/Rank | −/5 | −/7 | −/6 | −/8 | +/1 | −/4 | +/1 | −/10 | −/9 | \/3 | |

| f18 | Fmean | 207 | 156 | 220 | 212 | 78.3 | 230 | 161 | 4.08 × 10^3 | 206 | 187 |

| SD | 30.2 | 51.7 | 15.5 | 9.55 | 6.43 | 9.76 | 16 | 911 | 10 | 10.1 | |

| Max | 268 | 252 | 253 | 229 | 94.8 | 248 | 187 | 5.97 × 10^3 | 223 | 199 | |

| Min | 140 | 81.9 | 182 | 193 | 65.5 | 211 | 133 | 1.76 × 10^3 | 178 | 155 | |

| Compare/Rank | −/6 | +/2 | −/8 | −/7 | +/1 | −/9 | +/3 | −/10 | −/5 | \/4 | |

| f19 | Fmean | 4.43 | 1.83 × 10^3 | 12.4 | 15 | 1.44 | 8.25 | 1.63 | 3.43 | 14.6 | 2.55 |

| SD | 1.19 | 3.59 × 10^3 | 5.75 | 8.57 × 10^−1 | 1.18 × 10^−1 | 8.60 × 10^−1 | 1.48 × 10^−1 | 8.32 × 10^−1 | 1.15 | 4.83 × 10^−1 | |

| Max | 6.69 | 1.62 × 10^4 | 26.2 | 16.5 | 1.70 | 9.60 | 1.87 | 5.24 | 16.2 | 3.26 | |

| Min | 2.31 | 5.85 | 5.00 | 13.1 | 1.11 | 6.46 | 1.31 | 1.66 | 12.4 | 1.53 | |

| Compare/Rank | −/5 | −/10 | −/7 | −/9 | +/1 | −/6 | +/2 | −/4 | −/8 | \/3 | |

| f20 | Fmean | 15 | 14.5 | 12 | 12.2 | 10.3 | 12.5 | 12.6 | 12.7 | 12.2 | 11.7 |

| SD | 0 | 9.53 × 10^−1 | 3.30 × 10^−1 | 2.38 × 10^−1 | 6.17 × 10^−1 | 2.28 × 10^−1 | 3.51 × 10^−1 | 9.28 × 10^−1 | 3.22 × 10^−1 | 3.37 × 10^−1 | |

| Max | 15 | 15 | 12.6 | 12.6 | 11.9 | 13 | 13.3 | 14.3 | 12.7 | 12.1 | |

| Min | 15 | 11.5 | 11.4 | 11.6 | 9.05 | 12 | 12 | 10 | 11.3 | 10.6 | |

| Compare/Rank | −/10 | −/9 | −/3 | −/4 | +/1 | −/6 | −/7 | −/8 | −/5 | \/2 | |

| −/≈/+ | 11/4/0 | 10/3/2 | 13/2/0 | 14/1/0 | 4/2/9 | 14/1/0 | 6/1/8 | 13/0/2 | 13/1/1 | \ | |

| Avg-Rank | 5.67 | 6.60 | 6.27 | 6.73 | 1.93 | 6.07 | 3.73 | 7.00 | 5.33 | 2.47 | |

| F | | PSO | PSOcf | TLBO | DE | JADE | CoDE | jDE | CMA-ES | CPDE | MCPDE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| f21 | Fmean | 360 | 400 | 400 | 373 | 393 | 210 | 400 | 363 | 365 | 393 |
| | SD | 85.5 | 2.97 × 10^−13 | 1.58 × 10^−1 | 69.2 | 36.5 | 54.8 | 2.89 × 10^−13 | 96.4 | 87.5 | 36.5 |
| | Max | 400 | 400 | 400 | 400 | 400 | 400 | 400 | 400 | 400 | 400 |
| | Min | 100 | 400 | 399 | 200 | 200 | 100 | 400 | 100 | 100 | 200 |
| | Compare/Rank | ≈/2 | −/8 | −/8 | ≈/2 | ≈/2 | +/1 | −/8 | ≈/2 | ≈/2 | \/2 |
| f22 | Fmean | 295 | 433 | 366 | 1.04 × 10^3 | 4.07 | 232 | 56 | 2.31 × 10^3 | 336 | 27.7 |
| | SD | 130 | 219 | 307 | 188 | 4.93 | 47.8 | 18.4 | 475 | 156 | 18.1 |
| | Max | 531 | 876 | 1.24 × 10^3 | 1.36 × 10^3 | 17.3 | 318 | 93.4 | 3.11 × 10^3 | 68.5 | 88 |
| | Min | 69.6 | 33.9 | 41.4 | 491 | 4.42 × 10^−6 | 111 | 22.8 | 1.31 × 10^3 | 120 | 0 |
| | Compare/Rank | −/5 | −/8 | −/7 | −/9 | +/1 | −/4 | −/3 | −/10 | −/6 | \/2 |
| f23 | Fmean | 981 | 1.02 × 10^3 | 1.29 × 10^3 | 1.28 × 10^3 | 445 | 1.27 × 10^3 | 1.44 × 10^3 | 2.24 × 10^3 | 426 | 371 |
| | SD | 369 | 398 | 238 | 134 | 175 | 199 | 212 | 518 | 234 | 157 |
| | Max | 1.65 × 10^3 | 1.88 × 10^3 | 1.77 × 10^3 | 1.61 × 10^3 | 897 | 1.66 × 10^3 | 1.82 × 10^3 | 3.12 × 10^3 | 793 | 675 |
| | Min | 246 | 245 | 706 | 1.06 × 10^3 | 163 | 879 | 766 | 1.14 × 10^3 | 72.9 | 33.9 |
| | Compare/Rank | −/4 | −/5 | −/8 | −/7 | ≈/1 | −/6 | −/9 | −/10 | ≈/1 | \/1 |
| f24 | Fmean | 211 | 216 | 197 | 202 | 201 | 197 | 214 | 327 | 204 | 202 |
| | SD | 4.09 | 18.9 | 19.3 | 16.6 | 6.82 | 28.9 | 11.2 | 149 | 3.09 | 3.31 |
| | Max | 218 | 228 | 219 | 209 | 211 | 215 | 222 | 758 | 209 | 208 |
| | Min | 200 | 119 | 148 | 115 | 168 | 133 | 160 | 107 | 200 | 200 |
| | Compare/Rank | −/7 | −/9 | ≈/2 | −/5 | ≈/2 | +/1 | −/8 | −/10 | −/6 | \/2 |
| f25 | Fmean | 211 | 218 | 204 | 202 | 200 | 207 | 218 | 247 | 201 | 200 |
| | SD | 5.12 | 4.26 | 3.65 | 2.96 | 8.77 | 11.2 | 2.10 | 50.5 | 2.27 | 1.15 |
| | Max | 223 | 227 | 212 | 212 | 209 | 213 | 222 | 350 | 204 | 204 |
| | Min | 201 | 210 | 200 | 200 | 155 | 148 | 213 | 200 | 200 | 200 |
| | Compare/Rank | −/7 | −/8 | −/5 | −/4 | −/2 | −/6 | −/8 | −/10 | −/3 | \/1 |
| f26 | Fmean | 206 | 188 | 151 | 150 | 141 | 136 | 188 | 247 | 158 | 105 |
| | SD | 75.8 | 61.8 | 34.5 | 36.1 | 45.3 | 4.20 | 29.2 | 1202 | 42.8 | 2.17 |
| | Max | 321 | 321 | 200 | 200 | 200 | 146 | 200 | 618 | 200 | 109 |
| | Min | 110 | 105 | 103 | 105 | 102 | 126 | 106 | 40.1 | 104 | 100 |
| | Compare/Rank | −/9 | −/7 | −/5 | −/4 | −/3 | −/2 | −/7 | −/10 | −/6 | \/1 |
| f27 | Fmean | 506 | 562 | 359 | 323 | 300 | 344 | 480 | 360 | 315 | 300 |
| | SD | 104 | 72.5 | 82.7 | 61.3 | 4.88 × 10^−1 | 30.8 | 18.4 | 62.8 | 48.8 | 0 |
| | Max | 635 | 652 | 534 | 481 | 302 | 440 | 512 | 520 | 481 | 300 |
| | Min | 300 | 400 | 300 | 300 | 300 | 316 | 435 | 300 | 300 | 300 |
| | Compare/Rank | −/9 | −/10 | −/6 | −/4 | −/2 | −/5 | −/8 | −/7 | −/3 | \/1 |
| f28 | Fmean | 320 | 403 | 308 | 246 | 293 | 193 | 286 | 1.00 × 10^3 | 270 | 300 |
| | SD | 80.6 | 163 | 90.2 | 89.9 | 36.5 | 101 | 50.7 | 1.07 × 10^3 | 73.2 | 0 |
| | Max | 664 | 756 | 579 | 300 | 300 | 300 | 300 | 4.00 × 10^3 | 300 | 300 |
| | Min | 300 | 300 | 100 | 100 | 100 | 100 | 100 | 300 | 100 | 300 |
| | Compare/Rank | −/8 | −/9 | −/7 | ≈/1 | ≈/1 | ≈/1 | ≈/1 | −/10 | ≈/1 | \/1 |
| | −/≈/+ | 7/0/1 | 8/0/0 | 7/1/0 | 6/2/0 | 3/4/1 | 5/1/2 | 7/1/0 | 7/1/0 | 5/3/0 | \ |
| | Avg-Rank | 6.38 | 8.00 | 6.00 | 4.50 | 1.75 | 3.25 | 6.50 | 8.63 | 3.50 | 1.38 |
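Judging from the numbers, the two summary rows are simple tallies over the Compare/Rank entries: Avg-Rank is the arithmetic mean of an algorithm's per-function ranks (for example, JADE's ranks on f21–f28 are 2, 1, 1, 2, 2, 3, 2, 1, which average 14/8 = 1.75), and −/≈/+ counts how often the algorithm is marked worse than, similar to, or better than MCPDE. The short Python sketch below recomputes both rows for three columns of the f21–f28 table above. The helper `summarize` is hypothetical and written only for this illustration; it reproduces the rank arithmetic, not the statistical test that assigns the marks.

```python
# Recompute the summary rows (−/≈/+ and Avg-Rank) from Compare/Rank entries.
# Entries are copied from the f21–f28 table above; each is "<mark>/<rank>".
# Marks compare an algorithm with MCPDE: "−" (U+2212) worse, "≈" similar,
# "+" better. MCPDE is the reference column and carries "\" instead of a mark.
compare_rank = {
    "JADE": ["≈/2", "+/1", "≈/1", "≈/2", "−/2", "−/3", "−/2", "≈/1"],
    "CoDE": ["+/1", "−/4", "−/6", "+/1", "−/6", "−/2", "−/5", "≈/1"],
    "MCPDE": ["\\/2", "\\/2", "\\/1", "\\/2", "\\/1", "\\/1", "\\/1", "\\/1"],
}


def summarize(entries):
    """Return ((worse, similar, better), average rank) for one algorithm.

    Hypothetical helper for illustration only; not from the paper.
    """
    marks, ranks = [], []
    for entry in entries:
        mark, rank = entry.split("/")
        marks.append(mark)
        ranks.append(int(rank))
    # Count each comparison outcome in the table's −/≈/+ order.
    tally = (marks.count("−"), marks.count("≈"), marks.count("+"))
    return tally, sum(ranks) / len(ranks)


for name, entries in compare_rank.items():
    (worse, similar, better), avg_rank = summarize(entries)
    print(f"{name}: {worse}/{similar}/{better}, Avg-Rank {avg_rank:.2f}")
```

Running it prints 3/4/1 with Avg-Rank 1.75 for JADE and 5/1/2 with Avg-Rank 3.25 for CoDE, matching the table. MCPDE's tally is 0/0/0 because its column carries no marks, and its mean rank of 11/8 = 1.375 appears in the table as 1.38.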

| F | | PSO | PSOcf | TLBO | DE | JADE | CoDE | jDE | CMA-ES | CPDE | MCPDE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| f21 | Fmean | 290 | 774 | 318 | 302 | 305 | 330 | 295 | 316 | 269 | 256 |
| | SD | 83.1 | 343 | 70.2 | 83.7 | 64.7 | 101 | 72.3 | 94.2 | 76.9 | 50.4 |
| | Max | 443 | 1.89 × 10^3 | 443 | 443 | 443 | 443 | 443 | 443 | 443 | 300 |
| | Min | 200 | 200 | 200 | 200 | 200 | 200 | 200 | 200 | 200 | 200 |
| | Compare/Rank | −/3 | −/10 | −/8 | −/5 | −/6 | −/9 | −/4 | −/7 | −/2 | \/1 |
| f22 | Fmean | 1.30 × 10^3 | 2.60 × 10^3 | 1.92 × 10^3 | 6.13 × 10^3 | 93.5 | 2.21 × 10^3 | 232 | 7.08 × 10^3 | 3.56 × 10^3 | 353 |
| | SD | 405 | 627 | 1.18 × 10^3 | 727 | 30.6 | 268 | 43 | 868 | 760 | 118 |
| | Max | 2.36 × 10^3 | 3.74 × 10^3 | 6.04 × 10^3 | 7.18 × 10^3 | 122 | 2.64 × 10^3 | 314 | 8.45 × 10^3 | 4.58 × 10^3 | 678 |
| | Min | 754 | 1.48 × 10^3 | 609 | 4.69 × 10^3 | 15.3 | 1.66 × 10^3 | 160 | 4.66 × 10^3 | 1.73 × 10^3 | 167 |
| | Compare/Rank | −/4 | −/7 | −/5 | −/9 | +/1 | −/6 | +/2 | −/10 | −/8 | \/3 |
| f23 | Fmean | 6.19 × 10^3 | 4.76 × 10^3 | 7.06 × 10^3 | 7.18 × 10^3 | 3.53 × 10^3 | 7.24 × 10^3 | 6.18 × 10^3 | 7.07 × 10^3 | 7.15 × 10^3 | 5.96 × 10^3 |
| | SD | 1.26 × 10^3 | 999 | 315 | 203 | 325 | 223 | 418 | 634 | 381 | 483 |
| | Max | 7.77 × 10^3 | 7.07 × 10^3 | 7.57 × 10^3 | 7.66 × 10^3 | 4.13 × 10^3 | 7.65 × 10^3 | 7.52 × 10^3 | 8.18 × 10^3 | 7.76 × 10^3 | 6.68 × 10^3 |
| | Min | 2.99 × 10^3 | 3.01 × 10^3 | 6.44 × 10^3 | 6.78 × 10^3 | 2.73 × 10^3 | 6.70 × 10^3 | 5.49 × 10^3 | 5.51 × 10^3 | 5.98 × 10^3 | 4.99 × 10^3 |
| | Compare/Rank | ≈/3 | +/2 | −/6 | −/9 | +/1 | −/10 | ≈/3 | −/7 | −/8 | \/3 |
| f24 | Fmean | 272 | 288 | 261 | 200 | 208 | 237 | 284 | 909 | 200 | 200 |
| | SD | 10.5 | 10 | 7.89 | 3.25 | 7.42 | 6.97 | 4.30 | 687 | 2.72 × 10^−1 | 6.02 × 10^−3 |
| | Max | 296 | 303 | 278 | 217 | 228 | 252 | 291 | 2.23 × 10^3 | 201 | 200 |
| | Min | 255 | 271 | 242 | 200 | 200 | 222 | 275 | 213 | 200 | 200 |
| | Compare/Rank | −/7 | −/9 | −/6 | −/3 | −/4 | −/5 | −/8 | −/10 | −/2 | \/1 |
| f25 | Fmean | 291 | 296 | 284 | 238 | 271 | 294 | 290 | 254 | 238 | 235 |
| | SD | 10 | 9.61 | 9.88 | 4.99 | 15.1 | 5.42 | 5.21 | 27.7 | 4.12 | 2.57 |
| | Max | 315 | 316 | 305 | 247 | 289 | 303 | 297 | 387 | 244 | 238 |
| | Min | 272 | 278 | 266 | 228 | 239 | 279 | 277 | 201 | 230 | 229 |
| | Compare/Rank | −/8 | −/10 | −/6 | −/2 | −/5 | −/9 | −/7 | −/4 | −/2 | \/1 |
| f26 | Fmean | 333 | 312 | 219 | 20.7 | 226 | 200 | 260 | 574 | 211 | 200 |
| | SD | 61 | 84.2 | 50 | 27.5 | 54.2 | 5.20 × 10^−3 | 86.9 | 504 | 35.1 | 2.26 × 10^−4 |
| | Max | 373 | 391 | 352 | 316 | 345 | 200 | 389 | 1.87 × 10^3 | 317 | 200 |
| | Min | 200 | 200 | 200 | 200 | 200 | 200 | 200 | 132 | 200 | 200 |
| | Compare/Rank | −/9 | −/8 | −/5 | −/3 | −/6 | −/2 | −/7 | −/10 | −/4 | \/1 |
| f27 | Fmean | 956 | 1.04 × 10^3 | 820 | 363 | 691 | 962 | 1.11 × 10^3 | 555 | 416 | 300 |
| | SD | 90.5 | 75.9 | 85.5 × 10 | 85.4 | 228 | 153 | 32.7 | 123 | 109 | 1.19 × 10^−1 |
| | Max | 1.10 × 10^3 | 1.20 × 10^3 | 961 | 513 | 1.00 × 10^3 | 1.17 × 10^3 | 1.17 × 10^3 | 799 | 617 | 300 |
| | Min | 775 | 861 | 660 | 300 | 309 | 659 | 1.04 × 10^3 | 387 | 300 | 300 |
| | Compare/Rank | −/7 | −/9 | −/6 | −/2 | −/5 | −/8 | −/10 | −/4 | −/3 | \/1 |
| f28 | Fmean | 385 | 2.13 × 10^3 | 514 | 300 | 300 | 300 | 300 | 300 | 300 | 300 |
| | SD | 325 | 258 | 639 | 2.27 × 10^−13 | 0 | 6.78 × 10^−3 | 0 | 3.75 × 10^3 | 1.84 × 10^−9 | 2.65 × 10^−13 |
| | Max | 1.63 × 10^3 | 2.84 × 10^3 | 2.69 × 10^3 | 300 | 300 | 300 | 300 | 1.34 × 10^4 | 300 | 300 |
| | Min | 300 | 1.67 × 10^3 | 100 | 300 | 300 | 300 | 300 | 100 | 300 | 300 |
| | Compare/Rank | −/7 | −/10 | −/8 | ≈/1 | ≈/1 | −/6 | ≈/1 | −/9 | ≈/1 | \/1 |
| | −/≈/+ | 7/1/0 | 7/0/1 | 8/0/0 | 7/1/0 | 5/1/2 | 8/0/0 | 5/2/1 | 8/0/0 | 7/1/0 | \ |
| | Avg-Rank | 6.00 | 8.13 | 6.25 | 4.25 | 3.63 | 6.88 | 5.25 | 7.63 | 3.75 | 1.50 |

| D | | PSO | PSOcf | TLBO | DE | JADE | CoDE | jDE | CMA-ES | CPDE | MCPDE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 10 | −/≈/+ | 26/2/0 | 25/1/2 | 26/2/0 | 23/5/0 | 9/9/10 | 24/2/2 | 19/7/2 | 22/3/3 | 18/9/1 | \ |
| | Avg-Rank | 6.39 | 7.43 | 6.21 | 5.07 | 2.46 | 5.50 | 4.50 | 7.46 | 4.00 | 1.71 |
| 30 | −/≈/+ | 23/5/0 | 22/3/3 | 26/2/0 | 24/4/0 | 12/5/11 | 27/1/0 | 14/5/9 | 24/0/4 | 24/3/1 | \ |
| | Avg-Rank | 6.14 | 7.54 | 6.61 | 5.43 | 2.79 | 6.39 | 4.07 | 6.61 | 4.64 | 2.00 |

© 2016 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, W. A Modified Cloud Particles Differential Evolution Algorithm for Real-Parameter Optimization. Algorithms 2016, 9, 78. https://doi.org/10.3390/a9040078
