Fast Automatic Airport Detection in Remote Sensing Images Using Convolutional Neural Networks

Abstract

1. Introduction

2. Methodology

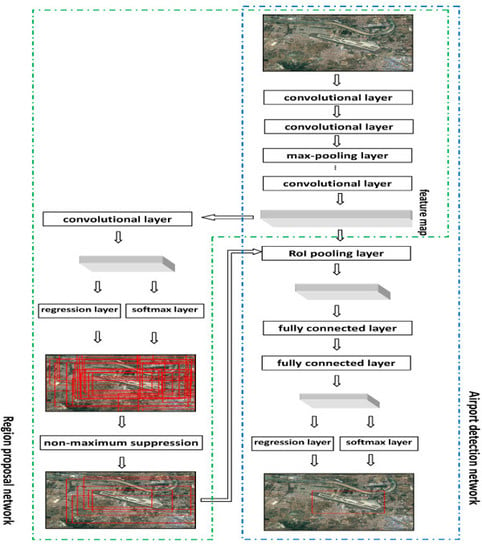

2.1. Convolutional Neural Network for Airport Detection

2.2. Region Proposal Network

2.3. Fine-Tuning and Augmentation

2.4. Improvement Techniques

3. Results

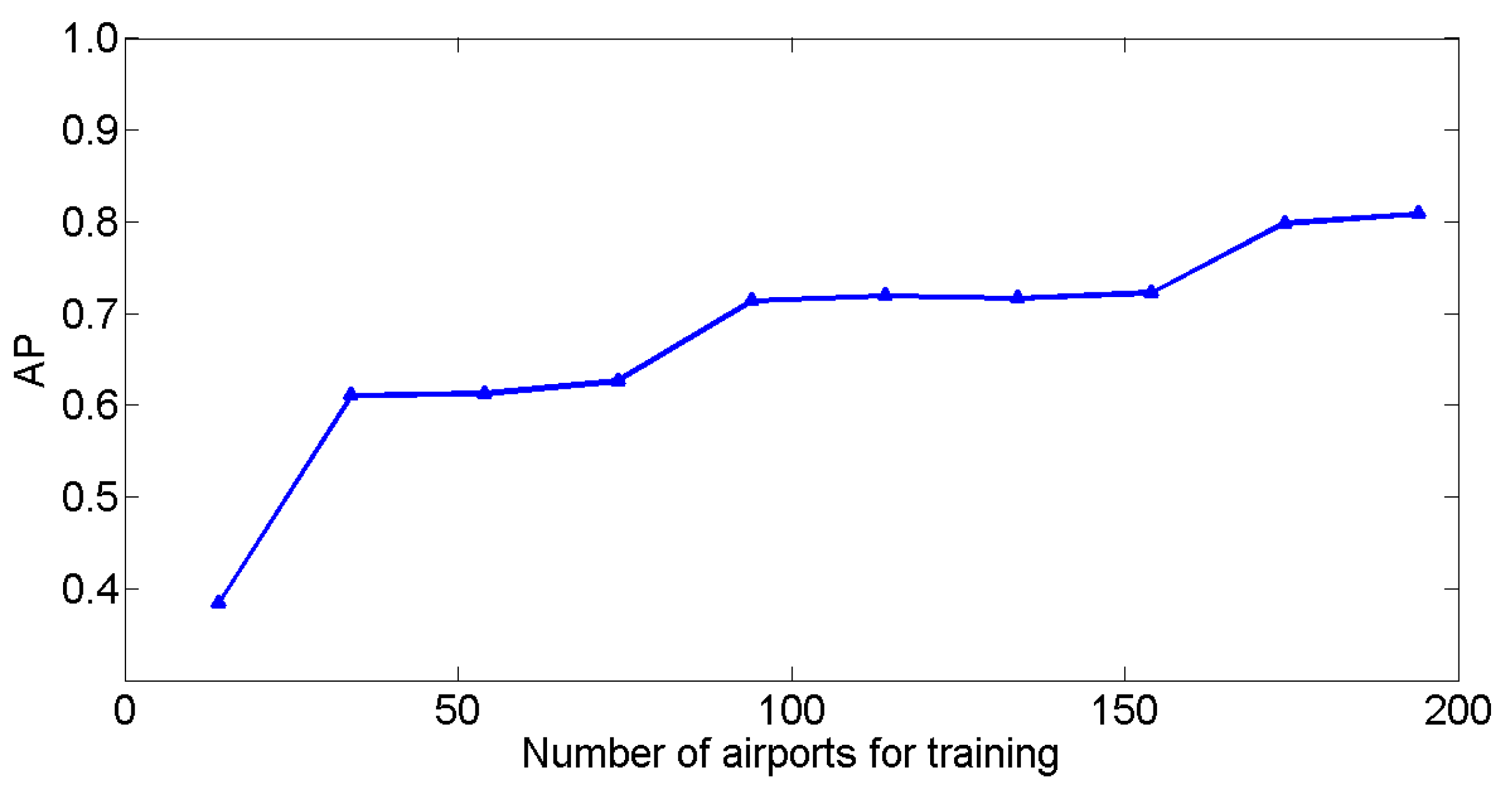

3.1. Dataset and Computational Platform

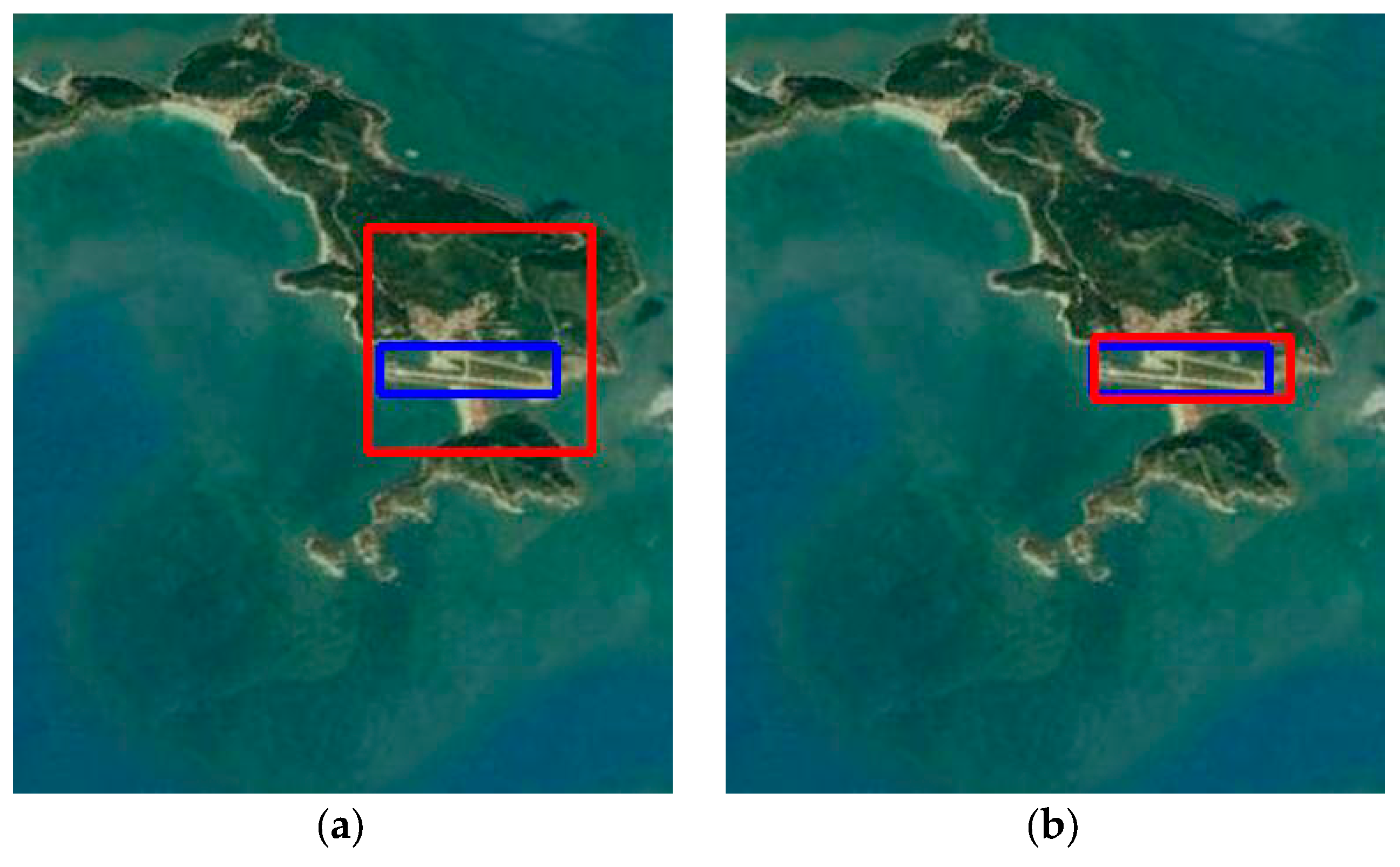

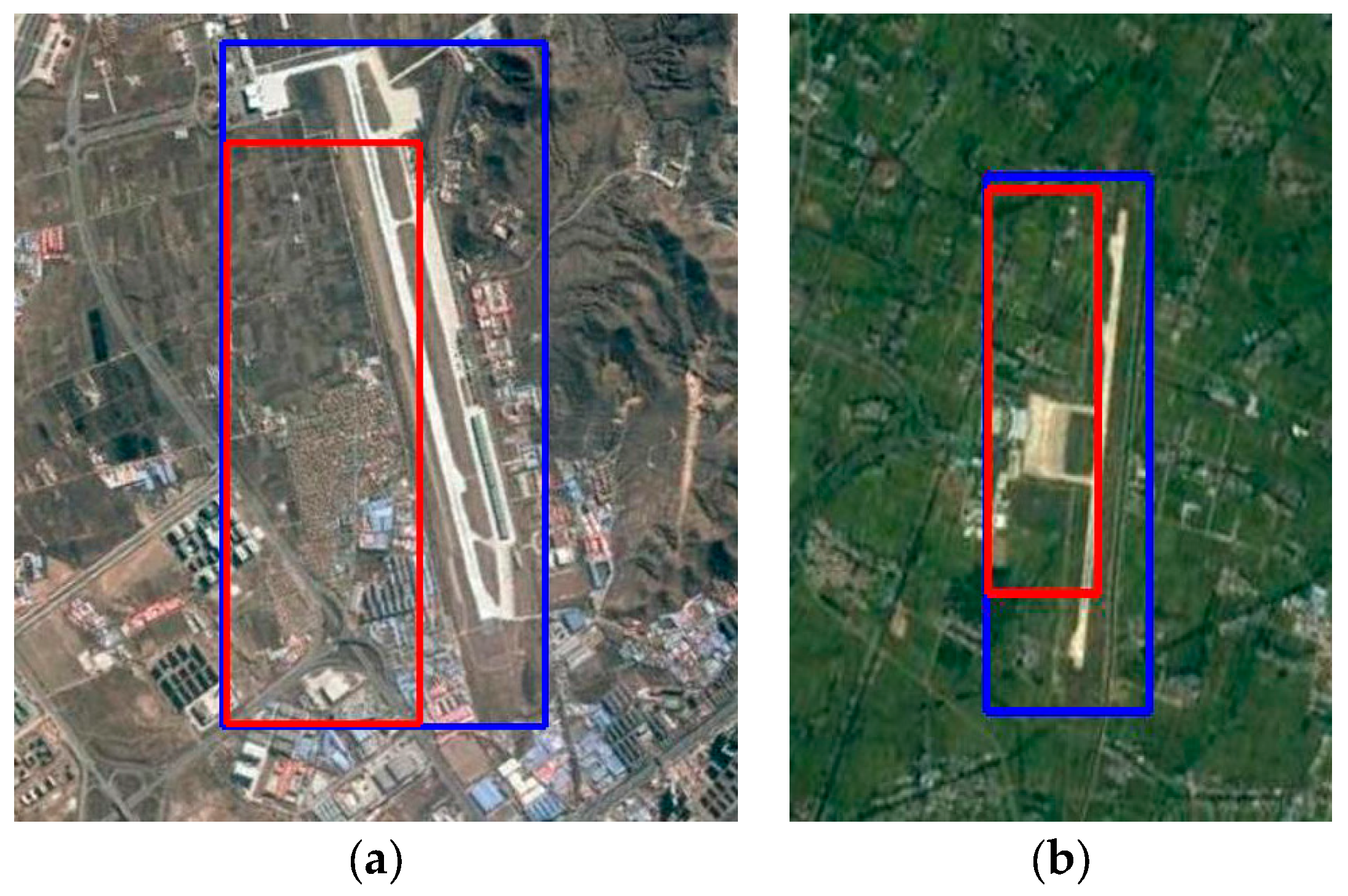

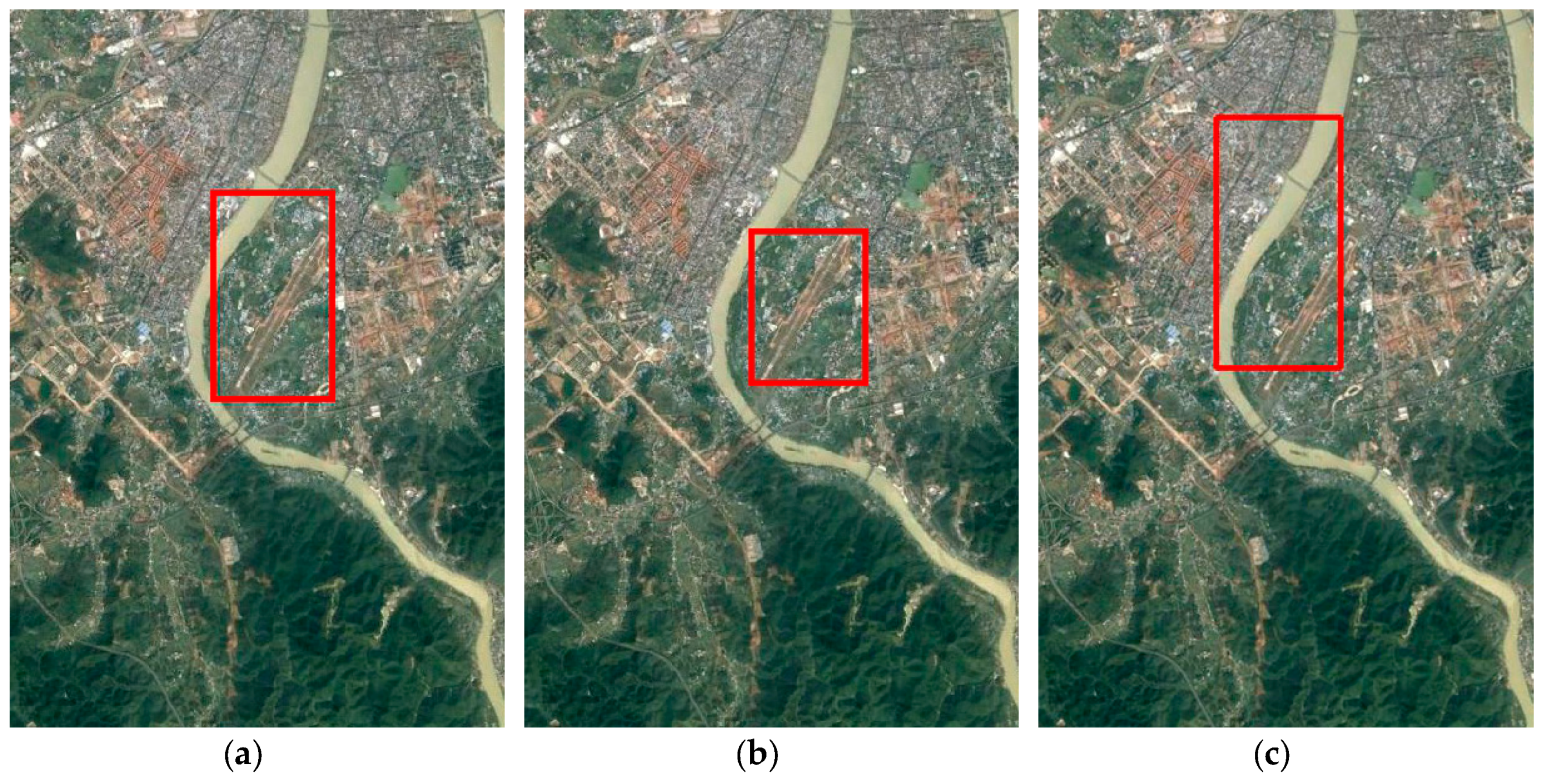

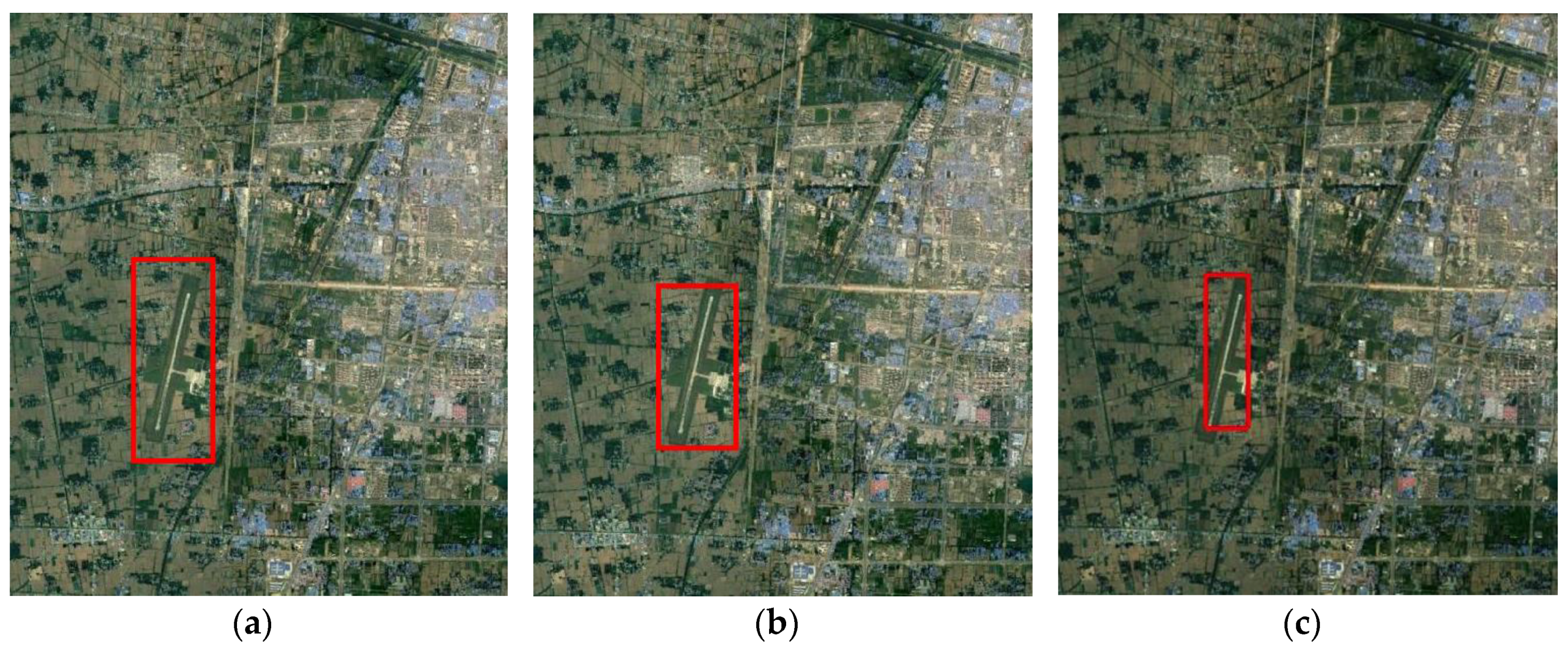

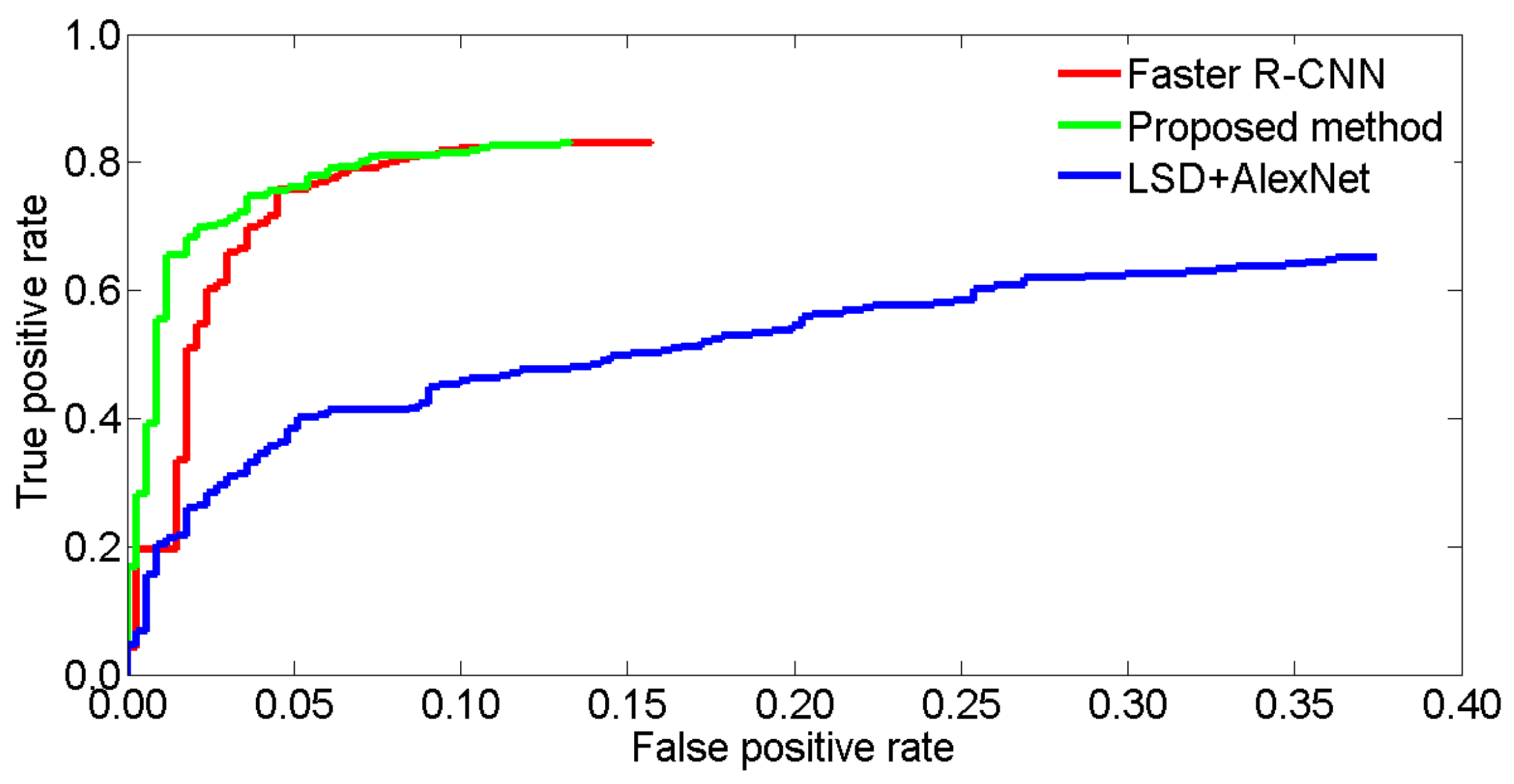

3.2. Results on Test Images

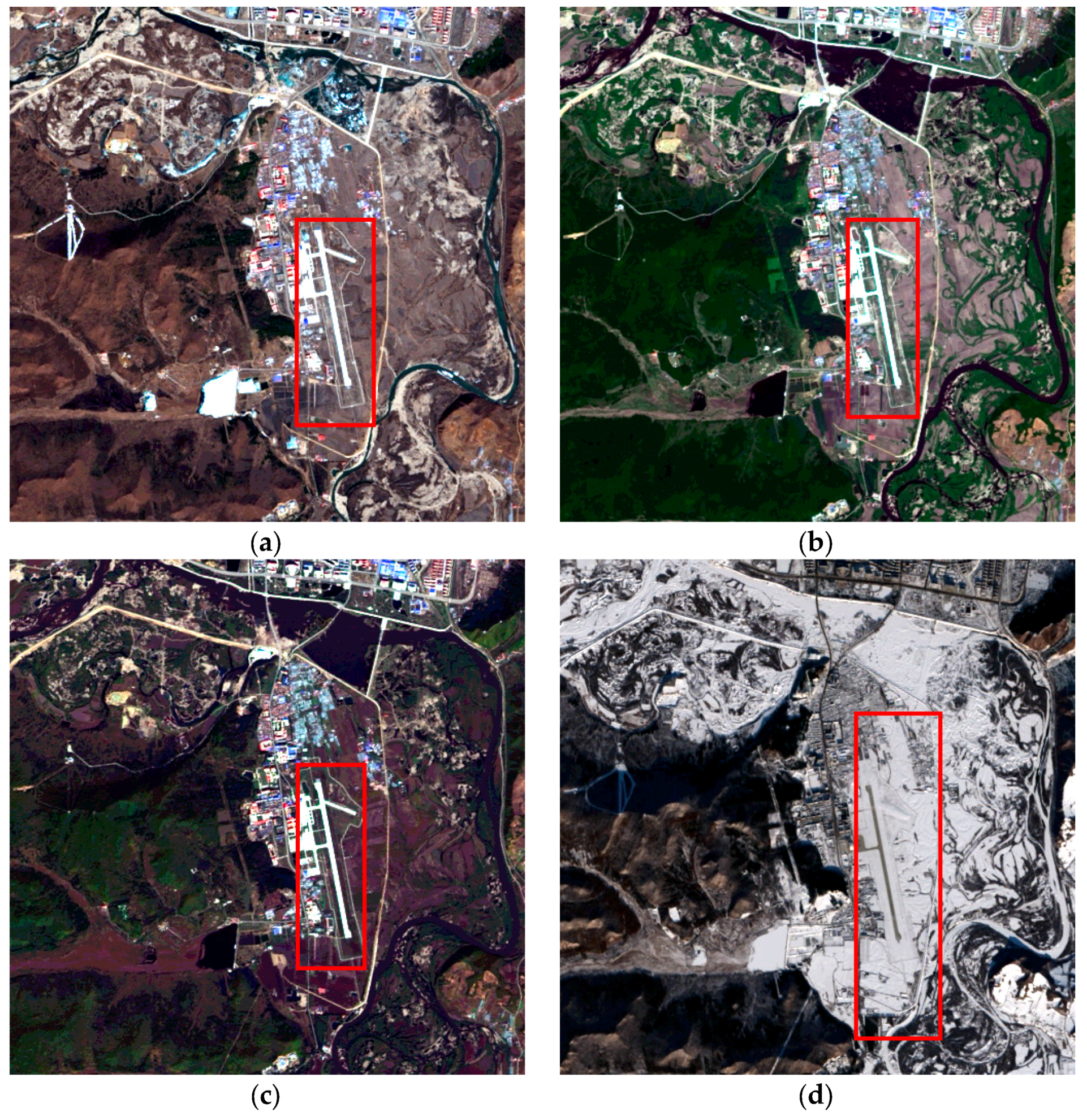

3.3. Results on Landsat 8 Images

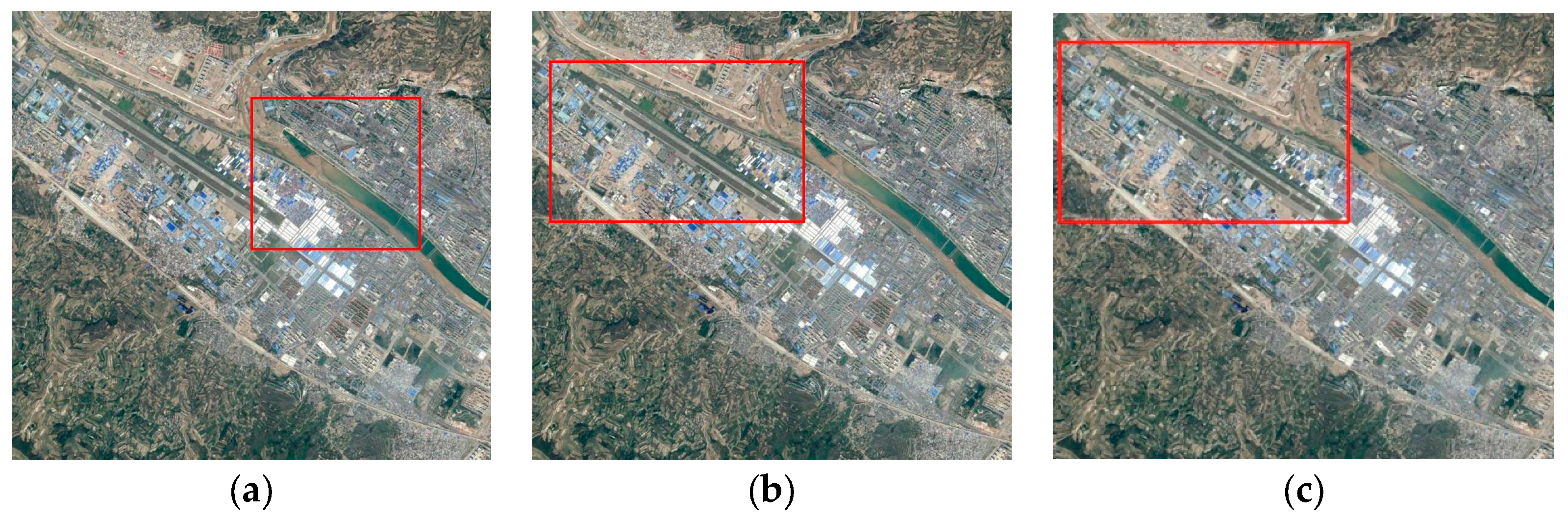

3.4. Results on a Gaofen-1 Scene

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Algorithm | Average IoU | AP | Recall | Precision | Accuracy | FAR |
|---|---|---|---|---|---|---|
| Faster R-CNN | 0.664 | 0.794 | 0.838 | 0.778 | 0.835 | 0.090 |
| Faster R-CNN + T1 | 0.708 | 0.795 | 0.833 | 0.826 | 0.854 | 0.069 |
| Faster R-CNN + T1 + T2 | 0.715 | 0.800 | 0.837 | 0.841 | 0.859 | 0.066 |
| LSD + AlexNet | 0.366 | 0.557 | 0.655 | 0.597 | 0.637 | 0.203 |
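
For readers unfamiliar with the IoU, Recall, and Precision columns in the table above, the following is a minimal sketch of how such quantities are commonly computed for bounding-box detectors. It is not taken from the paper: the box format `(x_min, y_min, x_max, y_max)`, the function names `iou` and `precision_recall`, and the handling of empty cases are all assumptions made for illustration. The definitions used for Accuracy and FAR are not stated in this excerpt, so they are left out.

```python
from typing import Tuple

# Assumed box format (hypothetical, not from the paper): (x_min, y_min, x_max, y_max)
Box = Tuple[float, float, float, float]


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle, if any
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp to zero when the boxes do not overlap
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Standard precision and recall from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


if __name__ == "__main__":
    # Two 2x2 boxes offset by one unit overlap in a 1x1 square
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
    print(precision_recall(tp=8, fp=2, fn=2))
```

An "Average IoU" figure would then be the mean of `iou` over matched detection and ground-truth pairs, under whatever matching rule the evaluation uses.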

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, F.; Ren, R.; Van de Voorde, T.; Xu, W.; Zhou, G.; Zhou, Y. Fast Automatic Airport Detection in Remote Sensing Images Using Convolutional Neural Networks. Remote Sens. 2018, 10, 443. https://doi.org/10.3390/rs10030443