1. Introduction

Change detection with remote sensing images is the process of identifying and locating differences in regions of interest by observing them at different dates [1]. It is of great significance for many remote sensing applications, such as rapid disaster mapping and land-use and land-cover monitoring. Wessels et al. [2] use optical images with the reweighted multivariate alteration detection method to identify changed areas and then update the land-cover map. A multi-sensor change detection method between optical and synthetic aperture radar (SAR) imagery is proposed in [3] for earthquake damage assessment of buildings. Taubenbock et al. [4] propose a post-classification change detection method using optical and SAR data for urbanization monitoring. Multi-temporal airborne laser data are used to monitor forest change in [5]. In this paper, we tackle change detection using SAR images. Unlike optical remote sensing images, SAR images can be acquired under any weather conditions, by day or night; however, because of the coherent imaging mechanism (speckle), SAR images usually pose more challenges, such as non-linear/non-convex problems, for both visual and machine interpretation.

For change detection using remotely sensed optical images, the most widely used criteria are the difference operator [1] (for single-channel images) and change vector analysis [6,7,8] (for multi-band/multispectral images). Because of the temporal spectral variations caused by different atmospheric conditions, illumination and sensor calibration, image transformations have been widely used to obtain robust change detection criteria. The core idea of image transformation is to project the multi-band/multispectral images into a specific feature space in which unchanged temporal pixel pairs have similar representations while changed ones differ from each other. Principal component analysis (PCA) [9,10,11] is one of the state-of-the-art operators for modeling the temporal spectral difference of unchanged pixels. Beyond PCA, the Kauth-Thomas transformation [12], the Gram-Schmidt orthonormalization process [13,14], multivariate alteration detection [15,16] and slow feature analysis [17,18] have been used for optical image change detection. However, these algorithms are mainly designed for optical images and usually fail on SAR images affected by speckle.
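As a concrete illustration of the two classic optical criteria, the following minimal NumPy sketch computes the change vector analysis (CVA) magnitude: the change vector is the band-wise difference between the two dates, and its Euclidean norm serves as the per-pixel change criterion. With a single band it reduces to the absolute value of the difference operator. The function name and toy data are illustrative assumptions, and the thresholding step that turns the magnitude into a binary change map is omitted.

```python
import numpy as np


def change_vector_magnitude(img_t1: np.ndarray, img_t2: np.ndarray) -> np.ndarray:
    """Per-pixel CVA magnitude for co-registered images of shape (rows, cols, bands)."""
    if img_t1.shape != img_t2.shape:
        raise ValueError("images must be co-registered and have identical shapes")
    # Change vector: band-wise difference between the two dates.
    diff = img_t2.astype(np.float64) - img_t1.astype(np.float64)
    # Euclidean norm over bands; with a single band this is |difference|.
    return np.sqrt(np.sum(diff ** 2, axis=-1))


# Toy 2 x 2 scene with 3 bands in which only the top-left pixel changes.
t1 = np.zeros((2, 2, 3))
t2 = t1.copy()
t2[0, 0] = [3.0, 4.0, 0.0]
magnitude = change_vector_magnitude(t1, t2)
```

Only the top-left pixel receives a non-zero magnitude (5.0, the norm of its change vector), which is exactly the behaviour that speckle breaks on SAR data.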

For SAR images, the situation is more complex: because of the coherent imaging mechanism, the multi-temporal images may lie in different feature spaces, and changed and unchanged pixels are not linearly separable. Two main approaches have been developed in the literature: coherence change detection and incoherent change detection. The former uses the phase information of SAR time series to study the coherence map, which imposes strict requirements on the input multi-temporal SAR images [19]. Incoherent change detection relies mainly on the amplitude or intensity values of SAR data, for instance, the amplitude ratio or log-ratio [20]. Improvements have been obtained with automatic thresholding methods [21] and with multi-scale analysis to preserve details [22]. Lombardo and Oliver [23] propose a generalized likelihood ratio test for SAR images given by the ratio between the geometric and arithmetic means. Quin et al. [24] extend the SAR ratio to more general cases with an adaptive and nonlinear threshold, which can be applied not only to SAR image pairs but also to long-term SAR time series. Beyond change detection, Su et al. [25] propose a spectral clustering method based on a generalized likelihood ratio test for analyzing the temporal behaviours of long-term SAR time series. Non-linear change criteria have thus been widely used for SAR images in the literature. However, these criteria usually produce noisy results because of speckle, or face a trade-off between spatial resolution and the smoothness of the detection results.
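The ratio-based SAR criteria mentioned above can be sketched in a few lines of NumPy, assuming co-registered and calibrated intensity images. `log_ratio` computes the absolute log-ratio, and `gm_am_ratio` computes the ratio between the geometric and arithmetic means along the time axis, in the spirit of the test in [23]; this sketch ignores the number of looks and the decision threshold. The function names and the `eps` guards are illustrative assumptions.

```python
import numpy as np


def log_ratio(i1, i2, eps=1e-6):
    """Absolute log-ratio |log(I2 / I1)| of two co-registered SAR intensity images."""
    i1 = np.asarray(i1, dtype=np.float64)
    i2 = np.asarray(i2, dtype=np.float64)
    # eps avoids division by zero and log(0) on dark pixels (illustrative value).
    return np.abs(np.log((i2 + eps) / (i1 + eps)))


def gm_am_ratio(stack, eps=1e-12):
    """Geometric mean / arithmetic mean along the time axis (axis 0).

    The ratio is 1 where all dates share the same intensity and drops
    towards 0 as the intensities diverge.
    """
    stack = np.asarray(stack, dtype=np.float64) + eps
    geometric = np.exp(np.mean(np.log(stack), axis=0))
    arithmetic = np.mean(stack, axis=0)
    return geometric / arithmetic


i1 = np.array([[1.0, 1.0], [1.0, 1.0]])
i2 = np.array([[1.0, 4.0], [1.0, 1.0]])
lr = log_ratio(i1, i2)          # non-zero only at the changed pixel (0, 1)
ratio = gm_am_ratio([i1, i2])   # about 0.8 at (0, 1): sqrt(1 * 4) / 2.5
```

Both criteria are multiplicative by construction, which is why they suit speckle better than a plain difference; they remain pixel-wise, hence the noisy results noted above.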

Recently, deep learning techniques have grown rapidly and achieved remarkable success in various fields, and a large number of deep network architectures have been proposed for change detection with remotely sensed data. An improved UNet++ [26] is proposed to solve the error accumulation problem in deep-feature-based change detection. Ji et al. [27] apply a Mask R-CNN based building change detection network with self-training ability, which does not need high-quality training samples. The dual learning-based Siamese framework in [28] reduces the domain differences of bi-temporal images by retaining their intrinsic information and translating each image into the other's domain. A set of convolutional neural network features [29] has been used to compute difference indices. Similarly, a sparse autoencoder is applied in [30] to extract robust SAR features for change detection.

In this paper, we propose a differentially deep subspace representation (DDSR) for multi-temporal SAR images. The proposed network consists of a non-linear mapping network followed by a linear transform layer to deal with the complex patterns of changed and unchanged pixels in SAR images. The non-linear mapping network is built upon an autoencoder-like (AE-like) deep neural network, which non-linearly maps the noisy SAR data to a low-dimensional latent space. Contrary to a standard autoencoder (AE) network, the proposed architecture is trained to minimize the representation difference of unchanged pixel pairs instead of the reconstruction error of the decoder. To better separate unchanged and changed pixels in the latent space, a single-layer self-expressive network linearly transforms the mapped SAR data into a mutually orthogonal subspace. In the transformed subspace, unchanged pixel pairs have similar representations, while temporally changed ones are comparatively different from each other. Changed pixels are finally identified by the unsupervised K-Means clustering method [31]. Note that a similar idea has been proposed in [32], in which slow feature analysis [18] performs the linear transform, instead of our self-expressive network trained with the backpropagation algorithm.

This paper is organized as follows. Section 2 briefly recalls the non-linear/linear subspace approaches. The proposed network is presented in Section 3, which is followed by the evaluation (Section 4) and the conclusion (Section 5).

3. Differentially Deep Subspace Representation (DDSR) for Change Detection

As far as we are concerned, the non-linear transformation for change detection generally outperforms linear ones, which can handle the complex patterns of the input data. Non-linear kernel based methods have also been proposed [

35,

36,

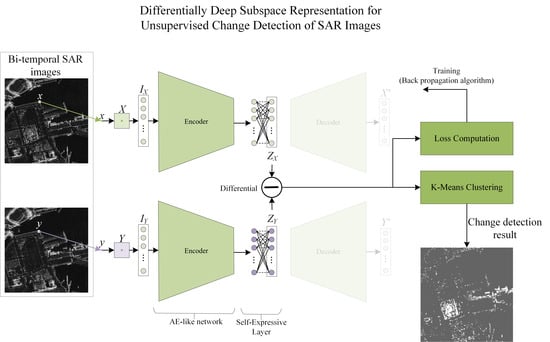

37]; however, it is not clear whether the pre-defined kernels are suitable for SAR image change detection tasks. In this work, our goal is to learn an explicit mapping that makes the changed and unchangded pixel pairs more separable in the transformed subspaces. This section builds our architecture namely differentially deep subspace representation (DDSR) based on the classic autoencoder network. As shown in

Figure 2, the non-linear part (the AE-like network) first maps the input bi-temporal SAR data into a low-dimensional latent space. The linear part (the self-expressive layer) further transforms the mapped SAR data to a subspace. Contrary to minimization of the reconstruction error, the proposed architecture is trained to compact unchanged pixel pairs and explode the changed ones in the subspace.

3.1. AE-Like Network Based Non-Linear Mapping

Basically, the encoder of the AE network is a classical multi-layer deep neural network. Each block, consisting of an input layer, a hidden layer and an output layer, non-linearly transforms the input data into latent features. Given a pair of pixels $(x, y)$, $P_x$ and $P_y$ are the corresponding patches centered at pixels $x$ and $y$, respectively. In the proposed AE-like network, $\mathbf{v}$, $\mathbf{h}$ and $\mathbf{o}$ denote the input, hidden and output layers of the neural network. At the first stage, the patch pair $(P_x, P_y)$ corresponding to the pixel pair $(x, y)$ is reshaped to form the input vectors (i.e., $\mathbf{v}_x = \mathrm{vec}(P_x)$ and $\mathbf{v}_y = \mathrm{vec}(P_y)$). The hidden layer can be computed by

$$\mathbf{h} = f(\mathbf{W}_1 \mathbf{v} + \mathbf{b}_1), \tag{1}$$

where $\mathbf{W}_1$ denotes the weight matrix of the hidden layer, $\mathbf{b}_1$ denotes the bias and $f$ denotes the activation function performing the non-linear mapping. At the second stage, the latent feature $\mathbf{h}$ is mapped to the output by

$$\mathbf{o} = g(\mathbf{W}_2 \mathbf{h} + \mathbf{b}_2), \tag{2}$$

where $\mathbf{W}_2$ denotes the weight matrix of the output layer, $\mathbf{b}_2$ is the bias and $g$ denotes the activation function. Finally, the weights $\theta$ of the AE-like network are learned by minimizing the target loss with the backpropagation algorithm. The hidden layer provides the so-called representation of the input data. Generally, as the number of layers increases, the learned features become more abstract and more robust. Encoders with different numbers of hidden layers are shown in Figure 3.
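The layer-wise mapping described above, in which each layer computes an activation of a weighted sum plus bias, can be sketched as a stacked forward pass in NumPy. This is an illustrative sketch only: the sigmoid activation, the 3 x 3 patch size and the layer sizes 9, 16 and 4 are hypothetical choices, not the configuration used in this paper. The weights are initialized randomly rather than from a pre-trained AE.

```python
import numpy as np


def sigmoid(a):
    """Logistic activation, used here as an assumed choice for f."""
    return 1.0 / (1.0 + np.exp(-a))


def init_encoder(layer_sizes, rng):
    """Randomly initialised (not pre-trained) weights for fully connected layers."""
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        W = rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_out, n_in))
        b = np.zeros(n_out)
        params.append((W, b))
    return params


def encode(v, params, act=sigmoid):
    """Apply h = f(W v + b) layer by layer; v has shape (n_samples, n_in)."""
    h = v
    for W, b in params:
        h = act(h @ W.T + b)  # row-wise version of W v + b
    return h


rng = np.random.default_rng(0)
patch_x = rng.random((3, 3))  # hypothetical 3 x 3 patch centred on pixel x
patch_y = rng.random((3, 3))  # co-located patch from the second date
v = np.stack([patch_x.ravel(), patch_y.ravel()])  # input vectors, shape (2, 9)
params = init_encoder([9, 16, 4], rng)           # hypothetical layer sizes
z = encode(v, params)                            # latent features, shape (2, 4)
```

Both dates go through the same weights, so the latent features of an unchanged pixel pair can only be close if the shared mapping suppresses the speckle-induced differences between them.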

Classic AE networks are designed to extract efficient representations of the input data; thus, the reconstruction error of the decoder is one of the key minimization targets. In our work, however, an AE network trained by minimizing the reconstruction error may be less effective for change detection, since the information that is key for reconstruction might not be useful for detecting changes. Consequently, our DDSR architecture keeps only the encoder part, which non-linearly maps the input bi-temporal SAR data to the low-dimensional space, and discards the decoder part together with the reconstruction error in the loss.

3.2. Self-Expressive Layer Based Linear Transformation

As shown in Figure 2, the self-expressive layer is mainly motivated by PCA and SFA theories. However, unlike PCA or SFA, the linear transformation of our DDSR is learned by the backpropagation algorithm instead of by classic or generalized eigenvalue decomposition. This data-driven strategy can make the self-expressive layer more adaptive to the given datasets than PCA and SFA. Let $\mathbf{Z}$ and $\mathbf{U}$ denote the input (i.e., the output of the AE-like network) and the output of the self-expressive layer, respectively:

$$\mathbf{U} = \mathbf{W}_s \mathbf{Z}, \tag{3}$$

where $\mathbf{W}_s$ denotes the weights of the self-expressive layer. To form a mutually orthogonal subspace, each row vector of $\mathbf{W}_s$ has to be orthogonal to every other row vector of $\mathbf{W}_s$.
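The linear part can be sketched as a bias-free matrix product together with a measure of how far its rows are from being mutually orthogonal. In this sketch `W_s` is our name for the self-expressive weights, and both the absence of a bias and the use of the summed squared correlation coefficients between distinct rows as the orthogonality measure are assumptions. Note that a zero correlation coefficient means the mean-centred rows are orthogonal.

```python
import numpy as np


def self_expressive(W_s, Z):
    """Bias-free linear transform U = W_s @ Z; Z holds one column per sample."""
    return W_s @ Z


def row_correlation_penalty(W_s):
    """Sum of squared correlation coefficients between distinct rows of W_s.

    It is zero when the mean-centred rows are mutually orthogonal.
    """
    rho = np.corrcoef(W_s)  # (k, k) correlation matrix of the rows
    return float(np.sum(rho ** 2) - np.sum(np.diag(rho) ** 2))


rng = np.random.default_rng(0)
Z = rng.normal(size=(4, 10))                  # d = 4 latent features, 10 samples
W_orth = np.array([[1.0, -1.0, 0.0, 0.0],
                   [0.0, 0.0, 1.0, -1.0]])    # zero-mean, orthogonal rows
W_redundant = np.array([[1.0, 2.0, 3.0, 4.0],
                        [2.0, 4.0, 6.0, 8.0]])  # second row = 2 * first row
U = self_expressive(W_orth, Z)
penalty_orth = row_correlation_penalty(W_orth)            # 0 for orthogonal rows
penalty_redundant = row_correlation_penalty(W_redundant)  # about 2 (rho = 1 twice)
```

A redundant weight matrix is penalized maximally, which mirrors why PCA and SFA insist on orthogonal projections: correlated rows would encode the same direction twice.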

3.3. Network Architecture of DDSR

Since pixel-wise change detection is strongly affected by speckle, a patch-wise strategy is applied in this paper, i.e., each pixel is represented by the square image patch formed by the pixel and its surrounding pixels. Each patch pair $(P_x, P_y)$ with center pixels $(x, y)$ is reshaped to vectors $\mathbf{v}_x$ and $\mathbf{v}_y$ ($\mathbf{v}_x, \mathbf{v}_y \in \mathbb{R}^n$), as shown in Figure 2. Through the AE-like network (Section 3.1), the input bi-temporal SAR patches $\mathbf{v}_x$ and $\mathbf{v}_y$ are non-linearly mapped to a lower dimensional latent space, denoted by $\mathbf{z}_x$ and $\mathbf{z}_y$ (where $\mathbf{z}_x, \mathbf{z}_y \in \mathbb{R}^d$ with $d < n$). $\mathbf{z}_x$ and $\mathbf{z}_y$ are then linearly transformed to $\mathbf{u}_x$ and $\mathbf{u}_y$ by the self-expressive layer. The change criterion $D(x, y)$ between pixels $x$ and $y$ is calculated from the difference between $\mathbf{u}_x$ and $\mathbf{u}_y$. To identify the changed pixels, an unsupervised K-Means clustering is applied to classify $D$ into changed and unchanged groups.
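The patch-wise input preparation can be sketched with NumPy's `sliding_window_view`: every pixel becomes the centre of a w x w patch that is flattened into one row, and the k-th rows of the two dates form the k-th pixel pair. The reflect padding at the image borders, the odd patch size requirement and the toy images are assumptions made for this sketch; the patch size used in the experiments is not implied by the value 3 below.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def extract_patch_vectors(img, w):
    """One flattened w x w patch per pixel, returned with shape (rows * cols, w * w).

    Borders are reflect-padded so that every pixel can be a patch centre.
    """
    if w % 2 == 0:
        raise ValueError("w must be odd so that each patch has a centre pixel")
    r = w // 2
    padded = np.pad(np.asarray(img, dtype=np.float64), r, mode="reflect")
    windows = sliding_window_view(padded, (w, w))  # shape (rows, cols, w, w)
    return windows.reshape(-1, w * w)


img_t1 = np.arange(16, dtype=np.float64).reshape(4, 4)  # toy first-date image
img_t2 = img_t1[::-1]                                   # toy second-date image
V_x = extract_patch_vectors(img_t1, 3)  # row k: patch around pixel k of date 1
V_y = extract_patch_vectors(img_t2, 3)  # row k: co-located patch of date 2
```

The centre column of each matrix (index `w * w // 2`) recovers the original pixel values, which is a quick sanity check that the patches are aligned with their centre pixels.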

3.4. Training Strategy

As shown in Figure 2, the classic AE network is adapted to the change detection task. The whole network, i.e., the weights $\theta$ of the AE-like network and the weights $\mathbf{W}_s$ of the self-expressive layer, is trained by minimizing a loss computed from the differential representation of the bi-temporal SAR patches. The loss is calculated by

$$\mathcal{L} = \mathcal{L}_d + \lambda_1 \mathcal{L}_c + \lambda_2 \mathcal{L}_r, \tag{6}$$

where $\mathcal{L}_d$ denotes the representation differential, $\mathcal{L}_c$ is the data constraint term and $\mathcal{L}_r$ is the weight regularization term. The weights $\lambda_1$ and $\lambda_2$ control the balance between these terms. The data constraint term ensures that the output of DDSR carries significant information, i.e., it avoids a meaningless solution such as a trivial constant output. It does so by imposing unit variance on the outputs; its formulation involves $\mathbf{1}$, a column vector whose elements are all 1. Note that, theoretically, a non-zero variance constraint is sufficient; the unit-variance constraint is used in this paper for the sake of simplicity. The weight regularization term $\mathcal{L}_r$ consists of three parts: the first two are classic regularization terms on the network weights, and the third controls the orthogonality of $\mathbf{W}_s$ through $\rho_{ij}$, the correlation coefficient between the $i$-th and the $j$-th row vectors of $\mathbf{W}_s$. Theoretically, the self-expressive layer behaves like a PCA or SFA approach, for which orthogonality is needed to obtain a complete and non-redundant representation. Without this orthogonality term, the output of DDSR would collapse to a constant vector.
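To show how the three kinds of terms interact, here is a hypothetical NumPy sketch of a DDSR-style loss. The exact definitions of the terms are not reproduced in this text, so every formula below is an assumption: the representation differential is taken as the mean squared distance between paired outputs, the data constraint as the squared deviation of each output dimension's variance from 1 (with the mean computed through a column vector of ones), and the weight regularization as squared L2 norms plus the summed squared correlations between distinct rows of `W_s`. The names `ddsr_loss`, `enc_weights`, `lam1` and `lam2` are illustrative.

```python
import numpy as np


def ddsr_loss(U_x, U_y, enc_weights, W_s, lam1=1.0, lam2=1e-3):
    """Hypothetical DDSR-style loss; U_x, U_y have shape (k, N), one column per pair."""
    N = U_x.shape[1]
    # Representation differential: mean squared distance between paired outputs.
    L_d = np.sum((U_x - U_y) ** 2) / N
    # Data constraint (assumed form): per-dimension variance pushed towards 1.
    U = np.concatenate([U_x, U_y], axis=1)
    ones = np.ones((U.shape[1], 1))            # column vector of ones
    mean = U @ ones / U.shape[1]               # (k, 1) per-dimension mean
    var = np.sum((U - mean) ** 2, axis=1) / U.shape[1]
    L_c = np.sum((var - 1.0) ** 2)
    # Weight regularization (assumed form): L2 terms plus row correlations of W_s.
    rho = np.corrcoef(W_s)
    ortho = np.sum(rho ** 2) - np.sum(np.diag(rho) ** 2)
    L_r = sum(np.sum(W ** 2) for W in enc_weights) + np.sum(W_s ** 2) + ortho
    return L_d + lam1 * L_c + lam2 * L_r


U_x = np.array([[1.0, -1.0], [1.0, -1.0]])   # 2 output dims, 2 pixel pairs
W_s = np.array([[1.0, -1.0, 0.0, 0.0], [0.0, 0.0, 1.0, -1.0]])
enc = [np.zeros((3, 3))]
base_loss = ddsr_loss(U_x, U_x.copy(), enc, W_s)   # identical pairs: only L_r remains
shifted_loss = ddsr_loss(U_x, U_x + 1.0, enc, W_s)  # every pair now differs
```

With identical pairs and unit-variance outputs, only the regularization term survives (0.004 here); shifting the second date raises the differential and data terms (about 2.129), which is the signal that gradient descent exploits.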

3.5. Implementation Details

Since no labeled data are needed in the training stage, DDSR is unsupervised. However, DDSR assumes that unchanged pixel pairs far outnumber changed ones, since theoretically only unchanged pixel pairs meet the minimization of the proposed loss (Equation (6)). A similar assumption is used in the slow feature analysis (SFA) based unsupervised change detection approach in [18]. This assumption might not hold when the given bi-temporal SAR images have a very long time interval (i.e., when changed pixels/regions outnumber unchanged ones). In that case, one can discard the assumption by introducing a pre-detection strategy (e.g., the classic log-ratio change detection approach) that provides some unchanged pixel pairs as training samples. A similar strategy has been used in [30].
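The pre-detection strategy can be sketched as follows: compute the classic absolute log-ratio and keep the pixels with the smallest values as probably unchanged training pairs. The helper name `select_unchanged_by_log_ratio`, the `fraction` parameter and its value are hypothetical; in practice the retained fraction, or a threshold on the log-ratio, would have to be chosen per dataset.

```python
import numpy as np


def select_unchanged_by_log_ratio(i1, i2, fraction=0.5, eps=1e-6):
    """Flattened indices of the pixels with the smallest |log-ratio|.

    The selected pixels are treated as probably unchanged training pairs.
    """
    i1 = np.asarray(i1, dtype=np.float64)
    i2 = np.asarray(i2, dtype=np.float64)
    lr = np.abs(np.log((i2 + eps) / (i1 + eps))).ravel()
    n_keep = max(1, int(round(fraction * lr.size)))
    # Stable sort so that ties keep their original (raster) order.
    return np.argsort(lr, kind="stable")[:n_keep]


i1 = np.ones((2, 2))
i2 = np.array([[1.0, 8.0], [1.0, 2.0]])  # pixels 1 and 3 (raster order) change
keep = select_unchanged_by_log_ratio(i1, i2, fraction=0.5)
```

The returned indices match the row order of flattened patch vectors, so they can directly subset the training pairs fed to the network.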

Since the proposed network focuses on the change detection task rather than on the representation learned by a classic AE network, the network parameters are initialized randomly instead of from a pre-trained AE network. In the training stage, all patch pairs are fed into the DDSR network. The Adam optimization algorithm with a learning rate of 0.1 is applied to minimize the loss (Equation (6)) and obtain the optimal parameters $\theta$ and $\mathbf{W}_s$. The number of iterations is 1500. In the testing stage, the change criterion $D$ (Equation (4)) is computed pixel by pixel. The classic K-Means clustering method is then applied to $D$ to divide the pixel pairs into two groups, and the group whose cluster center has the lower magnitude is the unchanged group.
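The testing stage can be sketched as follows, assuming that the change criterion is the difference vector between the two transformed representations (one row per pixel pair). A small hand-written 2-means replaces a library K-Means so that the sketch stays self-contained; its deterministic initialization with the smallest- and largest-magnitude samples is our choice. As described above, the cluster whose centre has the lower magnitude is labelled unchanged.

```python
import numpy as np


def two_means(D, n_iter=100):
    """Plain 2-means on the rows of D (shape (N, k)); returns labels and centres."""
    norms = np.linalg.norm(D, axis=1)
    # Deterministic initialisation: smallest- and largest-magnitude samples.
    centres = D[[np.argmin(norms), np.argmax(norms)]].astype(float)
    labels = None
    for _ in range(n_iter):
        dist = np.linalg.norm(D[:, None, :] - centres[None, :, :], axis=2)
        new_labels = np.argmin(dist, axis=1)
        if labels is not None and np.array_equal(new_labels, labels):
            break  # assignments are stable: converged
        labels = new_labels
        for c in range(2):
            members = D[labels == c]
            if len(members) > 0:
                centres[c] = members.mean(axis=0)
    return labels, centres


def change_map(U_x, U_y):
    """True for pixel pairs in the cluster whose centre has the larger magnitude."""
    D = U_x - U_y  # assumed change criterion: difference vectors
    labels, centres = two_means(D)
    unchanged = np.argmin(np.linalg.norm(centres, axis=1))
    return labels != unchanged


U_x = np.zeros((6, 2))
U_y = np.array([[0.1, 0.0], [0.0, -0.1], [-0.05, 0.05],
                [0.0, 0.0], [3.0, 3.0], [3.2, 2.9]])  # last two pairs changed
changed = change_map(U_x, U_y)
```

Selecting the unchanged group by the magnitude of its centre, rather than by cluster size, avoids relying on the unchanged pixels being the majority at test time.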