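Let $u$ be a noisy image of size $W \times H$ pixels, with $|\Omega| = W H$ being the total number of pixels, and let $k \in \Omega$ be a generic pixel, where $\Omega$ denotes the set of image pixels. We denote by $u(k)$ and $NL(u)(k)$ the original and denoised intensity at pixel $k$, respectively. In the formulation of the NLM filter given in [36], the restored intensity at a generic pixel $k$ is computed as a weighted average (also called kernel convolution) of all the pixels $j$ in the image belonging to a search volume $V_k$ centered at the pixel $k$:

$$
NL(u)(k) = \sum_{j \in V_k} w(k,j)\, u(j), \qquad (1)
$$

where $w(k,j)$ is the weight assigned to $u(j)$ in removing noise from pixel $k$. To be more specific, the weight is an estimate of the similarity between the intensities $u(N_k)$ and $u(N_j)$ of two neighborhoods $N_k$ and $N_j$ centered at pixels $k$ and $j$, respectively, such that

$$
w(k,j) = \frac{1}{Z(k)} \exp\left(-\frac{\lVert u(N_k) - u(N_j) \rVert_2^2}{h^2}\right), \qquad Z(k) = \sum_{j' \in V_k} \exp\left(-\frac{\lVert u(N_k) - u(N_{j'}) \rVert_2^2}{h^2}\right), \qquad (2)
$$

where $h$ is the smoothing parameter and $Z(k)$ is a normalizing constant ensuring that $0 \le w(k,j) \le 1$ and $\sum_{j \in V_k} w(k,j) = 1$.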
The definition of the classical NLM filter makes no assumption about the search volume; it only requires that each pixel can be linked to the others. However, for computational reasons, the neighborhoods and the search volume are usually taken as square boxes of size $(2d+1) \times (2d+1)$ and $(2v+1) \times (2v+1)$, respectively. Here $d$ and $v$ are the radii of the neighborhood and of the search window along each spatial direction $x$, $y$.
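To make the NLM weight defined above concrete, the following C++ sketch computes the squared patch distance and the unnormalized weight for a single pair of pixels $k$ and $j$, using $(2d+1) \times (2d+1)$ box neighborhoods. It assumes a row-major, single-channel float image with replicate (clamped) borders. The names `Image`, `patchDistance2`, and `rawWeight` are hypothetical and are not taken from the authors' code. Dividing `rawWeight` by its sum over the search volume gives the normalized weight $w(k,j)$.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Row-major, single-channel image (layout assumed for this sketch).
struct Image {
    int width;
    int height;
    std::vector<float> data;  // width * height intensities

    // Intensity at (x, y); coordinates are clamped to the image border
    // (replicate-border assumption, not specified in the text).
    float at(int x, int y) const {
        x = std::min(std::max(x, 0), width - 1);
        y = std::min(std::max(y, 0), height - 1);
        return data[static_cast<std::size_t>(y) * width + x];
    }
};

// Squared Euclidean distance ||u(N_k) - u(N_j)||^2 between the
// (2d+1) x (2d+1) patches centred at k = (xk, yk) and j = (xj, yj).
double patchDistance2(const Image& u, int xk, int yk, int xj, int yj, int d) {
    assert(d >= 0);
    double sum = 0.0;
    for (int m = -d; m <= d; ++m) {      // vertical patch offset
        for (int n = -d; n <= d; ++n) {  // horizontal patch offset
            const double diff = static_cast<double>(u.at(xk + n, yk + m)) -
                                static_cast<double>(u.at(xj + n, yj + m));
            sum += diff * diff;
        }
    }
    return sum;
}

// Unnormalized weight exp(-||u(N_k) - u(N_j)||^2 / h^2); dividing it by
// Z(k), the same quantity summed over the search volume, yields w(k, j).
double rawWeight(const Image& u, int xk, int yk, int xj, int yj, int d, double h) {
    assert(h > 0.0);
    return std::exp(-patchDistance2(u, xk, yk, xj, yj, d) / (h * h));
}
```

Identical patches receive the maximal unnormalized weight of 1. The weight decays exponentially as the patch distance grows relative to $h^2$.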

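Indeed, for each pixel $k$ in the image, the distances between the intensity neighborhoods $N_k$ and $N_j$ (centered at $k$ and $j$) are computed for all pixels $j$ contained in the search volume $V_k$. The complexity of the filter is therefore of the order of $O\big(|\Omega|\,(2v+1)^2\,(2d+1)^2\big)$, where $|\Omega|$ is the number of pixels in the image.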
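The operation count behind this estimate can be checked with a few lines of C++. The sketch below counts the squared differences evaluated by the brute-force filter; the function name is hypothetical. For a $256 \times 256$ image with $d = 1$ and $v = 5$, it gives $65{,}536 \times 121 \times 9 = 71{,}368{,}704$.

```cpp
#include <cassert>
#include <cstdint>

// Number of squared differences evaluated by the brute-force NLM filter:
// each of the width * height pixels k compares its (2d+1)^2 patch with the
// patch of each of the (2v+1)^2 pixels j in its search volume.
std::uint64_t nlmOperationCount(std::uint64_t width, std::uint64_t height,
                                std::uint64_t d, std::uint64_t v) {
    const std::uint64_t patch = (2 * d + 1) * (2 * d + 1);
    const std::uint64_t search = (2 * v + 1) * (2 * v + 1);
    return width * height * search * patch;
}
```

Because the cost grows quadratically in both radii, enlarging the search window dominates the run time. For example, going from $v = 5$ to $v = 10$ multiplies the cost by $441/121 \approx 3.6$.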

The NLM algorithm is proved in [36] to be consistent for every nonnegative $h$, even though $h$ is usually chosen of the order of the standard deviation of the noise. This amounts to assuming that two patches can be considered alike if they differ by about that quantity. That notwithstanding, the assumption of uniform noise variance over the image leads to sub-optimal results wherever nonstationary noise is present. For this reason, Manjón et al. [46] proposed a local adaptive estimate at the pixel $k$:

This makes it possible to regulate, and thus reduce, the overestimation of the noise variance that occurs in similar patches.

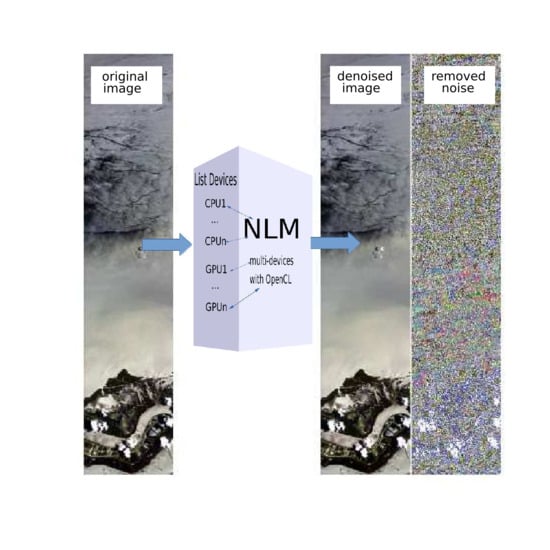

2.1. Multi-Platform NLM OpenCL Implementation

We have developed multi-platform NLM algorithms using OpenCL C, a restricted version of the C99 language with extensions appropriate for executing data-parallel code. The level of complexity imposed by OpenCL is similar to that of other dedicated programming models, such as the Compute Unified Device Architecture (CUDA) developed by NVIDIA. OpenCL defines an application programming interface (API) for modern cross-platform multiprocessors, defined jointly by a group of manufacturers such as Apple, Intel, AMD, and NVIDIA itself. OpenCL is managed by the non-profit technology consortium Khronos Group (Apple, IBM, NVIDIA, AMD, Intel, ARM, etc.).

In OpenCL, a system consists of a host (the CPU) and one or more devices, which are massively parallel processors. This model allows one to define a kernel, or a group of kernels, that exploits multi-thread parallelism and is loaded and executed on a multicore platform.

A kernel is a function containing a block of instructions that is executed in parallel by many work-items. Kernels exhibit the important property of data parallelism: arithmetic operations are performed simultaneously on different parts of the data by several work-items. A group of work-items forms a work-group, which runs on a single compute unit. The maximum size of a work-group depends on the specifications of the device in use and is usually up to 1024 work-items on GPUs.

In practice, work-items (threads) are gathered in work-groups (thread blocks).

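A work-item is distinguished from the other work-items executing the same kernel by its global ID and its local ID. Each work-item is identified either by its global ID $g_{id}$ or by the combination of three values: the work-group ID $w_{id}$, the size of each work-group $w_{size}$, and the local ID $l_{id}$ inside the work-group. Along each dimension, and with a zero global offset, these are related by $g_{id} = w_{id} \cdot w_{size} + l_{id}$.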
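Along one dimension, and assuming a zero global work offset, OpenCL relates the IDs as global ID = work-group ID × work-group size + local ID. The host-side sketch below shows this relation and its inverse. Inside an OpenCL C kernel the same quantities are returned by the built-in functions `get_global_id`, `get_group_id`, `get_local_size`, and `get_local_id`. The C++ helper names here are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// global_id = group_id * local_size + local_id (zero global offset assumed).
std::size_t globalId(std::size_t groupId, std::size_t localSize, std::size_t localId) {
    assert(localId < localSize);
    return groupId * localSize + localId;
}

// Inverse mapping: recover the work-group ID and the local ID of a work-item.
struct GroupLocal {
    std::size_t groupId;
    std::size_t localId;
};

GroupLocal splitGlobalId(std::size_t gid, std::size_t localSize) {
    assert(localSize > 0);
    return {gid / localSize, gid % localSize};
}
```

For instance, with work-groups of 64 work-items, the work-item with local ID 5 in work-group 3 has global ID $3 \cdot 64 + 5 = 197$.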

The memory hierarchy of GPUs differs from that of CPUs. In OpenCL there are four memory regions: private, local, constant, and global memory. Global memory is the largest but has high latency; it is typically used to store input and output data structures. Constant memory is a small read-only memory that supports low-latency, high-bandwidth access when all threads simultaneously access the same location. Local memory is allocated to a work-group and can be accessed at very high speed in a highly parallel manner. Private memory is the region of memory private to a single work-item.

Since all work-items in a work-group can read and write the local memory of that work-group, local memory is a very efficient way for them to share input data and intermediate results. Accesses to it are synchronized via barriers and memory fences.

In the initial section of the program, the system is queried to define the appropriate operating context. Specifically, it retrieves the characteristics of the available OpenCL devices, such as the number of processing units and threads available for computation. While discovering the available compute devices, we create two lists, one for CPU-type devices and one for GPU-type devices, allowing one to choose which kind of device to prefer. If no GPU device is available, a CPU device is automatically chosen. Consequently, the OpenCL task scheduler can conveniently split the workload and perform a balanced computation across the system's resources.
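The device-selection policy described above can be summarized as a small decision function. This is a hypothetical sketch. In a real host program, the device counts would be obtained by querying each platform with `clGetDeviceIDs` using `CL_DEVICE_TYPE_GPU` and `CL_DEVICE_TYPE_CPU`; here they are plain integers. The fallback from a preferred CPU to a GPU is our assumption, since the text only specifies the GPU-to-CPU fallback.

```cpp
#include <cassert>

enum class DeviceKind { GPU, CPU, None };

// Hypothetical helper: use a GPU when one is preferred and available,
// otherwise fall back to a CPU device.
DeviceKind selectDeviceKind(unsigned numGpus, unsigned numCpus, bool preferGpu) {
    if (preferGpu && numGpus > 0) return DeviceKind::GPU;
    if (numCpus > 0) return DeviceKind::CPU;
    // Assumption: a CPU was preferred but none exists, so use a GPU if present.
    if (numGpus > 0) return DeviceKind::GPU;
    return DeviceKind::None;
}
```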

Each image block is processed by a corresponding thread block (work-group), which executes the NLM filter on its portion of the image. The restored values are written to the output structure and copied back from device memory to the host (CPU) structure.
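One possible way to partition the image into blocks is sketched below. Blocks on the right and bottom edges are clipped so that every pixel belongs to exactly one block, which lets each thread block write its restored values independently. The block size and the names `Block` and `makeBlocks` are hypothetical and do not come from the original implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Half-open pixel rectangle [x0, x1) x [y0, y1) handled by one thread block.
struct Block {
    int x0, y0, x1, y1;
};

// Split a width x height image into blocks of at most bw x bh pixels;
// blocks on the right and bottom edges are clipped to the image.
std::vector<Block> makeBlocks(int width, int height, int bw, int bh) {
    assert(width > 0 && height > 0 && bw > 0 && bh > 0);
    std::vector<Block> blocks;
    for (int y = 0; y < height; y += bh) {
        for (int x = 0; x < width; x += bw) {
            blocks.push_back({x, y, std::min(x + bw, width), std::min(y + bh, height)});
        }
    }
    return blocks;
}
```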

Furthermore, some support structures are defined as device and host buffers, together with I/O parameters that regulate the algorithm call. They are listed as follows:

Input buffers: Device buffers to load the data.

Output buffers: Host buffers containing the restored data.

Noise variance: Host/device buffer containing the estimated noise variance.

Adaptive flag: Boolean host/device variable; if it is true, the adaptive noise variance estimate is computed according to (2)–(3).

Regulation measure: Host/device buffer with the noise variance regulation measure, used whenever the adaptive flag is true (we recall that we use (3)).

d: Similarity radius, constant device buffer.

v: Window radius, constant device buffer.

$W$ and $H$: Constant device buffers representing the image dimensions, width and height, respectively.

Platform flag: Host/device boolean value determining which kind of platform is preferred for the computation. If no GPU platform is available, the CPU platform is automatically chosen.
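For illustration, the scalar parameters listed above could be grouped in a host-side structure such as the following. The field names and the validity check are hypothetical and do not reproduce the identifiers of the original implementation. The buffers themselves are omitted, and only their size is computed, assuming single-precision pixels.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical grouping of the scalar parameters of the NLM call.
struct NlmParams {
    int d = 0;              // similarity (patch) radius
    int v = 0;              // search window radius
    int width = 0;          // image width W
    int height = 0;         // image height H
    bool adaptive = false;  // use the locally adaptive noise estimate
    bool preferGpu = true;  // preferred platform; CPU is the fallback

    // Radii must be nonnegative and the image must be non-empty.
    bool valid() const { return d >= 0 && v >= 0 && width > 0 && height > 0; }

    // Bytes needed by one single-precision image buffer (input or output).
    std::size_t imageBytes() const {
        return static_cast<std::size_t>(width) * static_cast<std::size_t>(height) * sizeof(float);
    }
};
```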

Algorithm 1 describes the classical sequential operations of NLM, whereas Algorithm 2 presents our portable OpenCL implementation.

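| Algorithm 1 NLM_SEQUENTIAL(, , , , , , , d, v) |

for all rows y of the image do
  for all columns x of the image do
    for j from -v to v do
      for i from -v to v do
        for m from -d to d do
          for n from -d to d do
            accumulate the squared difference between the intensities at (x + n, y + m) and (x + i + n, y + j + m)
          end for
        end for
        compute the weight of pixel (x + i, y + j) from the accumulated patch distance and add its weighted intensity to the running sum
      end for
    end for
    normalize the weighted sum by the sum of the weights to obtain the restored intensity at (x, y)
  end for
end for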

|
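Algorithm 1 can be transcribed into a self-contained C++ sketch of the sequential, non-adaptive filter. The sketch assumes a global smoothing parameter `h`, a row-major single-channel image, and replicate (clamped) borders. The function name `nlmSequential` is hypothetical. The six nested loops mirror those of Algorithm 1: two over the pixels, two over the search window offsets, and two over the patch offsets.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Sequential NLM with a global h (no adaptive noise estimate).
std::vector<float> nlmSequential(const std::vector<float>& u, int width, int height,
                                 int d, int v, double h) {
    assert(static_cast<std::size_t>(width) * static_cast<std::size_t>(height) == u.size());
    assert(d >= 0 && v >= 0 && h > 0.0);
    // Intensity with coordinates clamped to the border (replicate borders).
    auto at = [&](int x, int y) {
        x = std::min(std::max(x, 0), width - 1);
        y = std::min(std::max(y, 0), height - 1);
        return static_cast<double>(u[static_cast<std::size_t>(y) * width + x]);
    };
    std::vector<float> out(u.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {              // pixel k = (x, y)
            double weightSum = 0.0;
            double acc = 0.0;
            for (int j = -v; j <= v; ++j) {             // search window V_k
                for (int i = -v; i <= v; ++i) {
                    double dist = 0.0;
                    for (int m = -d; m <= d; ++m) {     // patches N_k and N_j
                        for (int n = -d; n <= d; ++n) {
                            const double diff = at(x + n, y + m) - at(x + i + n, y + j + m);
                            dist += diff * diff;
                        }
                    }
                    const double w = std::exp(-dist / (h * h));
                    weightSum += w;
                    acc += w * at(x + i, y + j);
                }
            }
            // weightSum >= 1 because pixel k compares with itself at distance 0.
            out[static_cast<std::size_t>(y) * width + x] = static_cast<float>(acc / weightSum);
        }
    }
    return out;
}
```

On a constant image every weight equals 1, so the filter returns the image unchanged. An isolated bright pixel, by contrast, is pulled toward its neighbors.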

| Algorithm 2 NLM_OPENCL(, , , , , , , d, v, ) |

// list GPU platforms available
// list CPU platforms available
// Initialization step:
// recover the number of platforms
// number of available GPUs on the system
// number of available CPUs on the system
if a GPU is preferred and at least one GPU is available then
  select a GPU device
else
  select a CPU device
end if
// Computation of the NLM filtered signal step:
// create the context used by the OpenCL runtime for managing objects such as command-queues, memory, program and kernel objects
// create a command queue associated with the context
// create a program object for the context and load the specified source code
// specify the values to store into constant memory
if the previous steps succeeded then
  // compile and link a program executable from the program source cl_program, passing the constant parameters
  // create a read-only kernel buffer object referenced by the host pointer, thanks to the {CL_MEM_READ_ONLY|CL_MEM_USE_HOST_PTR} flags
  // according to the input flags, create a kernel object with a specific name from the cl_program to call
  // recover information about the kernel work-group (CL_KERNEL_PREFERRED_WORK_GROUP_SIZE_MULTIPLE)
  // according to wg_multiple, determine the work-group size and the number of work-groups covering the data
  // set the kernel arguments
  // with the number of work-groups and the work-group size, execute all the operations in cl_commandQueue for the cl_kernel on a device
  wait until all the kernel operations have been performed
  collect the restored data into the host output buffer
else
  return "Build Program Error!"
end if

|
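The work-group sizing step of Algorithm 2 can be sketched as follows. In OpenCL, the preferred multiple and the maximum work-group size of a kernel can be queried with `clGetKernelWorkGroupInfo` using `CL_KERNEL_PREFERRED_WORK_GROUP_SIZE_MULTIPLE` and `CL_KERNEL_WORK_GROUP_SIZE`; here they are passed in as plain numbers. The 2D shape heuristic (x extent equal to the preferred multiple, remaining capacity along y) is our assumption, not necessarily the authors' choice. The global size is padded to a multiple of the work-group size, as OpenCL 1.x requires, so work-items falling outside the image must return early.

```cpp
#include <cassert>
#include <cstddef>

struct LaunchConfig {
    std::size_t local[2];   // work-group size (x, y)
    std::size_t groups[2];  // number of work-groups (x, y)
    std::size_t global[2];  // padded NDRange size (x, y)
};

// Hypothetical heuristic: x extent equal to the preferred multiple,
// remaining work-group capacity along y, global size rounded up.
LaunchConfig chooseLaunch(std::size_t width, std::size_t height,
                          std::size_t wgMultiple, std::size_t maxWgSize) {
    assert(wgMultiple > 0 && wgMultiple <= maxWgSize);
    LaunchConfig c{};
    c.local[0] = wgMultiple;
    c.local[1] = maxWgSize / wgMultiple;
    const std::size_t n[2] = {width, height};
    for (int dim = 0; dim < 2; ++dim) {
        c.groups[dim] = (n[dim] + c.local[dim] - 1) / c.local[dim];  // ceiling division
        c.global[dim] = c.groups[dim] * c.local[dim];
    }
    return c;
}
```

For a $500 \times 300$ image with a preferred multiple of 32 and a maximum work-group size of 256, this yields $32 \times 8$ work-groups arranged in a $16 \times 38$ grid, that is, a padded global size of $512 \times 304$.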

The denoising filter is computed for all pixels, using the similarity radius d and the search window radius v.

As the program flow in Algorithm 2 shows, the OpenCL runtime relies on a set of functions that can be grouped into Query Platform Info, Contexts, Query Devices, and Runtime API, as shown in Figure 1.