Areca Yellow Leaf Disease Severity Monitoring Using UAV-Based Multispectral and Thermal Infrared Imagery

Abstract

1. Introduction

2. Materials and Methods

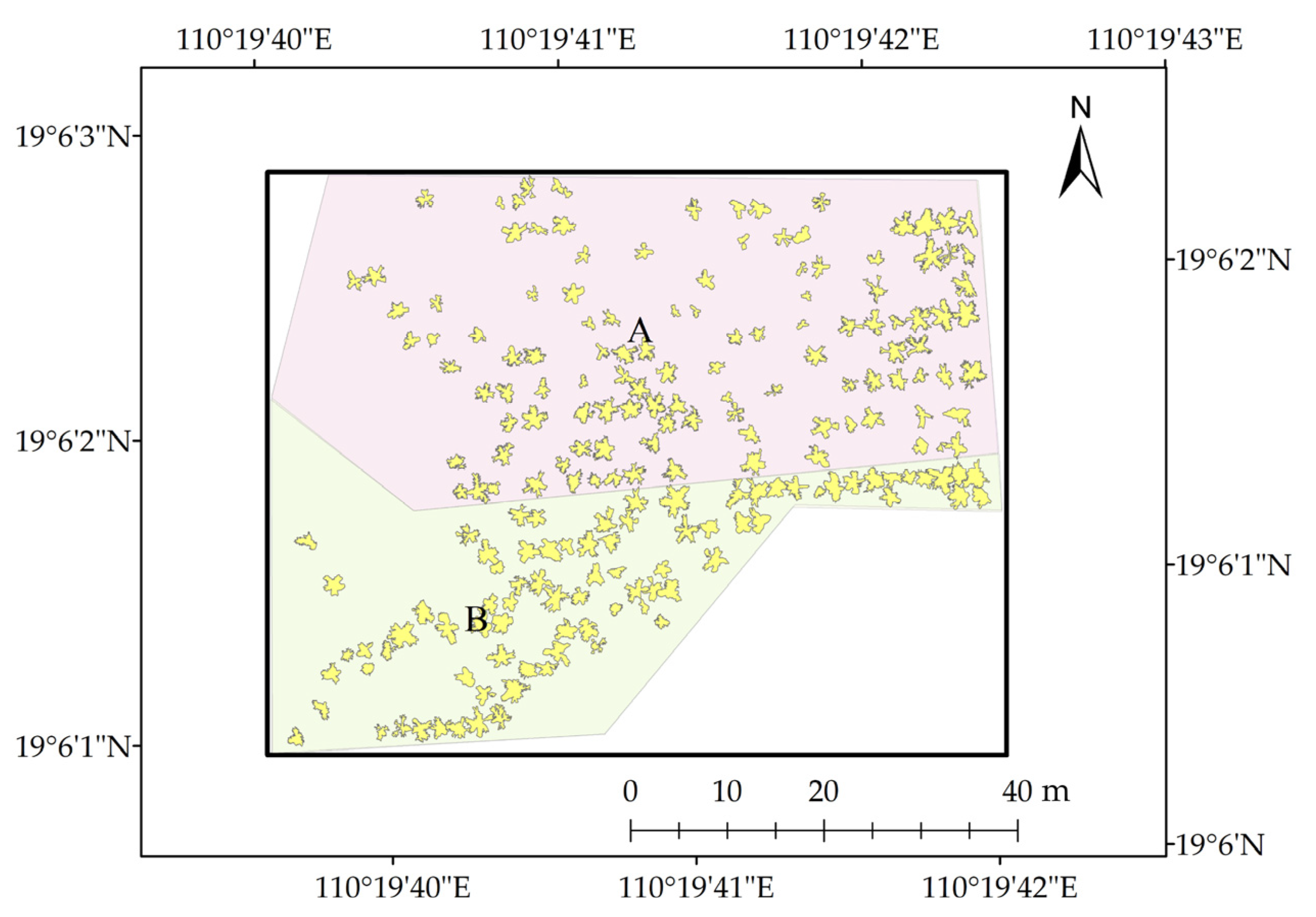

2.1. Overview of the Research Area

2.2. Data Sources

2.3. Data Preprocessing

2.3.1. Feature Extraction

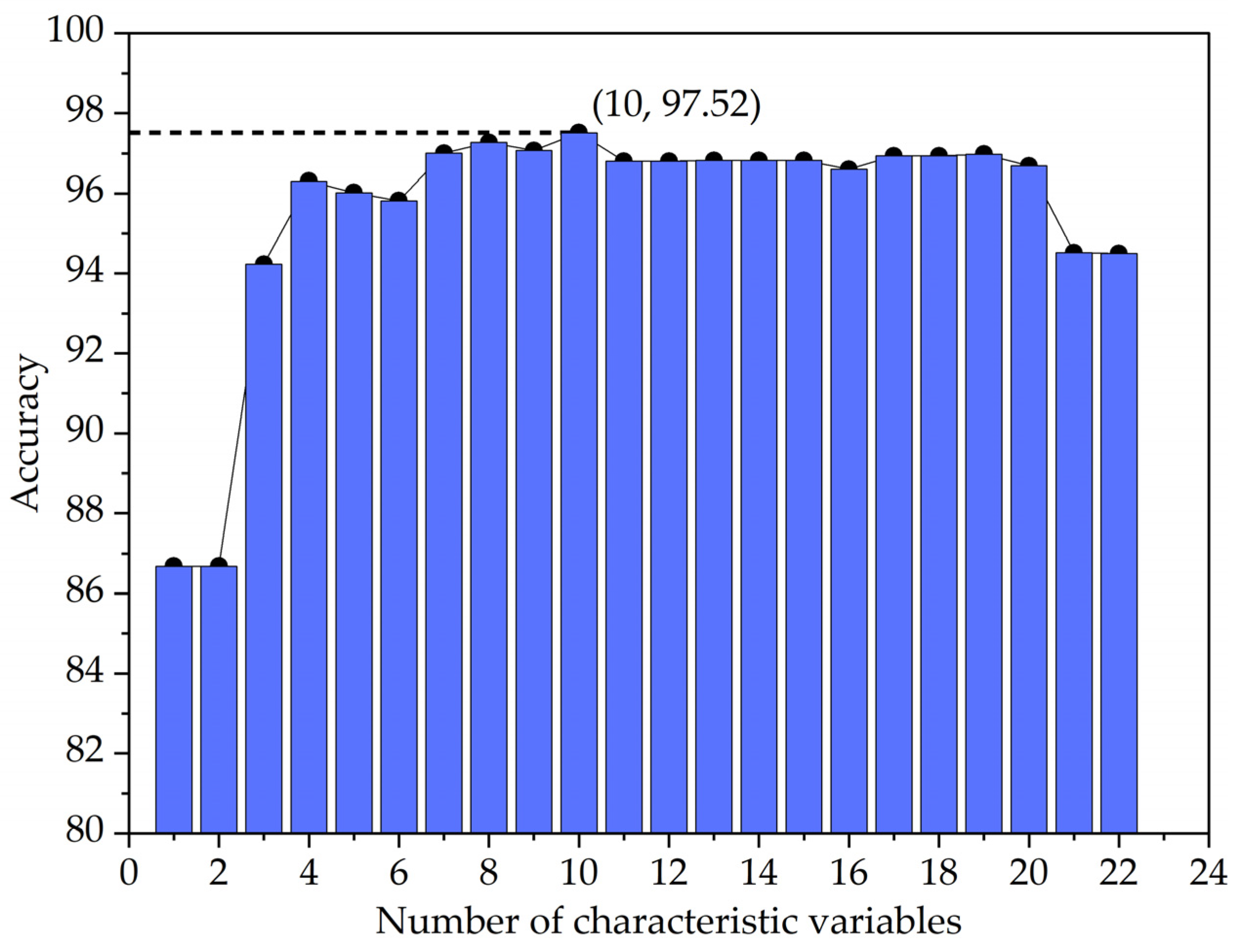

2.3.2. Feature Selection

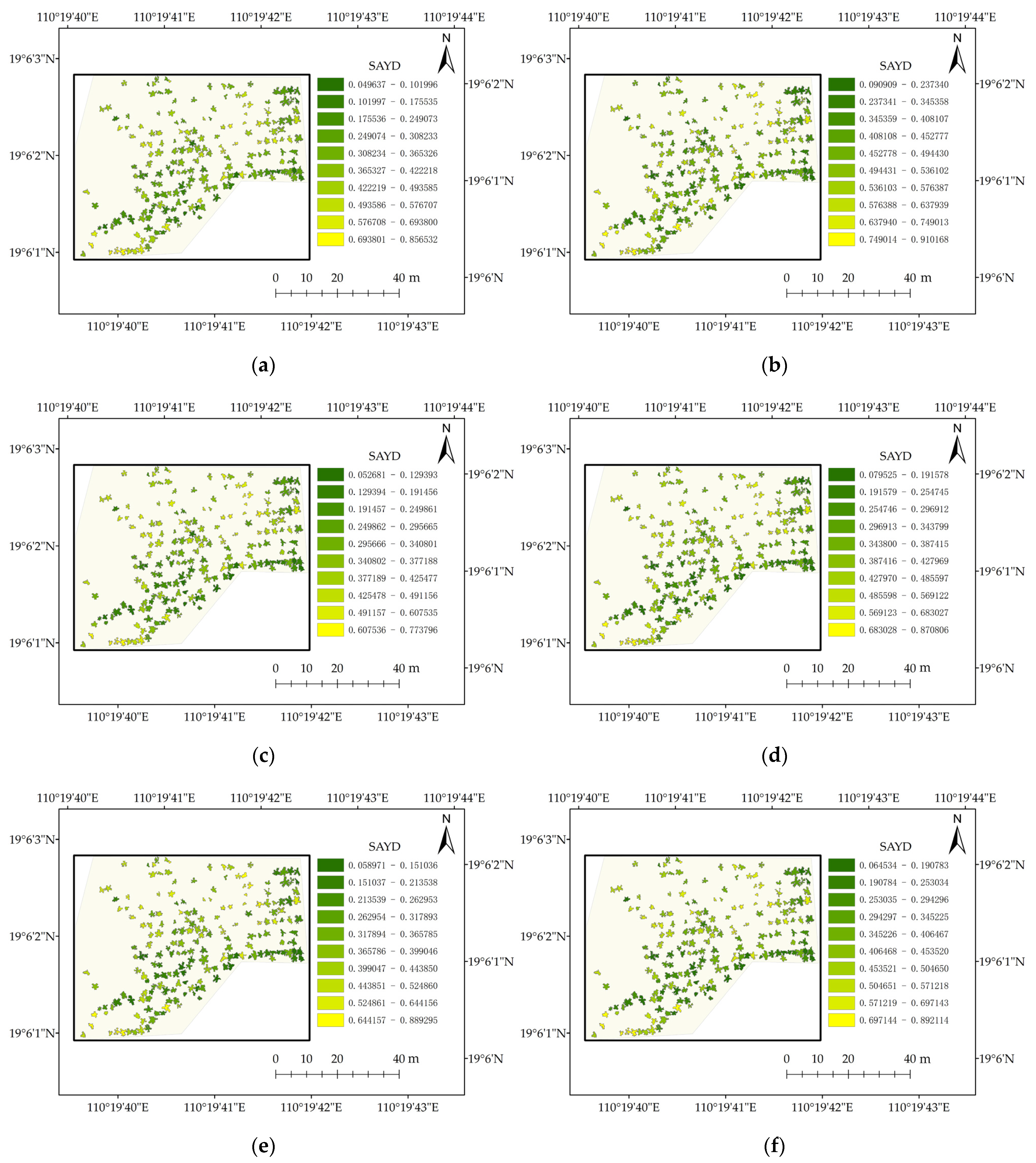

2.3.3. Severity of Areca Yellow Leaf Disease (SAYD)

2.3.4. Canopy Temperature (CT) of Areca

2.4. Machine Learning Methods

2.4.1. Neural Network

2.4.2. Naïve Bayes

2.4.3. Support Vector Machine

2.4.4. K Nearest-Neighbors

2.4.5. Decision Tree

2.4.6. Random Forest

2.5. Evaluation Indicators

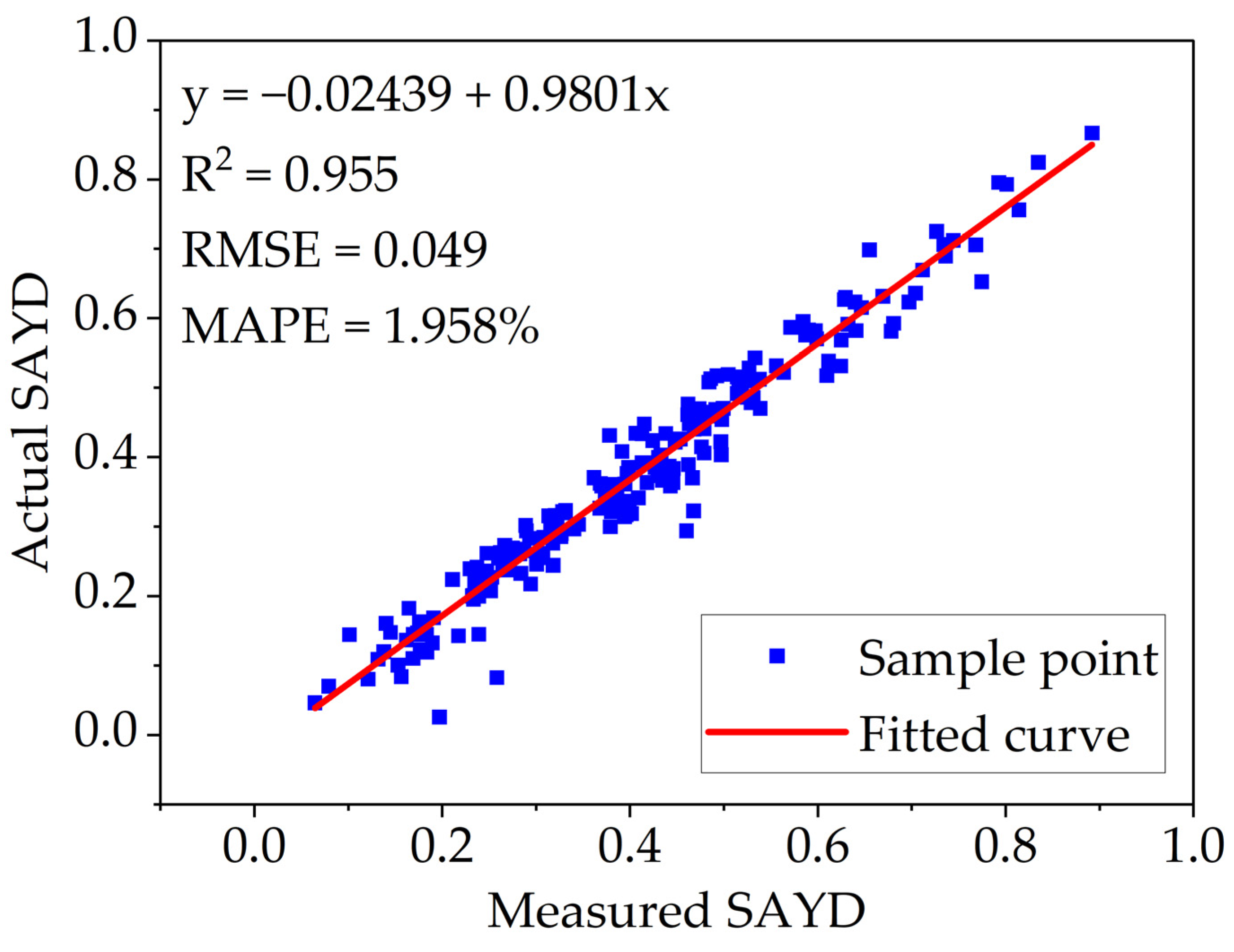

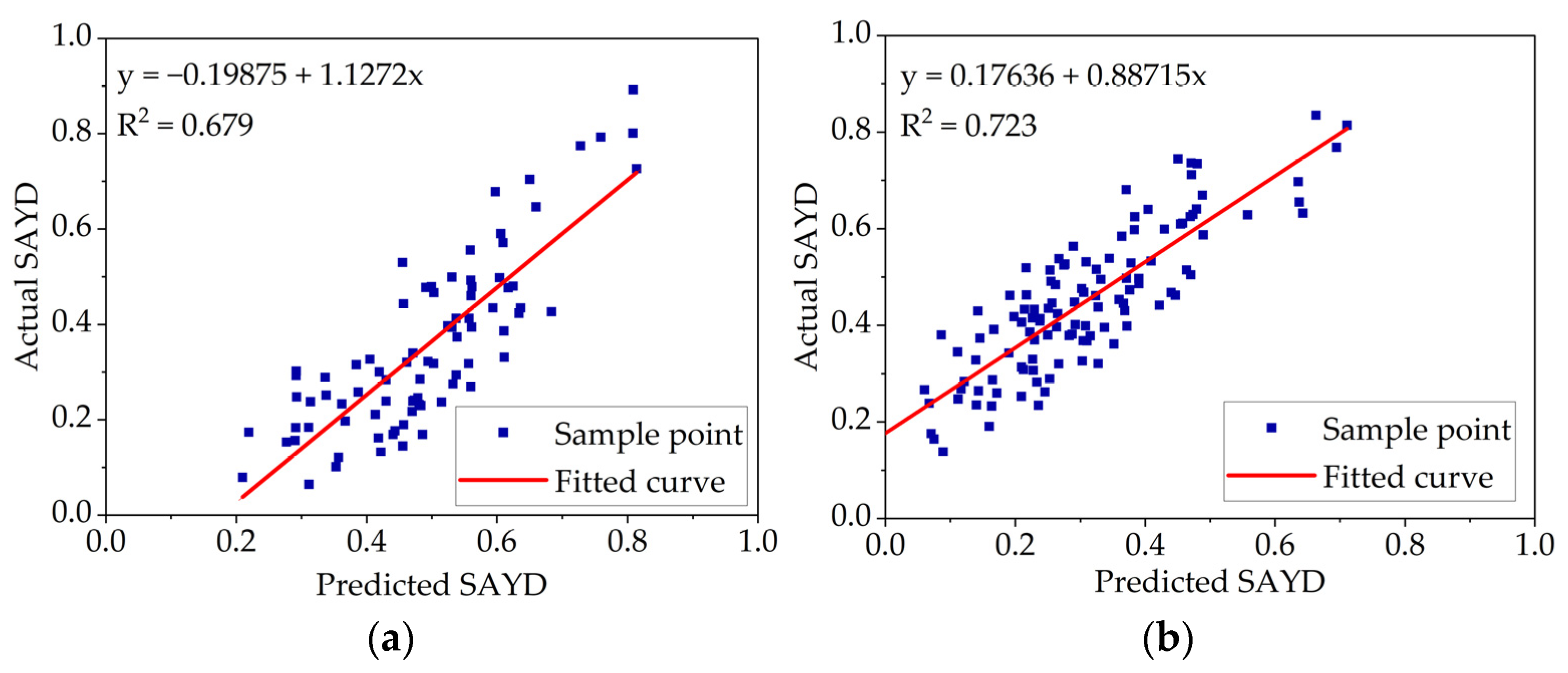

2.6. A Correlation Model between SAYD and CT

3. Results

3.1. Feature Selection

3.2. Analysis of SAYD Prediction Results Based on Different Machine Learning Methods

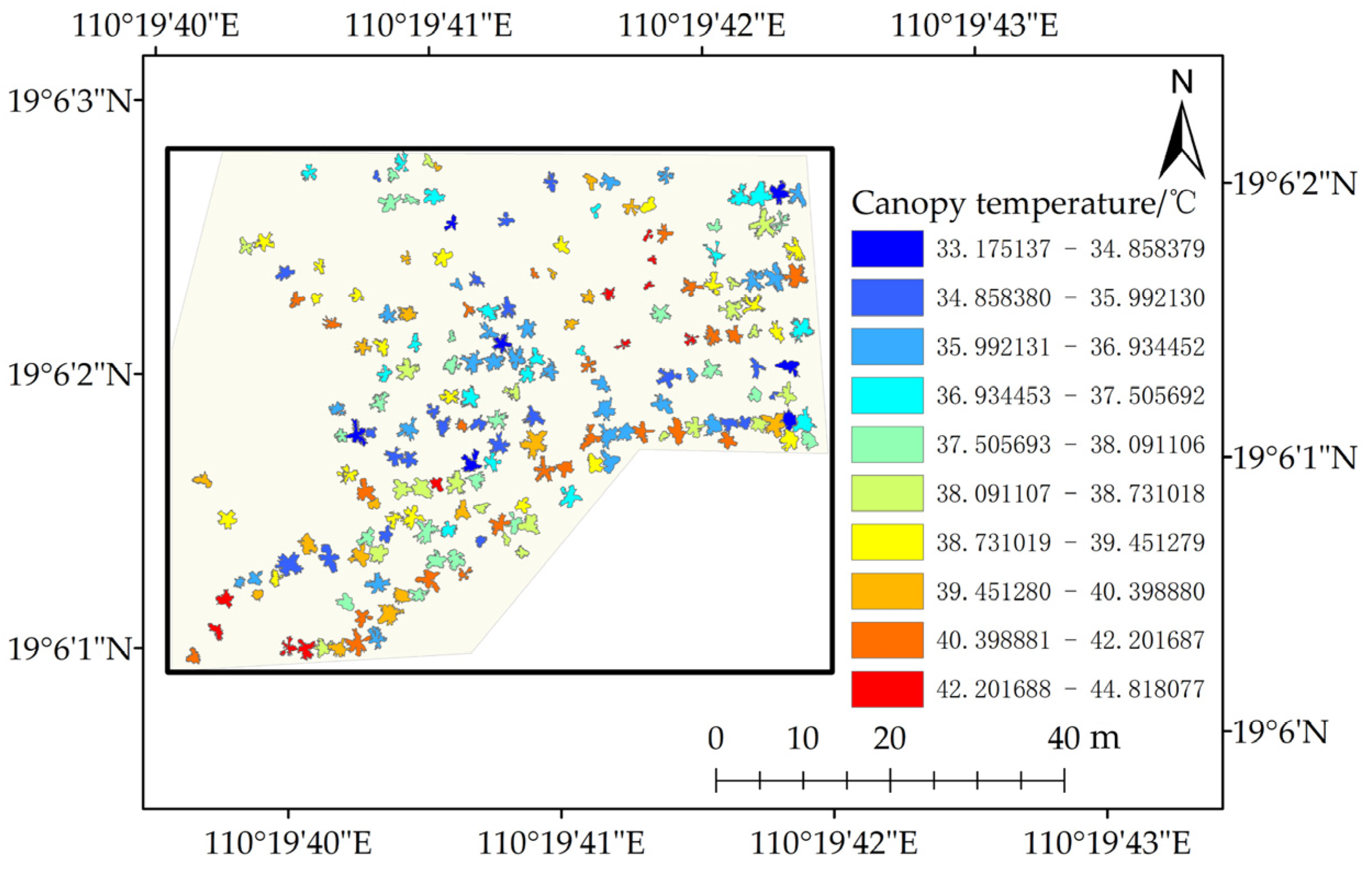

3.3. Areca CT Extraction Results

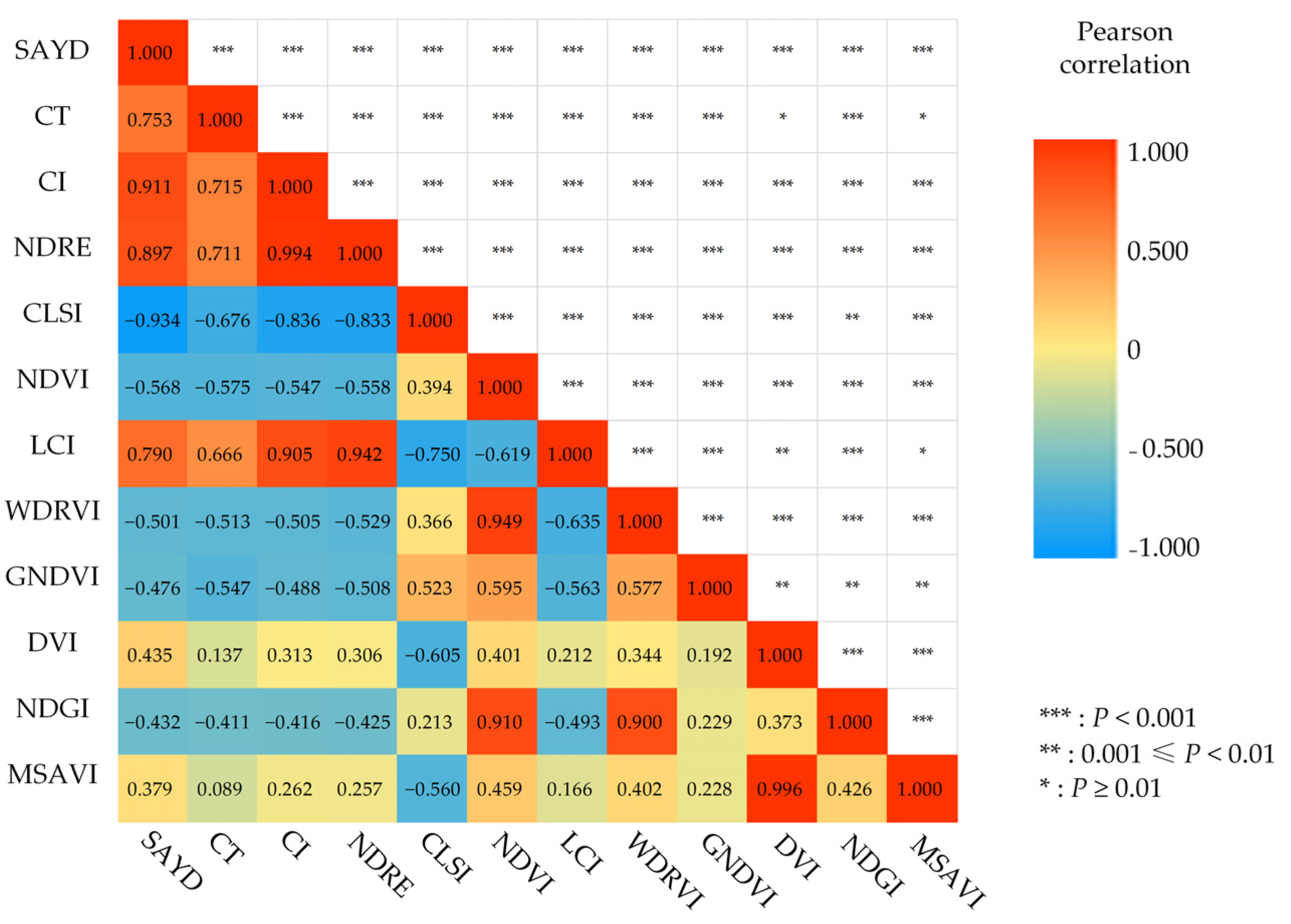

3.4. Pearson’s Correlation Matrix for Traits Associated with Areca YLD

3.5. Results of Model Fitting between CT and SAYD

4. Discussion

4.1. Machine Learning in Areca YLD Prediction

4.2. Feature Selection in Areca YLD Prediction

4.3. CT and Vegetation Indexes’ Relevance Analysis in Areca YLD Prediction

4.4. Contributions and Limitations of the Method

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Khan, L.U.; Cao, X.; Zhao, R.; Tan, H.; Xing, Z.; Huang, X. Effect of temperature on yellow leaf disease symptoms and its associated areca palm velarivirus 1 titer in areca palm (Areca catechu L.). Front. Plant Sci. 2022, 13, 1023386.

2. Peng, W.; Liu, Y.-J.; Wu, N.; Sun, T.; He, X.-Y.; Gao, Y.-X.; Wu, C.-J. Areca catechu L. (Arecaceae): A review of its traditional uses, botany, phytochemistry, pharmacology and toxicology. J. Ethnopharmacol. 2015, 164, 340–356.

3. Lee, S.E.; Hwang, H.J.; Ha, J.-S.; Jeong, H.-S.; Kim, J.H. Screening of medicinal plant extracts for antioxidant activity. Life Sci. 2003, 73, 167–179.

4. Zheng, W.; Li, X.-M.; Wang, F.; Yang, Q.; Deng, P.; Zeng, G.-M. Adsorption removal of cadmium and copper from aqueous solution by areca: A food waste. J. Hazard. Mater. 2008, 157, 490–495.

5. Sishodia, R.P.; Ray, R.L.; Singh, S.K. Applications of Remote Sensing in Precision Agriculture: A Review. Remote Sens. 2020, 12, 3136.

6. Wallace, L.; Lucieer, A.; Malenovsky, Z.; Turner, D.; Vopenka, P. Assessment of Forest Structure Using Two UAV Techniques: A Comparison of Airborne Laser Scanning and Structure from Motion (SfM) Point Clouds. Forests 2016, 7, 62.

7. Martin, C.; Parkes, S.; Zhang, Q.; Zhang, X.; McCabe, M.F.; Duarte, C.M. Use of unmanned aerial vehicles for efficient beach litter monitoring. Mar. Pollut. Bull. 2018, 131, 662–673.

8. Zhou, H.; Kong, H.; Wei, L.; Creighton, D.; Nahavandi, S. Efficient Road Detection and Tracking for Unmanned Aerial Vehicle. IEEE Trans. Intell. Transp. Syst. 2015, 16, 297–309.

9. Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

10. Franke, J.; Menz, G.; Oerke, E.-C.; Rascher, U. Comparison of multi- and hyperspectral imaging data of leaf rust infected wheat plants. In Remote Sensing for Agriculture, Ecosystems, and Hydrology VII; SPIE: Bellingham, WA, USA, 2005.

11. Jiang, J.-B.; Huang, W.-J.; Chen, Y.-H. Using Canopy Hyperspectral Ratio Index to Retrieve Relative Water Content of Wheat under Yellow Rust Stress. Spectrosc. Spectr. Anal. 2010, 30, 1939–1943.

12. Franceschini, M.H.D.; Bartholomeus, H.; van Apeldoorn, D.; Suomalainen, J.; Kooistra, L. Intercomparison of Unmanned Aerial Vehicle and Ground-Based Narrow Band Spectrometers Applied to Crop Trait Monitoring in Organic Potato Production. Sensors 2017, 17, 1428.

13. Abdulridha, J.; Ampatzidis, Y.; Qureshi, J.; Roberts, P. Laboratory and UAV-Based Identification and Classification of Tomato Yellow Leaf Curl, Bacterial Spot, and Target Spot Diseases in Tomato Utilizing Hyperspectral Imaging and Machine Learning. Remote Sens. 2020, 12, 2732.

14. Chang, A.; Yeom, J.; Jung, J.; Landivar, J. Comparison of Canopy Shape and Vegetation Indices of Citrus Trees Derived from UAV Multispectral Images for Characterization of Citrus Greening Disease. Remote Sens. 2020, 12, 4122.

15. Vanegas, F.; Bratanov, D.; Powell, K.; Weiss, J.; Gonzalez, F. A Novel Methodology for Improving Plant Pest Surveillance in Vineyards and Crops Using UAV-Based Hyperspectral and Spatial Data. Sensors 2018, 18, 260.

16. Lei, S.; Luo, J.; Tao, X.; Qiu, Z. Remote Sensing Detecting of Yellow Leaf Disease of Arecanut Based on UAV Multisource Sensors. Remote Sens. 2021, 13, 4562.

17. Calderon, R.; Navas-Cortes, J.A.; Lucena, C.; Zarco-Tejada, P.J. High-resolution airborne hyperspectral and thermal imagery for early detection of Verticillium wilt of olive using fluorescence, temperature and narrow-band spectral indices. Remote Sens. Environ. 2013, 139, 231–245.

18. Zhang, J.; Huang, Y.; Pu, R.; Gonzalez-Moreno, P.; Yuan, L.; Wu, K.; Huang, W. Monitoring plant diseases and pests through remote sensing technology: A review. Comput. Electron. Agric. 2019, 165, 104943.

19. Smigaj, M.; Gaulton, R.; Suarez, J.C.; Barr, S.L. Canopy temperature from an Unmanned Aerial Vehicle as an indicator of tree stress associated with red band needle blight severity. For. Ecol. Manag. 2019, 433, 699–708.

20. Wang, Y.; Zia-Khan, S.; Owusu-Adu, S.; Miedaner, T.; Mueller, J. Early Detection of Zymoseptoria tritici in Winter Wheat by Infrared Thermography. Agriculture 2019, 9, 139.

21. Cheng, J.J.; Li, H.; Ren, B.; Zhou, C.J.; Kang, Z.S.; Huang, L.L. Effect of canopy temperature on the stripe rust resistance of wheat. N. Z. J. Crop Hortic. Sci. 2015, 43, 306–315.

22. Rumpf, T.; Mahlein, A.K.; Steiner, U.; Oerke, E.C.; Dehne, H.W.; Pluemer, L. Early detection and classification of plant diseases with Support Vector Machines based on hyperspectral reflectance. Comput. Electron. Agric. 2010, 74, 91–99.

23. Rouse, J.W.; Haas, R.H.; Deering, D.W.; Schell, J.A.; Harlan, J.C. Monitoring the Vernal Advancement and Retrogradation (Green Wave Effect) of Natural Vegetation; NASA Technical Reports Server (NTRS): Chicago, IL, USA, 1973.

24. Verstraete, M.M.; Pinty, B.; Myneni, R.B. Potential and limitations of information extraction on the terrestrial biosphere from satellite remote sensing. Remote Sens. Environ. 1996, 58, 201–214.

25. Pearson, R.L.; Miller, L.D. Remote mapping of standing crop biomass for estimation of the productivity of the shortgrass prairie. Remote Sens. Environ. VIII 1972, 1355.

26. Yang, C.-M.; Cheng, C.-H.; Chen, R.-K. Changes in Spectral Characteristics of Rice Canopy Infested with Brown Planthopper and Leaffolder. Crop Sci. 2007, 47, 329–335.

27. Zhao, C.; Huang, M.; Huang, W.; Liu, L.; Wang, J. Analysis of winter wheat stripe rust characteristic spectrum and establishing of inversion models. IEEE Int. Geosci. Remote Sens. Symp. 2004, 6, 4318–4320.

28. Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666.

29. Fitzgerald, G.; Rodriguez, D.; O’Leary, G. Measuring and predicting canopy nitrogen nutrition in wheat using a spectral index: The canopy chlorophyll content index (CCCI). Field Crops Res. 2010, 116, 318–324.

30. Rondeaux, G.; Steven, M.D.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

31. Penuelas, J.; Baret, F.; Filella, I. Semi-empirical indices to assess carotenoids/chlorophyll alpha ratio from leaf spectral reflectance. Photosynthetica 1995, 31, 221–230.

32. Gitelson, A.A.; Merzlyak, M.N.; Chivkunova, O.B. Optical properties and nondestructive estimation of anthocyanin content in plant leaves. Photochem. Photobiol. 2001, 74, 38–45.

33. Mahlein, A.K.; Rumpf, T.; Welke, P.; Dehne, H.W.; Pluemer, L.; Steiner, U.; Oerke, E.C. Development of spectral indices for detecting and identifying plant diseases. Remote Sens. Environ. 2013, 128, 21–30.

34. Wang, Z.J.; Wang, J.H.; Liu, L.Y.; Huang, W.J.; Zhao, C.J.; Wang, C.Z. Prediction of grain protein content in winter wheat (Triticum aestivum L.) using plant pigment ratio (PPR). Field Crops Res. 2004, 90, 311–321.

35. Zarco-Tejada, P.J.; Berjón, A.; López-Lozano, R.; Miller, J.R.; Martín, P.; Cachorro, V.; González, M.R.; de Frutos, A. Assessing vineyard condition with hyperspectral indices: Leaf and canopy reflectance simulation in a row-structured discontinuous canopy. Remote Sens. Environ. 2005, 99, 271–287.

36. Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426.

37. Naidu, R.A.; Perry, E.M.; Pierce, F.J.; Mekuria, T. The potential of spectral reflectance technique for the detection of Grapevine leafroll-associated virus-3 in two red-berried wine grape cultivars. Comput. Electron. Agric. 2009, 66, 38–45.

38. Merzlyak, M.N.; Gitelson, A.A.; Chivkunova, O.B.; Rakitin, V.Y. Non-destructive optical detection of pigment changes during leaf senescence and fruit ripening. Physiol. Plant. 1999, 106, 135–141.

39. Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

40. Chen, S.F.; Goodman, J. An empirical study of smoothing techniques for language modeling. Comput. Speech Lang. 1999, 13, 359–394.

41. Gamon, J.A.; Peñuelas, J.; Field, C.B. A narrow-waveband spectral index that tracks diurnal changes in photosynthetic efficiency. Remote Sens. Environ. 1992, 41, 35–44.

42. Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

43. Gitelson, A.A. Wide Dynamic Range Vegetation Index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173.

44. Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32.

45. Liu, M.; Luo, Y.; Tao, D.; Xu, C.; Wen, Y. Low-Rank Multi-View Learning in Matrix Completion for Multi-Label Image Classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015.

46. Robnik-Šikonja, M.; Kononenko, I. Theoretical and Empirical Analysis of ReliefF and RReliefF. Mach. Learn. 2003, 53, 23–69.

47. Kira, K.; Rendell, L.A. A Practical Approach to Feature Selection. In Machine Learning Proceedings 1992; Sleeman, D., Edwards, P., Eds.; Morgan Kaufmann: San Francisco, CA, USA, 1992; pp. 249–256.

48. Kononenko, I. Estimating Attributes: Analysis and Extensions of RELIEF; ECML: Berlin/Heidelberg, Germany, 1994; pp. 171–182.

49. Messina, G.; Modica, G. Applications of UAV Thermal Imagery in Precision Agriculture: State of the Art and Future Research Outlook. Remote Sens. 2020, 12, 1491.

50. Lassoued, H.; Ketata, R. ECG multi-class classification using neural network as machine learning model. In Proceedings of the 2018 International Conference on Advanced Systems and Electric Technologies (IC_ASET), Hammamet, Tunisia, 22–25 March 2018; pp. 473–478.

51. Rish, I. An empirical study of the naive Bayes classifier. IJCAI 2001 Workshop Empir. Methods Artif. Intell. 2001, 3, 41–46.

52. Awad, M.; Khanna, R. Support Vector Machines for Classification. In Efficient Learning Machines; Apress: Berkeley, CA, USA, 2015; pp. 39–66.

53. Cover, T.M.; Hart, P.E. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

54. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

55. Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

56. Viera, A.J.; Garrett, J.M. Understanding interobserver agreement: The kappa statistic. Fam. Med. 2005, 37, 360–363.

57. Loh, W.-Y. Classification and Regression Trees. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2011, 1, 14–23.

58. Gitelson, A.A.; Merzlyak, M.N. Remote estimation of chlorophyll content in higher plant leaves. Int. J. Remote Sens. 1997, 18, 2691–2697.

59. Carter, G.A.; Miller, R.L. Early detection of plant stress by digital imaging within narrow stress-sensitive wavebands. Remote Sens. Environ. 1994, 50, 295–302.

60. Gitelson, A.; Merzlyak, M.N. Spectral Reflectance Changes Associated with Autumn Senescence of Aesculus hippocastanum L. and Acer platanoides L. Leaves. Spectral Features and Relation to Chlorophyll Estimation. J. Plant Physiol. 1994, 143, 286–292.

61. Ippalapally, R.; Mudumba, S.H.; Adkay, M.; HR, N.V. Object Detection Using Thermal Imaging. In Proceedings of the 2020 IEEE 17th India Council International Conference (INDICON), New Delhi, India, 10–13 December 2020; pp. 1–6.

62. Durner, J.; Klessig, D.F. Salicylic Acid Is a Modulator of Tobacco and Mammalian Catalases. J. Biol. Chem. 1996, 271, 28492–28501.

63. Jones, H.G.; Serraj, R.; Loveys, B.R.; Xiong, L.; Wheaton, A.; Price, A.H. Thermal infrared imaging of crop canopies for the remote diagnosis and quantification of plant responses to water stress in the field. Funct. Plant Biol. 2009, 36, 978–989.

64. Alves, D.P.; Tomaz, R.S.; Laurindo, B.S.; Laurindo, R.D.F.; Silva, F.F.E.; Cruz, C.D.; Nick, C.; da Silva, D.J.H. Artificial neural network for prediction of the area under the disease progress curve of tomato late blight. Sci. Agric. 2017, 74, 51–59.

65. Sladojevic, S.; Arsenovic, M.; Anderla, A.; Culibrk, D.; Stefanovic, D. Deep Neural Networks Based Recognition of Plant Diseases by Leaf Image Classification. Comput. Intell. Neurosci. 2016, 2016, 3289801.

66. Saito, T.; Kumagai, T.O.; Tateishi, M.; Kobayashi, N.; Otsuki, K.; Giambelluca, T.W. Differences in seasonality and temperature dependency of stand transpiration and canopy conductance between Japanese cypress (Hinoki) and Japanese cedar (Sugi) in a plantation. Hydrol. Process. 2017, 31, 1952–1965.

| Vegetation Index | Computing Formula | References |
|---|---|---|
| Normalized Difference Vegetation Index (NDVI) | NDVI = (RNIR − RRed)/(RNIR + RRed) | [23] |
| Enhanced Vegetation Index (EVI) | EVI = 2.5 × (RNIR − RRed)/(RNIR + 6 × RRed − 7.5 × RBlue + 1) | [24] |
| Ratio Vegetation Index (RVI) | RVI = RNIR/RRed | [25] |
| Green Normalized Difference Vegetation Index (GNDVI) | GNDVI = (RNIR − RGreen)/(RNIR + RGreen) | [26] |
| Triangular Vegetation Index (TVI) | TVI = 60 × (RNIR − RGreen) − 100 × (RRed − RGreen) | [27] |
| Difference Vegetation Index (DVI) | DVI = RNIR − RRed | [28] |
| Normalized Difference Red Edge Index (NDRE) | NDRE = (RNIR − RRededge)/(RNIR + RRededge) | [29] |
| Optimized Soil-Adjusted Vegetation Index (OSAVI) | OSAVI = 1.16 × (RNIR − RRed)/(RNIR + RRed + 0.16) | [30] |
| Leaf Chlorophyll Index (LCI) | LCI = (RNIR − RRededge)/(RNIR + RRed) | [31] |
| Anthocyanin Reflectance Index (ARI) | ARI = (1/RGreen) − (1/RRed) | [32] |
| Cercospora Leaf Spot Index (CLSI) | CLSI = (RRededge − RGreen)/(RRededge + RGreen) − RRededge | [33] |
| Plant Pigment Ratio (PPR) | PPR = (RGreen − RBlue)/(RGreen + RBlue) | [34] |
| Greenness Index (GI) | GI = RGreen/RRed | [35] |
| Transformed Chlorophyll Absorption in Reflectance Index (TCARI) | TCARI = 3 × [(RNIR − RRed) − 0.2 × (RNIR − RGreen) × RNIR/RRed] | [36] |
| Visible Atmospherically Resistant Index (VARI) | VARI = (RGreen − RRed)/(RGreen + RRed − RBlue) | [37] |
| Plant Senescence Reflectance Index (PSRI) | PSRI = (RRed − RGreen)/RNIR | [38] |
| Modified Soil-Adjusted Vegetation Index (MSAVI) | MSAVI = [2 × RNIR + 1 − √((2 × RNIR + 1)² − 8 × (RNIR − RRed))]/2 | [39] |
| Modified Simple Ratio Index (MSR) | MSR = (RNIR/RRed − 1)/(√(RNIR/RRed) + 1) | [40] |
| Normalized Difference Greenness Index (NDGI) | NDGI = (RGreen − RRed)/(RGreen + RRed) | [41] |
| Soil-Adjusted Vegetation Index (SAVI) | SAVI = 1.5 × (RNIR − RRed)/(RNIR + RRed + 0.5) | [42] |
| Wide Dynamic Range Vegetation Index (WDRVI) | WDRVI = (0.1 × RNIR − RRed)/(0.1 × RNIR + RRed) | [43] |
| Red-Edge Chlorophyll Index (CI) | CI = (RNIR/RRededge) − 1 | [44] |
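
As an illustration of the feature-extraction step, the indices in the vegetation-index table can be computed from a single set of band reflectances, for example the mean reflectance of one canopy. The Python sketch below is an assumption, not the authors' code: the function name `vegetation_indices` and its argument names are hypothetical, reflectances are assumed to be scaled to 0–1, and for MSAVI and MSR the commonly used closed forms of these indices are assumed.

```python
import math


def vegetation_indices(blue, green, red, rededge, nir):
    """Return the 22 vegetation indices of the table for one set of band reflectances.

    Hypothetical helper: inputs are reflectances (assumed 0-1) of the blue, green,
    red, red-edge and near-infrared bands. A zero in any denominator raises
    ZeroDivisionError.
    """
    ratio = nir / red  # simple ratio, reused by RVI, TCARI and MSR
    return {
        "NDVI": (nir - red) / (nir + red),
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1),
        "RVI": ratio,
        "GNDVI": (nir - green) / (nir + green),
        "TVI": 60 * (nir - green) - 100 * (red - green),
        "DVI": nir - red,
        "NDRE": (nir - rededge) / (nir + rededge),
        "OSAVI": 1.16 * (nir - red) / (nir + red + 0.16),
        "LCI": (nir - rededge) / (nir + red),
        "ARI": 1 / green - 1 / red,
        "CLSI": (rededge - green) / (rededge + green) - rededge,
        "PPR": (green - blue) / (green + blue),
        "GI": green / red,
        "TCARI": 3 * ((nir - red) - 0.2 * (nir - green) * ratio),
        "VARI": (green - red) / (green + red - blue),
        "PSRI": (red - green) / nir,
        # Assumed standard closed form of MSAVI.
        "MSAVI": (2 * nir + 1 - math.sqrt((2 * nir + 1) ** 2 - 8 * (nir - red))) / 2,
        # Assumed standard form of the modified simple ratio.
        "MSR": (ratio - 1) / (math.sqrt(ratio) + 1),
        "NDGI": (green - red) / (green + red),
        "SAVI": 1.5 * (nir - red) / (nir + red + 0.5),
        "WDRVI": (0.1 * nir - red) / (0.1 * nir + red),
        "CI": nir / rededge - 1,
    }
```

Calling it once per canopy yields one 22-dimensional feature vector per sample, which is the kind of input the feature-selection and classification steps operate on. For whole images, the same expressions could be vectorized with NumPy arrays (replacing `math.sqrt` with `numpy.sqrt`).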

| Model | Parameter Setting |
|---|---|
| ReliefF | K: 10 |
| | Additional setting: method = classification |
| NN | Number of fully connected layers: 1 |
| | First layer size: 10 |
| | Activation: ReLU |
| | Iteration limit: 1000 |
| | Regularization strength (lambda): 0 |
| | Standardize data: Yes |
| NB | Distribution for numeric predictors: Kernel |
| | Distribution for categorical predictors: Not applicable |
| | Kernel type: Gaussian |
| | Support: Unbounded |
| SVM | Kernel function: Gaussian |
| | Kernel scale: 0.79 |
| | Box constraint level: 1 |
| | Multiclass method: One-vs-One |
| | Standardize data: Yes |
| KNN | Number of neighbors: 1 |
| | Distance metric: Euclidean |
| | Distance weights: Equal |
| | Standardize data: Yes |
| DT | Maximum number of splits: 100 |
| | Split criterion: Gini’s diversity index |
| | Surrogate decision splits: Off |
| RF | Number of trees: 1000 |
| | Maximum depth: 5 |
| | Minimum number of samples required to split a node: 5 |
| | Minimum number of samples in a leaf node: 4 |
| | Number of features considered at each split: auto |
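
The ReliefF setting (K = 10, classification) can be made concrete with a short NumPy sketch of the classic ReliefF weighting described by Robnik-Šikonja and Kononenko. For each reference sample, a feature is penalized by its average difference to the K nearest same-class neighbors (hits) and rewarded by the prior-weighted average difference to the K nearest neighbors of every other class (misses). This is an illustration under stated assumptions, not the implementation used in the study: the function name `relieff` is hypothetical, every sample serves once as the reference instance, neighbors are found with Manhattan distance on min-max scaled features, and all K neighbors are weighted equally (some implementations additionally weight neighbors by distance rank).

```python
import numpy as np


def relieff(X, y, k=10):
    """Classic ReliefF feature weights for a classification target (hypothetical helper).

    X: (n_samples, n_features) numeric matrix, y: (n_samples,) class labels,
    k: number of nearest hits/misses per class. Returns one weight per feature;
    larger means more relevant.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    n, p = X.shape

    # Min-max scale so that diff(A, I1, I2) = |x1 - x2| / (max - min) lies in [0, 1].
    lo = X.min(axis=0)
    span = X.max(axis=0) - lo
    span[span == 0] = 1.0  # constant features then contribute zero difference
    Xs = (X - lo) / span

    classes, counts = np.unique(y, return_counts=True)
    prior = dict(zip(classes, counts / n))
    w = np.zeros(p)

    for i in range(n):  # every sample serves once as the reference instance R
        diffs = np.abs(Xs - Xs[i])  # per-feature differences to all samples
        dist = diffs.sum(axis=1)    # Manhattan distance in the scaled space
        for c in classes:
            idx = np.flatnonzero(y == c)
            idx = idx[idx != i]     # never count R as its own neighbour
            kk = min(k, idx.size)
            if kk == 0:
                continue
            nearest = idx[np.argsort(dist[idx])[:kk]]
            contrib = diffs[nearest].mean(axis=0)  # average over the kk neighbours
            if c == y[i]:
                w -= contrib / n  # nearest hits: within-class variation is penalized
            else:
                # Nearest misses, weighted by the prior of class c among the other classes.
                w += prior[c] / (1.0 - prior[y[i]]) * contrib / n
    return w
```

A positive weight means a feature differs more between classes than within them. Ranking the vegetation indices and canopy temperature by these weights and keeping the top-ranked ones is how ReliefF is typically used for feature selection.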

| Model | Accuracy | Precision | Recall | F1 | Kappa |
|---|---|---|---|---|---|
| NN | 0.985 | 0.984 | 0.990 | 0.987 | 0.968 |
| NB | 0.940 | 0.940 | 0.958 | 0.949 | 0.877 |
| SVM | 0.984 | 0.983 | 0.990 | 0.986 | 0.967 |
| KNN | 0.977 | 0.977 | 0.985 | 0.981 | 0.953 |
| DT | 0.977 | 0.978 | 0.983 | 0.980 | 0.951 |
| RF | 0.987 | 0.990 | 0.988 | 0.989 | 0.972 |
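
All five indicators in the evaluation table can be derived from a confusion matrix. The sketch below is a hedged illustration: the function name `classification_metrics` is hypothetical, and because the averaging scheme behind the reported precision, recall and F1 is not stated here, macro-averaging over classes is assumed. Cohen's kappa compares the observed accuracy p_o with the agreement p_e expected by chance from the row and column totals, kappa = (p_o − p_e)/(1 − p_e).

```python
def classification_metrics(cm):
    """Accuracy, macro precision/recall/F1 and Cohen's kappa from a confusion matrix.

    cm[i][j] = number of samples whose true class is i and predicted class is j.
    Macro-averaging is an assumption of this sketch.
    """
    n_cls = len(cm)
    total = sum(sum(row) for row in cm)
    diag = [cm[c][c] for c in range(n_cls)]
    true_counts = [sum(cm[c]) for c in range(n_cls)]                           # row sums
    pred_counts = [sum(cm[r][c] for r in range(n_cls)) for c in range(n_cls)]  # column sums

    accuracy = sum(diag) / total
    precision = [diag[c] / pred_counts[c] if pred_counts[c] else 0.0 for c in range(n_cls)]
    recall = [diag[c] / true_counts[c] if true_counts[c] else 0.0 for c in range(n_cls)]
    f1 = [2 * p * r / (p + r) if p + r else 0.0 for p, r in zip(precision, recall)]

    # Chance agreement from the marginals; kappa = (p_o - p_e) / (1 - p_e).
    p_e = sum(t * q for t, q in zip(true_counts, pred_counts)) / total ** 2
    kappa = (accuracy - p_e) / (1 - p_e)

    return {
        "accuracy": accuracy,
        "precision": sum(precision) / n_cls,
        "recall": sum(recall) / n_cls,
        "f1": sum(f1) / n_cls,
        "kappa": kappa,
    }
```

For a hypothetical two-class matrix `[[40, 10], [5, 45]]`, the chance agreement is 0.5, so an accuracy of 0.85 corresponds to a kappa of about 0.70. This illustrates why kappa values in the table sit noticeably below the corresponding accuracies.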

| Region | a | b |
|---|---|---|
| A | 8.16895 | −0.29052 |
| B | 9.20805 | −0.28432 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, D.; Lu, Y.; Liang, H.; Lu, Z.; Yu, L.; Liu, Q. Areca Yellow Leaf Disease Severity Monitoring Using UAV-Based Multispectral and Thermal Infrared Imagery. Remote Sens. 2023, 15, 3114. https://doi.org/10.3390/rs15123114
