Remote 3D Displacement Sensing for Large Structures with Stereo Digital Image Correlation

Abstract

1. Introduction

1.1. Calibration of Extrinsic Parameters for Stereo-DIC

1.2. Establishment of Reference Frame for Stereo-DIC

1.3. Camera Motion Correction

2. Methodology

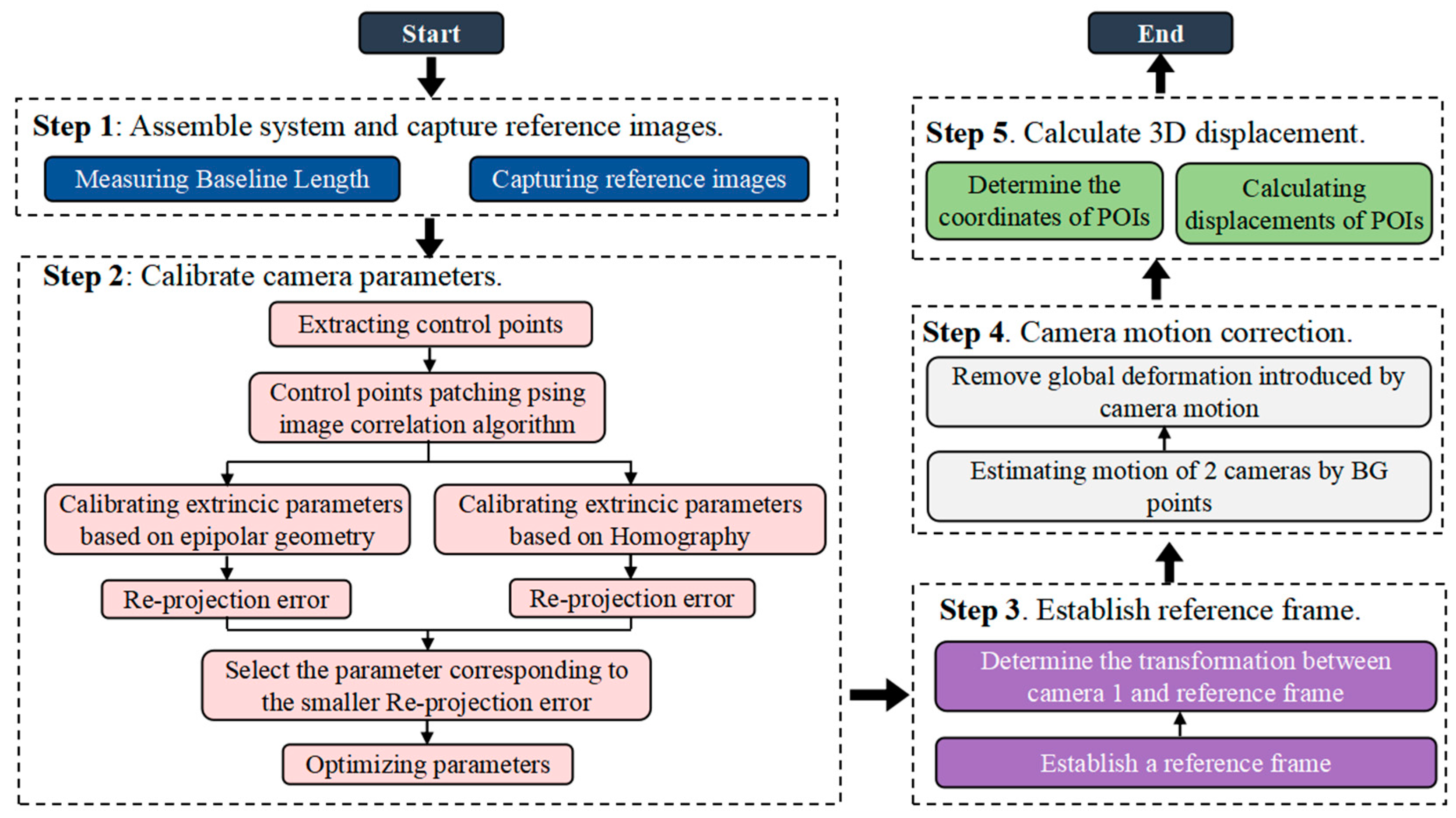

2.1. Working Procedure

2.2. Adaptive Stereo-DIC Extrinsic Parameter Self-Calibration

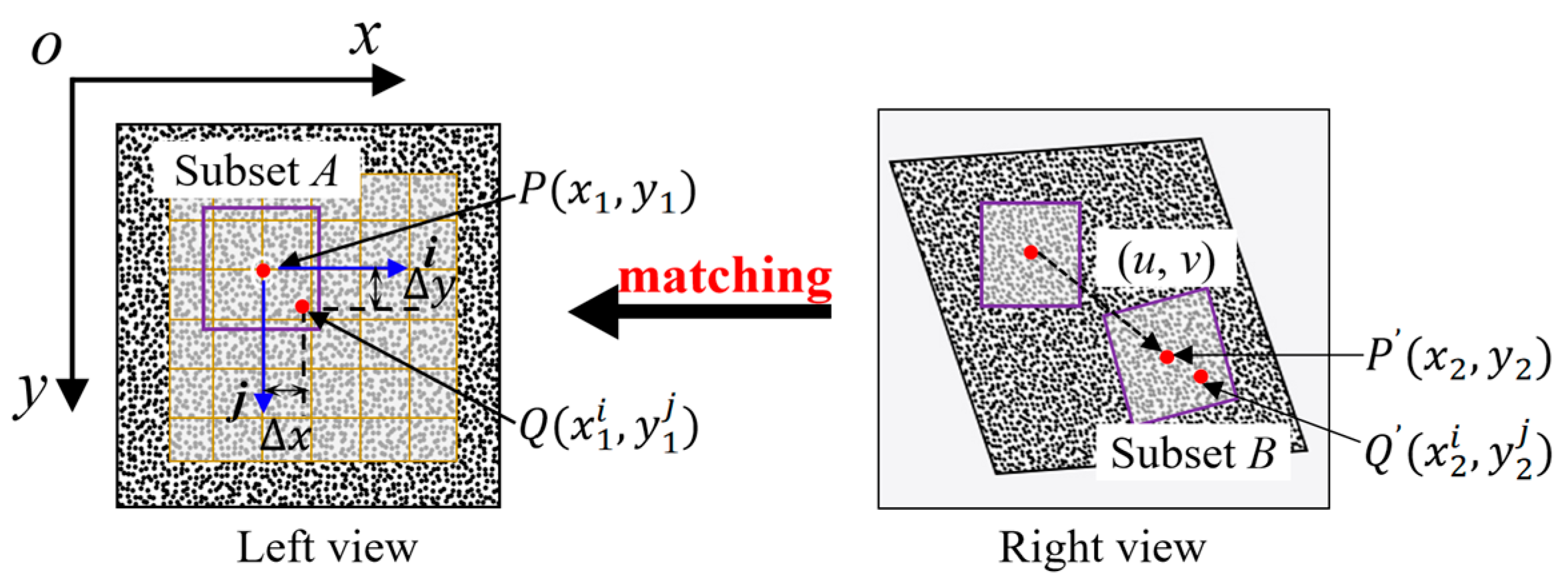

2.2.1. Control Point Matching Using Image Correlation Algorithm
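
A minimal sketch of integer-pixel control point matching follows. It assumes the zero-mean normalized cross-correlation (ZNCC) criterion that is common in DIC, together with an exhaustive search window. The function names, subset size and search radius are hypothetical. The synthetic example treats the second view as a pure translation of the first. Real stereo views also differ by perspective distortion, so a practical matcher would refine this result to subpixel accuracy with a subset shape function and an iterative optimizer; that refinement is not shown.

```python
import math
import random


def zncc(f, g):
    """Zero-mean normalized cross-correlation of two equal-size subsets (lists of rows)."""
    fv = [v for row in f for v in row]
    gv = [v for row in g for v in row]
    fm = sum(fv) / len(fv)
    gm = sum(gv) / len(gv)
    fd = [v - fm for v in fv]
    gd = [v - gm for v in gv]
    num = sum(a * b for a, b in zip(fd, gd))
    den = math.sqrt(sum(a * a for a in fd) * sum(b * b for b in gd))
    return num / den if den > 0 else 0.0


def subset(img, cx, cy, half):
    """Square subset of side 2*half+1 centred at column cx, row cy."""
    return [row[cx - half:cx + half + 1] for row in img[cy - half:cy + half + 1]]


def match_control_point(ref, tgt, cx, cy, half=5, search=6):
    """Exhaustive integer-pixel ZNCC search; returns (score, x, y) of the best match in tgt."""
    template = subset(ref, cx, cy, half)
    h, w = len(tgt), len(tgt[0])
    best = (-2.0, cx, cy)  # ZNCC lies in [-1, 1], so any valid candidate beats this
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            x, y = cx + dx, cy + dy
            if half <= x < w - half and half <= y < h - half:
                c = zncc(template, subset(tgt, x, y, half))
                if c > best[0]:
                    best = (c, x, y)
    return best


# Synthetic speckle-like image and a copy shifted 3 px right and 2 px down
rng = random.Random(0)
ref = [[rng.random() for _ in range(40)] for _ in range(40)]
tgt = [[ref[y - 2][x - 3] if y >= 2 and x >= 3 else 0.0 for x in range(40)]
       for y in range(40)]
score, u, v = match_control_point(ref, tgt, 20, 20)
print(score, u, v)
```

The best match appears at (23, 22), which is the control point (20, 20) shifted by the imposed (3, 2) pixels. Its correlation score is essentially 1.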

2.2.2. Extrinsic Parameters Calibration

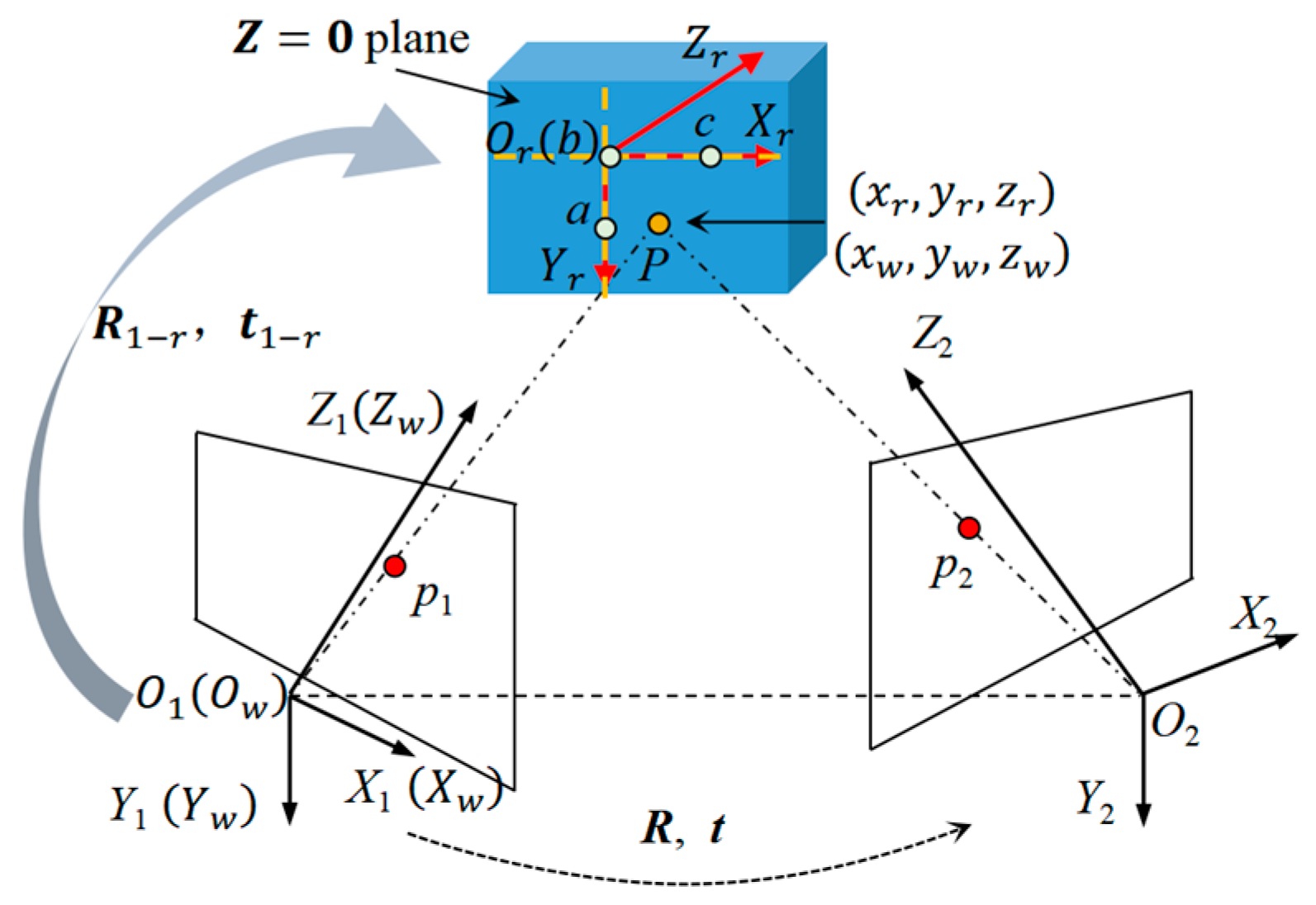
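
The epipolar geometry-based method listed among the tabulated extrinsic results can be illustrated with Hartley's normalized eight-point algorithm. This algorithm estimates the fundamental matrix F from matched control points. The sketch below is not the authors' implementation. It uses noise-free synthetic correspondences, and all camera values are hypothetical. Once the intrinsic matrices are known, the essential matrix `E = K2^T F K1` can be decomposed into R and a translation direction. The translation scale cannot be recovered from F alone and has to come from additional information, such as a known distance.

```python
import numpy as np


def normalize(pts):
    """Hartley normalization: centroid to origin, mean distance sqrt(2). Returns homogeneous points and T."""
    c = pts.mean(axis=0)
    s = np.sqrt(2) / np.linalg.norm(pts - c, axis=1).mean()
    T = np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]])
    ph = np.column_stack([pts, np.ones(len(pts))]) @ T.T
    return ph, T


def eight_point(x1, x2):
    """Fundamental matrix F with x2_h^T F x1_h = 0 from N >= 8 pixel correspondences (N x 2)."""
    p1, T1 = normalize(x1)
    p2, T2 = normalize(x2)
    # One row per correspondence: coefficients of the entries of F in row-major order
    A = np.column_stack([
        p2[:, 0] * p1[:, 0], p2[:, 0] * p1[:, 1], p2[:, 0],
        p2[:, 1] * p1[:, 0], p2[:, 1] * p1[:, 1], p2[:, 1],
        p1[:, 0], p1[:, 1], np.ones(len(p1)),
    ])
    F = np.linalg.svd(A)[2][-1].reshape(3, 3)  # right singular vector of the smallest singular value
    U, S, Vt = np.linalg.svd(F)
    F = U @ np.diag([S[0], S[1], 0.0]) @ Vt     # enforce rank 2
    F = T2.T @ F @ T1                            # undo the normalization
    return F / np.linalg.norm(F)


def project(K, R, t, X):
    """Pinhole projection of N x 3 points into pixel coordinates."""
    x = (X @ R.T + t) @ K.T
    return x[:, :2] / x[:, 2:3]


# Hypothetical stereo pair: camera 2 rotated 10 deg about y and offset (mm)
K = np.array([[2000.0, 0.0, 1000.0], [0.0, 2000.0, 800.0], [0.0, 0.0, 1.0]])
a = np.radians(10.0)
R2 = np.array([[np.cos(a), 0.0, np.sin(a)], [0.0, 1.0, 0.0], [-np.sin(a), 0.0, np.cos(a)]])
t2 = np.array([-1000.0, 0.0, 100.0])
X = np.random.default_rng(0).uniform([-500, -500, 4000], [500, 500, 6000], size=(20, 3))
x1 = project(K, np.eye(3), np.zeros(3), X)
x2 = project(K, R2, t2, X)

F = eight_point(x1, x2)
# Distance (pixels) of each x2 from its epipolar line F x1
lines = np.column_stack([x1, np.ones(len(x1))]) @ F.T
num = np.abs((np.column_stack([x2, np.ones(len(x2))]) * lines).sum(axis=1))
d = num / np.hypot(lines[:, 0], lines[:, 1])
print(d.max())
```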

2.3. Establishment of the Reference Frame
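
A common way to define a structure-fixed reference frame uses three non-collinear control points. The first point serves as the origin, the second fixes the x axis, and the third fixes the x-y plane. The sketch below assumes the points have already been reconstructed in camera coordinates. The choice of points, the axis convention and the helper names are assumptions and are not taken from the source.

```python
import math


def sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def dot(a, b):
    return sum(a[i] * b[i] for i in range(3))


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def unit(a):
    n = math.sqrt(dot(a, a))
    return [v / n for v in a]


def frame_from_points(origin, x_point, plane_point):
    """Right-handed orthonormal axes: x toward x_point, z normal to the plane of the three points."""
    ex = unit(sub(x_point, origin))
    ez = unit(cross(ex, sub(plane_point, origin)))
    ey = cross(ez, ex)  # unit length because ez and ex are orthonormal
    return ex, ey, ez


def to_frame(p, origin, axes):
    """Coordinates of camera-frame point p in the reference frame (origin, axes)."""
    d = sub(p, origin)
    return [dot(d, e) for e in axes]


# Hypothetical control points in camera coordinates (mm)
o = [100.0, 200.0, 5000.0]
axes = frame_from_points(o, [700.0, 200.0, 5800.0], [100.0, 1200.0, 5000.0])
q = to_frame([400.0, 700.0, 5400.0], o, axes)
print(q)  # approximately [500.0, 500.0, 0.0]
```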

2.4. Camera Motion Correction

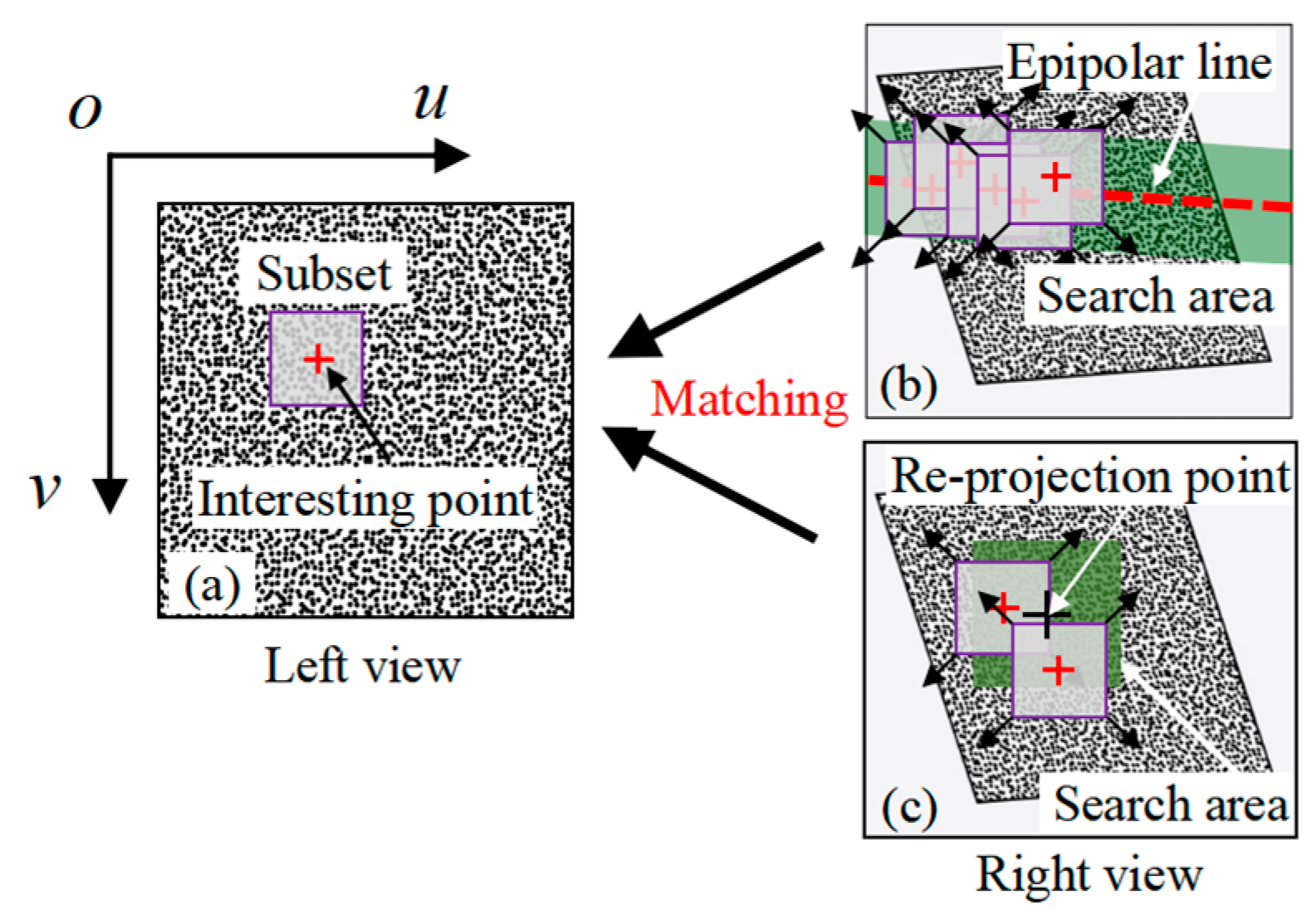
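
Suppose the stereo rig moves as a rigid body, with both cameras moving together and their relative orientation unchanged. In that case, the apparent motion of stationary reference points can be removed by estimating a rigid transform between epochs. The sketch below assumes at least three non-collinear stationary reference points, each measured in the stereo coordinate system at both epochs. It uses the SVD-based (Kabsch) least-squares solution. This illustrates a common correction technique and is not necessarily the model used in this work. All point coordinates and motion values are hypothetical.

```python
import numpy as np


def rigid_transform(P, Q):
    """Least-squares rotation R and translation t with Q ≈ P @ R.T + t (Kabsch / SVD); P, Q are N x 3."""
    cp, cq = P.mean(axis=0), Q.mean(axis=0)
    H = (P - cp).T @ (Q - cq)
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, cq - R @ cp


def correct_camera_motion(ref_before, ref_after, targets_after):
    """Map target coordinates measured after rig motion back into the initial coordinate system."""
    R, t = rigid_transform(ref_after, ref_before)
    return targets_after @ R.T + t


# Hypothetical stationary reference points (mm) in the initial stereo coordinate system
ref0 = np.array([[0.0, 0.0, 5000.0], [1000.0, 0.0, 5200.0],
                 [0.0, 1000.0, 4800.0], [1000.0, 1000.0, 5100.0]])
target0 = np.array([[500.0, 500.0, 5000.0]])
disp = np.array([0.0, 10.0, 0.0])  # true structural displacement

# Simulated rig motion: 0.2 deg about the optical (z) axis plus a small translation
a = np.radians(0.2)
Rm = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
tm = np.array([5.0, -3.0, 2.0])
ref1 = ref0 @ Rm.T + tm
target1 = (target0 + disp) @ Rm.T + tm

corrected = correct_camera_motion(ref0, ref1, target1)
print(corrected - target0)  # recovered displacement
```

Without the correction, the simulated rotation and translation of the rig would appear in `target1 - target0` as spurious displacement.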

2.5. Coordinate Localization
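
After both cameras are calibrated, the 3D coordinates of a matched point can be localized by triangulation. The sketch below shows linear (DLT) triangulation. It assumes pinhole projection matrices P = K[R | t] and pixel coordinates that have already been corrected for distortion. The camera values are hypothetical. In practice, a nonlinear refinement that minimizes reprojection error is often added; that step is not shown here.

```python
import numpy as np


def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point from pixel coordinates x1, x2 and 3 x 4 projection matrices."""
    # Each view gives two equations: u * (row3 . X) - (row1 . X) = 0 and v * (row3 . X) - (row2 . X) = 0
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    X = np.linalg.svd(A)[2][-1]  # null vector of A (homogeneous point)
    return X[:3] / X[3]


def project(P, X):
    """Pixel coordinates of a single 3D point under projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


# Hypothetical calibrated pair (units: metres); camera 1 defines the coordinate system
K = np.array([[2000.0, 0.0, 1000.0], [0.0, 2000.0, 800.0], [0.0, 0.0, 1.0]])
a = np.radians(-8.0)
R2 = np.array([[np.cos(a), 0.0, np.sin(a)], [0.0, 1.0, 0.0], [-np.sin(a), 0.0, np.cos(a)]])
t2 = np.array([-1.0, 0.0, 0.1])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([R2, t2.reshape(3, 1)])

X_true = np.array([0.2, -0.15, 5.0])
X_hat = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
print(X_hat)
```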

3. Experiments and Results

3.1. Configuration of Stereo-DIC System

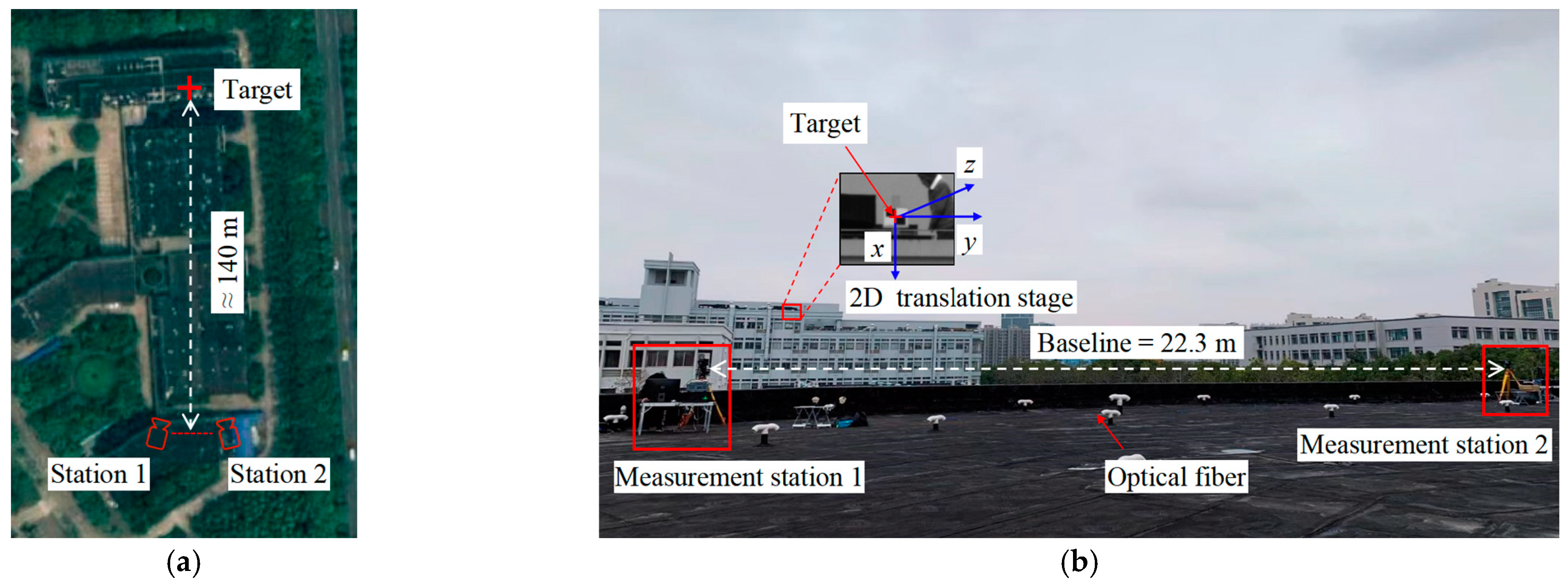

3.2. Validation Experiment

3.2.1. Parameters of the Stereo-DIC System

3.2.2. Reference Frame

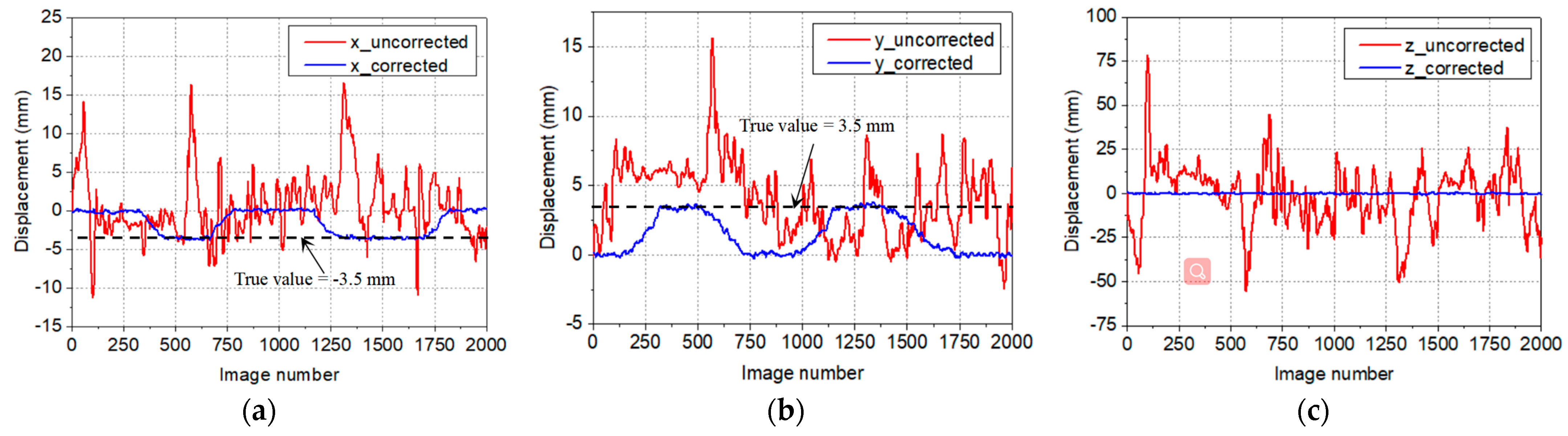

3.2.3. Displacements of Target

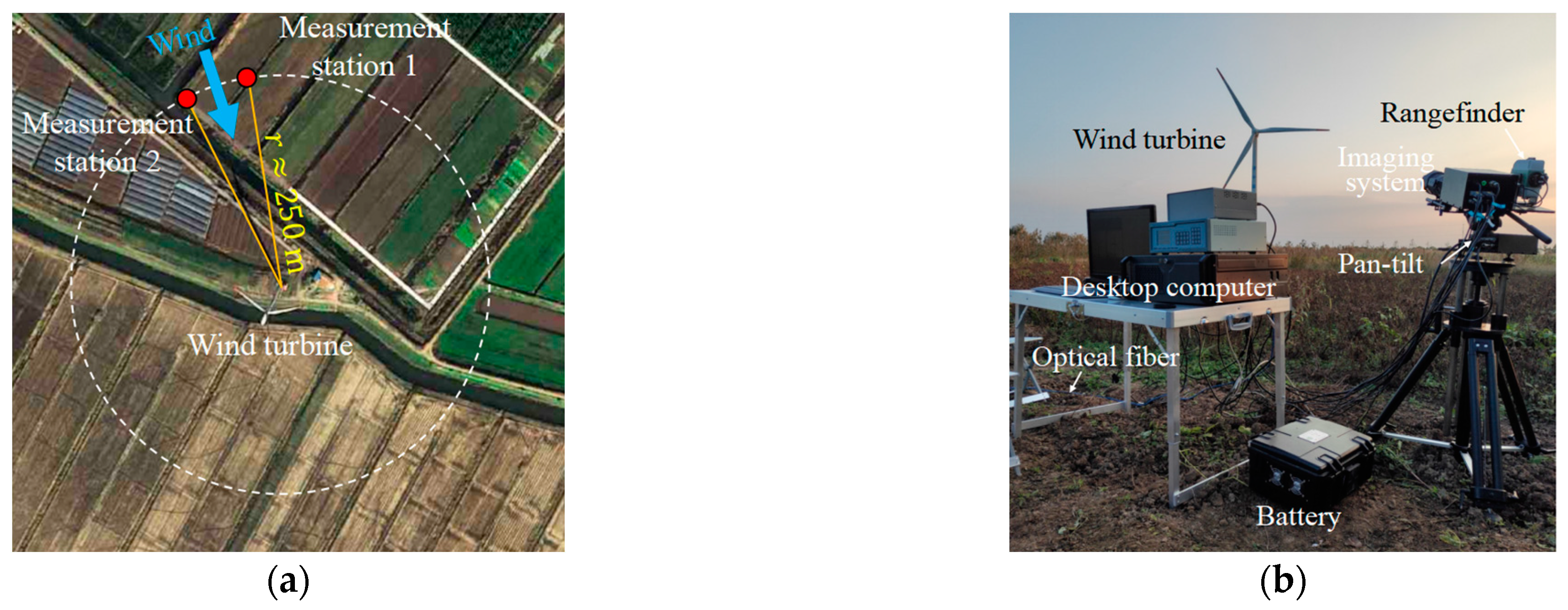

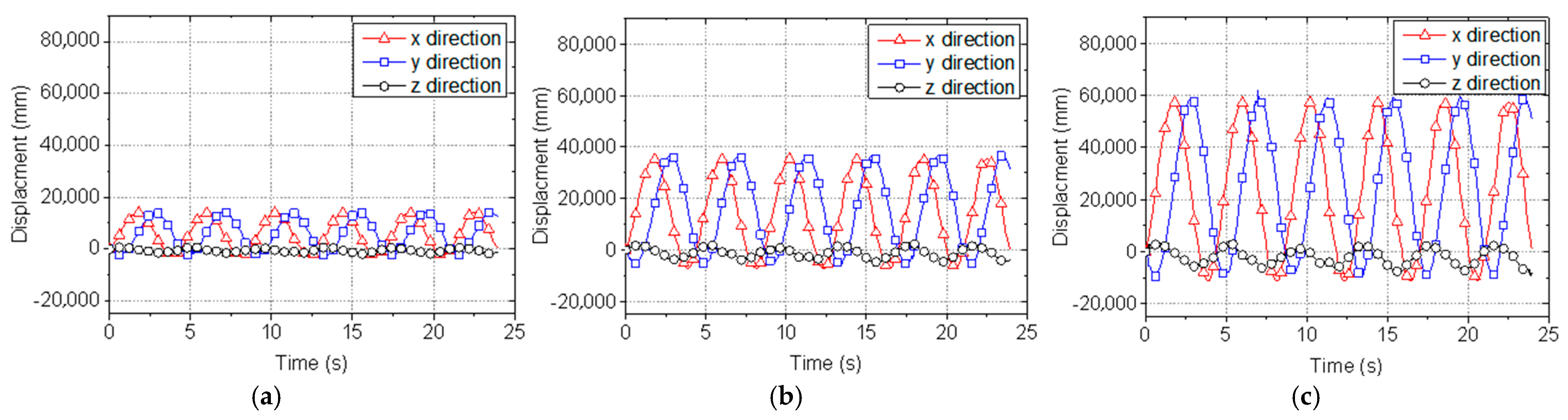

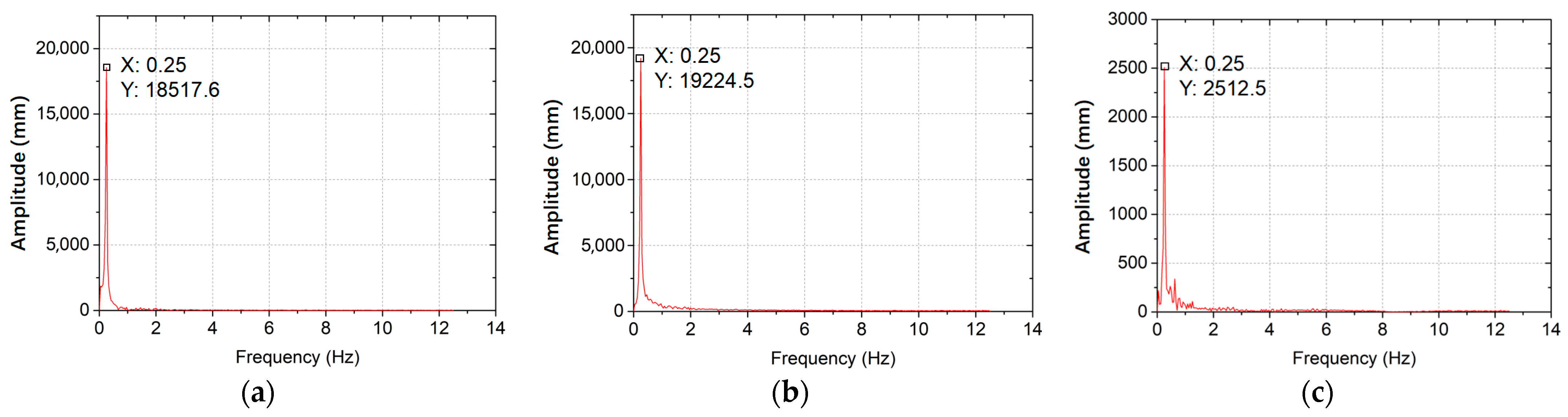

3.3. Health Diagnosis of Wind Turbine Blades

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Intrinsic Parameters | /Pixels | /Pixels | |
|---|---|---|---|
| Camera 1 | (1261.06, 1275.54) | (15,911.12, 16,069.63) | (0.56, −31.27) |
| Camera 2 | (1258.26, 1279.05) | (15,870.17, 16,088.24) | (0.38, 15.62) |

| Extrinsic Parameters | Method | Rotation Vector (°) | Translation Vector (mm) | Error (pixels) |
|---|---|---|---|---|
| | Epipolar geometry-based | () | (22,041.47, −186.96, 7551.71) | 0.21 |
| | Homography-based | (0.14, 8.32, −0.64) | (22,035.01, −115.29, 7573.09) | 0.47 |
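
The extrinsic rotations in the table above are given as rotation vectors in degrees. The sketch below assumes the axis-angle convention, in which the vector's direction is the rotation axis and its magnitude is the rotation angle. Under that assumption, Rodrigues' formula converts a rotation vector into a rotation matrix. The source does not state this convention, so it is an assumption, and the function name is hypothetical. The stereo baseline equals the norm of the translation vector, and this holds whichever direction the camera-to-camera transform is defined in. The example uses the homography-based row.

```python
import math


def rotvec_deg_to_matrix(rv_deg):
    """Rodrigues' formula: axis-angle vector in degrees -> 3 x 3 rotation matrix (nested lists)."""
    rv = [math.radians(v) for v in rv_deg]
    theta = math.sqrt(sum(v * v for v in rv))
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (v / theta for v in rv)
    c, s = math.cos(theta), math.sin(theta)
    C = 1.0 - c
    return [
        [c + kx * kx * C, kx * ky * C - kz * s, kx * kz * C + ky * s],
        [ky * kx * C + kz * s, c + ky * ky * C, ky * kz * C - kx * s],
        [kz * kx * C - ky * s, kz * ky * C + kx * s, c + kz * kz * C],
    ]


# Homography-based row of the extrinsic table
R = rotvec_deg_to_matrix((0.14, 8.32, -0.64))
t = (22035.01, -115.29, 7573.09)  # mm

angle_deg = math.degrees(math.acos((R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0))
baseline_mm = math.sqrt(sum(v * v for v in t))  # |t| = distance between camera centres
print(angle_deg, baseline_mm)
```

For this row, the rotation magnitude is about 8.35° and the baseline is about 23.3 m.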

| Rotation Angle (°) | Translation Vector (mm) |
|---|---|
| (0.25, 7.54, 0.19) | (−19,286.21, −987.17, 135,041.34) |

| Intrinsic Parameters | /Pixels | /Pixels | |
|---|---|---|---|
| Camera 1 | (2194.99, 2374.92) | (16,716.56, 16,554.44) | (−0.06, 4.58) |
| Camera 2 | (2175.87, 2474.15) | (16,195.47, 16,036.88) | (−0.01, −2.41) |

| Extrinsic Parameters | Rotation Vector (°) | Translation Vector (mm) | Error (pixels) |
|---|---|---|---|
| | (−0.24, 14.19, −2.97) | (63,252.85, 2414.49, 8747.62) | 0.15 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Feng, W.; Li, Q.; Du, W.; Zhang, D. Remote 3D Displacement Sensing for Large Structures with Stereo Digital Image Correlation. Remote Sens. 2023, 15, 1591. https://doi.org/10.3390/rs15061591
