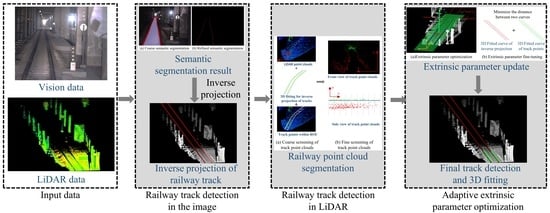

3.1. Railway Track Detection in the Image

In this study, railway track detection and 3D fitting aim to obtain the spatial coordinates of the tracks in order to determine their accurate 3D trajectory. We do not intend to model the detailed size and external shape of the tracks [34,35]. Therefore, a two-stage method for railway track detection in the image is proposed.

The first stage of railway track detection in the image is semantic segmentation, which extracts the railway track pixels from the image.

In the camera view, the geometric properties of the railway tracks are not apparent because of the perspective projection. The second stage is therefore inverse projection, which recovers the 3D coordinates of the railway tracks and allows us to explore their geometric characteristics.

3.1.1. Railway Track Semantic Segmentation

A semantic segmentation network is applied to obtain accurate rail pixels from the images of the on-board camera, which is used together with an on-board LiDAR. To address stability concerns during the train's operation, we apply BiSeNet V2 [36], a classical lightweight semantic segmentation network that ensures computational efficiency and speed. The model is trained on a public dataset for semantic scene understanding for trains and trams [21], using three class labels: rail-raised, rail-track, and background. The segmentation results for the railway track are depicted in Figure 2b, where red pixels represent the railway tracks and purple pixels represent the railway track area. From these outputs, the semantic segmentation of the railway tracks is extracted, as shown in Figure 2c.

The semantic segmentation results are coarse, and transforming them directly into 3D space through inverse projection would hinder accurate extraction of the railway track trajectory. Therefore, we refine the segmentation by extracting the centerline of each railway track, which serves as the final representation of the track, as shown in Figure 2d.
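The text does not say how the centerline is computed. A minimal sketch, assuming a binary rail mask and a simple per-row rule (each horizontal run of rail pixels collapses to its midpoint column); the function name is hypothetical and the rule is one common choice, not necessarily the authors' method:

```python
import numpy as np


def row_run_centers(mask: np.ndarray) -> list[tuple[int, float]]:
    """Return (row, column) centers of every horizontal run of rail pixels.

    Hypothetical centerline extraction: each contiguous run of True pixels
    in a row is replaced by its midpoint column, thinning a thick rail mask
    to one sample per rail per row.
    """
    centers = []
    for row in range(mask.shape[0]):
        # Pad with False so every run has both a rising and a falling edge.
        padded = np.concatenate(([False], mask[row].astype(bool), [False]))
        edges = np.flatnonzero(np.diff(padded.astype(np.int8)))
        starts, ends = edges[0::2], edges[1::2]  # ends are exclusive
        for s, e in zip(starts, ends):
            centers.append((row, (s + e - 1) / 2.0))
    return centers
```

For a row `[0, 1, 1, 1, 0, 0, 1, 1, 0]`, the two runs give centers at columns 2.0 and 6.5.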

3.1.2. Inverse Projection

With the semantic segmentation of the tracks, the inverse projection of the tracks can be performed.

Figure 3 illustrates the positions of the camera and LiDAR on the train, along with the coordinate systems for the camera, LiDAR, and the rail.

To enhance the reflectivity of the railway track surface and thus obtain points at greater distances, the camera and LiDAR are tilted downward. All these coordinate systems follow the right-handed coordinate system convention of OpenGL. The transformation relationship between the coordinate systems is defined by Equation (1), where $P_L$, $P_C$, and $P_R$ are the coordinates of the same point $P$ in the LiDAR, camera, and rail coordinate systems, respectively; $R_{CL}$, $R_{RL}$, and $R_{RC}$ are rotation matrices; and $T_{CL}$, $T_{RL}$, and $T_{RC}$ are translation matrices between the coordinate systems.

$$
P_L = R_{CL} P_C + T_{CL}, \qquad P_L = R_{RL} P_R + T_{RL}, \qquad P_C = R_{RC} P_R + T_{RC} \tag{1}
$$
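The relations in Equation (1) are chained rigid transforms. A minimal sketch, assuming each transform has the form $p' = R\,p + T$ (for example rail to camera, then camera to LiDAR); the helper names are hypothetical:

```python
import numpy as np


def apply_rigid(R: np.ndarray, T: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Map an (N, 3) array of points with p' = R p + T (row-vector form)."""
    return pts @ R.T + T


def compose(R_outer, T_outer, R_inner, T_inner):
    """Chain two rigid transforms: apply (R_inner, T_inner) first, then (R_outer, T_outer).

    Example: rail->camera followed by camera->LiDAR gives rail->LiDAR,
    R_RL = R_CL @ R_RC and T_RL = R_CL @ T_RC + T_CL.
    """
    return R_outer @ R_inner, R_outer @ T_inner + T_outer
```

Because the composition is exact, the rail-to-LiDAR result is identical to transforming points twice.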

The transformation relationship between the camera coordinate system and the pixel coordinate system is given by Equation (2), where $(u, v)$ are pixel coordinates, $K^{-1}$ is the inverse of the camera intrinsic parameter matrix $K$, which is obtained through camera calibration [37] before the train's operation, and $Z_c$ is the depth from the object point to the image plane. Clearly, to obtain the 3D coordinates of the railway tracks, the depth $Z_c$ corresponding to each pixel on the tracks must be determined.

$$
P_C = Z_c\, K^{-1} \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} \tag{2}
$$
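Equation (2) is a plain back-projection once the depth is known. A minimal sketch, assuming an OpenCV-style pinhole convention (x right, y down, z forward); the paper's OpenGL axes may flip the signs of y and z. The function name is hypothetical:

```python
import numpy as np


def back_project(u: float, v: float, depth: float, K: np.ndarray) -> np.ndarray:
    """Camera coordinates of pixel (u, v) at depth Z_c: P_C = Z_c * K^{-1} [u, v, 1]^T."""
    pixel = np.array([u, v, 1.0])
    # Solving K x = pixel is numerically preferable to forming K^{-1} explicitly.
    return depth * np.linalg.solve(K, pixel)
```

With $f_x = f_y = 1000$ px and principal point $(640, 360)$, pixel $(740, 460)$ at $Z_c = 20$ m maps to $(2, 2, 20)$ m.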

Two assumptions are proposed based on the geometric characteristics of the railway tracks:

- (1) Within a short distance in front of the train, the slope of the railway tracks changes slowly [13].
- (2) In the semantic segmentation of the railway tracks, points in the same image row (i.e., with the same ordinate $v$) share the same depth $Z_c$.

For assumption (1), we consider only a short distance ahead of the train (200 m), within which the variation in slope is negligible. Whether the railway tracks within this range lie on a plane should be judged by the variation in slope rather than by the slope itself. For assumption (2), on curved track the heights of the inner and outer rails differ, so points with the same depth $Z_c$ can have different $v$-values. However, because the radius of curvature of railway tracks is large, these differences in $v$ mainly cause longitudinal deviations during inverse projection, while lateral deviations remain relatively small. Given the continuity of the railway tracks, longitudinal deviations affect train operation safety far less than lateral deviations, so the errors introduced by assumption (2) are acceptable in practical applications.

Figure 4 illustrates the side view of the camera. Once the camera is installed, its mounting height $h$ and the angle $\theta$ between the camera optical axis $z_c$ and the railway track plane are fixed. For any point $P$ on the surface of the railway track, the 3D coordinates of that point can be determined from the angle $\alpha$ between the line $O_cP$ and the $z_c$ axis, as shown in Equation (3), where $O_c$ is the camera optical center and $L$ represents the length of the line segment $O_cP$.
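The side-view geometry can be written out explicitly. The following is our own derivation under stated assumptions (flat track plane, zero camera roll, camera height $h$, optical axis tilted down by $\theta$, and ray angle $\alpha$ measured positive below the optical axis), not necessarily the exact form of Equation (3). The ray to $P$ meets the track plane at angle $\theta + \alpha$, which fixes the range $L$, the depth $Z_c$, and the forward distance $D$ along the track (a symbol introduced here):

```latex
\begin{aligned}
L   &= \frac{h}{\sin(\theta + \alpha)}, \\
Z_c &= L\cos\alpha = \frac{h\cos\alpha}{\sin(\theta + \alpha)}, \\
D   &= L\cos(\theta + \alpha) = \frac{h}{\tan(\theta + \alpha)},
\end{aligned}
\qquad \theta + \alpha > 0 .
```

Since $\alpha$ depends only on the image row, $Z_c$ does too, which is consistent with assumption (2) on a flat track.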

Figure 5 illustrates the pinhole camera model. Based on assumption (2), points projected onto the same image row share the same depth $Z_c$ in the camera coordinate system. The angle between the ray from the optical center through the image point $p$ and the optical axis corresponds to $\alpha$ as defined in Equation (3). From the geometric relationships, $\alpha$ can be computed by Equation (4), where $y$ is the y-coordinate of point $p$ in the image coordinate system, $f$ is the focal length, $v$ is the ordinate of point $p$ in the pixel coordinate system, $v_0$ is the ordinate of the camera's optical center (principal point) in the pixel coordinate system, $d_y$ is the physical size of each pixel on the camera sensor, and $f_y = f/d_y$ is the focal length expressed in pixels. The values $v_0$ and $f_y$ can be obtained through camera calibration [37].

$$
\alpha = \arctan\frac{y}{f} = \arctan\frac{(v - v_0)\,d_y}{f} = \arctan\frac{v - v_0}{f_y} \tag{4}
$$
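Equation (4) can be checked numerically: the metric form (sensor coordinate $y$ and focal length $f$) and the pixel form ($v - v_0$ and $f_y$) give the same angle. A minimal sketch with hypothetical function names, assuming $v$ grows downward so that $\alpha > 0$ below the principal point:

```python
import math


def alpha_from_row(v: float, v0: float, f_y: float) -> float:
    """Angle (rad) between the ray through image row v and the optical axis,
    alpha = arctan((v - v0) / f_y); positive below the principal point."""
    return math.atan2(v - v0, f_y)


def alpha_from_sensor(v: float, v0: float, d_y: float, f: float) -> float:
    """Same angle from metric quantities: y = (v - v0) * d_y, alpha = arctan(y / f)."""
    y = (v - v0) * d_y
    return math.atan2(y, f)
```

For example, an 8 mm lens with 4 µm pixels gives $f_y = 2000$ px, and row $v = 1360$ with $v_0 = 360$ yields $\alpha = \arctan(0.5)$ either way.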

By integrating Equations (2)–(4), the camera coordinates of the railway track can be determined from its pixel coordinates, as expressed in Equation (5).
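Putting the pieces together gives a vectorized inverse projection of rail pixels onto the track plane. This is a sketch, not Equation (5) verbatim: it assumes OpenCV-style camera axes (x right, y down, z forward) rather than the OpenGL convention of the paper, zero roll, a flat track plane at height $h$ below the optical center, and the depth $Z_c = h\cos\alpha/\sin(\theta+\alpha)$ derived from the side-view geometry. The function name is hypothetical:

```python
import numpy as np


def pixels_to_camera(uv: np.ndarray, K: np.ndarray, h: float, theta: float) -> np.ndarray:
    """Inverse-project (N, 2) rail pixels onto the track plane.

    Returns (N, 3) camera coordinates; rows whose ray points at or above the
    horizon (theta + alpha <= 0) never hit the plane and are returned as NaN.
    """
    u, v = uv[:, 0].astype(float), uv[:, 1].astype(float)
    f_y, v0 = K[1, 1], K[1, 2]
    alpha = np.arctan2(v - v0, f_y)            # Eq. (4): angle below the optical axis
    valid = np.sin(theta + alpha) > 1e-9
    depth = np.full(len(v), np.nan)
    # Depth depends only on the row v, matching assumption (2).
    depth[valid] = h * np.cos(alpha[valid]) / np.sin(theta + alpha[valid])
    rays = np.linalg.solve(K, np.stack([u, v, np.ones_like(u)]))  # K^{-1}[u, v, 1]^T, shape (3, N)
    return (rays * depth).T                    # Eq. (2): P_C = Z_c K^{-1}[u, v, 1]^T
```

Every valid output satisfies $Y\cos\theta + Z\sin\theta = h$ in these axes, i.e. it lies on the assumed track plane.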

Based on Equation (1), the camera coordinates of the railway track points are transformed into LiDAR coordinates. The LiDAR and camera are rigidly mounted together, so the extrinsic parameters between them are theoretically constant; they can be determined through LiDAR–camera calibration [38] before the train's operation.

3.3. Adaptive Extrinsic Parameter Optimization

The camera and LiDAR are fixed on a single device, which is then installed on the train, so the extrinsic parameters between the camera and LiDAR remain constant. During actual operation, however, the train may vibrate slightly, and when it ascends or descends slopes, the angle between the camera's optical axis and the railway track plane may change. Consequently, the extrinsic parameters between the camera and the rail coordinate system may change. If the semantic segmentation of the railway track is inverse-projected and transformed into the LiDAR coordinate system with incorrect extrinsic parameters, the result deviates from the true track position. Adaptive extrinsic parameter optimization is therefore crucial.

In this study, the extrinsic parameters between the camera and LiDAR are assumed to be constant. If the adaptive optimization of the extrinsic parameters between the LiDAR and the rail coordinate systems is achieved, then based on Equation (1), the extrinsic parameters between the camera and the rail coordinate systems can be computed.
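With camera-to-LiDAR fixed and rail-to-LiDAR updated online, camera-to-rail follows from inverting one transform: $R_{RC} = R_{CL}^{\mathsf T} R_{RL}$ and $T_{RC} = R_{CL}^{\mathsf T}(T_{RL} - T_{CL})$. A minimal sketch, assuming transforms of the form $P_L = R_{CL}P_C + T_{CL}$ and $P_L = R_{RL}P_R + T_{RL}$; the function name is hypothetical:

```python
import numpy as np


def rail_to_camera(R_rl, T_rl, R_cl, T_cl):
    """Given rail->LiDAR (updated online) and camera->LiDAR (fixed calibration),
    return (R_rc, T_rc) such that P_C = R_rc @ P_R + T_rc."""
    R_lc = R_cl.T                  # the inverse of a rotation is its transpose
    R_rc = R_lc @ R_rl
    T_rc = R_lc @ (T_rl - T_cl)
    return R_rc, T_rc
```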

As illustrated in Figure 3, the origin of the rail coordinate system is located at the center between the left and right railway tracks, directly below the LiDAR. The x-axis is perpendicular to the right railway track and points towards the left railway track. The y-axis is perpendicular to the railway track plane and points upwards. The z-axis points in the train's direction of travel, along the tangent of the railway track. Using the LiDAR point clouds of the left and right railway tracks, the pose of the rail coordinate system in the LiDAR coordinate system can be determined, and the extrinsic parameters between the LiDAR and rail coordinate systems can then be calculated.

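The three axes and the origin of the rail coordinate system, expressed in the rail coordinate system, are denoted as $x_R$, $y_R$, $z_R$, and $O_R$; expressed in the LiDAR coordinate system, they are denoted as $x_L$, $y_L$, $z_L$, and $O_L$, as illustrated by the yellow coordinate system in Figure 8.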

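Based on Equation (1), the two sets of parameters are related by Equation (9), where $R$ and $T$ represent the rotation matrix and translation matrix from the rail coordinate system to the LiDAR coordinate system, respectively.

$$
\begin{bmatrix} x_L & y_L & z_L \end{bmatrix} = R \begin{bmatrix} x_R & y_R & z_R \end{bmatrix}, \qquad O_L = R\,O_R + T \tag{9}
$$

In fact, $x_R$, $y_R$, $z_R$, and $O_R$ are defined as $(1,0,0)^{\mathsf T}$, $(0,1,0)^{\mathsf T}$, $(0,0,1)^{\mathsf T}$, and $(0,0,0)^{\mathsf T}$, respectively, while $x_L$, $y_L$, $z_L$, and $O_L$ are computed from the LiDAR point clouds of the left and right railway tracks. Consequently, Equation (9) gives $R = [x_L\; y_L\; z_L]$ and $T = O_L$ directly. The proposed extrinsic parameter optimization algorithm is shown in Algorithm 1.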

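| Algorithm 1 Extrinsic Parameter Optimization |
| --- |
| Input: LiDAR points of railway tracks |
| Output: Extrinsic parameters between coordinate systems |
| $P_l$, $P_r$: LiDAR points of the left and right railway tracks |
| $l_l$, $l_r$: Fitted spatial straight lines based on least-squares |
| 1: $z_L$ ← direction of the fitted line; $y_L$ ← normal of the RANSAC-fitted track plane; $x_L$ ← $y_L \times z_L$ |
| 2: $c_l$, $c_r$ ← centers of $P_l$ and $P_r$, respectively; $O_L$ ← $(c_l + c_r)/2$ translated along the z-axis to $z = 0$ |
| 3: $R$, $T$ ← Equation (9) |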

Step 1: Use the least-squares method to fit a spatial straight line to the near track points, as illustrated by the red fitted line in Figure 8. The direction of this line corresponds to $z_L$. Apply the RANSAC algorithm to fit the railway track plane, as illustrated by the green fitted plane in Figure 8. The normal vector of the plane corresponds to $y_L$. The cross product $y_L \times z_L$ then gives $x_L$.
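Step 1 can be sketched with NumPy alone. Assumptions beyond the text, all hypothetical choices: the least-squares line is the orthogonal (SVD) fit; one line is fitted per rail and the two directions are averaged; RANSAC uses 200 iterations and a 2 cm tolerance with a least-squares refit on inliers; $z_L$ is re-orthogonalized against $y_L$ (an extra step); and the LiDAR $+z$ and $+y$ axes point roughly forward and up, which fixes the signs.

```python
import numpy as np


def fit_line_direction(pts: np.ndarray) -> np.ndarray:
    """Unit direction of the orthogonal least-squares 3D line through pts."""
    _, _, vt = np.linalg.svd(pts - pts.mean(axis=0), full_matrices=False)
    return vt[0]  # direction of largest spread


def ransac_plane(pts: np.ndarray, iters: int = 200, tol: float = 0.02, seed: int = 0) -> np.ndarray:
    """Unit normal of the dominant plane: RANSAC, then a least-squares refit on inliers."""
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(iters):
        a, b, c = pts[rng.choice(len(pts), 3, replace=False)]
        n = np.cross(b - a, c - a)
        norm = np.linalg.norm(n)
        if norm < 1e-9:  # skip collinear samples
            continue
        inliers = np.abs((pts - a) @ (n / norm)) < tol
        if best is None or inliers.sum() > best.sum():
            best = inliers
    if best is None:
        raise ValueError("no non-degenerate sample found")
    inl = pts[best]
    _, _, vt = np.linalg.svd(inl - inl.mean(axis=0), full_matrices=False)
    return vt[-1]  # direction of smallest spread = plane normal


def _orient(v: np.ndarray, axis: int) -> np.ndarray:
    """Flip v so that its component along `axis` is non-negative."""
    return v if v[axis] >= 0 else -v


def rail_axes(left: np.ndarray, right: np.ndarray):
    """x_L, y_L, z_L of the rail frame expressed in the LiDAR frame."""
    z = _orient(fit_line_direction(left), 2) + _orient(fit_line_direction(right), 2)
    y = _orient(ransac_plane(np.vstack([left, right])), 1)
    z = z - (z @ y) * y      # make z exactly perpendicular to the plane normal
    z /= np.linalg.norm(z)
    x = np.cross(y, z)       # x = y x z completes a right-handed frame
    return x, y, z
```

On noise-free rails pitched by 2°, the recovered axes equal the columns of the pitch rotation.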

Step 2: Compute the centers $c_l$ and $c_r$ of the left and right railway tracks, respectively (the two track-center points in Figure 8), and then their midpoint $m = (c_l + c_r)/2$ (also marked in Figure 8). Translate $m$ along the z-axis until its z-value is 0; the resulting point corresponds to $O_L$. This translation is necessary because no track points are observed directly below the LiDAR.
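Step 2 reduces to a mean, a midpoint, and a line-plane intersection. A minimal sketch with a hypothetical function name; the rail centers are taken as point means (an assumption), and by default the midpoint slides along the LiDAR z-axis (the literal reading of Step 2). Passing the fitted track direction $z_L$ instead keeps the point on the track centerline, which is an alternative reading rather than the stated method:

```python
import numpy as np


def rail_origin(left: np.ndarray, right: np.ndarray, direction=(0.0, 0.0, 1.0)) -> np.ndarray:
    """O_L sketch: midpoint of the two rail centers, moved along `direction` to z = 0."""
    c_l, c_r = left.mean(axis=0), right.mean(axis=0)  # centers of each rail's points
    m = (c_l + c_r) / 2.0                               # midpoint between the rails
    d = np.asarray(direction, dtype=float)
    if abs(d[2]) < 1e-9:
        raise ValueError("direction must have a non-zero z component")
    return m - (m[2] / d[2]) * d                        # point on the line with z = 0
```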

Step 3: Calculate the rotation matrix and translation matrix from the rail coordinate system to the LiDAR coordinate system based on Equation (9).
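Step 3 follows directly from Equation (9): with the rail axes as unit vectors and the rail origin at zero, the axes $x_L$, $y_L$, $z_L$ become the columns of $R$ and $T = O_L$. A minimal sketch with hypothetical names; projecting $R$ onto the nearest rotation via SVD is an extra safeguard, not part of the source:

```python
import numpy as np


def rail_to_lidar_extrinsics(x_l, y_l, z_l, o_l):
    """Equation (9) with x_R, y_R, z_R = unit vectors and O_R = 0:
    R = [x_L y_L z_L] (axes as columns) and T = O_L."""
    R = np.column_stack([x_l, y_l, z_l]).astype(float)
    U, _, Vt = np.linalg.svd(R)  # safeguard: snap R to the nearest orthonormal matrix
    R = U @ Vt
    if np.linalg.det(R) < 0:
        raise ValueError("axes do not form a right-handed frame")
    return R, np.asarray(o_l, dtype=float)


def lidar_to_rail(R, T, pts_lidar):
    """Express (N, 3) LiDAR points in the rail frame: P_R = R^T (P_L - T)."""
    return (pts_lidar - T) @ R
```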

By applying this algorithm together with Equation (1), the extrinsic parameters are kept up to date during the train's operation. The rotation matrix between the camera and rail coordinate systems is then converted to Euler angles in the rotation order given in Equation (10). Note that if the coordinate systems are defined differently, the corresponding Euler angle directions also differ; Equation (10) applies to the coordinate systems defined in this study.
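The rotation order of Equation (10) is not recoverable from this text, so the following sketch assumes one plausible convention for a frame with x lateral, y up, and z forward: $R = R_y(b)\,R_x(a)\,R_z(g)$, i.e. yaw, then pitch about x, then roll. It extracts the three angles and is valid away from $|a| = 90°$. All names are hypothetical:

```python
import numpy as np


def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(b):
    c, s = np.cos(b), np.sin(b)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(g):
    c, s = np.cos(g), np.sin(g)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def euler_yxz(R):
    """Angles (about x, about y, about z) for R = rot_y(b) @ rot_x(a) @ rot_z(g)."""
    a = np.arcsin(np.clip(-R[1, 2], -1.0, 1.0))  # R[1, 2] = -sin(a)
    b = np.arctan2(R[0, 2], R[2, 2])             # sin(b)cos(a), cos(b)cos(a)
    g = np.arctan2(R[1, 0], R[1, 1])             # cos(a)sin(g), cos(a)cos(g)
    return a, b, g
```

Under this convention, the angle about x is the one that tracks the camera tilt relative to the track plane.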

In Equation (5), the angle $\theta$ between the camera's optical axis and the railway track plane corresponds to the negative of the Euler angle about the x-axis, $\varphi_x$; in other words, $\theta = -\varphi_x$. The absolute value of the second component of the translation matrix corresponds to the height $h$ of the camera's optical center above the railway track plane. Using the updated extrinsic parameters, the inverse projection is performed again on the semantic segmentation of the tracks.

In practice, the extrinsic parameters obtained from LiDAR–camera calibration or from extrinsic parameter optimization may still be inaccurate because of factors such as low-quality LiDAR point clouds or image distortion. Therefore, an extrinsic parameter fine-tuning algorithm is designed: a slight rigid rotation is applied to the inverse projection of the railway tracks, chosen to minimize the distance between the LiDAR railway track points and the inverse projection of the railway tracks.

As illustrated in Algorithm 2, curves are fitted to the LiDAR railway track points and to the inverse projection of the railway tracks according to Equation (6), and both curves are then sampled along the z-axis. The algorithm searches for the optimal Euler angles such that, after the sampling points of the inverse-projection curve are rotated, the distance between the two curves is minimized. The Euler angles are searched with a coarse-to-fine strategy. Applying this algorithm, the extrinsic parameters in Equation (5) are updated again, as given in Equation (11).

Using the optimized extrinsic parameters, the inverse projection on the semantic segmentation of the tracks is performed again. The result is then transformed into the LiDAR coordinate system and presented jointly with the segmented LiDAR railway track points as the final output.

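| Algorithm 2 Extrinsic Parameter Fine-Tuning |
| --- |
| Input: Sampling points of the fitted curves of LiDAR railway track points and inverse projection of railway tracks |
| Output: Fine-tuned extrinsic parameters |
| $Q_L$: Sampling points of the fitted curve of LiDAR railway track points |
| $Q_I$: Sampling points of the fitted curve of the inverse projection of railway tracks |
| $R(\cdot)$: Rotation matrix built from Euler angles, used for rotation |
| $N$: Number of sampling points |
| $d_{\min}$: Minimum distance during iteration |
| $e^{*}$: The optimal Euler angles during iteration |
| $e_0$: Initial value during the iterative process from coarse to fine |
| $e_0$ = [0,0,0] |
| for each level, from coarse to fine, do |
| $d_{\min}$ ← ∞ |
| for $i$ = … to 10 do |
| for $j$ = … to 10 do |
| for $k$ = … to 10 do |
| $e$ ← candidate Euler angles around $e_0$ indexed by $(i, j, k)$ |
| $d$ ← mean distance over the $N$ sampling points between $Q_L$ and $R(e)\,Q_I$ |
| if $d < d_{\min}$ then |
| $d_{\min}$ ← $d$; $e^{*}$ ← $e$; |
| end |
| end |
| end |
| end |
| $e_0$ ← $e^{*}$ |
| end |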
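The coarse-to-fine search of Algorithm 2 can be sketched as nested grid scans. Assumptions not stated in the text: the two curves are sampled at the same z-values and matched by index; rotation is about the LiDAR origin; Euler angles follow $R = R_x(a)R_y(b)R_z(g)$; the step sizes are 1°, 0.1°, and 0.01°; and each axis scans ±10 steps around the current estimate (our reading of the "to 10" loop bounds). All names are hypothetical:

```python
import numpy as np


def euler_matrix(e):
    """Rotation for Euler angles e = (a, b, g) in radians, R = Rx(a) @ Ry(b) @ Rz(g)."""
    a, b, g = e
    ca, sa, cb, sb, cg, sg = np.cos(a), np.sin(a), np.cos(b), np.sin(b), np.cos(g), np.sin(g)
    rx = np.array([[1, 0, 0], [0, ca, -sa], [0, sa, ca]])
    ry = np.array([[cb, 0, sb], [0, 1, 0], [-sb, 0, cb]])
    rz = np.array([[cg, -sg, 0], [sg, cg, 0], [0, 0, 1]])
    return rx @ ry @ rz


def fine_tune(q_lidar, q_proj, steps_deg=(1.0, 0.1, 0.01), half_width=10):
    """Coarse-to-fine grid search for Euler angles minimizing the mean distance
    between q_lidar and the rotated q_proj (both (N, 3), matched by index)."""
    e0 = np.zeros(3)                        # initial value [0, 0, 0]
    offsets = np.arange(-half_width, half_width + 1)
    d_min = np.inf
    for step in np.deg2rad(steps_deg):      # one pass per resolution level
        best_e, d_min = e0, np.inf
        for i in offsets:
            for j in offsets:
                for k in offsets:
                    e = e0 + step * np.array([i, j, k])
                    rotated = q_proj @ euler_matrix(e).T
                    d = np.linalg.norm(q_lidar - rotated, axis=1).mean()
                    if d < d_min:
                        d_min, best_e = d, e
        e0 = best_e                         # centre the next, finer grid here
    return e0, d_min
```

Because each finer grid spans only about one step of the previous level, the search assumes the coarse optimum already lies within one coarse step of the true angles; directions that the samples constrain weakly, such as roll when the samples are nearly collinear, may need a wider grid.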