Cosmetic Detection Framework for Face and Iris Biometrics

Abstract

1. Introduction

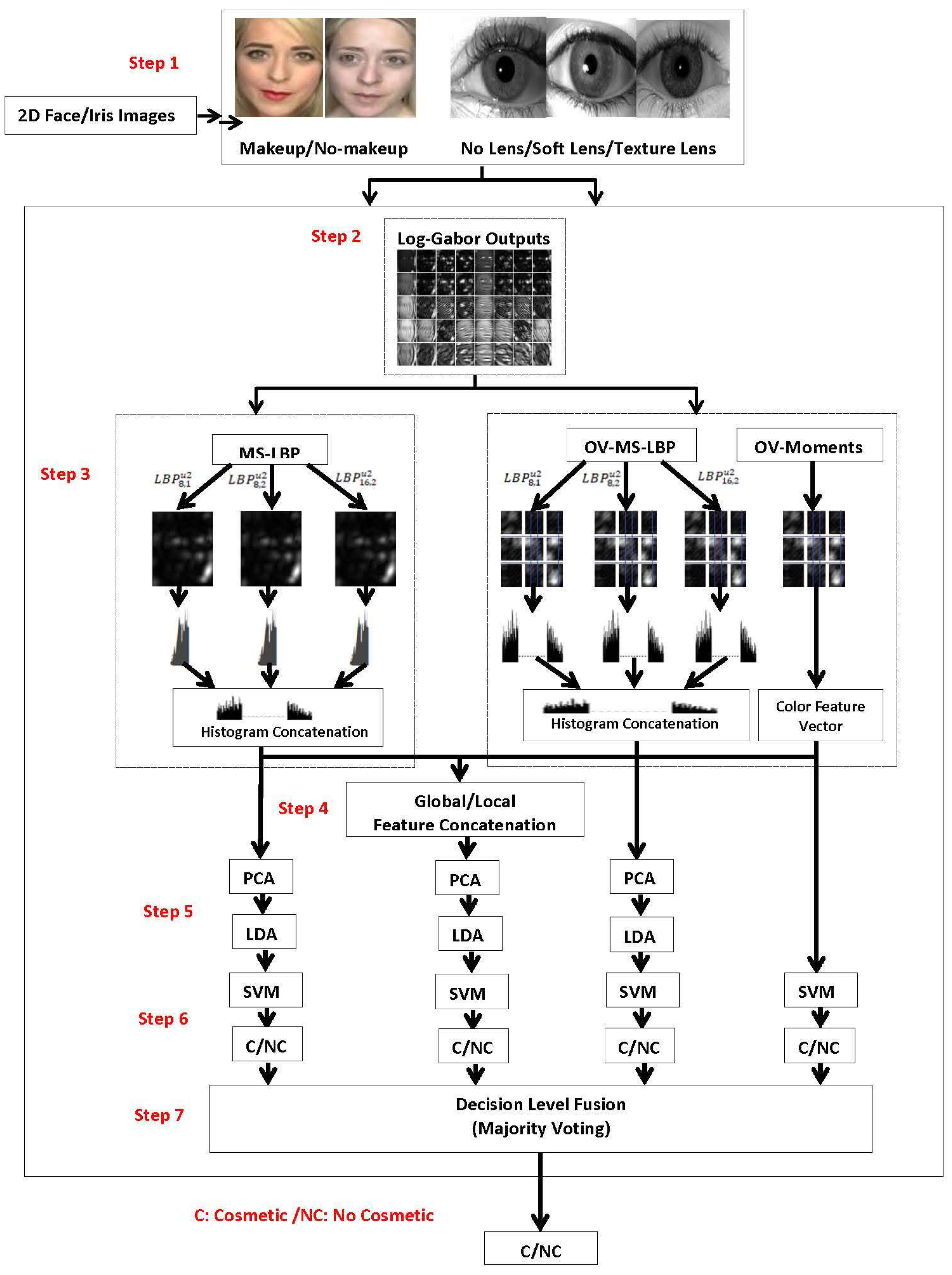

2. Cosmetic Detection for Face–Iris Biometrics

3. Proposed Face–Iris Anti-Cosmetic Scheme

- Step 1

- Image preprocessing is carried out separately on all face and iris images to detect, scale and localize the face and irises. The images are then cropped, aligned and resized to 60 × 60 prior to the cosmetic detection experiments. Finally, they undergo histogram equalization and mean-variance normalization.

- Step 2

- All face and/or iris images are passed to the Log-Gabor (L-Gabor) transform to analyze changes in shape, which produces 40 output images. Each of these 40 output images is considered separately in the local and global feature extraction steps.

- Step 3

- The global feature extraction step extracts the histogram of each whole image for all 40 output images of one individual, using three different operators (, , ) in a multi-scale manner. The concatenation of these histograms is taken as the global micro-texton information of the textures, as given in Equation (1), where GFV denotes the extracted Global Feature Vector, and S and O denote the scales and orientations used to produce the Log-Gabor transform (five scales and eight orientations).

- Step 4

- The scheme combines the advantages of the features obtained in the local and global feature extraction steps by concatenating them into a high-dimensional feature set according to Equation (3), where CFVF denotes the Concatenated Feature Vector Fusion.

- Step 5

- To reduce the dimensionality of the features from the global step, the local step and the concatenated feature set of Step 4, the proposed scheme employs PCA and LDA, which yield appropriate feature subsets of the face and/or iris by eliminating irrelevant and redundant information.

- Step 6

- Classification is performed with the SVM classifier to detect cosmetics in four different ways for all 40 output images of an individual: on the global features, on the two local feature sets (histogram concatenation and color feature vector), and on the global + local concatenation.

- Step 7

- The last step fuses all decisions produced by the SVM classifier through the majority voting [29] decision-level fusion technique. For one individual, 160 different decisions (40 × 4) are fused into the final decision on whether makeup is present in the face/iris images. In majority voting, all classifiers are usually given identical confidence: the prediction of each classifier counts as one vote for the predicted class, and the class that receives the highest number of votes is output as the final label [29].
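The normalization in Step 1 can be illustrated with a minimal NumPy sketch. It assumes an 8-bit grayscale image that has already been detected, cropped, aligned and resized to 60 × 60; the function names and the random test image are illustrative only and are not taken from the paper.

```python
import numpy as np

def equalize_histogram(img: np.ndarray) -> np.ndarray:
    """Histogram-equalize an 8-bit grayscale image using its cumulative histogram."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]            # CDF value of the darkest gray level present
    denom = cdf[-1] - cdf_min
    if denom == 0:                       # constant image: nothing to equalize
        return img.copy()
    lut = np.round((cdf - cdf_min) / denom * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]                      # map every pixel through the lookup table

def mean_variance_normalize(img: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Shift to zero mean and scale to (approximately) unit variance."""
    x = img.astype(np.float64)
    return (x - x.mean()) / (x.std() + eps)

# Synthetic stand-in for a cropped 60 x 60 face or iris image (assumption).
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(60, 60), dtype=np.uint8)
normalized = mean_variance_normalize(equalize_histogram(image))
print(normalized.shape, normalized.dtype)
```

Equalization is applied first so that the subsequent mean-variance step operates on a contrast-stretched image, matching the order in which Step 1 lists the two operations.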
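To make the count of 40 output images in Step 2 concrete, the following sketch builds a frequency-domain Log-Gabor filter bank with five scales and eight orientations. Only those two counts come from the text; the filter parameters (`min_wavelength`, `mult`, `sigma_on_f`, and the angular spread) are assumed values chosen for illustration, and taking the response magnitude as the output image is also an assumption.

```python
import numpy as np

def log_gabor_bank(shape, n_scales=5, n_orient=8,
                   min_wavelength=3.0, mult=2.0, sigma_on_f=0.55):
    """Return a stack of n_scales * n_orient frequency-domain Log-Gabor filters."""
    rows, cols = shape
    fy = np.fft.fftfreq(rows)[:, None]       # vertical frequencies
    fx = np.fft.fftfreq(cols)[None, :]       # horizontal frequencies
    radius = np.sqrt(fx ** 2 + fy ** 2)
    radius[0, 0] = 1.0                        # avoid log(0) at the DC term
    theta = np.arctan2(-fy, fx)               # orientation of each frequency sample
    sigma_theta = np.pi / n_orient            # assumed angular spread

    filters = []
    for s in range(n_scales):
        f0 = 1.0 / (min_wavelength * mult ** s)          # centre frequency of scale s
        radial = np.exp(-(np.log(radius / f0)) ** 2
                        / (2.0 * np.log(sigma_on_f) ** 2))
        radial[0, 0] = 0.0                                # Log-Gabor has no DC response
        for o in range(n_orient):
            angle = o * np.pi / n_orient
            # Wrapped angular distance to the filter orientation.
            d_theta = np.arctan2(np.sin(theta - angle), np.cos(theta - angle))
            angular = np.exp(-d_theta ** 2 / (2.0 * sigma_theta ** 2))
            filters.append(radial * angular)
    return np.stack(filters)

def apply_bank(img, bank):
    """Filter one image with every filter; return the response magnitudes."""
    spectrum = np.fft.fft2(img.astype(np.float64))
    return np.abs(np.fft.ifft2(spectrum[None, :, :] * bank))

bank = log_gabor_bank((60, 60))
responses = apply_bank(np.random.default_rng(1).random((60, 60)), bank)
print(responses.shape)   # one output image per (scale, orientation) pair
```

Each of the resulting output images would then be fed separately into the feature extraction steps.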
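The multi-scale histogram concatenation of Step 3 can be sketched as follows. The three operators are not recoverable from the text, so this example assumes a basic 8-neighbor LBP evaluated at three radii (the results tables do name a "multiS-LBP (Global)" method); it also samples neighbors on a square ring without interpolation, which is a simplification. All function names are hypothetical.

```python
import numpy as np

def lbp_histogram(img: np.ndarray, radius: int = 1) -> np.ndarray:
    """Normalized 256-bin histogram of 8-neighbor LBP codes at the given radius."""
    x = img.astype(np.float64)
    h, w = x.shape
    r = radius
    center = x[r:h - r, r:w - r]
    # Eight neighbors on a square ring, visited clockwise from the top-left.
    offsets = [(-r, -r), (-r, 0), (-r, r), (0, r),
               (r, r), (r, 0), (r, -r), (0, -r)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbor = x[r + dy:h - r + dy, r + dx:w - r + dx]
        codes |= (neighbor >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()

def multiscale_lbp(img: np.ndarray, radii=(1, 2, 3)) -> np.ndarray:
    """Concatenate the whole-image LBP histograms computed at several radii."""
    return np.concatenate([lbp_histogram(img, r) for r in radii])

def global_feature_vectors(output_images: np.ndarray) -> np.ndarray:
    """One multi-scale histogram per Log-Gabor output image (40 in the paper)."""
    return np.stack([multiscale_lbp(im) for im in output_images])

# Synthetic stand-in for the 40 Log-Gabor outputs of one individual (assumption).
outputs = np.random.default_rng(2).random((40, 60, 60))
print(global_feature_vectors(outputs).shape)
```

Keeping one row per output image mirrors the text, where each of the 40 outputs is treated separately through to classification.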
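Steps 5 and 6 can be illustrated with a short scikit-learn sketch that chains PCA, LDA and an SVM. The text states only that PCA and LDA are employed before SVM classification; applying them sequentially in one pipeline, the number of PCA components, the linear kernel, and the synthetic two-class data below are all assumptions made for this example.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

# Synthetic high-dimensional features: class 0 = no cosmetic, class 1 = cosmetic.
rng = np.random.default_rng(0)
n_per_class, n_features = 100, 200
X = np.vstack([
    rng.normal(0.0, 1.0, size=(n_per_class, n_features)),
    rng.normal(0.6, 1.0, size=(n_per_class, n_features)),
])
y = np.array([0] * n_per_class + [1] * n_per_class)

# Simple shuffled train/test split.
idx = rng.permutation(len(y))
train, test = idx[:150], idx[150:]

# PCA removes redundant dimensions, LDA projects onto the most
# class-discriminative axis, and the SVM makes the final decision.
model = make_pipeline(
    PCA(n_components=20),
    LinearDiscriminantAnalysis(),
    SVC(kernel="linear"),
)
model.fit(X[train], y[train])
accuracy = model.score(X[test], y[test])
print(f"held-out accuracy on synthetic data: {accuracy:.2f}")
```

In the scheme described above, one such classifier would be trained for each of the four feature types, and its per-image decisions passed on to the fusion step.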
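The decision-level fusion of Step 7 amounts to counting votes. The sketch below fuses 160 binary decisions (40 output images × 4 classifier variants) for one individual; the label convention (1 = cosmetic, 0 = no cosmetic), the example decisions, and the tie-breaking rule are assumptions, since the text does not say how ties are resolved.

```python
from collections import Counter

def majority_vote(decisions):
    """Return the label with the most votes; each decision counts as one vote.

    Ties are broken in favor of the smallest label (an assumption).
    """
    counts = Counter(decisions)
    best = max(counts.values())
    return min(label for label, count in counts.items() if count == best)

# Hypothetical decisions for one individual: 40 output images, 4 classifiers each.
per_image = [[1, 1, 0, 1] for _ in range(25)] + [[0, 0, 1, 0] for _ in range(15)]
votes = [d for row in per_image for d in row]   # flatten to 160 votes

final = majority_vote(votes)
print(len(votes), final)
```

Here 90 of the 160 votes are for label 1, so the fused decision is "cosmetic" even though 15 of the output images mostly voted the other way.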

4. Experimental Results and Databases

5. Conclusions

Author Contributions

Conflicts of Interest

References

- [1] Jain, A.K.; Li, S.Z. Handbook of Face Recognition; Springer: New York, NY, USA, 2011.

- [2] Bhatt, H.S. Recognizing surgically altered face images using multiobjective evolutionary algorithm. IEEE Trans. Inf. Forensics Secur. 2013, 8, 89–100.

- [3] Eskandari, M.; Omid, S. Optimum scheme selection for face–iris biometric. IET Biom. 2016, 6, 334–341.

- [4] Sharifi, O.; Eskandari, M.; Yildiz, M.Ç. Scheming an efficient facial recognition system using global and random local feature extraction methods. In Proceedings of the 2017 International Conference on Computer Science and Engineering (UBMK), Antalya, Turkey, 5–8 October 2017.

- [5] Yildiz, M.C.; Sharifi, O.; Eskandari, M. Log-Gabor transforms and score fusion to overcome variations in appearance for face recognition. In Proceedings of the International Conference on Computer Vision and Graphics, Warsaw, Poland, 19–20 September 2016; Springer: Cham, Switzerland, 2016.

- [6] Määttä, J.; Hadid, A.; Pietikäinen, M. Face spoofing detection from single images using texture and local shape analysis. IET Biom. 2012, 1, 3–10.

- [7] Sharifi, O.; Maryam, E. Optimal face-iris multimodal fusion scheme. Symmetry 2016, 8, 48.

- [8] Daugman, J. How iris recognition works. In The Essential Guide to Image Processing; Academic Press: Cambridge, MA, USA, 2009; pp. 715–739.

- [9] Daugman, J. Demodulation by complex-valued wavelets for stochastic pattern recognition. Int. J. Wavelets Multiresolut. Inf. Process. 2003, 1, 1–17.

- [10] Hollingsworth, K.; Bowyer, K.W.; Flynn, P.J. Pupil dilation degrades iris biometric performance. Comput. Vis. Image Underst. 2009, 113, 150–157.

- [11] Lee, E.C.; Park, K.R.; Kim, J. Fake iris detection by using purkinje image. In Proceedings of the International Conference on Biometrics, Hong Kong, China, 5–7 January 2006; Springer: Berlin, Germany, 2006.

- [12] Dantcheva, A.; Cunjian, C.; Arun, R. Can facial cosmetics affect the matching accuracy of face recognition systems? In Biometrics: Theory, Applications and Systems (BTAS), Proceedings of the 2012 IEEE Fifth International Conference on Cloud Computing CLOUD 2012, Honolulu, HI, USA, 24–29 June 2012; IEEE: New York, NY, USA, 2012.

- [13] Kohli, N. Revisiting iris recognition with color cosmetic contact lenses. In Proceedings of the 2013 International Conference on Biometrics (ICB), Madrid, Spain, 4–7 June 2013.

- [14] Derman, E.; Galdi, C.; Dugelay, J.L. Integrating facial makeup detection into multimodal biometric user verification system. In Proceedings of the 2017 5th International Workshop on Biometrics and Forensics (IWBF), Coventry, UK, 4–5 April 2017.

- [15] Yadav, D. Unraveling the effect of textured contact lenses on iris recognition. IEEE Trans. Inf. Forensics Secur. 2014, 9, 851–862.

- [16] Chen, C.; Antitza, D.; Arun, R. Automatic facial makeup detection with application in face recognition. In Proceedings of the 2013 International Conference on Biometrics (ICB), Madrid, Spain, 4–7 June 2013.

- [17] Hu, J. Makeup-robust face verification. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 October 2013.

- [18] Guo, G.; Wen, L.; Yan, S. Face authentication with makeup changes. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 814–825.

- [19] He, X.; An, S.; Shi, P. Statistical texture analysis-based approach for fake iris detection using support vector machines. In Proceedings of the International Conference on Biometrics, Seoul, Korea, 27–29 August 2007; Springer: Berlin, Germany, 2007.

- [20] Eskandari, M.; Önsen, T. Selection of optimized features and weights on face-iris fusion using distance images. Comput. Vis. Image Underst. 2015, 137, 63–75.

- [21] Wei, Z. Counterfeit iris detection based on texture analysis. In Proceedings of the 19th International Conference on Pattern Recognition 2008 ICPR, Tampa, FL, USA, 8–11 December 2008.

- [22] Zhang, H.; Zhenan, S.; Tieniu, T. Contact lens detection based on weighted LBP. In Proceedings of the 2010 20th International Conference on Pattern Recognition (ICPR), Istanbul, Turkey, 23–26 August 2010.

- [23] Chang, C.-C.; Chih-Jen, L. LIBSVM: A library for support vector machines. ACM Trans. Int. Syst. Technol. 2011, 2, 27.

- [24] Chen, C.; Antitza, D.; Arun, R. An ensemble of patch-based subspaces for makeup-robust face recognition. Inf. Fusion 2016, 32, 80–92.

- [25] Ahonen, T.; Abdenour, H.; Matti, P. Face description with local binary patterns: Application to face recognition. IEEE Trans. Pattern Anal. Mach. Int. 2006, 28, 2037–2041.

- [26] Tapia, J.E.; Claudio, A.P.; Kevin, W.B. Gender classification from iris images using fusion of uniform local binary patterns. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–9 September 2014; Springer: Cham, Switzerland, 2014.

- [27] Määttä, J.; Abdenour, H.; Matti, P. Face spoofing detection from single images using micro-texture analysis. In Proceedings of the 2011 International Joint Conference on Biometrics (IJCB), Washington, DC, USA, 11–13 October 2011.

- [28] Zhitao, X. Research on log Gabor wavelet and its application in image edge detection. In Proceedings of the 2002 6th International Conference on Signal Processing, Beijing, China, 26–30 August 2002; Volume 1.

- [29] Kittler, J. On combining classifiers. IEEE Trans. Pattern Anal. Mach. Int. 1998, 20, 226–239.

- [30] Toh, K.-A.; Jaihie, K.; Sangyoun, L. Biometric scores fusion based on total error rate minimization. Pattern Recognit. 2008, 41, 1066–1082.

- [31] Liau, H.F.; Dino, I. Feature selection for support vector machine-based face-iris multimodal biometric system. Expert Syst. Appl. 2011, 38, 11105–11111.

- [32] Wang, X.; Chandra, K. A new approach for face recognition under makeup changes. In Proceedings of the 2015 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Orlando, FL, USA, 14–26 December 2015.

- [33] Kose, N.; Ludovic, A.; Jean-Luc, D. Facial makeup detection technique based on texture and shape analysis. In Proceedings of the 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015; Volume 1.

| Dataset | Train Individuals (No-Cosmetic/Cosmetic) | Test Individuals (No-Cosmetic/Cosmetic) |
|---|---|---|
| Face Dataset | 75 (150/150) | 75 (150/150) |
| Iris Dataset | 50 (100/200) | 50 (100/200) |

| Methods | CR (%) | TER (%) |
|---|---|---|
| LBP | 60.00 | 11.02 |
| L-Gabor | 64.00 | 10.90 |
| Color-Moments | 69.67 | 9.52 |
| Ov-Color-Moments | 73.67 | 7.38 |
| Ov-LBP | 74.00 | 7.30 |
| multiS-LBP (Global) | 79.67 | 6.83 |
| multiS-Ov-LBP (Local) | 82.67 | 6.01 |
| multiS-Ov-LBP + Ov-Color-Moments | 86.00 | 5.16 |
| Proposed Scheme | 91.67 | 3.18 |

| Methods | Transparent Contact Lens (Soft): CR (%) | Transparent Contact Lens (Soft): TER (%) | Color Cosmetic Contact Lens (Texture): CR (%) | Color Cosmetic Contact Lens (Texture): TER (%) |
|---|---|---|---|---|
| LBP | 55.00 | 14.00 | 56.50 | 13.33 |
| L-Gabor | 58.50 | 13.56 | 58.50 | 13.04 |
| Color-Moments | 49.00 | 17.81 | 62.50 | 10.96 |
| Ov-Color-Moments | 50.05 | 17.01 | 64.50 | 9.62 |
| Ov-LBP | 62.50 | 11.39 | 66.00 | 9.02 |
| multiS-LBP (Global) | 62.50 | 11.00 | 67.50 | 8.93 |
| multiS-Ov-LBP (Local) | 66.50 | 9.98 | 71.00 | 8.57 |
| multiS-Ov-LBP + Ov-Color-Moments | 68.00 | 9.10 | 74.00 | 7.74 |
| Proposed Scheme | 71.50 | 8.83 | 78.50 | 6.80 |

| Methods | Face Makeup CR (%) | Transparent Contact Lens (Soft) CR (%) | Color Cosmetic Contact Lens (Texture) CR (%) |
|---|---|---|---|
| HOG + SVM [17] | 52.00 | 49.00 | 51.50 |
| SIFT + SVM [17] | 54.34 | 50.50 | 51.50 |
| CCA + SVM [17] | 63.00 | 60.00 | 57.00 |
| CCA + PLS + SVM [32] | 66.67 | 60.00 | 61.50 |
| LBP + Gabor + GIST + EOH + Color-Moments + SVM [16] | 87.00 | 58.50 | 63.00 |
| LGBP + HOG + SVM [33] | 91.34 | 70.67 | 76.00 |
| Proposed Scheme | 91.67 | 71.50 | 78.50 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sharifi, O.; Eskandari, M. Cosmetic Detection Framework for Face and Iris Biometrics. Symmetry 2018, 10, 122. https://doi.org/10.3390/sym10040122
