Section 2.1 describes the RNN-T [12] model that enables streaming ASR, Section 2.2 describes the pre-training process, and Section 2.3 describes three finetuning methods for the speech of CI patients: Basic Finetuning (method 1), Fixed Decoder (method 2), and Data Augmentation (method 3).

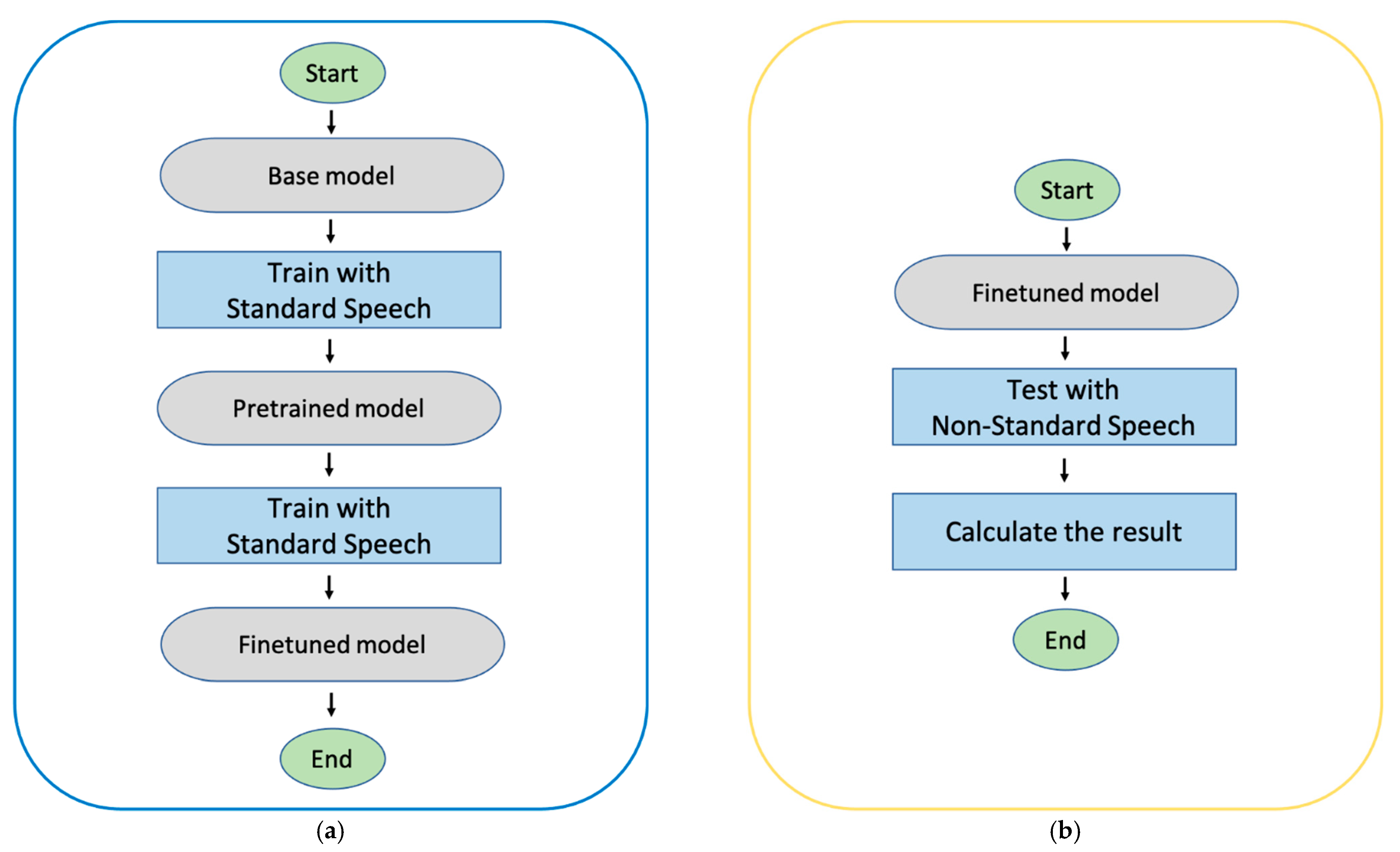

Figure 1 illustrates the sequence of the experiments: Figure 1a shows the training procedure and Figure 1b shows the test procedure. In Figure 1a, the base model is first pre-trained on standard speech data. The pre-trained model is then finetuned on non-standard training speech, which yields three finetuned models, one for each method (methods 1, 2, and 3). In the test procedure, we evaluate the finetuned models on non-standard test speech.

2.1. Base Model

This study uses a unidirectional RNN transducer as the base model (Figure 2); we used the version presented in [13]. The RNN-T model consists of an encoder network, a decoder network, and a joint network. Intuitively, the encoder network can be regarded as an acoustic model, and the decoder network plays a role similar to a language model.

All the experiments used 80-dimensional log-Mel features computed with a 20 ms Hamming window shifted every 10 ms.
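The feature extraction described above can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' front end: the 16 kHz sampling rate, the 512-point FFT, the HTK-style mel formula, and the `1e-10` log floor are all assumptions; only the 80 mel bands, the 20 ms Hamming window, and the 10 ms shift come from the text.

```python
import numpy as np

def hz_to_mel(f):
    # HTK-style mel scale (assumed; the text does not specify the variant)
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_mels, n_fft, sr):
    """Triangular mel filters with shape (n_mels, n_fft // 2 + 1)."""
    n_bins = n_fft // 2 + 1
    bin_freqs = np.linspace(0.0, sr / 2.0, n_bins)  # frequency of each FFT bin
    edges = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fb = np.zeros((n_mels, n_bins))
    for i in range(n_mels):
        left, centre, right = edges[i], edges[i + 1], edges[i + 2]
        rising = (bin_freqs - left) / (centre - left)
        falling = (right - bin_freqs) / (right - centre)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def log_mel(signal, sr=16000, win_ms=20, hop_ms=10, n_mels=80, n_fft=512):
    """Return log-Mel features with shape (num_frames, n_mels)."""
    win = int(sr * win_ms / 1000)   # 20 ms -> 320 samples at 16 kHz
    hop = int(sr * hop_ms / 1000)   # 10 ms -> 160 samples at 16 kHz
    if len(signal) < win:           # pad very short inputs to one full window
        signal = np.pad(signal, (0, win - len(signal)))
    n_frames = 1 + (len(signal) - win) // hop
    window = np.hamming(win)
    frames = np.stack([signal[k * hop:k * hop + win] * window for k in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2         # (n_frames, n_fft // 2 + 1)
    mel_energy = power @ mel_filterbank(n_mels, n_fft, sr).T  # (n_frames, n_mels)
    return np.log(mel_energy + 1e-10)

# One second of random audio yields 99 frames of 80-dimensional features.
feats = log_mel(np.random.default_rng(0).standard_normal(16000))
print(feats.shape)  # (99, 80)
```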

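$\mathbf{x} = (x_1, \ldots, x_T)$ is the input sequence of length $T$, and $\mathbf{y} = (y_1, \ldots, y_U)$ is the output sequence of length $U$, where each $y_u \in \mathcal{Y}$. The set $\mathcal{Y}$ consists of a total of 53 Korean onsets, nuclei, and codas, and also includes a “space” label. The decoder additionally uses $\langle \mathrm{sos} \rangle$, a special label indicating the beginning of a sentence. Both $x_t$ and $y_u$ are represented as fixed-length real-valued vectors. In the encoder network, the input sequence $\mathbf{x}$ is passed through the unidirectional RNN and projection layer to compute the output vector $h_t^{\mathrm{enc}}$. In the decoder network, the previous label prediction $y_{u-1}$ is passed through the unidirectional RNN and projection layer to compute the output vector $h_u^{\mathrm{dec}}$, where $y_0$ is $\langle \mathrm{sos} \rangle$.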
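To illustrate how Korean text can be turned into position-dependent onset, nucleus, and coda labels, the sketch below uses Unicode's arithmetic layout of precomposed Hangul syllables (U+AC00 to U+D7A3). It is an illustration under stated assumptions: Unicode's positional jamo give 19 onsets, 21 nuclei, and 27 codas, so this inventory is not the 53-label set used in this study, and the label names `<space>` and `<sos>` are hypothetical. Note that the onset ㄱ (U+1100) and the coda ㄱ (U+11A8) become different labels, reflecting that one phoneme is written differently depending on its position.

```python
# Precomposed Hangul syllables are laid out as
#   code = 0xAC00 + (onset * 21 + nucleus) * 28 + coda,
# with 19 onsets, 21 nuclei, and coda index 0 meaning "no coda".
HANGUL_BASE, HANGUL_COUNT = 0xAC00, 19 * 21 * 28
N_NUCLEI, N_CODAS = 21, 28
ONSET_BASE, NUCLEUS_BASE, CODA_BASE = 0x1100, 0x1161, 0x11A7

SPACE = "<space>"  # hypothetical name for the "space" label
SOS = "<sos>"      # hypothetical name for the beginning-of-sentence label

def to_labels(text):
    """Split Hangul text into positional jamo labels (other characters are skipped)."""
    labels = []
    for ch in text:
        index = ord(ch) - HANGUL_BASE
        if 0 <= index < HANGUL_COUNT:
            onset, rest = divmod(index, N_NUCLEI * N_CODAS)
            nucleus, coda = divmod(rest, N_CODAS)
            labels.append(chr(ONSET_BASE + onset))
            labels.append(chr(NUCLEUS_BASE + nucleus))
            if coda:  # coda 0 means the syllable has no final consonant
                labels.append(chr(CODA_BASE + coda))
        elif ch == " ":
            labels.append(SPACE)
    return labels

def decoder_inputs(labels):
    """Prepend <sos> so that y_0 = <sos>; the result holds y_0 .. y_U (U + 1 entries)."""
    return [SOS] + labels

y = to_labels("한국 사람")
print(len(y), y[0] == "\u1112", y[2] == "\u11ab")  # 12 True True
```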

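A joint network calculates the output vector $z_{t,u}$ by concatenating $h_t^{\mathrm{enc}}$ and $h_u^{\mathrm{dec}}$:

$$z_{t,u} = f^{\mathrm{joint}}\left(\left[h_t^{\mathrm{enc}};\, h_u^{\mathrm{dec}}\right]\right),$$

where $[\cdot\,;\cdot]$ denotes concatenation and $f^{\mathrm{joint}}$ is the feed-forward joint network. The vector $z_{t,u}$ obtained through the joint network defines the probability distribution over the output labels through the softmax layer:

$$P(k \mid t, u) = \mathrm{softmax}(z_{t,u})_k \quad \text{for each output label } k.$$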
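The joint computation can be sketched in NumPy as below. The form of $f^{\mathrm{joint}}$ is an assumption here (one tanh feed-forward layer followed by a linear output layer, a common RNN-T choice); the 320-dimensional sizes follow the model description in this section, the 54-way output (53 labels plus one blank) is assumed, and the weights are random placeholders.

```python
import numpy as np

ENC_DIM = DEC_DIM = JOINT_DIM = 320
N_OUT = 53 + 1  # assumption: 53 labels plus one RNN-T blank

rng = np.random.default_rng(0)
W_joint = rng.normal(0.0, 0.05, (JOINT_DIM, ENC_DIM + DEC_DIM))  # placeholder weights
b_joint = np.zeros(JOINT_DIM)
W_out = rng.normal(0.0, 0.05, (N_OUT, JOINT_DIM))
b_out = np.zeros(N_OUT)

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def joint(h_enc, h_dec):
    """h_enc: (T, ENC_DIM), h_dec: (U + 1, DEC_DIM) -> P(k | t, u) of shape (T, U + 1, N_OUT)."""
    T, U1 = h_enc.shape[0], h_dec.shape[0]
    concat = np.concatenate(
        [np.broadcast_to(h_enc[:, None, :], (T, U1, ENC_DIM)),
         np.broadcast_to(h_dec[None, :, :], (T, U1, DEC_DIM))],
        axis=-1,
    )  # [h_t^enc; h_u^dec] for every (t, u) pair
    z = np.tanh(concat @ W_joint.T + b_joint) @ W_out.T + b_out  # logits z_{t,u}
    return softmax(z)

probs = joint(rng.standard_normal((7, ENC_DIM)), rng.standard_normal((4, DEC_DIM)))
print(probs.shape)  # (7, 4, 54)
```

Computing all $(t, u)$ pairs at once, as here, matches how RNN-T training evaluates the full output lattice; during streaming decoding only the current pair is needed.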

Our model’s encoder network consists of five unidirectional LSTM layers of 512 cells, each followed by a 320-dimensional projection layer. The decoder network consists of two LSTM layers of 512 cells, each followed by a 320-dimensional projection layer. The joint network is a 320-unit feed-forward layer, and the whole model has 13.5 M parameters in total.
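Because every encoder layer is unidirectional, the output at frame $t$ depends only on frames $1, \ldots, t$, which is what allows streaming recognition. The NumPy sketch below runs an encoder with the sizes given above (80-dimensional input, five LSTM layers of 512 cells, 320-dimensional projections) one frame at a time. It is illustrative only: the weights are random, and the gate ordering, initialisation, and absence of peephole connections are assumptions rather than details of the implementation in [13].

```python
import numpy as np

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

class LSTMPLayer:
    """Unidirectional LSTM layer whose output is linearly projected (LSTMP)."""

    def __init__(self, in_dim, cells=512, proj=320, seed=0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(cells)
        # Gate weights act on [x_t; r_{t-1}]; rows are (input, forget, cell, output) gates.
        self.W = rng.uniform(-scale, scale, (4 * cells, in_dim + proj))
        self.b = np.zeros(4 * cells)
        self.P = rng.uniform(-scale, scale, (proj, cells))  # projection 512 -> 320
        self.cells, self.proj = cells, proj

    def initial_state(self):
        return np.zeros(self.proj), np.zeros(self.cells)

    def step(self, x, state):
        r_prev, c_prev = state
        i, f, g, o = np.split(self.W @ np.concatenate([x, r_prev]) + self.b, 4)
        c = sigmoid(f) * c_prev + sigmoid(i) * np.tanh(g)
        r = self.P @ (sigmoid(o) * np.tanh(c))
        return r, (r, c)

def build_encoder(in_dim=80, layers=5, cells=512, proj=320):
    dims = [in_dim] + [proj] * (layers - 1)  # first layer sees features, later ones projections
    return [LSTMPLayer(d, cells, proj, seed=k) for k, d in enumerate(dims)]

def encode_streaming(encoder, frames):
    """Consume frames one at a time; returns h^enc with shape (T, proj)."""
    states = [layer.initial_state() for layer in encoder]
    outputs = []
    for x in frames:  # frames arrive in time order
        for k, layer in enumerate(encoder):
            x, states[k] = layer.step(x, states[k])
        outputs.append(x)
    return np.stack(outputs)

encoder = build_encoder()
rng = np.random.default_rng(1)
a = rng.standard_normal((6, 80))
b = a.copy()
b[4:] = rng.standard_normal((2, 80))  # change only the last two frames
out_a, out_b = encode_streaming(encoder, a), encode_streaming(encoder, b)
print(np.array_equal(out_a[:4], out_b[:4]))  # True: earlier outputs ignore future frames
```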

2.4. Dataset & Metric

In 2018, AI Hub released KsponSpeech [14], a 1000 h Korean dialog dataset. We pre-trained our model on approximately 600 h of speech from KsponSpeech (a total of 540,000 utterances, each at most 10 s long). Phonologically, a Korean syllable is in many cases composed of an onset, a nucleus, and a coda, so the same phoneme is expressed differently depending on its position. In general sentences, the frequencies of consonants and vowels in Korean speech differ considerably from those in English. In addition, whereas English is a right-branching language with subject-verb-object order, Korean is a left-branching language with subject-object-verb order. Korean speech therefore differs from English in many characteristics of sentence structure and vocalization [18]. About 3 h of CI speech data were collected from 15 CI patients on YouTube; the speech was recorded on the subjects’ cell phones and then uploaded to YouTube. The data were divided into 2 h for training and 30 min for evaluation.

We use the character error rate (CER) as a metric:

$$\mathrm{CER} = \frac{D(X, Y)}{L},$$

where $X$ and $Y$ are the predicted and ground-truth scripts, respectively, $D(X, Y)$ is the Levenshtein distance between $X$ and $Y$, and $L$ is the length of the ground-truth script $Y$.
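The metric can be computed with the standard dynamic-programming Levenshtein distance, as in the minimal sketch below, which counts substitutions, insertions, and deletions with unit cost. Whether scripts are compared as syllables or jamo, and whether spaces are counted, is not specified here, so the functions accept any sequence; the function names are illustrative.

```python
def levenshtein(ref, hyp):
    """Minimum number of unit-cost substitutions, insertions and deletions turning hyp into ref."""
    prev = list(range(len(hyp) + 1))  # distances from the empty prefix of ref
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,              # ref symbol missing from hyp (deletion)
                           cur[j - 1] + 1,           # extra hyp symbol (insertion)
                           prev[j - 1] + (r != h)))  # substitution, or free match
        prev = cur
    return prev[-1]

def cer(predicted, ground_truth):
    """CER = D(X, Y) / L with X = predicted, Y = ground truth, L = len(Y)."""
    if len(ground_truth) == 0:
        raise ValueError("ground-truth script must be non-empty")
    return levenshtein(ground_truth, predicted) / len(ground_truth)

print(levenshtein("kitten", "sitting"))  # 3
print(cer("안넝하세요", "안녕하세요"))     # 0.2
```

Only two rows of the distance table are kept, so memory grows with the hypothesis length rather than with the product of both lengths.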