Machine Learning and Cognitive Ergonomics in Air Traffic Management: Recent Developments and Considerations for Certification

Abstract

1. Introduction

- What VQ&C techniques are likely to be adopted for increasingly autonomous systems that incorporate AI techniques?

- To what extent do these VQ&C techniques and ongoing end-user acceptance (i.e., “trust”) of increasingly autonomous systems require that the AI techniques used be explainable?

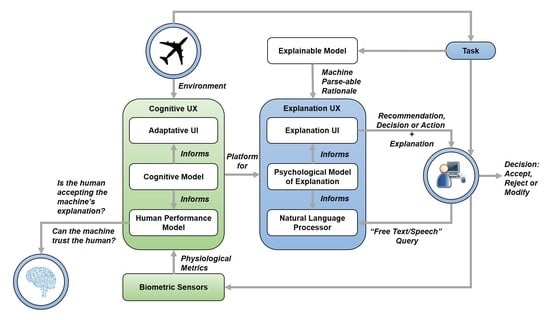

- How can an explanation human–machine interface (HMI) component for explainable AI systems be developed on top of our existing work on cognitive HMIs?

2. Fundamental Concepts

3. Autonomy

3.1. From Automation to Autonomy

3.2. Measuring the Level of Automation/Autonomy

3.3. Trust in Autonomy

4. Application in the Aviation/Air Traffic Management Domain

4.1. Autonomy in Air Traffic Management

- Determining concepts of operation for interoperability between ground systems and aircraft with various autonomous capabilities.

- Predicting the system-level effects of incorporating IA systems and aircraft in controlled airspace.

- Observe: Scan the environment by monitoring many more data sources than a human could.

- Orient: Synthesise this data into information, e.g., as follows:

  - Monitor voice and data communications for inconsistencies and mistakes.

  - Monitor aircraft tracks for deviations from clearances.

  - Identify flight path conflicts.

  - Monitor weather for potential hazards, as well as potential degradations in capacity.

  - Detect imbalances between airspace demand and capacity.

- Decide: Identify and evaluate traffic management options and recommend a course of action.

- Act: Disseminate controller decisions via voice and/or datalink communications, where the effectiveness of IA in ATM systems would be enhanced by the presence of compatible airborne IA systems.
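The Observe, Orient, Decide, and Act steps listed above can be sketched as a small pipeline. This is only an illustrative sketch: the `Track` record, the 200 ft tolerance, and the message wording are assumptions made for the example and are not taken from any operational system. It covers a single Orient task, monitoring tracks for deviations from cleared levels.

```python
from dataclasses import dataclass


@dataclass
class Track:
    callsign: str
    cleared_level_ft: int   # level assigned in the clearance
    observed_level_ft: int  # level reported by surveillance


def observe(feed):
    """Observe: collect the latest track reports from a surveillance feed."""
    return list(feed)


def orient(tracks, tolerance_ft=200):
    """Orient: flag tracks that deviate from their cleared level.

    The tolerance is an assumed, illustrative value.
    """
    return [t for t in tracks
            if abs(t.observed_level_ft - t.cleared_level_ft) > tolerance_ft]


def decide(deviations):
    """Decide: recommend a course of action for each deviation."""
    return [(t.callsign, f"confirm level {t.cleared_level_ft} ft")
            for t in deviations]


def act(recommendations, send):
    """Act: pass each recommendation to a voice/datalink sender callback."""
    for callsign, message in recommendations:
        send(callsign, message)


sent = []
feed = [Track("ABC123", 35000, 35050), Track("XYZ789", 28000, 28600)]
act(decide(orient(observe(feed))), lambda c, m: sent.append((c, m)))
print(sent)  # [('XYZ789', 'confirm level 28000 ft')]
```

In a real system the Decide step would only recommend, and the controller would confirm before anything is disseminated; the `send` callback stands in for that hand-off.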

- Decision-making by adaptive or non-deterministic systems (such as neural networks).

- Trust in adaptive or non-deterministic IA systems.

- Verification, qualification/validation, and certification (VQ&C).

- Determine how the roles of key personnel and systems should evolve, as follows:

  - The impact on the human–machine interfaces (HMIs) of associated IA systems during both normal and atypical operations.

  - Assessing the ability of human operators to perform their new roles under realistic operating conditions, coupled with the dynamic reallocation of functions between humans and machines based on factors such as fatigue, risk, and surprise [11] (p. 56), which can be determined from biometric sensors and a cognitive model of human performance.

  - Developing intuitive HMI techniques with new modalities (such as touch and gesture) [11] (p. 58) to achieve the following:

    - Support real-time decision-making in high-stress dynamic conditions.

    - Support the enhanced situational awareness required to integrate IA systems.

  - Effective communication, including at the HMI level, amongst different IA systems and amongst IA and non-IA systems and their operators.

- Develop processes to engender broad stakeholder trust in IA systems as follows:

  - Identifying objective attributes and measures of trustworthiness.

  - Matching authority and responsibility with “earned levels of trust”.

  - Avoiding excessive or inappropriate trust [11] (p. 58).

  - Determining the best way to communicate trust-related information.

4.2. Artificial Intelligence (AI) and Machine Learning (ML) in Aviation

- Can you trust a non-deterministic DNN that can potentially deliver a different result each time that it is presented with the same scenario? (Note that for ACAS Xa, the potential for variability is moderated by filtering the generated solution set to find a TCAS-compatible resolution advisory and follow the same negotiation protocols as TCAS—interoperability is required to support mixed equipage. The situation is less clear for ACAS Xu, which supports vertical, horizontal, and merged manoeuvres to accommodate UAVs operating in controlled airspace and potential collisions with manned aircraft.)

- How do you know whether you are getting the right answer for the right reason?

- How do vendors verify such a solution, how does a regulator certify it, and how does an end user have confidence in its recommendations or autonomous actions?
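The first question above, whether a system returns the same result each time it sees the same scenario, can at least be probed empirically. The sketch below is a minimal illustration under stated assumptions: `repeatability`, both advisor functions, and the scenario dictionary are hypothetical names invented for the example, and the random advisor is only a stand-in for a non-deterministic model, not a model of ACAS X.

```python
import random
from collections import Counter


def repeatability(advise, scenario, runs=100):
    """Call advise(scenario) repeatedly; return the share of the most common output."""
    counts = Counter(advise(scenario) for _ in range(runs))
    return counts.most_common(1)[0][1] / runs


def deterministic_advisor(scenario):
    # The same input always maps to the same advisory.
    return "CLIMB" if scenario["intruder_below"] else "DESCEND"


def make_stochastic_advisor(seed):
    rng = random.Random(seed)

    def advise(scenario):
        # Hypothetical stand-in for a non-deterministic model.
        return rng.choice(["CLIMB", "DESCEND"])

    return advise


scenario = {"intruder_below": True}
print(repeatability(deterministic_advisor, scenario))       # 1.0
print(repeatability(make_stochastic_advisor(0), scenario))  # below 1.0
```

A score of 1.0 shows only that the outputs agreed on the sampled runs. It says nothing about whether the answer is right, or right for the right reason, which is the subject of the remaining two questions.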

- Cognitive HMI: machine trust in the human;

- HUMS: human trust in today’s machine;

- Explainable AI: human trust in tomorrow’s IA machine.

5. Explainable AI and User Interface Design

- Air traffic controllers in a busy approach environment,

- Military commanders in a command and control hierarchy, and

- Air traffic flow managers in a collaborative decision-making context.

6. Cognitive Human–Machine Interface (HMI)

6.1. From Cognitive HMI to Explanation User Interface Design (UX)

7. Regulatory Framework Evolutions: Certification versus Licensing

8. Key Findings

8.1. ATM–UTM Integration

8.2. Impact of UAS on ATM

- Relief Operations: the construction of segregated airspace corridors for unmanned relief missions. Unmanned freighters fly in formation and are separated from surrounding conventional traffic. During simulations, following aircraft in the formation showed a 15% reduction in fuel consumption, but controller taskload was higher than normal.

- Long-Haul Freight: Unmanned freighters are not segregated, but subject to “sectorless” control. Specially trained controllers monitor the unmanned freighters over long stretches of their route that cut across traditional sector boundaries.

- Airport Integration: Unmanned freighters are integrated into the arrival and departure sequences with consideration of their special requirements. ATM systems were enhanced to permit controllers to recognise the special characteristics of the unmanned freighters permitting, for example, standard surface operations such as towing to and from the runway with handover to a remote pilot. A designated engine start-up area may be required for drones to allow conventional traffic to pass them on the taxiway.

8.3. Air Traffic Flow Management (ATFM)

- Data acquisition,

- Data interpretation,

- Decision selection,

- Action selection.

9. Conclusions and Future Research

- How do we establish appropriate scales and practical measures for both autonomy and trust in that autonomy?

- How do we determine the current trustworthiness of the humans and machines in the team, match authority with “earned levels of trust” and vary responsibility between them while avoiding excessive or inappropriate trust?

- By what criteria do we judge the quality of a machine-provided explanation, and how do we present it on the HMI so that the controller trusts it to an appropriate level?

- yield immediate benefits where high degrees of ATM automation are already present (e.g., auto-completion of datalink uplink messages, and arrival sequencing) or already planned (inferring user intent, and re-routing flights),

- engender an appropriate level of calibrated trust, minimising both unwarranted distrust and overtrust,

- address the HMI requirements for variable autonomy in human–machine teaming,

- adapt when new explainable machine learning models become available?
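One way to make "calibrated trust" measurable is to count the two failure modes named above: overtrust (accepting an advisory that turned out to be wrong) and unwarranted distrust (rejecting an advisory that turned out to be right). The sketch below assumes that each advisory can be labelled after the fact as accepted or rejected and as correct or incorrect; the function name and the sample data are hypothetical.

```python
def trust_events(accepted, correct):
    """Return overtrust and distrust rates from paired boolean records.

    accepted[i] -- the operator accepted advisory i
    correct[i]  -- advisory i was later judged correct
    """
    if len(accepted) != len(correct) or not accepted:
        raise ValueError("need two non-empty lists of equal length")
    n = len(accepted)
    over = sum(a and not c for a, c in zip(accepted, correct))
    under = sum(c and not a for a, c in zip(accepted, correct))
    return {"overtrust_rate": over / n, "distrust_rate": under / n}


# Hypothetical log of four advisories.
accepted = [True, True, False, True]
correct = [True, False, True, True]
print(trust_events(accepted, correct))
# {'overtrust_rate': 0.25, 'distrust_rate': 0.25}
```

Both rates at zero would indicate perfectly calibrated reliance on this sample. In practice the interesting quantity is how the rates change when an explanation is added to the HMI.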

Author Contributions

Funding

Conflicts of Interest

References

1. Bisignani, G. Vision 2050 Report; IATA: Singapore, 2011.

2. Royal Commission on Environmental Pollution. The Environmental Effects of Civil Aircraft in Flight; Royal Commission on Environmental Pollution: London, UK, 2002.

3. IATA. 20 Year Passenger Forecast: Global Report; IATA: Geneva, Switzerland, 2008.

4. Nero, G.; Portet, S. Five Years Experience in ATM Cost Benchmarking. In Proceedings of the 7th USA/Europe Air Traffic Management R&D Seminar, Barcelona, Spain, 2–5 July 2007.

5. Budget Paper No. 1 Budget Strategy and Outlook 2018–19. Available online: https://www.budget.gov.au/2018-19/content/bp1/download/BP1_full.pdf (accessed on 10 May 2018).

6. CANSO Global ATM Summit and 22nd AGM: Air Traffic Management in the Age of Digitisation and Data. Available online: https://www.canso.org/canso-global-atm-summit-and-22nd-agm-air-traffic-management-age-digitisation-and-data (accessed on 14 September 2018).

7. Xu, X.; He, H.; Zhao, D.; Sun, S.; Busoniu, L.; Yang, S.X. Machine Learning with Applications to Autonomous Systems. Math. Probl. Eng. 2015, 2015, 385028.

8. Arcos-García, Á.; Álvarez-García, J.A.; Soria-Morillo, L.M. Evaluation of Deep Neural Networks for Traffic Sign Detection Systems. Neurocomputing 2018, 316, 332–344.

9. De Figueiredo, M.O.; Tasinaffo, P.M.; Dias, L.A.V. Modeling Autonomous Nonlinear Dynamic Systems Using Mean Derivatives, Fuzzy Logic and Genetic Algorithms. Int. J. Innov. Comput. Inf. Control 2016, 12, 1721–1743.

10. Williams, A.P.; Scharre, P.D. Autonomous Systems: Issues for Defence Policymakers; NATO Communications and Information Agency: The Hague, The Netherlands, 2015.

11. National Research Council. Autonomy Research for Civil Aviation: Toward a New Era of Flight; National Academy Press: Washington, DC, USA, 2014; ISBN 987-0-309-38688-3.

12. Billings, C.E. Aviation Automation: The Search for A Human-Centered Approach; CRC Press: Boca Raton, FL, USA, 1997.

13. CAP 1377 ATM Automation: Guidance on Human-Technology Integration. Available online: https://skybrary.aero/bookshelf/content/index.php?titleSearch=ATM+Automation%3A+Guidance+on+human-technology+integration&authorSearch=&summarySearch=&categorySearch=&Submit=Search (accessed on 14 September 2018).

14. Parasuraman, R.; Sheridan, T.B.; Wickens, C.D. A Model for Types and Levels of Human Interaction with Automation. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2000, 30, 286–297.

15. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles J3016_201609. Available online: http://standards.sae.org/j3016_201609/ (accessed on 14 September 2018).

16. Sheridan, T.B. Telerobotics, Automation, and Human Supervisory Control; MIT Press: Cambridge, MA, USA, 1992.

17. Kelly, C.; Boardman, M.; Goillau, P.; Jeannot, E. Guidelines for Trust in Future ATM Systems: A Literature Review; Reference No. 030317-01; EUROCONTROL: Brussels, Belgium, 2003.

18. Kelly, C. Guidelines for Trust in Future ATM Systems: Principles; HRS/HSP-005-GUI-03; EUROCONTROL: Brussels, Belgium, 2003.

19. Lee, J.D.; See, K.A. Trust in Automation: Designing for Appropriate Reliance. Hum. Factors 2004, 46, 50–80.

20. Mekdeci, B. Calibrated Trust and What Makes A Trusted Autonomous System. Emerging Disruptive Technologies Assessment Symposium in Trusted Autonomous Systems. Available online: https://www.dst.defence.gov.au/sites/default/files/basic_pages/documents/09_Mekdeci_UniSA.pdf (accessed on 14 September 2018).

21. Finn, A.; Mekdeci, B. Defence Science & Technology Organisation Report: Trusted Autonomy. Available online: http://search.ror.unisa.edu.au/record/9916158006401831/media/digital/open/9916158006401831/12149369720001831/13149369710001831/pdf (accessed on 14 September 2018).

22. Smarter Collision Avoidance. Available online: https://aerospaceamerica.aiaa.org/features/smarter-collision-avoidance/ (accessed on 14 September 2018).

23. Katz, G.; Barrett, C.; Dill, D.L.; Julian, K.; Kochenderfer, M.J. Reluplex: An Efficient SMT Solver for Verifying Deep Neural Networks. In Computer Aided Verification; Majumdar, R., Kunčak, V., Eds.; Springer: Cham, Switzerland, 2017; pp. 97–117.

24. Kirwan, B.; Flynn, M. Investigating Air Traffic Controller Conflict Resolution Strategies; Rep. ASA, 1; EUROCONTROL: Brussels, Belgium, 2002.

25. Nedelescu, L. A Conceptual Framework for Machine Autonomy. J. Air Traffic Control 2016, 58, 26–31.

26. DARPA, Explainable Artificial Intelligence (XAI) Program Update, DARPA/I2O. November 2017. Available online: https://www.darpa.mil/attachments/XAIProgramUpdate.pdf (accessed on 19 May 2018).

27. Klein, G. Naturalistic Decision Making. Hum. Factors 2008, 50, 456–460.

28. Miller, T.; Howe, P.; Sonenberg, L. Explainable AI: Beware of Inmates Running the Asylum. Available online: https://arxiv.org/pdf/1712.00547.pdf (accessed on 19 May 2018).

29. Liu, J.; Gardi, A.; Ramasamy, S.; Lim, Y.; Sabatini, R. Cognitive Pilot-Aircraft Interface for Single-Pilot Operations. Knowl. Based Syst. 2016, 112, 37–53.

30. Lim, Y.; Liu, J.; Ramasamy, S.; Sabatini, R. Cognitive Remote Pilot-Aircraft Interface for UAS Operations. In Proceedings of the 2016 International Conference on Intelligent Unmanned Systems (ICIUS 2016), Xi’an, China, 23–25 August 2016.

31. Lim, Y.; Bassien-Capsa, V.; Ramasamy, S.; Liu, J.; Sabatini, R. Commercial Airline Single-Pilot Operations: System Design and Pathways to Certification. IEEE Aerosp. Electron. Syst. Mag. 2017, 32, 4–21.

32. Lim, Y.; Gardi, A.; Ramasamy, S.; Sabatini, R. A Virtual Pilot Assistant System for Single Pilot Operations of Commercial Transport Aircraft. In Proceedings of the 17th Australian International Aerospace Congress (AIAC 2017), Melbourne, Australia, 26–28 February 2017.

33. Lim, Y.; Gardi, A.; Ramasamy, S.; Vince, J.; Pongracic, H.; Kistan, T.; Sabatini, R. A Novel Simulation Environment for Cognitive Human Factors Engineering Research. In Proceedings of the 36th IEEE/AIAA Digital Avionics Systems Conference (DASC), St Petersburg, FL, USA, 17–21 September 2017.

34. Lim, Y.; Gardi, A.; Ezer, N.; Kistan, T.; Sabatini, R. Eye-Tracking Sensors for Adaptive Aerospace Human-Machine Interfaces and Interactions. In Proceedings of the 2018 5th IEEE International Workshop on Metrology for AeroSpace (MetroAeroSpace), Rome, Italy, 20–22 June 2018.

35. Lim, Y.; Ramasamy, S.; Gardi, A.; Kistan, T.; Sabatini, R. Cognitive Human-Machine Interfaces and Interactions for Unmanned Aircraft. J. Intell. Robot. Syst. Theory Appl. 2018, 91, 755–774.

36. RMIT. Development of a Cognitive HMI for Air Traffic Management Systems: THALES Report 1; Project 0200315666, Ref. RMIT/SENG/ICTS/AVIATION/001-2017; RMIT University: Melbourne, Australia, 2017.

37. RMIT. Development of a Cognitive HMI for Air Traffic Management Systems: THALES Report 2; Project 0200315666, Ref. RMIT/SENG/ITS/AVIATION/002-2017; RMIT University: Melbourne, Australia, 2017.

38. Batuwangala, E.; Gardi, A.; Sabatini, R. The Certification Challenge of Integrated Avionics and Air Traffic Management Systems. In Proceedings of the Australasian Transport Research Forum, Melbourne, Australia, 16–18 November 2016.

39. Batuwangala, E.; Kistan, T.; Gardi, A.; Sabatini, R. Certification Challenges for Next-Generation Avionics and Air Traffic Management Systems. IEEE Aerosp. Electron. Syst. Mag. in press.

40. Straub, J. Automated Testing of A Self-Driving Vehicle System. In Proceedings of the 2017 IEEE AUTOTESTCON, Schaumburg, IL, USA, 9–15 September 2017; pp. 1–6.

41. Mullins, G.E.; Stankiewicz, P.G.; Gupta, S.K. Automated Generation of Diverse and Challenging Scenarios for Test and Evaluation of Autonomous Vehicles. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1443–1450.

42. National Research Council. In-Time Aviation Safety Management: Challenges and Research for an Evolving Aviation System; National Academy Press: Washington, DC, USA, 2018; ISBN 978-0-309-46880-0.

43. National Research Council. Intelligent Human-Machine Collaboration: Summary of a Workshop; National Academy Press: Washington, DC, USA, 2012; ISBN 978-0-309-26264-4.

44. Butterworth-Hayes, P. From ATM to UTM … and back again. CANSO Airspace Magazine, June 2018; 30.

45. Temme, A.; Helm, S. Unmanned Freight Operations. In Proceedings of the DLRK 2016, Braunschweig, Germany, 13–15 September 2016.

46. Luchkova, T.; Temme, A.; Schultz, M. Integration of Unmanned Freight Formation Flights in The European Air Traffic Management System. In Proceedings of the ENRI International Workshop on ATM/CNS (EIWAC), Tokyo, Japan, 14–16 November 2017.

47. Kistan, T.; Gardi, A.; Sabatini, R.; Ramasamy, S.; Batuwangala, E. An Evolutionary Outlook of Air Traffic Flow Management Techniques. J. Prog. Aerosp. Sci. 2017, 88, 15–42.

48. Kistan, T. Innovation in ATFM: The Rise of Artificial Intelligence, ICAO ATFM Global Symposium, Singapore. November 2017. Available online: https://www.icao.int/Meetings/ATFM2017/Pages/Session-10-Presentation-Innovation.aspx (accessed on 14 September 2018).

| Characteristic | Automation | Autonomy |
|---|---|---|
| Augments human decision-makers | Usually | Usually |
| Proxy for human actions or decisions | Usually | Usually |
| Reacts at cyber speed | Usually | Usually |
| Reacts to the environment | Usually | Usually |
| Reduces tedious tasks | Usually | Usually |
| Robust to incomplete or missing data | Usually | Usually |
| Adapts behaviour to feedback (learns) | Sometimes | Usually |
| Exhibits emergent behaviour | Sometimes | Usually |
| Reduces cognitive workload for humans | Sometimes | Usually |
| Responds differently to identical inputs (non-deterministic) | Sometimes | Usually |
| Addresses situations beyond the routine | Rarely | Usually |
| Replaces human decision-makers | Rarely | Potentially |
| Robust to unanticipated situations | Limited | Usually |
| Adapts behaviour to unforeseen environmental changes | Rarely | Potentially |
| Behaviour is determined by experience rather than by design | Never | Usually |
| Makes value judgments (weighted decisions) | Never | Usually |
| Makes mistakes in perception and judgment | N/A | Potentially |

| Scale |
|---|
| Sheridan Model of Autonomy |
| Society of Automotive Engineers J3016 |
| Clough’s Levels of Autonomy |
| US Navy Office of Naval Research |
| Proud’s OODA Assessment |
| Clough’s Autonomy Control Level |
| Autonomy Levels Unmanned Systems |
| US DoD Defence Science Task Force |
| Billings’ Control-Management Continuum |
| SESAR Levels of Automation Taxonomy |

| Level | a. Sheridan (aviation) | Level | b. SAE J3016 (automobiles) |
|---|---|---|---|
| 1 | Human does it all. | 0 | No automation |
| 2 | Machine offers alternatives and | 1 | Driver assistance |
| 3 | narrows selection to a few, or | 2 | Partial automation |
| 4 | suggests one, and | 3 | Conditional automation |
| 5 | executes it if human approves, or | 4 | High automation |
| 6 | allows human a set time to veto then executes automatically, or | 5 | Full automation |
| 7 | executes automatically and informs the human, or | | |
| 8 | informs the human after execution if the human asks it, or | | |
| 9 | informs the human after execution if it decides to. | | |
| 10 | Machine acts autonomously. | | |
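The ten Sheridan levels in the table above lend themselves to an ordered enumeration, which makes the point at which execution authority passes from human to machine explicit. The sketch below is one possible encoding, not part of the cited scale: the member names and the `machine_may_execute` helper are hypothetical and were introduced only for illustration.

```python
from enum import IntEnum


class SheridanLevel(IntEnum):
    """Illustrative names for the ten levels in the table above."""
    HUMAN_DOES_ALL = 1
    OFFERS_ALTERNATIVES = 2
    NARROWS_SELECTION = 3
    SUGGESTS_ONE = 4
    EXECUTES_IF_APPROVED = 5
    EXECUTES_AFTER_VETO_WINDOW = 6
    EXECUTES_THEN_INFORMS = 7
    INFORMS_IF_ASKED = 8
    INFORMS_IF_IT_DECIDES = 9
    ACTS_AUTONOMOUSLY = 10


def machine_may_execute(level, human_approved=False, veto_window_elapsed=False):
    """Return True if the machine may execute its chosen action at this level."""
    if level <= SheridanLevel.SUGGESTS_ONE:
        return False                 # levels 1-4: the human executes
    if level == SheridanLevel.EXECUTES_IF_APPROVED:
        return human_approved        # level 5: explicit approval required
    if level == SheridanLevel.EXECUTES_AFTER_VETO_WINDOW:
        return veto_window_elapsed   # level 6: executes once the veto time has passed
    return True                      # levels 7-10: executes automatically


print(machine_may_execute(SheridanLevel.SUGGESTS_ONE))                               # False
print(machine_may_execute(SheridanLevel.EXECUTES_IF_APPROVED, human_approved=True))  # True
```

Levels 7 to 10 differ only in how the human is informed after execution, so the helper treats them identically.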

| Factor | Description | ATM | C&C | ATFM |
|---|---|---|---|---|
| Contrastive Explanation | “Why” questions are contrastive: they take the form “why P instead of Q”, where Q is a foil to P, the fact that requires explanation. If we can correctly anticipate Q, then we only need to contrast P and Q instead of providing a full causal explanation. | ? | ✓ | ✓ |
| Social Attribution | Similar to the “belief–desire–intention” model used by intelligent agents, and it implies that we need a different explanation framework for actions that fail as opposed to actions that succeed. | ✓ | ✓ | ✓ |
| Causal Connection | People connect causes via a mental “what if” simulation of what would have happened differently if some event had turned out differently (a “counterfactual”). Understanding how people prune the large tree of possible counterfactuals (proximal vs. distal causes, normal vs. abnormal events, controllable vs. uncontrollable events, etc.) is crucial to efficient XAI. | ✓ | ✓ | ✓ |
| Explanation Selection | Humans are good at providing just enough facts for someone to infer a complete explanation. For causal chains with a number of causes, the visualisation techniques employed by the UX are crucial for allowing users to construct a preferred explanation. | ✓ | ✓ | ? |
| Explanation Evaluation | Veracity is not the most important criterion people use to judge explanations. More pragmatic criteria include simplicity, generality, and coherence with prior knowledge or innate heuristics. A simpler explanation (with optional drill-down) may, therefore, be preferable if the primary goal is the establishment of trust as opposed to due-diligence completeness. | ? | ✕ | ✓ |
| Explanation as Conversation | Explanations are usually interactive conversations. This may not be feasible in time-constrained situations; thus, UX design becomes crucial in minimising the need for interaction and ensuring that visual explanations conform to accepted conventions of conversation such as Grice’s maxims (paraphrased by Miller et al. as “only say what you believe; only say as much as is necessary; only say what is relevant; and say it in a nice way.” [28]). | ✓ | ✓ | ✓ |
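The contrastive form "why P instead of Q" described in the table above can be illustrated with a few lines of code. In this minimal sketch, assume each candidate option is described by a dictionary of numeric attributes; the `contrast` function, the attribute names, and the values are hypothetical. It reports only the attributes on which the chosen option P differs from the anticipated foil Q, largest difference first, rather than a full causal account.

```python
def contrast(fact, foil, top_n=2):
    """Return the attributes on which the chosen option differs most from the foil."""
    shared = fact.keys() & foil.keys()
    diffs = {k: fact[k] - foil[k] for k in shared if fact[k] != foil[k]}
    return sorted(diffs.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_n]


# Hypothetical attributes of two candidate resolutions for the same conflict.
p = {"delay_min": 2.0, "extra_track_nm": 5.0, "sector_load": 0.7}
q = {"delay_min": 6.0, "extra_track_nm": 4.0, "sector_load": 0.7}
print(contrast(p, q))  # [('delay_min', -4.0), ('extra_track_nm', 1.0)]
```

Here the explanation reduces to "P saves four minutes of delay at the cost of one extra track mile", and the attribute the two options share is omitted, in line with the "only say as much as is necessary" maxim.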

| Measure | Notes |
|---|---|
| User Satisfaction | • Clarity of the explanation • Utility of the explanation |
| Mental Model | • Understanding individual decisions • Understanding the overall model • Strength/weakness assessment • “What will it do” prediction • “How do I intervene” prediction |
| Task Performance | • Does the explanation improve the user’s decision, task performance? • Artificial decision tasks introduced to diagnose the user’s understanding |
| Trust Assessment | • Appropriate future use and trust |
| Correctability | • Identifying errors • Correcting errors • Continuous training |

| C-HMI Research Framework |
|---|
| Acquire common timestamped physiological data from several disparate biometric sensors. |
| Interpret cognitive and physio-psychological metrics (fatigue, stress, mental workload, etc.) from the following: acquired data on physiological conditions (brain waves, heart rate, respiration rate, blink rate, etc.); environmental conditions (weather, terrain, etc.); operational conditions (airline constraints, phase of flight, congestion, etc.). |
| Select online adaptation of specific HMI elements and automated tasks, such as adaptive alerting. This is similar in concept to the SESAR project NINA; however, our framework also introduces the following: offline adaptation using machine learning techniques such as ANFIS; online adaptation using techniques such as state charts and adaptive Boolean decision logic. |
| Verify (via simulation) and validate (via experimentation) aspects of adaptive HMIs against a human performance model. |
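The Acquire, Interpret, and Select steps of the framework above can be sketched end to end. This is a toy illustration under loud assumptions: the sensor names, the scaling constants, the 0.6 threshold, and the idea that a higher heart rate and a lower blink rate indicate higher workload are placeholders, not validated values or the authors' model. A fixed rule is used in place of the ANFIS and state-chart techniques named in the table.

```python
from bisect import bisect_right


def sample_at(stream, t):
    """Return the latest value at or before t from a time-sorted [(timestamp, value), ...]."""
    times = [ts for ts, _ in stream]
    i = bisect_right(times, t)
    return stream[i - 1][1] if i else None


def acquire(streams, t):
    """Acquire: read every sensor stream on one common timestamp."""
    return {name: sample_at(s, t) for name, s in streams.items()}


def interpret(sample):
    """Interpret: map raw readings to a crude workload index in [0, 1].

    The scaling constants are illustrative assumptions only.
    """
    hr = min(max((sample["heart_rate_bpm"] - 60) / 60, 0.0), 1.0)
    blink = min(max((20 - sample["blink_rate_per_min"]) / 20, 0.0), 1.0)
    return (hr + blink) / 2


def select(workload, threshold=0.6):
    """Select: choose an HMI adaptation from the workload index."""
    return "suppress_low_priority_alerts" if workload > threshold else "normal_alerting"


# Two sensors sampled at different times and rates.
streams = {
    "heart_rate_bpm": [(0.0, 72), (1.0, 110), (2.0, 114)],
    "blink_rate_per_min": [(0.5, 18), (1.5, 6)],
}
sample = acquire(streams, 2.0)
print(sample)                     # {'heart_rate_bpm': 114, 'blink_rate_per_min': 6}
print(select(interpret(sample)))  # suppress_low_priority_alerts
```

Taking the latest reading at or before the query time is the simplest way to put disparate sensors on a common timeline; interpolation or windowed averaging would be natural refinements.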

| Data Acquisition | Data Interpretation | Decision Selection | Action Selection |
|---|---|---|---|
| Smart Sensors: • Space-based ADS–B • 4D weather cube • Biometrics | Identification and prediction: • major traffic flows • workload • congestion • flight delays • arrival time | Decision support: • scheduling • multi-agent flow control • sector planning • airport configuration | Pre-tactical conflict detection and resolution: • hotspots • multiple flights or flows • weather |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kistan, T.; Gardi, A.; Sabatini, R. Machine Learning and Cognitive Ergonomics in Air Traffic Management: Recent Developments and Considerations for Certification. Aerospace 2018, 5, 103. https://doi.org/10.3390/aerospace5040103
