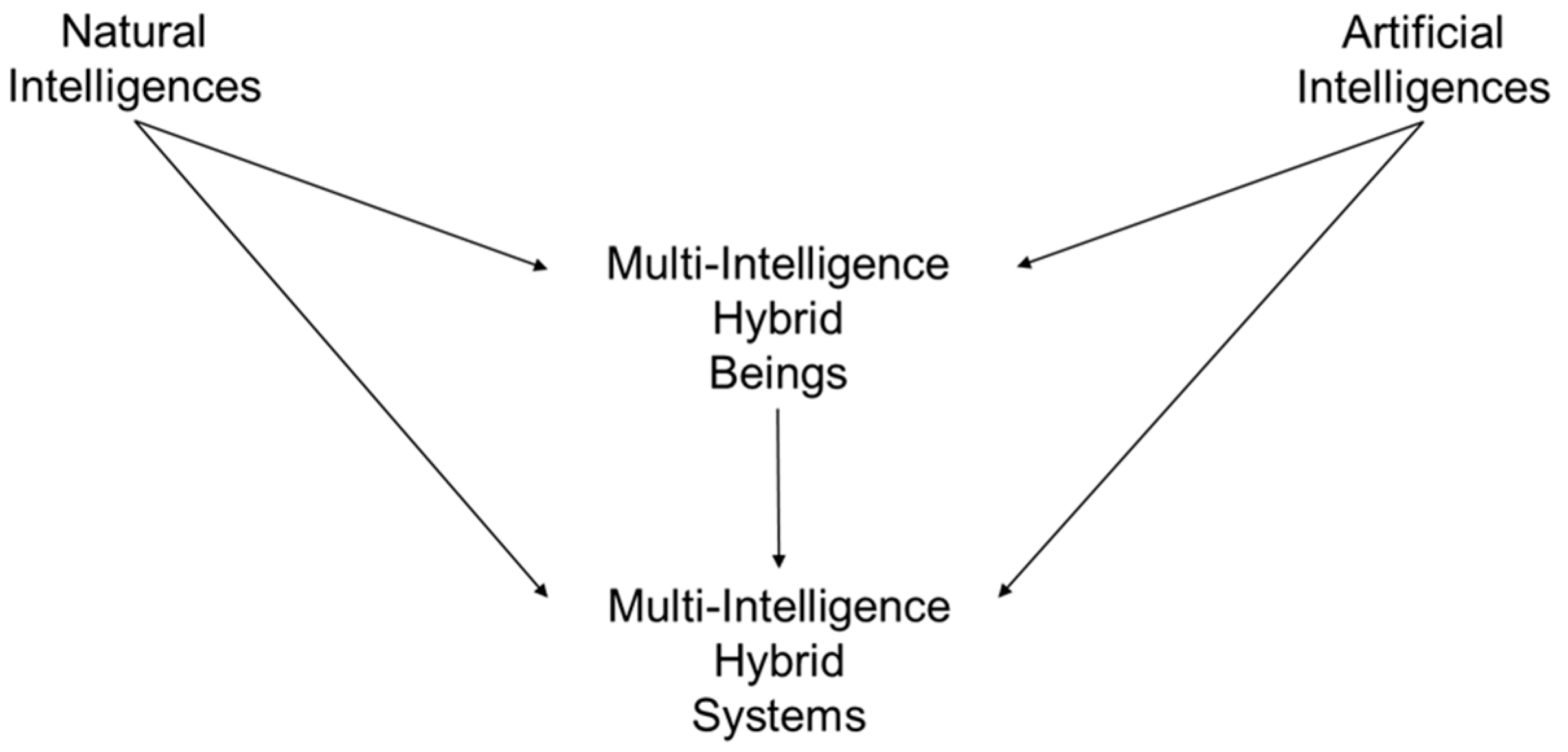

Beyond AI: Multi-Intelligence (MI) Combining Natural and Artificial Intelligences in Hybrid Beings and Systems

Abstract

1. Introduction

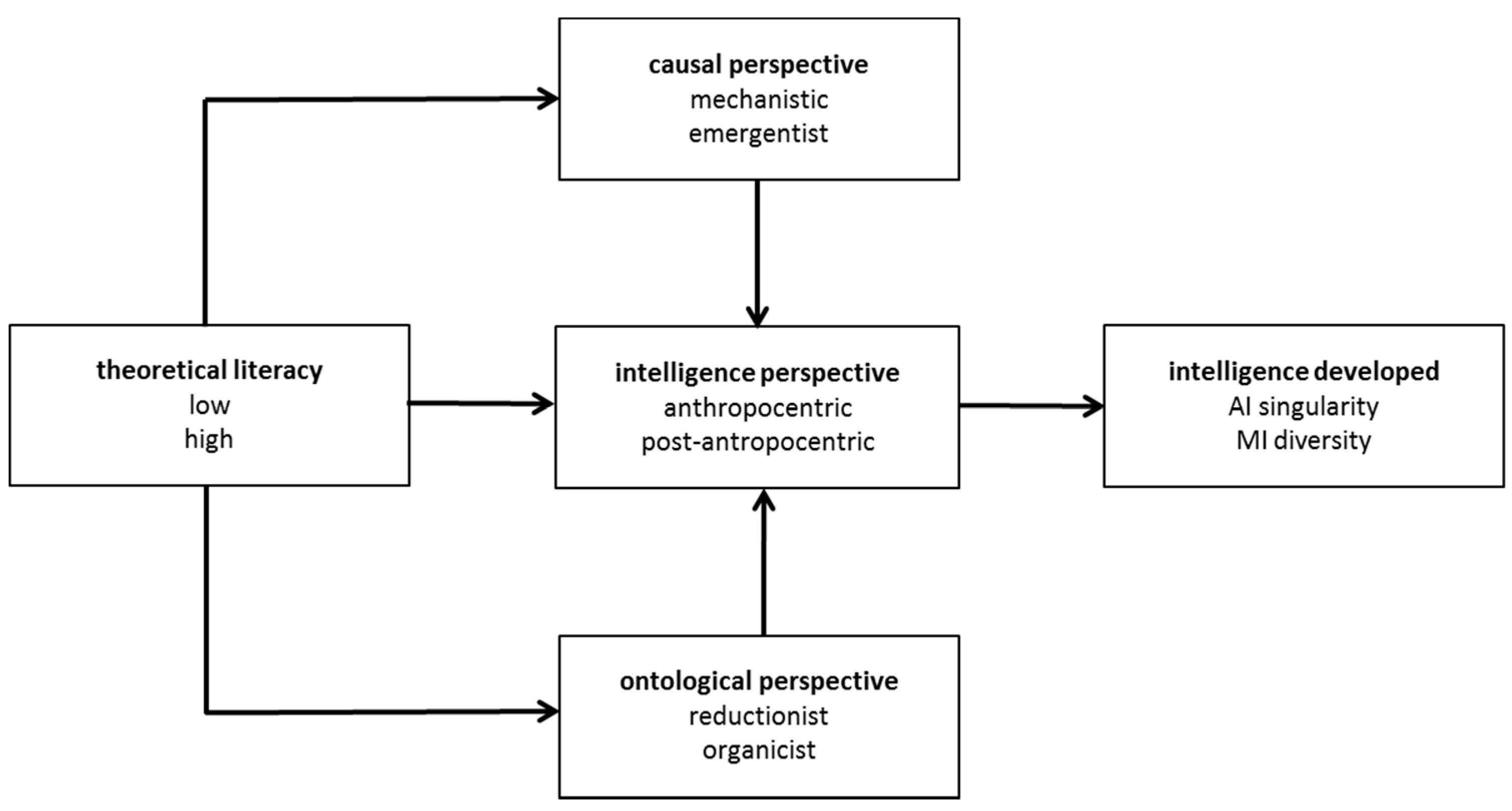

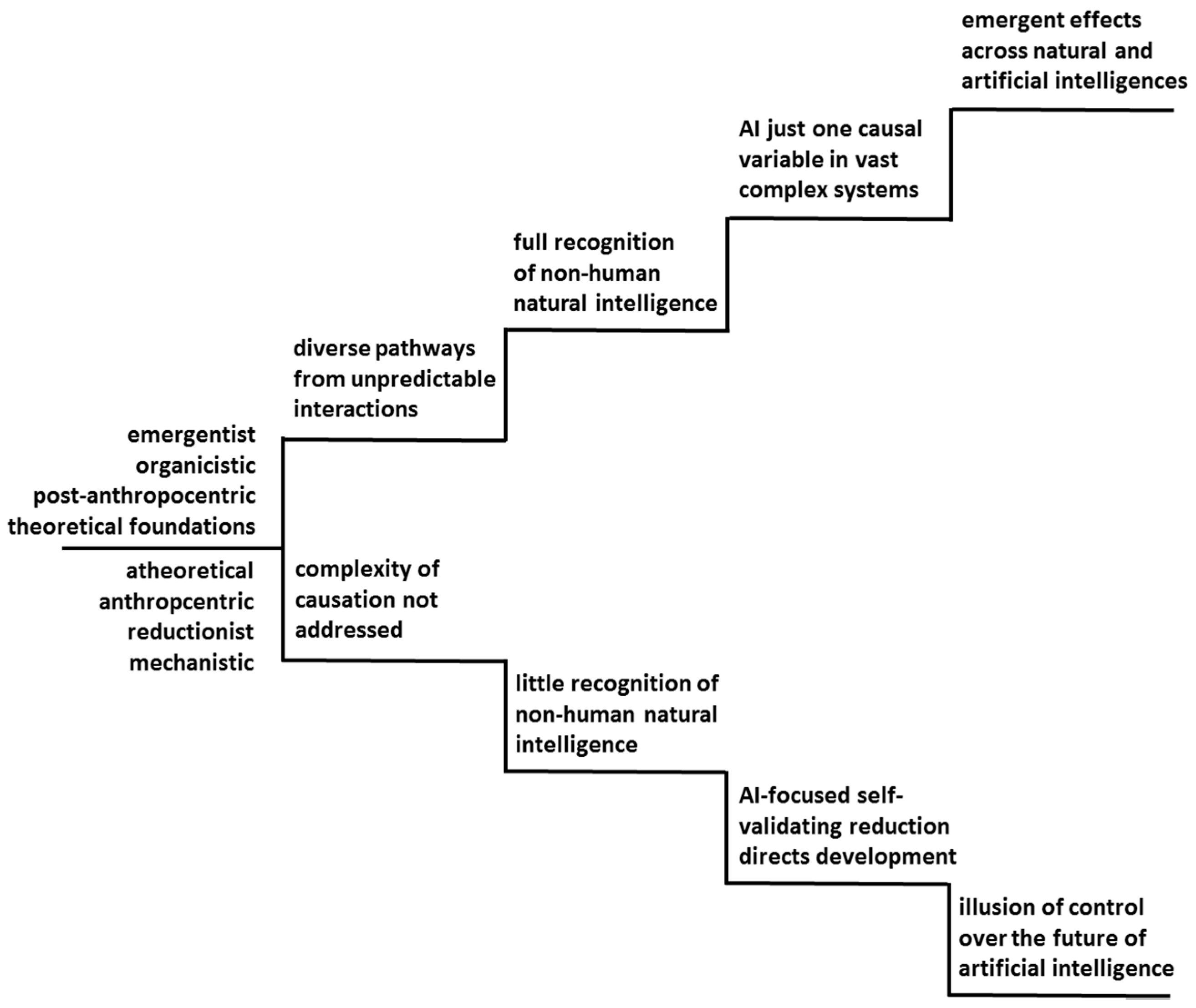

2. Analyses

2.1. Theoretical versus Atheoretical Framing of AI Development

2.2. Post-Anthropocentric versus Anthropocentric Framing of AI Development

2.3. Organicist Emergentism versus Reductionist Mechanistic Framing of AI Development

3. Discussion

3.1. Implications for Research

3.2. Implications for Practice

4. Conclusions

Acknowledgments

Conflicts of Interest


| Characteristic | Summary |
|---|---|
| Theoretical foundations (not atheoretical) | MI is positioned within a philosophy of science, such as critical realism, that can encompass the full complexity of causation. It is informed by scientific theories, such as ecology theory, that facilitate explanation, prediction, and management. |
| Post-Anthropocentric (not anthropocentric) | MI includes the full range of natural and artificial intelligences, which are defined in fundamental terms such as self-awareness, robust adaptation, and problem solving. |
| Organicist (not reductionist) | MI is considered in terms of whole systems of causal mechanisms and causal contexts, encompassing the full range of variables that can contribute to intended and unintended consequences. |
| Emergentist (not mechanistic) | MI encompasses hybrid beings and hybrid systems with emergent properties that can be more than, and different from, the various types of intelligence of which they are composed. |

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fox, S. Beyond AI: Multi-Intelligence (MI) Combining Natural and Artificial Intelligences in Hybrid Beings and Systems. Technologies 2017, 5, 38. https://doi.org/10.3390/technologies5030038
