Abstract

The development and generalization of Digital Volume Correlation (DVC) on X-ray computed tomography data highlight the issue of long-term storage. The present paper proposes a new model-free method for pruning experimental data related to DVC, while preserving the ability to identify constitutive equations (i.e., closure equations in solid mechanics) reflecting strain localizations. The size of the remaining sampled data can be user-defined, depending on the needs concerning storage space. The proposed data pruning procedure is deeply linked to hyper-reduction techniques. The DVC data of a resin-bonded sand tested in uniaxial compression are used as an illustrating example. The relevance of the pruned data was then tested for model calibration. A Finite Element Model Updating (FEMU) technique coupled with a hybrid hyper-reduction method was used to successfully calibrate a constitutive model of the resin-bonded sand with the pruned data only.

1. Introduction

With the development and the generalization of digital image correlation (DIC) (see Chu et al. [1]) or digital volume correlation (DVC) (see Bay et al. [2]) techniques on Computed Tomography (CT) data, the volume of data acquired has drastically increased. This raises new challenges, such as data storage, data mining or the development of relevant experiments-simulations dialog methods such as model validation and model calibration.

In experimental mechanics, the access to full 3D fields such as displacement or strain fields is far richer than 1D load–displacement curves. These data can drive finite element simulations for model calibration. Although extremely convincing, the increasing resolution of the full-field measurement tools, such as X-ray Computed Tomography, leads to an explosion of the volume of data to store. The long term storage of CT datasets is nowadays an issue (see Ooijen et al. [3]).

This paper proposes a numerical method for pruning 3D datasets related to DVC when it becomes necessary to free up storage capacity. Often, when new experimental results need to be saved, storage memory must be released. The pruned data contain information similar to the original data, but require less memory. The proposed approach aims to prune experimental data while preserving the ability to identify constitutive equations (i.e., closure equations in solid mechanics) reflecting strain localizations. It is a mechanics-based approach to pruning DVC data. Outside a reduced experimental domain (RED), the experimental data are deleted. Original experimental data are preserved solely in the RED. We also propose a calibration procedure whose computational complexity is consistent with the pruning of the experimental data.

Compression of data is known to be a convenient approach to restore storage capacity. For instance, MP3 files are a fairly common way to reduce the size of audio files for daily use (see Pan [4]). However, this compression entails a non-negligible, though controlled, loss of information. The MP3 compression roughly consists in filtering out certain components of the non-reduced audio file that are actually inaudible for most people. In other words, the MP3 algorithm was made to prune the audio data that are not absolutely necessary. Usually, the compression rate is around 12. In the same spirit, experimental data could be massively compressed with a controlled loss of information, based on an algorithm that detects the pertinent information. This has been proposed in [5] by using a sensitivity analysis with respect to variations of calibration parameters. These parameters are the coefficients of a given model that should reflect the experimental observations. The result is that the pruned data are dedicated to a given model. In this paper, a model-free approach is proposed. It aims to make various calibrations with different models possible after data pruning. Here, the relevant information is local and situated in regions subjected to strain localization. The data submitted to the pruning procedure are the outputs of a Digital Volume Correlation that reconstructs the displacement field from observations at time instants , over a spatial domain , where is a position vector. The geometry of the experimental sample is approximated by a mesh and the determined displacement is decomposed on finite element (FE) shape functions [6].

The proposed method can be linked to data pruning or data cleaning methods described in the literature for machine learning [7]. The aim of these procedures is not to reduce data storage but to improve the data quality, for instance by accurate outlier detection [8]. In [9], a data pruning method is employed to filter the noise in the dataset.

Using the FE approximation of the experimental fields paves the way to further simulations. In the calibration procedure, the full-field measurements are used as inputs of an inverse problem that aims to determine a given set of parameters . These parameters are the coefficients of given constitutive equations. Their values are unknown or not known precisely. The most straightforward method is called Finite Element Model Updating (FEMU) (see Kavanagh and Clough [10] and Kavanagh [11]). It is a rather common way to optimize a set of parameters taking into account the experimental data and balance equations in mechanics. It consists in computing the discrepancy between the FE approximation of the experimental fields and the FE simulations. Thus, an optimization loop is done on where the FE method is used as a tool for assessing the relevance of the parameter set. The objective function, or cost function, of the optimization can focus on the difference between the computed and experimental displacement fields (FEMU-U), forces (FEMU-F, or force balance method), or strain fields (FEMU-ε), or on a mix of these sub-methods. A review of FEMU applications can be found in [12]. The method is particularly suitable for:

- Non-isotropic materials (e.g., materials having mechanical properties that depend on their orientation [13,14], such as the human skin [15]);

- Heterogeneous materials such as composites [16];

- Heterogeneous tests such as open-hole tests (e.g., [13,14]) or CT-samples [17];

- Special cases of local phenomena such as strain localization or necking (e.g., Forestier et al. [18], Giton et al. [19]) or the illustrating case of the present paper;

- Multi-materials configurations (e.g., solder joints studied in [20] or heterogeneous material identification done in [21]); and

- Determination of the boundary conditions [22].
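To make the FEMU-U idea concrete, the following is a minimal sketch on a toy problem, not the calibration procedure used in this paper. The "FE simulation" is replaced by the closed-form displacement of an elastic bar, and the names `simulate_displacement`, `femu_u_cost` and `calibrate_femu_u`, as well as all numerical values, are hypothetical. The loop is a one-parameter Gauss-Newton iteration with a finite-difference sensitivity, which is one common choice among many.

```python
import numpy as np

def simulate_displacement(young_modulus, x, force=100.0, area=10.0):
    """Toy stand-in for an FE simulation: bar in tension, u = F x / (E A)."""
    return force * x / (young_modulus * area)

def femu_u_cost(young_modulus, x, u_exp):
    """FEMU-U objective: squared gap between measured and simulated displacements."""
    residual = u_exp - simulate_displacement(young_modulus, x)
    return float(residual @ residual)

def calibrate_femu_u(x, u_exp, e_init, n_iter=10, rel_step=1e-6):
    """Gauss-Newton iterations on a single parameter (assumed scheme)."""
    e = e_init
    for _ in range(n_iter):
        u_sim = simulate_displacement(e, x)
        h = rel_step * e
        # Finite-difference sensitivity du/dE, as if the simulation were a black box
        sens = (simulate_displacement(e + h, x) - u_sim) / h
        e += float(sens @ (u_exp - u_sim)) / float(sens @ sens)
    return e

# Synthetic "experimental" field generated with a known modulus (hypothetical values)
x = np.linspace(0.0, 20.0, 21)
e_true = 1000.0
u_exp = simulate_displacement(e_true, x)
e_hat = calibrate_femu_u(x, u_exp, e_init=600.0)
```

In a real FEMU-U loop, `simulate_displacement` would be a full FE computation, which is why reducing its cost matters.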

One of the recent developments concerning FEMU is to couple this method with reduced order models (ROMs) to cut down the computation time in the parameter optimization loop. Examples of such developments can be found in [23], where a method called FEMU-b is highlighted, or in [24]. The FEMU-b consists in determining an intermediate space of predominant empirical modes associated with a reduction procedure, such as the Proper Orthogonal Decomposition (POD) (see Aubry et al. [25]) or the Proper Generalized Decomposition (PGD) [26]. The discrepancy is computed between the experimental and simulated reduced variables, where the reduced variables are solutions of reduced equations. In this paper, we show that the proposed data pruning method is consistent with a reduced order modeling of the equations to be calibrated. A FEMU-b is introduced in order to account for the lack of experimental data due to the pruning procedure.

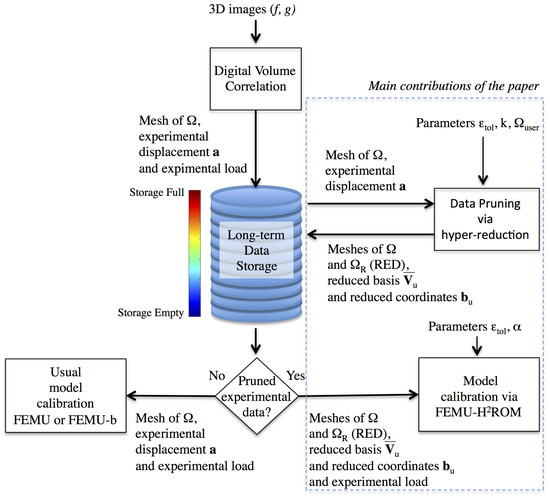

In [27], it has been shown that ROMs can be supplemented by a reduced integration domain (RID), by following a hyper-reduction method. In this method, a RID is a subdomain of a body, where the reduced equations are set up. In the proposed approach, we do not modify the cubature scheme involved in mechanical equations, as proposed by Hernandez et al. [28], but we restrict the cubature to a subdomain. This leads the way for data pruning methods that preserve calibration capabilities. Here, the dimensionality reduction of experimental data enables the restriction of experimental data to a RED. This RED is a subdomain of the specimen where the experimental data are sampled. It is not necessarily a connected domain. The flowchart of the proposed approach to data pruning is shown in Figure 1. After pruning, the data related to the domain occupied by a specimen, denoted by , are restricted to a RED denoted by . The way the model calibration is done depends on the nature of the data available in a storage system. If the data are not pruned, then a conventional calibration by the FEMU method is possible. Otherwise, calibration by a FEMU-b method is recommended. In this paper, the calibration capabilities after data pruning are assessed by using the FEMU with a hybrid hyper-reduction method (HROM) [29]. Hence, the FEMU-b is not done on the complete domain but on the RED determined by the data pruning. The result is a fast calibration procedure, with low memory requirement and a validated data pruning protocol. Contrary to usual hyper-reduction methods, the domain where the equations to be calibrated are set up is not generated by using simulation data. It derives from the data pruning procedure applied to experimental data.

Figure 1.

Pruning of experimental data related to DVC, via hyper-reduction. Calibration capabilities of constitutive equations are preserved after data pruning. The experimental data related to the domain occupied by a specimen, denoted by , are restricted to a reduced experimental domain denoted by . The way the model calibration is done depends on the nature of the data available in the storage system.

The remaining part of the paper is structured as follows. In Section 3, the proposed method for data pruning is described. The DVC is recalled. A dimensionality reduction and then a hyper-reduction are performed to compute the pruned data. The pruning procedure is applied in Section 4 to a resin-bonded sand tested in in situ uniaxial compression with X-ray tomography. In Section 5, the calibration of an elastoplastic model enables validating the pruning protocol. Details on the experimental data are available in the form of supplementary files. These data allow the proposed data pruning to be reproduced.

2. Notations

Second-order tensors are denoted by . Matrices are denoted by capital bold letters and vectors are denoted by bold lowercase characters . The colon notation is used to denote the extraction of a submatrix or a vector (at column i for example): . Sets of indices are denoted by calligraphic characters . The element of a matrix at row i and column j is denoted or when the matrix notation has a subscript. is the restriction of to the reduced experimental domain.

3. Data Pruning by Following a Hyper-Reduction Scheme

In the proposed approach, the experimental displacements observed on the domain occupied by a specimen are restricted to the RED . The smaller the extent of , the smaller the memory requirement to store the pruned data. Without any constraint, the best memory saving is obtained by saving the parameters that best replicate the experimental data. In that situation, usual FEMU methods are sufficient. Here, the following constraint is taken into consideration. The data pruning should not prevent changes in the way constitutive equations are set up, as these equations may evolve in the future. Knowledge in mechanics is evolving and so are models. Thus, after the data have been pruned, the experimental data saved in the storage system must allow the calibration of constitutive equations. To ensure consistency between the computational complexity of the calibration procedure and the accuracy of the pruned data, we propose hyper-reduced equations for this calibration. In our opinion, it does not make sense to perform complex simulations during such a calibration with a poor representation of the experimental data.

3.1. Digital Volume Correlation

Let us consider a specimen occupying the domain undergoing a certain mechanical test. With image acquisition techniques, grayscale images are obtained in 3D. The Digital Volume Correlation aims to determine the displacement field at every position in at a given deformed state at time t. f and g are the gray levels at the reference and deformed states. They are related by the equation:

The best displacement field is estimated via the minimization of the following residual:

where is the gradient of f. This is an ill-posed problem. To get a well-posed problem, the displacement field can be restricted to a kinematic subspace. Here, the displacement field is assumed to be decomposed over a set of vector functions that corresponds to the shape functions of a FE model defined on .

where is the number of degrees of freedom of the mesh, the ith nodal degree of freedom in the FE model. denotes the vector of degrees of freedom to be determined. With this restriction to the kinematic subspace, the function is now a quadratic form of the , and its minimization is a linear system, set up for each observation of a deformed state:

where the matrix and the vector are:

In the sequel, observations of the specimen deformation at time instants , , are considered. The DVC gives access to the final correlated displacement field for each observation, through the coefficient vector . From the displacement field, a strain field is extracted assuming small strains:

This strain is thus calculated at each Gauss point of the mesh used for the DVC. For pressure dependent or plastic materials, it can be convenient to subdivide the strain field in its deviatoric part and its hydrostatic part:

where is the unit tensor.
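As an illustration of the small-strain tensor and of its deviatoric/hydrostatic split, here is a short sketch at a single Gauss point. The function names and the numerical values of the displacement gradient are hypothetical; only the standard definitions (symmetric part of the displacement gradient, hydrostatic part equal to one third of the trace times the unit tensor) are assumed.

```python
import numpy as np

def small_strain(grad_u):
    """Small-strain tensor: symmetric part of the displacement gradient."""
    return 0.5 * (grad_u + grad_u.T)

def split_strain(eps):
    """Return (deviatoric part, hydrostatic part) of a 3x3 strain tensor."""
    hydrostatic = np.trace(eps) / 3.0 * np.eye(3)
    deviatoric = eps - hydrostatic
    return deviatoric, hydrostatic

# Hypothetical displacement gradient at one Gauss point
grad_u = np.array([[1.0e-3, 2.0e-3, 0.0],
                   [0.0, -5.0e-4, 1.0e-3],
                   [4.0e-4, 0.0, -2.0e-3]])
eps = small_strain(grad_u)
eps_dev, eps_hyd = split_strain(eps)
```

The deviatoric part is traceless by construction, which makes it a natural indicator of shear in localization bands.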

It is worth noting that the pruning procedure only focuses on the displacement and not on the strain. It is considered that the strain can be computed in post-processing (thanks to Equation (7)) and is not worth saving. The strain tensor is actually considered as temporary data used to compute a reduced experimental domain.
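For readers who want the DVC steps of this subsection in compact form, the standard global (FE-based) DVC formulation can be written as below. This is a sketch under assumptions: the symbols $\mathbf{u}$, $\boldsymbol{\varphi}_i$, $q_i$, $\mathbf{M}$, $\mathbf{b}$ and $N_d$ are chosen here for illustration and may differ from the notation of the numbered equations of this paper.

$$
f(\mathbf{x}) = g\big(\mathbf{x} + \mathbf{u}(\mathbf{x})\big), \qquad
\eta^2(\mathbf{u}) = \int_{\Omega} \big( f(\mathbf{x}) - g(\mathbf{x}) - \mathbf{u}(\mathbf{x}) \cdot \nabla f(\mathbf{x}) \big)^2 \, d\mathbf{x},
$$

$$
\mathbf{u}(\mathbf{x}) = \sum_{i=1}^{N_d} q_i \, \boldsymbol{\varphi}_i(\mathbf{x}), \qquad
\mathbf{M}\,\mathbf{q} = \mathbf{b},
$$

$$
M_{ij} = \int_{\Omega} \big(\boldsymbol{\varphi}_i \cdot \nabla f\big)\big(\boldsymbol{\varphi}_j \cdot \nabla f\big)\, d\mathbf{x}, \qquad
b_i = \int_{\Omega} \big(f - g\big)\big(\boldsymbol{\varphi}_i \cdot \nabla f\big)\, d\mathbf{x}.
$$

The linear system follows from setting the derivative of the linearized residual with respect to each $q_i$ to zero. In practice the system is solved iteratively, with $g$ corrected by the current displacement estimate at each pass.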

3.2. Dimensionality Reduction

The first step of the pruning procedure consists in performing a dimensionality reduction of the experimental data. It is based on singular value decomposition. This approach is similar to the Principal Component Analysis (PCA). However, here, a reduced basis of empirical modes is obtained without centering the data.

The experimental data from DVC are saved into two matrices, and defined as:

and

where is the th Gauss point, and:

with being the number of integration points in the mesh. is a matrix and is a matrix. For the sake of simplicity, we do not account for the symmetry of the strain tensor.

This first dimensionality reduction is applied to the DVC data. Only the reduced basis and coordinate are kept instead of the snapshot matrix . The procedure is also applied to the snapshot matrix of the strain, but not in order to reduce storage (as the strain data are not saved). The corresponding reduced basis is used as a temporary tool to compute the reduced domain afterwards. The determination of the empirical modes is performed thanks to a Singular-Value Decomposition (SVD):

where , with or , is an empirical reduced basis for displacement or strain, respectively, , , and . Both and are orthogonal. The residual has a 2-norm such as:

where is a numerical parameter (typically, 10). According to the Eckart–Young theorem, the matrix is the best approximation of rank for by using the reduced basis .
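The truncation step can be sketched with NumPy as follows. This is an illustration under assumptions: the snapshot matrix is synthetic, the function name `truncated_svd_basis` is hypothetical, and the criterion (keep the modes whose singular value exceeds `tol` times the largest one) is one common way to bound the 2-norm of the residual; the tolerance value used here is not taken from the paper. As in the text, the data are not centered.

```python
import numpy as np

def truncated_svd_basis(Q, tol=1e-3):
    """Uncentered truncated SVD: returns the reduced basis V, the reduced
    coordinates gamma = V^T Q, and all singular values."""
    U, s, _ = np.linalg.svd(Q, full_matrices=False)
    # Keep modes whose singular value exceeds tol * (largest singular value)
    n_modes = max(1, int(np.sum(s > tol * s[0])))
    V = U[:, :n_modes]
    gamma = V.T @ Q
    return V, gamma, s

# Synthetic snapshot matrix: many rows (dofs), few columns (time steps),
# built as a rank-3 matrix plus a very small perturbation
rng = np.random.default_rng(0)
Q = rng.standard_normal((200, 3)) @ rng.standard_normal((3, 7))
Q += 1e-8 * rng.standard_normal(Q.shape)

V, gamma, s = truncated_svd_basis(Q, tol=1e-3)
residual_norm = np.linalg.norm(Q - V @ gamma, 2)
```

By the Eckart–Young theorem, `residual_norm` equals the first discarded singular value, so it is bounded by `tol * s[0]` with this criterion.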

The relevance of the dimensionality reduction of the displacement data appears to be conditioned by the difference between the number of time steps and the order of the approximation , as and . In situ tests observed in X-ray CT tend to have few time steps, so the first dimensionality reduction may not be efficient. Moreover, due to the resolution of the Computed Tomography, the data generally have a large number of degrees of freedom. In other words, the snapshot matrix has many rows () but few columns (). The memory cost is mostly due to the number of dof of the problem. That is why the proposed pruning protocol is based on a hyper-reduction method, in order to significantly reduce this number of dof.

3.3. Reduced Experimental Domain

The proposed pruning method has its roots in the hyper-reduction method [30]. We are not able to prove that the proposed approach has a strong physical basis for pruning data according to an appropriate metric. The proposed approach is heuristic, but it fulfills some mathematical properties. A hyper-reduced order model is a set of FE equations restricted to a RID when seeking an approximate solution of FE equations with a given reduced basis. In other words, this approach accounts for the low rank of the reduced approximation to set up the reduced equations of a given FE model. Let us denote by the solution of FE equations that aims to replicate the experimental vector , by using the same mesh. For a given reduced basis of rank , the approximate reduced solution of the FE equations is denoted by such that:

where are the variables of the reduced order model. It turns out that the rank of the reduced FE equations must be in order to find a unique solution . Since is usually larger than , few FE equations that preserve the rank of the reduced system exist. By following the hyper-reduction method proposed in [30], this selection is achieved by considering balance equations set up on a RID. In former works on hyper-reduction, the RID were generated by using simulation data.

Here, the RED is similar to a RID, but its construction uses solely experimental data, that is to say that the reduced basis used to perform this row selection comes from Equations (12) and (13). That is why the pruning method is called a model-free approach. One of the advantages of such a method is that the data pruning does not have to be performed again if the constitutive model is changed. The RED is denoted by .

In the hyper-reduction method, the RID is generated by the assembly of elements containing interpolation points related to various reduced bases. These reduced bases are usually extracted from simulation data generated by a given mechanical model for various parameter variations [30]. Here, the RED construction is based exclusively on the reduced bases related to and . The RED is the union of several subdomains: and generated from the reduced matrices and , a domain denoted by corresponding to a set of neighboring elements to the previous subdomains, and a zone of interest (ZOI) denoted by . In the sequel, is set up to evaluate the force applied by the experimental setup on the specimen.

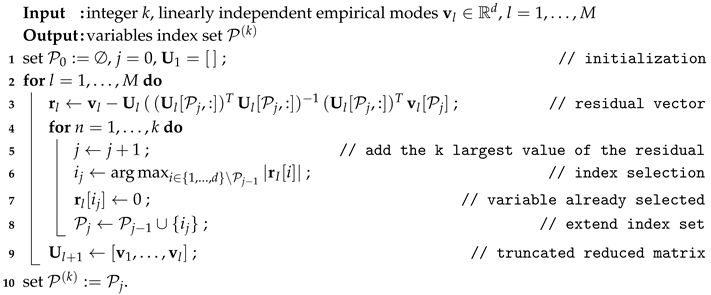

is designed as if we would like to reconstruct experimental displacements outside by using and given experimental displacement in . On a restricted subdomain , we only have access to a restricted set of nodal displacements. The set of their indices is denoted by . The set of remaining displacement indices is denoted by such that is the vector to be reconstructed by knowing . Various approaches have been proposed in the literature to perform this kind of reconstruction. They are related to data completion [31] or data imputation [32] for instance. Here, we have the opportunity to choose the set , because the reconstruction issue is only formal. By using the DEIM method proposed in [33], we can obtain the set such that is a square and invertible matrix. Then, in that situation, the number of selected degrees of freedom in is the number of empirical modes in . However, in the present application, this set could be too small to get robust calibrations after data pruning. Then, we propose a modification of the DEIM algorithm in order to multiply the number of selected indices by a given factor k. We name this algorithm k-Selection with empIrical Modes (k-SWIM). The modified algorithm is shown in Algorithm 1. When , this algorithm is exactly the same as the usual DEIM algorithm in [34]. The issue here is not to replicate experimental data via an interpolation scheme, but via calibrated FE simulations (by using ). In the sequel, the set of selected indices by using k-SWIM is denoted by . The same reasoning is applied to the reconstruction of the experimental strain tensors. The k-SWIM algorithm applied to defines .

For given sets of indices and , the RED is:

where supp is the support of the function and are the shape functions related to the strain tensor in the FE model used to compute .

Algorithm 1: k-SWIM Selection of Variables with EmpIrical Modes
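The selection idea described above (a DEIM-like greedy procedure in which k indices are picked per empirical mode) can be sketched as follows. This is a hedged illustration, not the authors' Algorithm 1: the function name `k_swim_sketch`, the least-squares fit used when more indices than modes are already selected, and the masking of already-selected rows are assumptions made here. With `k=1` the least-squares fit reduces to the square solve of the usual DEIM.

```python
import numpy as np

def k_swim_sketch(V, k=1):
    """Greedy row selection with k picks per mode (assumed variant of DEIM).

    V : (n_rows, n_modes) reduced basis with linearly independent columns.
    Returns the selected row indices, in selection order.
    """
    n_rows, n_modes = V.shape
    taken = np.zeros(n_rows, dtype=bool)
    selected = []
    for j in range(n_modes):
        for _ in range(k):
            if len(selected) == n_rows:      # every row is already selected
                return np.array(selected)
            if j == 0:
                residual = V[:, 0].copy()
            else:
                P = np.array(selected)
                # Fit mode j with the previous modes, using selected rows only
                coef, *_ = np.linalg.lstsq(V[P, :j], V[P, j], rcond=None)
                residual = V[:, j] - V[:, :j] @ coef
            score = np.abs(residual)
            score[taken] = -np.inf           # never pick the same row twice
            new_index = int(np.argmax(score))
            taken[new_index] = True
            selected.append(new_index)
    return np.array(selected)

# Synthetic orthonormal basis: 60 rows, 5 modes
rng = np.random.default_rng(1)
V_demo, _ = np.linalg.qr(rng.standard_normal((60, 5)))
idx_deim = k_swim_sketch(V_demo, k=1)    # one index per mode
idx_k3 = k_swim_sketch(V_demo, k=3)      # three indices per mode
idx_all = k_swim_sketch(V_demo, k=60)    # large k: every row is kept
```

In this sketch, a large enough `k` selects every row, which mirrors the property that pruning can be cancelled by increasing k.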

Algorithm 1 is properly defined if in Line 3 the matrix is invertible, for with , or equivalently if the following property is fulfilled.

Theorem 1.

is invertible for and .

Proof.

Let us assume that is invertible for and . Then, we compute . is a set of linearly independent vectors. Thus, . Let us introduce the first additional index, , and the following residual vector:

Then, and . Thus, is full column rank. Since , then is full column rank and is invertible. In addition, is a non-zero vector. Then, is invertible. □

Another interesting property is the possible cancellation of the data pruning by using a large value of the parameter k in the input of Algorithm 1. The following property holds.

Theorem 2.

If and if , then . The RED covers the full domain and all the data are preserved.

Proof.

By following Algorithm 1, for with and as inputs (in the algorithm, ), we obtain . If , then . Hence, and and . □

The second theorem is quite restrictive. In practice, large values of k, with , enable preserving all the data. The value of k has to be chosen according to the size of the memory that we would like to free up.

3.4. Experimental Data Restricted to the RED

For a given RED, , a set of selected degrees of freedom indices can be defined as:

The degrees of freedom in are not connected to elements outside . We denote by the degrees of freedom on the interface between and :

The union of these two sets is denoted by :

It contains all the indices of the degrees of freedom in .
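The three index sets above can be illustrated on a tiny mesh. The sketch below assumes one scalar degree of freedom per node and a connectivity table listing the nodes of each element; the function name `red_dof_sets` and the variable names are hypothetical. Interface degrees of freedom are taken as those shared between an element of the RED and an element outside it.

```python
import numpy as np

def red_dof_sets(connectivity, in_red):
    """Split the dofs of the RED into interior and interface sets.

    connectivity : (n_elem, n_nodes_per_elem) array of dof indices
    in_red       : (n_elem,) boolean array, True for elements of the RED
    """
    dofs_red = np.unique(connectivity[in_red])
    dofs_outside = np.unique(connectivity[~in_red])
    interface = np.intersect1d(dofs_red, dofs_outside)  # shared with outside elements
    interior = np.setdiff1d(dofs_red, interface)        # not connected to outside elements
    return interior, interface, dofs_red

# 1D chain of six two-node elements (nodes 0..6); elements 2 and 3 form the RED
connectivity = np.array([[i, i + 1] for i in range(6)])
in_red = np.array([False, False, True, True, False, False])
interior, interface, dofs_red = red_dof_sets(connectivity, in_red)
```

Here the RED covers nodes 2 to 4: node 3 is interior, while nodes 2 and 4 lie on the interface with the rest of the mesh.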

We denote by the experimental data restricted to the RED, such that:

An additional SVD is performed on these experimental data such that:

When the RED is available, the experimental data are restricted to and the data to be stored are:

- The pruned reduced basis , and the consecutive reduced coordinates .

- The full mesh of and the mesh of ( and ).

- The load history applied to the specimen on the subdomain .

- Usual metadata related to the experiment (temperature, material parameters, etc.).

It is also advised to store the statistical distribution of a value of interest in the full domain and in the reduced domain. These data can be saved as histograms, for example. In the present paper, the shear strain distribution was saved, as this variable is of particular interest in the case of strain localization. The additional memory cost is negligible, as it consists in storing a few hundred floats.

The data concerning the strains are not stored as they can be computed with the displacement data thanks to Equation (7).

Generally, in-situ experiments observed in X-ray CT do not have numerous time steps, hence the above dimensionality reduction via SVD does not reduce drastically the size of the data to store. This is illustrated with the following example in Section 4. The hyper-reduction of the domain is actually the predominant step for data pruning.

3.5. Reduced Mechanical Equations Set Up on the Reduced Experimental Domain

Let us denote by the residual of the FE equations that have to be calibrated such that:

For the sake of simplicity, we do not introduce the parameters in the FE residual. Since the experimental data are restricted to the RED by following a hyper-reduced setting, the mechanical equations submitted to the calibration procedure are also hyper-reduced. We denote by the partial computation of the FE residual restricted to the RED. is the FE residual computed solely on a mesh of the RED. This mesh is termed reduced mesh. To account for the reduced mesh, a renumbering of the set , denoted by , is defined such that:

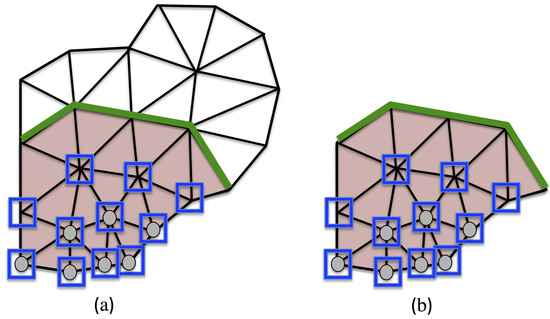

where is the set of degrees of freedom related to the reduced mesh, that corresponds to in the full mesh. They are located in blue squares in Figure 2b. Similarly, we define such that:

where is the set of degrees of freedom related to the reduced mesh that belongs to the interface between the RED and the remaining part of the full domain. The various sets of degrees of freedom are shown in Figure 2.

Figure 2.

Schematic view of the reduced experimental domain, with linear triangular elements. In both figures, is red. On the left, there is the mesh of . On the right, there is the reduced mesh (i.e., the mesh of only). In (a), the nodes having their degrees of freedom in are on the green line, the nodes having their degrees of freedom in are in blue squares, and the grey nodes have their degrees of freedom in . In (b), the green line, the blue squares and the grey nodes are related to , and , respectively.

We assume that:

This assumption means that the FE residuals at lines selected by , for any prediction , can be computed on the reduced mesh, where the residuals depend only on degrees of freedom in . It is relevant in mechanical problems without contact condition, in the framework of first strain-gradient theory. We refer the reader to [35] for the extension of the hyper-reduction method to contact problems.

Both simulation data and experimental data are incorporated in a reduced basis dedicated to the calibration procedure, after the pruning of the experimental data. In the sequel, this reduced basis is extracted from data restricted to the RED, by using the SVD. Let us denote by all the data available on the full mesh. Then, after the restriction of data to the reduced mesh, the empirical reduced basis is related to :

with . is not a submatrix of a given . The way contains both simulation data and experimental data is user dependent. In the proposed example, we are using a derivative extended proper orthogonal decomposition (see Schmidt et al. [36]) as explained in Section 5.2.

When the reduced basis contains empirical modes and a few FE shape functions located in , the method is termed hybrid hyper-reduction [29,35]. The hybrid FE/reduced approximation is obtained by adding a few columns of the identity matrix to . In this hybrid approximation, we only add FE degrees of freedom that are not connected to the degrees of freedom in . The resulting set of degrees of freedom is denoted by (see Figure 2). In [29], it has been shown that this yields a strong coupling in the resulting hybrid approximation. Let us define the subdomain connected to :

Then, we get:

and is such that:

The hybrid reduced basis is denoted by . It reads, by using the Kronecker delta ():

The equations of the hybrid hyper-reduced order model (HROM) [35] read: find such that,

If the reduced equations do not have a full rank, it is suggested to remove the columns of , in , that cause the rank deficiency. When using the SVD to obtain from data, the last columns have the smallest contribution in the data approximation. These columns must be removed first in case of rank deficiency.

Theorem 3.

When , then the hybrid hyper-reduced equations are the original FE equations on the full mesh.

Proof.

If , then , and the reduced mesh is the original mesh. In addition, all the empirical modes have to be removed from to get a full rank system of equations. Hence, is the identity matrix. Thus, the hybrid hyper-reduced equations are exactly the original FE equations. There is no complexity reduction. □

Theorem 4.

If is set to zero; if both hybrid hyper-reduced equations and FE equations have unique solutions; if the FE solution belongs to the subspace spanned by the data ; and if there exists a matrix such that (i.e., the FE solution can be reconstructed by using the FE solution restricted to the RED), with , then and , where is a vector of zeros in . This means that the hyper-reduced solution is exact and the FE correction in the hybrid approximation is null.

Proof.

Let us introduce the matrix . Then,

If with , so , then with and fulfills the following equation:

Then, the balance equations of the hybrid hyper-reduced equations are fulfilled by . If both hybrid hyper-reduced equations and FE equations have unique solutions, then the solution of the hybrid hyper-reduced equations is . □

4. Illustrating Example: Polyurethane Bonded Sand Studied with X-ray CT

4.1. Material and Test Description

The material studied here is a polyurethane-bonded sand used in foundries to mold the internal cavities of cast parts. The resin bonds the grains together and drastically improves the mechanical properties of the cores (stiffness, maximum yield stress, tensile strength, etc.). The material has been extensively studied with standard laboratory tests, focusing on macroscopic force-displacement curves. This casting sand was experimentally investigated by Jomaa et al. [37] and Bargaoui et al. [38]. These macroscopic data are complemented with an in situ uniaxial compression test studied in X-ray CT on an as-received sample. According to Bargaoui et al. [38], the process used to make the cores (cold box process) guarantees the homogeneity of the material. In the sequel, the resin-bonded sand is assumed to be homogeneous.

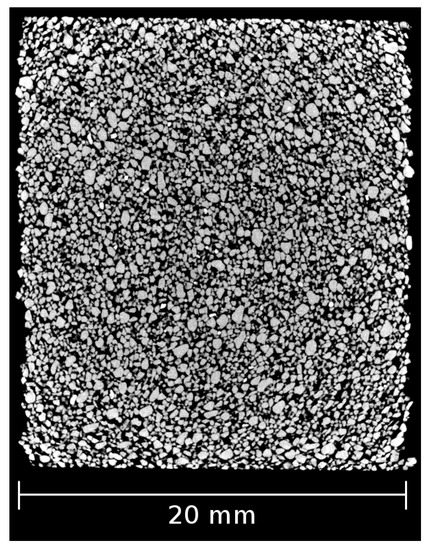

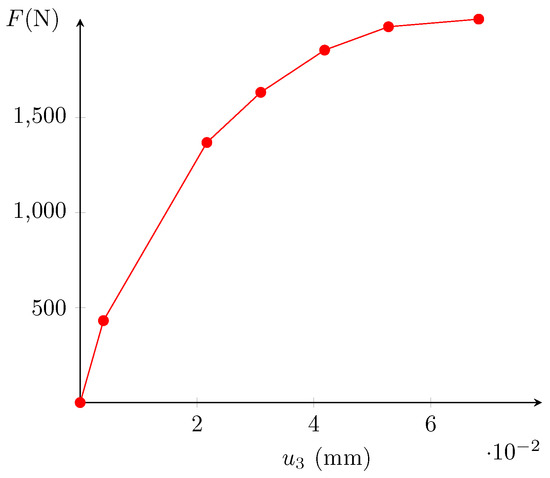

The sample is a parallelepiped (20.0 × 22.4 × 22.5 mm). The load was increased (with a constant displacement rate of 0.5 mm/min) and the displacement was stopped at several levels, noted . During these stopped displacement periods, the sample was scanned with a beam voltage of 80 kV and an intensity of 280 A. corresponds to the initial state, before the application of the load. Then, seven tomography scans were performed at increasingly compressed states. At , the sample is broken. The bottom and top extremities were excluded from the images because of the artifacts induced by the plates. A grayscale image of the tested cemented sand is displayed in Figure 3. During the test, the reaction force is measured at the top of the sample. It is plotted in Figure 4. The first six steps (non-broken sample) are situated before the peak of the loading curve.

Figure 3.

View of the sand.

Figure 4.

Measured top reaction.

4.2. DVC and Error Estimation

The displacement fields at these different stages were calculated using a digital volume correlation (DVC) software named Ufreckles, developed by LaMCoS (see [6]). A finite element continuum method is used to calculate the displacement field with a non-linear least-squares minimization method. The chosen element size is close to 0.5 mm. The final region of interest is 20.0 × 22.4 × 15.8 mm. The top of the sample has been excluded. The DVC is performed on a parallelepipedic mesh with around 470,000 degrees of freedom.

The DVC showed that the pre-peak displacement field is extremely non-homogeneous, as shown in Figure 5. The test revealed a complex and rich behavior of the tested material, with a non-homogeneous displacement field and pre-peak strain bifurcations. The experimental data are well suited for testing the ability of a given model to predict such phenomena.

Figure 5.

Magnitude of the experimental displacements at the pre-peak steps (deformed × 75).

4.3. Building the Reduced Experimental Basis

For a precise data pruning procedure, the experimental displacement and strain snapshot matrices are computed. Note that the studied test does not have many time steps () and that the experimental mesh is of moderate size. The DVC matrices and are, respectively, 474,405 × 7 and 1,774,080 × 14. If the truncated SVD is applied to these matrices, only six modes are extracted for the displacement and 13 for the strain. As the number of time steps is rather small, the use of empirical modes does not reduce the size of the experimental data, as stated before.

In other words, the experimental data are not suited for the dimensionality reduction. This method is efficient on matrices with numerous columns and rather few lines, whereas tomographic data tend to have the exact opposite: few columns (time steps) and a lot of lines (degrees of freedom).
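The storage argument can be checked with a short count of stored values. The sketch below is only an illustration: the function name `svd_storage` is hypothetical, the first call uses the displacement matrix size and the number of modes quoted above, and the second call uses a made-up number of 1000 time steps to show the regime in which a truncated SVD would pay off.

```python
def svd_storage(n_rows, n_cols, n_modes):
    """Count stored values for a raw matrix and for its truncated SVD factors."""
    raw = n_rows * n_cols
    # left modes (n_rows x n_modes) + singular values + right vectors (n_modes x n_cols)
    reduced = n_rows * n_modes + n_modes + n_modes * n_cols
    return raw, reduced

# Displacement snapshots quoted in the text: 474,405 rows, 7 columns, 6 modes.
raw, reduced = svd_storage(474_405, 7, 6)
ratio_few_steps = reduced / raw

# Hypothetical case with many time steps and the same number of modes.
raw_many, reduced_many = svd_storage(474_405, 1000, 6)
ratio_many_steps = reduced_many / raw_many

print(f"few time steps : SVD factors need {ratio_few_steps:.1%} of the raw storage")
print(f"many time steps: SVD factors need {ratio_many_steps:.1%} of the raw storage")
```

With only seven snapshots, the factors occupy almost as much memory as the raw matrix, which is consistent with the statement that the empirical modes alone do not reduce the size of the data.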

4.4. RED after DVC on the Specimen

During the test, the loading curve was measured at the top of the sample. To compare computed and measured reactions for model assessment, the elements at the top of the mesh are considered as a ZOI. In the remainder, is one layer of elements around .

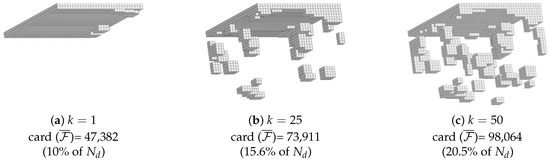

The RED was determined by varying the number k of rows selected in the k-SWIM algorithm. Its influence is assessed in Figure 6. For , the standard DEIM algorithm selects very few degrees of freedom, and most of the RED is actually the ZOI. This is due to the relatively low number of modes contained in the reduced basis (only 6). This apparent issue can be overcome by selecting more rows in the k-SWIM algorithm: when k increases, the number of selected degrees of freedom rises linearly. Note that the resulting REDs for k = 25 or k = 50 are discontinuous, as is usually the case with hyper-reduction methods. The newly selected zones are located in the sheared regions. For the sake of reproducibility, the binary files related to , and are available as supplementary files.

Figure 6.

Influence of k in the k-SWIM algorithm.
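To make the role of k more concrete, the following sketch implements a DEIM-like greedy selection that picks k rows per empirical mode. It is not the authors' Algorithm 1 (that one is provided as k_swim.py in the supplementary files). The function name `greedy_row_selection`, the least-squares residual update and the synthetic basis are assumptions made for illustration. With k = 1 the sketch reduces to the classical DEIM greedy selection.

```python
import numpy as np

def greedy_row_selection(V, k):
    """Select k rows per mode of V (n_rows x n_modes) with a DEIM-like greedy rule."""
    n_rows, n_modes = V.shape
    selected = []
    for j in range(n_modes):
        if j == 0:
            residual = V[:, 0].copy()
        else:
            # Fit mode j with the previous modes on the rows selected so far,
            # then look at what this fit fails to reproduce elsewhere.
            coeffs, *_ = np.linalg.lstsq(V[selected, :j], V[selected, j], rcond=None)
            residual = V[:, j] - V[:, :j] @ coeffs
        score = np.abs(residual)
        if selected:
            score[selected] = -1.0  # never pick the same row twice
        new_rows = np.argsort(score)[-k:]  # the k largest residual entries
        selected.extend(int(i) for i in new_rows)
    return np.array(sorted(selected))

# Synthetic orthonormal basis with 6 modes (the number of modes quoted in the text).
rng = np.random.default_rng(0)
V, _ = np.linalg.qr(rng.normal(size=(200, 6)))

rows_k1 = greedy_row_selection(V, k=1)
rows_k5 = greedy_row_selection(V, k=5)
print(len(rows_k1), len(rows_k5))
```

In this sketch the number of selected rows is k times the number of modes, which mirrors the linear growth of the selected degrees of freedom with k reported above.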

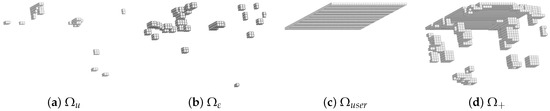

The final RED was arbitrarily selected with k = 25 (around 15.6% of the total domain). It is displayed in Figure 6b. The reduced domain construction is analyzed in Figure 7 where the subdomains , , and are displayed.

Figure 7.

Different subdomains for the selected RED for k = 25.

A summary of the different matrix sizes at each step is displayed in Table 1. As stated before, for this kind of data, PCA does not significantly reduce the memory usage. The hyper-reduction scheme saved up to 85% of the memory space for the illustrating example.

Table 1.

Size of the matrix stored at each step of the data pruning.

5. Assessing the Relevance of the Pruned Data via Finite Element Model Updating-HROM

In this section, the relevance of the pruned data for further usage is discussed. Experimental data extracted from computed tomography can serve various purposes. This paper focuses on their use for model calibration, illustrated with the in situ compressive test of a resin-bonded sand presented in the previous section. The main aim of this part is to show that the RED computed with a model-free procedure is relevant to assess or calibrate an arbitrary constitutive model.

The model used for the illustrating example is an elastoplastic constitutive model with m unknown parameters to calibrate. The procedure employed is a Finite Element Model Updating (FEMU) technique, coupled with a hybrid hyper-reduction method for the solution of approximate balance equations. The use of such a method is straightforward as the input data are already hyper-reduced. This approach is termed FEMU-HROM.

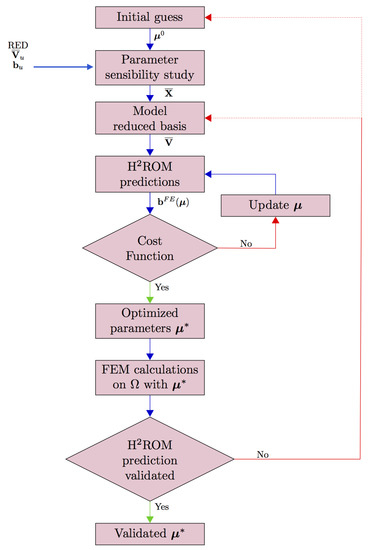

The FEMU-HROM method is summarized in the flowchart in Figure 8. It aims to find the parameter set that best replicates the experimental data available on the RED by using hyper-reduced equations. During the optimization procedure, the parameters are updated via hybrid hyper-reduced simulations. After a few adaptation steps, the optimality of the parameters is checked with a full FE simulation. If required, the reduced basis involved in the hyper-reduced simulation is updated.

Figure 8.

Flowchart of the FEMU-HROM.
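The control flow described above can be sketched as an outer loop that alternates reduced calibration and full verification. Everything in the block is a hypothetical stand-in: the function `femu_hrom`, its callable arguments, the tolerance and the linear toy model are assumptions used only to make the skeleton runnable. They do not reproduce the authors' solver.

```python
import numpy as np

def femu_hrom(p0, calibrate_reduced, predict_reduced, predict_full_on_red,
              enrich_basis, tol=0.01, max_outer=5):
    """Calibrate with reduced predictions, then verify against the full model."""
    p = np.asarray(p0, dtype=float)
    gap = np.inf
    n_outer = 0
    for n_outer in range(1, max_outer + 1):
        p = calibrate_reduced(p)            # optimization loop on the RED
        u_rom = predict_reduced(p)          # hyper-reduced prediction on the RED
        u_fe = predict_full_on_red(p)       # full FE prediction restricted to the RED
        gap = np.linalg.norm(u_rom - u_fe) / np.linalg.norm(u_fe)
        if gap <= tol:
            break                           # reduced prediction validated
        enrich_basis(u_fe)                  # otherwise update the reduced basis
    return p, gap, n_outer

# Toy linear "model": the reduced model is the full model restricted to a few rows.
rng = np.random.default_rng(0)
A = rng.normal(size=(40, 2))
rows = np.arange(0, 40, 4)
p_true = np.array([2.0, -1.0])
data = A[rows] @ p_true

p_opt, gap, n_outer = femu_hrom(
    p0=np.zeros(2),
    calibrate_reduced=lambda p: np.linalg.lstsq(A[rows], data, rcond=None)[0],
    predict_reduced=lambda p: A[rows] @ p,
    predict_full_on_red=lambda p: (A @ p)[rows],
    enrich_basis=lambda u: None,
)
print(p_opt, gap, n_outer)
```

In the toy case the reduced model is exact on the retained rows, so the loop stops after one verification. With a real hyper-reduced model, the enrichment branch is where new snapshots would update the basis.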

5.1. Constitutive Model MC-CASM

5.1.1. Presentation

The resin-bonded sand behavior is modeled with a relatively simple constitutive model based on the Cemented Clay and Sand Model (C-CASM). It extends the Clay And Sand Model developed by Yu [39] for unbonded sand and clay to bonded geomaterials, within the framework developed by Gens and Nova [40]. The C-CASM has been extensively described in [41]. The Modified Cemented Clay And Sand Model (MC-CASM) presented here modifies the C-CASM as follows:

- Addition of a damage law whose equation is phenomenological (based on cycled compressive tests).

- The hardening law of the bonding parameter b is different: A first hardening precedes the softening. It is supposed here that the polyurethane resin goes through a first hardening before breaking.

It is supposed here that the yield function was previously calibrated with standard laboratory tests. The calibration concerns the parameters of the damage and hardening laws, which can be more difficult to assess from macroscopic loading curves. In the remainder of the paper, the equivalent von Mises stress is denoted q and the mean pressure p. The MC-CASM equations are summarized hereafter.

5.1.2. Yield Function and Plastic Flow

The yield function, f, of the constitutive model is defined by:

where M, r, and n are constant parameters that control the shape of the yield function. is the preconsolidation pressure, i.e., the maximum yield pressure during an isotropic compressive test (see Roscoe et al. [42]). b is the bonding parameter modeling the enlargement of the yield surface due to intergranular bonding. is the tensile strength of the soil, defined by Gens and Nova [40] as:

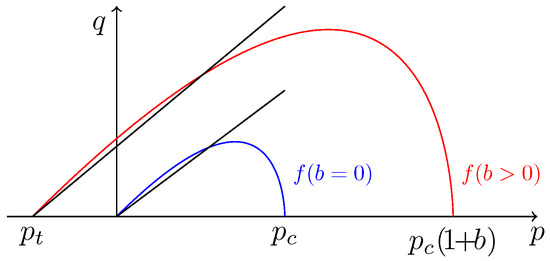

where is a constant parameter modeling the influence of the binder on the tensile strength. The yield function is supposed to be calibrated, which means that M, r, n, and the initial values of and b are known. The yield surfaces of the unbonded (blue) and bonded sand (red) are plotted in Figure 9.

Figure 9.

Yield surfaces in the plane.

5.1.3. Hardening and Damage Laws

The model has two hardening variables: the preconsolidation pressure and the bonding parameter b. The evolution of is directly controlled by the incremental plastic volumetric strain , whereas b relies on a plastic strain damage measure h:

The incremental value of h is defined as a weighting of the effects of the incremental plastic shear strain and the incremental plastic volumetric strain:

The model also includes a damage law whose formulation is purely phenomenological:

The hardening and damage laws provide 7 unknown parameters to calibrate.

5.2. Calibration Protocol by Using the Hybrid Hyper-Reduction Method

The FEMU-HROM is preceded by an off-line phase similar to an unsupervised machine learning phase. It consists in building the empirical reduced basis required to set up the hybrid hyper-reduced equations. It is similar to the first step of the data pruning method: a snapshot matrix is constructed from both simulations and experimental results (and not from experiments only).

The starting point of the off-line phase is to assess the parameter sensitivities of the model, starting from an initial guess . This guess can come from a previous calibration, or from a calibration using macroscopic force–displacement curves of standard tests, without predicting strain localization.

The off-line calculations are performed on the full domain and can thus be time-consuming. The boundary conditions are the experimental displacements taken from the computed tomography, imposed at the top and the bottom of the sample. The displacement field is not imposed inside the sample because one of the aims of the model is to correctly capture the strain localization appearing inside the sample during the test, under the constraint of balance equations. Imposing the displacement field inside the specimen would leave fewer balance equations to fulfill. m calculations are made on . Note that these calculations can be done in parallel. Only the displacement snapshot matrices are needed. A total of m + 1 independent calculations are performed:

- One initial calculation where , which gives ;

- m parameter sensitivity calculations where , which give for

Once done, these calculations are restricted to the reduced experimental domain . They are denoted for . All these results have to be aggregated in one snapshot matrix before the computation of the empirical modes . Instead of concatenating the matrices into one, a DEPOD method is used (see Schmidt et al. [36]). This approach has been validated in previous works on model calibration with hyper-reduction (see Ryckelynck and Missoum Benziane [43]). This allows capturing the effects of each parameter variation.

where is the Frobenius norm. The first term corresponds to the pruned experimental data. It is weighted by a user-defined parameter that gives more impact to the experimental fluctuations in the empirical modes. The finite element method tends to smooth these fluctuations, thus causing a certain loss of information.
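As a rough illustration of how a weight on the experimental term acts on the empirical modes, the sketch below assumes that the functional is a weighted sum of squared Frobenius projection errors. Under that assumption, the minimizing orthonormal basis is given by the SVD of the blocks stacked side by side, each scaled by the square root of its weight. The name `weighted_pod`, the argument `weight`, the relative tolerance and the random snapshot blocks are hypothetical. The exact DEPOD functional of the paper is not reproduced here.

```python
import numpy as np

def weighted_pod(U_exp, U_sims, weight, rel_tol=1e-8):
    """POD modes of experimental and simulated snapshots given on the same rows.

    `weight` multiplies the squared projection error of the experimental block,
    so the block itself is scaled by sqrt(weight) before the SVD.
    """
    blocks = [np.sqrt(weight) * np.asarray(U_exp)] + [np.asarray(U) for U in U_sims]
    Q = np.hstack(blocks)
    V, s, _ = np.linalg.svd(Q, full_matrices=False)
    keep = s > rel_tol * s[0]              # drop numerically null directions
    return V[:, keep], s[keep]

# Synthetic snapshot blocks restricted to 30 retained rows (illustrative only).
rng = np.random.default_rng(1)
U_exp = rng.normal(size=(30, 3))
U_sims = [rng.normal(size=(30, 2)) for _ in range(2)]

V_sim_only, s_sim_only = weighted_pod(U_exp, U_sims, weight=0.0)
V_balanced, s_balanced = weighted_pod(U_exp, U_sims, weight=1.0)
print(V_sim_only.shape, V_balanced.shape)
```

With a zero weight, the modes span the simulated snapshots only. With a unit weight, the experimental fluctuations contribute on an equal footing with the simulation data.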

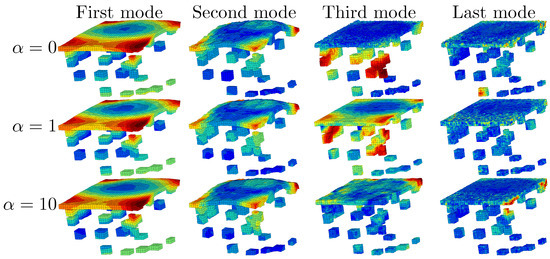

Empirical modes depending on the factor are displayed in Figure 10. For , that is to say without experimental data in the bulk, the empirical modes have strong fluctuations only at the top and the bottom of the specimen, where the experimental boundary conditions are imposed. This can be explained by the natural smoothing provided by the finite element method for rather elliptic equations. Increasing the weight of the experimental data naturally perturbs the displacement field inside the sample. Even for strongly perturbed modes (), the last empirical mode is roughly smooth, because the POD algorithm filters the data. In the sequel, we choose . The experimental data are then as important as the simulation data related to the FE balance equations.

Figure 10.

Magnitude of the displacement () for each DEPOD mode depending on .

Once is available, the hybrid reduced basis can be defined. Then, the experimental reduced coordinates are projected on the empirical reduced basis to be compared during the optimization loop:

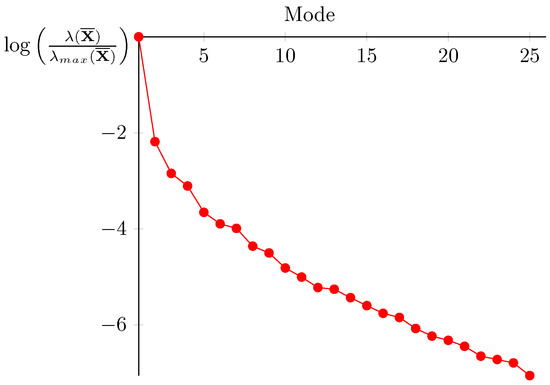

For the proposed example, the singular values decay quickly (see Figure 11 where is set to ). When this decay is not sufficient to provide a small number of empirical modes, we refer the reader to [44,45,46] for clustering the data in order to divide the time interval and construct reduced bases that are local in time.

Figure 11.

Singular values of verifying .
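The truncation of the basis and the projection of the experimental data onto it can be sketched as follows. The relative threshold on the singular values, the function names `truncated_basis` and `reduced_coordinates`, and the synthetic rank-3 snapshots are assumptions. The least-squares projection is used because a basis restricted to a subset of rows is in general no longer orthonormal.

```python
import numpy as np

def truncated_basis(Q, eps):
    """Keep the left singular vectors whose singular value exceeds eps * s_max."""
    V, s, _ = np.linalg.svd(Q, full_matrices=False)
    n_modes = int(np.count_nonzero(s > eps * s[0]))
    return V[:, :n_modes], s

def reduced_coordinates(V_rows, u_rows):
    """Least-squares coordinates of the data u_rows in the restricted basis V_rows."""
    gamma, *_ = np.linalg.lstsq(V_rows, u_rows, rcond=None)
    return gamma

# Synthetic rank-3 snapshot matrix (illustrative values only).
rng = np.random.default_rng(2)
Q = rng.normal(size=(50, 3)) @ rng.normal(size=(3, 8))
V, s = truncated_basis(Q, eps=1e-8)

rows = np.arange(0, 50, 2)                 # hypothetical retained rows
gamma_true = np.array([1.0, -2.0, 0.5])
gamma = reduced_coordinates(V[rows], V[rows] @ gamma_true)
print(V.shape[1], gamma)
```

A fast decay of the singular values translates into a small number of retained modes, and therefore into few reduced coordinates to compare in the optimization loop.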

5.3. Discussion on Dirichlet Boundary Conditions

After the data pruning, experimental data are available in all . When displacements are constrained to follow the experimental data, we lose FE balance equations. The following theorem helps to discuss the Dirichlet boundary conditions.

Theorem 5.

If , ; if the experimental data fulfill the FE equations on with the following additional Dirichlet boundary conditions:

and if the solutions of both the hybrid hyper-reduced equations and the FE equations on are unique, then the solution of the hybrid hyper-reduced equation is the exact projection of the experimental data on the empirical reduced basis , with .

Proof.

If the solution of the FE equations in is unique with Dirichlet boundary conditions on equal to , then this solution is . If fulfills the FE equations on , with the additional Dirichlet boundary conditions, then:

and

If and , then

with

Then,

with . Thus, is the unique solution of the hybrid hyper-reduced equations, and the exact projection of the restrained FE solution. □

The last theorem does not imply that imposing as a boundary condition on the degrees of freedom in is the best way to fulfill the FE balance equations on the full mesh. In fact, with the additional boundary conditions on , the maximum number of available FE equations is . Theorem 4 means that if the empirical reduced basis is exact, then all the FE balance equations are fulfilled in . In a sense, the proposed calibration protocol places more trust in the FE balance equations than in the experimental data. Accurate FE balance equations can be obtained with a suitable mesh of , whereas noise is always present in experimental data.

5.4. Parameters Updating

In the optimization loop (Figure 8), a given set of parameters is assessed. The HROM calculations provide the reduced coordinates associated with the empirical basis previously determined on the RED denoted . The top reaction is also calculated as the average axial stress in the ZOI.

In the example, the cost function to be minimized evaluates two scales of error: the microscale error between experimental and computed reduced coordinates, and the macroscale error between the measured and computed top reactions. These error functions are, respectively, denoted and .

The microscale error is defined as:

The choice of the norm is user-dependent. The inverse covariance matrix of the displacement is the best norm for a Gaussian noise according to [47,48] for a Bayesian framework. However, in the present study, to keep the treated problem rather simple, a 2-norm has been chosen. The macroscale error is defined as:

Here, is the top surface of the ZOI, where the experimental load was measured and where the experimental displacements are imposed as Dirichlet boundary conditions. The experimental load measurements are assumed to be uncorrelated and their variance is denoted by . In a Bayesian framework, for a Gaussian noise corrupting the load measurements [23], the previous equation can be written as:

For the optimization loop, the final objective function is a weighted sum of the two previous sub-objective functions:

where and are the weights. They can be chosen to balance the two cost functions or to favor one scale over the other. In the illustrating example, the cost function is balanced. A classical Levenberg–Marquardt algorithm is employed for the minimization of the error function and the update of the parameter vector .
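A two-scale objective of this kind can be minimized with the Levenberg–Marquardt option of `scipy.optimize.least_squares`. The sketch below is a toy illustration: `toy_model`, the normalization of each error by the norm of the corresponding data, the squared form of the two errors and the weight names `w_micro` and `w_macro` are assumptions, not the expressions of the paper.

```python
import numpy as np
from scipy.optimize import least_squares

def residuals(p, model, gamma_exp, f_exp, w_micro, w_macro):
    """Stacked residuals whose squared norm is w_micro * e_micro**2 + w_macro * e_macro**2."""
    gamma_sim, f_sim = model(p)
    r_micro = (gamma_sim - gamma_exp).ravel() / np.linalg.norm(gamma_exp)
    r_macro = (f_sim - f_exp) / np.linalg.norm(f_exp)
    return np.concatenate([np.sqrt(w_micro) * r_micro, np.sqrt(w_macro) * r_macro])

def toy_model(p):
    """Hypothetical stand-in for an HROM run: reduced coordinates and top reaction."""
    t = np.linspace(0.1, 1.0, 6)                             # six loading steps
    gamma = np.outer([1.0, 0.5], p[0] * t + p[1] * t**2)     # 2 coordinates x 6 steps
    force = p[0] * t * np.exp(-p[1] * t)
    return gamma, force

# Synthetic "measurements" generated with known parameters.
p_true = np.array([2.0, 0.7])
gamma_exp, f_exp = toy_model(p_true)

fit = least_squares(residuals, x0=[1.0, 0.1], method="lm",
                    args=(toy_model, gamma_exp, f_exp, 0.5, 0.5))
print(fit.x)
```

Stacking the scaled residuals lets the solver see both scales at once. Changing the two weights shifts the balance between the reduced coordinates and the reaction curve.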

5.5. Model Calibration and FEM Validation

The optimization loop took 53 iterations. The speed-up of the HROM predictions with respect to the FEM calculations is around 70. Moreover, the HROM predictions needed only around 3% of the memory required by the FEM calculations. The HROM predictions also converge much more easily than the FEM calculations: the problem simulated in the optimization loop is displacement-driven, and using the reduced basis to predict the displacement field greatly facilitates convergence. This also explains the large speed-up, which does not come only from the reduction of the integration domain.

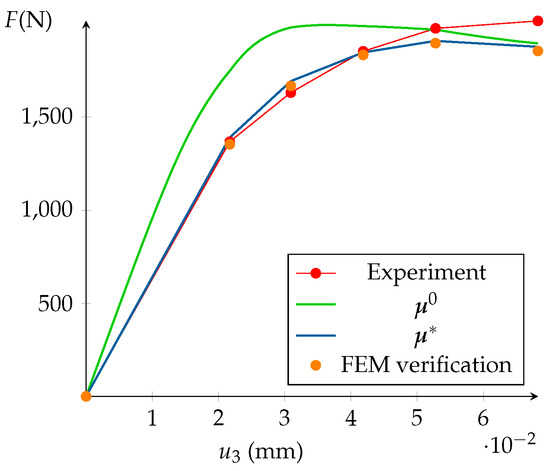

Figure 12 displays the experimental and the computed top reactions (initial and optimized). At the end of the optimization loop, the relevance of the HROM prediction must be assessed. The FEMU-HROM depends on the initial guess . This input determines the relevance of the reduced basis of the model after the parameter sensitivity study and the DEPOD analysis. When the model is updated, the parameter set may move too far from the initial guess. As a consequence, the empirical reduced basis may no longer be accurate and the HROM predictions may not be admissible; that is, the discrepancy between hyper-reduced and finite element calculations may not be negligible. This is why the optimized parameter set must be validated with FEM calculations on the full domain . It is worth noting that, if the experimental data are included in the DEPOD, the final HROM prediction should be close to the experiments.

Figure 12.

Result of the HROM optimization.

In a similar manner to the optimization loop, an error function between both calculations can be defined focusing on the microscale (displacement error) and macroscale (top reactions differences).

Concerning the microscale, the discrepancy is only computed in the RED, as HROM predictions are only made on this domain and cannot be reconstructed in the full domain with this particular approach. The microscale discrepancy is estimated by :

In the same manner, the macroscale discrepancy measures the norm of the difference between the two predictions of the load applied to the specimen. This indicator is denoted by . The microscale and macroscale errors should not exceed a few percent of the FEM values. In Figure 12, the FEM top reaction is plotted in orange. Its value is extremely close to the one computed with the HROM: the error is around 1% at each step.

This final verification is purely numerical. If the HROM predictions are validated, it is advisable to analyze the full-field FEM calculation in more depth.

In the case of notable differences between HROM predictions and FEM calculations, or between FEM calculations and experiments, the FEMU-HROM is not validated. Two solutions are possible to overcome this issue:

- Perform the whole parameter sensitivity study again with .

- Concatenate the previously determined matrix from Equation (42) with and perform a new truncated SVD to determine ultimately an enriched reduced basis . No new FEM calculations are needed.

The first solution should be used in the case of strong differences between HROM predictions and FEM calculations. The second option “only” costs a FEM calculation. It is also possible to modify the optimization loop to include regular FEM-HROM comparisons and enrich incrementally.

6. Discussion

6.1. Limitations of the Pruning Procedure

The present paper focused on DVC data sets and not on the images themselves. Since each element covers several voxels, the images are known to be particularly heavy and perhaps more problematic than the DVC data. The pruning procedure assumes that they can be deleted, which can be an issue in practice. For instance, a new DVC algorithm could improve the determination of the displacement field (for example, for complex problems involving cracks).

The images could be pruned too, in the sense that only the pixels of the images inside the determined RED would be kept. However, we recommend storing only the reduced DVC data when data storage is an issue.

In the case of non-homogeneous materials, the data concerning the inhomogeneities outside the RED must be saved as well.

6.2. About the Reconstruction of Data outside the RED

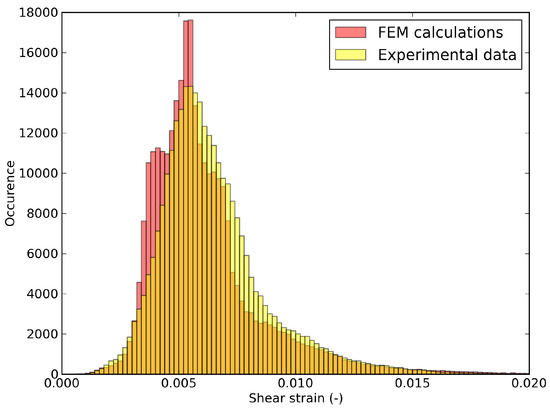

Because of the proposed data pruning, experimental data outside the RED are no longer available. However, the finite element verification gives access to an estimate of these data via the finite element model and the optimal parameters . For instance, the shear strain distribution can be estimated by the finite element model with the optimal parameter values. In the illustrating example, the computed and measured shear strain distributions, over the integration points in , were compared. The analysis is summarized in the histograms displayed in Figure 13 for the last pre-peak step. The discrepancy between the computed (via FE verification) and measured distributions was considered satisfactory here.

Figure 13.

Probability distribution of shear strain at the last pre-peak step in the whole domain , comparing FEM calculation (verification step) and experimental data.

6.3. Shear Strain Distributions in the RED

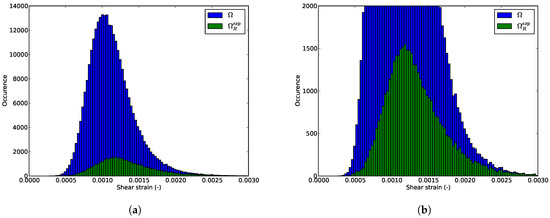

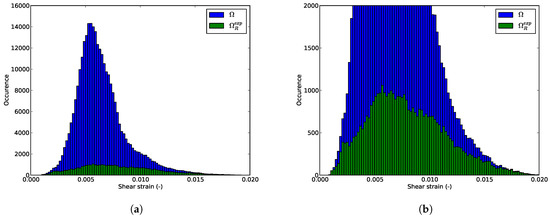

We can also compare the shear strain distributions inside the whole domain and inside the RED for the illustrating example. It would be preferable for the pruning procedure to store in the RED the most diverse configurations. The shear strain distributions in the whole domain and in the RED may differ (different mean values, for example). Figure 14a and Figure 15a present the shear strain distributions at the first and last pre-peak steps. The statistical distribution of the shear strain inside the RED is not the same as the one inside the full domain. Nevertheless, zooms on both histograms in Figure 14b and Figure 15b reveal that the extreme values of the shear strain are preserved: the RED contains nearly all the elements where the shear is maximal. Even if the proposed procedure is model-free, it is intimately linked with the mechanics of solids: it preferentially stores the data that are mechanically more relevant. For strain localization phenomena, these are the most sheared zones. The proposed method is not statistical: it actually induces a sampling bias.

Figure 14.

Shear strain distributions (a) in the whole domain and (b) in the RED at the first step.

Figure 15.

Shear strain distributions (a) in the whole domain and (b) in the RED at the last step.
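The sampling bias mentioned above can be illustrated on synthetic numbers. In the sketch below, the lognormal "shear strain" values are made up, and the subset is simply the 15.6% most sheared points, a crude stand-in for a RED that favors localization zones. The actual RED is produced by the k-SWIM selection, not by a quantile cut.

```python
import numpy as np

# Synthetic positive "shear strain" values; not the data of the paper.
rng = np.random.default_rng(0)
shear_full = rng.lognormal(mean=-6.0, sigma=0.8, size=100_000)

# Keep the 15.6% most sheared points as a biased subset.
n_keep = int(0.156 * shear_full.size)
shear_subset = np.sort(shear_full)[-n_keep:]

mean_full, mean_subset = shear_full.mean(), shear_subset.mean()
max_full, max_subset = shear_full.max(), shear_subset.max()

print(f"mean: full {mean_full:.2e}, subset {mean_subset:.2e}")
print(f"max : full {max_full:.2e}, subset {max_subset:.2e}")
```

The subset keeps the extreme values but shifts the mean, which is the behavior expected from a selection that favors the most sheared zones rather than a statistically representative sample.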

7. Conclusions

The present paper proposes a data pruning procedure for DVC data that is model free and versatile. The k-SWIM algorithm, through its parameter k, enables the user to define the size of the stored data.

The resultant data can still be used afterwards, for instance for calibration. The use of hybrid hyper-reduction is particularly suitable for the pruned data as it enables a non-negligible reduction of memory and time costs in the FEMU optimization loop. The FEMU-HROM method is thus a new way to use massive DVC data for deeper mechanical studies.

Supplementary Materials

The following are available online at https://www.mdpi.com/2297-8747/24/1/18/s1 as supplementary files to make the output of Algorithm 1 reproducible. The ASCII file Node-iXYZ.txt contains the node indices and the related coordinates. The files Vu.npy, bu.npy and Pu_reference.npy, are binary files related to , and , respectively. They have been generated by using the NumPy instruction “save”. The ASCII file k_swim.py contains Algorithm 1 written with SciPy instructions. In the ASCII file run_kswim.py, this algorithm is applied to the data .

Author Contributions

Conceptualization, D.R.; methodology, D.R. and W.H.; experimental data, C.M.; data analysis, W.H.; writing, D.R. and W.H.

Funding

This research was funded by Agence Nationale de la Recherche, France, grant number ANR-14-CE07-0038-03 FIMALIPO.

Acknowledgments

The authors would like to acknowledge the Agence Nationale de la Recherche for their financial support for the FIMALIPO project.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Chu, T.; Ranson, W.; Sutton, M.; Peters, W. Application of digital-image-correlation techniques to experimental mechanics. Exp. Mech. 1985, 25, 232–244.

2. Bay, B.; Smith, T.; Fyhrie, D.; Saad, M. Digital volume correlation: Three-dimensional strain mapping using X-ray tomography. Exp. Mech. 1999, 39, 217–226.

3. Van Ooijen, P.M.A.; Broekema, A.; Oudkerk, M. Use of a Thin-Section Archive and Enterprise 3-Dimensional Software for Long-Term Storage of Thin-Slice CT Datasets—A Reviewers’ Response. J. Digit. Imag. 2008, 21, 188–192.

4. Pan, D. A tutorial on MPEG/audio compression. IEEE MultiMed. 1995, 2, 60–74.

5. Cioaca, A.; Sandu, A. Low-rank approximations for computing observation impact in 4D-Var data assimilation. Comput. Math. Appl. 2014, 67, 2112–2126.

6. Réthoré, J.; Roux, S.; Hild, F. From pictures to extended finite elements: Extended digital image correlation (X-DIC). C. R. Méc. 2007, 335, 131–137.

7. Rojanaarpa, T.; Kataeva, I. Density-Based Data Pruning Method for Deep Reinforcement Learning. In Proceedings of the 2016 15th IEEE International Conference on Machine Learning and Applications (ICMLA), Anaheim, CA, USA, 18–20 December 2016; pp. 266–271.

8. Hu, Y.; Chen, H.; Li, G.; Li, H.; Xu, R.; Li, J. A statistical training data cleaning strategy for the PCA-based chiller sensor fault detection, diagnosis and data reconstruction method. Energy Build. 2016, 112, 270–278.

9. Hong, Y.; Kwong, S.; Chang, Y.; Ren, Q. Unsupervised data pruning for clustering of noisy data. Knowl. Based Syst. 2008, 21, 612–616.

10. Kavanagh, K.T.; Clough, R.W. Finite element applications in the characterization of elastic solids. Int. J. Solids Struct. 1971, 7, 11–23.

11. Kavanagh, K.T. Extension of classical experimental techniques for characterizing composite-material behavior. Exp. Mech. 1972, 12, 50–56.

12. Ienny, P.; Caro-Bretelle, A.S.; Pagnacco, E. Identification from measurements of mechanical fields by finite element model updating strategies. Eur. J. Comput. Mech. 2009, 18, 353–376.

13. Lecompte, D.; Smits, A.; Sol, H.; Vantomme, J.; Hemelrijck, D.V. Mixed numerical–experimental technique for orthotropic parameter identification using biaxial tensile tests on cruciform specimens. Int. J. Solids Struct. 2007, 44, 1643–1656.

14. Molimard, J.; Le Riche, R.; Vautrin, A.; Lee, J.R. Identification of the four orthotropic plate stiffnesses using a single open-hole tensile test. Exp. Mech. 2005, 45, 404–411.

15. Meijer, R.; Douven, L.F.A.; Oomens, C.W.J. Characterisation of Anisotropic and Non-linear Behaviour of Human Skin In Vivo. Comput. Methods Biomech. Biomed. Eng. 1999, 2, 13–27.

16. Bruno, L. Mechanical characterization of composite materials by optical techniques: A review. Opt. Lasers Eng. 2018, 104, 192–203.

17. Mahnken, R.; Stein, E. A unified approach for parameter identification of inelastic material models in the frame of the finite element method. Comput. Methods Appl. Mech. Eng. 1996, 136, 225–258.

18. Forestier, R.; Massoni, E.; Chastel, Y. Estimation of constitutive parameters using an inverse method coupled to a 3D finite element software. J. Mater. Process. Technol. 2002, 125–126, 594–601.

19. Giton, M.; Caro-Bretelle, A.S.; Ienny, P. Hyperelastic Behaviour Identification by a Forward Problem Resolution: Application to a Tear Test of a Silicone-Rubber. Strain 2006, 42, 291–297.

20. Cugnoni, J.; Botsis, J.; Janczak-Rusch, J. Size and Constraining Effects in Lead-Free Solder Joints. Adv. Eng. Mater. 2006, 8, 184–191.

21. Latourte, F.; Chrysochoos, A.; Pagano, S.; Wattrisse, B. Elastoplastic behavior identification for heterogeneous loadings and materials. Exp. Mech. 2008, 48, 435–449.

22. Padmanabhan, S.; Hubner, J.P.; Kumar, A.V.; Ifju, P.G. Load and Boundary Condition Calibration Using Full-field Strain Measurement. Exp. Mech. 2006, 46, 569–578.

23. Neggers, J.; Allix, O.; Hild, F.; Roux, S. Big Data in Experimental Mechanics and Model Order Reduction: Today’s Challenges and Tomorrow’s Opportunities. Arch. Comput. Methods Eng. 2017.

24. Cugnoni, J.; Gmür, T.; Schorderet, A. Inverse method based on modal analysis for characterizing the constitutive properties of thick composite plates. Comput. Struct. 2007, 85, 1310–1320.

25. Aubry, N.; Holmes, P.; Lumley, J.L.; Stone, E. The dynamics of coherent structures in the wall region of a turbulent boundary layer. J. Fluid Mech. 1988, 192, 115–173.

26. Passieux, J.C.; Perie, J.N. High resolution digital image correlation using proper generalized decomposition: PGD-DIC. Int. J. Numer. Methods Eng. 2012, 92, 531–550.

27. Ryckelynck, D. A priori hyperreduction method: An adaptive approach. J. Comput. Phys. 2005, 202, 346–366.

28. Hernandez, J.A.; Caicedo, M.A.; Ferrer, A. Dimensional hyper-reduction of nonlinear finite element models via empirical cubature. Comput. Methods Appl. Mech. Eng. 2017, 313, 687–722.

29. Baiges, J.; Codina, R.; Idelson, S. A domain decomposition strategy for reduced order models. Application to the incompressible Navier-Stokes equations. Comput. Methods Appl. Mech. Eng. 2013, 267, 23–42.

30. Ryckelynck, D.; Lampoh, K.; Quilici, S. Hyper-reduced predictions for lifetime assessment of elasto-plastic structures. Meccanica 2015.

31. Liu, J.; Musialski, P.; Wonka, P.; Ye, J. Tensor Completion for Estimating Missing Values in Visual Data. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 208–220.

32. Shang, Q.; Yang, Z.; Gao, S.; Tan, D. An Imputation Method for Missing Traffic Data Based on FCM Optimized by PSO-SVR. J. Adv. Transp. 2018.

33. Chaturantabut, S.; Sorensen, D.C. Nonlinear Model Reduction via Discrete Empirical Interpolation. J. Sci. Comput. 2010, 32, 2737–2764.

34. Chaturantabut, S.; Sorensen, D.C. A state space error estimate for POD-DEIM nonlinear model reduction. J. Numer. Anal. 2012, 50, 46–63.

35. Fauque, J.; Ramière, I.; Ryckelynck, D. Hybrid hyper-reduced modeling for contact mechanics problems. Int. J. Numer. Methods Eng. 2018, 115, 117–139.

36. Schmidt, A.; Potschka, A.; Koerkel, S.; Bock, H.G. Derivative-Extended POD Reduced-Order Modeling for Parameter Estimation. J. Sci. Comput. 2013, 35, A2696–A2717.

37. Jomaa, G.; Goblet, P.; Coquelet, C.; Morlot, V. Kinetic modeling of polyurethane pyrolysis using non-isothermal thermogravimetric analysis. Thermochim. Acta 2015, 612, 10–18.

38. Bargaoui, H.; Azzouz, F.; Thibault, D.; Cailletaud, G. Thermomechanical behavior of resin bonded foundry sand cores during casting. J. Mater. Process. Technol. 2017, 246, 30–41.

39. Yu, H.S. CASM: A unified state parameter model for clay and sand. Int. J. Numer. Anal. Methods Geomechan. 1998, 22, 621–653.

40. Gens, A.; Nova, R. Conceptual bases for a constitutive model for bonded soils and weak rocks. Geotech. Eng. Hard Soils Soft Rocks 1993, 1, 485–494.

41. Rios, S.; Ciantia, M.; Gonzalez, N.; Arroyo, M.; Viana da Fonseca, A. Simplifying calibration of bonded elasto-plastic models. Comput. Geotech. 2016, 73, 100–108.

42. Roscoe, K.; Schofield, A.; Wroth, C. On the yielding of soils. Geotechnique 1958, 8, 22–52.

43. Ryckelynck, D.; Missoum Benziane, D. Hyper-reduction framework for model calibration in plasticity-induced fatigue. Adv. Model. Simul. Eng. Sci. 2016, 3, 15.

44. Ghavamian, F.; Tiso, P.; Simone, A. POD-DEIM model order reduction for strain-softening viscoplasticity. Comput. Methods Appl. Mech. Eng. 2017, 317, 458–479.

45. Peherstorfer, B.; Butnaru, D.; Willcox, K.; Bungartz, H.J. Localized Discrete Empirical Interpolation Method. J. Sci. Comput. 2014, 36, 168–192.

46. Haasdonk, B.; Dihlmann, M.; Ohlberger, M. A training set and multiple bases generation approach for parameterized model reduction based on adaptive grids in parameter space. Math. Comput. Model. Dyn. Syst. 2011, 17, 423–442.

47. Tarantola, A. Inverse Problem Theory: Methods For Data Fitting and Model Parameter Estimation; Elsevier: Amsterdam, The Netherlands, 1987.

48. Kaipio, J.; Somersalo, E. Statistical Inverse Problems: Discretization, Model Reduction and Inverse Crimes. J. Comput. Appl. Math. 2007, 198, 493–504.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).