Multi-Stage Meta-Learning for Few-Shot with Lie Group Network Constraint

Abstract

1. Introduction

- (1) Reducing the number of parameters that need to be updated during the meta-train and meta-test phases by using a deeper network structure in which the meta-learner’s low-level layers are pretrained;

- (2) Improving the utilization of training data within a limited number of update steps by introducing a multi-stage optimization mechanism in which the model sets checkpoints during the middle and late stages of adaptation;

- (3) Constraining the network to the Stiefel manifold so that the meta-learner can perform more stable gradient descent in a limited number of steps and accelerate the adaptation process.

2. Related Work

2.1. MAML-Based Meta-Learning Models

2.2. Gradient Descent Optimization in Meta-Learning Models

3. Methods

3.1. Pretrained by Large Scale Data

| Algorithm 1 Pretrain |
|---|
| Require: on large dataset |
| Require: learning rate and number of training epochs |

3.2. Multi-Stage Optimization

3.3. Lie Group Network Constrained
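
The introduction states that the network is constrained to the Stiefel manifold, that is, to weight matrices $W \in \mathbb{R}^{n \times p}$ with $W^\top W = I_p$. As a rough illustration of what one constrained descent step can look like, the sketch below projects the Euclidean gradient onto the tangent space at $W$ and then retracts the result back onto the manifold with Gram-Schmidt orthonormalization. This is a common textbook construction and is an assumption here, not necessarily the update used by the authors; the function names (`stiefel_step`, `orthonormalize_columns`, `orthogonality_error`) and the gradient values are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]


def orthonormalize_columns(w):
    """Modified Gram-Schmidt: orthonormal columns spanning the columns of w."""
    basis = []
    for v in transpose(w):
        v = list(v)
        for q in basis:
            proj = sum(vi * qi for vi, qi in zip(v, q))
            v = [vi - proj * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        if norm < 1e-12:
            raise ValueError("columns are linearly dependent")
        basis.append([vi / norm for vi in v])
    return transpose(basis)


def stiefel_step(w, grad, lr):
    """One descent step that keeps w (n x p) on the Stiefel manifold.

    Assumed construction, not taken from the paper:
    1. tangent projection: xi = grad - w * sym(w^T grad)
    2. retraction: orthonormalize the columns of w - lr * xi
    """
    wtg = matmul(transpose(w), grad)
    p = len(wtg)
    sym = [[0.5 * (wtg[i][j] + wtg[j][i]) for j in range(p)] for i in range(p)]
    w_sym = matmul(w, sym)
    moved = [[w[i][j] - lr * (grad[i][j] - w_sym[i][j]) for j in range(p)]
             for i in range(len(w))]
    return orthonormalize_columns(moved)


def orthogonality_error(w):
    """Largest absolute entry of w^T w - I."""
    gram = matmul(transpose(w), w)
    return max(abs(gram[i][j] - (1.0 if i == j else 0.0))
               for i in range(len(gram)) for j in range(len(gram)))


# Start from a point on the manifold (3 x 2 matrix with orthonormal columns).
w0 = orthonormalize_columns([[1.0, 2.0], [0.0, 1.0], [1.0, 0.0]])
g = [[0.3, -0.1], [0.2, 0.5], [-0.4, 0.1]]  # made-up gradient values
w1 = stiefel_step(w0, g, lr=0.1)
print(orthogonality_error(w1))  # stays close to zero after the step
```

Because the retraction re-orthonormalizes after every update, the constraint holds to floating-point precision regardless of the step size, which is one way to read the "more stable gradient descent in a limited number of steps" claim from the introduction.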

| Algorithm 2 Meta-Train |
|---|
| Require: pretrained feature extractor parameters |
| Require: learning rates for inner loop and outer loop |
| Require: tasks from training data |

| Algorithm 3 Inner Loop |
|---|
| Require: a task in current batch |
| Require: learning rates of gradient descent |
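
The algorithm bodies are not reproduced above, so the following is only a hedged sketch of how an inner loop with multi-stage checkpoints could be organized, based on the description in the introduction (checkpoints set during the middle and late stages of adaptation). It uses a toy scalar model $y = w x$ with a mean squared error loss. The checkpoint steps, the averaging of checkpoint gradients, and the first-order outer update are all assumptions made for illustration; `inner_loop`, `meta_step`, and `mse_and_grad` are hypothetical names.

```python
def mse_and_grad(w, data):
    """Mean squared error of y = w * x and its derivative with respect to w."""
    n = len(data)
    loss = sum((w * x - y) ** 2 for x, y in data) / n
    grad = sum(2.0 * (w * x - y) * x for x, y in data) / n
    return loss, grad


def inner_loop(w_init, support, query, inner_lr, num_steps, checkpoints):
    """Adapt w on the support set and evaluate on the query set at each checkpoint.

    Returns the adapted weight and a list of (step, query_loss, query_grad).
    """
    w = w_init
    stages = []
    for step in range(1, num_steps + 1):
        _, g = mse_and_grad(w, support)
        w -= inner_lr * g
        if step in checkpoints:
            q_loss, q_grad = mse_and_grad(w, query)
            stages.append((step, q_loss, q_grad))
    return w, stages


def meta_step(w_init, tasks, inner_lr, outer_lr, num_steps, checkpoints):
    """One outer-loop update.

    Assumption: a first-order approximation in which the query gradients at
    the checkpoints are averaged and applied directly to the initial weight.
    """
    total = 0.0
    for support, query in tasks:
        _, stages = inner_loop(w_init, support, query,
                               inner_lr, num_steps, checkpoints)
        total += sum(g for _, _, g in stages) / len(stages)
    return w_init - outer_lr * total / len(tasks)


# Two toy tasks with true slopes 2 and 3; each is a (support, query) pair.
tasks = [
    ([(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]),
    ([(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)]),
]
checkpoints = (3, 5)  # a middle and a late step out of five (assumed values)

_, stages = inner_loop(0.0, *tasks[0], inner_lr=0.05, num_steps=5,
                       checkpoints=checkpoints)
for step, q_loss, _ in stages:
    print(f"step {step}: query loss {q_loss:.4f}")

w_meta = meta_step(0.0, tasks, inner_lr=0.05, outer_lr=0.01,
                   num_steps=5, checkpoints=checkpoints)
print(f"updated initial weight: {w_meta:.4f}")
```

Evaluating the query loss at several points of the adaptation, rather than only after the final step, lets every task contribute more than one training signal per outer update, which matches the stated goal of using the training data more fully within a limited number of steps.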

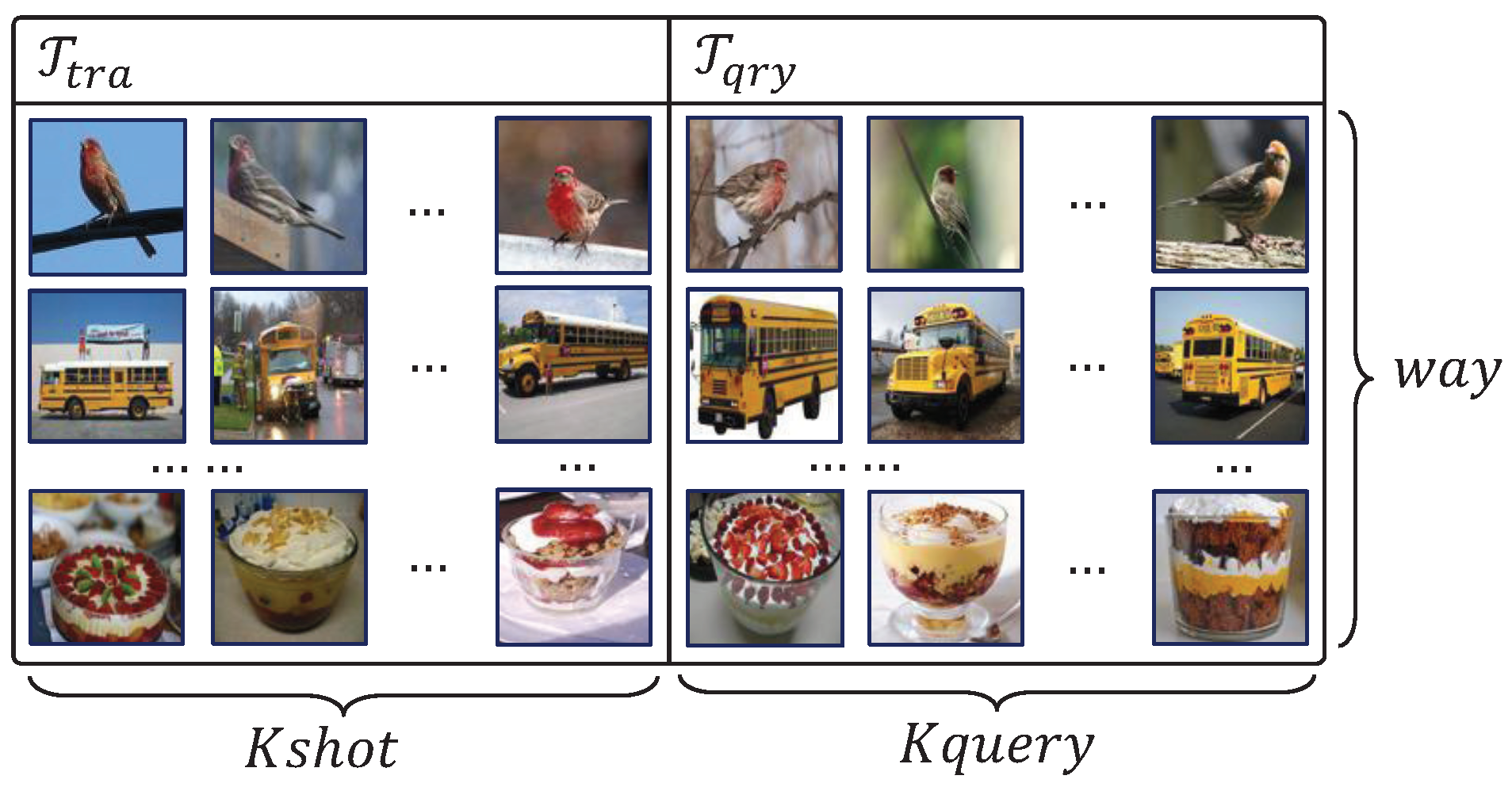

4. Experiments

4.1. Dataset

4.2. Model Detail

4.2.1. Pretrain Phase

4.2.2. Meta Train Phase

5. Results

6. Discussion

7. Conclusions

8. Materials and Methods

8.1. Computational Requirements

8.2. Program Availability

8.3. Running Experiment

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

- Glass, G.V. Primary, secondary, and meta-analysis of research. Educ. Res. 1976, 5, 3–8.

- Powell, G. A Meta-Analysis of the Effects of “Imposed” and “Induced” Imagery Upon Word Recall. 1980. Available online: https://eric.ed.gov/?id=ED199644 (accessed on 29 May 2020).

- Vinyals, O.; Blundell, C.; Lillicrap, T.; Wierstra, D. Matching networks for one shot learning. In Proceedings of the 30th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 4–9 December 2016; pp. 3630–3638.

- Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208.

- Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 4077–4087.

- Oreshkin, B.; López, P.R.; Lacoste, A. Tadam: Task dependent adaptive metric for improved few-shot learning. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; pp. 721–731.

- Mishra, N.; Rohaninejad, M.; Chen, X.; Abbeel, P. A simple neural attentive meta-learner. arXiv 2017, arXiv:1707.03141.

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

- Nichol, A.; Achiam, J.; Schulman, J. On first-order meta-learning algorithms. arXiv 2018, arXiv:1803.02999.

- Li, Z.; Zhou, F.; Chen, F.; Li, H. Meta-SGD: Learning to learn quickly for few-shot learning. arXiv 2017, arXiv:1707.09835.

- Gupta, A.; Eysenbach, B.; Finn, C.; Levine, S. Unsupervised meta-learning for reinforcement learning. arXiv 2018, arXiv:1806.04640.

- Nagabandi, A.; Clavera, I.; Liu, S.; Fearing, R.S.; Abbeel, P.; Levine, S.; Finn, C. Learning to adapt in dynamic, real-world environments through meta-reinforcement learning. arXiv 2018, arXiv:1803.11347.

- Al-Shedivat, M.; Bansal, T.; Burda, Y.; Sutskever, I.; Mordatch, I.; Abbeel, P. Continuous adaptation via meta-learning in nonstationary and competitive environments. arXiv 2017, arXiv:1710.03641.

- Kim, T.; Yoon, J.; Dia, O.; Kim, S.; Bengio, Y.; Ahn, S. Bayesian model-agnostic meta-learning. arXiv 2018, arXiv:1806.03836.

- Yoon, J.; Kim, T.; Dia, O.; Kim, S.; Bengio, Y.; Ahn, S. Bayesian model-agnostic meta-learning. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; pp. 7332–7342.

- Finn, C.; Xu, K.; Levine, S. Probabilistic model-agnostic meta-learning. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; pp. 9516–9527.

- Ravi, S.; Larochelle, H. Optimization as a Model for Few-Shot Learning. 2016. Available online: https://openreview.net/forum?id=rJY0-Kcll (accessed on 29 May 2020).

- Jamal, M.A.; Qi, G.J. Task Agnostic Meta-Learning for Few-Shot Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 11719–11727.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2472–2481.

- Qiao, S.; Liu, C.; Shen, W.; Yuille, A.L. Few-shot image recognition by predicting parameters from activations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7229–7238.

- Arnold, S.M.; Iqbal, S.; Sha, F. Decoupling Adaptation from Modeling with Meta-Optimizers for Meta Learning. arXiv 2019, arXiv:1910.13603.

- Rusu, A.A.; Rao, D.; Sygnowski, J.; Vinyals, O.; Pascanu, R.; Osindero, S.; Hadsell, R. Meta-learning with latent embedding optimization. arXiv 2018, arXiv:1807.05960.

- Sun, Q.; Liu, Y.; Chua, T.S.; Schiele, B. Meta-transfer learning for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 403–412.

- Cai, D.; He, X.; Han, J.; Zhang, H.J. Orthogonal laplacianfaces for face recognition. IEEE Trans. Image Process. 2006, 15, 3608–3614.

- Zhang, Z.; Chow, T.W.; Zhao, M. M-Isomap: Orthogonal constrained marginal isomap for nonlinear dimensionality reduction. IEEE Trans. Cybern. 2012, 43, 180–191.

- Fan-Zhang, L.; Huan, X. SO(3) Classifier of Lie Group Machine Learning. 2007. Available online: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.114.4200 (accessed on 29 May 2020).

- Li, F.Z.; Xu, H. SO(3) classifier of Lie group machine learning. Learning 2007, 9, 12.

- Huang, L.; Liu, X.; Lang, B.; Yu, A.W.; Wang, Y.; Li, B. Orthogonal weight normalization: Solution to optimization over multiple dependent Stiefel manifolds in deep neural networks. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Nishimori, Y. A Neural Stiefel Learning based on Geodesics Revisited. WSEAS Trans. Syst. 2004, 3, 513–520.

- Yang, M.; Li, F.; Zhang, L.; Zhang, Z. Lie group impression for deep learning. Inf. Process. Lett. 2018, 136, 12–16.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Battaglia, P.W.; Hamrick, J.B.; Bapst, V.; Sanchez-Gonzalez, A.; Zambaldi, V.; Malinowski, M.; Tacchetti, A.; Raposo, D.; Santoro, A.; Faulkner, R.; et al. Relational inductive biases, deep learning, and graph networks. arXiv 2018, arXiv:1806.01261.

- Zagoruyko, S.; Komodakis, N. Wide residual networks. arXiv 2016, arXiv:1605.07146.

- Keskar, N.S.; Socher, R. Improving generalization performance by switching from Adam to SGD. arXiv 2017, arXiv:1712.07628.

- Zeiler, M.D. ADADELTA: An adaptive learning rate method. arXiv 2012, arXiv:1212.5701.

- Bottou, L. Large-scale machine learning with stochastic gradient descent. In Proceedings of COMPSTAT’2010; Springer: Cham, Switzerland, 2010; pp. 177–186.

- Belkin, M.; Niyogi, P. Semi-supervised learning on Riemannian manifolds. Mach. Learn. 2004, 56, 209–239.

- Li, F. Lie Group Machine Learning; Press of University of Science and Technology of China: Hefei, China, 2013.

- Nishimori, Y.; Akaho, S. Learning algorithms utilizing quasi-geodesic flows on the Stiefel manifold. Neurocomputing 2005, 67, 106–135.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

| Methods | 1-Shot | 5-Shot | Memory Cost, 1-Shot (MB) | Memory Cost, 5-Shot (MB) |
|---|---|---|---|---|
| MSML without Sti and Stage | 60.80 ± 0.87% | 76.23 ± 0.66% | 4062 | 6443 |
| MSML without Sti | 61.12 ± 1.02% | 76.19 ± 0.41% | 8655 | 9984 |
| MSML without Stage | 61.50 ± 0.82% | 77.02 ± 0.73% | 4165 | 6521 |
| MSML | 62.42 ± 0.76% | 77.32 ± 0.66% | 8767 | 10,157 |
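
To make the trade-off in the rows above easier to read, the short script below computes each variant's change relative to the first row ("MSML without Sti and Stage") in percentage points, together with the ratio of memory cost. The numbers are copied from the table; the choice of the first row as the baseline and the name `summary` are assumptions made for this illustration, and the reported ± intervals are not taken into account.

```python
# (1-shot %, 5-shot %, 1-shot memory MB, 5-shot memory MB), copied from the table
ablation = {
    "MSML without Sti and Stage": (60.80, 76.23, 4062, 6443),
    "MSML without Sti": (61.12, 76.19, 8655, 9984),
    "MSML without Stage": (61.50, 77.02, 4165, 6521),
    "MSML": (62.42, 77.32, 8767, 10157),
}

# Assumed baseline: the variant with neither component enabled.
base = ablation["MSML without Sti and Stage"]

summary = {}
for name, (acc1, acc5, mem1, mem5) in ablation.items():
    summary[name] = {
        "gain_1shot": acc1 - base[0],      # percentage points
        "gain_5shot": acc5 - base[1],      # percentage points
        "mem_ratio_1shot": mem1 / base[2],
        "mem_ratio_5shot": mem5 / base[3],
    }

for name, s in summary.items():
    print(f"{name}: {s['gain_1shot']:+.2f} / {s['gain_5shot']:+.2f} points, "
          f"memory x{s['mem_ratio_1shot']:.2f} / x{s['mem_ratio_5shot']:.2f}")
```

Read this way, the full model is 1.62 points (1-shot) and 1.09 points (5-shot) above the first row, while the two rows that keep the multi-stage mechanism need roughly twice the 1-shot memory of the rows without it.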

| Few-Shot Learning Method | 1-Shot | 5-Shot |
|---|---|---|
| Metric based | | |
| Matching Nets [4] | | |
| Relation Nets [5] | | |
| Prototypical Nets [6] | | |
| Gradient descent based | | |
| MAML (4 Conv) [9] | | |
| Reptile (4 Conv) [10] | | |
| meta-SGD (4 Conv) [11] | | |
| LEO (WRN-28-10) [24] | | |
| MTL (ResNet-12) [25] | | |
| TAML (4 Conv) [19] | | |
| MSML (the proposed method) | 62.42 ± 0.76% | 77.32 ± 0.66% |
| Memory augmented based | | |
| TADAM [7] | | |
| SNAIL [8] | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dong, F.; Liu, L.; Li, F. Multi-Stage Meta-Learning for Few-Shot with Lie Group Network Constraint. Entropy 2020, 22, 625. https://doi.org/10.3390/e22060625
