Highlights

2nd law and entropy based on 5 simple observations aligning with daily experience.

Entropy defined for nonequilibrium and equilibrium states.

Positivity of thermodynamic temperature ensures dissipation of kinetic energy, and stability of the rest state.

Global balance laws based on assumption of local thermodynamic equilibrium.

Agreement with Classical Equilibrium Thermodynamics and Linear Irreversible Thermodynamics.

Entropy definition in agreement with Boltzmann’s H-function.

Discussion of thermodynamic engines and devices, incl. Carnot engines and cycles, only after the laws of thermodynamics are established.

Preamble

This text centers on the introduction of the 2nd Law of Thermodynamics from a small number of everyday observations. The emphasis is on a straight path from the observations to identifying the 2nd law, and thermodynamic temperature. There are only a few examples or applications, since these can be found in textbooks. The concise presentation aims at the more experienced reader, in particular those who are interested in seeing how the 2nd law can be introduced without Carnot engines and cycles.

Nonequilibrium states and nonequilibrium processes are at the core of thermodynamics, and the present treatment puts these at center stage—where they belong. Throughout, all thermodynamic systems considered are allowed to be in nonequilibrium states, which typically are inhomogeneous in certain thermodynamic properties, with homogeneous states (for the proper variables) assumed only in equilibrium.

The content ranges from simple observations in daily life, through the equations for systems used in engineering thermodynamics, to the partial differential equations of thermo-fluid-dynamics; short discussions of kinetic theory of gases and the microscopic interpretation of entropy are included.

The presentation is split into many short segments to give the reader sufficient pause for consideration and digestion. For better flow, some material is moved to the Appendix which provides the more difficult material, in particular excursions into Linear Irreversible Thermodynamics and Kinetic Theory.

While it would be interesting to compare the approach to entropy presented below to what was or is done by others, this will not be done. My interest here is to give a rather concise idea of the introduction of entropy on the grounds of nonequilibrium states and irreversible processes, together with a smaller set of significant problems that can be tackled best from this viewpoint. The reader interested in the history of thermodynamics, and other approaches is referred to the large body of literature, of which only a small portion is referenced below.

1. Intro: What’s the Problem with the 2nd Law?

After teaching thermodynamics to engineering students for more than two decades (from teaching assistant to professor), I believe that a lot of the trouble, or the perceived trouble, with understanding the 2nd law is related to how it is taught to students. Most engineering textbooks introduce the 2nd law, and its main quantity, entropy, following the classical Carnot-Clausius arguments [1] using reversible and irreversible engines [2,3,4,5]. To follow this approach properly, one needs a lot of background on processes, systems, property relations, engines and cycles. For instance, in a widely adopted undergraduate textbook [2], the mathematical formulation of the 2nd law (the balance law for entropy) finally appears on page 380, more than 300 pages after the conservation law for energy, which is the mathematical expression of the 1st Law of Thermodynamics.

Considering that the full discussion of processes, systems, property relations, engines and cycles requires both the 1st and the 2nd law, it should be clear that a far more streamlined access to the body of thermodynamics can be achieved when both laws, in their full mathematical formulation, are available as early as possible. The doubtful reader will find some examples in the course of this treatise. Some authors are well aware of this advantage, which is common in Germany [6], but seems to come only slowly to the North-American market [7,8].

Early introduction of the 2nd law cannot be based on the Carnot-Clausius argument using engines and cycles, but must find other ways. In References [6,7] this problem is solved by simply postulating the 2nd law, and then showing first that it makes perfect sense, by analyzing simple systems, before using it to find less intuitive results, such as the limited efficiency of heat engines, and any other results of (applied) thermodynamics.

The problem with the postulative approach is that assumptions and restrictions of the equations are not always clearly stated. While the 1st law of thermodynamics is universally valid, this is not the case for most formulations of the 2nd law of thermodynamics. The presentation below aims at introducing the 2nd law step by step, with clear discussion of all assumptions used.

2. Outline: Developing the 2nd Law

Below, I will develop the 2nd law from a small number of observations. This approach is also outlined in my undergraduate textbook [8], but with some shortcuts and omissions that I felt were required for the target audience, that is, students who are exposed to the topic for the first time. The presentation below is aimed more at the experienced reader. Therefore, when it comes to the 2nd law, I aim to avoid these shortcuts, and discuss several aspects in more depth, in particular the all-important question of nonequilibrium states, while reducing the discussion of basic elements like properties and their relations as much as possible.

Many treatments of thermodynamics claim that it can only make statements on equilibrium states, and cannot deal with nonequilibrium states and irreversible processes. Were this true, simple thermodynamic elements such as heat conductors or irreversible turbines could not be treated in the context of thermodynamics.

Indeed, classical [9,10,11] or more recent [12,13,14] treatments focus on equilibrium states, and avoid the definition of entropy in nonequilibrium.

However, in his famous treatment of kinetic theory of gases, Boltzmann gave proof of the H-theorem [15,16,17], which can be interpreted as the 2nd law of thermodynamics for ideal gases in any state. Entropy and its balance follow directly by integration from the particle distribution function and the Boltzmann equation, and there is no limitation at all to equilibrium states. Of course, for general nonequilibrium states one cannot expect to find a simple property relation for entropy, but this does not imply that entropy as an extensive system property does not exist. All frameworks of nonequilibrium thermodynamics naturally include a nonequilibrium entropy [18,19,20,21,22].

The arguments below focus on the approach to equilibrium from nonequilibrium states. Entropy is introduced as the property to describe equilibration processes, hence is defined for general nonequilibrium states.

Luckily, most engineering problems, and certainly those that are the typical topics in engineering thermodynamics, can be described in the framework of local thermodynamic equilibrium [18], where the well-known property relations for equilibrium are valid locally, but states are inhomogeneous (global equilibrium states normally are homogeneous in certain properties, see Section 40). Textbook discussions of open systems like nozzles, turbines, heat exchangers, and so forth, rely on this assumption, typically without explicitly stating this fact [2,3,4,5,6,7,8]. It is also worthwhile to note that local thermodynamic equilibrium arises naturally in kinetic theory, when one considers proper limitations on processes, viz. sufficiently small Knudsen number, see Appendix D.5 and References [16,17].

Obviously, the 2nd law does not exist on its own; that is, for its formulation we require some background, and language, on systems, processes, and the 1st law. These will be presented first, as concisely as possible, before we embark on the construction of the balance law for entropy, the property relations, and some analysis of its consequences. For completeness, short sections on Linear Irreversible Thermodynamics and Kinetic Theory of Gases are included in the Appendix.

For better accessibility, in most of the ensuing discussion we consider closed systems; the extension to open systems will be outlined only briefly. Moreover, mixtures, reacting or not, are not included, mainly for space reasons. Larger parts of the discussion, and the figures, are adapted from my textbook on technical thermodynamics [8], albeit suitably re-ordered, tailored, and re-formulated. The interested reader is referred to the book for many applications to technical processes in open and closed systems, as well as the discussion of inert and reacting mixtures.

In an overview paper [13] of their detailed account of entropy as an equilibrium property [12], Lieb and Yngvason state in the subtitle that

“The existence of entropy, and its increase, can be understood without reference to either statistical mechanics or heat engines.”

This is the viewpoint of this contribution as well, hence I could not agree more. However, these authors’ restriction of entropy to equilibrium states is an unnecessary limitation. The analysis of irreversible processes is central in modern engineering thermodynamics, where systems in nonequilibrium states must be evaluated, and the entropy generation rate is routinely studied to analyze irreversible losses, and to redesign processes with the goal of entropy generation minimization [8,23]. Hence, while the goal appears to be the same, the present philosophy differs substantially from authors who restrict entropy to equilibrium states.

3. The 2nd Law in Words

In the present approach, the 2nd law summarizes everyday experiences in a few generalized observations, which are then used to infer its mathematical formulation, that is, the balance law for entropy. Only the mathematical formulation of thermodynamics, which also includes the 1st law and property relations, allows us to generalize from simple experience to the unexpected.

Below, the observations used, and the mathematical formulation of the 2nd law, are summarized as an introduction of what will come for the thermodynamically experienced reader, and for future reference for the newcomer. The definitions of terms used, explanations of the observations, and their evaluation will be presented in subsequent sections.

The observation based form of the 2nd law reads:

Basic Observations

- Observation 1. A closed system can only be manipulated by heat and work transfer.

- Observation 2. Over time, an isolated thermodynamic system approaches a unique and stable equilibrium state.

- Observation 3. In a stable equilibrium state, the temperature of a thermally unrestricted system is uniform.

- Observation 4. Work transfer is unrestricted in direction, but some work might be lost to friction.

- Observation 5. Heat will always go from hot to cold by itself, but not vice versa.

The experienced reader should not be surprised by any of these statements. The second, third and fifth probably are most familiar, since they appear as input, or output, in any treatment of the 2nd law. The first and the fourth are typically less emphasized, if stated at all, but are required to properly formulate the transfer of entropy, and to give positivity of thermodynamic temperature.

Careful elaboration will show that these observations, with the assumption of local thermodynamic equilibrium, will lead to the 2nd law for closed systems in the form

$$\frac{dS}{dt} - \sum_k \frac{\dot{Q}_k}{T_k} = \dot{S}_{gen} \ge 0 .$$

Here, $S$ is the concave entropy of the system, $\dot{Q}_k$ is energy transfer by heat over the system boundary at positive thermodynamic temperature $T_k$, and $\dot{S}_{gen}$ is the non-negative generation rate of entropy within the system, which vanishes in equilibrium.
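As a hedged illustration of how such an entropy balance can be evaluated, the following Python sketch computes the entropy generation rate of a closed system from given heat flows. The function name `entropy_generation_rate`, the sign convention (heat counted positive when it enters the system), and all numbers are assumptions made for this example only.

```python
def entropy_generation_rate(dS_dt, heat_flows):
    """Return S_gen = dS/dt - sum(Q_k / T_k) for a closed system.

    dS_dt      : rate of change of system entropy in W/K
    heat_flows : iterable of (Q_k, T_k) pairs, with Q_k in W (assumed
                 positive into the system) and T_k in K, the boundary
                 temperature at which Q_k crosses the boundary
    """
    heat_flows = list(heat_flows)
    for _, T_k in heat_flows:
        if T_k <= 0.0:
            raise ValueError("thermodynamic temperature must be positive")
    return dS_dt - sum(Q_k / T_k for Q_k, T_k in heat_flows)


# Steady heat conduction through a wall (dS/dt = 0):
# 100 W enter at 400 K and the same 100 W leave at 300 K.
s_gen = entropy_generation_rate(0.0, [(100.0, 400.0), (-100.0, 300.0)])
print(f"S_gen = {s_gen:.4f} W/K")

# Hypothetical reversed case: heat passing from cold to hot by itself.
s_gen_reversed = entropy_generation_rate(0.0, [(100.0, 300.0), (-100.0, 400.0)])
print(f"reversed: S_gen = {s_gen_reversed:.4f} W/K")
```

In the first case the result is positive, as required. The reversed case yields a negative value, which is how the balance excludes heat going from cold to hot by itself.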

4. Closed System

The first step in any thermodynamic consideration is to identify the system that one wishes to describe. Any complex system, for example, a power plant, can be seen as a compound of some—or many—smaller and simpler systems that interact with each other. For the basic understanding of the thermodynamic laws it is best to begin with the simplest system, and study more complex systems later as assemblies of these simple systems.

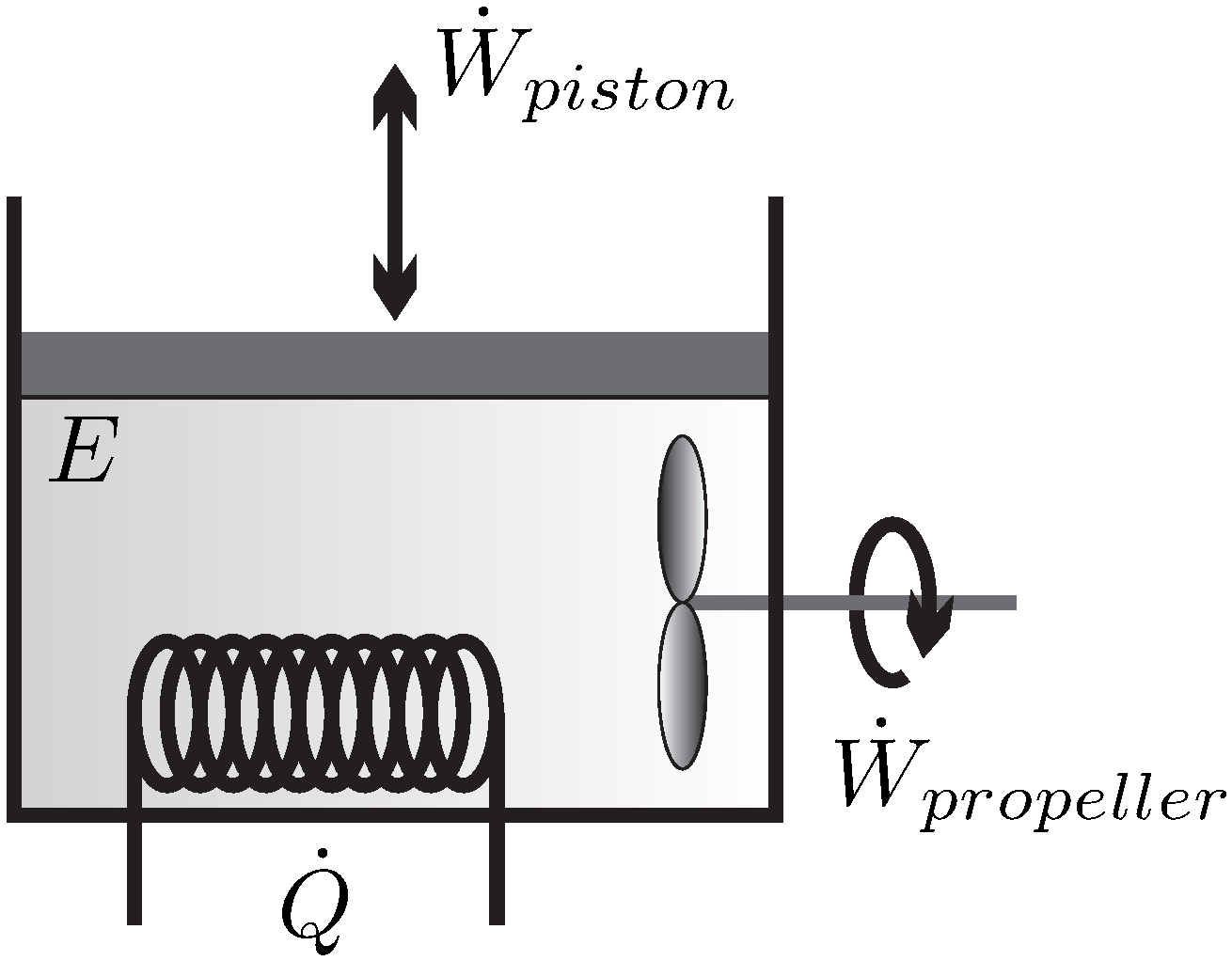

The simplest system of interest, and the one we will consider for most of the discussion, is the closed system where a simple substance (i.e., no chemical changes) is enclosed by walls, and no mass flows over the system boundaries, for example, the piston-cylinder device depicted in Figure 1.

There is only a small number of manipulations possible to change the state of a closed system, which are indicated in the figure—the volume of the system can be changed by moving the piston, the system can be stirred with a propeller, and the system can be heated or cooled by changing the temperature of the system boundary, as indicated by the heating (or cooling) coil. Another possibility to heat or cool the system is through absorption and emission of radiation, and transfer of radiation across the system boundary (as in a microwave oven)–this is just another way of heating. One could also shake the system, which is equivalent to stirring.

The statement that there is no other possible manipulation of the system than these is formulated in Observation 1.

These manipulative actions lead to exchange of energy between the system and its surroundings, either by work in case of piston movement and stirring, or by the exchange of heat. The transfer of energy ($E$) by work ($\dot{W}$) and heat ($\dot{Q}$) will be formulated in the 1st Law of Thermodynamics (Section 12). The fundamental difference between piston and propeller work, as (possibly) reversible and irreversible processes, will become clear later.

5. Properties

To get a good grip on properties that describe the system, we consider a system of volume $V$ which is filled by a mass $m$ of substance. To describe variation of properties in space, it is useful to divide the system into infinitesimal elements of size $dV$ which contain the mass $dm$, as sketched in Figure 2.

The volume filled by the substance can, in principle, be measured by means of a ruler. The mass of the substance can be measured using a scale. The pressure p of the substance can be measured as the force required to keep a piston in place, divided by the surface area of the piston.

One distinguishes between extensive properties, which are related to the size of the system, and intensive properties, which are independent of the overall size of the system. Mass m and volume V are extensive quantities, for example, they double when the system is doubled; pressure p and temperature T (yet to be defined) are intensive properties, they remain unchanged when the system is doubled.

A particular class of intensive properties are the specific properties, which are defined as the ratio between an extensive property and the corresponding mass. In inhomogeneous states intensive and specific properties vary locally, that is, they have different values in different volume elements $dV$. The local specific properties are defined through the values of the extensive property $d\Phi$ and the mass $dm$ in the volume element,

$$\phi = \frac{d\Phi}{dm} .$$

For example, the local specific volume $v$, and the local mass density $\rho$, are defined as

$$v = \frac{dV}{dm} , \qquad \rho = \frac{dm}{dV} .$$

The values of the extensive properties for the full system are determined by integration of the specific properties over the mass or volume elements,

$$\Phi = \int d\Phi = \int \phi \, dm = \int \rho \, \phi \, dV .$$

As an example, Figure 2 shows the inhomogeneous distribution of mass density $\rho$ in a system (i.e., $m = \int \rho \, dV$). Note that due to inhomogeneity, the density is a function of location $\vec{r}$ of the element $dV$, hence $\rho = \rho(\vec{r})$.

For homogeneous states, the integrands can be taken out of the integrals, and we find simple relations such as

$$v = \frac{V}{m} , \qquad \rho = \frac{m}{V} .$$
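The integration of a local property over volume elements can be sketched numerically. The Python example below sums the mass of thin slices of a vertical column with an exponentially decaying density profile; the profile, the column dimensions, and the helper name `total_mass` are hypothetical choices made only to illustrate the idea.

```python
import math


def total_mass(rho, area, height, n=10_000):
    """Approximate m = integral of rho dV for a column of constant cross-section.

    rho    : function returning the local mass density in kg/m^3 at height z
    area   : cross-sectional area in m^2
    height : column height in m
    n      : number of slices (midpoint rule)
    """
    dz = height / n
    return sum(rho((i + 0.5) * dz) * area * dz for i in range(n))


# Assumed inhomogeneous density profile: rho(z) = rho0 * exp(-z / L)
RHO0 = 1.2      # kg/m^3 at the bottom (illustrative value)
L = 8000.0      # m, decay length (illustrative value)
AREA = 1.0      # m^2
HEIGHT = 1000.0 # m

m = total_mass(lambda z: RHO0 * math.exp(-z / L), AREA, HEIGHT)
m_exact = RHO0 * AREA * L * (1.0 - math.exp(-HEIGHT / L))
V = AREA * HEIGHT

print(f"numerical mass : {m:.4f} kg")
print(f"analytic mass  : {m_exact:.4f} kg")
print(f"average density m/V = {m / V:.4f} kg/m^3")
```

For a homogeneous state the density function is constant, and the sum reduces to density times volume.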

6. Micro and Macro

A macroscopic amount of matter filling the volume $V$, say a steel rod or a gas in a box, consists of an extremely large number, to the order of $10^{23}$, of atoms or molecules. These are in constant interaction with each other and exchange energy and momentum; for example, a gas particle in air at standard conditions undergoes about $10^{9}$ collisions per second.

From the viewpoint of mechanics, one would have to describe each particle by its own (quantum mechanical) equation of motion, in which the interactions with all other particles would have to be taken into account. Obviously, due to the huge number of particles, this is not feasible. Fortunately, the constant interaction between particles leads to a collective behavior of the matter already in very small volume elements $dV$, in which the state of the matter can be described by few macroscopic properties like pressure, mass density, temperature and others. This allows us to describe the matter not as an assembly of atoms, but as a continuum where the state in each volume element is described by these few macroscopic properties.

Note that the underlying assumption is that the volume element contains a sufficiently large number of particles, which interact with high frequency. Indeed, the continuum hypothesis breaks down under certain circumstances, in particular for highly rarefied gases [17]. In all of what follows, however, we shall only consider systems in which the assumption is well justified.
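To get a feeling for how many particles a small volume element contains, one can make a rough estimate. The Python sketch below assumes that the gas obeys the ideal gas law in its particle form $pV = N k_B T$; this relation, the helper name `particle_count`, and the chosen conditions are assumptions of the example, not part of the argument above.

```python
K_B = 1.380649e-23  # J/K, Boltzmann constant (exact in the SI since 2019)


def particle_count(p, T, V):
    """Number of particles N = p V / (k_B T) in an ideal gas.

    p : pressure in Pa, T : temperature in K, V : volume in m^3
    """
    return p * V / (K_B * T)


P_ATM = 101325.0   # Pa
T_ROOM = 293.15    # K

n_litre = particle_count(P_ATM, T_ROOM, 1.0e-3)         # one litre
n_cubic_micron = particle_count(P_ATM, T_ROOM, 1.0e-18)  # (1 micrometre)^3

print(f"particles in one litre        : {n_litre:.2e}")
print(f"particles in one cubic micron : {n_cubic_micron:.2e}")
```

Even a cube of one micrometre edge length holds tens of millions of particles at these conditions, which supports the continuum description. For a highly rarefied gas the same estimate gives far fewer particles per element.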

Appendix D provides a short discussion of kinetic gas theory, where macroscopic thermodynamics arises in the limit of high collision frequency between particles (equivalent to small mean free path).

7. Processes and Equilibrium States

A process is any change in one or more properties occurring within a system. The system depicted in Figure 1 can be manipulated by moving the piston or propeller, and by changing the temperature of the system boundary (heating/cooling coil). Any manipulation changes the state of the system locally and globally, that is, a process occurs.

After all manipulation stops, the states, that is, the values of the local intensive properties in the volume elements, will keep changing for a while—that is the process continues—until a stable final state is assumed. This stable final state is called the equilibrium state. The system will remain in the equilibrium state until a new manipulation commences.

Simple examples from daily life are:

- (a) A cup of coffee is stirred with a spoon. After the spoon is removed, the coffee will keep moving for a while until it comes to rest. It will stay at rest indefinitely, unless stirring is recommenced or the cup is moved.

- (b) Milk is poured into coffee. Initially, there are light-brown regions of large milk content and dark-brown regions of low milk content. After a while, however, coffee and milk are well-mixed, at mid-brown color, and remain in that state. Stirring speeds the process up, but the mixing occurs also when no stirring takes place. Personally, I drink standard drip coffee into which I pour milk: I have not used a spoon for mixing both in years.

- (c) A spoon used to stir hot coffee becomes hot at the end immersed in the coffee. A while after it is removed from the cup, it will have assumed a homogeneous temperature.

- (d) Oil mixed with vinegar by stirring will separate after a while, with oil on top of the vinegar.

In short, observation of daily processes, and experiments in the laboratory, show that a system that is left to itself for a sufficiently long time will approach a stable equilibrium state, and will remain in this state as long as the system is not subjected to further manipulation. This experience is the content of Observation 2. Example (d) shows that not all equilibrium states are homogeneous; however, temperature will always be homogeneous in equilibrium, which is laid down as Observation 3.
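Example (c) can be mimicked with a small numerical experiment. The Python sketch below models the spoon as an insulated rod of equal cells and lets heat flow between neighbouring cells with an explicit finite-difference scheme. The discretization, the parameter `alpha`, the assumption of equal and constant heat capacity per cell, and the initial temperatures are illustrative assumptions and not taken from the text.

```python
def equilibrate(temps, alpha=0.25, steps=5000):
    """Explicit finite-difference heat conduction in an insulated rod.

    temps : initial cell temperatures in K
    alpha : dimensionless step a*dt/dx^2 (must be <= 0.5 for stability)
    steps : number of time steps
    """
    T = list(temps)
    n = len(T)
    for _ in range(steps):
        new = T[:]
        for i in range(n):
            # Insulated ends: mirror the boundary cell so that no heat leaves.
            left = T[i - 1] if i > 0 else T[i]
            right = T[i + 1] if i < n - 1 else T[i]
            new[i] = T[i] + alpha * (left - 2.0 * T[i] + right)
        T = new
    return T


# One half of the rod starts hot, the other half at room temperature.
initial = [350.0] * 10 + [290.0] * 10
final = equilibrate(initial)

mean_initial = sum(initial) / len(initial)
mean_final = sum(final) / len(final)
spread = max(final) - min(final)

print(f"temperature spread after equilibration: {spread:.2e} K")
print(f"mean temperature: {mean_final:.3f} K (initially {mean_initial:.3f} K)")
```

Left to itself, the rod ends up with a uniform temperature, while the mean temperature, which stands in for the energy under the stated assumptions, is unchanged.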

The details of the equilibrium state depend on the constraints on the system, in particular material, size, mass, and energy; this will become clear further below (Section 39).

The time required for reaching the equilibrium state, and other details of the process taking place, depend on the initial deviation from the equilibrium state, the material, and the geometry. Some systems may remain for rather long times in metastable states—these will not be further discussed.

Physical constraints between different parts of a system can lead to different equilibrium states within the parts. For instance, a container can be divided by a rigid wall, with different materials at both sides. Due to the physical division, the materials in the compartments might well be at different pressures, and different temperatures, and they will not mix. However, if the wall is diathermal, that is, it allows heat transfer, then the temperature will equilibrate between the compartments. If the wall is allowed to move, it will do so, until the pressures in both parts are equal. If the wall is removed, depending on their miscibility the materials might mix, see examples (b) and (d).

Unless otherwise stated, the systems discussed in the following are free from internal constraints.

8. Reversible and Irreversible Processes

When one starts to manipulate a system that is initially in equilibrium, the equilibrium state is disturbed, and a new process occurs.

All real-life applications of thermodynamics involve some degree of nonequilibrium. For the discussion of thermodynamics it is customary, and useful, to consider idealized processes, for which the manipulation happens sufficiently slowly. In this case, the system has sufficient time to adapt so that it is in an equilibrium state at any time. Slow processes that lead the system through a series of equilibrium states are called quasi-static, or quasi-equilibrium, or reversible, processes.

If the manipulation that causes a quasi-static process stops, the system is already in an equilibrium state, and no further change will be observed.

Equilibrium states are simple; quite often they are homogeneous states, or can be approximated as homogeneous states (see Section 40). The state of the system is fully described by few extensive properties, such as mass, volume, energy, and the corresponding pressure and temperature.

When the manipulation is fast, so that the system has no time to reach a new equilibrium state, it will be in nonequilibrium states. If the manipulation that causes a nonequilibrium process stops, the system will undergo further changes until it has reached its equilibrium state. The equilibration process takes place while no manipulation occurs, that is, the system is left to itself. Thus, the equilibration is an uncontrolled process.

Nonequilibrium processes typically involve inhomogeneous states, hence their proper description requires values of the properties at all locations $\vec{r}$ (i.e., in all volume elements $dV$) of the system. Accordingly, the detailed description of nonequilibrium processes is more complex than the description of quasi-static processes. This is the topic of theories of nonequilibrium thermodynamics, where the processes are described through partial differential equations, see Appendix C. For instance, the approach of Linear Irreversible Thermodynamics yields the Navier-Stokes and Fourier laws that are routinely used in fluid dynamics and heat transfer. Apart from giving the desired spatially resolved description of the process, these equations are also useful in examining under which circumstances a process can be approximated as quasi-static. For the moment, we state that a process must be sufficiently slow for this to be the case.

The approach to equilibrium introduces a timeline for processes: as time progresses, an isolated system, that is, a system that is not further manipulated in any way, so that heat and work vanish, will always approach, and finally reach, its unique equilibrium state. The opposite will not be observed, that is, an isolated system will never be seen spontaneously leaving its equilibrium state when no manipulation occurs.

Indeed, we immediately detect whether a movie of a nonequilibrium process is played forward or backwards: well mixed milk coffee will not separate suddenly into milk and coffee; a spoon of constant temperature will not suddenly become hot at one end, and cold at the other; a propeller immersed in a fluid at rest will not suddenly start to move and lift a weight (Figure 3); oil on top of water will not suddenly mix with the water; and so forth. We shall call processes with a timeline irreversible.

Only for quasi-static processes, where the system is always in equilibrium states, we cannot distinguish whether a movie is played forwards or backwards. This is why these processes are also called reversible. Since equilibration requires time, quasi-static, or reversible, processes typically are slow processes, so that the system always has sufficient time to adapt to an imposed change.

To be clear, we define quasi-static processes as reversible. One could consider irreversible slow processes, such as the compression of a gas with a piston subject to friction. For the gas itself, the process would be reversible, but for the system of gas and piston, the process would be irreversible.

9. Temperature and the 0th Law

By touching objects we can distinguish between hot and cold, and we say that hotter states have a higher temperature. Objective measurement of temperature requires (a) a proper definition, and (b) a proper device for measurement—a thermometer.

Experience shows that physical states of systems change with temperature. For instance, the gas thermometer in Figure 4 contains a certain amount of gas enclosed in a container at fixed volume $V$. Increase of its temperature $T$ by heating leads to a measurable change in the gas pressure $p$. Note that pressure is a mechanical property, which is measured as force per area. An arbitrary temperature scale can be defined, for example, as

$$T = a + b\,p$$

with arbitrary constants $a$ and $b$.

To study temperature, we consider two systems, initially in their respective equilibrium states, both not subject to any work interaction, that is, no piston or propeller motion in Figure 1, which are manipulated by bringing them into physical contact, such that energy can pass between the systems (thermal contact), see Figure 5. Then, the new system that is comprised of the two initial systems will exhibit a process towards its equilibrium state. Consider first equilibration of a body $A$ with the gas thermometer, so that the compound system of body and thermometer has the initial temperature $T_A$, which can be read off the thermometer. Next, consider the equilibration of a body $B$ with the gas thermometer, so that the compound system of body and thermometer has the initial temperature $T_B$, as shown on the thermometer.

Now, we bring the two bodies and the thermometer into thermal contact, and let them equilibrate. It is observed that both systems change their temperature such that the hotter system becomes colder, and vice versa. Independent of whether the thermometer is in thermal contact only with system A or in thermal contact with system B, it shows the same temperature. Hence, the equilibrium state is characterized by a common temperature of both systems. Since no work interaction took place, one speaks of the thermal equilibrium state.

Expressed more formally, we conclude that if body C (the thermometer in the above) is in thermal equilibrium with body A and in thermal equilibrium with body B, then bodies A and B will also be in thermal equilibrium. All three bodies will have the same temperature. The extension to an arbitrary number of bodies is straightforward, and since any system under consideration can be thought of as a compound of smaller subsystems, we can conclude that a system in thermal equilibrium has a homogeneous temperature.

The observation outlined above defines temperature, hence it is important enough to be laid out as a law (Observation 3):

The 0th Law of Thermodynamics

In a stable equilibrium state, the temperature of a thermally unrestricted system is uniform. Or, two bodies in thermal equilibrium have the same temperature.

The 0th law introduces temperature as a measurable quantity. Indeed, to measure the temperature of a body, all we have to do is to bring a calibrated thermometer into contact with the body and wait until the equilibrium state of the system (body and thermometer) is reached. When the size of the thermometer is sufficiently small compared to the size of the body, the final temperature of body and thermometer will be (almost) equal to the initial temperature of the body.
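The influence of the thermometer size can be estimated with a simple energy balance. The Python sketch below assumes that body and thermometer form an isolated compound system without work interaction and that both have constant heat capacities, so that the common final temperature is the capacity-weighted mean. The heat capacity values and the function name `common_temperature` are hypothetical.

```python
def common_temperature(C_body, T_body, C_thermo, T_thermo):
    """Final common temperature of two bodies brought into thermal contact.

    Assumes an isolated compound system, no work, and constant heat
    capacities C_body and C_thermo (in J/K); temperatures in K.
    """
    return (C_body * T_body + C_thermo * T_thermo) / (C_body + C_thermo)


T_BODY = 350.0     # K, initial temperature of the body
T_THERMO = 293.0   # K, initial temperature of the thermometer
C_BODY = 4000.0    # J/K, illustrative heat capacity of the body

for C_thermo in (400.0, 40.0, 4.0):
    T_final = common_temperature(C_BODY, T_BODY, C_thermo, T_THERMO)
    print(f"C_thermo = {C_thermo:6.1f} J/K -> reading {T_final:.3f} K, "
          f"deviation {T_BODY - T_final:.3f} K")
```

The smaller the heat capacity of the thermometer relative to that of the body, the closer the reading lies to the initial temperature of the body.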

10. Ideal Gas Temperature Scale

For proper agreement and reproducibility of temperature measurement, it is helpful to agree on a temperature scale.

Any gas at sufficiently low pressures and sufficiently high temperatures behaves as an ideal gas. From experiments one observes that for an ideal gas confined to a fixed volume the pressure increases with temperature. The temperature scale is defined such that the relation between pressure and temperature is linear, that is,

$$\vartheta = a + b\,p .$$

The Celsius scale was originally defined based on the boiling and freezing points of water at $p = 1\,\mathrm{atm}$ to define the temperatures of $100\,^\circ\mathrm{C}$ and $0\,^\circ\mathrm{C}$. For the Celsius scale one finds $a = -273.15\,^\circ\mathrm{C}$ independent of the ideal gas used. The constant $b$ depends on the volume, mass and type of the gas in the thermometer.

By shifting the temperature scale by $a$, one can define an alternative scale, the ideal gas temperature scale, as

$$T = \vartheta - a = b\,p .$$

The ideal gas scale has the unit Kelvin [K] and is related to the Celsius scale as

$$T\,[\mathrm{K}] = \vartheta\,[^\circ\mathrm{C}] + 273.15 .$$

It will be shown later that this scale coincides with the thermodynamic temperature scale that follows from the 2nd law (Section 27).
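The two-point calibration of a constant-volume gas thermometer can be sketched in a few lines of Python. Since no measured data are given here, the pressure readings are synthetic: they are generated from the ideal gas law $pV = mRT$ for an assumed gas filling. The function name `calibrate`, the filling mass, the volume and the gas constant are assumptions of this example.

```python
def calibrate(p_ice, p_boil, t_ice=0.0, t_boil=100.0):
    """Two-point calibration of the linear scale theta = a + b * p.

    p_ice, p_boil : pressure readings (Pa) at the freezing and boiling
                    points of water
    t_ice, t_boil : temperatures assigned to these points (deg C)
    Returns the constants (a, b).
    """
    b = (t_boil - t_ice) / (p_boil - p_ice)
    a = t_ice - b * p_ice
    return a, b


# Synthetic readings for an assumed thermometer filling (ideal gas law).
M_GAS = 1.0e-3   # kg, hypothetical gas mass
R_GAS = 287.0    # J/(kg K), approximate gas constant of air
V_GAS = 1.0e-3   # m^3, hypothetical fixed volume

p_ice = M_GAS * R_GAS * 273.15 / V_GAS
p_boil = M_GAS * R_GAS * 373.15 / V_GAS

a, b = calibrate(p_ice, p_boil)
print(f"a = {a:.2f} deg C")
print(f"b = {b:.6e} K/Pa, V/(m R) = {V_GAS / (M_GAS * R_GAS):.6e} K/Pa")

# Ideal gas temperature from a pressure reading: T = theta - a = b * p
p_reading = M_GAS * R_GAS * 300.0 / V_GAS
print(f"T = {b * p_reading:.2f} K")
```

With these synthetic readings, the offset $a$ does not depend on the assumed mass, volume or gas constant, whereas $b$ changes with all three.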

11. Thermal Equation of State

Careful measurements on simple substances show that specific volume $v$ (or density $\rho$), pressure $p$ and temperature $T$ cannot be controlled independently. Indeed, in equilibrium states they are linked through a relation of the form $p = p(v, T)$, or $p = p(\rho, T)$, known as the thermal equation of state. For most substances, this relation cannot be easily expressed as an actual equation, but is laid down in property tables.

The thermal equation of state relates measurable properties. It suffices to know the values of two properties to determine the values of others. This will still be the case when we add energy and entropy in equilibrium states to the list of thermodynamic properties, which can be determined through measurement of any two of the measurable properties, that is, $(p, T)$ or $(v, T)$ or $(p, v)$.

To summarize: If we assume local thermal equilibrium, the complete knowledge of the macroscopic state of a system requires the values of two intensive properties in each location (i.e., in each infinitesimal volume element), and the local velocity. The state of a system in global equilibrium, where properties are homogeneous, is described by just two intensive properties (plus the size of the system, that is either total volume, or total mass). In comparison, full knowledge of the microscopic state would require the knowledge of location and velocity of each particle.

The ideal gas is one of the simplest substances to study, since it has simple property relations. Careful measurements have shown that for an ideal gas pressure $p$, total volume $V$, temperature $T$ (in K), and mass $m$ are related by an explicit thermal equation of state, the ideal gas law

$$p V = m R T .$$

Here, $R$ is the gas constant that depends on the type of the gas. With this, the constant in (7) is $b = V/(mR)$.

Alternative forms of the ideal gas equation result from introducing the specific volume $v = V/m$ or the mass density $\rho = 1/v$ so that

$$p v = R T , \qquad p = \rho R T .$$
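The equivalence of these forms is easy to check numerically. The Python sketch below evaluates the pressure of an ideal gas from total volume and mass, from specific volume, and from mass density; the gas constant of air is an approximate literature value, and the state values and function names are illustrative assumptions.

```python
R_AIR = 287.0  # J/(kg K), approximate specific gas constant of dry air


def pressure_from_volume(m, V, T, R=R_AIR):
    """p = m R T / V, with m in kg, V in m^3, T in K."""
    return m * R * T / V


def pressure_from_specific_volume(v, T, R=R_AIR):
    """p = R T / v, with v = V / m in m^3/kg."""
    return R * T / v


def pressure_from_density(rho, T, R=R_AIR):
    """p = rho R T, with rho = 1 / v in kg/m^3."""
    return rho * R * T


m, V, T = 1.2, 1.0, 293.15   # kg, m^3, K (illustrative state)
v = V / m
rho = 1.0 / v

p1 = pressure_from_volume(m, V, T)
p2 = pressure_from_specific_volume(v, T)
p3 = pressure_from_density(rho, T)
print(f"p = {p1:.1f} Pa, {p2:.1f} Pa, {p3:.1f} Pa")

# Density of air at 101325 Pa and 293.15 K, from rho = p / (R T)
rho_air = 101325.0 / (R_AIR * 293.15)
print(f"rho_air = {rho_air:.3f} kg/m^3")
```

All three functions return the same pressure up to rounding, since they are the same relation written in different variables.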

12. The 1st Law of Thermodynamics

It is our daily experience that heat can be converted to work, and that work can be converted to heat. A propeller mounted over a burning candle will spin when the heated air rises due to buoyancy: heat is converted to work. Rubbing our hands makes them warmer: work is converted to heat. Humankind has a long and rich history of making use of both conversions.

While the heat-to-work and work-to-heat conversions are readily observable in simple and more complex processes, the governing law is not at all obvious from simple observation. It required groundbreaking thinking and careful experiments to unveil the Law of Conservation of Energy. Due to its importance in thermodynamics, it is also known as the 1st Law of Thermodynamics, which expressed in words, reads:

1st Law of Thermodynamics

Energy cannot be produced nor destroyed, it can only be transferred, or converted from one form to another. In short, energy is conserved.

It took quite some time to formulate the 1st law in this simple form. The credit for finding and formulating it goes to Robert Mayer (1814–1878), James Prescott Joule (1818–1889), and Hermann Helmholtz (1821–1894). Through careful measurements and analysis, they recognized that thermal energy, mechanical energy, and electrical energy can be transformed into each other, which implies that energy can be transferred by doing work, as in mechanics, and by heat transfer.

The 1st law is generally valid; no violation was ever observed. As knowledge of physics has developed, other forms of energy had to be included, such as radiative energy, nuclear energy, or the mass-energy equivalence of the theory of relativity, but there is no doubt today that energy is conserved under all circumstances.

We formulate the 1st law for the simple closed system of Figure 1, where all three possibilities to manipulate the system from the outside are indicated. For this system, the conservation law for energy reads

$$\frac{dE}{dt} = \dot{Q} - \dot{W} , \tag{11}$$

where $E$ is the total energy of the system, $\dot{Q}$ is the total heat transfer rate in or out of the system, and $\dot{W}$ is the total power (the work per unit time) exchanged with the surroundings. Energy is an extensive property, hence also heat and work scale with the size of the system. For instance, doubling the system size doubles the energy, and requires twice the work and heat to observe the same changes of the system.

This equation states that the change of the system’s energy in time ($dE/dt$) is equal to the energy transferred by heat and work per unit time ($\dot{Q} - \dot{W}$). The sign convention used is such that heat transferred into the system is positive, and work done by the system is positive.
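The sign convention is easy to get wrong in practice, so a minimal sketch may help. It integrates the energy balance for constant rates; the function name and the numbers are hypothetical and chosen only for illustration.

```python
def energy_change(Q_dot, W_dot, duration):
    """Integrate dE/dt = Q_dot - W_dot for constant rates (in W) over duration (in s)."""
    return (Q_dot - W_dot) * duration

# A heater supplies 500 W to the system; a stirrer does 200 W of work ON the
# system, which is negative power in the convention used here.
delta_E = energy_change(Q_dot=500.0, W_dot=-200.0, duration=60.0)
print(delta_E)  # energy gained by the system, in joules
```

Both interactions increase the energy of the system here: heat enters with a positive sign, and work done on the system enters as negative $\dot{W}$, which is then subtracted.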

13. Energy

There are many forms of energy that must be accounted for. For the context of the present discussion, the total energy $E$ of the system is the sum of its kinetic energy $K$, potential energy $E_{pot}$, and internal (or thermal) energy $U$,

$$E = K + E_{pot} + U .$$

The kinetic energy is well-known from mechanics. For a homogeneous system of mass $m$ and barycentric velocity $\mathcal{V}$, kinetic energy is given by

$$K = \frac{m}{2} \mathcal{V}^2 .$$

For inhomogeneous states, where each mass element has its own velocity, the total kinetic energy of the system is obtained by integration of the specific kinetic energy $\frac{1}{2}\mathcal{V}^2$ over all mass elements $dm = \rho \, dV$;

$$K = \int \frac{\rho}{2} \mathcal{V}^2 \, dV .$$

Also the potential energy in the gravitational field is well-known from mechanics. For a homogeneous system of mass $m$, potential energy is given by

$$E_{pot} = m \, g_n z ,$$

where $z$ is the elevation of the system’s center of mass over a reference height, and $g_n$ is the gravitational acceleration on Earth. For inhomogeneous states the total potential energy of the system is obtained by integration of the specific potential energy $g_n z$ over all mass elements $dm = \rho \, dV$; we have

$$E_{pot} = \int \rho \, g_n z \, dV .$$

Even if a macroscopic element of matter is at rest, its atoms move about (in a gas or liquid) or vibrate (in a solid) fast, so that each atom has microscopic kinetic energy. The atoms are subject to interatomic forces, which contribute microscopic potential energies. Moreover, energy is associated with the atoms’ internal quantum states. Since the microscopic energies cannot be observed macroscopically, one speaks of the internal energy, or thermal energy, of the material, denoted as U.

For inhomogeneous states the total internal energy of the system is obtained by integration of the specific internal energy $u$ over all mass elements $dm = \rho \, dV$. For homogeneous and inhomogeneous systems we have, respectively,

$$U = m u \qquad \text{and} \qquad U = \int \rho \, u \, dV .$$

14. Caloric Equation of State

Internal energy cannot be measured directly. The caloric equation of state relates the specific internal energy $u$ to measurable quantities in equilibrium states; it is of the form $u = u(T, v)$, or $u = u(T, p)$. Recall that pressure, volume and temperature are related by the thermal equation of state, $p(T, v)$; therefore it suffices to know two properties in order to determine the others.

We note that internal energy summarizes all microscopic contributions to energy. Hence, a system, or a volume element within a system, will always have internal energy u, independent of whether the system is in (local) equilibrium states or in arbitrarily strong nonequilibrium states. Only in the former, however, does the caloric equation of state provide a link between energy and measurable properties.

The caloric equation of state must be determined by careful measurements, where the response of the system to heat or work supply is evaluated by means of the first law. For most materials the results cannot be easily expressed as equations, and are tabulated in property tables.

We consider a closed system heated slowly at constant volume (isochoric process), with homogeneous temperature $T$ at all times. Then, the first law (27) reduces to (recall that $U = m \, u(T, v)$ and $m = \text{const}$)

$$m \left( \frac{\partial u}{\partial T} \right)_v \frac{dT}{dt} = \dot{Q} .$$

Here, we use the standard notation of thermodynamics, where $\left( \frac{\partial u}{\partial T} \right)_v$ denotes the partial derivative of internal energy with temperature at constant specific volume $v$. This derivative is known as the specific heat (or specific heat capacity) at constant volume,

$$c_v = \left( \frac{\partial u}{\partial T} \right)_v .$$

As defined here, based on SI units, the specific heat $c_v$ is the amount of heat required to increase the temperature of $1\,\mathrm{kg}$ of substance by $1\,\mathrm{K}$ at constant volume. It can be measured by controlled heating of a fixed amount of substance in a fixed volume system, and measurement of the ensuing temperature difference; its SI unit is $\mathrm{J/(kg\,K)}$.

In general, internal energy $u(T, v)$ is a function of temperature and specific volume. For incompressible liquids and solids the specific volume is constant, $v = \text{const}$, and the internal energy is a function of temperature alone, $u(T)$. Interestingly, also for ideal gases the internal energy turns out to be a function of temperature alone, both experimentally and from theoretical considerations. For these materials the specific heat at constant volume depends only on temperature,

$$c_v(T) = \frac{du}{dT} ,$$

and its integration gives the caloric equation of state as

$$u(T) = \int_{T_0}^{T} c_v(T') \, dT' + u_0 .$$

Only energy differences can be measured, where the first law is used to evaluate careful experiments. The choice of the energy constant $u_0 = u(T_0)$ fixes the energy scale. The actual value of this constant is relevant for the discussion of chemical reactions [8]. Note that proper mathematical notation requires distinguishing between the actual temperature $T$ of the system, and the integration variable $T'$.

For materials in which the specific heat varies only slightly with temperature in the interval of interest, the specific heat can be approximated by a suitable constant average $c_v^{avg}$, so that the caloric equation of state assumes the particularly simple linear form

$$u(T) = c_v^{avg} \, (T - T_0) + u_0 .$$
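The integration of the specific heat, and its replacement by a constant average, can be sketched numerically. The linear function `c_v` below is a hypothetical material model chosen only for illustration; the reference temperature and energy constant are likewise assumptions.

```python
def c_v(T):
    """Hypothetical specific heat in J/(kg K), linear in T (illustrative only)."""
    return 700.0 + 0.1 * T

def internal_energy(T, T0=300.0, u0=0.0, n=1000):
    """u(T) = u0 + integral of c_v(T') dT' from T0 to T, by the trapezoidal rule."""
    h = (T - T0) / n
    total = 0.5 * (c_v(T0) + c_v(T))
    for i in range(1, n):
        total += c_v(T0 + i * h)
    return u0 + total * h

T0, T = 300.0, 500.0
u_integrated = internal_energy(T, T0)

# Constant-average approximation, with the average taken at the mean temperature.
c_v_avg = c_v(0.5 * (T0 + T))
u_linear = c_v_avg * (T - T0)
print(u_integrated, u_linear)
```

For a specific heat that is linear in temperature, the value at the mean temperature is the exact average, so both results coincide. For a more strongly varying specific heat the linear form is only an approximation.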

15. Work and Power

Work, denoted by $W$, is the product of a force and the displacement of its point of application. Power, denoted by $\dot{W}$, is work done per unit time, that is the force times the velocity of its point of application. The total work for a process is the time integral of power over the duration $\Delta t = t_2 - t_1$ of the process,

$$W_{12} = \int_{t_1}^{t_2} \dot{W} \, dt .$$

For the closed system depicted in Figure 1 there are two contributions to work: moving boundary work, due to the motion of the piston, and rotating shaft work, which moves the propeller. Other forms of work, for example, spring work or electrical work, could be added as well.

Work and power can be positive or negative. We follow the sign convention that work done by the system is positive and work done to the system is negative.

For systems with homogeneous pressure $p$, which might change with time as a process occurs (e.g., the piston moves), one finds the following expressions for moving boundary work with finite and infinitesimal displacement, and for power,

$$W_{12} = \int_1^2 p \, dV , \qquad \delta W = p \, dV , \qquad \dot{W} = p \frac{dV}{dt} .$$

Moving boundary work depends on the process path, so that the work exchanged for an infinitesimal process step, $\delta W = p \, dV$, is not an exact differential (see next section). Closed equilibrium systems are characterized by a single homogeneous pressure $p$, a single homogeneous temperature $T$, and the volume $V$. In quasi-static (or reversible) processes, the system passes through a series of equilibrium states which can be indicated in suitable diagrams, for example, the p-V-diagram.

In a closed system the propeller stirs the working fluid and creates inhomogeneous states. The power is related to the torque $\mathcal{T}$ and the rotational speed $\dot{n}$ (revolutions per unit time) as $\dot{W} = 2\pi \dot{n} \, \mathcal{T}$. Fluid friction transmits fluid motion (i.e., momentum and kinetic energy) from the fluid close to the propeller to the fluid further away. Due to the inherent inhomogeneity, stirring of a fluid in a closed system cannot be a quasi-static process, and is always irreversible.

In general, there might be several work interactions $\dot{W}_j$ of the system, then the total work for the system is the sum over all contributions; for example, for power

$$\dot{W} = \sum_j \dot{W}_j .$$

For reversible processes with additional work contributions, one has $\dot{W} = \sum_i x_i \frac{dY_i}{dt}$, where $\{x_i, Y_i\}$ are pairs of conjugate work variables, such as $\{p, V\}$.

Finally, we know from the science of mechanics that by using gears and levers, one can transfer energy as work from slow moving to fast moving systems and vice versa, and one can transmit work from high pressure to low pressure systems and vice versa. However, due to friction within the mechanical system used for transmission of work, some of the work may be lost. This experience is formulated in Observation 4.

16. Exact and Inexact Differentials

Above we have seen that work depends on the process path. In the language of mathematics this implies that the work for an infinitesimal step is not an exact differential, and that is why a Greek delta ($\delta$) is used to denote the work for an infinitesimal change as $\delta W$. As will be seen in the next section, heat is path dependent as well.

State properties like pressure, temperature, volume and energy describe the momentary state of the system, or, for inhomogeneous states, the momentary state in the local volume element. State properties have exact differentials for which we write, for example, $dE$ and $dV$. The energy change $E_2 - E_1 = \int_1^2 dE$ and the volume change $V_2 - V_1 = \int_1^2 dV$ are independent of the path connecting the states.

It is important to remember that work and heat, as path functions, do not describe states, but the processes that lead to changes of the state. Hence, for a process connecting two states we write $W_{12} = \int_1^2 \delta W$, $Q_{12} = \int_1^2 \delta Q$, where $W_{12}$ and $Q_{12}$ are the energy transferred across the system boundaries by work or heat.

A state is characterized by state properties (pressure, temperature, etc.), it does not possess work or heat.

Quasi-static (reversible) processes go through well defined equilibrium states, so that the whole process path can be indicated in diagrams, for example, the p-V-diagram.

Nonequilibrium (irreversible) processes, for which typically the states are different in all volume elements, cannot be drawn into diagrams. Often irreversible processes connect homogeneous equilibrium states which can be indicated in the diagram. It is recommended to use dashed lines to indicate nonequilibrium processes that connect equilibrium states. As an example, Figure 6 shows a p-V-diagram of two processes, one reversible, one irreversible, between the same equilibrium states 1 and 2. We emphasize that the dashed line does not refer to actual states of the system. The corresponding work for the nonequilibrium process cannot be indicated as the area below the curve, since its computation requires the knowledge of the (inhomogeneous!) pressures at the piston surface at all times during the process.
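Path dependence of work and heat, against path independence of the energy change, can be illustrated with two reversible paths between the same end states. The sketch below assumes an ideal gas with constant specific heat, so that $U = (c_v / R) \, p V$ up to a constant; the ratio $c_v / R$, the states and the helper names are illustrative assumptions.

```python
def boundary_work(path):
    """W12 = integral of p dV along straight segments between (p, V) points.
    The trapezoidal rule is exact on each straight segment."""
    return sum(0.5 * (pa + pb) * (Vb - Va)
               for (pa, Va), (pb, Vb) in zip(path, path[1:]))

CV_OVER_R = 2.5  # assumed constant c_v / R (roughly a diatomic ideal gas)

def internal_energy(p, V):
    """Ideal gas with constant c_v: U = (c_v / R) p V, energy constant set to zero."""
    return CV_OVER_R * p * V

state1 = (2.0e5, 1.0e-3)  # (p in Pa, V in m^3)
state2 = (1.0e5, 3.0e-3)

# Path A: expand at constant p1, then lower the pressure at constant V2.
path_A = [state1, (state1[0], state2[1]), state2]
# Path B: lower the pressure at constant V1, then expand at constant p2.
path_B = [state1, (state2[0], state1[1]), state2]

dU = internal_energy(*state2) - internal_energy(*state1)
W_A, W_B = boundary_work(path_A), boundary_work(path_B)
Q_A, Q_B = dU + W_A, dU + W_B  # first law with negligible kinetic and potential energy
print(W_A, W_B, Q_A, Q_B, dU)
```

The two paths exchange different amounts of work and heat, yet the difference $Q_{12} - W_{12}$ is the same for both, as it must be for a state property.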

17. Heat Transfer

Heat is the transfer of energy due to differences in temperature. Experience shows that for systems in thermal contact the direction of heat transfer is restricted, such that heat will always go from hot to cold by itself, but not vice versa. This experience is formulated in Observation 5.

This restriction of direction is an important difference to energy transfer by work between systems in mechanical contact, which is not restricted.

Since heat flows only in response to a temperature difference, a quasi-static (reversible) heat transfer process can only be realized in the limit of infinitesimal temperature differences between the system and the system boundary, and for infinitesimal temperature gradients within the system.

We use the following notation: $\dot{Q}$ denotes the heat transfer rate, that is the amount of energy transferred as heat per unit time. Heat depends on the process path, so that the heat exchanged for an infinitesimal process step, $\delta Q = \dot{Q} \, dt$, is not an exact differential. The total heat transfer for a process between states 1 and 2 is

$$Q_{12} = \int_1^2 \delta Q = \int_{t_1}^{t_2} \dot{Q} \, dt .$$

By the convention used, heat transferred into the system is positive, heat transferred out of the system is negative.

A process in which no heat transfer takes place, $\dot{Q} = 0$, is called an adiabatic process.

In general, there might be several heat interactions $\dot{Q}_k$ of the system, then the total heat for the system is the sum over all contributions; for example, for the heating rate

$$\dot{Q} = \sum_k \dot{Q}_k .$$

For the discussion of the 2nd law we will consider the $\dot{Q}_k$ as heat crossing the system boundary at locations where the boundary has the temperature $T_k$.

18. 1st Law for Reversible Processes

The form (11) of the first law is valid for all closed systems. When only reversible processes occur within the system, so that the system is in equilibrium states at any time, the equation can be simplified as follows: From our discussion of equilibrium states we know that for reversible processes the system will be homogeneous and that all changes must be very slow, which implies very small velocities relative to the center of mass of the system. Therefore, kinetic energy, which is proportional to velocity squared, can be ignored, $K = 0$. Stirring, which transfers energy by moving the fluid and friction, is irreversible, hence in a reversible process only moving boundary work can be transferred, where piston friction is absent. As long as the system location does not change, the potential energy does not change, and we can set $E_{pot} = \text{const}$.

With all this, for reversible (quasi-static) processes the 1st law of thermodynamics reduces to

$$\frac{dU}{dt} = \dot{Q} - p \frac{dV}{dt} \qquad \text{or} \qquad U_2 - U_1 = Q_{12} - \int_1^2 p \, dV , \tag{27}$$

where the second form results from integration over the process duration.
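As a hedged illustration of the reversible form of the 1st law, consider a reversible adiabatic compression of an ideal gas with constant specific heat. With $\dot{Q} = 0$, $U = m c_v T$ and $p = m R T / V$, the balance $dU = -p \, dV$ becomes $dT/dV = -(R / c_v) \, T / V$, which the sketch integrates numerically and compares with the closed-form result $T_2 = T_1 (V_1 / V_2)^{R / c_v}$. The property values and the midpoint integrator are assumptions made for this example.

```python
def adiabatic_temperature(T1, V1, V2, R, c_v, n=10000):
    """Integrate dT/dV = -(R / c_v) T / V from V1 to V2 with the midpoint method."""
    dV = (V2 - V1) / n
    T, V = T1, V1
    for _ in range(n):
        k1 = -(R / c_v) * T / V
        T_mid = T + 0.5 * dV * k1
        k2 = -(R / c_v) * T_mid / (V + 0.5 * dV)
        T += dV * k2
        V += dV
    return T

R, C_V = 287.0, 717.0        # J/(kg K); assumed values, roughly air
T1, V1, V2 = 300.0, 1.0, 0.5 # K, m^3, m^3 (compression to half the volume)

T2_numeric = adiabatic_temperature(T1, V1, V2, R, C_V)
T2_exact = T1 * (V1 / V2) ** (R / C_V)
print(T2_numeric, T2_exact)
```

The work done on the gas raises its internal energy, and hence its temperature, although no heat is exchanged.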

19. Entropy and the Trend to Equilibrium

The original derivation of the 2nd law is due to Sadi Carnot (1796–1832) and Rudolf Clausius (1822–1888), where discussions of thermodynamic engines combined with Observation 5 were used to deduce the 2nd law [1]. Even today, many textbooks present variants of their work [2,3,4,5]. As discussed in the introduction, we aim at introducing entropy without the use of heat engines, only using the 5 observations.

We briefly summarize our earlier statements on processes in closed systems: a closed system can be manipulated by exchange of work and heat with its surroundings only. In nonequilibrium—that is, irreversible—processes, when all manipulation stops, the system will undergo further changes until it reaches a final equilibrium state. This equilibrium state is stable, that is the system will not leave the equilibrium state spontaneously. It requires new action—exchange of work or heat with the surroundings—to change the state of the system. This paragraph is summarized in Observations 1–3.

The following nonequilibrium processes are well-known from experience, and will be used in the considerations below:

- (a) Work can be transferred without restriction, by means of gears and levers. However, in transfer some work might be lost to friction (Observation 4).

- (b) Heat goes from hot to cold. When two bodies at different temperatures are brought into thermal contact, heat will flow from the hotter to the colder body until both reach their common equilibrium temperature (Observation 5).

The process from an initial nonequilibrium state to the final equilibrium state requires some time. However, if the actions on the system (only work and heat!) are sufficiently slow, the system has enough time to adapt and will be in equilibrium states at all times. We speak of quasi-static—or, reversible—processes. When the slow manipulation is stopped at any time, no further changes occur.

If a system is not manipulated, that is, there is neither heat nor work exchange between the system and its surroundings, we speak of an isolated system. The behavior of isolated systems described above (a change occurs until a stable state is reached) can be described mathematically by an inequality. The final stable state must be a maximum (alternatively, a minimum) of a suitable property describing the system. For a meaningful description of systems of arbitrary size, the new property should scale with system size, that is, it must be extensive.

We call this new extensive property entropy, denoted $S$, and write an inequality for the isolated system,

$$\frac{dS}{dt} = \dot{\Sigma}_{gen} \ge 0 . \tag{28}$$

$\dot{\Sigma}_{gen}$ is called the entropy generation rate. The entropy generation rate is positive in nonequilibrium ($\dot{\Sigma}_{gen} > 0$), and vanishes in equilibrium ($\dot{\Sigma}_{gen} = 0$). The new Equation (28) states that in an isolated system the entropy will grow in time ($dS/dt > 0$) until the stable equilibrium state is reached ($dS/dt = 0$). Non-zero entropy generation, $\dot{\Sigma}_{gen} > 0$, describes the irreversible process towards equilibrium, for example, through internal heat transfer and friction. There is no entropy generation in equilibrium, where entropy is constant. Since entropy only grows before the isolated system reaches its equilibrium state, the latter is a maximum of entropy.

While this equation describes the observed behavior in principle, it does not give a hint at what the newly introduced quantities $S$ and $\dot{\Sigma}_{gen}$ (entropy and entropy generation rate) are, or how they can be determined. Hence, an important part of the following discussion concerns the relation of entropy to measurable quantities, such as temperature, pressure, and specific volume. Moreover, it will be seen that entropy generation rate describes the irreversibility in, for example, heat transfer across finite temperature difference, or frictional flow.

The above postulation of an inequality is based on phenomenological arguments. The discussion of irreversible processes has shown that over time all isolated systems will evolve to a unique equilibrium state. The first law alone does not suffice to describe this behavior. Nonequilibrium processes aim to reach equilibrium, and the inequality is required to describe the clear direction in time.

As introduced here, entropy and the above rate equation describe irreversible processes, where initial nonequilibrium states evolve towards equilibrium. Not only is there no reason to restrict entropy to equilibrium, but rather, in this philosophy, it is essential to define entropy as a nonequilibrium property.

In the next sections we will extend the second law to non-isolated systems, identify entropy as a measurable property—at least in equilibrium states—and discuss entropy generation in irreversible processes.
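The growth of entropy in an isolated system can be sketched with two identical incompressible bodies in thermal contact. The example anticipates a result that only follows from the Gibbs equation derived later, namely that the equilibrium entropy of an incompressible body with constant heat capacity $C = m c$ is $S = C \ln T$ up to a constant, and it assumes local equilibrium within each body. The linear heat transfer law and all numbers are hypothetical.

```python
import math

C = 1000.0     # heat capacity m*c of each body in J/K (assumed)
K_COND = 10.0  # hypothetical conductance between the bodies in W/K
DT = 1.0       # time step in s

def total_entropy(T_A, T_B):
    """S = C ln T_A + C ln T_B, up to an additive constant."""
    return C * (math.log(T_A) + math.log(T_B))

T_A, T_B = 400.0, 300.0
history = [total_entropy(T_A, T_B)]
for _ in range(300):
    Q_dot = K_COND * (T_A - T_B)  # heat flows from the hotter to the colder body
    T_A -= Q_dot * DT / C         # energy lost by body A ...
    T_B += Q_dot * DT / C         # ... is gained by body B (isolated system)
    history.append(total_entropy(T_A, T_B))

print(T_A, T_B, history[-1] - history[0])
```

The total energy stays constant, the temperatures approach their common equilibrium value, and the entropy increases in every step, levelling off as equilibrium is approached.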

20. Entropy Transfer

In non-isolated systems, which are manipulated by exchange of heat and work with their surroundings, we expect an exchange of entropy with the surroundings which must be added to the entropy inequality. We write

$$\frac{dS}{dt} = \dot{\Gamma} + \dot{\Sigma}_{gen} , \qquad \dot{\Sigma}_{gen} \ge 0 , \tag{29}$$

where $\dot{\Gamma}$ is the entropy transfer rate. This equation states that the change of entropy in time ($dS/dt$) is due to transport of entropy over the system boundary ($\dot{\Gamma}$) and generation of entropy within the system boundaries ($\dot{\Sigma}_{gen}$). This form of the second law is valid for all processes in closed systems. The entropy generation rate is positive ($\dot{\Sigma}_{gen} > 0$) for irreversible processes, and it vanishes ($\dot{\Sigma}_{gen} = 0$) in equilibrium and for reversible processes, where the system is in equilibrium states at all times.

All real technical processes are somewhat irreversible, since friction and heat transfer cannot be avoided. Reversible processes are idealizations that can be used to study the principal behavior of processes, and best performance limits.

We apply Observation 1: Since a closed system can only be manipulated through the exchange of heat and work with the surroundings, the transfer of any other property, including the transfer of entropy, must be related to heat and work, and must vanish when heat and work vanish. Therefore the entropy transfer $\dot{\Gamma}$ can only be of the form

$$\dot{\Gamma} = \sum_k \beta_k \dot{Q}_k - \sum_j \gamma_j \dot{W}_j . \tag{30}$$

Recall that total heat and work transfer are the sum of many different contributions, $\dot{Q} = \sum_k \dot{Q}_k$ and $\dot{W} = \sum_j \dot{W}_j$. In the above formulation, the coefficients $\beta_k$ and $\gamma_j$ are used to distinguish heat and work transfer at different conditions at that part of the system boundary where the transfer ($\dot{Q}_k$ or $\dot{W}_j$) takes place. Since work and heat scale with the size of the system, and entropy is extensive, the coefficients $\beta_k$ and $\gamma_j$ must be intensive, that is, independent of system size.

At this point, the coefficients $\beta_k$, $\gamma_j$ depend in an unknown manner on properties describing the state of the system and its interaction with the surroundings. While the relation between the entropy transfer rate $\dot{\Gamma}$ and the energy transfer rates $\dot{Q}_k$, $\dot{W}_j$ is not necessarily linear, the form (30) is chosen to clearly indicate that entropy transfer is zero when no energy is transferred, $\dot{\Gamma} = 0$ if $\dot{Q}_k = \dot{W}_j = 0$ (isolated system).

With this expression for entropy transfer, the 2nd law assumes the form

$$\frac{dS}{dt} + \sum_j \gamma_j \dot{W}_j - \sum_k \beta_k \dot{Q}_k = \dot{\Sigma}_{gen} \ge 0 . \tag{31}$$

This equation gives the mathematical formulation of the trend to equilibrium for a non-isolated closed system (exchange of heat and work, but not of mass). The next step is to identify entropy $S$ and the coefficients $\beta_k$, $\gamma_j$ in the entropy transfer rate $\dot{\Gamma}$ in terms of quantities we can measure or control.

21. Direction of Heat Transfer

A temperature reservoir is defined as a large body in equilibrium whose temperature does not change when heat is removed or added (this requires that the reservoir’s thermal mass, $m c_v$, approaches infinity).

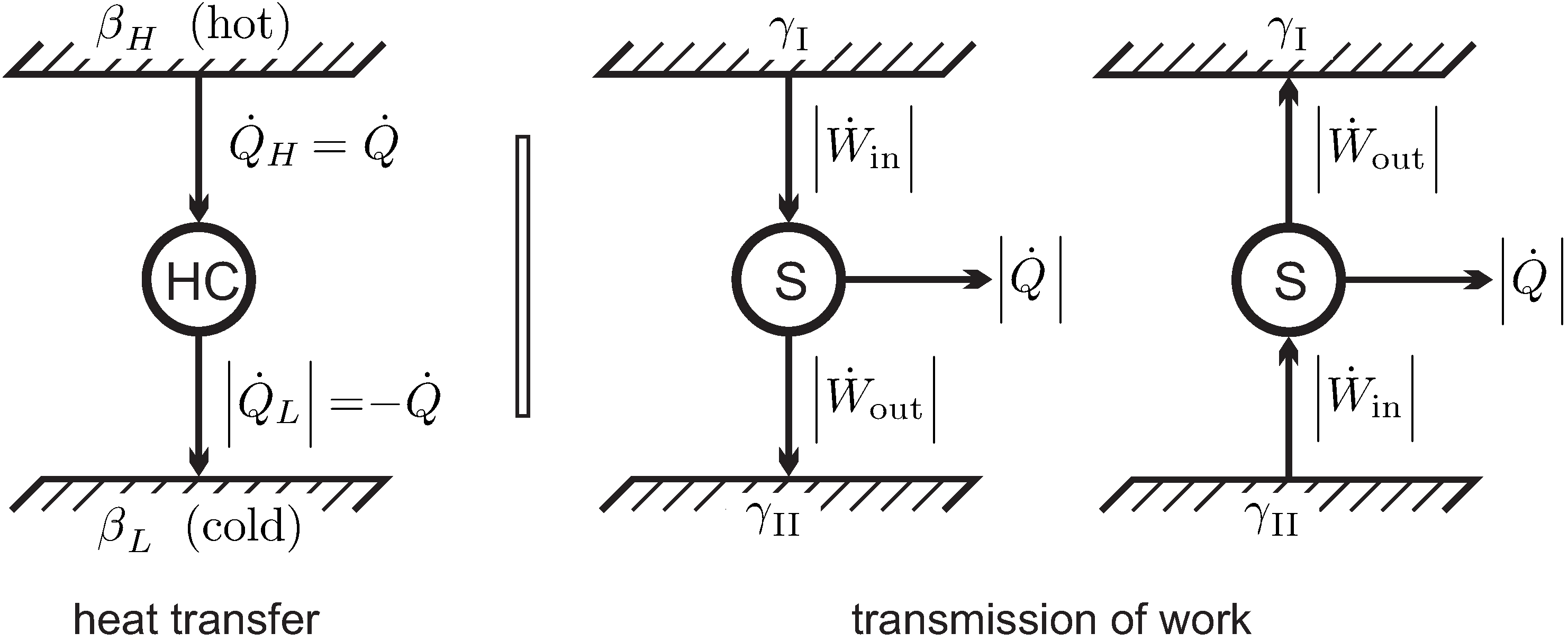

We consider heat transfer between two reservoirs of temperatures $T_H$ and $T_L$, where $T_H$ is the temperature of the hotter reservoir. The heat is transferred through a heat conductor (HC), which is the thermodynamic system to be evaluated. A pure steady state heat transfer problem is studied, where the conductor receives the heat flows $\dot{Q}_H$ and $\dot{Q}_L$, and exchanges no work with the surroundings, $\dot{W} = 0$.

The left part of Figure 7 shows a schematic of the heat transfer process. For steady state conditions no change over time is observed in the conductor, so that $dE/dt = dS/dt = 0$. We emphasize that for this process the heat conductor will be in a nonequilibrium state, for example, it could be a solid heat conductor with an imposed temperature gradient, or, possibly, a gas in a state of natural convection in the gravitational field. To proceed with the argument, it is not necessary to quantify energy and entropy of the conductor, since both do not change in steady state processes.

For steady state, the first and second law (11, 31) applied to the heat conductor HC reduce to

$$\dot{Q}_H = -\dot{Q}_L = \dot{Q} , \qquad -\beta_H \dot{Q}_H - \beta_L \dot{Q}_L = \dot{\Sigma}_{gen} \ge 0 .$$

Here, $\beta_H$ and $\beta_L$ are the values of $\beta$ at the hot and cold sides of the conductor, respectively. Combining both we have

$$(\beta_L - \beta_H) \, \dot{Q} = \dot{\Sigma}_{gen} \ge 0 .$$

We apply Observation 5: Since heat must go from hot to cold (from reservoir $T_H$ to reservoir $T_L$), the heat must be positive, $\dot{Q} = \dot{Q}_H > 0$, which requires $\beta_L - \beta_H > 0$ for this irreversible process. Thus, the coefficient $\beta$ must be smaller for the part of the system which is in contact with the hotter reservoir, $\beta_H < \beta_L$. This must be so irrespective of the values of any other properties at the system boundaries, that is, independent of the conductor material or its mass density, or any other material properties, and also for all possible values $\dot{Q}$ of the heat transferred. It follows that $\beta_H$, $\beta_L$ must depend on temperature of the respective reservoir only.

Moreover, $\beta$ must be a decreasing function of reservoir temperature alone, if temperature of the hotter reservoir is defined to be higher.

22. Work Transfer and Friction Loss

For the discussion of the coefficient $\gamma$ we turn our attention to the transmission of work. The right part of Figure 7 shows two “work reservoirs” characterized by different values $\gamma_I$, $\gamma_{II}$ between which work is transmitted by a steady state system S.

We apply Observation 4. The direction of work transfer is not restricted: by means of gears and levers work can be transmitted from low to high force and vice versa, and from low to high velocity and vice versa. Therefore, transmission might occur from $\gamma_I$ to $\gamma_{II}$, and as well from $\gamma_{II}$ to $\gamma_I$. Accordingly, there is no obvious interpretation of the coefficient $\gamma$. Indeed, we will soon be able to remove the coefficient $\gamma$ from the discussion.

According to the second part of Observation 4, friction might occur in the transmission. Thus, in the transmission process we expect some work being lost to frictional heating, therefore $|\dot{W}_{out}| \le |\dot{W}_{in}|$. In order to keep the transmission system at constant temperature, some heat must be removed to a reservoir (typically the outside environment). Work and heat for both cases are indicated in the figure, the arrows indicate the direction of transfer.

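The first law for both transmission processes reads (steady state, $dE/dt = 0$)

$$0 = -|\dot{Q}| - |\dot{W}_{out}| + |\dot{W}_{in}| ,$$

where the signs account for the direction of the flows. Since work loss in transmission means $|\dot{W}_{out}| \le |\dot{W}_{in}|$, this implies that heat must leave the system, $\dot{Q} = -|\dot{Q}| \le 0$, as indicated in the figure.

Due to the different direction of work in the two processes considered, the second law (31) gives different conditions for both situations (steady state, $dS/dt = 0$),

$$-\gamma_I |\dot{W}_{in}| + \gamma_{II} |\dot{W}_{out}| + \beta |\dot{Q}| \ge 0 , \qquad \gamma_I |\dot{W}_{out}| - \gamma_{II} |\dot{W}_{in}| + \beta |\dot{Q}| \ge 0 ,$$

where, as we have seen in the previous section, $\beta$ is a measure for the temperature of the reservoir that accepts the heat. Elimination of the heat $|\dot{Q}|$ between first and second laws gives two inequalities,

$$(\beta - \gamma_I) |\dot{W}_{in}| - (\beta - \gamma_{II}) |\dot{W}_{out}| \ge 0 , \qquad (\beta - \gamma_{II}) |\dot{W}_{in}| - (\beta - \gamma_I) |\dot{W}_{out}| \ge 0 ,$$

or, after some reshuffling,

$$(\beta - \gamma_I) \ge (\beta - \gamma_{II}) \frac{|\dot{W}_{out}|}{|\dot{W}_{in}|} , \qquad (\beta - \gamma_{II}) \ge (\beta - \gamma_I) \frac{|\dot{W}_{out}|}{|\dot{W}_{in}|} . \tag{38}$$

Combining the two equations (38) gives the two inequalities

$$(\beta - \gamma_I) \ge (\beta - \gamma_I) \left( \frac{|\dot{W}_{out}|}{|\dot{W}_{in}|} \right)^2 , \qquad (\beta - \gamma_{II}) \ge (\beta - \gamma_{II}) \left( \frac{|\dot{W}_{out}|}{|\dot{W}_{in}|} \right)^2 .$$

From these follows, since $0 \le |\dot{W}_{out}| / |\dot{W}_{in}| \le 1$, that $(\beta - \gamma)$ must be non-negative, $(\beta - \gamma) \ge 0$.

Both inequalities (38) must hold for arbitrary transmission systems, that is for all $0 \le |\dot{W}_{out}| / |\dot{W}_{in}| \le 1$, and all temperatures of the heat receiving reservoir, that is for all $\beta$. For a reversible transmission, where $|\dot{W}_{out}| = |\dot{W}_{in}|$, both inequalities (38) can only hold simultaneously if $\gamma_I = \gamma_{II}$. Accordingly, $\gamma$ must be a constant, and $(\beta - \gamma) \ge 0$ for all $\beta$.

With $\gamma$ as a constant, the entropy balance (31) becomes

$$\frac{dS}{dt} + \gamma \dot{W} - \sum_k \beta_k \dot{Q}_k = \dot{\Sigma}_{gen} \ge 0 ,$$

where $\dot{W} = \sum_j \dot{W}_j$ is the net power for the system. The energy balance solved for power, $\dot{W} = \sum_k \dot{Q}_k - \frac{dE}{dt}$, allows us to eliminate work, so that the 2nd law becomes

$$\frac{d(S - \gamma E)}{dt} - \sum_k (\beta_k - \gamma) \, \dot{Q}_k = \dot{\Sigma}_{gen} \ge 0 .$$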
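The argument on work transmission can be probed with a small numerical check. The sketch assumes that the two transmission inequalities take the form $(\beta - \gamma_I) \ge r \, (\beta - \gamma_{II})$ and $(\beta - \gamma_{II}) \ge r \, (\beta - \gamma_I)$, where $r$ denotes the ratio of delivered to received power, $0 \le r \le 1$. The symbols $\beta$, $\gamma_I$, $\gamma_{II}$ and $r$, as well as the function name and the numbers, are notation assumed for this illustration.

```python
def transmission_allowed(beta, gamma_I, gamma_II, r):
    """True if both transmission directions satisfy the assumed inequalities
    for a ratio r of delivered to received power (0 <= r <= 1)."""
    a_I, a_II = beta - gamma_I, beta - gamma_II
    return a_I >= r * a_II and a_II >= r * a_I

# Different coefficients for the two work reservoirs: the reversible
# limit r = 1 fails in one of the two directions.
unequal = transmission_allowed(beta=2.0, gamma_I=0.5, gamma_II=1.0, r=1.0)

# Equal coefficients with beta - gamma >= 0: every ratio 0 <= r <= 1 is admissible.
equal = all(transmission_allowed(2.0, 0.5, 0.5, r) for r in (0.0, 0.5, 1.0))

# Equal coefficients but beta - gamma < 0: a lossy transmission (r < 1) fails.
negative = transmission_allowed(beta=0.25, gamma_I=0.5, gamma_II=0.5, r=0.5)

print(unequal, equal, negative)
```

Only a common value of the work coefficient together with a non-negative difference $\beta - \gamma$ passes all cases, in line with the conclusion drawn from Observation 4.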

23. Entropy and Thermodynamic Temperature

Without loss of generality, we can absorb the energy term $\gamma E$ into entropy, that is, we set

$$(S - \gamma E) \to S ;$$

this is equivalent to setting $\gamma = 0$. Note that, since energy is conserved, any multiple of energy can be added to entropy without changing the principal features of the 2nd law; obviously, the most elegant formulation is the one where work does not appear.

Moreover, we have found that $(\beta - \gamma)$ is a non-negative monotonically decreasing function of temperature, and we define thermodynamic temperature as

$$T = \frac{1}{\beta - \gamma} > 0 .$$

Note that non-negativity of inverse temperature implies that temperature itself is strictly positive.

With this, we have the 2nd law in the form

$$\frac{dS}{dt} - \sum_k \frac{\dot{Q}_k}{T_{R,k}} = \dot{\Sigma}_{gen} \ge 0 . \tag{44}$$

The above line of arguments relied solely on the temperatures of the reservoirs with which the system exchanges heat; in order to emphasize this, we write the reservoir temperatures as $T_{R,k}$.

The form (44) is valid for any system S, in any state, that exchanges heat with reservoirs which have thermodynamic temperatures $T_{R,k}$. The entropy of the system is $S$, and it should be clear from the derivation that it is defined for any state, equilibrium, or nonequilibrium! Thermodynamic temperature must be positive to ensure dissipation of work due to friction. The discussion below will show that for systems in local thermal equilibrium, the reservoir temperature can be replaced by the system boundary temperature.
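For a steady state system the 2nd law in terms of reservoir temperatures gives the entropy generation rate directly as $\dot{\Sigma}_{gen} = -\sum_k \dot{Q}_k / T_{R,k}$. The sketch below evaluates this for a heat conductor between two reservoirs; the function name and the numbers are illustrative assumptions.

```python
def entropy_generation_rate(heat_flows):
    """Steady state (dS/dt = 0): sigma_gen = -sum(Q_k / T_k), where each Q_k (in W)
    enters the system from a reservoir at thermodynamic temperature T_k (in K)."""
    return -sum(Q / T for Q, T in heat_flows)

T_H, T_L, Q = 400.0, 300.0, 100.0  # illustrative values

# Heat enters from the hot reservoir and leaves to the cold one.
hot_to_cold = entropy_generation_rate([(+Q, T_H), (-Q, T_L)])
# Hypothetical reversed direction: heat enters from the cold reservoir.
cold_to_hot = entropy_generation_rate([(-Q, T_H), (+Q, T_L)])

print(hot_to_cold)  # positive: allowed
print(cold_to_hot)  # negative: would violate the 2nd law
```

With positive thermodynamic temperatures, heat flowing from hot to cold generates entropy, while the reversed flow would require negative entropy generation and is therefore excluded.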

24. Entropy in Equilibrium: Gibbs Equation

Equilibrium entropy can be related to measurable quantities in a straightforward manner, so that it is measurable as well, albeit indirectly. We consider an equilibrium system undergoing a quasi-static process, in contact with a heater at temperature $T$; for instance we might think of a carefully controlled resistance heater. Due to the equilibrium condition, the temperature of the system must be $T$ as well (0th law!), and the entropy generation vanishes, $\dot{\Sigma}_{gen} = 0$. Then, Equation (44) for entropy becomes

$$\frac{dS_E}{dt} = \frac{\dot{Q}}{T} ,$$

while for this case the 1st law (27) reads

$$\frac{dU_E}{dt} = \dot{Q} - p \frac{dV_E}{dt} .$$

In both equations we added the index $E$ to highlight the equilibrium state; $p$ is the homogeneous pressure of the equilibrium state.

We are only interested in an infinitesimal step of the process, of duration $dt$. Eliminating the heat between the two laws, we find

$$T \, dS_E = dU_E + p \, dV_E . \tag{47}$$

This relation is known as the Gibbs equation, named after Josiah Willard Gibbs (1839–1903). The Gibbs equation is a differential relation between properties of the system and valid for all simple substances in equilibrium states.

We note that $T$ and $p$ are intensive, and $U$, $V$ and $S$ are extensive properties. The specific entropy $s = S/m$ can be computed from the Gibbs equation for specific properties, which is obtained by division of (47) by the constant mass $m$. We ignore the subscript $E$ for streamlined notation, so that the Gibbs equation for specific properties reads

$$T \, ds = du + p \, dv . \tag{48}$$

Solving the first law for reversible processes (27) for heat and comparing the result with the Gibbs equation we find, with $\dot{Q} \, dt = \delta Q$,

$$dS = \frac{\delta Q}{T} .$$

We recall that heat is a path function, that is, $\delta Q$ is an inexact differential, but entropy is a state property, that is, $dS$ is an exact differential. In the language of mathematics, the inverse thermodynamic temperature $1/T$ serves as an integrating factor for $\delta Q$, such that $dS = \delta Q / T$ becomes an exact differential.

It must be noted that one can always find an integrating factor for a differential form of two variables. Hence, it must be emphasized that thermodynamic temperature $T$ remains an integrating factor if additional contributions to reversible work (conjugate work variables) are considered in the first law, which leads to the Gibbs equation in the form $T \, dS = dU + \sum_i x_i \, dY_i$, where $\{x_i, Y_i\}$ are pairs of conjugate work variables, such as $\{p, V\}$. For instance, this becomes clear in Carathéodory’s axiomatic treatment of thermodynamics (for adiabatic processes) [9], which is briefly discussed in Appendix A.

From the above, we see that for reversible processes $\delta Q = T \, dS$. Accordingly, the total heat exchanged in a reversible process can be computed from temperature and entropy as the area below the process curve in the temperature-entropy diagram (T-S-diagram),

$$Q_{12} = \int_1^2 T \, dS .$$

This is analogous to the computation of the work in a reversible process as $W_{12} = \int_1^2 p \, dV$.
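That entropy is a state property can be illustrated by integrating the Gibbs equation along two different paths between the same equilibrium states. The sketch assumes an ideal gas with constant specific heat, for which $ds = c_v \, dT / T + R \, dv / v$; the property values, the states and the two path shapes are assumptions made for this example.

```python
import math

R, C_V = 287.0, 717.0  # J/(kg K); assumed constants, roughly air

def entropy_change(path, n=20000):
    """Integrate ds = (du + p dv) / T = c_v dT / T + R dv / v along path(t),
    0 <= t <= 1, using midpoint values of T and v on each of the n segments."""
    ds = 0.0
    T_a, v_a = path(0.0)
    for i in range(1, n + 1):
        T_b, v_b = path(i / n)
        ds += C_V * (T_b - T_a) / (0.5 * (T_a + T_b))
        ds += R * (v_b - v_a) / (0.5 * (v_a + v_b))
        T_a, v_a = T_b, v_b
    return ds

T1, v1 = 300.0, 0.8  # state 1: K, m^3/kg
T2, v2 = 450.0, 0.4  # state 2

def straight(t):
    """Straight line in the (T, v) plane."""
    return T1 + (T2 - T1) * t, v1 + (v2 - v1) * t

def curved(t):
    """A different, curved path between the same end states."""
    return T1 + (T2 - T1) * t**2, v1 + (v2 - v1) * t**3

exact = C_V * math.log(T2 / T1) + R * math.log(v2 / v1)
print(entropy_change(straight), entropy_change(curved), exact)
```

Both paths give the same entropy difference, which agrees with the closed-form expression $s_2 - s_1 = c_v \ln (T_2 / T_1) + R \ln (v_2 / v_1)$ for this model gas, whereas the heat $\int T \, ds$ would differ between the paths.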

25. Measurability of Properties

Some properties are easy to measure, and thus quite intuitive, for example, pressure $p$, temperature $T$ and specific volume $v$. Accordingly, the thermal equation of state, $p(T, v)$, can be measured with relative ease, for systems in equilibrium. Other properties cannot be measured directly, for instance internal energy $u$, which must be determined by means of applying the first law to a calorimeter, or equilibrium entropy $s$, which must be determined from other properties by integration of the Gibbs Equation (48).

The Gibbs equation gives a differential relation between properties for any simple substance. Its analysis with the tools of multivariable calculus shows that specific internal energy $u$, specific enthalpy $h = u + pv$, specific Helmholtz free energy $f = u - Ts$ and specific Gibbs free energy $g = h - Ts$ are potentials when considered as functions of particular variables. The evaluation of the potentials leads to a rich variety of relations between thermodynamic properties. In particular, these relate properties that are more difficult, or even impossible, to measure to those that are easier to measure, and thus reduce the necessary measurements to determine data for all properties. The discussion of the thermodynamic potentials energy $u$, enthalpy $h$, Helmholtz free energy $f$ and Gibbs free energy $g$, based on the Gibbs equation, is one of the highlights of equilibrium thermodynamics [8,10]. Here, we refrain from a full discussion and only consider one important result in the next section.

To avoid misunderstanding, we point out that the following Section 26, Section 27, Section 28, Section 29 concern thermodynamic properties of systems in equilibrium states. We also stress that entropy and internal energy are system properties also in nonequilibrium states.

26. A Useful Relation

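The Gibbs equation formulated for the Helmholtz free energy $f = u - Ts$ arises from a Legendre transform $T\,ds = d(Ts) - s\,dT$ in the Gibbs equation as

$$df = -s\,dT - p\,dv .$$

Hence, $f(T,v)$ is a thermodynamic potential [8,10], with

$$\left(\frac{\partial f}{\partial T}\right)_v = -s, \qquad \left(\frac{\partial f}{\partial v}\right)_T = -p, \qquad \left(\frac{\partial s}{\partial v}\right)_T = \left(\frac{\partial p}{\partial T}\right)_v . \tag{52}$$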

The last equation is the Maxwell relation for this potential; it results from exchanging the order of derivatives, $\frac{\partial^2 f}{\partial T\,\partial v} = \frac{\partial^2 f}{\partial v\,\partial T}$. Remarkably, the Maxwell relation (52) contains the expression $\left(\frac{\partial p}{\partial T}\right)_v$, which can be interpreted as the change of pressure p with temperature T in a process at constant volume v. Since p, T and v can be measured, this expression can be found experimentally. In fact, measurement of $(p, T, v)$ gives the thermal equation of state $p(T,v)$, and we can say that $\left(\frac{\partial p}{\partial T}\right)_v$ can be determined from the thermal equation of state. The other expression, $\left(\frac{\partial s}{\partial v}\right)_T$, cannot be measured by itself, since it contains entropy s, which cannot be measured directly. Hence, with the Maxwell relation the expression $\left(\frac{\partial s}{\partial v}\right)_T$ can be measured indirectly, through measurement of the thermal equation of state.

To proceed, we consider energy and entropy in the Gibbs Equation (48) as functions of temperature and volume, $u(T,v)$, $s(T,v)$. We take the partial derivative of the Gibbs equation with respect to v while keeping T constant, to find

$$T\left(\frac{\partial s}{\partial v}\right)_T = \left(\frac{\partial u}{\partial v}\right)_T + p . \tag{53}$$

With the Maxwell relation (52) to replace the entropy derivative $\left(\frac{\partial s}{\partial v}\right)_T$ in (53), we find an equation for the volume dependence of internal energy that is entirely determined by the thermal equation of state $p(T,v)$,

$$\left(\frac{\partial u}{\partial v}\right)_T = T\left(\frac{\partial p}{\partial T}\right)_v - p . \tag{54}$$

Since internal energy cannot be measured directly, the left hand side cannot be determined experimentally by itself. The equation states that the volume dependence of the internal energy is nevertheless known from measurement of the thermal equation of state.
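To make this relation concrete, the sketch below evaluates the expression T (∂p/∂T)_v − p numerically for two thermal equations of state. The van der Waals model and all constants are assumptions introduced here for illustration (they are not taken from the text, and the numbers are not reference data); the function names are hypothetical.

```python
# Illustrative van der Waals constants (CO2-like order of magnitude, not reference data).
R_GAS = 0.1889   # kJ/(kg K)
A_VDW = 0.188    # kPa m^6/kg^2
B_VDW = 9.7e-4   # m^3/kg


def pressure_vdw(T, v):
    """Van der Waals thermal equation of state p(T, v) in kPa (assumed model)."""
    return R_GAS * T / (v - B_VDW) - A_VDW / v**2


def pressure_ideal(T, v):
    """Ideal gas thermal equation of state p(T, v) in kPa."""
    return R_GAS * T / v


def du_dv_const_T(pressure, T, v, dT=1e-2):
    """Evaluate T (dp/dT)_v - p, using a central difference for dp/dT."""
    dp_dT = (pressure(T + dT, v) - pressure(T - dT, v)) / (2.0 * dT)
    return T * dp_dT - pressure(T, v)


T_state, v_state = 300.0, 0.05          # K, m^3/kg
vdw_value = du_dv_const_T(pressure_vdw, T_state, v_state)
ideal_value = du_dv_const_T(pressure_ideal, T_state, v_state)
print(vdw_value, A_VDW / v_state**2)    # numerical and analytical value for van der Waals
print(ideal_value)                      # close to zero for the ideal gas
```

For the van der Waals model the analytical result is a/v², so the internal energy depends on volume, while for the ideal gas the expression vanishes. Only pressure, temperature and volume enter the computation.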

27. Thermodynamic and Ideal Gas Temperatures

In the derivation of the 2nd law, thermodynamic temperature T appears as the factor of proportionality between the heat transfer rate and the entropy transfer rate. In previous sections, we have seen that this definition of thermodynamic temperature agrees with the direction of heat transfer: heat flows from hot (high T) to cold (low T) by itself. The heat flow aims at equilibrating the temperature within any isolated system that is left to itself, so that two systems in thermal equilibrium have the same thermodynamic temperature. Moreover, the discussion of internal friction showed that thermodynamic temperature must be positive.

While we have claimed agreement of thermodynamic temperature with the ideal gas temperature scale in Section 9, we have yet to give proof of this. To do so, we use (54) together with the experimental result stated in Section 14, that for an ideal gas the internal energy does not depend on volume, but only on temperature (see also Section 35). This implies, for the ideal gas,

$$\left(\frac{\partial u}{\partial v}\right)_T = T\left(\frac{\partial p}{\partial T}\right)_v - p = 0 .$$

Accordingly, ideal gas pressure must be a linear function of the thermodynamic temperature T,

$$p = \pi(v)\,T .$$

The volume dependency $\pi(v)$ must be measured, for example, in a piston-cylinder system in contact with a temperature reservoir, so that the temperature is constant. Measurements show that pressure is inversely proportional to volume, so that

$$p = \frac{R\,T}{v}$$

with a constant $R$ that fixes the thermodynamic temperature scale.
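The step from the vanishing volume dependence of the internal energy to the linear temperature dependence of the pressure can be spelled out as an integration at fixed volume. The name $\pi(v)$ for the integration function is a notational choice made for this sketch.

```latex
\begin{aligned}
T\left(\frac{\partial p}{\partial T}\right)_v = p
\quad&\Longrightarrow\quad
\left(\frac{\partial \ln p}{\partial \ln T}\right)_v = 1 \\
&\Longrightarrow\quad
\ln p = \ln T + \ln \pi(v) \\
&\Longrightarrow\quad
p = \pi(v)\,T .
\end{aligned}
```

The integration "constant" $\ln \pi(v)$ may depend on the variable held fixed, which is why the volume dependence has to come from a separate measurement.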

The Kelvin temperature scale, named after William Thomson, Lord Kelvin (1824–1907), historically used the triple point of water ($0.01\,^\circ\mathrm{C}$, $0.6117\ \mathrm{kPa}$) as reference. The triple point is the unique equilibrium state at which a substance can coexist in all three phases, solid, liquid and vapor. The Kelvin scale assigns the value of $273.16\ \mathrm{K}$ to this unique point, which can be reproduced with relative ease in laboratories, so that calibration of thermometers is consistent. With this choice, the constant $R$ is the specific gas constant $R = \bar{R}/M$, where $\bar{R} = 8.314\ \mathrm{kJ/(kmol\,K)}$ is the universal gas constant, and M is the molar mass with unit $\mathrm{kg/kmol}$ (e.g., $M \approx 4\ \mathrm{kg/kmol}$ for helium, $M \approx 18\ \mathrm{kg/kmol}$ for water, $M \approx 29\ \mathrm{kg/kmol}$ for air), so that, as already stated in Section 11,

$$p\,v = R\,T .$$

In 2018, the temperature scale became independent of the triple point of water. Instead, it is now set by fixing the Boltzmann constant $k = 1.380649 \times 10^{-23}\ \mathrm{J/K}$, which is the gas constant per particle, that is, $k = \bar{R}/N_A$, where $N_A$ is the Avogadro constant [24]. At the same time, other SI units were redefined by assigning fixed values to physical constants, including the Avogadro constant, which defines the number of particles in one mole [25].
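The relation between the fixed constants and the gas constants can be checked with a few lines of arithmetic. In the sketch below, the Boltzmann and Avogadro constants are the exact SI values; the molar masses are rounded values added here for illustration, and the function name is hypothetical.

```python
K_BOLTZMANN = 1.380649e-23     # J/K, exact by SI definition
N_AVOGADRO = 6.02214076e23     # 1/mol, exact by SI definition

# Universal gas constant in J/(mol K), numerically equal to kJ/(kmol K).
R_UNIVERSAL = K_BOLTZMANN * N_AVOGADRO

# Rounded molar masses in kg/kmol (illustrative values, not taken from the text).
MOLAR_MASS = {"helium": 4.003, "water": 18.015, "air": 28.97}


def specific_gas_constant(molar_mass):
    """Specific gas constant R = R_universal / M in kJ/(kg K), for M in kg/kmol."""
    return R_UNIVERSAL / molar_mass


for name, molar_mass in MOLAR_MASS.items():
    print(f"{name}: R = {specific_gas_constant(molar_mass):.4f} kJ/(kg K)")
```

Since both constants are fixed by definition, the universal gas constant is fixed as well, and only the molar mass of a substance remains to be determined.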

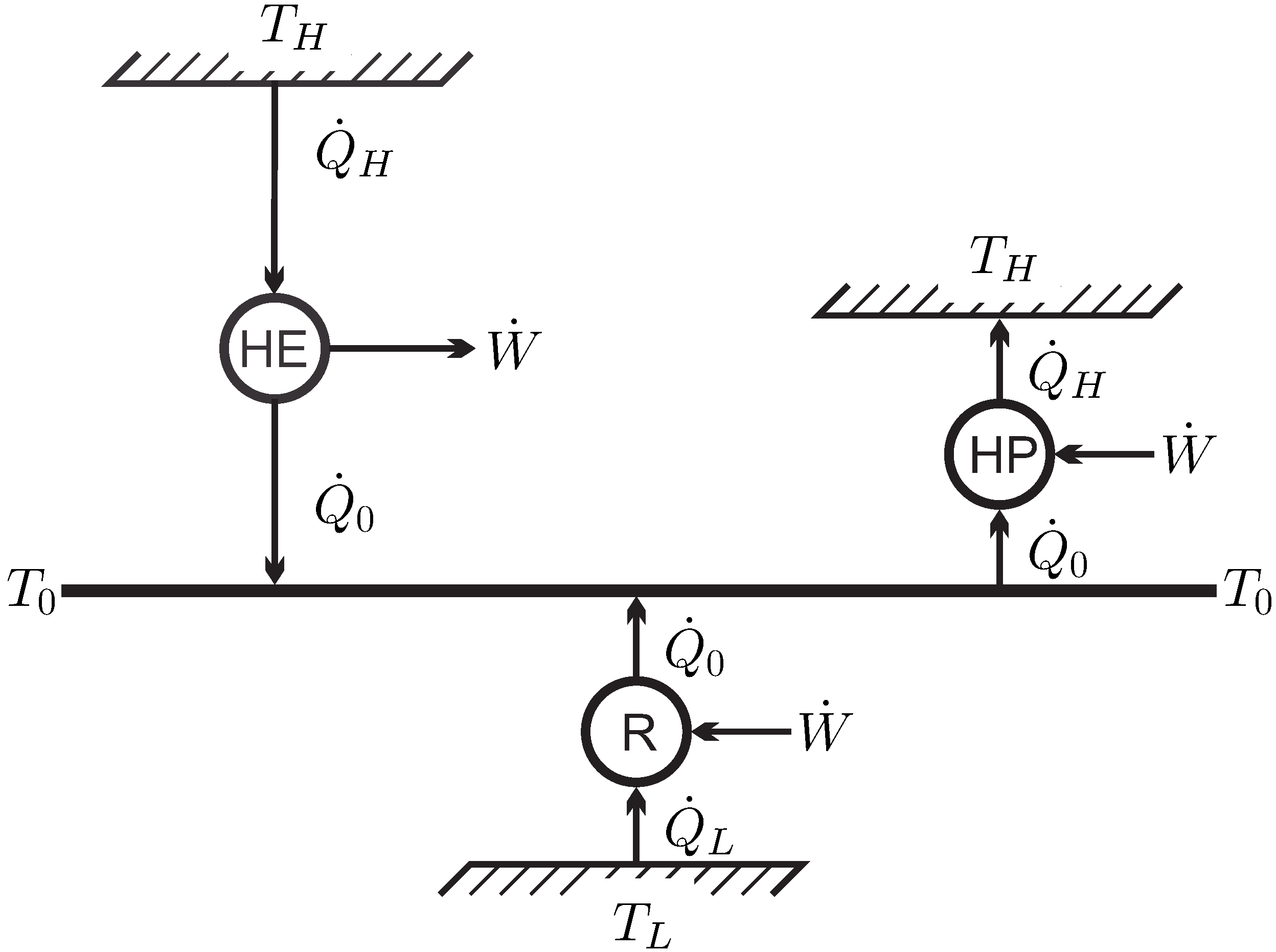

The historic development of the 2nd law relied on the use of Carnot engines, that is, fully reversible engines operating between two reservoirs, and the Carnot process, which is a particular realization of a Carnot engine. Evaluation of the Carnot cycle for an ideal gas then shows the equivalence of ideal gas temperature and thermodynamic temperature. In the present treatment, all statements about engines are derived from the laws of thermodynamics, after these laws have been established from simple experience.

The positivity of thermodynamic temperature implies positive ideal gas temperature and hence positive gas pressures. In Section 41, positive thermodynamic temperature is linked to mechanical stability. The ideal gas equation provides an intuitive example for this: a gas under negative pressure would collapse, and hence be in an unstable state.

28. Measurement of Properties

Only a few thermodynamic properties can be measured easily, namely temperature T, pressure p, and volume v. These are related by the thermal equation of state, which is therefore relatively easy to measure.

The specific heat can be determined from careful measurements. These calorimetric measurements employ the first law, where the change in temperature in response to the heat (or work) added to the system is measured.

Other important quantities, however, for example, u, h, f, g and s, cannot be measured directly. We briefly study how they can be related to measurable quantities, that is, T, p, v and $c_v$, by means of the Gibbs equation and the differential relations derived above.

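We consider the measurement of internal energy. The differential of $u(T,v)$ is

$$du = \left(\frac{\partial u}{\partial T}\right)_v dT + \left(\frac{\partial u}{\partial v}\right)_T dv .$$

Therefore, the internal energy $u(T,v)$ can be determined by integration when $c_v = \left(\frac{\partial u}{\partial T}\right)_v$ and $\left(\frac{\partial u}{\partial v}\right)_T$ are known from measurements. By (54) the term $\left(\frac{\partial u}{\partial v}\right)_T$ is known through measurement of the thermal equation of state, and we can write

$$du = c_v\,dT + \left[T\left(\frac{\partial p}{\partial T}\right)_v - p\right] dv .$$

Integration is performed from a reference state $(T_0, v_0)$ to the actual state $(T, v)$. Since internal energy is a point function, its differential is exact, and the integration is independent of the path chosen. The easiest integration is in two steps, first at constant volume $v_0$ from $(T_0, v_0)$ to $(T, v_0)$, then at constant temperature T from $(T, v_0)$ to $(T, v)$,

$$u(T,v) - u(T_0,v_0) = \int_{T_0}^{T} c_v(T', v_0)\,dT' + \int_{v_0}^{v} \left[T\left(\frac{\partial p}{\partial T}\right)_{v'} - p(T,v')\right] dv' .$$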

Accordingly, in order to determine the internal energy $u(T,v)$ for all T and v, it is sufficient to measure the thermal equation of state $p(T,v)$ for all $(T,v)$ and the specific heat $c_v(T,v_0)$ for all temperatures T but only one volume $v_0$. For the ideal gas, the volume contribution vanishes, and the above reduces to (20).

The internal energy can only be determined up to a reference value $u_0 = u(T_0, v_0)$. As long as no chemical reactions occur, the energy constant can be arbitrarily chosen.
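The two-step integration can be sketched numerically. The example below assumes a van der Waals thermal equation of state and a constant specific heat at the reference volume; both the model and the numbers are illustrative assumptions, and all function names are hypothetical. Only p(T, v) and c_v at the single volume v0 are used as inputs.

```python
# Illustrative van der Waals constants and a constant specific heat (assumptions).
R_GAS, A_VDW, B_VDW = 0.1889, 0.188, 9.7e-4   # kJ/(kg K), kPa m^6/kg^2, m^3/kg
CV_REF = 0.65                                  # kJ/(kg K), c_v "measured" at v0


def pressure(T, v):
    """Thermal equation of state p(T, v) in kPa (assumed van der Waals model)."""
    return R_GAS * T / (v - B_VDW) - A_VDW / v**2


def cv_at_v0(T):
    """Specific heat at the reference volume v0; constant in this sketch."""
    return CV_REF


def trapezoid(func, lower, upper, n=2000):
    """Composite trapezoidal rule with n intervals."""
    step = (upper - lower) / n
    total = 0.5 * (func(lower) + func(upper))
    for k in range(1, n):
        total += func(lower + k * step)
    return total * step


def internal_energy_change(T, v, T0, v0, dT=1e-2):
    """u(T, v) - u(T0, v0): heat at constant v0 first, then expand at constant T."""
    heating = trapezoid(cv_at_v0, T0, T)

    def volume_term(v_prime):
        dp_dT = (pressure(T + dT, v_prime) - pressure(T - dT, v_prime)) / (2.0 * dT)
        return T * dp_dT - pressure(T, v_prime)

    expansion = trapezoid(volume_term, v0, v)
    return heating + expansion


delta_u = internal_energy_change(T=400.0, v=0.5, T0=300.0, v0=0.05)
exact = CV_REF * (400.0 - 300.0) + A_VDW * (1.0 / 0.05 - 1.0 / 0.5)
print(delta_u, exact)   # numerical result and closed-form van der Waals result
```

For this model the closed-form result is available, which makes it a convenient check of the integration path; for measured data the two integrals would be evaluated on tabulated values instead.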

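Entropy $s(T,v)$ follows by integration of the Gibbs equation, for example, in the form, again with (54),

$$ds = \frac{1}{T}\,du + \frac{p}{T}\,dv = \frac{c_v}{T}\,dT + \left(\frac{\partial p}{\partial T}\right)_v dv$$

as

$$s(T,v) - s(T_0,v_0) = \int_{T_0}^{T} \frac{c_v(T',v_0)}{T'}\,dT' + \int_{v_0}^{v} \left(\frac{\partial p}{\partial T}\right)_{v'} dv' .$$

Entropy, too, can be determined only up to a reference value $s_0 = s(T_0,v_0)$, which only plays a role when chemical reactions occur; the third law of thermodynamics fixes the scale properly.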
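The entropy integration follows the same two-step path as the one for internal energy. The sketch below again assumes a van der Waals equation of state, now with a hypothetical linear specific heat c_v(T, v0) = C0 + C1 T; all constants and names are illustrative assumptions.

```python
import math

# Illustrative van der Waals constants (assumptions, not reference data).
R_GAS, A_VDW, B_VDW = 0.1889, 0.188, 9.7e-4   # kJ/(kg K), kPa m^6/kg^2, m^3/kg
C0, C1 = 0.55, 4.0e-4                          # hypothetical c_v(T, v0) = C0 + C1*T


def pressure(T, v):
    """Thermal equation of state p(T, v) in kPa (assumed van der Waals model)."""
    return R_GAS * T / (v - B_VDW) - A_VDW / v**2


def cv_at_v0(T):
    """Specific heat at the reference volume v0 in kJ/(kg K)."""
    return C0 + C1 * T


def trapezoid(func, lower, upper, n=2000):
    """Composite trapezoidal rule with n intervals."""
    step = (upper - lower) / n
    total = 0.5 * (func(lower) + func(upper))
    for k in range(1, n):
        total += func(lower + k * step)
    return total * step


def entropy_change(T, v, T0, v0, dT=1e-2):
    """s(T, v) - s(T0, v0): integrate c_v/T at v0, then (dp/dT)_v at constant T."""
    thermal = trapezoid(lambda t: cv_at_v0(t) / t, T0, T)

    def dp_dT(v_prime):
        return (pressure(T + dT, v_prime) - pressure(T - dT, v_prime)) / (2.0 * dT)

    volumetric = trapezoid(dp_dT, v0, v)
    return thermal + volumetric


delta_s = entropy_change(T=400.0, v=0.5, T0=300.0, v0=0.05)
exact_s = (C0 * math.log(400.0 / 300.0) + C1 * (400.0 - 300.0)
           + R_GAS * math.log((0.5 - B_VDW) / (0.05 - B_VDW)))
print(delta_s, exact_s)   # numerical result and closed-form result for this model
```

As for the internal energy, the result is fixed only up to the value at the reference state, which is set to zero in this sketch.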

After u and s are determined, enthalpy h, Helmholtz free energy f, and Gibbs free energy g simply follow by means of their definitions. Thus the measurement of all thermodynamic quantities requires only the measurement of the thermal equation of state $p(T,v)$ for all $(T,v)$ and the measurement of the specific heat at constant volume $c_v(T,v_0)$ for all temperatures, but only one volume, for example, in a constant volume calorimeter. All other quantities follow from differential relations that are based on the Gibbs equation, and integration [8,10].

Above we have outlined the necessary measurements to fully determine all relevant thermodynamic properties for systems in equilibrium. We close this section by pointing out that all properties can be determined if just one of the thermodynamic potentials $u(s,v)$, $h(s,p)$, $f(T,v)$, $g(T,p)$ is known [8,10]. Since all properties can be derived from the potential, the expression for the potential is sometimes called the fundamental relation.
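How a single potential encodes all properties can be illustrated with the Helmholtz free energy f(T, v). The sketch below assumes an ideal gas with constant specific heat as the fundamental relation and recovers pressure, entropy and internal energy by numerical differentiation; the constants, the reference state and the function names are assumptions chosen for illustration.

```python
import math

R_GAS, CV = 0.287, 0.718                   # kJ/(kg K), air-like illustrative values
T0, V0, U0, S0 = 300.0, 0.86, 0.0, 0.0     # arbitrary reference state and constants


def helmholtz(T, v):
    """Assumed fundamental relation f(T, v) = u - T s for an ideal gas, constant c_v."""
    u = U0 + CV * (T - T0)
    s = S0 + CV * math.log(T / T0) + R_GAS * math.log(v / V0)
    return u - T * s


def properties_from_potential(f, T, v, hT=1e-3, hv=1e-6):
    """Recover s = -(df/dT)_v, p = -(df/dv)_T and u = f + T s by central differences."""
    s = -(f(T + hT, v) - f(T - hT, v)) / (2.0 * hT)
    p = -(f(T, v + hv) - f(T, v - hv)) / (2.0 * hv)
    u = f(T, v) + T * s
    return {"p": p, "s": s, "u": u}


props = properties_from_potential(helmholtz, T=350.0, v=0.5)
print(props["p"], R_GAS * 350.0 / 0.5)     # recovered pressure and p = R T / v
print(props["u"], U0 + CV * (350.0 - T0))  # recovered and direct internal energy
```

The thermal and the caloric equation of state both follow from the one function `helmholtz`, which is what makes it a fundamental relation in the sense described above.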

29. Property Relations for Entropy

For incompressible liquids and solids, the specific volume is constant, hence $dv = 0$. The caloric equation of state (59) implies $du = c_v\,dT$ and the Gibbs equation reduces to $T\,ds = c_v\,dT$. For constant specific heat, $c_v = \mathrm{const}$, integration gives entropy as explicit function of temperature,

$$s(T) = c_v \ln\frac{T}{T_0} + s_0 ,$$

where $s_0$ is the entropy at the reference temperature $T_0$.

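For the ideal gas, where $p = RT/v$ and the specific heat depends on T only, entropy assumes the familiar form

$$s(T,v) = \int_{T_0}^{T} \frac{c_v(T')}{T'}\,dT' + R \ln\frac{v}{v_0} + s_0 .$$

For a gas with constant specific heat, the integration can be performed to give

$$s(T,v) = c_v \ln\frac{T}{T_0} + R \ln\frac{v}{v_0} + s_0 .$$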

Of course, a substance behaves as an ideal gas only for sufficiently low pressures or sufficiently high temperatures, so that these relations have a limited range of applicability. In particular for low temperatures, the ideal gas law and the equations above are not valid.
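The property relations for constant specific heat are easily evaluated. The sketch below assumes the entropy relations in temperature-volume form, s = c ln(T/T0) + s0 for the incompressible substance and s = c_v ln(T/T0) + R ln(v/v0) + s0 for the ideal gas; the function names and all numerical values are illustrative assumptions.

```python
import math


def entropy_incompressible(T, T0, c, s0=0.0):
    """Entropy of an incompressible substance with constant specific heat c."""
    return s0 + c * math.log(T / T0)


def entropy_ideal_gas(T, v, T0, v0, cv, R, s0=0.0):
    """Entropy of an ideal gas with constant c_v, relative to the state (T0, v0)."""
    return s0 + cv * math.log(T / T0) + R * math.log(v / v0)


# Water-like liquid heated from 293.15 K to 353.15 K (illustrative c in kJ/(kg K)).
ds_liquid = entropy_incompressible(353.15, 293.15, c=4.18)

# Air-like ideal gas compressed tenfold along a path with T v^(R/cv) = const.
cv, R = 0.718, 0.287                 # kJ/(kg K), illustrative
T1, v1, v2 = 300.0, 0.86, 0.086      # K, m^3/kg, m^3/kg
T2 = T1 * (v1 / v2) ** (R / cv)
ds_gas = entropy_ideal_gas(T2, v2, T1, v1, cv, R)

print(ds_liquid)   # positive: heating raises the entropy
print(ds_gas)      # vanishes up to rounding: the path is isentropic
```

The second case shows that the temperature rise and the volume reduction contribute with opposite signs, and cancel exactly along an isentropic path.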

30. Local Thermodynamic Equilibrium

In the previous sections, we considered homogeneous systems that undergo equilibrium processes, and discussed how to determine thermodynamic properties of systems in equilibrium states. To generalize for processes in inhomogeneous systems, we now consider the system as a compound of sufficiently small subsystems. The key assumption is that each of the subsystems is in local thermodynamic equilibrium, so that it can be characterized by the same state properties as a macroscopic equilibrium system. To simplify the proceedings somewhat, we consider numbered subsystems of finite size, and summation.

The exact argument for evaluation of local thermodynamic equilibrium considers infinitesimal cells $dV$, partial differential equations, and, to arrive at the equations for systems, integration. This detailed approach, known as Linear Irreversible Thermodynamics (LIT), is presented in Appendix C. The simplified argument below avoids the use of partial differential equations and aims only at the equations for systems; hence, it might be the preferred approach for use in an early undergraduate course [8].

Figure 8 indicates the splitting into subsystems, and highlights a subsystem i inside the system and a subsystem k at the system boundary. Temperature and pressure in the subsystems are given by $T_i$, $p_i$ and $T_k$, $p_k$, respectively. Generally, temperature and pressure are inhomogeneous, that is, adjacent subsystems have different temperatures and pressures. Accordingly, each subsystem interacts with its neighborhood through heat and work transfer as indicated by the arrows. Heat and work exchanged with the surroundings of the system are indicated as $\dot{Q}_k$ and $\dot{W}_k$.

Internal energy and entropy in a subsystem i are denoted as $E_i$ and $S_i$, and, since both are extensive, the corresponding quantities for the complete system are obtained by summation over all subsystems, $E = \sum_i E_i$, $S = \sum_i S_i$. Note that in the limit of infinitesimal subsystems the sums become integrals, as in Section 5. The balances of energy and entropy for a subsystem i read

where $\dot{Q}_i = \sum_j \dot{Q}_{i,j}$ is the net heat exchange, and $\dot{W}_i = \sum_j \dot{W}_{i,j}$ is the net work exchange for the subsystem. Here, the summation over j indicates the exchange of heat and work with the neighboring cells, such that, for example, $\dot{Q}_{i,j}$ is the heat that i receives from the neighboring cell j.
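The bookkeeping over subsystems can be illustrated with a minimal simulation. The sketch below assumes a chain of rigid cells (no work exchange) with equal, constant heat capacity, isolated from the outside, and a heat exchange rate between neighbors proportional to their temperature difference; these modeling choices, the numbers and the function names are assumptions made for illustration and are not part of the text.

```python
import math


def total_energy(temps, heat_capacity):
    """Sum of subsystem energies, C*T_i for each rigid cell with constant C."""
    return sum(heat_capacity * t for t in temps)


def total_entropy(temps, heat_capacity):
    """Sum of subsystem entropies, C*ln(T_i) for each cell, up to a constant."""
    return sum(heat_capacity * math.log(t) for t in temps)


def relax(temps, heat_capacity=1.0, conductance=0.1, dt=0.01, steps=20000):
    """Explicit Euler steps for a chain of cells exchanging heat with neighbors."""
    temps = list(temps)
    for _ in range(steps):
        q_net = [0.0] * len(temps)
        for i in range(len(temps) - 1):
            j = i + 1
            q_ij = conductance * (temps[j] - temps[i])  # heat rate cell i receives from j
            q_net[i] += q_ij
            q_net[j] -= q_ij                            # cell j receives the opposite amount
        temps = [t + dt * q / heat_capacity for t, q in zip(temps, q_net)]
    return temps


initial = [400.0, 350.0, 300.0, 250.0]   # K, hypothetical inhomogeneous initial state
final = relax(initial)
energy_drift = total_energy(final, 1.0) - total_energy(initial, 1.0)
entropy_gain = total_entropy(final, 1.0) - total_entropy(initial, 1.0)
print(final)          # temperatures approach the common mean value
print(energy_drift)   # internal exchanges cancel pairwise in the sum
print(entropy_gain)   # positive for heat transfer over finite temperature differences
```

Because every internal exchange appears twice with opposite sign, the sum over all cells retains only the exchange with the surroundings, which is zero in this isolated example.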

The boundary cells of temperatures $T_k$ are either adiabatically isolated to the outside, or they exchange heat with external systems (reservoirs), whose temperatures are, in fact, the temperatures that appear in the 2nd law in the form of Equation (44). For systems in local thermodynamic equilibrium, temperature differences at boundaries, such as those between a gas and a container wall, are typically extremely small. Hence, temperature jumps at boundaries are usually ignored, so that the temperature of a boundary cell equals that of the adjacent reservoir, and we will proceed with this assumption.

Appendix C.4 provides a more detailed discussion of temperature jumps and velocity slip within the context of Linear Irreversible Thermodynamics.