Abstract

High-dimensional variable selection is an important research topic in modern statistics. While methods using nonlocal priors have been thoroughly studied for variable selection in linear regression, the crucial high-dimensional model selection properties for nonlocal priors in generalized linear models have not been investigated. In this paper, we consider a hierarchical generalized linear regression model with the product moment nonlocal prior over coefficients and examine its properties. Under standard regularity assumptions, we establish strong model selection consistency in a high-dimensional setting, where the number of covariates is allowed to increase at a sub-exponential rate with the sample size. The Laplace approximation is used to compute the posterior probabilities, and the shotgun stochastic search procedure is suggested for exploring the posterior space. The proposed method is validated through simulation studies and illustrated by a real data example on functional activity analysis in an fMRI study for predicting Parkinson’s disease.

1. Introduction

With the increasing ability to collect and store data on a large scale, we face both opportunities and challenges in analyzing data with a large number of covariates per observation, the so-called high-dimensional problem. In this situation, variable selection is one of the most commonly used techniques, especially in radiological and genetic research, because imaging scans and gene sequencing naturally produce high-dimensional data. In the context of regression, when the number of covariates exceeds the sample size, the parameter estimation problem becomes ill-posed, and variable selection is usually the first step for dimension reduction.

A substantial body of work on variable selection has recently emerged from both frequentist and Bayesian perspectives. On the frequentist side, extensive studies on variable selection have appeared since the introduction of the least absolute shrinkage and selection operator (Lasso) [1]. Other penalization approaches for sparse model selection, including smoothly clipped absolute deviation (SCAD) [2], minimum concave penalty (MCP) [3] and many variations, have also been introduced. Most of these methods were first considered in the context of linear regression and then extended to generalized linear models. Because all of these methods share the basic goal of shrinkage toward sparse models, most of the frequentist methods can be interpreted from a Bayesian perspective, and many analogous Bayesian methods have been proposed. See, for example, [4,5,6], which discuss the connection between penalized likelihood-based methods and Bayesian approaches. These Bayesian methods employ local priors, which retain positive density at null parameter values, to achieve desirable shrinkage.

In this paper, we are interested in nonlocal densities [7] that are identically zero whenever a model parameter is equal to its null value. Compared to local priors, nonlocal prior distributions have relatively appealing properties for Bayesian model selection. In particular, nonlocal priors discard spurious covariates faster as the sample size grows, while preserving exponential learning rates to detect nontrivial coefficients [7]. Johnson and Rossell [8] and Shin et al. [9] study the behavior of nonlocal densities for variable selection in a linear regression setting. When the number of covariates is much smaller than the sample size, [10] establish the posterior convergence rate for nonlocal priors in a logistic regression model and suggest a Metropolis–Hastings algorithm for computation.

To the best of our knowledge, a rigorous investigation of high-dimensional posterior consistency properties for nonlocal priors has not been undertaken in the context of generalized linear regression. Although [11] investigated the model selection consistency of nonlocal priors in generalized linear models, they assumed a fixed dimension p. Motivated by this gap, our first goal is to examine the model selection property for nonlocal priors, in particular the product moment (pMOM) prior [8], in a high-dimensional generalized linear model. Bayesian approaches are known to face computational difficulties due to the non-conjugate structure of generalized linear regression. Hence, our second goal is to develop efficient algorithms for exploring the massive posterior space. Both goals are challenging because the posterior distributions are not available in closed form for this type of nonlocal prior.

As the main contributions of this paper, we first establish model selection consistency for generalized linear models with the pMOM prior on regression coefficients (Theorems 1–3) when the number of covariates grows at a sub-exponential rate of the sample size. Next, in terms of computation, we first approximate the posteriors via Laplace approximation and then implement an efficient shotgun stochastic search (SSS) algorithm for exploring the sparsity pattern of the regression coefficients. In particular, SSS-based methods have been shown to significantly reduce the computational time compared with standard Markov chain Monte Carlo (MCMC) algorithms in various settings [9,12,13]. We demonstrate that our model can outperform existing state-of-the-art methods, including both penalized likelihood and Bayesian approaches, in different settings. Finally, the proposed method is applied to a functional Magnetic Resonance Imaging (fMRI) data set for identifying aberrant brain activities and for predicting Parkinson’s disease.

The rest of the paper is organized as follows. Section 2 provides background material regarding generalized linear models and revisits the pMOM distribution. We detail strong selection consistency results in Section 3, and proofs are provided in Appendix A. The posterior computation algorithm is described in Section 4, and we show the performance of the proposed method and compare it with other competitors through simulation studies in Section 5. In Section 6, we conduct a real data analysis for predicting Parkinson’s disease and show that our method yields better prediction performance than the other contenders. We conclude with a discussion in Section 7.

2. Preliminaries

2.1. Model Specification for Logistic Regression

We first describe the framework for Bayesian variable selection in logistic regression, followed by our hierarchical model specification. Let $y = (y_1, \ldots, y_n)^T \in \{0,1\}^n$ be the binary response vector and $X \in \mathbb{R}^{n \times p}$ be the design matrix. Without loss of generality, we assume that the columns of $X$ are standardized to have zero mean and unit variance. Let $x_i \in \mathbb{R}^p$ denote the ith row vector of $X$ that contains the covariates for the ith subject. Let $\beta \in \mathbb{R}^p$ be the vector of regression coefficients. We first consider the following standard logistic regression model:

$$P(y_i = 1 \mid x_i, \beta) = \frac{\exp(x_i^T \beta)}{1 + \exp(x_i^T \beta)}, \quad i = 1, \ldots, n. \tag{1}$$

We work in a setting where the number of predictors p grows with the sample size n. Thus, the number of predictors is a function of n, i.e., $p = p_n$, but we denote it by p for notational simplicity.

Our goal is variable selection, i.e., the correct identification of all non-zero regression coefficients. In light of that, we denote a model by if and only if all the nonzero elements of are and denote , where is the cardinality of . For any matrix A, let denote the submatrix of containing the columns of indexed by model . In particular, for , we denote as the subvector of containing the entries of corresponding to model .

The class of pMOM densities [8] can be used for model selection through the following hierarchical model

Here is a nonsingular matrix, r is a positive integer referred to as the order of the density and is the normalizing constant independent of the positive constant . Please note that prior (2) is obtained as the product of the density of multivariate normal distribution and even powers of parameters, . This results in at , which is desirable because (2) is a prior for the nonzero elements of . Some standard regularity assumptions on the hyperparameters will be provided later in Section 3. In (3), is a positive integer restricting the size of the largest model, and a uniform prior is placed on the model space restricting our analysis to models having size less than or equal to . Similar structure has also been considered in [5,9,14]. An alternative is to use a complexity prior [15] that takes the form of

for some positive constants . The essence is to force the estimated model to be sparse by penalizing dense models. As noted in [9], the model selection consistency result based on the nonlocal priors derives strength directly from the marginal likelihood and does not require strong penalty over model size. This is indeed reflected in the simulation studies in [14], where the authors compare the model selection performance under uniform prior and complexity prior. The result under uniform prior is much better than that under complexity prior, as the complexity prior always tends to prefer the sparse models.
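To make the shape of the nonlocal prior (2) concrete, the following Python sketch evaluates a pMOM log-density in the simplest case. It assumes the scale matrix is the identity, so the density factorizes across coordinates, and it uses `tau` and `r` as names for the scale parameter and the order. The function names are illustrative and are not taken from the authors' R code. The normalization uses the fact that a N(0, tau) variable has 2r-th moment tau^r (2r-1)!!.

```python
import math


def double_factorial_odd(r):
    """Return (2r - 1)!! = 1 * 3 * ... * (2r - 1)."""
    out = 1
    for j in range(1, 2 * r, 2):
        out *= j
    return out


def pmom_log_density(beta, tau=1.0, r=1):
    """Log pMOM density under an identity scale matrix (an assumption here).

    Each coordinate b contributes  b^(2r) / (tau^r (2r-1)!!) * N(b; 0, tau).
    """
    total = 0.0
    for b in beta:
        if b == 0.0:
            # Nonlocal property: the density is exactly zero at the null value.
            return -math.inf
        log_normal = -0.5 * math.log(2 * math.pi * tau) - b * b / (2 * tau)
        log_moment = (2 * r * math.log(abs(b))
                      - r * math.log(tau)
                      - math.log(double_factorial_odd(r)))
        total += log_normal + log_moment
    return total


# The density vanishes whenever any coefficient sits at zero.
print(pmom_log_density([0.0, 1.0]))
# A nonzero coefficient receives positive density.
print(pmom_log_density([1.0], tau=1.0, r=1))
```

A local prior such as a plain normal would instead return a finite log-density at zero, which is the distinction the text draws between local and nonlocal priors.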

By the hierarchical model (1) to (3) and Bayes’ rule, the resulting posterior probability for model is denoted by,

where is the marginal density of , and is the marginal density of under model given by

where

is the log likelihood function. In particular, these posterior probabilities can be used to select a model by computing the posterior mode defined by

Of course, the closed form of these posterior probabilities cannot be obtained due to not only the nature of logistic regression but also the structure of nonlocal prior. Therefore, special efforts need to be devoted to both consistency analysis and computational strategy as we shall see in the following sections.
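As a small illustration of how the posterior mode is selected once (approximate) log marginal likelihoods are available, the sketch below normalizes them into posterior model probabilities. It assumes a uniform prior over an explicitly enumerated set of candidate models, so the prior cancels. The numerical values are hypothetical and chosen only to show the mechanics.

```python
import math


def posterior_model_probabilities(log_marginals):
    """Map {model: log marginal likelihood} to {model: posterior probability}.

    With a uniform prior over the listed models, the posterior is the
    normalized marginal likelihood; subtracting the maximum avoids underflow.
    """
    top = max(log_marginals.values())
    weights = {m: math.exp(v - top) for m, v in log_marginals.items()}
    total = sum(weights.values())
    return {m: w / total for m, w in weights.items()}


# Hypothetical log marginal likelihoods for three candidate models.
log_m = {
    frozenset({0}): -140.2,
    frozenset({0, 3}): -131.5,
    frozenset({0, 3, 7}): -134.0,
}
post = posterior_model_probabilities(log_m)
mode = max(post, key=post.get)  # posterior mode among the listed models
print(sorted(mode), round(post[mode], 3))
```

In practice the model space is far too large to enumerate, which is why a stochastic search over models is needed.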

2.2. Extension to Generalized Linear Model

We can easily extend our previous discussion on logistic regression to a generalized linear model (GLM) [16]. Given predictors and an outcome for , a probability density function (or probability mass function) of a generalized linear model has the following form of the exponential family

in which is a continuously differentiable function with respect to with nonzero derivative, is also a continuously differentiable function of , is some constant function of y, and is also known as the natural parameter that relates the response to the predictors through the linear function . The mean function is , where is the inverse of some chosen link function.
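As a worked check, logistic regression fits this exponential-family form. The derivation below assumes the density is written as $\exp\{a(\theta) y + b(\theta) + c(y)\}$; the function names $a$, $b$ and $c$ are our labels for the three functions described above and may differ from the original notation.

```latex
% Assumed notation: f(y | \theta) = \exp\{ a(\theta) y + b(\theta) + c(y) \}
\begin{align*}
P(y \mid \theta)
  &= \left(\frac{e^{\theta}}{1 + e^{\theta}}\right)^{y}
     \left(\frac{1}{1 + e^{\theta}}\right)^{1 - y}
   = \exp\{\theta y - \log(1 + e^{\theta})\}, \qquad y \in \{0, 1\}, \\
a(\theta) &= \theta, \qquad
b(\theta) = -\log(1 + e^{\theta}), \qquad
c(y) = 0, \\
E(y) &= -\frac{b'(\theta)}{a'(\theta)} = \frac{e^{\theta}}{1 + e^{\theta}}.
\end{align*}
```

The last line follows from differentiating the normalization condition with respect to $\theta$, and it recovers the usual inverse logit link.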

3. Main Results

In this section, we show that the proposed Bayesian model enjoys desirable theoretical properties. Let be the true model, which means that the nonzero locations of the true coefficient vector are . We consider to be a fixed vector. Let be the true coefficient vector and be the vector of the true nonzero coefficients. In the following analysis, we will focus on logistic regression, but our argument can be easily extended to any other GLM as well. In particular,

as the negative Hessian of , where , and

In the rest of the paper, we denote and for simplicity.

Before we establish our main results, the following notations are needed for stating our assumptions. For any , and mean the maximum and minimum of a and b, respectively. For any sequences and , we denote , or equivalently , if there exists a constant such that for all large n. We denote , or equivalently , if as . Without loss of generality, if and there exist constants such that , we denote . For a given vector , the vector -norm is denoted as . For any real symmetric matrix , and are the maximum and minimum eigenvalue of , respectively. To attain desirable asymptotic properties of our posterior, we assume the following conditions:

Condition (A1) and for some .

Condition (A1) ensures that our proposed method can accommodate high-dimensional settings in which the number of predictors grows at a sub-exponential rate in n. It also specifies the model-size parameter in the uniform prior (3), which restricts our analysis to a set of reasonably large models. Similar restrictions on the model size are common in the sparse estimation literature [4,5,9,17].

Condition (A2) For some constant and ,

and for any integer . Furthermore, .

Condition (A2) gives lower and upper bounds of and , respectively, where is a large model satisfying . The lower bound condition can be regarded as a restricted eigenvalue condition for -sparse vectors. Restricted eigenvalue conditions are routinely assumed in high-dimensional theory to guarantee some level of curvature of the objective function and are satisfied with high probability for sub-Gaussian design matrices [5]. Similar conditions have also been used in the linear regression literature [18,19,20]. The last assumption in Condition (A2) says that the magnitude of true signals is bounded above up to some constant, which allows the magnitude of signals to increase to infinity.

Condition (A3) For some constant ,

Condition (A3) gives a lower bound for nonzero signals, which is called the beta-min condition. In general, this type of condition is necessary for catching every nonzero signal. Please note that due to Conditions (A1) and (A2), the right-hand side of (9) decreases to zero as . Thus, it allows the smallest nonzero coefficients to tend to zero as we observe more data.

Condition (A4) For some small constant , the hyperparameters and r satisfy

Condition (A4) suggests appropriate conditions for the hyperparameter in (2). A similar assumption has also been considered in [9]. The scale parameter in the nonlocal prior density reflects the dispersion of the nonlocal prior density around zero, and implicitly determines the size of the regression coefficients that will be shrunk to zero [8,9]. For the below theoretical results, we assume that for simplicity, but our results are still valid for other choices of as long as and .

Theorem 1

(No super set). Under conditions (A1), (A2) and (A4),

Theorem 1 says that, asymptotically, our posterior will not overfit the model, i.e., not include unnecessarily many variables. Of course, it does not guarantee that the posterior will concentrate on the true model. To capture every significant variable, we require the magnitudes of nonzero entries in not to be too small. Theorem 2 shows that with an appropriate lower bound specified in Condition (A3), the true model will be the mode of the posterior.

Theorem 2

(Posterior ratio consistency). Under conditions (A1)–(A4) with for some small constant ,

Posterior ratio consistency is a useful property, especially when we are interested in point estimation with the posterior mode, but it does not indicate how much posterior probability is placed on the true model. In the following theorem, we state that our posterior achieves strong selection consistency, meaning that the posterior probability assigned to the true model t converges to 1. Since strong selection consistency implies posterior ratio consistency, it requires a slightly stronger lower bound on the magnitudes of the nonzero entries of the true coefficient vector, i.e., a larger value of the constant in Condition (A3), than Theorem 2 does.

Theorem 3

(Strong selection consistency). Under conditions (A1)–(A4) with for some small constant , the following holds:

4. Computational Strategy

In this section, we describe how to approximate the marginal density of the data and how to conduct the model selection procedure. Because of the integral formulation in (4), the posterior probabilities are not available in closed form. Hence, we use the Laplace approximation to compute the marginal densities and the resulting posterior model probabilities. A similar approach to computing posterior probabilities has been used in [8,9,10].

Please note that for any model , when , the normalization constant in (2) is given by . Let

For any model , the Laplace approximation of is given by

where the maximizer is obtained via the optimization function optim in R using a quasi-Newton method, and the accompanying symmetric matrix can be calculated as:

The above Laplace approximation can be used to compute the log of the posterior probability ratio between any given model and the true model $t$, and to select the model with the highest posterior probability.
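The Laplace step can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it takes the pMOM scale matrix to be the identity, replaces R's optim with a damped Newton iteration, and uses a heuristic starting point chosen from the sign of the likelihood score at zero. The names `log_joint`, `gradient`, `neg_hessian` and `laplace_log_marginal` are ours. The approximation used is $\log m \approx f(\hat\beta) + \tfrac{|k|}{2}\log(2\pi) - \tfrac12 \log\det V(\hat\beta)$, where $f$ is the log-likelihood plus log-prior and $V$ is its negative Hessian.

```python
import math
import numpy as np


def log_joint(beta, X, y, tau, r):
    """f(beta): logistic log-likelihood plus log pMOM prior (identity scale)."""
    eta = X @ beta
    loglik = np.sum(y * eta - np.logaddexp(0.0, eta))
    log_dfact = sum(math.log(j) for j in range(1, 2 * r, 2))  # log (2r-1)!!
    log_prior = (np.sum(2 * r * np.log(np.abs(beta)) - beta ** 2 / (2 * tau))
                 - beta.size * (0.5 * math.log(2 * math.pi * tau)
                                + r * math.log(tau) + log_dfact))
    return loglik + log_prior


def gradient(beta, X, y, tau, r):
    """Gradient of f with respect to beta."""
    prob = 1.0 / (1.0 + np.exp(-(X @ beta)))
    return X.T @ (y - prob) + 2 * r / beta - beta / tau


def neg_hessian(beta, X, tau, r):
    """V(beta) = -Hessian of f; positive definite away from zero."""
    prob = 1.0 / (1.0 + np.exp(-(X @ beta)))
    w = prob * (1.0 - prob)
    return X.T @ (X * w[:, None]) + np.diag(1.0 / tau + 2 * r / beta ** 2)


def laplace_log_marginal(X, y, tau=1.0, r=1, max_iter=200, tol=1e-10):
    """Return (approximate log marginal likelihood, maximizer of f)."""
    k = X.shape[1]
    # Heuristic start (an assumption of this sketch): begin in the orthant
    # suggested by the likelihood score at zero, away from the prior's zero.
    score = X.T @ (y - 0.5)
    beta = 0.5 * np.where(score >= 0, 1.0, -1.0)
    for _ in range(max_iter):
        f_now = log_joint(beta, X, y, tau, r)
        step = np.linalg.solve(neg_hessian(beta, X, tau, r),
                               gradient(beta, X, y, tau, r))
        t = 1.0
        # Halve the step while it would cross zero or noticeably decrease f.
        while t > 1e-12 and (
            np.any((beta + t * step) * beta <= 0)
            or log_joint(beta + t * step, X, y, tau, r) < f_now - 1e-9
        ):
            t *= 0.5
        beta = beta + t * step
        if np.max(np.abs(t * step)) < tol:
            break
    _, logdet = np.linalg.slogdet(neg_hessian(beta, X, tau, r))
    log_m = (log_joint(beta, X, y, tau, r)
             + 0.5 * k * math.log(2 * math.pi) - 0.5 * logdet)
    return log_m, beta
```

Because the pMOM log-density is concave only within each orthant, the iteration finds the mode in the orthant where it starts. A more careful implementation might try several starting points.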

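We then adopt the shotgun stochastic search (SSS) algorithm [9,12] to efficiently navigate through the massive model space and identify the global maximum. Using the Laplace approximations of the marginal probabilities in (11), the SSS method aims at exploring “interesting” regions of the resulting high-dimensional model space and quickly identifies regions of high posterior probability over models. Let $\mathrm{nbd}(k) = \{\Gamma^{+}, \Gamma^{-}, \Gamma^{0}\}$ denote the collection of all neighbors of model $k$, in which $\Gamma^{+} = \{k \cup \{j\} : j \notin k\}$, $\Gamma^{-} = \{k \setminus \{j\} : j \in k\}$, and $\Gamma^{0} = \{(k \setminus \{j\}) \cup \{l\} : j \in k,\ l \notin k\}$. The SSS procedure is described in Algorithm 1.

| Algorithm 1 Shotgun Stochastic Search (SSS) |
| --- |
| Choose an initial model $k^{(1)}$ |
| **for** $i = 1$ to $N - 1$ **do** |
| &nbsp;&nbsp;Compute $\pi(k \mid y)$ for all $k \in \mathrm{nbd}(k^{(i)})$ |
| &nbsp;&nbsp;Sample $k^{+}$, $k^{-}$ and $k^{0}$ from $\Gamma^{+}$, $\Gamma^{-}$ and $\Gamma^{0}$ with probabilities proportional to $\pi(k \mid y)$ |
| &nbsp;&nbsp;Sample the next model $k^{(i+1)}$ from $\{k^{+}, k^{-}, k^{0}\}$ with probability proportional to $\{\pi(k^{+} \mid y), \pi(k^{-} \mid y), \pi(k^{0} \mid y)\}$ |
| **end for** |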
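The search loop in Algorithm 1 can be sketched in plain Python. This is an illustrative version under our own assumptions rather than the SSS function from the BayesS5 package: models are frozensets of covariate indices, `log_score` is any user-supplied function returning a log posterior score up to a constant (for example a Laplace approximation), and the three neighbor sets are formed by adding, deleting and swapping one covariate.

```python
import math
import random


def neighbors(model, p, max_size):
    """Return the addition, deletion and swap neighbor lists of a model."""
    model = frozenset(model)
    outside = [j for j in range(p) if j not in model]
    add = [model | {j} for j in outside] if len(model) < max_size else []
    delete = [model - {j} for j in model]
    swap = [(model - {j}) | {l} for j in model for l in outside]
    return add, delete, swap


def sample_index(log_scores, rng):
    """Sample an index with probability proportional to exp(log_score)."""
    top = max(log_scores)
    weights = [math.exp(s - top) for s in log_scores]
    return rng.choices(range(len(log_scores)), weights=weights, k=1)[0]


def shotgun_stochastic_search(log_score, p, max_size, start, n_iter, seed=0):
    """Run SSS and return (best model seen, all evaluated scores)."""
    rng = random.Random(seed)
    current = frozenset(start)
    visited = {current: log_score(current)}
    for _ in range(n_iter):
        candidates, cand_scores = [], []
        for group in neighbors(current, p, max_size):
            if not group:
                continue  # e.g. no additions when the size cap is reached
            scores = []
            for m in group:
                if m not in visited:
                    visited[m] = log_score(m)  # cache every evaluated model
                scores.append(visited[m])
            idx = sample_index(scores, rng)
            candidates.append(group[idx])
            cand_scores.append(scores[idx])
        # Move to one of the (up to) three sampled neighbors.
        current = candidates[sample_index(cand_scores, rng)]
    best = max(visited, key=visited.get)
    return best, visited


# Toy score (hypothetical): penalize disagreement with a known target model.
target = frozenset({0, 3})
best, visited = shotgun_stochastic_search(
    lambda m: -5.0 * len(frozenset(m) ^ target),
    p=8, max_size=4, start={5}, n_iter=50, seed=1,
)
print(sorted(best), len(visited))
```

Caching every evaluated neighbor is what lets the search report the highest-scoring model seen anywhere along the path, not only the models the chain actually moved to.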

5. Simulation Studies

In this section, we demonstrate the performance of the proposed method in various settings. Let be the design matrix whose first columns correspond to the active covariates for which we have nonzero coefficients, while the rest correspond to the inactive ones with zero coefficients. In all the simulation settings, we generate for under the following two different cases of :

- Case 1: Isotropic design, where , i.e., no correlation imposed between different covariates.

- Case 2: Autoregressive correlated design, where for all . The correlations among different covariates are set to different values.

Following the simulation settings in [9,10], we consider the following two designs, each with the same sample size and number of predictors being either or 150:

- Design 1 (Dense model): The number of predictors and

- Design 2 (High-dimensional): The number of predictors and

We investigate the following two settings for the true coefficient vector to include different combinations of small and large signals.

- Setting 1: All the entries of are set to 3.

- Setting 2: All the entries of are generated from .

Finally, for given and , we sample from the following logistic model as in (1)
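The data-generating steps above can be sketched with NumPy. The sample size, dimension, number of active covariates and correlation level used here are placeholders, since the specific values are not reproduced in this text. The autoregressive covariance is assumed to take the common form with (i, j) entry `rho ** |i - j|`, and the function name is ours.

```python
import numpy as np


def simulate_logistic_data(n, p, n_active, signal=3.0, rho=0.0, seed=0):
    """Draw (X, y, beta, sigma) from a logistic model with Gaussian covariates.

    rho = 0 gives the isotropic design; rho > 0 gives an assumed AR(1)-type
    covariance with entries rho ** |i - j|.
    """
    rng = np.random.default_rng(seed)
    idx = np.arange(p)
    sigma = rho ** np.abs(idx[:, None] - idx[None, :])
    X = rng.multivariate_normal(np.zeros(p), sigma, size=n)
    beta = np.zeros(p)
    beta[:n_active] = signal  # first n_active covariates are the active ones
    prob = 1.0 / (1.0 + np.exp(-(X @ beta)))
    y = rng.binomial(1, prob)
    return X, y, beta, sigma


# Placeholder sizes, chosen only for illustration.
X, y, beta, sigma = simulate_logistic_data(n=100, p=20, n_active=4, rho=0.3, seed=1)
print(X.shape, int(y.sum()), sigma[0, :3])
```

Setting `signal` from a random draw instead of a constant would mimic the second coefficient setting.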

We will refer to our proposed method as “nonlocal” and its performance will then be compared with other existing methods including Spike and Slab prior-based model selection [21], empirical Bayesian LASSO (EBLasso) [22], Lasso [23] and SCAD [24]. The tuning parameters in the regularization approaches are chosen by 5-fold cross-validation. Spike and slab prior method is implemented via the BoomSpikeSlab package in R. For the nonlocal prior, the hyperparameters are set at , and we tune for four different values of . We choose the optimal by the mean squared prediction error through 5-fold cross-validation. Please note that this implies that is data-dependent and the resulting procedure is similar to an empirical-Bayesian approach in the high-dimensional Bayesian literature given the prior knowledge about the sparse true model [13]. For the SSS procedure, the initial model was set by randomly taking three coefficients to be active and the remaining to be inactive. The detailed steps for our method are coded in R and publicly available at https://github.com/xuan-cao/Nonlocal-Logistic-Selection. In particular, the stochastic search is implemented via the SSS function in the R package BayesS5.

To evaluate the performance of variable selection, the precision, sensitivity, specificity, Matthews correlation coefficient (MCC) [25] and mean-squared prediction error (MSPE) are reported in Tables 1 to 4, where each simulation setting is repeated 20 times. The criteria are defined as

where TP, TN, FP and FN are true positive, true negative, false positive and false negative, respectively. Here we denote , where is the estimated coefficient based on each method. For Bayesian methods, the usual GLM estimates based on the selected support are used as . We generated test samples with to calculate the MSPE.
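The four selection criteria can be computed from the true and estimated supports as in the sketch below, which uses the standard textbook definitions in terms of TP, TN, FP and FN. The function name and the convention of returning NaN for an undefined ratio are our choices. MSPE is omitted because it depends on the fitted probabilities rather than on the selected support.

```python
import math


def selection_metrics(true_support, selected_support, p):
    """Precision, sensitivity, specificity and MCC for a selected variable set."""
    true_support, selected_support = set(true_support), set(selected_support)
    tp = len(true_support & selected_support)
    fp = len(selected_support - true_support)
    fn = len(true_support - selected_support)
    tn = p - tp - fp - fn

    def ratio(num, den):
        return num / den if den > 0 else float("nan")

    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "precision": ratio(tp, tp + fp),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "mcc": ratio(tp * tn - fp * fn, mcc_den),
    }


# Example: 10 covariates, true support {0, 1, 2}, selected {0, 1, 5}.
metrics = selection_metrics({0, 1, 2}, {0, 1, 5}, p=10)
print(metrics)
```

In this example TP = 2, FP = 1, FN = 1 and TN = 6, so precision and sensitivity are both 2/3, specificity is 6/7 and MCC is 11/21.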

Table 1.

The summary statistics for Design 1 (Dense model design) are represented for each setting of the true regression coefficients under the first isotropic covariance case. Different setting means different choice of the true coefficient .

Table 2.

The summary statistics for Design 1 (Dense model design) are represented for each setting of the true regression coefficients under the second autoregressive covariance case. Different setting means different choice of the true coefficient .

Table 3.

The summary statistics for Design 2 (High-dimensional design) are represented for each setting of the true regression coefficients under the first isotropic covariance case. Different setting means different choice of the true coefficient .

Table 4.

The summary statistics for Design 2 (High-dimensional design) are represented for each setting of the true regression coefficients under the second autoregressive covariance case. Different setting means different choice of the true coefficient .

Based on the above simulation results, we notice that under the first isotropic covariance case, the nonlocal-based approach overall works better than other methods especially in the strong signal setting (i.e., Setting 1), where our method is able to consistently achieve perfect estimation accuracy. This is because as signal strength gets stronger, the consistency conditions of our method are easier to satisfy which leads to better performance. When the covariance is autoregressive, our method suffers from lower sensitivity compared with the frequentist approaches in high-dimensional design (Table 4), but still has higher precision, specificity and MCC. The poor precision of the regularization methods has also been discussed in previous literature in the sense that selection of the regularization parameter using cross-validation is optimal with respect to prediction but tends to include too many noise predictors [26]. Again we observe under the autoregressive design, the performance of our method improves as the true signals strengthen. To sum up, the above simulation studies indicate that the proposed method can perform well under a variety of configurations with different data generation mechanisms.

6. Application to fMRI Data Analysis

In this section, we apply the proposed model selection method to an fMRI data set for identifying aberrant functional brain activities to aid the diagnosis of Parkinson’s Disease (PD) [27]. The data consist of 70 PD patients and 50 healthy controls (HC). All demographic characteristics and clinical symptom ratings were collected before MRI scanning. In particular, we adopt the mini-mental state examination (MMSE) for cognitive evaluation and the Hamilton Depression Scale (HAMD) for measuring the severity of depression.

6.1. Image Feature Extraction

Functional imaging data for all subjects are collected and retrieved from the archive by neuroradiologists. Image preprocessing is carried out via Statistical Parametric Mapping (SPM12) running on the Matlab platform. For each subject, we first discard the first 5 time points to allow for signal equilibrium, and the remaining 135 images undergo slice-timing and head motion corrections. Four subjects with more than 2.5 mm maximum displacement in any of the three dimensions or of any angular motion are removed. The functional images are spatially normalized to the Montreal Neurological Institute space with mm cubic voxels and smoothed with a 4 mm full width at half maximum (FWHM) Gaussian kernel. We further regress out nuisance covariates and apply a temporal filter (0.01 Hz to 0.08 Hz) to diminish high-frequency noise.

Zang et al. [28] proposed the method of Regional Homogeneity (ReHo) to analyze characteristics of regional brain activity and to reflect the temporal homogeneity of neural activity. Since some preprocessing steps, especially spatial smoothing of the fMRI time series, may significantly change the ReHo magnitudes [29], preprocessed fMRI data without the spatial smoothing step are used for calculating ReHo. In particular, we focus on the mReHo maps obtained by dividing the ReHo value of each voxel by the mean ReHo of the whole brain. We further segment the mReHo maps and extract all 112 ROI signals based on the Harvard-Oxford atlas (HOA) using the Resting-State fMRI Data Analysis Toolkit.

Slow fluctuations in activity are fundamental features of the resting brain for determining correlated activity between brain regions and resting state networks. The relative magnitude of these fluctuations can discriminate between brain regions and subjects. The Amplitude of Low Frequency Fluctuations (ALFF) [30] is a related measure that quantifies the amplitude of these low-frequency oscillations. Leveraging the preprocessed data, we retain the standardized mALFF maps after dividing the ALFF of each voxel by the global mean ALFF. Using the HOA, we again obtain 112 mALFF values by extracting the ROI signals from the mALFF maps. Voxel-Mirrored Homotopic Connectivity (VMHC) quantifies functional homotopy by providing a voxel-wise measure of connectivity between hemispheres [31]. By segmenting the VMHC maps according to the HOA, we also extract 112 VMHC values.
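The normalization and ROI extraction described for the mReHo and mALFF maps can be sketched with NumPy as follows. This is a schematic illustration only: it assumes that an ROI signal is the mean of the normalized voxel values within each atlas region, that label 0 marks background, and that a boolean brain mask is available. The actual toolkit may define these steps differently, and the function name is hypothetical.

```python
import numpy as np


def roi_means_of_normalized_map(voxel_map, atlas_labels, brain_mask):
    """Divide a voxel map by its global (in-mask) mean, then average per ROI."""
    global_mean = voxel_map[brain_mask].mean()
    normalized = voxel_map / global_mean
    roi_ids = np.unique(atlas_labels[brain_mask & (atlas_labels > 0)])
    return {
        int(r): float(normalized[brain_mask & (atlas_labels == r)].mean())
        for r in roi_ids
    }


# Tiny synthetic example with two regions of two voxels each.
voxel_map = np.array([2.0, 4.0, 6.0, 8.0])
atlas_labels = np.array([1, 1, 2, 2])
brain_mask = np.ones(4, dtype=bool)
features = roi_means_of_normalized_map(voxel_map, atlas_labels, brain_mask)
print(features)
```

With a 112-region atlas, the returned dictionary would hold one feature per region for each map type.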

6.2. Results

Our candidate features consist of 336 radiomic variables along with all the clinical characteristics. We now consider a standard logistic regression model with the binary disease indicator as the outcome and all the radiomic variables together with five clinical factors as predictors. The proposed method and the competing methods are then applied to classify subjects based on these extracted features. The dataset is randomly divided into a training set (80%) and a testing set (20%) while maintaining the PD:HC ratio in both sets. For Bayesian methods, we first obtain the identified variables, and then evaluate the testing set performance using standard GLM estimates based on the selected features. The penalty parameters in all frequentist methods are tuned via 5-fold cross-validation in the training set. The hyperparameters for the proposed method are set as in the simulation studies.
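An 80/20 split that preserves the class ratio can be sketched as below. The group sizes in the example are illustrative; the text reports the initial cohort but not the per-group counts after motion-based exclusions, and the function name is ours.

```python
import random


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return (train indices, test indices) with the class ratio preserved."""
    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for cls in sorted(set(labels)):
        members = [i for i, lab in enumerate(labels) if lab == cls]
        rng.shuffle(members)
        n_test = round(test_fraction * len(members))
        test_idx.extend(members[:n_test])
        train_idx.extend(members[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Illustrative labels only: 70 PD and 50 HC subjects.
labels = ["PD"] * 70 + ["HC"] * 50
train_idx, test_idx = stratified_split(labels, test_fraction=0.2, seed=0)
print(len(train_idx), len(test_idx))
```

Splitting within each class separately is what keeps the PD:HC ratio the same in the training and testing sets.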

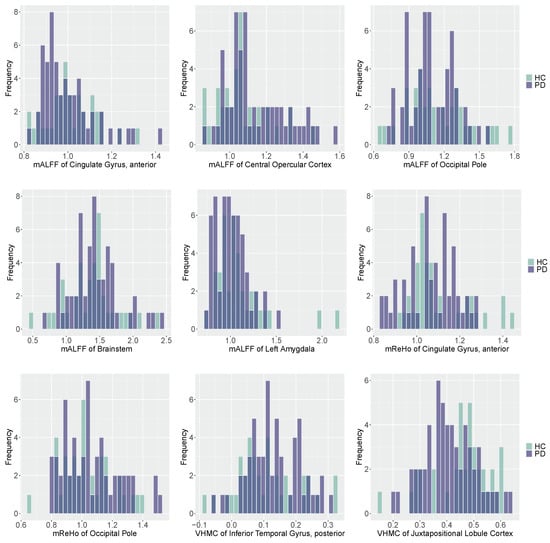

The HAMD score and nine radiomic features (five mALFFs, two ReHos and two VMHCs) are selected by the SSS procedure under the pMOM prior. In Figure 1, we plot the histograms of the selected radiomic features, with different colors representing different groups. The predictive performance of the various methods in the test set is summarized in Table 5. Table 5 shows that the nonlocal prior-based approach has better overall prediction performance than the other methods. Our nonlocal approach has higher precision and specificity than all the other methods, but yields a lower sensitivity than the frequentist approaches. Based on MCC, the most comprehensive measure, our method outperforms all the other methods.

Figure 1.

Histograms of selected radiomic features for PD and HC subjects with darker color representing overlapping values. Purple: PD group; Green: HC group.

7. Conclusions

In this paper, we propose a Bayesian hierarchical model with a pMOM prior specification over regression coefficients to perform variable selection in high-dimensional generalized linear models. The model selection consistency of our method is established under mild conditions, and the shotgun stochastic search algorithm can be used to implement the proposed approach. Our simulation and real data studies indicate that the proposed method performs better for variable selection than a variety of state-of-the-art competing methods. In the fMRI data analysis, our method is able to identify abnormal functional brain activities for PD that occur in regions of interest including the cingulate gyrus, central opercular cortex, occipital pole, brainstem, left amygdala, inferior temporal gyrus, and juxtapositional lobule cortex. These findings suggest disease-related alterations of functional activities that could inform physicians in early diagnosis and treatment. Our findings are also consistent with the altered functional features in cortical regions, brainstem, and limbic regions reported in previous studies [32,33,34,35].

Our fMRI study certainly has limitations. First, we would like to note that fMRI data are typically treated as spatio-temporal objects and a generalized linear model with spatially varying coefficients can be implemented for brain decoding [36]. However, in our application, for each subject, a total of 135 fMRI scans were obtained, each with the dimension of . If we take each voxel as a covariate to perform the whole-brain functional analysis, it would be computationally challenging and impractical given the extremely high dimension. Hence, we adopt the radiomics approach to extract three different types of features that can summarize the functional activity of the brain, and take these radiomic features as covariates in our generalized linear model. For future studies, we will focus on several regions of interest rather than the entire brain and take the spatio-temporal dependency among voxels into consideration.

Second, although ReHo, ALFF, and VMHC are different types of radiomic features that quantify the functional activity of the brain, it is possible that in some regions the three measures are highly correlated with each other. Our current theoretical and computational strategy can accommodate a reasonable amount of correlation among covariates, but might not work in the presence of a highly correlated structure. For future studies, we will first carefully examine the potential correlations among features and might retain only one feature for each region if significant correlations are detected.

One possible extension of our methodology is to address the potential misspecification of the scale hyperparameter. This parameter is of particular importance because it reflects the dispersion of the nonlocal density around zero and implicitly determines the size of the regression coefficients that will be shrunk to zero [8]. Cao et al. [14] investigated the model selection consistency for the hyper-pMOM priors in the linear regression setting, where an additional inverse-gamma prior is placed on the scale parameter. Wu et al. [11] proved the model selection consistency using the hyper-pMOM prior in generalized linear models, but assumed a fixed dimension p. In future work, we will consider this fully Bayesian approach and carefully examine the theoretical and empirical properties of the hyper-pMOM prior in the context of high-dimensional generalized linear regression. Our method could also be extended to a Bayesian approach for growth models in the context of non-linear regression [37], where the log-transformation is typically used to recover the additive structure. However, such a model does not fall into the category of GLMs and is therefore beyond the current setting of this paper. We leave it for future work.

Author Contributions

Conceptualization, X.C.; Methodology, X.C. and K.L.; Software, X.C.; Supervision, K.L.; Validation, K.L.; Writing—original draft, X.C.; Writing—review & editing, K.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Simons Foundation: No.635213; University of Cincinnati: Taft Summer Research Fellowship; National Research Foundation of Korea: No.2019R1F1A1059483; INHA UNIVERSITY Research Grant.

Acknowledgments

We would like to thank two referees for their valuable comments which have led to improvements of an earlier version of the paper. This research was supported by Simons Foundation’s collaboration grant (No.635213), Taft Summer Research Fellowship at University of Cincinnati and the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No.2019R1F1A1059483).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| Lasso | least absolute shrinkage and selection operator |
| SCAD | smoothly clipped absolute deviation |
| MCP | minimum concave penalty |
| pMOM | product moment |
| SSS | shotgun stochastic search |
| MCMC | Markov chain Monte Carlo |
| fMRI | functional magnetic resonance imaging |
| GLM | generalized linear model |
| EBLasso | empirical Bayesian Lasso |
| MCC | Matthews correlation coefficient |
| MSPE | mean-squared prediction error |
| PD | Parkinson’s disease |
| HC | healthy controls |
| MMSE | mini-mental state examination |
| HAMD | Hamilton Depression Scale |
| SPM12 | Statistical Parametric Mapping |
| FWHM | full width at half maximum |
| HOA | Harvard-Oxford atlas |
| ALFF | amplitude of low-frequency fluctuations |
| VMHC | voxel-mirrored homotopic connectivity |

Appendix A

Throughout the Supplementary Material, we assume that for any

and any model , there exists such that

for any . However, as stated in [5], there always exists satisfying inequality (A1), so it is not really a restriction. Since we will focus on sufficiently large n, can be considered an arbitrarily small constant, so we can always assume that .

Proof of Theorem 1.

Let and

where is the true model. We will show that

By Taylor’s expansion of around , which is the MLE of under the model , we have

for some such that . Furthermore, by Lemmas A.1 and A.3 in [5] and Condition (A2), with probability tending to 1,

for any and such that , where , for some constants . Please note that for such that ,

where the second inequality holds due to Condition (A2). It also holds for any such that by concavity of and the fact that maximizes .

Define the set then we have for some large and any , with probability tending to 1.

Please note that for and , we have

where denotes the expectation with respect to a multivariate normal distribution with mean and covariance matrix . It follows from Lemma 6 in the supplementary material for [8] that

where , and

for some constant . Further note that

for some constant and some large constant , by Conditions (A1), (A2) and (A4). Therefore, it follows from (A3) that

for some constant . Next, note that it follows from Lemma A.3 in the supplementary material for [5] that

Therefore,

by Conditions (A1) and (A2). Combining with (A4), we obtain the following upper bound for ,

for any and some constant . Similarly, by Lemma 4 in the supplementary material for [8] and arguments similar to those leading up to (A5), with probability tending to 1, we have

by Lemma A1, where . Therefore, with probability tending to 1,

for any , where the second inequality holds by Lemma 2 in [38], Conditions (A2) and (A4), and the third inequality follows from Lemma 3 in [38], which implies

for any with probability tending to 1, where with some small constant satisfying .

Hence, with probability tending to 1, it follows from (A6) that

for some constant . Using and (A8), we get

Thus, we have proved the desired result (A2). □

Proof of Theorem 2.

Let . For any , let , so that . Let be the -dimensional vector including for and zeros for . Then by Taylor’s expansion and Lemmas A.1 and A.3 in [5], with probability tending to 1,

for any such that for some large constant . Please note that

Let and ,

where denotes the expectation with respect to a multivariate normal distribution with mean and covariance matrix . It follows from Lemma 6 in the supplementary material for [8] that

where . Define the set for some large constant , then by similar arguments used for super sets, with probability tending to 1,

for any and for some constant .

Since the lower bound for can be derived as before, it leads to

for any with probability tending to 1.

We first focus on (A9). Please note that

for some constant . Furthermore, by the same arguments used in (A7), we have

for some constant and for any with probability tending to 1. Here we choose if or if so that

where the inequality holds by Condition (A4). To be more specific, we divide into two disjoint sets and , and will show that as with probability tending to 1. Thus, we can choose different for and as long as . On the other hand, with probability tending to 1, by Condition (A3),

for any and some large constants , where . Here, by the proof of Lemma A.3 in [5].

Hence, (A9) for any is bounded above by

with probability tending to 1, where the last term is of order because we assume for some small .

Proof of Theorem 3.

Let . Since we have Theorem 1, it suffices to show that

By the proof of Theorem 2, the summation of (A9) over is bounded above by

with probability tending to 1, where is defined in the proof of Theorem 2. Note that the last term is of order because we assume for some small . By similar arguments, the summation of (A10) over is also of order with probability tending to 1. □

Lemma A1.

Under Condition (A2), we have

for any with probability tending to 1.

Proof.

Please note that by Condition (A2),

which implies that

Thus, we complete the proof if we show that

for some constant and any with probability tending to 1. By Lemma A.3 in [5] and Condition (A2),

for any with probability tending to 1. □

References

1. Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodol.) 1996, 58, 267–288.
2. Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.
3. Zhang, C. Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 2010, 38, 894–942.
4. Liang, F.; Song, Q.; Yu, K. Bayesian subset modeling for high-dimensional generalized linear models. J. Am. Stat. Assoc. 2013, 108, 589–606.
5. Narisetty, N.; Shen, J.; He, X. Skinny Gibbs: A consistent and scalable Gibbs sampler for model selection. J. Am. Stat. Assoc. 2018, 1–13.
6. Ročková, V.; George, E. The spike-and-slab lasso. J. Am. Stat. Assoc. 2018, 113, 431–444.
7. Johnson, V.; Rossell, D. On the use of non-local prior densities in Bayesian hypothesis tests. J. R. Statist. Soc. B 2010, 72, 143–170.
8. Johnson, V.; Rossell, D. Bayesian model selection in high-dimensional settings. J. Am. Stat. Assoc. 2012, 107, 649–660.
9. Shin, M.; Bhattacharya, A.; Johnson, V. Scalable Bayesian variable selection using nonlocal prior densities in ultrahigh-dimensional settings. Stat. Sin. 2018, 28, 1053–1078.
10. Shi, G.; Lim, C.; Maiti, T. Bayesian model selection for generalized linear models using non-local priors. Comput. Stat. Data Anal. 2019, 133, 285–296.
11. Wu, H.; Ferreira, M.R.; Elkhouly, M.; Ji, T. Hyper nonlocal priors for variable selection in generalized linear models. Sankhya A 2020, 82, 147–185.
12. Hans, C.; Dobra, A.; West, M. Shotgun stochastic search for “large p” regression. J. Am. Stat. Assoc. 2007, 102, 507–516.
13. Yang, X.; Narisetty, N. Consistent group selection with Bayesian high dimensional modeling. Bayesian Anal. 2018.
14. Cao, X.; Khare, K.; Ghosh, M. High-dimensional posterior consistency for hierarchical non-local priors in regression. Bayesian Anal. 2020, 15, 241–262.
15. Castillo, I.; Schmidt-Hieber, J.; Van der Vaart, A. Bayesian linear regression with sparse priors. Ann. Stat. 2015, 43, 1986–2018.
16. McCullagh, P.; Nelder, J.A. Generalized Linear Models, 2nd ed.; Chapman & Hall: London, UK, 1989.
17. Lee, K.; Lee, J.; Lin, L. Minimax posterior convergence rates and model selection consistency in high-dimensional DAG models based on sparse Cholesky factors. Ann. Stat. 2019, 47, 3413–3437.
18. Ishwaran, H.; Rao, J. Spike and slab variable selection: Frequentist and Bayesian strategies. Ann. Stat. 2005, 33, 730–773.
19. Song, Q.; Liang, F. Nearly optimal Bayesian shrinkage for high dimensional regression. arXiv 2017, arXiv:1712.08964.
20. Yang, Y.; Wainwright, M.; Jordan, M. On the computational complexity of high-dimensional Bayesian variable selection. Ann. Stat. 2016, 44, 2497–2532.
21. Tüchler, R. Bayesian variable selection for logistic models using auxiliary mixture sampling. J. Comput. Graph. Stat. 2008, 17, 76–94.
22. Cai, X.; Huang, A.; Xu, S. Fast empirical Bayesian lasso for multiple quantitative trait locus mapping. BMC Bioinform. 2011, 12, 211.
23. Friedman, J.; Hastie, T.; Tibshirani, R. Regularization paths for generalized linear models via coordinate descent. J. Stat. Softw. 2010, 33, 1–22.
24. Breheny, P.; Huang, J. Coordinate descent algorithms for nonconvex penalized regression, with applications to biological feature selection. Ann. Appl. Stat. 2011, 5, 232–253.
25. Matthews, B.W. Comparison of the predicted and observed secondary structure of T4 phage lysozyme. Biochim. Biophys. Acta (BBA) Protein Struct. 1975, 405, 442–451.
26. Meinshausen, N.; Bühlmann, P. High-dimensional graphs and variable selection with the lasso. Ann. Stat. 2006, 34, 1436–1462.
27. Wei, L.; Hu, X.; Zhu, Y.; Yuan, Y.; Liu, W.; Chen, H. Aberrant intra- and internetwork functional connectivity in depressed Parkinson’s disease. Sci. Rep. 2017, 7, 1–12.
28. Zang, Y.; Jiang, T.; Lu, Y.; He, Y.; Tian, L. Regional homogeneity approach to fMRI data analysis. NeuroImage 2004, 22, 394–400.
29. Zuo, X.; Xu, T.; Jiang, L.; Yang, Z.; Cao, X.; He, Y.; Zang, Y.; Castellanos, F.; Milham, M. Toward reliable characterization of functional homogeneity in the human brain: Preprocessing, scan duration, imaging resolution and computational space. NeuroImage 2013, 65, 374–386.
30. Zang, Y.; He, Y.; Zhu, C.; Cao, Q.; Sui, M.; Liang, M.; Tian, L.; Jiang, T.; Wang, Y. Altered baseline brain activity in children with ADHD revealed by resting-state functional MRI. Brain Dev. 2007, 29, 83–91.
31. Zuo, X.; Kelly, C.; Di Martino, A.; Mennes, M.; Margulies, D.; Bangaru, S.; Grzadzinski, R.; Evans, A.; Zang, Y.; Castellanos, F.; et al. Growing together and growing apart: Regional and sex differences in the lifespan developmental trajectories of functional homotopy. J. Neurosci. 2010, 30, 15034–15043.
32. Liu, Y.; Li, M.; Chen, H.; Wei, X.; Hu, G.; Yu, S.; Ruan, X.; Zhou, J.; Pan, X.; Ze, L.; et al. Alterations of regional homogeneity in Parkinson’s disease patients with freezing of gait: A resting-state fMRI study. Front. Aging Neurosci. 2019, 11, 276.
33. Mi, T.; Mei, S.; Liang, P.; Gao, L.; Li, K.; Wu, T.; Chan, P. Altered resting-state brain activity in Parkinson’s disease patients with freezing of gait. Sci. Rep. 2017, 7, 16711.
34. Prell, T. Structural and functional brain patterns of non-motor syndromes in Parkinson’s disease. Front. Neurol. 2018, 9, 138.
35. Wang, J.; Zhang, J.; Zang, Y.; Wu, T. Consistent decreased activity in the putamen in Parkinson’s disease: A meta-analysis and an independent validation of resting-state fMRI. GigaScience 2018, 7, 6.
36. Zhang, F.; Jiang, W.; Wong, P.; Wang, J. A Bayesian probit model with spatially varying coefficients for brain decoding using fMRI data. Stat. Med. 2016, 35, 4380–4397.
37. Quintero, F.O.L.; Contreras-Reyes, J.E.; Wiff, R.; Arellano-Valle, R.B. Flexible Bayesian analysis of the von Bertalanffy growth function with the use of a log-skew-t distribution. Fish. Bull. 2017, 115, 13–26.
38. Lee, K.; Cao, X. Bayesian group selection in logistic regression with application to MRI data analysis. In Biometrics, to appear; Wiley: Hoboken, NJ, USA, 2020.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).