Security-Informed Safety Analysis of Autonomous Transport Systems Considering AI-Powered Cyberattacks and Protection

Abstract

1. Introduction

1.1. Motivation

1.2. State of the Art

- Access and penetration (Acc&Pen) stage. This stage covers automated payload generation/phishing, password guessing/cracking, intelligent capture attack/manipulation, smart abnormal behaviour generation, and AI model manipulation.

- Reconnaissance (Rec) stage. This stage involves intelligent target profiling, smart vulnerability detection/intelligent malware, intelligent collection/automated learn behaviour, and intelligent vulnerability/outcome prediction.

- Delivery (Del) stage. This stage entails intelligent concealment and evasive malware.

- Exploitation (Exp) stage. This stage addresses intelligent lateral movement and behavioural analysis to identify new exploitable vulnerabilities.

- Command and control (C&C) stage. This stage deals with intelligent self-learning malware, automated domain generation, and denial of service attacks.

- Human lives at stake: ATSs are designed to operate without direct human control, thus placing a significant responsibility on ensuring the safety of ordinary citizens and users of other ATSs/non-ATSs. Any compromise in cybersecurity could result in accidents, injuries, or even loss of life.

- Public trust and acceptance: The successful implementation of ATSs relies on public trust and acceptance. If safety is not prioritised and incidents occur frequently, public confidence in the technology can erode. To gain widespread adoption, cybersecurity measures should be effective and transparent enough to demonstrate that ATSs are at least as safe as, if not safer than, human-operated vehicles.

- Legal and regulatory compliance: Governments and regulatory bodies play a crucial role in establishing rules and regulations for ATSs. The cybersecurity measures applied should align with the safety standards and guidelines. Failing to meet these standards can result in legal and regulatory consequences, including restrictions or bans on deployment.

- Reputation and liability: Reputation management is essential to businesses creating and utilising ATSs. Safety incidents can severely damage a company’s reputation, resulting in financial losses and a loss of market share. Additionally, when accidents happen, liability issues arise, and figuring out responsibility can be problematic in the absence of explicit safety procedures.

- Ethical considerations: ATSs often face challenging ethical decisions, such as determining how to prioritise the safety of one group of people versus another in potential collision scenarios. Safety protocols need to address these ethical dilemmas in a transparent and accountable manner to ensure that the systems make the most ethically sound decisions possible.

1.3. Objectives and Contribution

- To suggest a methodology, principles, and stages of Security-Informed Safety (SIS) and AI Quality Model (AIQM)-based assessment of autonomous transport systems, considering the application of artificial intelligence as a means to increase the power of cyberattacks and protect ATS assets;

- To develop modifications of known FMECA/IMECA (XMECA) techniques (table-based templates, algorithms of analysing failures/intrusions modes and effects criticality, criticality matrixes, and sets of countermeasures applied by various actors), their integrated option called SISMECA, and the corresponding ontology model;

- To investigate user stories related to ATSs in three domains (maritime, aviation, and space), connected with the application of AI for providing security and safety by use of AIQM and SISMECA-based techniques;

- To analyse the influence of countermeasures to decrease risks of successful attacks and ATS failures in the context of the SISMECA approach;

- To discuss the application of AIQM and SISMECA-based techniques to develop a roadmap of AI cybersecurity for ATSs in the context of the SIS approach.

2. Methodology

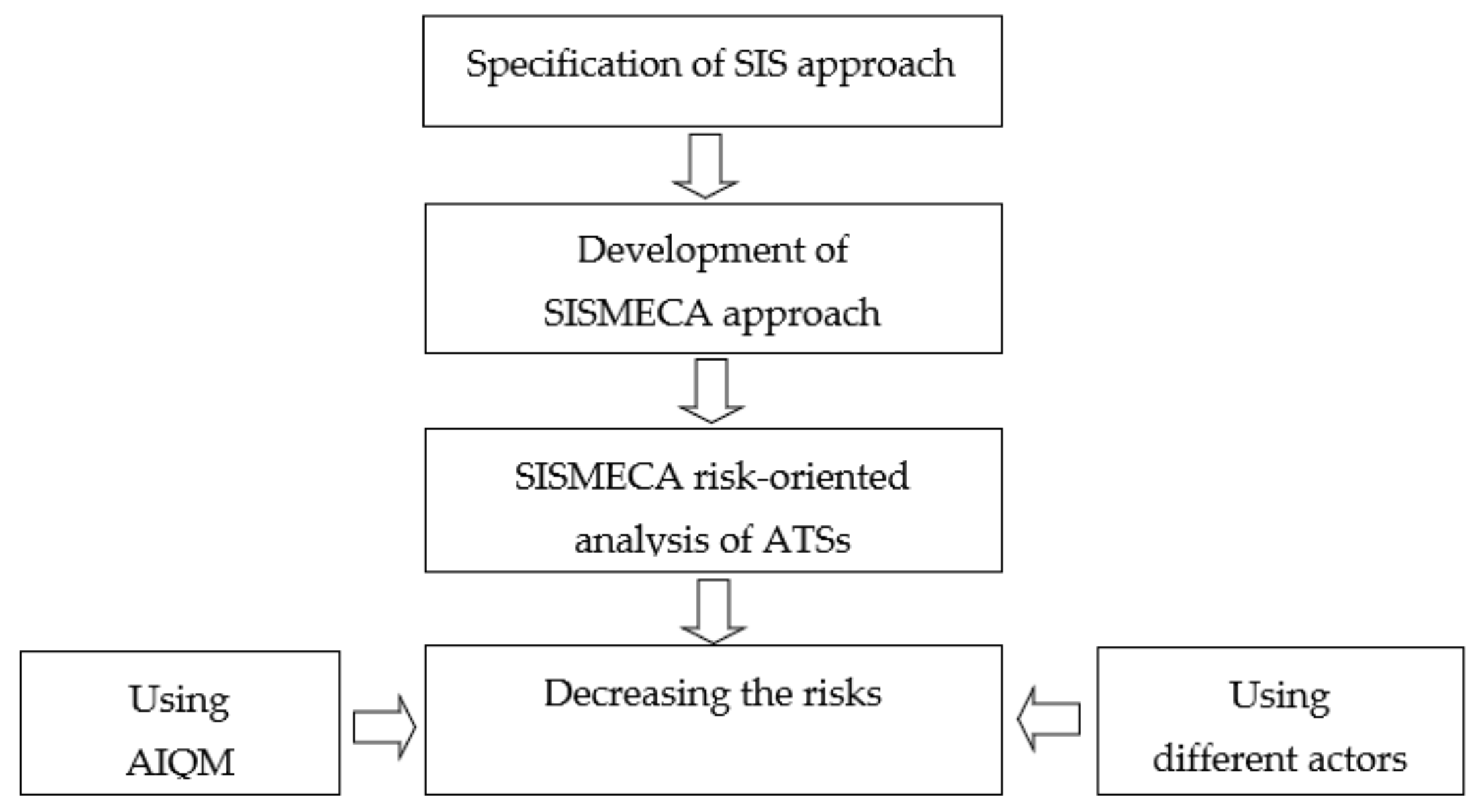

2.1. General Scheme

- Specification of the SIS approach to the assessment of the ATS. The safety assessment is carried out considering the security threats and the analysis of the consequences of cyber intrusions for the functional safety of the ATS.

- Development of a modified and extended XMECA technique [39], called SISMECA, that follows the SIS/CSIS approach to assess ATS security and safety step by step. The evolution from FMEA to SISMECA is illustrated by the suggested ontology and templates. The SISMECA technique is a natural modernisation and generalisation of XMECA.

- User story/scenario-based SISMECA risk-oriented analysis of ATSs. Such analysis allows step-by-step formalisation of verbal information about incidents when AI has been applied or could be applied to protect cyber and physical assets.

- Decreasing risks considering:

- possibilities of actors (regulators, developers, operators, customers) to choose countermeasures and provide acceptable risks [40]. The proposed methodology supports the efficiency of the implemented countermeasures by analysing their consistency, costs, and related factors.

2.2. Safety as a Top ATS Attribute: SIS Approach in the Context of Entropy

- human lives are at stake: autonomous transport systems have the potential to save thousands of lives by reducing the number of accidents caused by human error. However, if autonomous transport systems are not designed and tested to be safe, they can also pose a significant risk to human life.

- public perception: the success of autonomous transport systems depends on public acceptance. They will not be adopted if the public does not perceive them as safe. Safety is, therefore, crucial to building trust in autonomous transport systems.

- legal and regulatory requirements: autonomous transport systems are subject to legal and regulatory requirements that dictate safety standards. Failure to comply with these requirements can result in legal and financial consequences.

- reputation and liability: companies developing autonomous transport systems have a vested interest in ensuring their vehicles are safe. If a severe accident were to occur, it could damage the reputation of the company and result in legal liability.

- technical challenges: developing safe autonomous transport systems is a complex technical challenge that requires significant research and development. Safety is, therefore, a top priority throughout the development process to ensure that the technology is reliable and robust enough to operate safely in a wide range of scenarios.

2.3. SISMECA Technique

2.3.1. FMECA

- system analysis: a thorough examination of the autonomous vehicle system is conducted to identify the various components and subsystems that make up the system;

- failure modes (Failure Mode, MOD) identification: potential failure modes for each component and subsystem are identified through brainstorming, previous experience, and research;

- failure modes analysis: each failure mode is analysed to determine its potential effects (Failure Effect, EFF) on the system and its criticality;

- criticality analysis: the criticality of each failure mode is assessed based on its severity (Failure Severity, SEV), frequency of occurrence (Failure Probability, PRO), and the likelihood of detection (Detectability, DET);

- risk prioritisation: the identified failure modes are prioritised based on their criticality (Risk Priority Number, RPN), and appropriate mitigation measures (Countermeasures, CTM) are implemented to address the highest-priority risks.
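The FMECA steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' tooling: it assumes the conventional definition of the Risk Priority Number as the product SEV × PRO × DET on 1 to 10 scales, and the components, failure modes, and scores are hypothetical values chosen only to show the ranking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    component: str  # part of the system under analysis
    mode: str       # MOD: how the component fails
    effect: str     # EFF: consequence for the system
    sev: int        # SEV: 1 (negligible) .. 10 (catastrophic), assumed scale
    pro: int        # PRO: 1 (rare) .. 10 (frequent), assumed scale
    det: int        # DET: 1 (always detected) .. 10 (undetectable), assumed scale

    @property
    def rpn(self) -> int:
        """Risk Priority Number as the conventional product SEV * PRO * DET."""
        return self.sev * self.pro * self.det


def prioritise(modes):
    """Return failure modes ordered from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


# Hypothetical rows, for illustration only.
modes = [
    FailureMode("lidar", "no output", "loss of obstacle detection", 9, 3, 2),
    FailureMode("GNSS receiver", "position drift", "route deviation", 7, 4, 6),
    FailureMode("brake actuator", "delayed response", "longer stopping distance", 10, 2, 3),
]

for m in prioritise(modes):
    print(f"{m.component:15} {m.mode:18} RPN={m.rpn}")
```

Countermeasures (CTM) would then be assigned starting from the top of the ranked list; a real analysis would calibrate the scales and thresholds to the domain.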

2.3.2. IMECA

- threats to system operation (THR);

- vulnerabilities of system components and operation processes (VLN);

- attacks on system assets (ATA);

- effects for system operation (EFF);

- assessment of the criticality of effects (CRT) estimated by the probability of an event and corresponding effects of the attacks (PRE), as well as the severity of the effects (SVE).

- Determination (collection) of a set of stories and sub-stories for the analysed system considering the domain experience;

- Decomposition and analysis of sub-stories, filling in the IMECA table and the matrix of risks;

- Analysis of the matrix or cube of criticality and assessment of the risk value;

- Determination of a set of countermeasures considering the responsibility of actors;

- Specification of criteria for the choice of countermeasures;

- Choice of countermeasures and verification of acceptable risk.
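One way to picture the IMECA row, the criticality matrix, and the choice of countermeasures is the sketch below. It is illustrative only: the three-level scale, the matrix values, the greedy selection rule, and the example threat and countermeasures are all assumptions introduced here, not values taken from the paper.

```python
from dataclasses import dataclass, replace

LEVELS = ("L", "M", "H")  # assumed low / medium / high scale

# Assumed 3x3 criticality matrix, indexed as CRITICALITY[PRE][SVE].
CRITICALITY = {
    "L": {"L": "L", "M": "L", "H": "M"},
    "M": {"L": "L", "M": "M", "H": "H"},
    "H": {"L": "M", "M": "H", "H": "H"},
}


@dataclass(frozen=True)
class ImecaRow:
    thr: str  # THR: threat to system operation
    vln: str  # VLN: vulnerability
    ata: str  # ATA: attack on system assets
    eff: str  # EFF: effect for system operation
    pre: str  # PRE: probability level
    sve: str  # SVE: severity level

    @property
    def crt(self) -> str:
        """CRT: criticality read from the matrix."""
        return CRITICALITY[self.pre][self.sve]


@dataclass(frozen=True)
class Countermeasure:
    name: str
    actor: str          # e.g. regulator, developer, operator
    pre_steps: int = 0  # levels by which PRE is lowered (assumption)
    sve_steps: int = 0  # levels by which SVE is lowered (assumption)


def lower(level: str, steps: int) -> str:
    """Move a level down the scale, never below the lowest level."""
    return LEVELS[max(0, LEVELS.index(level) - steps)]


def choose(row, candidates, acceptable=("L",)):
    """Apply candidates in order until the criticality is acceptable."""
    chosen = []
    for ctm in candidates:
        if row.crt in acceptable:
            break
        row = replace(row, pre=lower(row.pre, ctm.pre_steps),
                      sve=lower(row.sve, ctm.sve_steps))
        chosen.append(ctm)
    return row, chosen


# Hypothetical row and countermeasures, for illustration only.
row = ImecaRow("command spoofing", "unauthenticated control link",
               "injection of false commands", "loss of vessel control", "H", "H")
candidates = [
    Countermeasure("link authentication", "developer", pre_steps=1),
    Countermeasure("manual override procedure", "operator", sve_steps=1),
    Countermeasure("intrusion detection", "operator", pre_steps=1),
]
residual, chosen = choose(row, candidates)
print(row.crt, "->", residual.crt, [c.name for c in chosen])
```

The ordering of `candidates` stands in for the selection criteria mentioned above; a cost-aware or multi-criteria ordering could be substituted without changing the rest of the sketch.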

2.3.3. SISMECA Ontology and Template for Assessment

2.4. SISMECA-Based Safety Assessment and Ensuring

- development of a set of scenarios describing the operation of ATS under cyberattacks and actor activities considering AI contribution to system protection for the analysed domains;

- scenario-based development and analysis of user stories describing different cyberattacks, their influence, and ways to protect ATS from them via AI means/platforms;

- profiling of AI platform requirements by use of characteristics-based AI quality models;

- SISMECA-based assessment of cyberattack criticality and effect on safety, as well as efficiency of countermeasures that actors can implement.

- AI means can reduce, but not completely eliminate, the risks of dangerous ATS failures caused by cyberattacks;

- failures or other anomalies of AI means can cause dangerous states of ATSs.

3. Security-Informed Safety Assessment of ATSs

- determine the need to consider the safety issue in this model from the point of view of the effects of failures and any anomalies of AI tools on the ATS safety.

- based on the built AI model, determine which of its characteristics affect safety-related risks. As part of this work, only the impacts related to security (cybersecurity) are analysed, since the SIS approach is being investigated. Analysis of the impact of other AI characteristics on safety is a separate task.

- AI tools within the framework of the quality model and follow-up assessment using SISMECA tables can and should also be analysed from the point of view of the presence of their vulnerabilities and their impact on the ATS safety.

- expanding the number of cases of cyberattacks, considering their reinforcement using AI, which will lead to an increase in the number of rows of IMECA/SISMECA tables and the corresponding tracing of analysis results (modes, effects, and criticality).

- increasing opportunities for asset protection (countermeasures) thanks to the use of AI (AI-powered protection).

- analysing the corresponding user story from the point of view of security and safety issues and the role of AI means.

- profiling of AI quality model based on the general one.

- carrying out IMECA and SISMECA analysis and developing relevant tables and criticality matrixes.

- analysing acceptable risks after the application of countermeasures.

3.1. Case Study for the Maritime Domain

3.1.1. User Story US-ATS.M: Human Machine Interface of the Shore Control Centre

3.1.2. Profiling of the User Story US-ATS.M

- for the first level of AI quality: LFL (Lawfulness), EXP (Explainability), and TST (Trustworthiness). In this case, the characteristic ETH (Ethics) is not obvious.

- for the second level of AI quality: CMT (Completeness), CMH (Comprehensibility), TRP (Transparency), INP (Interpretability), INR (Interactivity), and VFB (Verifiability) for AI explainability; RSL (Resiliency), RBS (Robustness), SFT (Safety), SCR (Security), and ACR (Accuracy) for AI trustworthiness.

- for the first level of AIP quality: ADT (Auditability), AVL (Availability), EFS (Effectiveness), RLB (Reliability), MNT (Maintainability), and USB (Usability).

3.1.3. IMECA Cybersecurity Assessment for the User Story US-ATS.M

3.1.4. SISMECA Safety Assessment for the User Story US-ATS.M

- security ensuring means (information technologies level, CTMsec);

- safety ensuring means (information and operation technologies level CTMsaf);

- combined usage of ensuring means at IT and OT levels.
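The difference between the three options can be illustrated with a small numeric sketch. It rests on assumptions made here for illustration and not stated in the source: risk is scored as probability times severity, security means (CTMsec) scale down the probability of a successful attack, safety means (CTMsaf) scale down the severity of the effect, and all numbers are hypothetical.

```python
def residual_risk(pre: float, sve: float,
                  ctm_sec: float = 1.0, ctm_saf: float = 1.0) -> float:
    """Risk score after countermeasures.

    pre      -- probability of a successful attack (0..1)
    sve      -- severity of the effect (arbitrary units)
    ctm_sec  -- assumed factor by which security means scale the probability
    ctm_saf  -- assumed factor by which safety means scale the severity
    """
    return (pre * ctm_sec) * (sve * ctm_saf)


# Hypothetical values, for illustration only.
PRE, SVE = 0.2, 8.0
options = {
    "no countermeasures": residual_risk(PRE, SVE),
    "CTMsec only": residual_risk(PRE, SVE, ctm_sec=0.5),
    "CTMsaf only": residual_risk(PRE, SVE, ctm_saf=0.25),
    "combined IT and OT": residual_risk(PRE, SVE, ctm_sec=0.5, ctm_saf=0.25),
}

for name, risk in sorted(options.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:20} residual risk = {risk:.2f}")
```

Under these assumptions the combined option always yields the lowest score, because the two factors act on different terms of the product; whether that holds in practice depends on how independent the IT-level and OT-level measures really are.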

3.2. Case Study for the Aviation Domain

3.2.1. User Story US-ATS.A: UAV-Based Surveillance System

3.2.2. Profiling of the User Story US-ATS.A

- for the first level of AI quality: ETH, LFL, EXP, and TST (in this case, all characteristics of the first level of AI quality are essential for this AIS);

- for the second level of AI quality: FRN for AI ethics; CMT, CMH, TRP, and VFB for AI explainability; RSL, RBS, SFT, SCR, and ACR for AI trustworthiness;

- for the first level of AIP quality: ADT, AVL, CNT, EFS, and RLB.

3.2.3. IMECA Cybersecurity Assessment for the User Story US-ATS.A

3.2.4. SISMECA Safety Assessment for the User Story US-ATS.A

3.3. Case Study for the Space Domain

3.3.1. User Story US-ATS.S: Smart Satellite Network

3.3.2. Profiling of the User Story US-ATS.S

- for the first level of AI quality: LFL, EXP, and TST (in this case, characteristic ETH is not obvious);

- for the second level of AI quality: CMT, TRP, INR, and VFB for AI explainability; DVS, RSL, SFT, and SCR for AI trustworthiness;

- for the first level of AIP quality: ADT, AVL, EFS, RLB, MNT, and USB.
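The three profiles listed in Sections 3.1.2, 3.2.2, and 3.3.2 can be compared mechanically once they are written down as sets. The sketch below copies the characteristic codes from those lists; the dictionary layout and the helper names `common` and `specific` are illustrative choices, not part of the AIQM itself.

```python
# Characteristic codes copied from the three profiling lists.
PROFILES = {
    "US-ATS.M": {
        "AI level 1": {"LFL", "EXP", "TST"},
        "AI level 2": {"CMT", "CMH", "TRP", "INP", "INR", "VFB",
                       "RSL", "RBS", "SFT", "SCR", "ACR"},
        "AIP level 1": {"ADT", "AVL", "EFS", "RLB", "MNT", "USB"},
    },
    "US-ATS.A": {
        "AI level 1": {"ETH", "LFL", "EXP", "TST"},
        "AI level 2": {"FRN", "CMT", "CMH", "TRP", "VFB",
                       "RSL", "RBS", "SFT", "SCR", "ACR"},
        "AIP level 1": {"ADT", "AVL", "CNT", "EFS", "RLB"},
    },
    "US-ATS.S": {
        "AI level 1": {"LFL", "EXP", "TST"},
        "AI level 2": {"CMT", "TRP", "INR", "VFB",
                       "DVS", "RSL", "SFT", "SCR"},
        "AIP level 1": {"ADT", "AVL", "EFS", "RLB", "MNT", "USB"},
    },
}


def common(level: str) -> set:
    """Characteristics required by every user story at the given level."""
    return set.intersection(*(p[level] for p in PROFILES.values()))


def specific(story: str, level: str) -> set:
    """Characteristics required only by the given user story."""
    others = set().union(*(p[level] for s, p in PROFILES.items() if s != story))
    return PROFILES[story][level] - others


for level in ("AI level 1", "AI level 2", "AIP level 1"):
    print(level, "common:", sorted(common(level)))
for story in PROFILES:
    print(story, "only:", sorted(specific(story, "AI level 2")))
```

A comparison of this kind shows which characteristics form a shared core across the maritime, aviation, and space cases and which are domain-specific.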

3.3.3. IMECA Cybersecurity Assessment for the User Story US-ATS.S

3.3.4. SISMECA Safety Assessment for the User Story US-ATS.S

4. Towards the Roadmap of AI Cybersecurity for ATS in the Context of the SIS Approach

4.1. AI Cybersecurity for the ATS Roadmap Evolution

- Constantly reassess the current threat landscape: conduct an ongoing and comprehensive assessment of the current cybersecurity threats facing ATS, considering various domains (maritime, aviation, space, ground). Identify potential vulnerabilities and attack vectors specific to AI-powered ATS.

- Implement advanced threat detection: deploy AI-powered threat detection systems that leverage machine learning algorithms, including behaviour-based analysis and anomaly detection. Continuously update and enhance these systems to adapt to evolving cyber threats.

- Strengthen AI governance: develop robust AI governance frameworks that ensure the ethical, secure, and safe development, deployment, and operation of AI systems in ATS. Establish accountability, transparency, and monitoring mechanisms to mitigate cybersecurity risks and functional safety threats.

- Enhance intrusion detection and prevention: continuously improve intrusion detection and prevention capabilities by integrating advanced cybersecurity tools with AI algorithms. Leverage AI techniques, such as deep learning, for the more accurate and proactive identification of cyber threats in real time.

- Foster collaboration for threat intelligence: establish partnerships and alliances with industry stakeholders, cybersecurity experts, and academia to share threat intelligence and best practices. Leverage collective knowledge to enhance the overall cybersecurity and safety posture of ATS.

- Implement secure data sharing: develop frameworks that ensure the confidentiality, integrity, privacy, and cybersafety (an attribute connecting cybersecurity with safety) of data exchanged within and between ATS. Utilise encryption, access controls, and blockchain technology for secure and trusted data sharing.

- Embrace generative AI security and cybersafety: address the potential cybersecurity risks and safety threats associated with generative AI algorithms in ATS. Develop robust security testing and validation procedures specific to generative AI models to identify and mitigate vulnerabilities.

- Continuously monitor and update security measures: establish a continuous monitoring system to track the effectiveness of cybersecurity measures in AI-powered ATS. Regularly update security protocols, leverage threat intelligence, and adapt to emerging cyber threats.

- Foster AI-driven threat hunting: utilise AI-driven threat hunting techniques to proactively search for potential cyber threats in ATS. Develop AI models to analyse large datasets, identify patterns, and detect anomalies to enhance proactive defence strategies.
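As a concrete picture of the anomaly detection mentioned in the roadmap items above, the sketch below flags values that deviate strongly from a sliding window of recent history. It is a simple statistical baseline standing in for the machine-learning detectors the roadmap envisages, not an AI model; the window size, the threshold, and the synthetic message-rate series are assumptions made for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, window=20, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard
    deviations from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat windows to avoid dividing the scale by zero.
        if sigma > 0 and abs(values[i] - mu) > threshold * sigma:
            anomalies.append(i)
    return anomalies


# Synthetic message rate on a control link (hypothetical data):
# a regular pattern with one injected burst at index 30.
rates = [100 + (i % 5) for i in range(40)]
rates[30] = 400

print(find_anomalies(rates))
```

A learned detector would replace the mean and standard deviation with a model of normal behaviour, but the surrounding loop (score each new observation against recent history, alert above a threshold) stays much the same.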

4.2. Evolution of Cybersecurity Tools, AI as a Whole, and Generative AI in Particular

- Evolution of cybersecurity tools:

- Advanced threat detection: as cybersecurity tools evolve, they may incorporate more advanced techniques such as behaviour-based analysis, anomaly detection, and machine learning algorithms. This can enhance the effectiveness of threat detection in ATS, enabling earlier detection and response to cyber threats, thus decreasing the risk of safety violation.

- Real-time monitoring and response: advanced cybersecurity tools may provide real-time monitoring capabilities, allowing immediate response to potential cyberattacks. This can enable faster incident containment and mitigation, reducing the impact on the safety and functionality of ATS.

- Integration with AI systems: integrating cybersecurity tools with AI systems can result in more intelligent and adaptive defence. AI algorithms can analyse vast amounts of data to identify patterns, detect anomalies, and respond to emerging threats in real time, enhancing the overall cybersecurity and, ultimately, the safety posture of ATS.

- Evolution of AI as a whole:

- Improved threat intelligence: AI algorithms can analyse large datasets and identify patterns humans might overlook. This can improve threat intelligence, allowing for more accurate identification and prediction of cyber threats in ATS, which can influence safety.

- Advanced intrusion detection and prevention: AI-powered intrusion detection systems can continuously learn and adapt to evolving cyber threats. They can detect and prevent sophisticated attacks that traditional rule-based systems might miss, bolstering the cybersecurity defences of autonomous transport systems.

- Enhanced authentication and access control: AI can facilitate advanced authentication mechanisms, such as biometrics and behavioural analysis, to strengthen access control in autonomous transport systems. This can minimise the risk of unauthorised access and mitigate potential cyberattacks.

- Influence of generative AI:

- Potential cybersecurity risks: using generative AI algorithms in ATS introduces potential cybersecurity risks. These algorithms can be vulnerable to adversarial attacks or manipulation, leading to safety risks. The roadmap needs to account for developing robust defences against such attacks.

- Security testing and validation: generative AI algorithms require thorough security testing and validation to ensure their integrity and attack resilience. The roadmap should incorporate processes for testing and validating generative AI models to identify potential vulnerabilities that could lead to safety threats and to address them before deployment.

- Robust AI governance: the roadmap should consider robust AI governance frameworks that ensure the ethical, secure, and safe development, deployment, and operation of generative AI algorithms in ATS. This provides accountability, transparency, and continuous monitoring of AI systems to mitigate cybersecurity risks.

5. Discussion

5.1. Interconnection between OT and IT

- identifying and assessing the risks and vulnerabilities associated with both OT and IT domains. This includes evaluating the potential impact of cyber threats on the safety and reliability of the autonomous transport system.

- implementing appropriate safety and security controls in both OT and IT domains. This may include implementing physical, technical, and procedural safeguards to prevent or mitigate the effects of cyber threats.

- establishing clear communication and collaboration between OT and IT teams. This includes sharing information about potential risks and vulnerabilities and ensuring that safety and security controls are implemented and maintained consistently across both domains.

- incorporating AI into the functional safety and cybersecurity analysis process. AI can help identify potential threats and vulnerabilities in the system and support the development and implementation of effective safety and security controls.

- decomposing the cybersecurity-related causes that affect safety risks, which significantly reduces the uncertainty about system behaviour and about the consequences of cyberattacks that exploit ATS vulnerabilities. This is possible because the chain “threat–vulnerability–attack–consequences–countermeasures” can be analysed carefully from the point of view of safety. Decomposing the set of AI characteristics using a quality model also increases the accuracy of determining the effect of using AI and the consequences of cyberattacks on ATS components that are implemented using AI.

5.2. Application of Hardware-Oriented Techniques Which Were Developed for Functional Safety Analysis to Software-Oriented Cybersecurity Analysis

- They have been used successfully for safety and can be adapted for cybersecurity because of the experience and lessons learned from their application in safety-critical domains;

- They can be very effective at identifying vulnerabilities and threats in systems;

- They can help ensure that critical systems continue to function even if attackers target them;

- They can help build resilience into systems, making them more attack resistant;

Cons of using hardware-oriented techniques for cybersecurity are as follows:

- They can be expensive and time-consuming to implement;

- They may not be effective against all types of attacks;

- They may be less effective against attacks specifically designed to bypass them;

- They may not be appropriate for all types of systems.

5.3. EU Initiative on AI: Ensuring the Appropriate Safety and Liability Regulations

5.4. Application of AI-Powered Cyberattacks: CBRNe Issues

5.5. Challenges of Security-Informed Safety Analysis of ATSs

- Multiple interconnected components, such as sensors, actuators, control systems, and communication networks, are used in ATSs. It can be difficult to analyse the safety and security implications of such complex systems since flaws or vulnerabilities in one component might have cascading effects on the system as a whole.

- ATSs operate in dynamic and unpredictable environments, interacting with other transportation systems and infrastructure. Assessing the safety and security of these systems requires considering various scenarios and potential risks associated with different operational conditions, such as adverse weather or unexpected events.

- As autonomous transport technology evolves rapidly, there is a lack of standardised frameworks, guidelines, and regulations specifically addressing the safety and security aspects of these systems. This creates challenges in conducting comprehensive analysis, as there is no universally accepted methodology or set of criteria to evaluate the safety and security of ATSs.

- ATSs often involve human interaction, such as operators or maintenance personnel. Considering human factors, such as user behaviour, training, and response to system failures, is essential for comprehensive analysis. However, analysing and incorporating these factors into the analysis can be complex and requires a multidisciplinary approach.

6. Conclusions

- Addressing emerging challenges: The integration of AI in autonomous transport systems introduces new challenges and risks, particularly in terms of cybersecurity. By adopting this approach, the investigation aims to proactively address these emerging challenges and develop methodologies and techniques that consider the application of AI in the context of cybersecurity and safety.

- Holistic assessment: The approach combines SIS and AIQM to provide a holistic assessment of autonomous transport systems. It considers not only the traditional safety considerations but also the specific implications of AI-powered attacks and the quality attributes of AI systems. This comprehensive assessment allows for a more accurate understanding of the risks and vulnerabilities of ATS and provides a foundation for effective risk mitigation.

- Transparency and traceability: The proposed SISMECA technique enhances the transparency of assessing the consequences of cyberattacks. By integrating known FMECA/IMECA techniques and developing an ontology model, the approach enables a structured analysis of failure and intrusion modes. This transparency and traceability facilitate a clear understanding of the risks and help identify suitable countermeasures to mitigate those risks.

- User-centred analysis: By investigating user stories in the maritime, aviation, and space domains, the approach ensures a user-centred analysis of the application of AI in the context of cybersecurity and safety. This user-centric perspective helps identify specific challenges, requirements, and best practices that are relevant to different domains. The insights gained from these user stories contribute to the development of effective assessment techniques and risk mitigation strategies tailored to the needs of different autonomous transport systems.

- Minimising uncertainty and enhancing risk assessment: The proposed AIQM- and SISMECA-based techniques aim to minimise uncertainty in risk assessment. By decomposing safety and AI quality attributes and applying entropy measures, the approach provides a more accurate and reliable assessment of cybersecurity and safety risks in ATS. This reduction in uncertainty enables stakeholders to make informed decisions and allocate resources effectively to address the identified risks.

- Researchers and academics: The investigation contributes to the theoretical understanding of integrating security, safety, and AI quality in the context of ATS. It provides a methodological base and techniques that can be further explored and expanded upon by researchers and academics in the field.

- ATS developers and manufacturers: The approach offers a framework and assessment techniques that help ATS developers and manufacturers identify and address cybersecurity and safety risks associated with AI-powered attacks. By integrating these considerations early in the design and development stages, they can enhance the security and reliability of their systems.

- Regulatory bodies and policymakers: The investigation provides insights and recommendations for regulatory bodies and policymakers in developing standards, guidelines, and regulations for the cybersecurity and safety of autonomous transport systems. It offers a structured approach to assess and evaluate the risks, ensuring that appropriate measures are implemented to protect against AI-driven cyber threats.

- Operators and service providers: The proposed approach helps operators and service providers of autonomous transport systems to assess and manage cybersecurity risks effectively. By utilising the AIQM and SISMECA-based techniques, they can enhance the protection of their assets, minimise potential disruptions, and ensure the safe and secure operation of their systems.

- End-users and the public: The adoption of this approach benefits end-users and the general public by increasing the security and safety of autonomous transport systems. By implementing robust cybersecurity measures and considering AI quality attributes, the risk of accidents, disruptions, and unauthorised access to sensitive information can be minimised, thereby ensuring public trust and confidence in these systems.

- Complexity and implementation challenges: The proposed approach involves integrating multiple techniques, methodologies, and models. Implementing such a comprehensive approach may be complex and require significant resources, including expertise, time, and funding. It could pose challenges for organisations with limited capabilities or smaller budgets, potentially limiting the widespread adoption of these techniques.

- Difficulty in capturing evolving AI threats: The field of AI cybersecurity is rapidly evolving, with new attack vectors and techniques constantly emerging. The proposed approach may face challenges in keeping up with these evolving threats and ensuring that the assessment techniques remain up to date. It may require continuous monitoring and updating to effectively address the dynamic nature of AI-powered attacks.

- Potential bias and subjectivity in assessments: The integration of AI quality attributes and the subjective nature of assessing risks and criticality could introduce potential bias or subjectivity in the assessment process. Different assessors or organisations may have varying interpretations or weighting of risk factors, which could lead to inconsistent results and decisions.

- Trade-off between security and usability: Enhancing cybersecurity measures often involves introducing additional layers of security controls, which can impact the usability and user experience of autonomous transport systems. Striking the right balance between security and usability is crucial to ensure that the systems remain efficient and user-friendly and that the security controls do not hinder their intended functionality.

- Limited focus on emergent threats and zero-day vulnerabilities: The investigation’s emphasis on known failure and intrusion modes may overlook emergent threats and zero-day vulnerabilities that have not yet been identified or documented. Rapidly evolving cyber threats require continuous monitoring and proactive measures to identify and address new attack vectors effectively.

- enhancing models and techniques combining AIQM and SISMECA approaches, including refinement of AI quality models and various XMECA template models to minimise uncertainties and dependencies on expert errors;

- quantitative assessment of the entropy of cybersecurity and safety evaluations in the context of attribute decomposition when applying AIQM and SISMECA;

- completing tool-based support of AIQM- and SISMECA-based techniques for different actors (regulators, developers, operators, and customers) considering their specific responsibility and scenarios of AI-powered protection against AI-powered attacks;

- developing multi-criteria techniques for choosing countermeasures considering security, reliability, and safety attributes at component and system levels.

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations and Acronyms

| Abbreviation/Acronym | Meaning |
| --- | --- |
| Acc&Pen | Access and Penetration |
| ACR | Accuracy |
| AIG | General for AI and AIP |
| AIP | AI Platform |
| AIQM | AI Quality Model |
| AIS | AI System |
| AI | Artificial Intelligence |
| ATA | Attacks on System Assets |
| ADT | Auditability |
| ATS | Autonomous Transport Systems |
| AVL | Availability |
| CBRNe | Chemical, Biological, Radiological, Nuclear, and Explosives |
| CMT | Completeness |
| CMH | Comprehensibility |
| CSIS | Cybersecurity-Informed Safety |
| C&C | Command and Control |
| CRT | Assessment of the Criticality of Effects |
| DNN | Deep Neural Network |
| Del | Delivery |
| DEV | Developer |
| EFS | Effectiveness |
| EFF | Effects for System Operation |
| ETH | Ethics |
| EXP | Explainability |
| Exp | Exploitation |
| FMECA | Failure Mode, Effects, and Criticality Analysis |
| GAN | Generative Adversarial Network |
| GBRT | Gradient Boosted Regression Trees |
| GCS | Ground Control Station |
| HMI | Human–Machine Interface |
| INR | Interactivity |
| INP | Interpretability |
| IDS | Intrusion Detection System |
| IMECA | Intrusion Modes, Effects, and Criticality Analysis |
| KNN | K-Nearest Neighbour |
| LFL | Lawfulness |
| LR | Logistic Regression |
| MNT | Maintainability |
| MASS | Maritime Autonomous Surface Ship |
| NN | Neural Network |
| OPR | Operator |
| XMECA | Overall designation of Modes and Effects Criticality Analysis modifications |
| PRE | Probability of an Event |
| RF | Random Forest |
| Rec | Reconnaissance |
| RNN | Recurrent Neural Network |
| REG | Regulator |
| RLB | Reliability |
| RSL | Resiliency |
| RBS | Robustness |
| SFT | Safety |
| SCR | Security |
| SIS | Security-Informed Safety |
| SISMECA | Security or Cybersecurity Informed Safety Event Modes, Effects, and Criticality Analysis |
| SVE | Severity of Effects |
| SCC | Shore Control Centre |
| SS | Sub-Stories |
| SVC | Support Vector Classification |
| SVM | Support Vector Machine |
| STPA | System-Theoretic Process Analysis |
| STPA-SynSS | System-Theoretic Process Analysis-Based Methodology for MASS Safety and Security Assessment |
| THR | Threats to System Operation |
| TRP | Transparency |
| TST | Trustworthiness |
| UAV | Unmanned Aerial Vehicle |
| USR | User |
| VFB | Verifiability |
| VLN | Vulnerabilities of System Components and Operation Processes |
| UMV | Unmanned Maritime Vehicle |
| US-ATS.A | User Story for Autonomous Transport Systems from Aviation Domain |
| US-ATS.M | User Story for Autonomous Transport Systems from Maritime Domain |
| US-ATS.S | User Story for Autonomous Transport Systems from Satellite Domain |
| USB | Usability |

References

- Javed, M.A.; Ben Hamida, E.; Znaidi, W. Security in Intelligent Transport Systems for Smart Cities: From Theory to Practice. Sensors 2016, 16, 879.

- Zeddini, B.; Maachaoui, M.; Inedjaren, Y. Security Threats in Intelligent Transportation Systems and Their Risk Levels. Risks 2022, 10, 91.

- Fursov, I.; Yamkovyi, K.; Shmatko, O. Smart Grid and Wind Generators: An Overview of Cyber Threats and Vulnerabilities of Power Supply Networks. Radioelectron. Comput. Syst. 2022, 4, 50–63.

- Yamin, M.M.; Ullah, M.; Ullah, H.; Katt, B. Weaponized AI for cyber attacks. J. Inform. Secur. Appl. 2021, 57, 102722.

- Kaloudi, N.; Li, J. The AI-based Cyber Threat Landscape: A survey. ACM Comput. Surv. 2020, 53, 20.

- Guembe, B.; Azeta, A.; Misra, S.; Osamor, V.C.; Fernandez-Sanz, L.; Pospelova, V. The Emerging Threat of AI-driven Cyber Attacks: A Review. Appl. Artif. Intell. 2022, 36, 2037254.

- Kasabji, D. How Could AI Simplify Malware Attacks, and Why Is This Worrying? Available online: https://conscia.com/blog/how-could-ai-simplify-malware-attacks-and-why-is-this-worrying (accessed on 20 March 2023).

- Hitaj, B.; Gasti, P.; Ateniese, G.; Perez-Cruz, F. PassGAN: A Deep Learning Approach for Password Guessing. In Applied Cryptography and Network Security. ACNS 2019; Lecture Notes in Computer Science; Deng, R., Gauthier-Umaña, V., Ochoa, M., Yung, M., Eds.; Springer International Publishing: Cham, Switzerland, 2019; Volume 11464, pp. 217–237.

- Trieu, K.; Yang, Y. Artificial Intelligence-Based Password Brute Force Attacks. In Proceedings of the 2018 Midwest Association for Information Systems Conference, St. Louis, MO, USA, 17–18 May 2018; pp. 1–7.

- Lee, K.; Yim, K. Cybersecurity Threats Based on Machine Learning-Based Offensive Technique for Password Authentication. Appl. Sci. 2020, 10, 1286.

- Hu, W.; Tan, Y. Generating Adversarial Malware Examples for Black-Box Attacks Based on GAN. arXiv 2017, arXiv:1702.05983.

- Chung, K.; Kalbarczyk, Z.T.; Iyer, R.K. Availability Attacks on Computing Systems Through Alteration of Environmental Control: Smart Malware Approach. In Proceedings of the 10th ACM/IEEE International Conference on Cyber-Physical Systems (ICCPS), Montreal, QC, Canada, 16–18 April 2019; pp. 1–12.

- Kirat, D.; Jang, J.; Stoecklin, M. DeepLocker Concealing Targeted Attacks with AI Locksmithing. Available online: https://www.blackhat.com/us-18/briefings/schedule/index.html#deeplocker—concealing-targeted-attacks-with-ailocksmithing-11549 (accessed on 20 April 2023).

- Yahuza, M.; Idris, M.Y.I.; Ahmedy, I.B.; Wahab, A.W.A.; Nandy, T.; Noor, N.M.; Bala, A. Internet of Drones Security and Privacy Issues: Taxonomy and Open Challenges. IEEE Access 2021, 9, 57243–57270.

- Kavallieratos, G.; Katsikas, S.; Gkioulos, V. Cyber-attacks Against the Autonomous Ship. In Computer Security. SECPRE CyberICPS 2018; Lecture Notes in Computer Science; Katsikas, S., Cuppens, F., Cuppens, N., Lambrinoudakis, C., Antón, A., Gritzalis, S., Mylopoulos, J., Kalloniatis, C., Eds.; Springer International Publishing: Cham, Switzerland, 2019; Volume 11387, pp. 20–36.

- Manulis, M.; Bridges, C.P.; Harrison, R.; Sekar, V.; Davis, A. Cyber Security in New Space: Analysis of Threats, Key Enabling Technologies and Challenges. Int. J. Inf. Secur. 2021, 20, 287–311.

- Zhu, J.; Wang, C. Satellite Networking Intrusion Detection System Design Based on Deep Learning Method. In Communications, Signal Processing, and Systems. CSPS 2017; Lecture Notes in Electrical Engineering; Liang, Q., Mu, J., Jia, M., Wang, W., Feng, X., Zhang, B., Eds.; Springer International Publishing: Singapore, 2019; Volume 463, pp. 2295–2304.

- Abu Al-Haija, Q.; Al Badawi, A. High-performance Intrusion Detection System for Networked UAVs via Deep Learning. Neural Comput. Appl. 2022, 34, 10885–10900.

- Gecgel, S.; Kurt, G.K. Intermittent Jamming Against Telemetry and Telecommand of Satellite Systems and a Learning-driven Detection Strategy. In Proceedings of the 3rd ACM Workshop on Wireless Security and Machine Learning (WiseML), Abu Dhabi, United Arab Emirates, 28 June–2 July 2021; pp. 43–48.

- Whelan, J.; Almehmadi, A.; El-Khatib, K. Artificial Intelligence for Intrusion Detection Systems in Unmanned Aerial Vehicles. Comput. Electr. Eng. 2022, 99, 107784.

- Koroniotis, N.; Moustafa, N.; Slay, J. A New Intelligent Satellite Deep Learning Network Forensic Framework for SSNs. Comput. Electr. Eng. 2022, 99, 107745.

- Ashraf, I.; Narra, M.; Umer, M.; Majeed, R.; Sadiq, S.; Javaid, F.; Rasool, N. A Deep Learning-Based Smart Framework for Cyber-Physical and Satellite System Security Threats Detection. Electronics 2022, 11, 667.

- Yaacoub, J.P.; Noura, H.; Salman, O.; Chehab, A. Security Analysis of Drones Systems: Attacks, Limitations, and Recommendations. IoT 2020, 11, 100218.

- Furumoto, K.; Kolehmainen, A.; Silverajan, B.; Takahashi, T.; Inoue, D.; Nakao, K. Toward Automated Smart Ships: Designing Effective Cyber Risk Management. In Proceedings of the 2020 IEEE Congress on Cybermatics, Rhodes Island, Greece, 2–6 November 2020; pp. 100–105.

- Torianyk, V.; Kharchenko, V.; Zemlianko, H. IMECA Based Assessment of Internet of Drones Systems Cyber Security Considering Radio Frequency Vulnerabilities. In Proceedings of the 2nd International Workshop on Intelligent Information Technologies and Systems of Information Security, Khmelnytskyi, Ukraine, 24–26 March 2021; Volume 2853, pp. 460–470. Available online: https://ceur-ws.org/Vol-2853/paper50.pdf (accessed on 20 March 2023).

- Piumatti, D.; Sini, J.; Borlo, S.; Sonza Reorda, M.; Bojoi, R.; Violante, M. Multilevel Simulation Methodology for FMECA Study Applied to a Complex Cyber-Physical System. Electronics 2020, 9, 1736.

- Waleed Al-Khafaji, A.; Solovyov, A.; Uzun, D.; Kharchenko, V. Asset Access Risk Analysis Method in the Physical Protection Systems. Radioelectron. Comput. Syst. 2019, 4, 94–104.

- Kharchenko, V.; Fesenko, H.; Illiashenko, O. Basic Model of Non-functional Characteristics for Assessment of Artificial Intelligence Quality. Radioelectron. Comput. Syst. 2022, 2, 131–144.

- Kharchenko, V.; Fesenko, H.; Illiashenko, O. Quality Models for Artificial Intelligence Systems: Characteristic-Based Approach, Development and Application. Sensors 2022, 22, 4865.

- Siebert, J.; Joeckel, L.; Heidrich, J.; Trendowicz, A.; Nakamichi, K.; Ohashi, K.; Namba, I.; Yamamoto, R.; Aoyama, M. Construction of a Quality Model for Machine Learning Systems. Softw. Qual. J. 2021, 30, 307–335.

- Vasyliev, I.; Kharchenko, V. A Framework for Metric Evaluation of AI Systems Based on Quality Model. Syst. Control Navig. 2022, 2, 41–46.

- Felderer, M.; Ramler, R. Quality Assurance for AI-Based Systems: Overview and Challenges (Introduction to Interactive Session). In Software Quality: Future Perspectives on Software Engineering Quality. SWQD 2021; Lecture Notes in Business Information Processing; Winkler, D., Biffl, S., Mendez, D., Wimmer, M., Bergsmann, J., Eds.; Springer International Publishing: Cham, Switzerland, 2021; Volume 404, pp. 33–42.

- Dovbysh, A.; Liubchak, V.; Shelehov, I.; Simonovskiy, J.; Tenytska, A. Information-extreme Machine Learning of a Cyber attack Detection System. Radioelectron. Comput. Syst. 2022, 3, 121–131.

- Kolisnyk, M. Vulnerability Analysis and Method of Selection of Communication Protocols for Information Transfer in Internet of Things Systems. Radioelectron. Comput. Syst. 2021, 1, 133–149.

- Bloomfield, R.; Netkachova, K.; Stroud, R. Security-Informed Safety: If It’s Not Secure, It’s Not Safe. In Software Engineering for Resilient Systems. SERENE 2013; Lecture Notes in Computer Science; Gorbenko, A., Romanovsky, A., Kharchenko, V., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2013; Volume 8166, pp. 17–32.

- Zhou, X.Y.; Liu, Z.J.; Wang, F.W.; Wu, Z.L. A System-theoretic Approach to Safety and Security Co-Analysis of Autonomous Ships. Ocean Eng. 2021, 222, 108569.

- Pascarella, D.; Gigante, G.; Vozella, A.; Bieber, P.; Dubot, T.; Martinavarro, E.; Barraco, G.; Li Calzi, G. A Methodological Framework for the Risk Assessment of Drone Intrusions in Airports. Aerospace 2022, 9, 747.

- Breda, P.; Markova, R.; Abdin, A.; Jha, D.; Carlo, A.; Mantı, N.P. Cyber Vulnerabilities and Risks of AI Technologies in Space Applications. In Proceedings of the 73rd International Astronautical Congress (IAC), Paris, France, 18–22 September 2022; p. hal-03908014. Available online: https://hal.science/hal-03908014/document (accessed on 9 March 2023).

- Babeshko, I.; Illiashenko, O.; Kharchenko, V.; Leontiev, K. Towards Trustworthy Safety Assessment by Providing Expert and Tool-Based XMECA Techniques. Mathematics 2022, 10, 2297.

- Kharchenko, V.; Illiashenko, O.; Fesenko, H.; Babeshko, I. AI Cybersecurity Assurance for Autonomous Transport Systems: Scenario, Model, and IMECA-Based Analysis. In Multimedia Communications, Services and Security. MCSS 2022. Communications in Computer and Information Science; Dziech, A., Mees, W., Niemiec, M., Eds.; Springer: Cham, Switzerland, 2022; Volume 1689.

- Kharchenko, V.; Kliushnikov, I.; Rucinski, A.; Fesenko, H.; Illiashenko, O. UAV Fleet as a Dependable Service for Smart Cities: Model-Based Assessment and Application. Smart Cities 2022, 5, 1151–1178.

- IEC 60812:2018; Failure Modes and Effects Analysis (FMEA and FMECA). International Electrotechnical Commission: Geneva, Switzerland, 2018.

- Deliverables—ECHO Network. Available online: https://echonetwork.eu/deliverables/ (accessed on 9 March 2023).

- European Commission. Communication from the Commission to the European Parliament, the European Council, the Council, the European economic and Social Committee and the Committee of the Regions. Artificial Intelligence for Europe. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:52018DC0237&from=EN (accessed on 9 March 2023).

- European Agency for Safety and Health at Work, EU Strategic Framework on Health and Safety at Work 2021–2027. Available online: https://osha.europa.eu/en/safety-and-health-legislation/eu-strategic-framework-health-and-safety-work-2021-2027 (accessed on 9 March 2023).

- European Agency for Safety and Health at Work, Directive 2006/42/EC—New Machinery Directive. Available online: https://osha.europa.eu/en/legislation/directives/directive-2006-42-ec-of-the-european-parliament-and-of-the-council (accessed on 9 March 2023).

- European Commission. Radio Equipment Directive (RED). Available online: https://single-market-economy.ec.europa.eu/sectors/electrical-and-electronic-engineering-industries-eei/radio-equipment-directive-red_en (accessed on 9 March 2023).

- European Parliament. Council of the European Union, Directive 2001/95/EC of the European Parliament and of the Council of 3 December 2001 on General Product Safety. Available online: https://eur-lex.europa.eu/legal-content/EN/ALL/?uri=celex%3A32001L0095 (accessed on 9 March 2023).

- Bertuzzi, L. EU Finalises New Product Safety Requirements: Here Is What Changes. Available online: https://www.euractiv.com/section/digital-single-market/news/eu-finalises-new-product-safety-requirements-here-is-what-changes/ (accessed on 9 March 2023).

- European CBRN Innovation for the Market Cluster ENCIRCLE, EU CBRNe Policy. Available online: https://encircle-cbrn.eu/resources/eu-cbrn-policy/ (accessed on 9 March 2023).

- European Commission. EU Develops Strategic Reserves for Chemical, Biological and Radio-Nuclear Emergencies. Available online: https://ec.europa.eu/commission/presscorner/detail/en/ip_22_2218 (accessed on 9 March 2023).

- Veprytska, O.; Kharchenko, V. AI Powered Attacks Against AI Powered Protection: Classification, Scenarios and Risk Analysis. In Proceedings of the 2022 12th International Conference on Dependable Systems, Services and Technologies (DESSERT), Athens, Greece, 9–11 December 2022; pp. 1–7.

- Ozirkovskyy, L.; Volochiy, B.; Shkiliuk, O.; Zmysnyi, M.; Kazan, P. Functional Safety Analysis of Safety-Critical System Using State Transition Diagram. Radioelectron. Comput. Syst. 2022, 1, 145–158.

- Kharchenko, V.; Ponochovnyi, Y.; Ivanchenko, O.; Fesenko, H.; Illiashenko, O. Combining Markov and Semi-Markov Modelling for Assessing Availability and Cybersecurity of Cloud and IoT Systems. Cryptography 2022, 6, 44.

- Bisikalo, O.; Kovtun, V.; Kovtun, O.; Romanenko, V. Research of safety and survivability models of the information system for critical use. In Proceedings of the 2020 IEEE 11th International Conference on Dependable Systems, Services and Technologies (DESSERT), Kyiv, Ukraine, 14–18 May 2020; pp. 7–12.

| AI-Powered Attack | Ref. | Technique | Stage | Safety Issues | Target: On-Board Equipment (AI-Based) | Target: On-Board Equipment (Non-AI-Based) | Target: GCS (AI-Based) | Target: GCS (Non-AI-Based) | Target: Channel (ATS-ATS) | Target: Channel (ATS-GCS) |
|---|---|---|---|---|---|---|---|---|---|---|
| Intelligent malware | [7] | ChatGPT | Rec | - | - | √ | - | √ | √ | √ |
| PassGAN | [8] | GAN | Acc&Pen | - | - | - | - | √ | - | - |
| Intelligent password brute force attack | [9] | RNN | Acc&Pen | - | - | - | - | √ | - | - |
| Offensive password authentication | [10] | LR, SVM, SVC, RF, KNN, GBRT | Acc&Pen | - | - | - | - | √ | - | - |
| Adversarial malware generation | [11] | GAN | Del | - | √ | - | √ | - | - | - |
| Self-learning malware | [12] | K-means clustering | Exp | - | √ | √ | √ | √ | - | - |
| DeepLocker | [13] | DNN | C&C | - | - | - | - | √ | - | - |

| Considered Type of ATS | Ref. | Analysed Component of the ATS Infrastructure | Main Contribution | AI-Based Intrusion Detection/Prevention Solution |
|---|---|---|---|---|
| UAV | [14] | on-board equipment, UAV–UAV, UAV–GCS | The level of security threats for the various drone categories. A comprehensive taxonomy of the attacks on the Internet of Drones (IoD) network. The results of the review of the recent IoD attack mitigation techniques. | - |
| UAV | [18] | on-board equipment, UAV–GCS | An autonomous intrusion detection system utilising deep convolutional neural networks (CNNs) to detect the malicious threats invading the UAV efficiently. | √ |
| UAV | [20] | on-board equipment, UAV–GCS | A detection method based on principal component analysis and one-class classifiers for identifying and mitigating spoofing and jamming attacks. | √ |
| UAV | [23] | on-board equipment, UAV–GCS | The results of the experiments to detect, intercept, and hijack a UAV through either de-authentication or jamming. | - |
| Satellite | [16] | on-board equipment, satellite–satellite, satellite–GCS | The results of the analysis of the past satellite security threats and incidents. The results of segment and sector analysis of satellite security incidents. | - |
| Satellite | [17] | on-board equipment, satellite–satellite, satellite–GCS | Deep Learning (DL)-based flexible satellite network intrusion detection system for detecting unforeseen and unpredictable attacks. | √ |
| Satellite | [19] | on-board equipment, satellite–GCS | A lightweight CNN-based detection scheme for detecting barrage, pilot tone, and intermittent jamming attacks against satellite systems. | √ |
| Satellite | [21] | GCS, satellite–satellite, satellite–GCS | DL-based network forensic framework for the detection and investigation of cyber-attacks in smart satellite networks. | √ |
| Satellite | [22] | GCS, satellite–satellite, satellite–GCS | A robust and generalised DL-based intrusion detection approach for terrestrial and satellite network environments. | √ |
| Maritime Autonomous Surface Ship (MASS) | [15] | on-board equipment, MASS–MASS, MASS–GCS | STRIDE (Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service and Elevation of Privilege) threat modelling methodology for analysing the accordant risk. A special risk matrix and threat/likelihood criteria for assessing the risk. | - |
| Maritime Autonomous Surface Ship (MASS) | [24] | on-board equipment, MASS–MASS, MASS–GCS | The results of the analysis of attack scenarios for MASS cybersecurity risk management. A secure ship network topology for realising MASS operations. | - |

| Threats (THR) | Vulnerabilities (VLN) | Attacks (ATA) | Effects (EFF) | Probability (PRE) | Severity (SVE) | Criticality (CRT) | Sub-Stories (SSX) |
|---|---|---|---|---|---|---|---|
|  |  |  |  |  |  |  | SS1 |
|  |  |  |  |  |  |  | … |
|  |  |  |  |  |  |  | SSN |

| Threats (THR) | Vulnerabilities (VLN) | Attacks (ATA) | Effects (EFF) | Probability (PRE) | Severity (SVE) | Recovery (REC) | Criticality (CRT) | Countermeasures (CTM) | Sub-Stories (SSX) |
|---|---|---|---|---|---|---|---|---|---|
|  |  |  |  |  |  |  |  |  | SS1 |
|  |  |  |  |  |  |  |  |  | … |
|  |  |  |  |  |  |  |  |  | SSN |

| Threats (THR) | Vulnerabilities (VLN) | Attacks (ATA) | Effects (EFF) | Probability (PRE) | Severity (SVE) | Recovery (REC) | Criticality (CRT) | Countermeasures (CTM) | Actors (ACT) | Sub-Stories (SSX) |
|---|---|---|---|---|---|---|---|---|---|---|
|  |  |  |  |  |  |  |  |  |  | SS1 |
|  |  |  |  |  |  |  |  |  |  | … |
|  |  |  |  |  |  |  |  |  |  | SSN |

| Threats (THR) | Vulnerabilities (VLN) | Attacks (ATA) | Effects on Security (EFF_SEC) | Effects on Safety (EFF_SAF) | Probability (PRE) | Severity (SVE) | Recovery (REC) | Criticality (CRT) | Countermeasures (CTM), (Actors, ACT) | CRT/CTM | Sub-Stories (SSX) |
|---|---|---|---|---|---|---|---|---|---|---|---|
|  |  |  |  |  |  |  |  |  |  |  | SS1 |
|  |  |  |  |  |  |  |  |  |  |  | … |
|  |  |  |  |  |  |  |  |  |  |  | SSN |

| THR | VLN | ATA | EFF | PRE | SVE | REC | CRT (no REC/REC) | AI-Based CTM (Criticality via PRE Decreasing): DEV | AI-Based CTM: REG | AI-Based CTM: OPR | AI-Based CTM: USR | SS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Activities of hacker centres | Human machine interface weaknesses | Spoofing | Access to the system and critical information | M | H | M | H/M | PRE: L/M; CRT: M/M | PRE: L/M; CRT: M/M | PRE: M/M; CRT: H/H | PRE: L/M; CRT: M/M | SS1 |
| Activities of hacker centres | Human machine interface weaknesses | Tampering | Control and monitor the ship from the shore | M | H | M | H/M | PRE: L/M; CRT: M/M | PRE: L/M; CRT: M/M | PRE: M/M; CRT: H/M | PRE: L/M; CRT: M/M | SS2 |
| Activities of hacker centres | Human machine interface weaknesses | Information disclosure | Damages related to the vessel’s navigation and management | M | H | M | H/M | PRE: L/M; CRT: M/M | PRE: L/M; CRT: M/M | PRE: M/M; CRT: H/H | PRE: L/M; CRT: M/M | SS3 |
| Activities of hacker centres | Human machine interface weaknesses | Denial of service | A ship can be control-less and invisible to the Shore Control Centre | M | H | M | H/M | PRE: L/M; CRT: M/M | PRE: L/M; CRT: M/M | PRE: M/M; CRT: H/M | PRE: L/M; CRT: M/M | SS4 |
| Activities of hacker centres | Human machine interface weaknesses | Elevation of privilege | Access sensitive data about the vessel’s condition, its customers, and passengers | M | H | M | H/M | PRE: L/M; CRT: M/M | PRE: L/M; CRT: M/M | PRE: M/M; CRT: H/M | PRE: L/M; CRT: M/M | SS5 |
| Activities of hacker centres | Human machine interface weaknesses | Repudiation | Distortion of data, which is stored in logs | L | M | M | L/L | PRE: L/L; CRT: L/L | PRE: L/L; CRT: L/L | PRE: L/L; CRT: L/L | PRE: L/L; CRT: L/L | SS6 |
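The rows above pair a probability (PRE) and a severity (SVE) with a criticality (CRT) on an L/M/H scale. As a minimal sketch, and purely as an assumption, a rank-sum rule reproduces the "no REC" criticality of the maritime rows shown above. The `Level` enum, the `criticality` function, and the thresholds are hypothetical and are not taken from the paper, whose actual risk matrix may differ. The recovery-adjusted value (the second letter in the CRT column) is deliberately not modelled.

```python
from enum import IntEnum


class Level(IntEnum):
    """Ordinal L/M/H scale as used in the analysis tables."""
    L = 1
    M = 2
    H = 3


def criticality(pre: Level, sve: Level) -> Level:
    """Assumed rule (not the authors' matrix): rank the sum PRE + SVE.

    A sum of 2 or 3 maps to L, 4 maps to M, and 5 or 6 maps to H.
    """
    total = int(pre) + int(sve)
    if total <= 3:
        return Level.L
    if total == 4:
        return Level.M
    return Level.H


# (PRE, SVE, CRT without recovery) triples read from the maritime rows.
maritime_rows = [
    (Level.M, Level.H, Level.H),  # SS1 to SS5
    (Level.L, Level.M, Level.L),  # SS6 (repudiation)
]

for pre, sve, expected in maritime_rows:
    assert criticality(pre, sve) == expected
```

A lookup of this kind only illustrates how an ordinal scale can be combined; an explicit 3×3 matrix agreed by the experts would be the more faithful representation if the published one is available.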

| Threats (THR) | Vulnerabilities (VLN) | Attacks (ATA) | Effects on Security (EFF_SEC) | Effects on Safety (EFF_SAF) | Probability (PRE) | Severity (SVE) | Recovery (REC) | Criticality (CRT) | Countermeasures (CTM), (Actors, ACT) | CRT/CTM | Sub-Stories (SSX) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Activities of hacker centres | Human Machine Interface weaknesses | Spoofing | Access to the system and critical information | Issue of malicious commands | M | H | L | H/H | (1) CTMsec; (2) CTMsaf; (3) CTMcomb | M/H; M/M; L/M | SS1sec; SS1saf; SS1comb |

| THR | VLN | ATA | EFF | PRE | SVE | REC | CRT (no REC/REC) | AI-Based CTM (Criticality via PRE Decreasing): DEV | AI-Based CTM: REG | AI-Based CTM: OPR | AI-Based CTM: USR | SS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Activities of hacker centres | Navigation system weaknesses | GPS spoofing attacks | Ability to force the UAV to land in a pre-picked zone | M | H | H | H/H | PRE: L/M; CRT: M/M | PRE: L/M; CRT: M/M | PRE: M/M; CRT: H/H | PRE: L/M; CRT: M/M | SS1 |
| Activities of hacker centres | Navigation system weaknesses | GPS jamming attacks | Loss of control of the UAV | M | M | L | M/M | PRE: L/M; CRT: L/L | PRE: L/M; CRT: L/L | PRE: M/M; CRT: M/M | PRE: L/M; CRT: L/M | SS2 |

| Threats (THR) | Vulnerabilities (VLN) | Attacks (ATA) | Effects on Security (EFF_SEC) | Effects on Safety (EFF_SAF) | Probability (PRE) | Severity (SVE) | Recovery (REC) | Criticality (CRT) | Countermeasures (CTM), (Actors, ACT) | CRT/CTM | Sub-Stories (SSX) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Activities of hacker centres | Navigation system weaknesses | GPS jamming attacks | Loss of control of the UAV | Damages caused by uncontrolled UAV | M | M | L | M/M | (1) sec; (2) saf; (3) comb | M/M; M/L; L/L | SS1sec; SS1saf; SS1comb |

| THR | VLN | ATA | EFF | PRE | SVE | REC | CRT (no REC/REC) | AI-Based CTM (Criticality via PRE Decreasing): DEV | AI-Based CTM: REG | AI-Based CTM: OPR | AI-Based CTM: USR | SS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Activities of hacker centres | Communication protocol weaknesses | DDoS attack | Increased network interactions | M | M | L | M/L | PRE: L/M; CRT: L/L | PRE: L/M; CRT: L/L | PRE: M/M; CRT: M/L | PRE: L/M; CRT: M/L | SS1 |
| Activities of hacker centres | Power supply devices | DDoS or targeted attack | Power depletion | L | M | H | L/M | PRE: L/L; CRT: L/M | PRE: L/L; CRT: L/L | PRE: L/L; CRT: L/L | PRE: L/L; CRT: L/L | SS2 |
| Activities of hacker centres | Possibility of tampering with the OS/firmware | Tampering attack | A non-functional ("bricked") state | L | H | M | M/M | PRE: M/M; CRT: M/M | PRE: M/M; CRT: M/M | PRE: M/M; CRT: M/M | PRE: M/M; CRT: M/M | SS3 |
| Activities of hacker centres | Vulnerabilities of transmitting data between satellites and ground station | Man-In-The-Middle (MITM) attack | Violation of confidentiality and integrity of smart satellites data | M | M | L | M/L | PRE: L/L; CRT: L/L | PRE: L/L; CRT: L/L | PRE: M/L; CRT: M/L | PRE: L/L; CRT: L/L | SS4 |
| Activities of hacker centres | Vulnerabilities of transmitting data between satellites and ground station | Data manipulation attacks | Violation of confidentiality and integrity of smart satellites data | M | H | M | H/H | PRE: L/L; CRT: M/M | PRE: L/L; CRT: M/M | PRE: M/M; CRT: H/H | PRE: L/L; CRT: M/M | SS5 |

| Threats (THR) | Vulnerabilities (VLN) | Attacks (ATA) | Effects on Security (EFF_SEC) | Effects on Safety (EFF_SAF) | Probability (PRE) | Severity (SVE) | Recovery (REC) | Criticality (CRT) | Countermeasures (CTM), (Actors, ACT) | CRT/CTM | Sub-Stories (SSX) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Activities of hacker centres | Power supply devices | DDoS or targeted attack | Power depletion | Uncontrolled drop | L | M | H | M/M | (1) sec; (2) saf; (3) comb | L/M; L/L; L/L | SS1sec; SS1saf; SS1comb |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Illiashenko, O.; Kharchenko, V.; Babeshko, I.; Fesenko, H.; Di Giandomenico, F. Security-Informed Safety Analysis of Autonomous Transport Systems Considering AI-Powered Cyberattacks and Protection. Entropy 2023, 25, 1123. https://doi.org/10.3390/e25081123
