Abstract

In the acquisition process of 3D cultural relics, noise is commonly encountered. To facilitate the generation of high-quality 3D models, we propose an approach based on graph signal processing that combines color and geometric features to denoise the point cloud. We divide the 3D point cloud into patches based on self-similarity theory and create an appropriate underlying graph with a Markov property. The features of the vertices in the graph are represented using 3D coordinates, normal vectors, and color. We formulate the point cloud denoising problem as a maximum a posteriori (MAP) estimation problem and use a graph Laplacian regularization (GLR) prior to identify the most probable noise-free point cloud. In the denoising process, we moderately simplify the 3D point cloud to reduce the running time of the denoising algorithm. The experimental results demonstrate that our proposed approach outperforms five competing methods in both subjective and objective assessments. It requires fewer iterations and exhibits strong robustness, effectively removing noise from the surface of cultural relic point clouds while preserving fine-scale 3D features such as texture and ornamentation. This results in more realistic 3D representations of cultural relics.

1. Introduction

Three-dimensional laser scanning technology has become increasingly popular in various fields of society, such as digitization, virtual display, and virtual restoration of cultural relics. However, the acquisition process of cultural relic point clouds often results in noise in geometry and color due to the inherent limitations of 3D laser scanners or depth cameras. This noise can be caused by occlusion resulting from various view angles, reflective materials, dust on the surface of objects, light intensities, and the operation of scanning personnel [1]. The cultural relic point cloud surface typically contains significant fine details, such as ornamentation or textures, which can be intertwined with surface noise. Effectively removing noise from the surface of the cultural relic point cloud while preserving the fine-scale 3D features is a significant challenge.

To acquire a high-precision 3D model of a cultural relic with realistic texture, it is essential to remove noise from the raw 3D point cloud. The noise in the point cloud can be divided into two categories based on its distribution: surface noise and outliers [2]. Outliers usually lie far from the surface of the point cloud and have sparse neighborhoods, so they are easy to remove using methods such as the boxplot method or special software. However, eliminating surface noise presents a greater challenge, as it is often closely intertwined with the underlying surface of the 3D point cloud. This is especially true when the surface of the 3D point cloud features texture and ornamentation.

To obtain clean point clouds for further processing, various surface smoothing techniques have been developed in the past two decades. These techniques include filtering-based methods [3,4,5,6], moving least squares (MLS)-based methods [7,8], locally optimal projection (LOP)-based methods [9,10], non-local methods [11,12,13], and sparsity-based methods [14,15,16,17]. Although these methods achieve excellent denoising results for 3D models with smooth surfaces, they have not yielded satisfactory results for point clouds of cultural relics, where they often cause over-smoothing and the loss of surface details. Striking a balance between preserving fine details and achieving effective denoising with these methods is challenging.

In recent years, several methods have been proposed for denoising point clouds, including the graph feature learning method [18,19,20,21,22,23,24,25,26] and the deep learning method [27,28,29,30,31,32,33].

The effectiveness of deep learning in denoising point clouds depends heavily on factors such as the geometric structure, the scale of the data, and the noise characteristics of the training set. When faced with an unknown scene or limited data, the method based on deep learning may not necessarily outperform traditional methods. For instance, a model trained using commonly available 3D point clouds may experience a significant decrease in performance when applied to point clouds of cultural relics, which are considered to be rare samples.

Graph-based denoising methods utilize graph filters to remove noise from point clouds represented by graphs [34]. Previous methods such as graph Laplacian regularization (GLR) [20] and the feature graph learning [23] algorithm have shown promising results in inferring the underlying graph structure of clean point clouds. However, these methods primarily rely on geometric priors, making it challenging to achieve effective denoising while preserving fine detail.

We raise an interesting question: if color perception information is added to guide the graph signal processing, can a balance between denoising effectiveness and detail preservation be achieved?

To investigate this, we propose a novel 3D point cloud denoising method based on graph signal processing specifically designed for cultural relic point clouds. Our contributions are twofold. First, we incorporate not only geometric information such as 3D coordinates and surface normals but also color distribution as a feature. The use of a multi-modal representation for vertex features leads to superior denoising performance. Second, we introduce a 3D point cloud simplification module that reduces the number of points in the point cloud, and thereby the running time of the denoising algorithm.

This paper is organized as follows: In Section 2, we introduce previous point cloud denoising methods. In Section 3, we describe the basic concepts of graph signal processing. In Section 4, we provide the details of our proposed method, which mainly focuses on surface noise removal. In Section 5, we present the experimental results and discussion. Finally, we present our conclusions.

2. Related Work

Point cloud denoising techniques can be divided into two main types: outlier removal techniques and surface noise smoothing techniques. Outlier removal is a relatively straightforward process, as outliers are usually distinct from other data points and can be easily identified and removed. On the other hand, surface noise removal can be more challenging, as surface noise is often random and irregular and requires more sophisticated techniques to be removed. In this paper, we will primarily focus on surface noise removal methods.

Filtering-based methods: Filtering-based methods were initially used for 2D image smoothing and were later extended to denoise 3D point clouds [2]. These methods assume that the noise on the surface of point clouds is high-frequency noise, and they use filters that target vertices or face normals. Early approaches utilized Laplacian smoothing or improved Laplacian transform based on vertex positions to denoise triangular meshes. However, this often resulted in the excessive smoothing of surface features and was not effective when dealing with large amounts of noise. In recent years, filtering-based methods have been significantly improved. Notable examples include guided normal filtering [3,4] and rolling guidance normal filtering [5], which have demonstrated successful denoising effects in practical applications [6]. Nevertheless, a major drawback of these methods is that the normal filtering process tends to blur the small-scale features of the 3D model surface, resulting in the over-smoothing of 3D models with intricate surface details.

MLS-based and LOP-based methods: Early in the development of denoising technology, moving least squares (MLS) and local optimal projection (LOP) methods were well-known and popular denoising methods. However, their denoising effect is limited, and they are no longer the mainstream methods. MLS-based methods [7] approximate the point cloud using a smooth surface and project the points from the input point cloud onto the fitted surface. These methods are unstable in cases of a low sampling rate or high curvature and are highly sensitive to outliers [8]. LOP-based methods [9] aim to find the best possible solution to represent the underlying surface within a local region of the search space while ensuring an even distribution across the input point cloud. However, these methods can suffer from over-smoothing [10].

Non-local methods: Non-local methods [11,12,13] establish self-similarity among surface patches in the point cloud by solving an optimization problem. However, these methods often suffer from high computational complexity when searching for non-local similar patches.

Sparsity-based methods: Sparsity-based methods [14,15,16] transform the denoising problem of a 3D point cloud into an optimization problem. This is achieved by obtaining a sparse representation of the surface normal by minimizing the number of non-zero coefficients with sparsity regularization. To preserve the sharp features of the 3D point cloud, either the $\ell_0$ or the $\ell_1$ norm is used. It should be noted that sparsity-based methods tend to give better denoising results when the noise is small. However, for high noise levels, these methods can suffer from either over-sharpening or over-smoothing [17].

Graph-based methods: Graph-based denoising methods [18,19,20,21,22,23,24,25,26] transform the problem of removing noise into a graph-constrained optimization problem and perform noise removal through the structure and connectivity of the graph. However, a drawback of these approaches is that they often misestimate the local surface by relying solely on the geometry information of the vertices. In addition, the performance of graph-based denoising methods remains unstable for highly noisy point clouds.

Deep learning methods: Deep learning denoising methods [27,28,29,30,31,32,33,34,35] train an end-to-end neural network to remove noise. During the training stage, the model learns the mapping between noisy and clean point clouds. In the testing stage, the trained model is used to denoise point clouds with similar noise characteristics and geometric shapes. Deep learning methods are more effective at denoising and preserving fine features. However, these methods require a large volume of training data, making them time-consuming and impractical for unknown scenes. In addition, the efficiency of these algorithms still needs to be optimized and improved.

Several alternative denoising methods have been proposed by other scholars. For instance, there is a point cloud denoising algorithm based on a method library [36], as well as a laser point cloud denoising method that uses principal component analysis (PCA) and surface fitting [37]. However, these methods often encounter the common issue of inadequate denoising of sharp edges, resulting in excessive smoothing.

Recently, some scholars have proposed deep-unfolding denoising [38,39,40] and quantum-based denoising [41,42], which have achieved results competitive with the state of the art in image denoising tasks. Adapting the ideas behind these methods to point cloud denoising is a valuable direction for future research.

3. Preliminaries

3.1. Graph Signal and Graph Laplacian

In this section, we present a brief overview of fundamental concepts in graph signal processing. We define an undirected weighted graph $\mathcal{G} = (\mathcal{V}, \mathcal{E}, \mathbf{W})$ for a vertex set $\mathcal{V}$ of cardinality $N$, where the edge set $\mathcal{E}$ connects vertices of the form $(i, j)$. Each edge $(i, j)$ is assigned a non-negative weight $w_{i,j}$, and the adjacency matrix $\mathbf{W}$ is a real $N \times N$ matrix with values ranging from 0 to 1. The combinatorial graph Laplacian is defined as $\mathbf{L} = \mathbf{D} - \mathbf{W}$, where $\mathbf{D}$ represents the degree matrix of the graph $\mathcal{G}$, with $d_{i,i} = \sum_{j} w_{i,j}$ denoting the degree of each vertex.
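As a minimal sketch of these definitions, the following Python snippet builds the combinatorial Laplacian from a small symmetric weight matrix. The function name and the toy weights are illustrative assumptions and are not taken from the proposed method.

```python
def combinatorial_laplacian(W):
    """Return L = D - W for a symmetric, non-negative weight matrix W (list of lists)."""
    n = len(W)
    # The degree of vertex i is the sum of the weights of its incident edges.
    degrees = [sum(row) for row in W]
    return [[(degrees[i] if i == j else 0.0) - W[i][j] for j in range(n)]
            for i in range(n)]


# Toy 3-vertex path graph: edge (0, 1) has weight 0.8 and edge (1, 2) has weight 0.5.
W = [[0.0, 0.8, 0.0],
     [0.8, 0.0, 0.5],
     [0.0, 0.5, 0.0]]
L = combinatorial_laplacian(W)
```

Every row of the resulting matrix sums to zero, which is why a constant signal incurs no smoothness penalty.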

3.2. Graph Laplacian Prior

Graph signal data reside on the vertices of a graph, which include 3D coordinates, normal vectors, and color information on a 3D point cloud. A graph signal $\mathbf{z}$ is considered to be smooth with respect to the topology of $\mathcal{G}$ if it satisfies the following condition:

$$\mathbf{z}^{\top} \mathbf{L} \mathbf{z} = \sum_{(i,j) \in \mathcal{E}} w_{i,j} (z_i - z_j)^2 < \epsilon, \tag{1}$$

where $\epsilon$ is a positive scalar, and the Laplacian matrix $\mathbf{L}$ is a symmetric positive semi-definite matrix. The larger $w_{i,j}$ is, the more similar $z_i$ and $z_j$ are and the smaller the value of $(z_i - z_j)^2$ is.

Formula (1) forces the signal $\mathbf{z}$ to adapt to the topology of $\mathcal{G}$, which is referred to as graph Laplacian regularization (GLR), also known as the graph signal smoothness prior. By minimizing the graph Laplacian regularization term, the signal can be smoothed. This prior is used in our paper to remove the surface noise, as discussed in Section 4.
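To illustrate how the regularizer separates smooth from noisy signals, the sketch below evaluates the GLR term edge by edge on a toy path graph. The function name `glr` and the example signals are hypothetical and chosen only for illustration.

```python
def glr(W, z):
    """Graph Laplacian regularizer z^T L z, evaluated edge by edge.

    For a symmetric W this equals the sum over undirected edges (i < j)
    of W[i][j] * (z[i] - z[j]) ** 2.
    """
    n = len(z)
    return sum(W[i][j] * (z[i] - z[j]) ** 2
               for i in range(n) for j in range(i + 1, n))


# Path graph on three vertices with unit edge weights.
W = [[0.0, 1.0, 0.0],
     [1.0, 0.0, 1.0],
     [0.0, 1.0, 0.0]]
smooth = [1.0, 1.1, 1.2]   # varies slowly along the graph
rough = [1.0, -1.0, 1.0]   # oscillates between neighboring vertices
glr_smooth = glr(W, smooth)
glr_rough = glr(W, rough)
```

The oscillating signal yields a much larger value than the slowly varying one, so minimizing this term pulls connected vertices toward similar values.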

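If we consider $\mathbf{L}$ as a function of the signal $\mathbf{z}$, then the reweighted prior is redefined as

$$\mathbf{z}^{\top} \mathbf{L}(\mathbf{z}) \mathbf{z} = \sum_{(i,j) \in \mathcal{E}} w_{i,j}(z_i, z_j) (z_i - z_j)^2, \tag{2}$$

where $w_{i,j}(z_i, z_j)$ is the (i, j)-th element of the corresponding adjacency matrix $\mathbf{W}$.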

4. The Proposed Method

We consider a point cloud contaminated by noise that follows a Gaussian distribution. Viewed through the theory of graph signal processing, our goal is to move the noisy points toward the underlying surface to generate a clean point cloud.

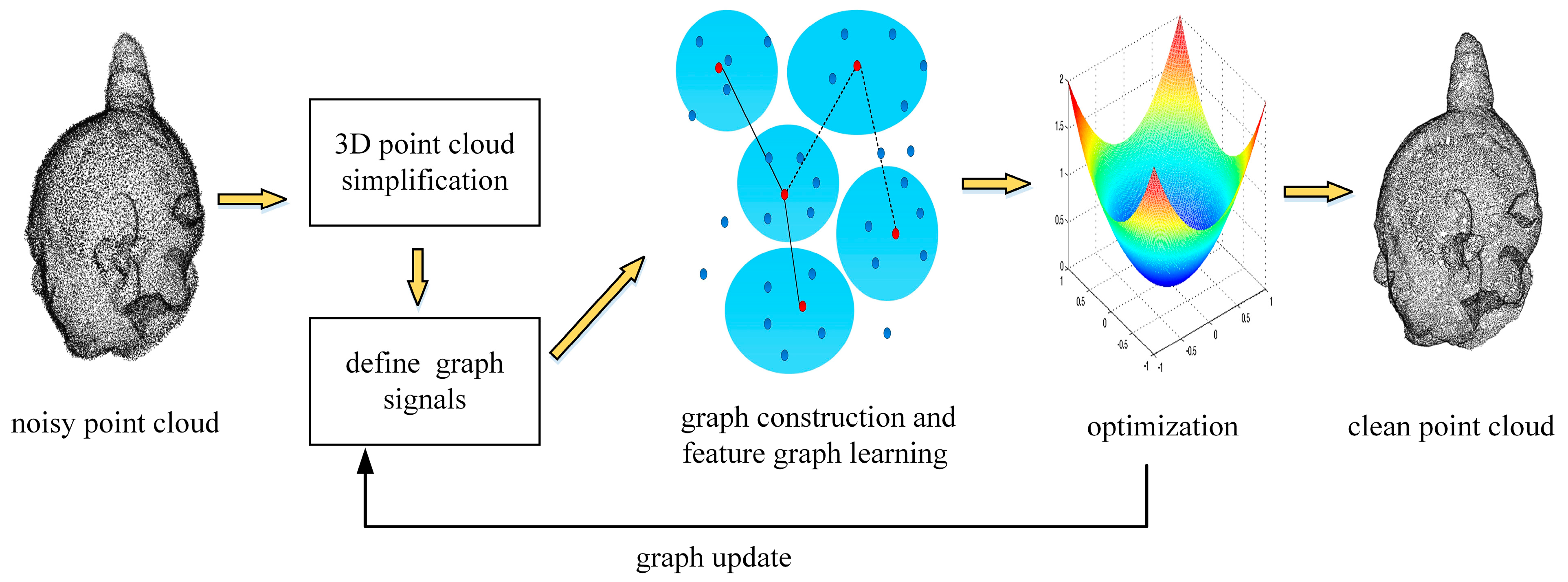

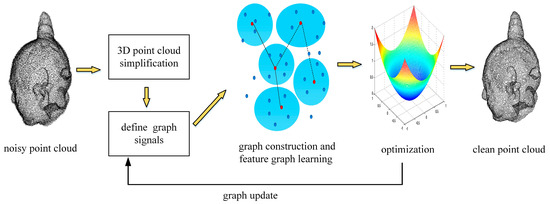

Figure 1.

The proposed denoising approach.

Figure 1 illustrates the implementation of our denoising approach, which consists of the following four modules:

- Simplification of 3D point cloud: reducing the collection of points to a smaller subset that retains its fundamental topology, thereby reducing the running time of the denoising algorithm;

- Definition of graph signals: interpreting the geometric and color information of the vertices in the input point clouds as graph signals; the geometric information includes geometry coordinates and normal vectors;

- Graph construction and feature graph learning: defining local patches within the point cloud and constructing a graph model with Markovian properties; using a feature graph learning scheme to determine edge weights and solving a maximum a posteriori (MAP) estimation problem with GLR as the signal prior;

- Application of an optimization algorithm to enforce smoothness on the graph signal: the feature metric matrix M is optimized by minimizing the GLR, and M and the point cloud are then updated alternately until the algorithm converges, yielding the clean point cloud.

4.1. Three-Dimensional Point Cloud Simplification

High-precision 3D artifact point clouds usually have many points, inevitably leading to high computational complexity and long processing times during denoising. Therefore, it is necessary to simplify the raw data to a more appropriate size without affecting the denoising effect. We use the method described in [43] for simplifying point clouds. The simplification process is divided into the following four steps:

- A bounding box for the point cloud is created. A local k-d tree consisting of 27 cubes of size 3 × 3 × 3 is constructed. The advantage of using this form to organize the points in the point cloud is that the neighborhood points and leaf nodes of the given point can be accurately identified.

- Five feature indexes are calculated to extract features from the point cloud. These five feature indexes include the curvature feature index, the density feature index, the edge feature index, the terrain feature index, and the 3D feature index, denoted as a, b, c, d, and e, respectively. The advantage of this multiple-feature indexing approach is that it can deal with different types of point clouds and discover more intrinsic characteristics of the point cloud.

- The weights of the five feature indexes are calculated using the analytic hierarchy process (AHP) method based on data features. Assuming that $w_a$, $w_b$, $w_c$, $w_d$, and $w_e$ are the weights of feature indexes a, b, c, d, and e, the quantification result of point p is $z = w_a a + w_b b + w_c c + w_d d + w_e e$.

- Points with larger z-values are identified as feature points, and points with smaller z-values are identified as non-feature points. All feature points form the simplified point cloud. In addition, using the k-d tree constructed earlier, if a leaf node contains no feature point, the non-feature point closest to the center of gravity of the node is added to the simplified point cloud.
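The selection logic of the last two steps can be sketched as follows. This is a simplified illustration, not the implementation of [43]: it assumes that the five feature indexes and the AHP weights are already available, that the quantification result is a weighted sum compared against a hypothetical `threshold`, that each point's leaf node is given by a precomputed `leaf_of` list, and that the center of gravity of a node is the centroid of its points.

```python
def simplify(points, features, weights, threshold, leaf_of):
    """Return sorted indices of feature points plus one point per feature-free leaf.

    points    : list of (x, y, z) tuples
    features  : list of 5-tuples (a, b, c, d, e), one per point (assumed precomputed)
    weights   : five weights, e.g. obtained with AHP (assumed given)
    threshold : score above which a point counts as a feature point (assumption)
    leaf_of   : leaf-node id of each point (assumed precomputed from the tree)
    """
    scores = [sum(w * f for w, f in zip(weights, feats)) for feats in features]
    keep = {i for i, s in enumerate(scores) if s > threshold}

    # Group point indices by leaf node.
    leaves = {}
    for i, leaf in enumerate(leaf_of):
        leaves.setdefault(leaf, []).append(i)

    for members in leaves.values():
        if any(i in keep for i in members):
            continue
        # No feature point in this leaf: keep the point closest to the centroid.
        centroid = [sum(points[i][d] for i in members) / len(members) for d in range(3)]
        nearest = min(
            members,
            key=lambda i: sum((points[i][d] - centroid[d]) ** 2 for d in range(3)),
        )
        keep.add(nearest)
    return sorted(keep)


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0),
          (10.0, 0.0, 0.0), (11.0, 0.0, 0.0), (12.0, 0.0, 0.0)]
features = [(1, 1, 1, 1, 1)] + [(0, 0, 0, 0, 0)] * 5
weights = (0.4, 0.2, 0.2, 0.1, 0.1)
leaf_of = [0, 0, 0, 1, 1, 1]
kept = simplify(points, features, weights, threshold=0.5, leaf_of=leaf_of)
```

In this toy input, the first leaf keeps its single feature point, while the second leaf contains no feature point and therefore contributes the point nearest to its centroid.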

4.2. Defining the Graph Signal by Combining Geometry and Color

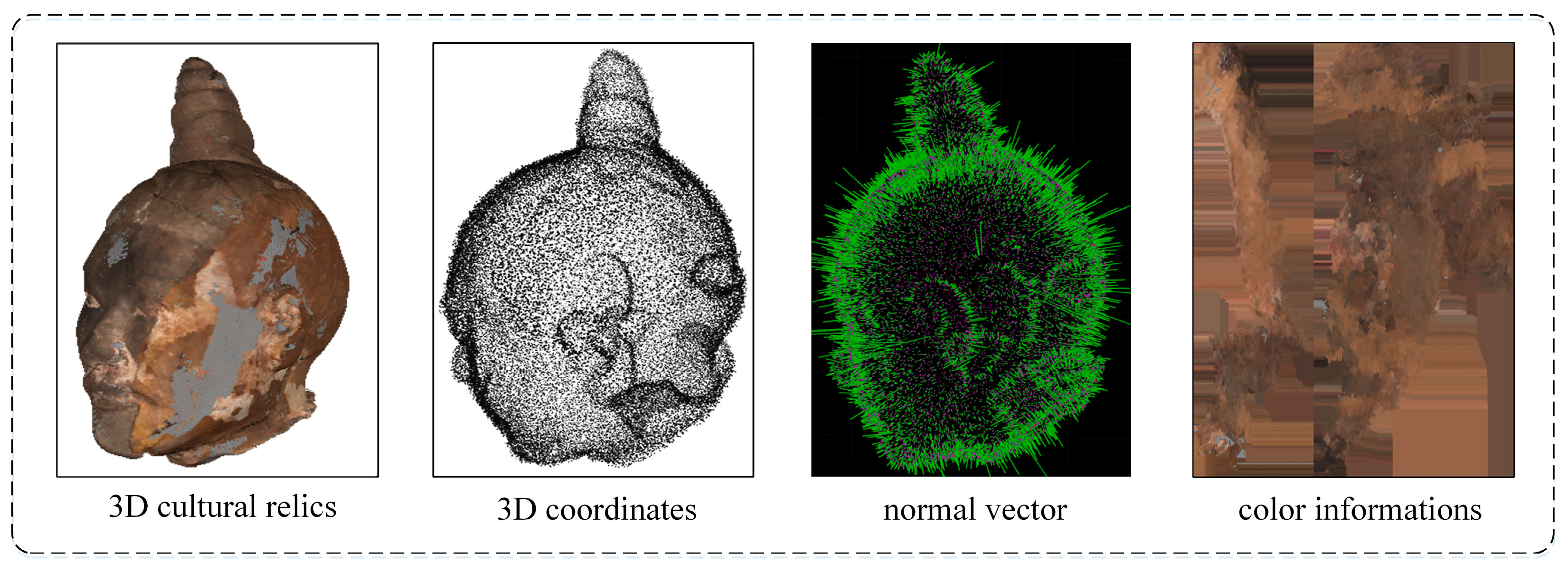

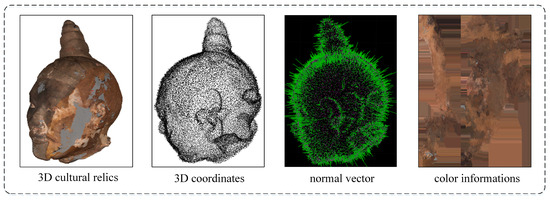

Color, an important piece of information of a point cloud, has been used for 3D model retrieval [44] and point cloud segmentation [45,46]. The combination of color and geometry can positively affect graph construction, which is more semantically meaningful than using geometry alone [47]. In this paper, we aim to use both the color and geometry attributes of a vertex in the point cloud to investigate their crucial role in denoising. Figure 2 illustrates the color and geometry information of the point cloud.

Figure 2.

Geometry and color information of a vertex in a point cloud.

We constructed a k-NN graph with Markovian properties, where each vertex is connected to its k nearest neighbors by edges with associated weights. In addition to using the 3D coordinates and normal vector of the vertices as signals, we added the color attributes as graph signals. The feature vector of a vertex in the graph is denoted as

$$\mathbf{f}_i = [\mathbf{p}_i^{\top}, \mathbf{n}_i^{\top}, \mathbf{c}_i^{\top}]^{\top}, \tag{3}$$

where $\mathbf{p}_i \in \mathbb{R}^3$ denotes the 3D coordinates, $\mathbf{n}_i \in \mathbb{R}^3$ the normal vector, and $\mathbf{c}_i \in \mathbb{R}^3$ the RGB color information for vertex $i$.

The normal vector is one of the important properties of the points in a point cloud: it gives the orientation of the underlying surface at each point. For example, the normal vectors in Figure 3 point toward the outside of the surface of the point cloud. The normal vector is usually a 3D vector, and it can be used to describe properties of the point cloud surface such as flatness, curvature, and normal variation.

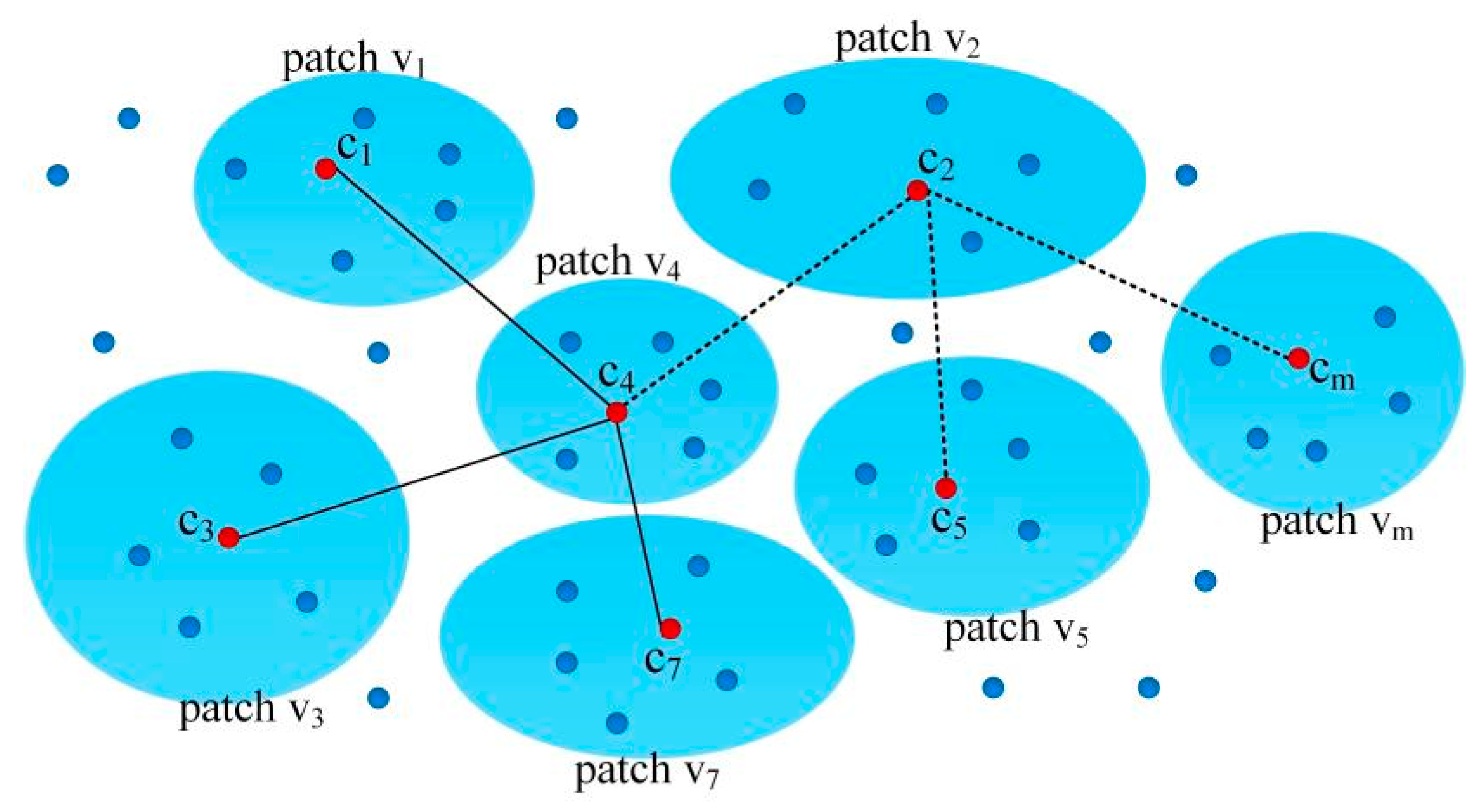

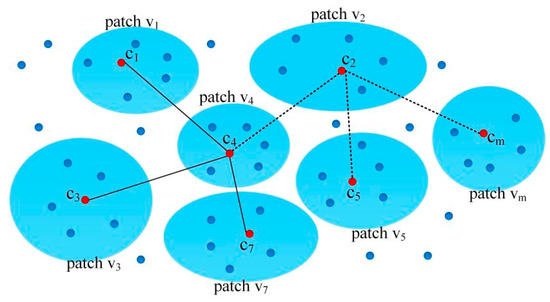

Figure 3.

Illustration of a patch and its adjacent patches.

4.3. Constructing a Graph Based on Self-Similarity Theory

We followed the method described in [26] to define the local patches in a point cloud. These patches may overlap with each other. We assumed that these patches were self-similar [48] and established connections between corresponding points, forming a k-NN graph. We considered each local patch of the point cloud as a matrix, which had a low rank. Consequently, the problem of denoising the point cloud can be reformulated as a task for minimizing the rank of the matrix.

In this study, we used a uniform sampling method to select m center points from point cloud Y. For each center point, we used the k-nearest-neighbor (k-NN) [49] algorithm to identify its k nearest neighbors in terms of Euclidean distance. A patch is a set of points composed of one center point and its k nearest neighbors. The number of nearest neighbors k was set to an empirical value that depends on the number of points in the point cloud Y. As a result, we obtained m local patches from the point cloud Y, as shown in Figure 3. The collection of all points within these patches is referred to as the patch set.

Then, we identified the adjacent patches of each patch. We used the k-NN algorithm to find, among the center points of all patches, those nearest to the center point of the given patch. The patches in which these nearest center points are located are recognized as its adjacent patches. Figure 3 shows a patch together with its three adjacent patches. For each point in the patch, the corresponding point in an adjacent patch is the one at the smallest Euclidean distance.

In the process of constructing the local patches mentioned above, each patch is only related to its adjacent patches. The vertices in the patch are only connected to the corresponding vertices in the adjacent patch. As a result, these vertices and edges form a graph model with Markov properties.
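The patch construction described in this section can be sketched with a brute-force nearest-neighbor search. The helper names, the index-based uniform sampling, and the parameter `n_adj` (number of adjacent patches) are assumptions made for illustration; a practical implementation would use a spatial index instead of sorting all distances.

```python
def sq_dist(p, q):
    """Squared Euclidean distance between two 3D points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def build_patches(points, m, k):
    """Return m center indices and m patches (center index followed by its k nearest neighbors)."""
    n = len(points)
    # Uniform sampling of center indices over the point list (assumed strategy).
    centers = [(i * n) // m for i in range(m)]
    patches = []
    for c in centers:
        others = sorted((i for i in range(n) if i != c),
                        key=lambda i: sq_dist(points[i], points[c]))
        patches.append([c] + others[:k])
    return centers, patches


def adjacent_patches(points, centers, n_adj):
    """For each patch, list the n_adj patches whose center points are nearest."""
    adjacency = []
    for a, ca in enumerate(centers):
        others = sorted((b for b in range(len(centers)) if b != a),
                        key=lambda b: sq_dist(points[centers[b]], points[ca]))
        adjacency.append(others[:n_adj])
    return adjacency


# Nine points on a line, three patches of one center plus two neighbors each.
points = [(float(i), 0.0, 0.0) for i in range(9)]
centers, patches = build_patches(points, m=3, k=2)
adjacency = adjacent_patches(points, centers, n_adj=1)
```

Because each patch is linked only to the patches returned by `adjacent_patches`, edges are created only between corresponding points of neighboring patches, which is the locality that the Markov property refers to.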

4.4. Graph Feature Learning

We aim to calculate an optimal Mahalanobis distance for the given signals, which are represented as length-9 vectors of relevant features in a graph. We assumed two sets, and , which denote the k-nearest neighbors to vertices and , respectively. If or , then

where and represent the normal vector of vertices and ; and represent the color information of vertices and ; and represent the relative contribution of the 3D coordinates and normal vectors in the constructed graph; and represents the relative contribution of color.

Defining , we express (4) in matrix form as

where is the feature difference between the two connected nodes and . The appropriate parameters , , and play an important role in achieving good denoising performance. How to determine these parameters is the next aspect to consider.

The 3D coordinates, normal vector, and color information are features of different scales. In this context, we used the Mahalanobis distance as a measure of the similarity between the two signals. The Mahalanobis distance is written as

where is the Mahalanobis distance matrix, which is a measure of the relative importance of individual features in the calculation of .

In the context of a graph, the edge weight represents the similarity of the signals between two samples. We define the edge weight using the Gaussian kernel, a commonly used method, which guarantees that the resulting graph Laplacian matrix is positive semi-definite.
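The relationship between the metric matrix and the edge weight can be sketched as follows. The snippet assumes that the Gaussian kernel takes the common form exp(-d), where d is the squared Mahalanobis distance between two length-9 feature vectors; the function names are hypothetical, and the exact kernel used in the method may include additional scaling.

```python
import math


def feature_vector(position, normal, color):
    """Concatenate 3D coordinates, normal vector, and RGB color into a length-9 list."""
    return list(position) + list(normal) + list(color)


def mahalanobis_sq(fi, fj, M):
    """Squared Mahalanobis distance (fi - fj)^T M (fi - fj) for a square matrix M."""
    diff = [a - b for a, b in zip(fi, fj)]
    n = len(diff)
    return sum(diff[r] * M[r][c] * diff[c] for r in range(n) for c in range(n))


def edge_weight(fi, fj, M):
    """Gaussian-kernel edge weight; the exp(-distance) form is an assumption."""
    return math.exp(-mahalanobis_sq(fi, fj, M))


# With M equal to the identity, the distance reduces to the squared Euclidean distance.
identity = [[1.0 if r == c else 0.0 for c in range(9)] for r in range(9)]
fi = feature_vector((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.2, 0.2, 0.2))
fj = feature_vector((0.1, 0.0, 0.0), (0.0, 0.0, 1.0), (0.8, 0.2, 0.2))
d = mahalanobis_sq(fi, fj, identity)
w = edge_weight(fi, fj, identity)
```

Learning M amounts to rescaling and mixing the coordinate, normal, and color differences before they enter the kernel, so two geometrically close points with very different colors can still receive a small weight.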

The GLR, expressed in Formula (2), is redefined as

4.5. Optimization Algorithm

We considered the solution of a clean point cloud as a feature graph learning problem. As discussed in [23], we minimized the GLR and determined the appropriate underlying graph based on signal z.

Additionally, we assumed an additive noise model, in which the 3D coordinates of the noisy point cloud are the sum of the 3D coordinates of the clean point cloud and additive white Gaussian noise (AWGN) [50] that appears near the underlying surface. The AWGN has zero mean and standard deviation σ.
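For experimentation, the additive noise model can be simulated as in the sketch below, which perturbs every coordinate with independent zero-mean Gaussian noise. The function name and the `seed` argument are illustrative assumptions.

```python
import random


def add_gaussian_noise(points, sigma, seed=None):
    """Return a copy of points with i.i.d. zero-mean Gaussian noise added to each coordinate."""
    rng = random.Random(seed)
    return [tuple(c + rng.gauss(0.0, sigma) for c in p) for p in points]


clean = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
noisy = add_gaussian_noise(clean, sigma=0.02, seed=0)
```

Fixing the seed makes repeated runs comparable, which is convenient when the same noisy input has to be passed to several denoising methods.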

Given a noisy set , the goal is to minimize the noise and obtain a noiseless set . This is achieved by applying the maximum a posteriori criterion, which involves finding the most probable given the observed .

where is the prior probability distribution of and is the likelihood function.

In the case of additive Gaussian white noise, the likelihood function is defined as follows:

where is the Frobenius norm.

If is a graph with Markov properties [51], and GLR is taken as the prior probability distribution of the set , then the following is evident:

where and is the Markov distance matrix.

The denoising formula can be obtained by combining (11)–(13).

It should be noted that is a constraint parameter closely related to the algorithm performance.
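The step from the MAP criterion to a regularized least-squares problem can be made explicit. The following is a sketch of the standard derivation under assumed notation: $\mathbf{X}$ for the noisy coordinates, $\mathbf{Y}$ for the clean coordinates, $\sigma$ for the noise standard deviation, $\mathbf{L}$ for the graph Laplacian, and $\lambda$, $\gamma$ for positive constants. The dependence of $\mathbf{L}$ on the metric matrix and the constraint on that matrix are omitted here, so the constants and constraints may differ from those of the full formulation.

```latex
\begin{aligned}
\hat{\mathbf{Y}} &= \arg\max_{\mathbf{Y}} \; P(\mathbf{X} \mid \mathbf{Y})\, P(\mathbf{Y}), \\
P(\mathbf{X} \mid \mathbf{Y}) &\propto \exp\!\left( -\frac{\lVert \mathbf{X} - \mathbf{Y} \rVert_F^2}{2\sigma^2} \right),
\qquad
P(\mathbf{Y}) \propto \exp\!\left( -\lambda \operatorname{tr}\!\left( \mathbf{Y}^{\top} \mathbf{L} \mathbf{Y} \right) \right), \\
\hat{\mathbf{Y}} &= \arg\min_{\mathbf{Y}} \; \lVert \mathbf{X} - \mathbf{Y} \rVert_F^2
 + \gamma \operatorname{tr}\!\left( \mathbf{Y}^{\top} \mathbf{L} \mathbf{Y} \right),
\qquad \gamma = 2\sigma^2 \lambda .
\end{aligned}
```

The last line follows by taking the negative logarithm of the posterior and multiplying by $2\sigma^2$, which does not change the minimizer.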

Denoising a 3D point cloud is an iterative process. In the first iteration, M is initialized with the identity matrix. Then, the Laplacian matrix L is computed, and the conjugate gradient method [52] is used to solve for Y. In the subsequent iteration, Y is updated, and the optimization problem of M is solved using the proximal gradient (PG) method [53]. The values of M and Y are updated alternately until they converge.

The optimization algorithm is presented in Algorithm 1.

| Algorithm 1: Optimization algorithm |
| --- |
| Input: noisy point cloud, number of patches m, number of nearest neighbors k, number of adjacent patches, trace constraint C. |
| Output: denoised point cloud Y. |
| 1 Initialize Y with the noisy point cloud; |
| 2 for iter = 1, 2, … do |
| 3 estimate normals for Y; |
| 4 initialize m empty patches V; |
| 5 find the adjacent patches; |
| 6 initialize M with the identity matrix; |
| 7 compute the feature distance for each vertex pair (i, j); |
| 8 solve M; |
| 9 compute adjacency matrix W over all patches; |
| 10 compute Laplacian matrix L; |
| 11 solve Y with (14); |
| 12 end |
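The update of Y for a fixed Laplacian can be sketched with a plain conjugate gradient solver. The snippet assumes the simplified objective ||x - y||^2 + gamma * y^T L y for a single coordinate channel, whose minimizer satisfies (I + gamma * L) y = x; the names `glr_denoise` and `gamma` are assumptions, and the full step in (14) may contain further terms.

```python
def conjugate_gradient(apply_A, b, tol=1e-10, max_iter=200):
    """Solve A x = b for a symmetric positive definite operator given as a function."""
    x = [0.0] * len(b)
    r = b[:]                      # residual b - A x for the initial guess x = 0
    p = r[:]
    rs = sum(v * v for v in r)
    for _ in range(max_iter):
        if rs < tol:              # squared residual norm is small enough
            break
        Ap = apply_A(p)
        alpha = rs / sum(pi * api for pi, api in zip(p, Ap))
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = sum(v * v for v in r)
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return x


def glr_denoise(L, x, gamma):
    """Return y minimizing ||x - y||^2 + gamma * y^T L y by solving (I + gamma L) y = x."""
    n = len(x)

    def apply_A(v):
        return [v[i] + gamma * sum(L[i][j] * v[j] for j in range(n)) for i in range(n)]

    return conjugate_gradient(apply_A, x)


# Laplacian of a 3-vertex path graph with unit weights and a noisy "spike" signal.
L = [[1.0, -1.0, 0.0],
     [-1.0, 2.0, -1.0],
     [0.0, -1.0, 1.0]]
x = [0.0, 1.0, 0.0]
y = glr_denoise(L, x, gamma=1.0)
```

For this toy input the spike is spread over its neighbors while the mean of the signal is preserved, because the rows of the Laplacian sum to zero.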

5. Experiment Results and Analysis

5.1. Experiment Environment and Dataset

Our method was implemented on a desktop computer running MS Windows 10. The computer was equipped with an Intel® Core™ i9-9900K CPU (3.60 GHz), 64 GB of RAM, and two GeForce RTX 2070 GPUs. The implementation was written in MATLAB R2019b.

To demonstrate the effectiveness of our approach, we performed experiments on 3D point clouds of terracotta warrior fragments, tiles from the Qin Dynasty, and Tang tri-color Hu terracotta sculptures, as shown in Figure 4. We achieved the best performance of the algorithm by selecting the optimal parameters. We repeated the experiments thirty times and calculated the average results for three metrics: SNR, MSE, and running time.

Figure 4.

Three-dimensional point clouds of cultural relics: (a–f) terracotta warrior fragments numbered G3-I-b-70, 4#yt, G10-52, G10-46-5, G3-I-C-94, and G10-11-43(47); (g,h) Qin Dynasty tiles numbered Q002789 and Q003418; (i,j) Tang tri-color Hu terracotta sculptures numbered H73 and H80.

5.2. Evaluation Metrics

The evaluation of the denoising results was performed using visual effects, SNR, and MSE, following recent point cloud denoising research. We consider the real point cloud and the predicted point cloud; the numbers of points in the two point clouds may not be equal.

To measure the fidelity of the denoising result, we used the MSE, which is the minimum absolute error sum of the normal direction difference between the noisy point cloud and the denoised point cloud. A lower MSE value indicates a better denoising effect. The calculation of the MSE is as follows:

The SNR is a measure of the signal-to-noise ratio in a 3D point cloud, usually expressed in decibels. A higher signal-to-noise ratio indicates better denoising reliability of the algorithm. The SNR can be calculated using the following formula:
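As a hedged illustration of how such metrics can be computed for point clouds of different sizes, the sketch below uses one common convention from the point cloud denoising literature: the MSE is the average of the two directed mean squared nearest-neighbor distances, and the SNR is ten times the base-10 logarithm of the mean squared norm of the predicted points divided by the MSE. These definitions and all function names are assumptions and may differ from the exact formulas used in the experiments.

```python
import math


def _sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def _mean_nn_sq(source, target):
    """Mean squared distance from each source point to its nearest target point."""
    return sum(min(_sq_dist(p, q) for q in target) for p in source) / len(source)


def mse(truth, pred):
    """Symmetric nearest-neighbor mean squared error between two point clouds."""
    return 0.5 * (_mean_nn_sq(truth, pred) + _mean_nn_sq(pred, truth))


def snr_db(truth, pred):
    """Signal-to-noise ratio in decibels under the assumed definition."""
    error = mse(truth, pred)
    if error == 0.0:
        return math.inf
    signal = sum(_sq_dist(p, (0.0, 0.0, 0.0)) for p in pred) / len(pred)
    return 10.0 * math.log10(signal / error)


truth = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
pred = [(1.0, 0.1, 0.0), (0.0, 1.1, 0.0)]
error = mse(truth, pred)
ratio = snr_db(truth, pred)
```

The brute-force nearest-neighbor search is quadratic in the number of points, so a spatial index would be needed for point clouds of realistic size.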

5.3. Algorithm Performance Analysis

5.3.1. The Effect of Parameters on Algorithm Performance

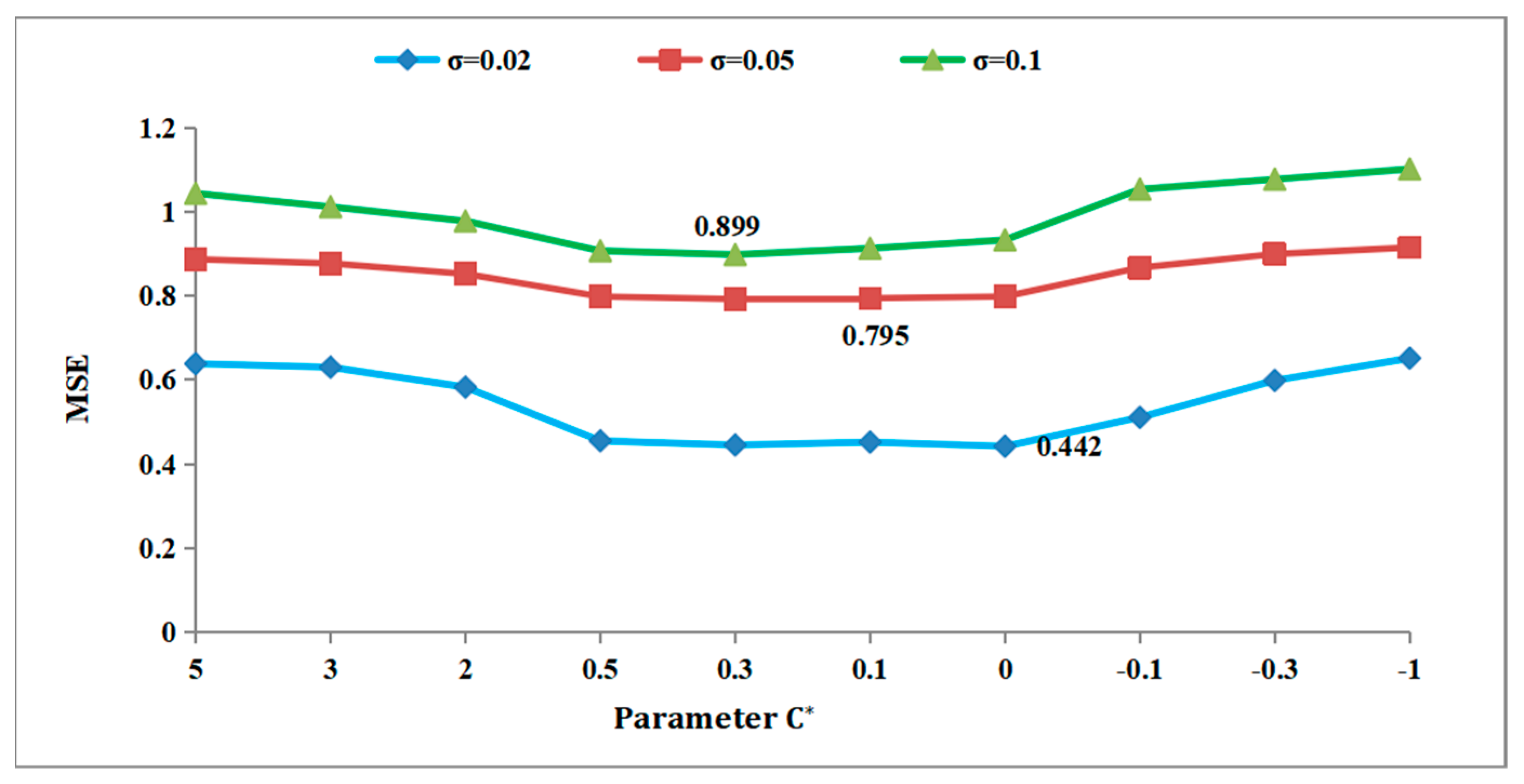

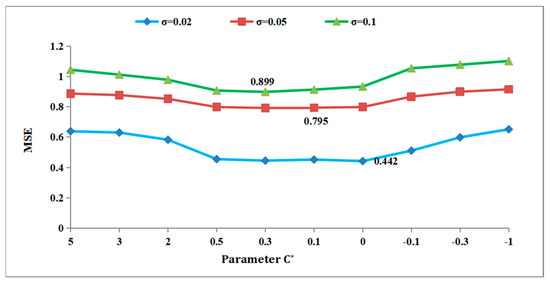

Our algorithm has four main parameters: the number of patches m, the number of points in each patch k, the number of nearest neighboring patches, and the constraint parameter C. Among these parameters, C has a significant impact on the denoising effect. Therefore, it is important to determine the optimal value for C. To do so, we can first choose an initial value based on experience and then explore values around this initial value with a certain step size to determine the optimal value.
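The search procedure described above can be sketched as a small one-dimensional grid search. The function `search_parameter`, its arguments, and the stand-in `toy_mse` objective are hypothetical; in practice the objective would run the denoising algorithm and return the measured MSE.

```python
def search_parameter(evaluate, initial, step, n_steps):
    """Try values around an initial guess and return the one with the lowest score."""
    candidates = [initial + i * step for i in range(-n_steps, n_steps + 1)]
    # Negative values are discarded on the assumption that the parameter is non-negative.
    candidates = [c for c in candidates if c >= 0.0]
    return min(candidates, key=evaluate)


def toy_mse(c):
    """Stand-in for running the denoiser with parameter c and measuring the MSE."""
    return (c - 0.3) ** 2


best = search_parameter(toy_mse, initial=0.2, step=0.1, n_steps=2)
```

A coarse pass with a large step followed by a finer pass around the best candidate keeps the number of full denoising runs small.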

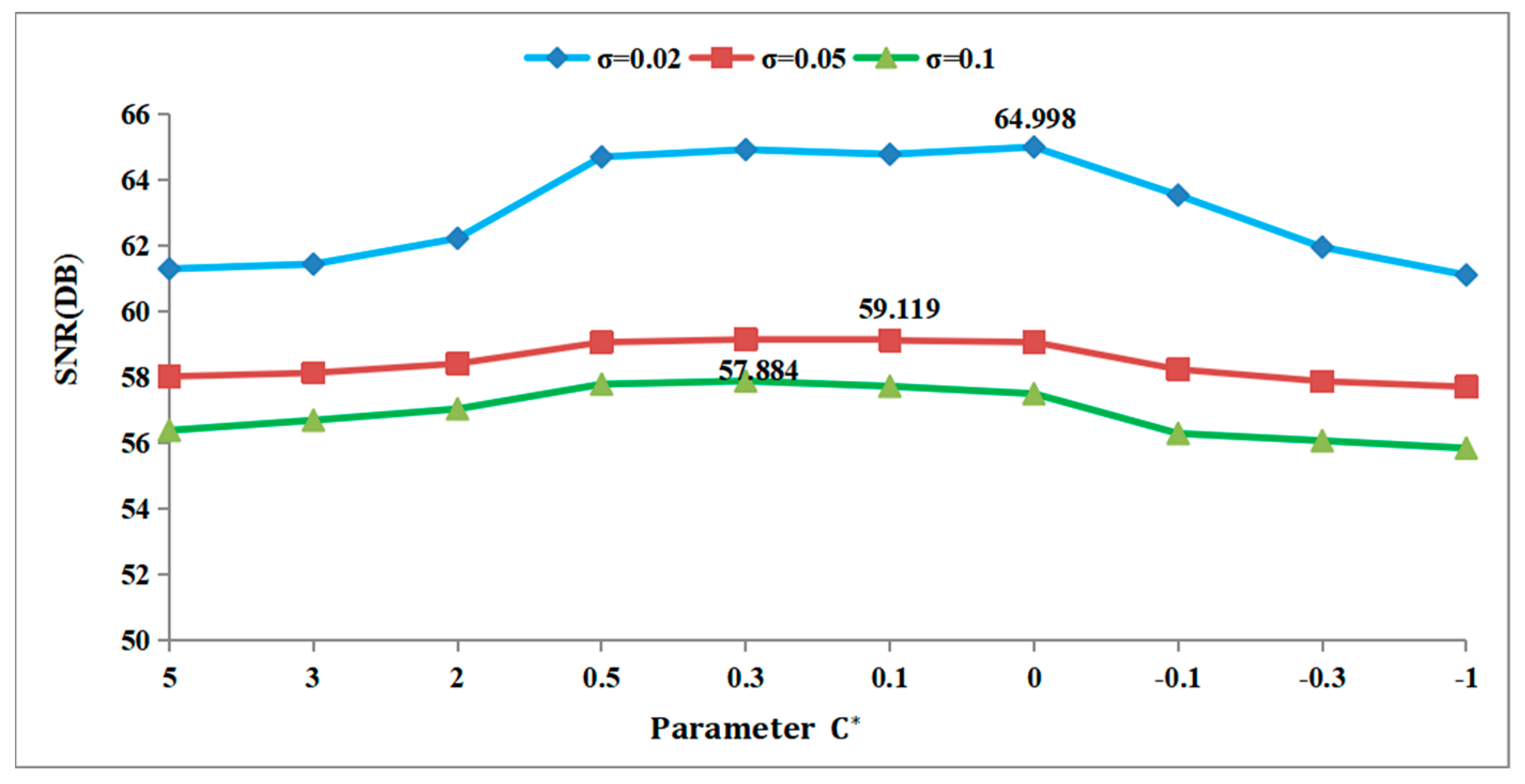

In this study, we analyzed the effect of parameter values on the MSE and SNR under Gaussian white noise with standard deviations σ = 0.02, σ = 0.05, and σ = 0.1. To illustrate this, we chose the 3D fragment numbered G3-I-b-70 as our experimental data source.

As illustrated in Figure 5, the blue line represents the MSE value for noise with a σ of 0.02. The red line represents the MSE value for noise with a σ of 0.05, while the green line represents the MSE value for noise with a σ of 0.1. When the parameter value is 0, the minimum MSE on the blue line is 0.442, indicating the optimal denoising effect of the algorithm at this point. Similarly, when the parameter value is 0.1, the minimum MSE on the red line is 0.795, signifying the best denoising effect. Lastly, with a parameter value of 0.3, the minimum MSE on the green line is 0.899, denoting the optimal denoising effect of the algorithm at this point.

Figure 5.

Effect of the parameter value on MSE for G3-I-b-70.

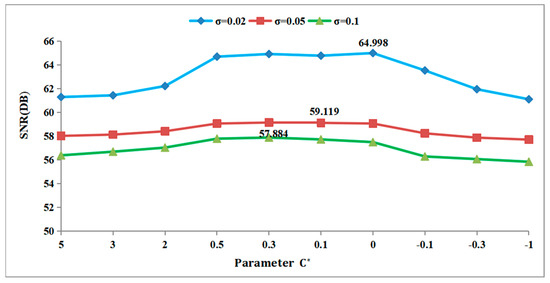

As shown in Figure 6, as the noise level and the parameter value change, the denoising effect of the algorithm indicated by the SNR remains consistent with that indicated by the MSE. When the parameter value is 0.3, the maximum SNR on the green line is 57.884, indicating the best denoising effect. Similarly, when the parameter value is 0.1, the maximum SNR on the red line is 59.119, indicating the optimal denoising effect of the algorithm at this point. Finally, with a parameter value of 0, the maximum SNR on the blue line is 64.998, indicating the optimal denoising effect of the algorithm at this point.

Figure 6.

Effect of the parameter value on SNR for G3-I-b-70.

5.3.2. Ablation Experiment

In this study, we present the experimental results for two variants of the proposed method: one using only geometry and the other using both geometry and color. We identified four main parameters that gave the best results for the proposed approach, as shown in Table 1.

Table 1.

Parameter setting.

The algorithm was evaluated based on four quantitative indicators: SNR, MSE, iterations, and running time. For the sake of clarity, the results of the comparison between the proposed algorithm using only geometry and using combined geometry and color are presented in Table 2. We chose the 3D fragment numbered 4#yt as our experimental data source.

Table 2.

Comparison of the proposed approach using only geometry and combined geometry and color on 4#yt.

Table 2 shows that the SNR and MSE of the denoising algorithm with the combined geometry and color serving as graph signals outperform the geometry-only approach. Furthermore, the inclusion of color information does not result in a significant increase in iterations or running time.

As shown in Table 3, the 3D point cloud is simplified by reducing the number of points from 58,380 to 30,000. When σ = 0.02, the number of iterations of the denoising algorithm is reduced by 3, and the running time of the algorithm is reduced by 129 s. Similarly, when σ = 0.05, the number of iterations of the denoising algorithm is reduced by 9, and the running time is reduced by 731 s. These results show that when σ is less than 0.1, the SNR and MSE values are almost unchanged. Therefore, in low-noise scenarios, it is advisable to first simplify the high-precision 3D cultural relic model obtained by scanning and then proceed with denoising.

Table 3.

Comparison of experimental results before and after point cloud simplification on 4#yt.

When σ = 0.1, the number of iterations of the denoising algorithm is reduced by 41, and the running time of the algorithm is reduced by 3718 s. Similarly, when σ = 0.2, the number of iterations of the denoising algorithm is reduced by 77, and the running time is reduced by 7899 s. It can be seen that the running time of the algorithm is greatly reduced, and the denoising effect is not significantly weakened when the point cloud is simplified to a reasonable size. Therefore, for some real-time application scenarios, it is necessary to simplify the point cloud before denoising.

5.3.3. Iterations

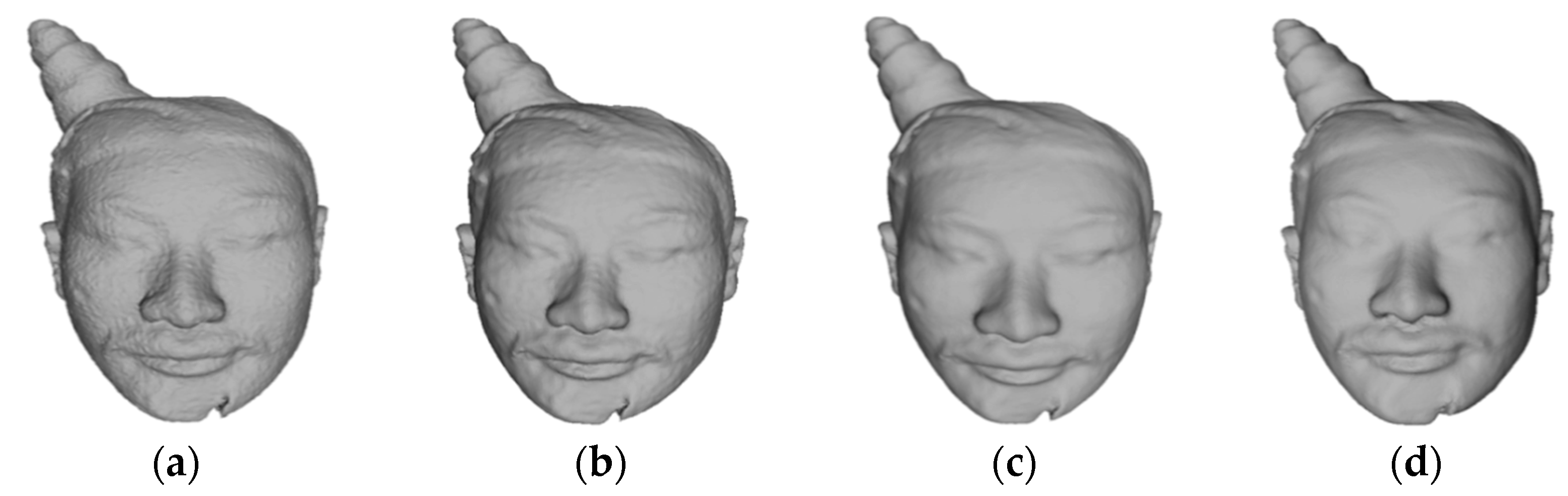

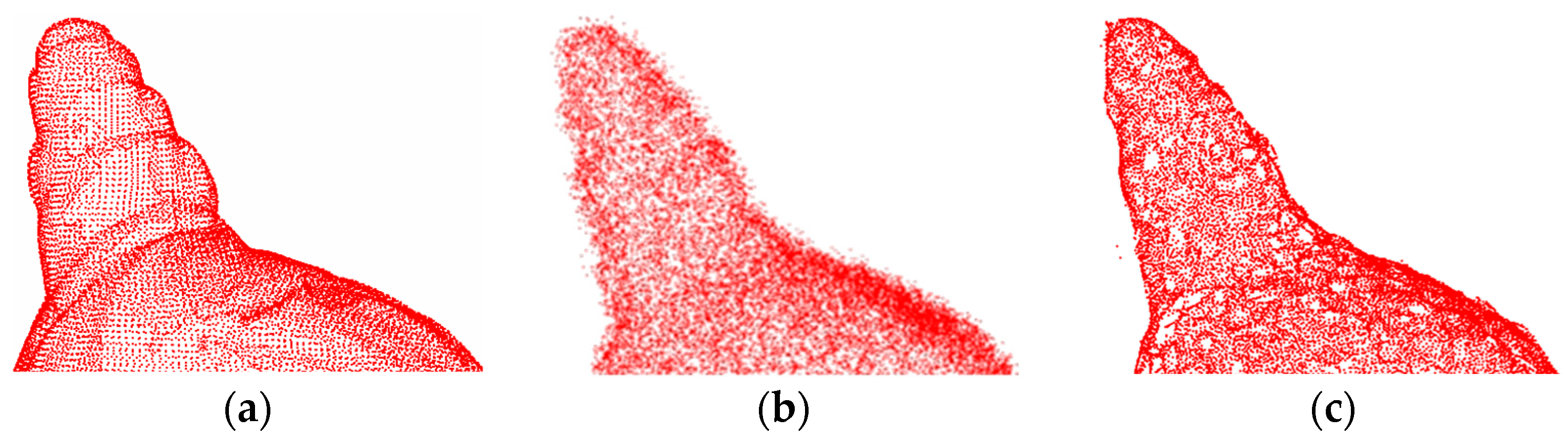

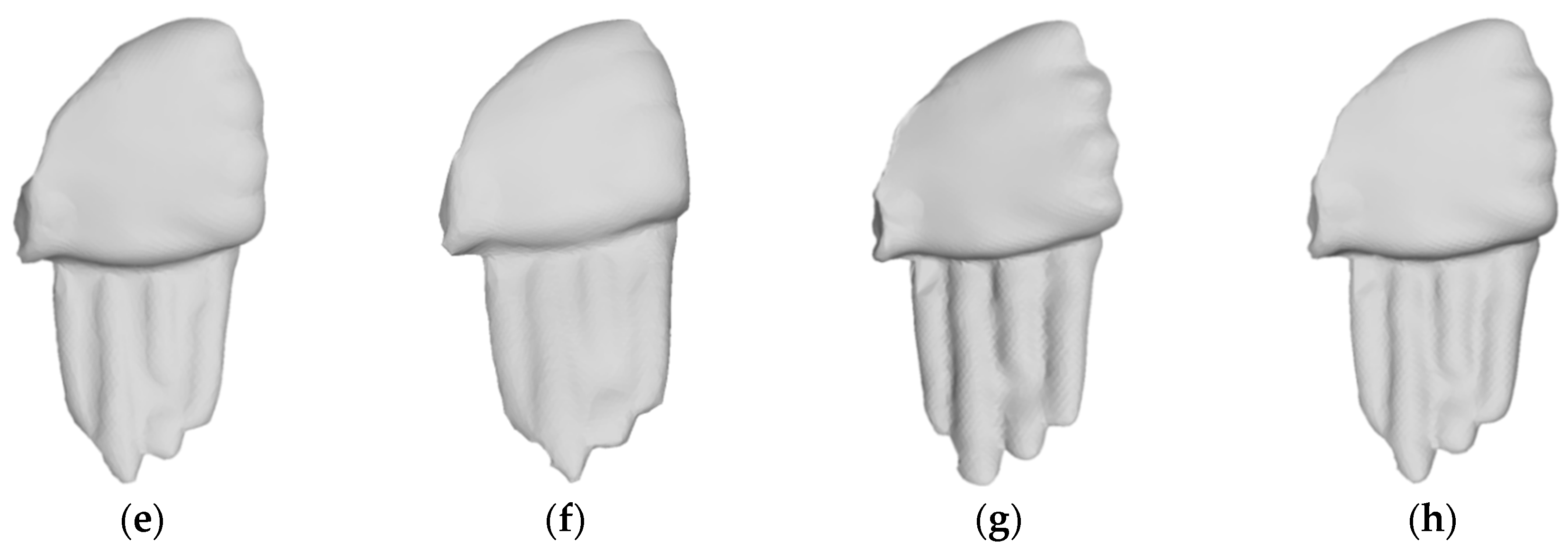

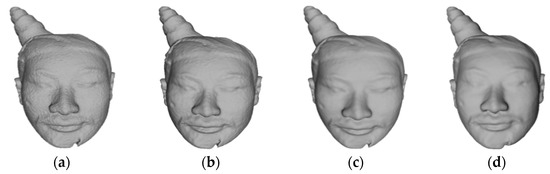

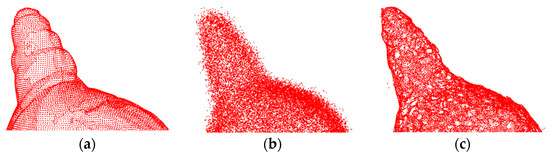

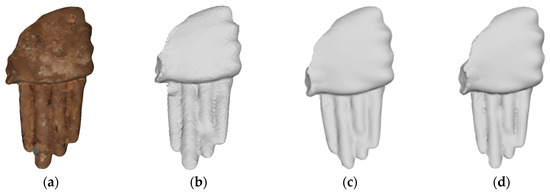

This section presents the subjective results of the proposed combined color and geometry denoising approach. Figure 7a shows the presence of numerous noise points on the surface of 4#yt, resulting in an uneven surface. Subsequently, in Figure 7b, after the third iteration, the sharp noise points on the surface of the 3D model appear smoother. Furthermore, Figure 7c shows that as the number of iterations increases to 6, the rough areas on the surface of the 3D model gradually become smoother. Finally, Figure 7d shows that when the number of iterations reaches 10, the surface noise points are effectively eliminated.

Figure 7.

The denoising effect of our algorithm in different iterations on 4#yt: (a) noisy input; (b) third iteration; (c) sixth iteration; (d) tenth iteration.

The experimental results in Table 2 show that the number of iterations of the algorithm is influenced by the noise level. For σ = 0.02, the denoising algorithm needs 4 iterations; for σ = 0.05, the denoising algorithm needs 11 iterations. It can be observed that as the noise level increases, more iterations are required. Conversely, when the noise level is low, the proposed method shows the advantages of fewer iterations and a faster convergence speed.

5.3.4. Robustness

The robustness of the proposed method was tested under different noise levels. Gaussian white noise was artificially added to the clean 3D model, and the denoising effect of the proposed method was verified. In the experiment, the standard deviation σ of the white Gaussian noise was set to 0.02, 0.05, 0.1, and 0.2.

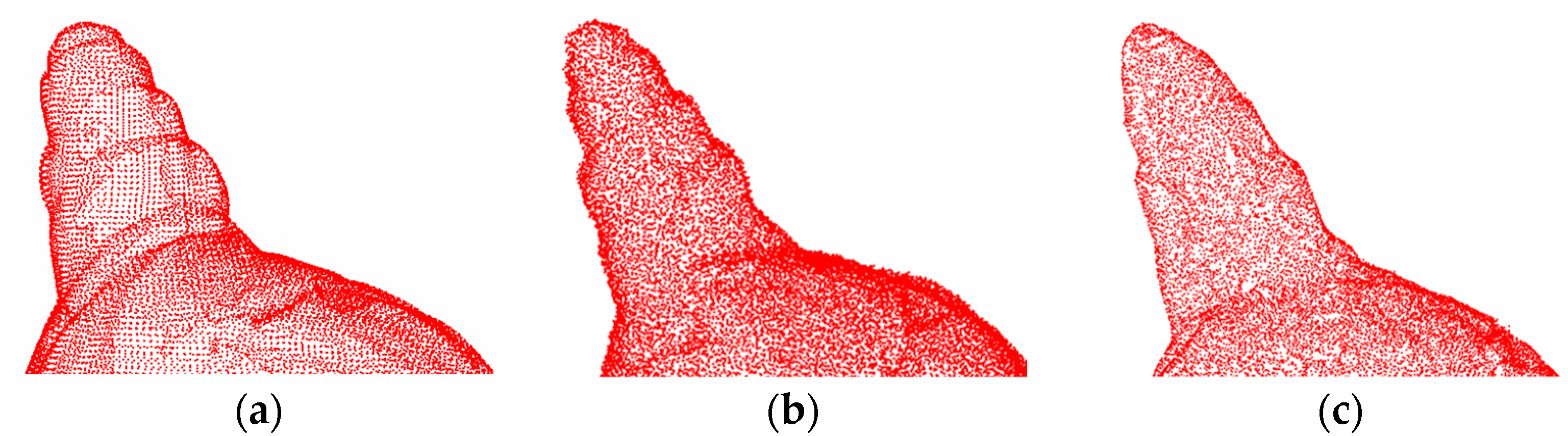

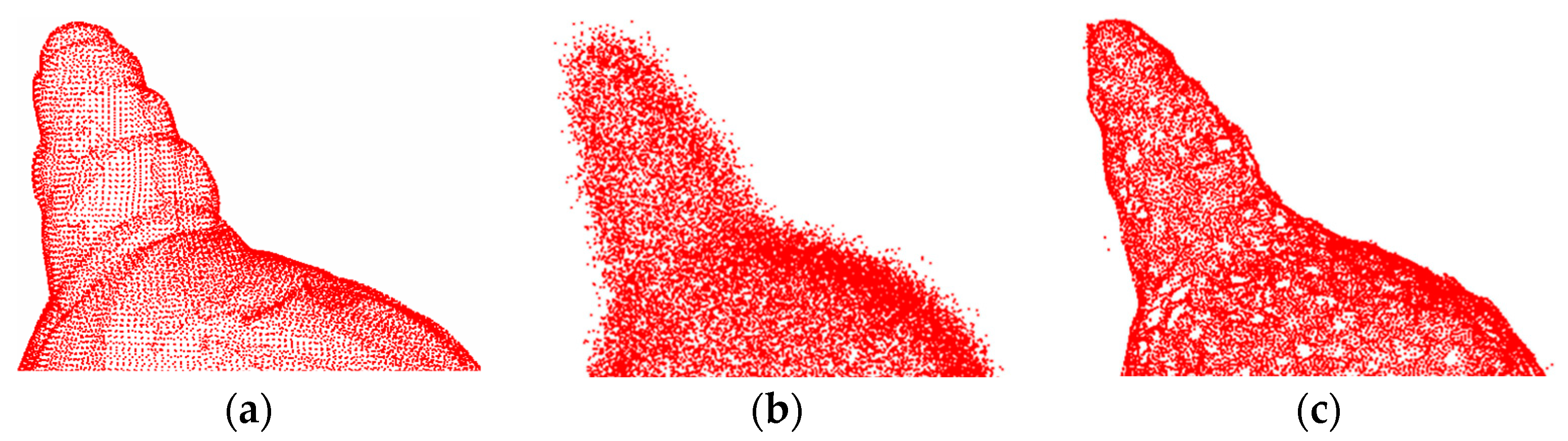

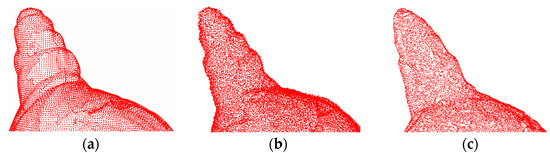

Figure 8 and Figure 9 show the denoising effects of the proposed method after adding Gaussian white noise with σ = 0.02 and σ = 0.05, respectively. The experimental results indicate that at a low noise level, the surface of the denoised point cloud is almost indistinguishable in smoothness from that of the clean point cloud.

Figure 8. The denoising effect of 4#yt with Gaussian white noise with σ = 0.02 added: (a) description of the clean point cloud; (b) description of the noisy input; (c) description of the denoising effect.

Figure 9. The denoising effect of 4#yt with Gaussian white noise with σ = 0.05 added: (a) description of the clean point cloud; (b) description of the noisy input; (c) description of the denoising effect.

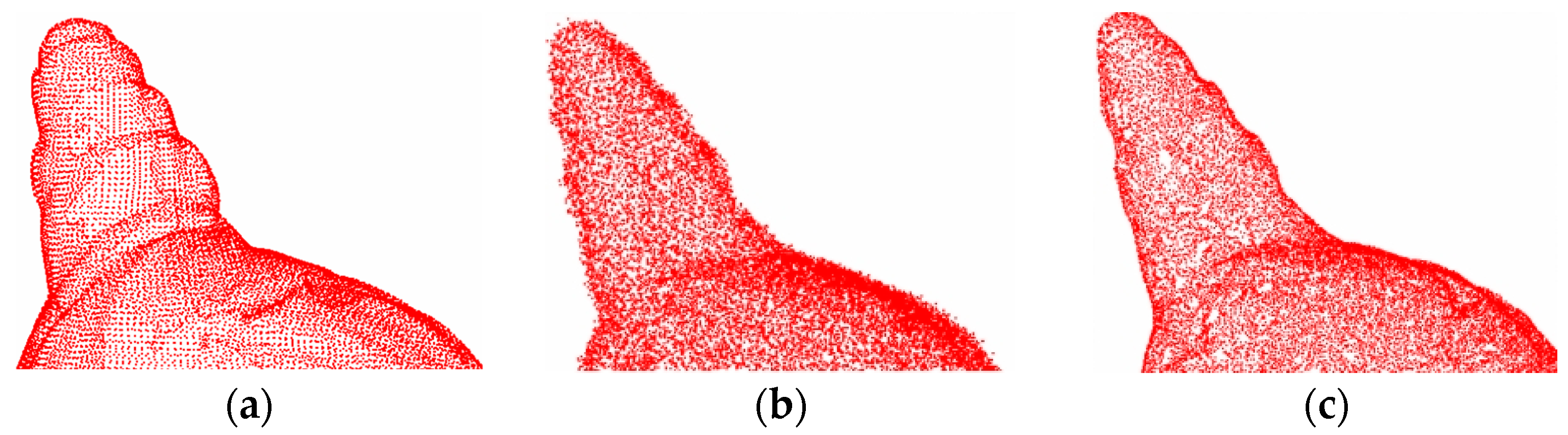

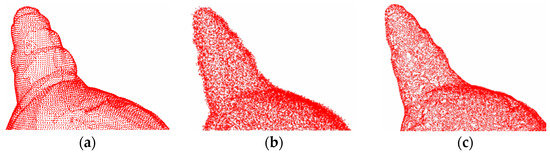

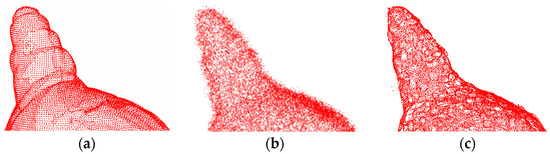

Figure 10 and Figure 11 show the denoising effects of the proposed method after adding Gaussian white noise with σ = 0.1 and σ = 0.2, respectively. Clearly, the proposed method exhibits excellent denoising performance even at high noise levels, effectively removing a significant amount of noise from the 3D point cloud surface, with only a few outliers remaining. These results demonstrate the strong robustness of the proposed method.

Figure 10. The denoising effect of 4#yt with Gaussian white noise with σ = 0.1 added: (a) description of the clean point cloud; (b) description of the noisy input; (c) description of the denoising effect.

Figure 11. The denoising effect of 4#yt with Gaussian white noise with σ = 0.2 added: (a) description of the clean point cloud; (b) description of the noisy input; (c) description of the denoising effect.

5.4. Comparison with Competing Methods

This section compares the proposed method with competing methods, both subjectively and objectively, using a dataset of cultural relics obtained with a 3D scanner and three public 3D point clouds. To validate the superiority of the proposed method, we compared it in our experiments with MRPCA [16], LR [11], and the methods proposed in [31], [19], and [24].

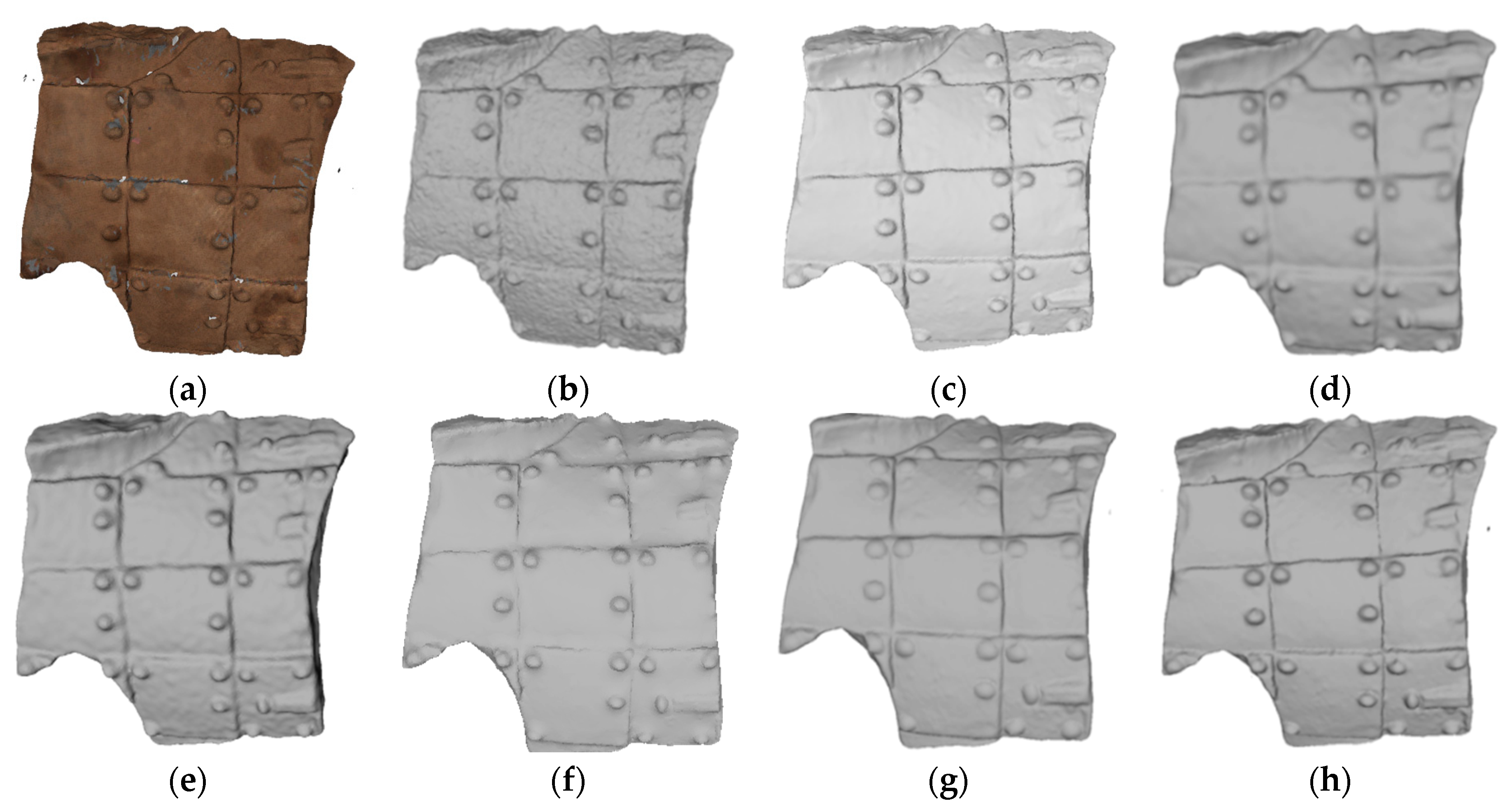

5.4.1. Subjective Assessment

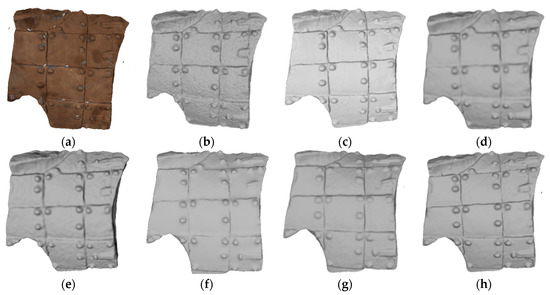

Figure 12 shows the denoising results using different methods on the 3D fragment numbered G10-11-43(47)4. It can be seen from Figure 12f,g that both LR [11] and the method described in [19] effectively remove surface noise from the armor of the terracotta warriors. However, these methods also result in the smoothing of sharp features such as the rivets on the armor. Figure 12d,e show that the methods mentioned in [16,31] manage to better preserve the fine features of the rivets, but the surface of the armor still remains rough and uneven, and the noise is not completely removed.

Figure 12. Denoising effect of several methods on G10-11-43(47)4: (a) description of the ground truth; (b) description of the noisy input; (c) description of our method; (d) description of MRPCA method; (e) description of the method in [31]; (f) description of LR method; (g) description of the method in [19]; (h) description of the method in [24].

The method proposed in [24] successfully eliminates surface noise while preserving the surface decoration of cultural relics, as shown in Figure 12h. In particular, our denoising method ensures the clear visibility of the rivets on the armor and achieves a satisfactory smoothing effect on the model surface, as shown in Figure 12c. The resulting 3D model, after noise removal, closely resembles the real cultural relic.

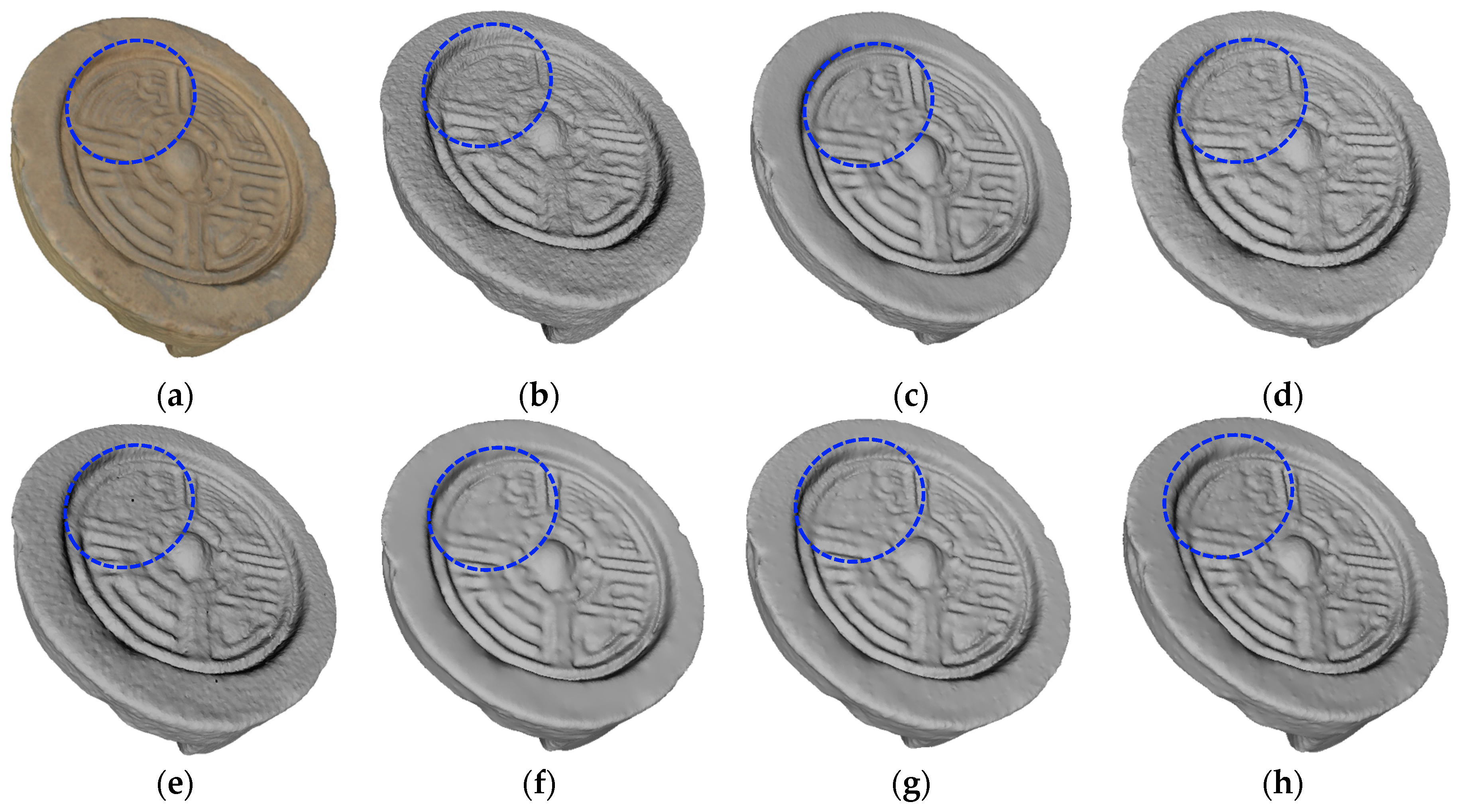

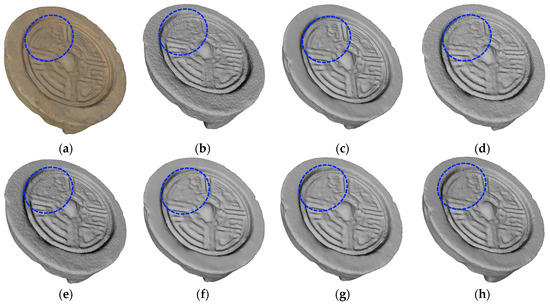

The denoising effects of different methods on Q002789 are shown in Figure 13. Although the methods in Figure 13d,e remove most of the noise on the surface of the 3D point cloud, a small amount of noise remains attached to the surface. The methods in Figure 13f–h denoise better than those in Figure 13d,e, but the pattern in the blue dotted circle is very blurred. In Figure 13c, the result of the proposed method is the best and the most similar to the real cultural relic, especially in the area enclosed by the blue dotted circle, whose fine details are completely preserved.

Figure 13. Denoising effect of several methods on Q002789: (a) description of the ground truth; (b) description of the noisy input; (c) description of our method; (d) description of MRPCA method; (e) description of the method in [31]; (f) description of LR method; (g) description of the method in [19]; (h) description of the method in [24].

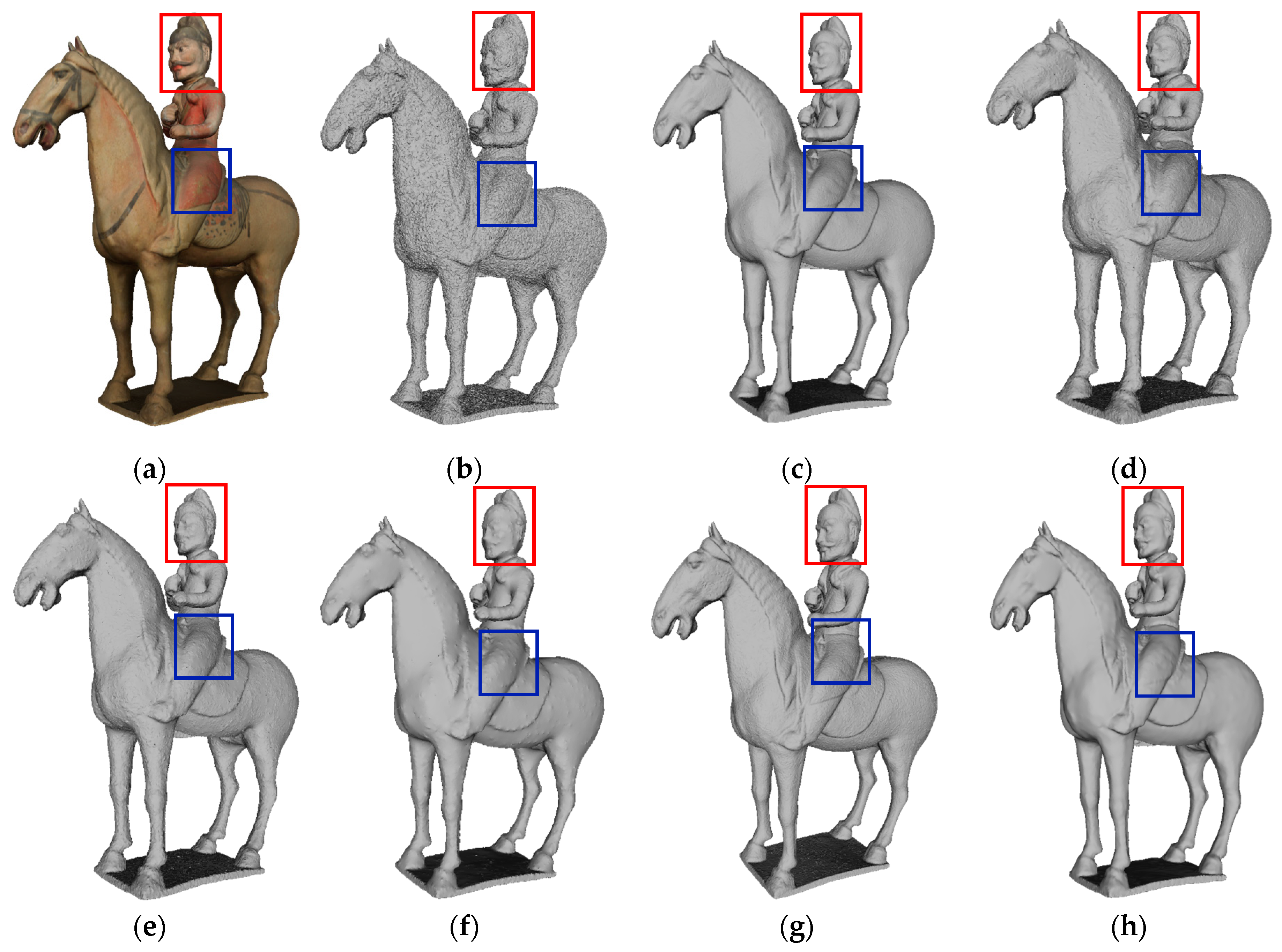

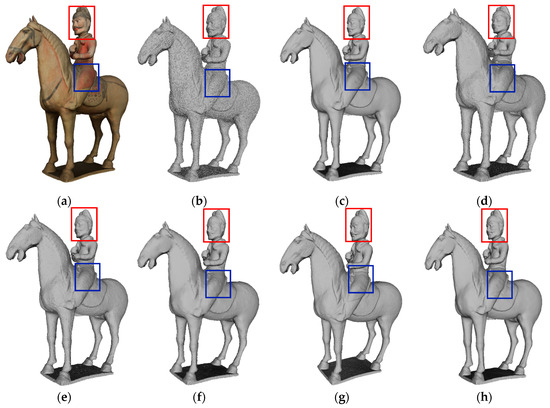

The denoising effect of different methods on H73 is shown in Figure 14. When the denoising methods in Figure 14d,e are used, the surface of the Hu terracotta sculpture remains uneven; in particular, the denoising of the face is insufficient, resulting in blurred facial contours and features. The smoothing effect of the method in Figure 14f is better than that in Figure 14d,e. The methods in Figure 14c,g,h perform better overall than the methods in Figure 14d–f: they render the facial contours and features of the Hu terracotta sculpture more clearly, and details such as the eyes and beard are well preserved. Of these three methods, the one in Figure 14g performs worst because a small amount of noise remains on the surface. Both our method (Figure 14c) and the method in Figure 14h achieve strong denoising results. Notably, the area enclosed by the blue box is the hem of the dress; several of the competing methods produce unsatisfactory denoising results in this area, whereas our method preserves its fine details.

Figure 14. Denoising effect of several methods on H73: (a) description of the ground truth; (b) description of the noisy input; (c) description of our method; (d) description of MRPCA method; (e) description of the method in [31]; (f) description of LR method; (g) description of the method in [19]; (h) description of the method in [24].

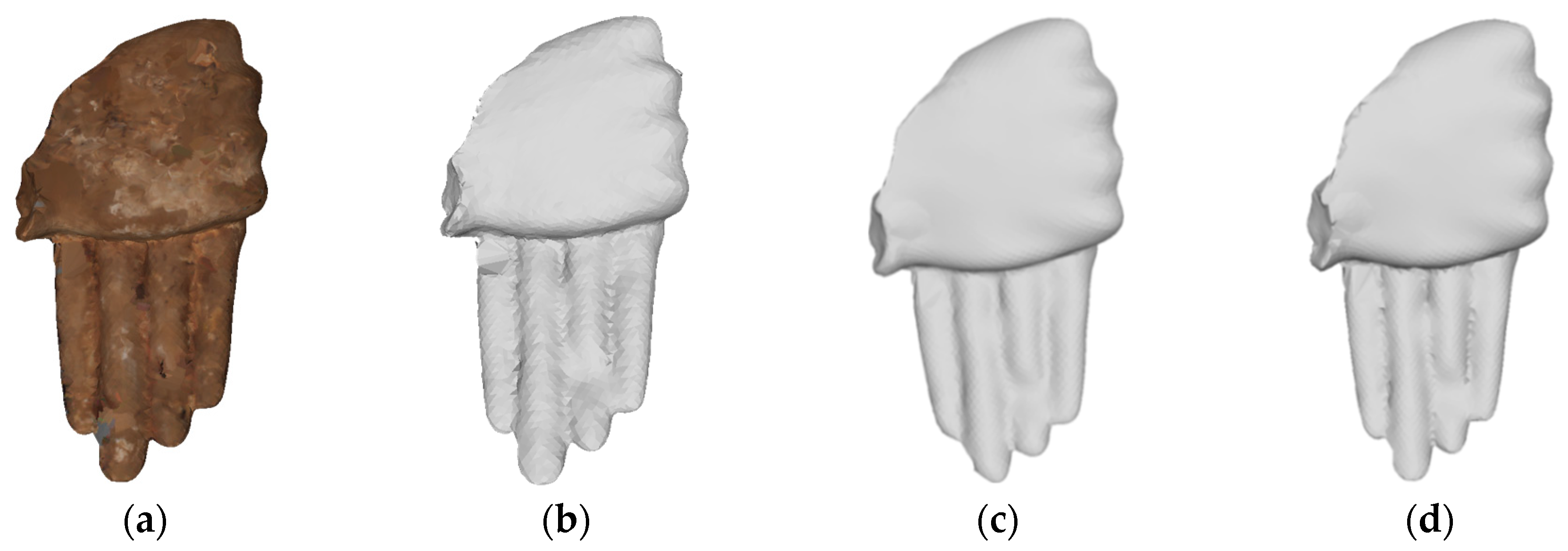

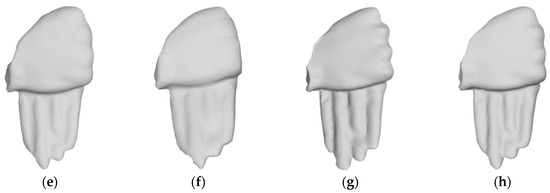

The denoising results of different methods on the 3D fragment numbered G3-I-C-94 are shown in Figure 15. From Figure 15e,f, it can be seen that the finger part is too smooth after applying the denoising techniques proposed in [11,31]. Figure 15h shows that the method in [24] successfully preserves the intricate features of the palm and finger joint after noise removal. In Figure 15d,g, MRPCA [16] and the method in [19] effectively remove most of the noise, albeit with a slightly coarse denoising effect. Figure 15c shows that our proposed method is able to remove the noise substantially while still preserving the fine features of the finger cracks.

Figure 15. Denoising effect of several methods on G3-I-C-94: (a) description of the ground truth; (b) description of the noisy input; (c) description of our method; (d) description of MRPCA method; (e) description of the method in [31]; (f) description of LR method; (g) description of the method in [19]; (h) description of the method in [24].

5.4.2. Objective Assessment

We evaluated the proposed approach on cultural relic point clouds. To evaluate the denoising results, we introduced noise of different intensities into the clean 3D point cloud and quantitatively analyzed the results using the MSE and SNR. The experimental results in Table 4 and Table 5 show that as the noise intensity increases, the mean square error between the denoised point cloud and the clean point cloud also increases. Whether measured by the MSE or the SNR, our proposed method outperforms the competing denoising methods in terms of denoising effectiveness. Furthermore, even in the presence of high-level noise, our method maintains a small deviation in both metrics, indicating its strong robustness.

Table 4. MSE metric comparison of six methods using cultural relic data.

Table 5. SNR metric comparison of six methods using cultural relic data.
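To make the two metrics concrete, the sketch below computes an MSE and an SNR between a denoised point cloud and its clean counterpart. It rests on assumptions that may differ from the exact definitions used for Table 4 and Table 5: points are matched by index (which holds when noise is added synthetically to a clean cloud), the MSE is the mean squared Euclidean distance between matched points, and the SNR is the ratio of the mean squared norm of the clean points to the MSE, expressed in decibels. The function names `mse` and `snr_db` are hypothetical.

```python
import math


def mse(denoised, clean):
    """Mean squared Euclidean distance between index-matched points."""
    if len(denoised) != len(clean) or not clean:
        raise ValueError("point clouds must be non-empty and of equal size")
    total = sum(
        sum((a - b) ** 2 for a, b in zip(p, q))
        for p, q in zip(denoised, clean)
    )
    return total / len(clean)


def snr_db(denoised, clean):
    """Signal-to-noise ratio in dB: clean signal power over residual error power."""
    err = mse(denoised, clean)
    if err == 0.0:
        return math.inf  # perfect reconstruction
    power = sum(sum(c * c for c in q) for q in clean) / len(clean)
    return 10.0 * math.log10(power / err)


clean = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]
denoised = [(1.1, 0.0, 0.0), (0.0, 2.0, 0.1)]
print(round(mse(denoised, clean), 6))     # 0.01
print(round(snr_db(denoised, clean), 2))  # 23.98
```

A lower MSE and a higher SNR both indicate that the denoised cloud lies closer to the clean one, which is how the two tables should be read.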

6. Conclusions

The acquisition of cultural relic point clouds can be achieved directly using 3D scanning equipment. However, this process is often imperfect, resulting in noise corruption in the point clouds. Removing noise from the surface of the cultural relic point cloud while preserving sharp details is a challenging task. To address this problem, we proposed an approach that combines color and geometric features to denoise the cultural relic point cloud. Our approach is based on graph signal processing, in which we formulated the denoising process as a minimization of graph Laplacian regularization. Using color and geometric characteristics as signals, we treated the removal of surface noise as an optimization problem with a graph signal smoothness prior. To evaluate the effectiveness of our denoising approach, we applied it to 3D cultural relic point clouds. It is important to highlight that the proposed approach is versatile and can be used in different applications where data are limited. The experimental results show that our approach outperforms five competing methods, effectively removing noise from the surface of cultural relic point clouds while preserving important details such as texture and ornamentation to a great extent.

Author Contributions

Conceptualization, H.G.; methodology, H.G.; software, H.W.; validation, H.G.; writing—original draft preparation, H.G.; writing—review and editing, H.G.; visualization, S.Z.; supervision, H.G.; project administration, H.G.; funding acquisition, H.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Ningxia Province, grant number 2022AAC03005, and the Key Research and Development Projects program of Ningxia Province, grant number 2023BDE03006.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments and suggestions, which have improved the overall quality of this manuscript. The authors would like to thank Geng Guohua for providing the experimental data.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Liu, Z.; Song, S.; Wang, B.; Gong, W.; Ran, Y.; Hou, X.; Chen, Z.; Li, F. Multispectral LiDAR point cloud highlight removal based on color information. Opt. Express 2022, 30, 28614–28631.

2. Li, Z.Q.; Mao, X.Y.; Wang, J.; Guo, Y. Feature-preserving triangular mesh surface denoising: A survey and prospective. J. Comput.-Aided Des. Comput. Graph. 2020, 32, 1–15.

3. Zhang, W.; Deng, B.; Zhang, J.; Bouaziz, S.; Liu, L. Guided mesh normal filtering. Comput. Graph. Forum 2015, 34, 23–34.

4. Li, N.; Yue, S.; Li, Z.; Wang, S.; Wang, H. Adaptive and feature-preserving mesh denoising schemes based on developmental guidance. IEEE Access 2020, 8, 172412–172427.

5. Wang, P.-S.; Fu, X.-M.; Liu, Y.; Tong, X.; Liu, S.-L.; Guo, B. Rolling guidance normal filter for geometric processing. ACM Trans. Graph. 2015, 34, 1–9.

6. Liu, B.; Cao, J.; Wang, W.; Ma, N.; Li, B.; Liu, L.; Liu, X. Propagated mesh normal filtering. Comput. Graph. 2018, 74, 119–125.

7. Huang, H.; Wu, S.; Gong, M.; Cohen-Or, D.; Ascher, U.; Zhang, H. Edge-aware point set resampling. ACM Trans. Graph. 2013, 32, 1–12.

8. Zheng, Y.; Li, G.; Wu, S.; Liu, Y.; Gao, Y. Guided point cloud denoising via sharp feature skeletons. Vis. Comput. 2017, 33, 857–867.

9. Sun, Y.; Schaefer, S.; Wang, W. Denoising point sets via L0 minimization. Comput. Aided Geom. Des. 2015, 35, 2–15.

10. Huang, H.; Li, D.; Zhang, H.; Ascher, U.; Cohen-Or, D. Consolidation of unorganized point clouds for surface reconstruction. ACM Trans. Graph. 2009, 28, 1–7.

11. Sarkar, K.; Bernard, F.; Varanasi, K.; Theobalt, C.; Stricker, D. Structured low-rank matrix factorization for point-cloud denoising. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 444–453.

12. Li, X.; Zhu, L.; Fu, C.; Heng, P. Non-local low-rank normal filtering for mesh denoising. Comput. Graph. Forum 2018, 37, 155–166.

13. Osher, S.; Shi, Z.; Zhu, W. Low dimensional manifold model for image processing. SIAM J. Imaging Sci. 2017, 10, 1669–1690.

14. Avron, H.; Sharf, A.; Greif, C.; Cohen-Or, D. L1-sparse reconstruction of sharp point set surfaces. ACM Trans. Graph. 2010, 29, 1–12.

15. Zhao, Y.; Li, L.; Shan, X.; Wang, S.; Qin, S.; Wang, H. A L0 denoising algorithm for 3D shapes. J. Comput.-Aided Des. Comput. Graph. 2018, 30, 772–777.

16. Mattei, E.; Castrodad, A. Point cloud denoising via moving RPCA. Comput. Graph. Forum 2017, 36, 123–137.

17. Han, X.-F.; Jin, J.S.; Wang, M.-J.; Jiang, W.; Gao, L.; Xiao, L. A review of algorithms for filtering the 3D point cloud. Signal Process. Image Commun. 2017, 57, 103–112.

18. Gao, X.; Hu, W.; Tang, J.; Liu, J.; Guo, Z. Optimized skeleton-based action recognition via sparsified graph regression. In Proceedings of the 27th ACM International Conference on Multimedia, New York, NY, USA, 21–25 October 2019; pp. 601–610.

19. Dinesh, C.; Cheung, G.; Bajic, I.V. Point cloud denoising via feature graph laplacian regularization. IEEE Trans. Image Process. 2020, 29, 4143–4158.

20. Shang, X.; Ye, R.; Feng, H.; Jiang, X. Robust Feature Graph for Point Cloud Denoising. In Proceedings of the 7th International Conference on Communication, Image and Signal Processing (CCISP), Chengdu, China, 18–20 November 2022.

21. Egilmez, H.E.; Pavez, E.; Ortega, A. Graph learning from data under laplacian and structural constraints. IEEE J. Sel. Top. Signal Process. 2017, 11, 825–841.

22. Jiang, B.; Zhang, Z.Y.; Lin, D.D.; Tang, J.; Luo, B. Semi-supervised learning with graph learning-convolutional networks. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

23. Hu, W.; Gao, X.; Cheung, G.; Guo, Z. Feature graph learning for 3D point cloud denoising. IEEE Trans. Signal Process. 2020, 68, 2841–2856.

24. Hu, W.; Hu, Q.; Wang, Z.; Gao, X. Dynamic point cloud denoising via manifold-to-manifold distance. IEEE Trans. Image Process. 2021, 30, 6168–6183.

25. Hu, W.; Pang, J.; Liu, X.; Tian, D.; Lin, C.W.; Vetro, A. Graph signal processing for geometric data and beyond: Theory and applications. IEEE Trans. Multimed. 2022, 24, 3961–3977.

26. Zeng, J.; Cheung, G.; Ng, M.; Pang, J.; Yang, C. 3D point cloud denoising using graph laplacian regularization of a low dimensional manifold model. IEEE Trans. Image Process. 2020, 29, 3474–3489.

27. Wang, J.; Huang, J.; Wang, F.L.; Wei, M.; Xie, H.; Qin, J. Data-driven geometry-recovering mesh denoising. Comput.-Aided Des. 2019, 114, 133–142.

28. Zhao, W.B.; Liu, X.M.; Zhao, Y.S.; Fan, X.P.; Zhao, D.B. NormalNet: Learning based guided normal filtering for mesh denoising. arXiv 2019, arXiv:1903.04015v2. Available online: https://arxiv.org/abs/1903.04015v2 (accessed on 4 November 2019).

29. Li, Z.; Pan, W.; Wang, S.; Tang, X.; Hu, H. A point cloud denoising network based on manifold in an unknown noisy environment. Infrared Phys. Technol. 2023, 132, 104735.

30. Huang, A.; Xie, Q.; Wang, Z.; Lu, D.; Wei, M.; Wang, J. MODNet: Multi-offset point cloud denoising network customized for multi-scale patches. Comput. Graph. Forum 2022, 41, 109–119.

31. Cattai, T.; Delfino, A.; Scarano, G.; Colonnese, S. VIPDA: A visually driven point cloud denoising algorithm based on anisotropic point cloud filtering. Front. Signal Process. 2022, 2, 842570.

32. Hu, X.; Wei, X.; Sun, J. A noising-denoising framework for point cloud upsampling via normalizing flows. Pattern Recognit. 2023, 140, 109569.

33. Liu, Y.; Sheng, H.K. A single-stage point cloud cleaning network for outlier removal and denoising. Pattern Recognit. 2023, 138, 109366.

34. Shuman, D.I.; Narang, S.K.; Frossard, P.; Ortega, A.; Vandergheynst, P. The emerging field of signal processing on graphs: Extending high-dimensional data analysis to networks and other irregular domains. IEEE Signal Process. Mag. 2013, 30, 83–98.

35. Li, X.; Han, J.; Yuan, Q.; Zhang, Y.; Fu, Z.; Zou, M.; Huang, Z. FEUSNet: Fourier Embedded U-Shaped Network for Image Denoising. Entropy 2023, 25, 1418.

36. Li, R.Z.; Yang, M.; Ran, Y.; Zhang, H.H.; Jing, J.F.; Li, P.F. Point cloud denoising and simplification algorithm based on method library. Laser Optoelectron. Prog. 2018, 55, 251–257.

37. Liu, Y.; Sun, Y. Laser point cloud denoising based on principal component analysis and surface fitting. Laser Technol. 2020, 44, 103–108.

38. Yang, D.; Sun, J. BM3D-Net: A convolutional neural network for transform-domain collaborative filtering. IEEE Signal Process. Lett. 2017, 25, 55–59.

39. Wei, X.; van Gorp, H.; Carabarin, L.G.; Freedman, D.; Eldar, Y.C.; van Sloun, R.J.G. Image denoising with deep unfolding and normalizing flows. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022.

40. Wei, X.; Van Gorp, H.; Gonzalez-Carabarin, L.; Freedman, D.; Eldar, Y.C.; van Sloun, R.J. Deep unfolding with normalizing flow priors for inverse problems. IEEE Trans. Signal Process. 2022, 70, 2962–2971.

41. Dutta, S.; Basarab, A.; Georgeot, B.; Kouame, D. Quantum mechanics-based signal and image representation: Application to denoising. IEEE Open J. Signal Process. 2021, 2, 190–206.

42. Dutta, S.; Basarab, A.; Georgeot, B.; Kouamé, D. A novel image denoising algorithm using concepts of quantum many-body theory. Signal Process. 2022, 201, 108690.

43. Shi, Z.; Xu, W.; Meng, H. A point cloud simplification algorithm based on weighted feature indexes for 3D scanning sensors. Sensors 2022, 22, 7491.

44. Pasqualotto, G.; Zanuttigh, P.; Cortelazzo, G.M. Combining color and shape descriptors for 3D model retrieval. Signal Process. Image Commun. 2013, 28, 608–623.

45. Musicco, A.; Galantucci, R.A.; Bruno, S.; Verdoscia, C.; Fatiguso, F. Automatic point cloud segmentation for the detection of alterations on historical buildings through an unsupervised and clustering-based machine learning approach. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 2, 129–136.

46. Vinodkumar, P.K.; Karabulut, D.; Avots, E.; Ozcinar, C.; Anbarjafari, G. A Survey on Deep Learning Based Segmentation, Detection and Classification for 3D Point Clouds. Entropy 2023, 25, 635.

47. Irfan, A.M.; Magli, E. Exploiting color for graph-based 3D point cloud denoising. J. Vis. Commun. Image Represent. 2021, 75, 103027.

48. Rosman, G.; Dubrovina, A.; Kimmel, R. Patch-collaborative spectral point-cloud denoising. Comput. Graph. Forum 2013, 32, 1–12.

49. Barkalov, K.; Shtanyuk, A.; Sysoyev, A. A Fast kNN Algorithm Using Multiple Space-Filling Curves. Entropy 2022, 24, 767.

50. Miranda-González, A.A.; Rosales-Silva, A.J.; Mújica-Vargas, D.; Escamilla-Ambrosio, P.J.; Gallegos-Fune, F.J.; Vianney-Kinani, J.M.; Velázquez-Lozada, E.; Pérez-Hernández, L.M.; Lozano-Vázquez, L.V. Denoising Vanilla Autoencoder for RGB and GS Images with Gaussian Noise. Entropy 2023, 25, 1467.

51. De Gregorio, J.; Sánchez, D.; Toral, R. Entropy Estimators for Markovian Sequences: A Comparative Analysis. Entropy 2024, 26, 79.

52. Paige, C.; Saunders, M. LSQR: An Algorithm for Sparse Linear Equations and Sparse Least Squares. ACM Trans. Math. Softw. 1982, 8, 43–71.

53. Parikh, N.; Boyd, S. Proximal Algorithms. Found. Trends Optim. 2013, 1, 123–231.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).