Generation of Conventional 18F-FDG PET Images from 18F-Florbetaben PET Images Using Generative Adversarial Network: A Preliminary Study Using ADNI Dataset

Abstract

1. Background

2. Materials and Methods

2.1. Datasets

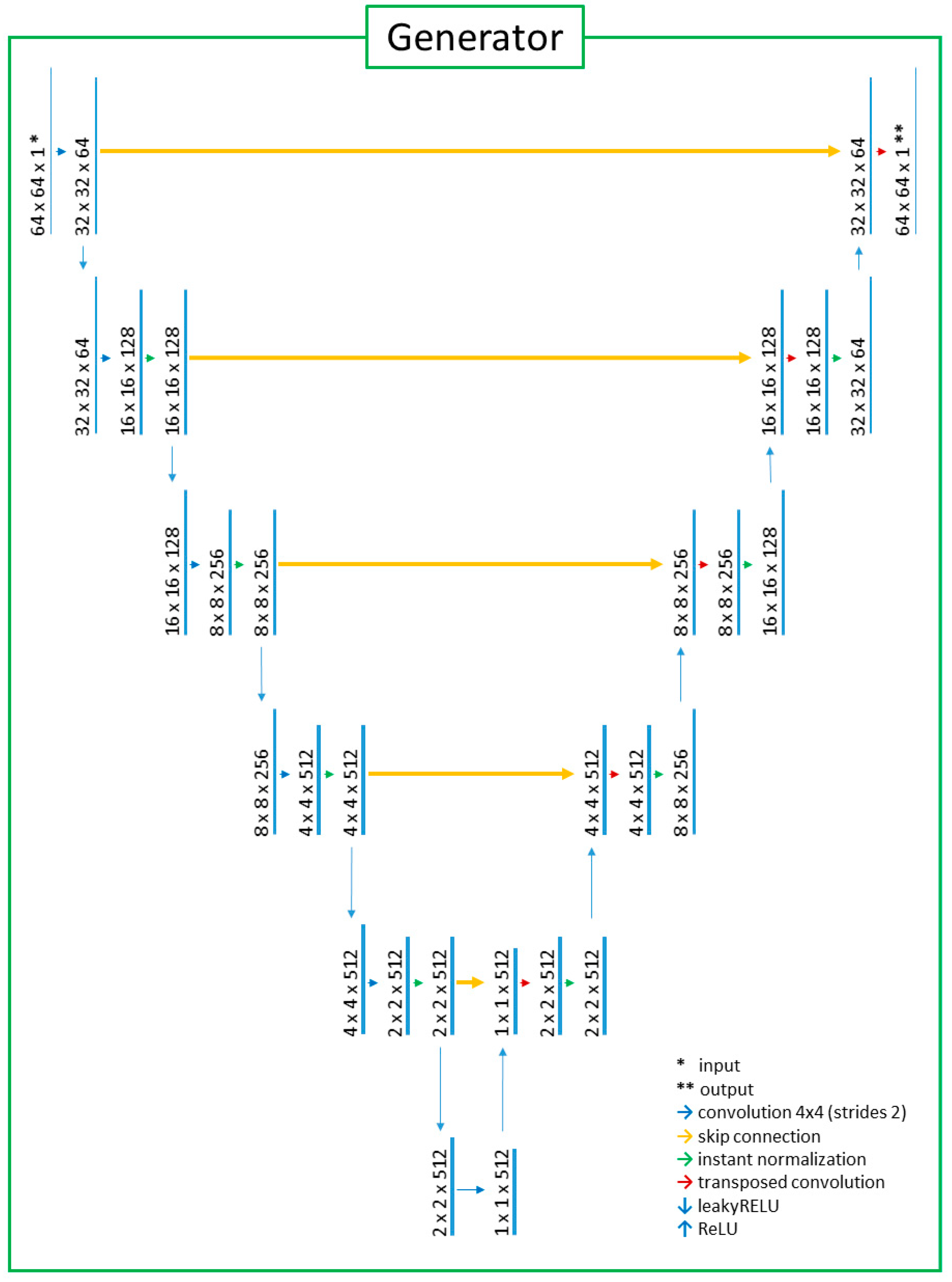

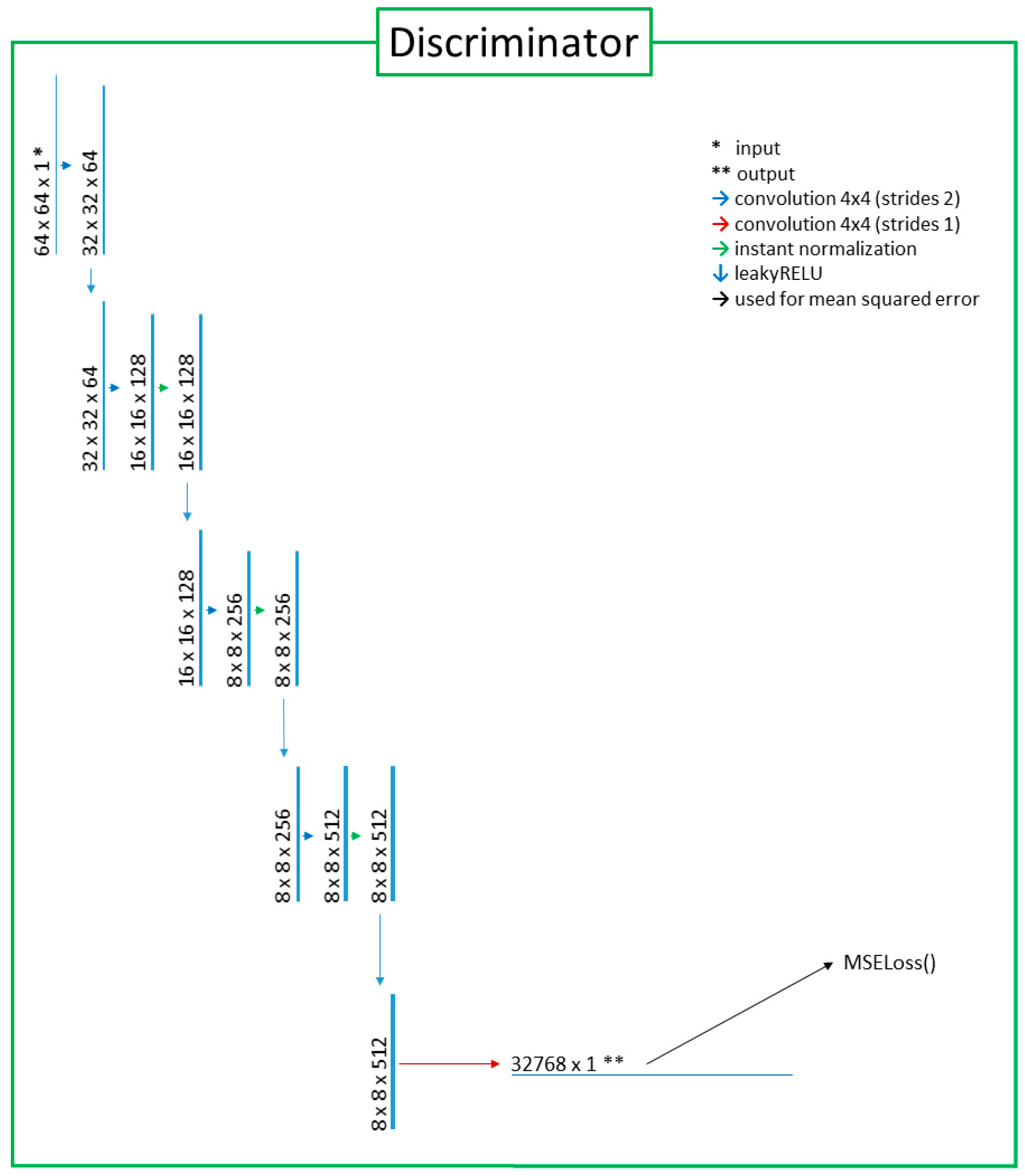

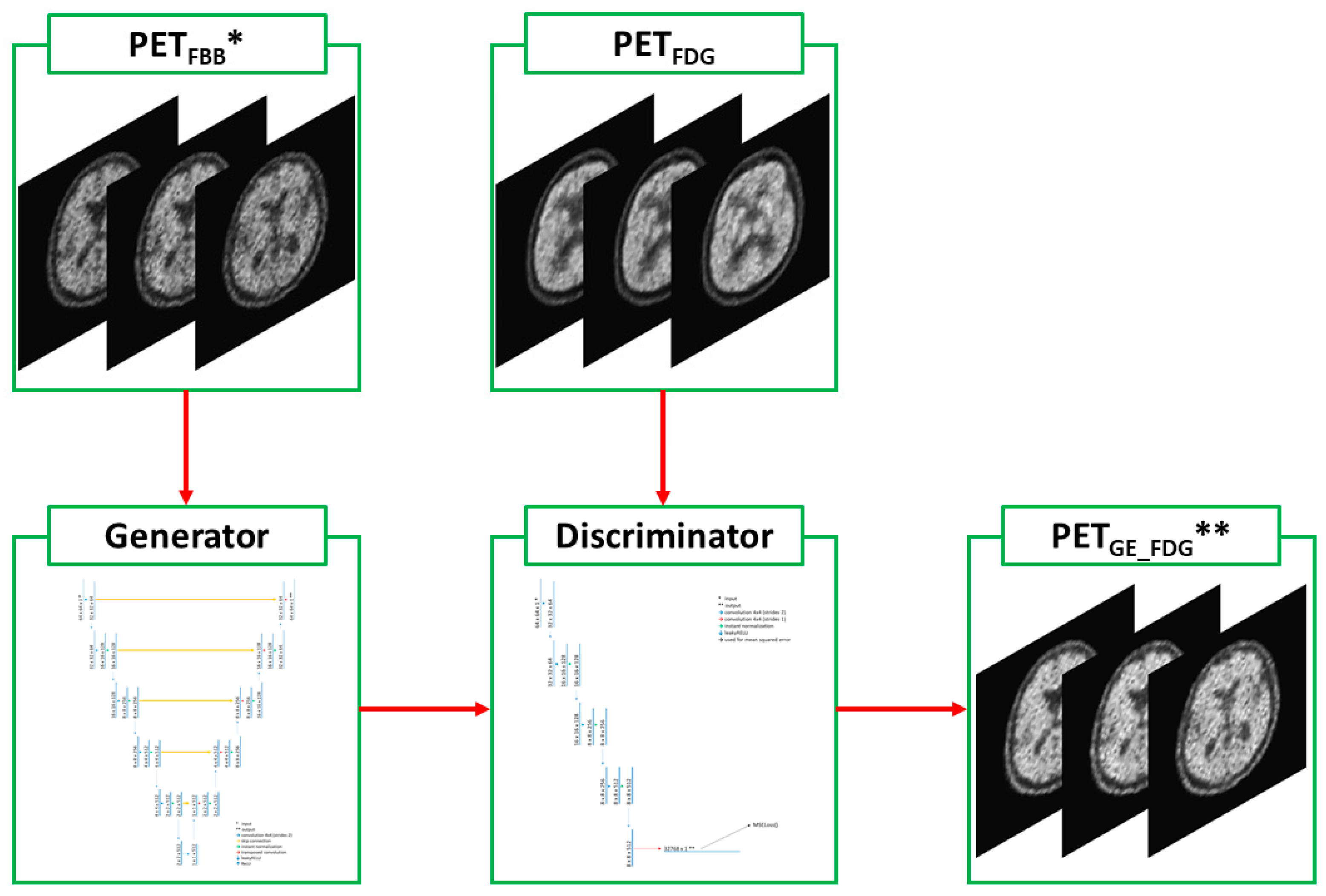

2.2. Deep-Learning Model with Image Preprocessing

2.3. Statistical Analysis

3. Results

3.1. Baseline Demographics

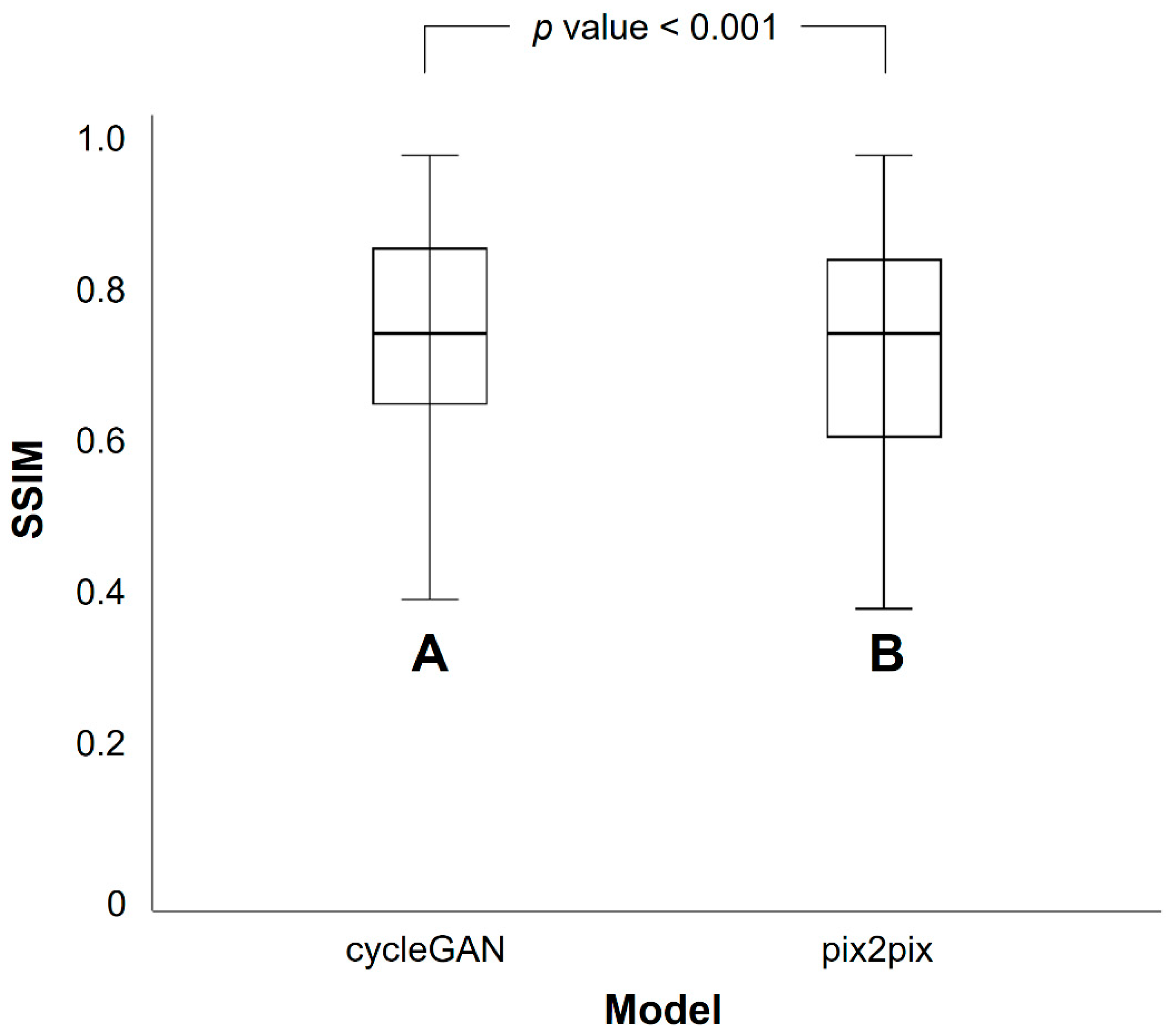

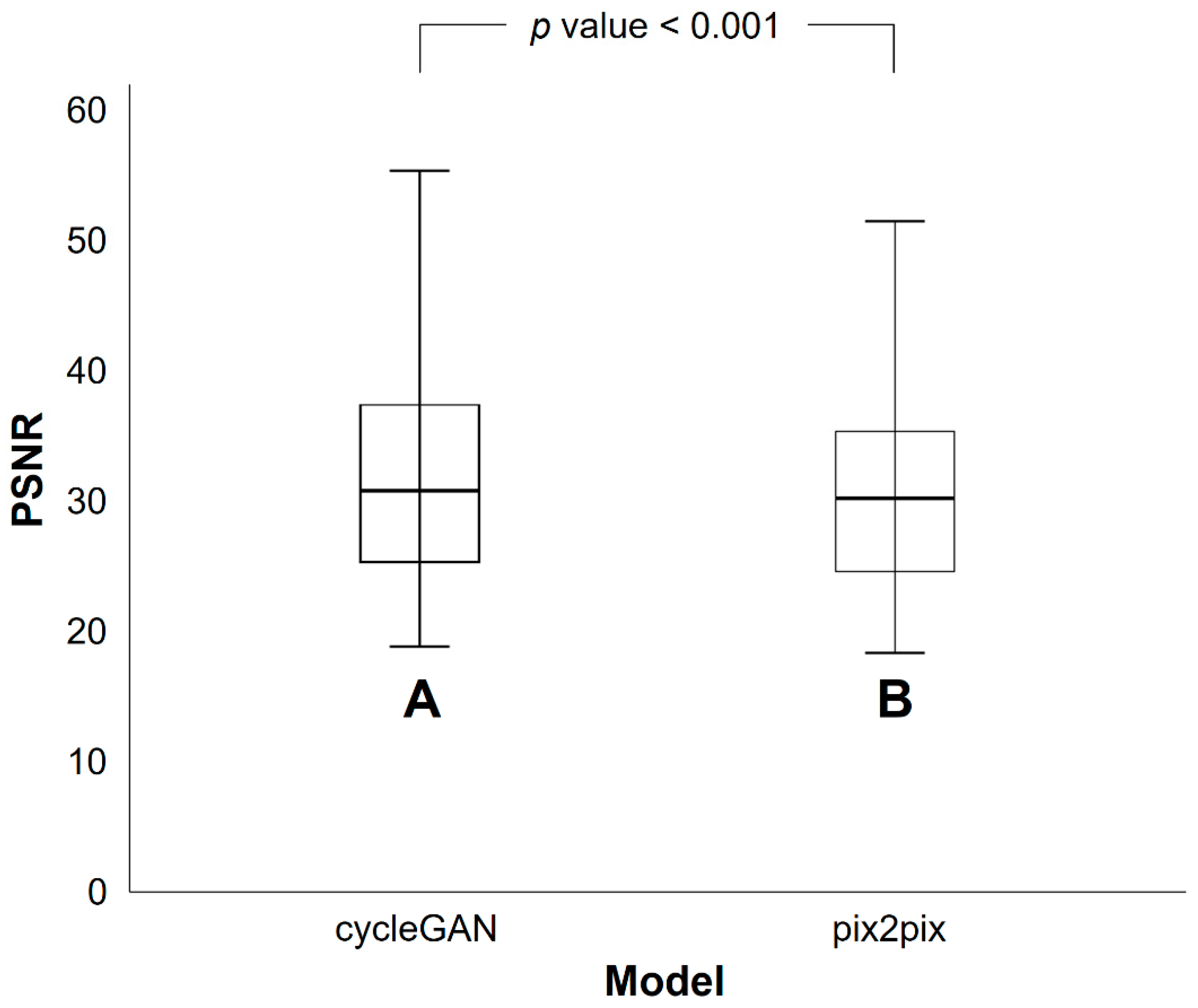

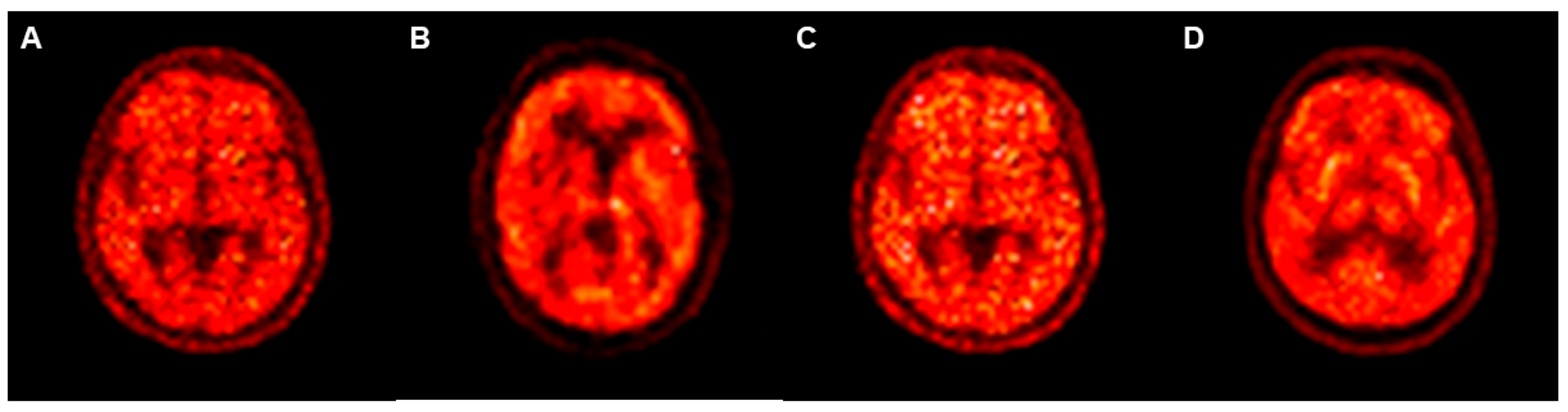

3.2. Differences in SSIM and PSNR Values between PETRE-FDG and PETGE-FDG Images

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Training Group | Validation Group | Total |
|---|---|---|---|
| Number | 82 (75%) | 28 (25%) | 110 |
| Age *, years | 72.8 ± 7.8 | 72.0 ± 9.1 | |
| Sex *, n (%) | | | |
| Male | 50 (61%) | 16 (57%) | 66 (60%) |
| Female | 32 (39%) | 12 (43%) | 44 (40%) |
| Diagnosis †, n (%) | | | |
| Normal | 1 (1%) | 1 (4%) | 2 (2%) |
| MCI | 60 (73%) | 21 (75%) | 81 (74%) |
| AD | 21 (26%) | 6 (21%) | 27 (24%) |

| SSIM | CycleGAN Model | Pix2pix Model | p Value * |
|---|---|---|---|
| Mean | 0.768 | 0.745 | <0.001 |
| Standard deviation | 0.135 | 0.143 | |

| PSNR (dB) | CycleGAN Model | Pix2pix Model | p Value * |
|---|---|---|---|
| Mean | 32.4 | 30.7 | <0.001 |
| Standard deviation | 9.5 | 8.0 | |
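
The two image-similarity metrics compared in Section 3.2, the structural similarity index (SSIM) and the peak signal-to-noise ratio (PSNR), can be illustrated with the minimal Python sketch below. It is not the authors' implementation, which this extract does not describe. It assumes each real and generated FDG image has been flattened to a sequence of intensities normalized to [0, 1] (so the data range is 1.0), and it evaluates SSIM over a single global window, whereas the standard metric averages the same expression over local windows (typically an 11 × 11 Gaussian window). The function names `psnr` and `global_ssim` and the toy intensities are hypothetical.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], generated: Sequence[float], data_range: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range**2 / MSE)."""
    if len(reference) != len(generated) or not reference:
        raise ValueError("images must be non-empty and have the same number of voxels")
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: no error, so PSNR is unbounded
    return 10.0 * math.log10(data_range ** 2 / mse)


def global_ssim(reference: Sequence[float], generated: Sequence[float],
                data_range: float = 1.0, k1: float = 0.01, k2: float = 0.03) -> float:
    """SSIM evaluated once over the whole image (a single global window).

    The standard SSIM averages this same expression over local windows;
    the global form is used here only to keep the sketch short.
    """
    if len(reference) != len(generated) or not reference:
        raise ValueError("images must be non-empty and have the same number of voxels")
    n = len(reference)
    mu_x = sum(reference) / n
    mu_y = sum(generated) / n
    var_x = sum((x - mu_x) ** 2 for x in reference) / n
    var_y = sum((y - mu_y) ** 2 for y in generated) / n
    cov_xy = sum((x - mu_x) * (y - mu_y) for x, y in zip(reference, generated)) / n
    c1 = (k1 * data_range) ** 2  # stabilizes the luminance term when means are near zero
    c2 = (k2 * data_range) ** 2  # stabilizes the contrast/structure term when variances are near zero
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


if __name__ == "__main__":
    # Hypothetical toy intensities standing in for one real and one generated FDG slice.
    real = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
    fake = [0.05, 0.2, 0.35, 0.65, 0.8, 0.95]
    print(f"PSNR = {psnr(real, fake):.2f} dB")  # MSE = 0.01 / 6, so PSNR = 10 * log10(600), about 27.78 dB
    print(f"SSIM = {global_ssim(real, fake):.3f}")
```

PSNR depends only on the mean squared voxel error, whereas SSIM also compares means, variances, and covariance; this is one reason the two metrics can rank a pair of models differently and are usually reported together.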

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Choi, H.J.; Seo, M.; Kim, A.; Park, S.H. Generation of Conventional 18F-FDG PET Images from 18F-Florbetaben PET Images Using Generative Adversarial Network: A Preliminary Study Using ADNI Dataset. Medicina 2023, 59, 1281. https://doi.org/10.3390/medicina59071281

Choi HJ, Seo M, Kim A, Park SH. Generation of Conventional 18F-FDG PET Images from 18F-Florbetaben PET Images Using Generative Adversarial Network: A Preliminary Study Using ADNI Dataset. Medicina. 2023; 59(7):1281. https://doi.org/10.3390/medicina59071281

Chicago/Turabian Style: Choi, Hyung Jin, Minjung Seo, Ahro Kim, and Seol Hoon Park. 2023. "Generation of Conventional 18F-FDG PET Images from 18F-Florbetaben PET Images Using Generative Adversarial Network: A Preliminary Study Using ADNI Dataset" Medicina 59, no. 7: 1281. https://doi.org/10.3390/medicina59071281