Detection of Abnormal Changes on the Dorsal Tongue Surface Using Deep Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Collection of Clinical Photographic Images

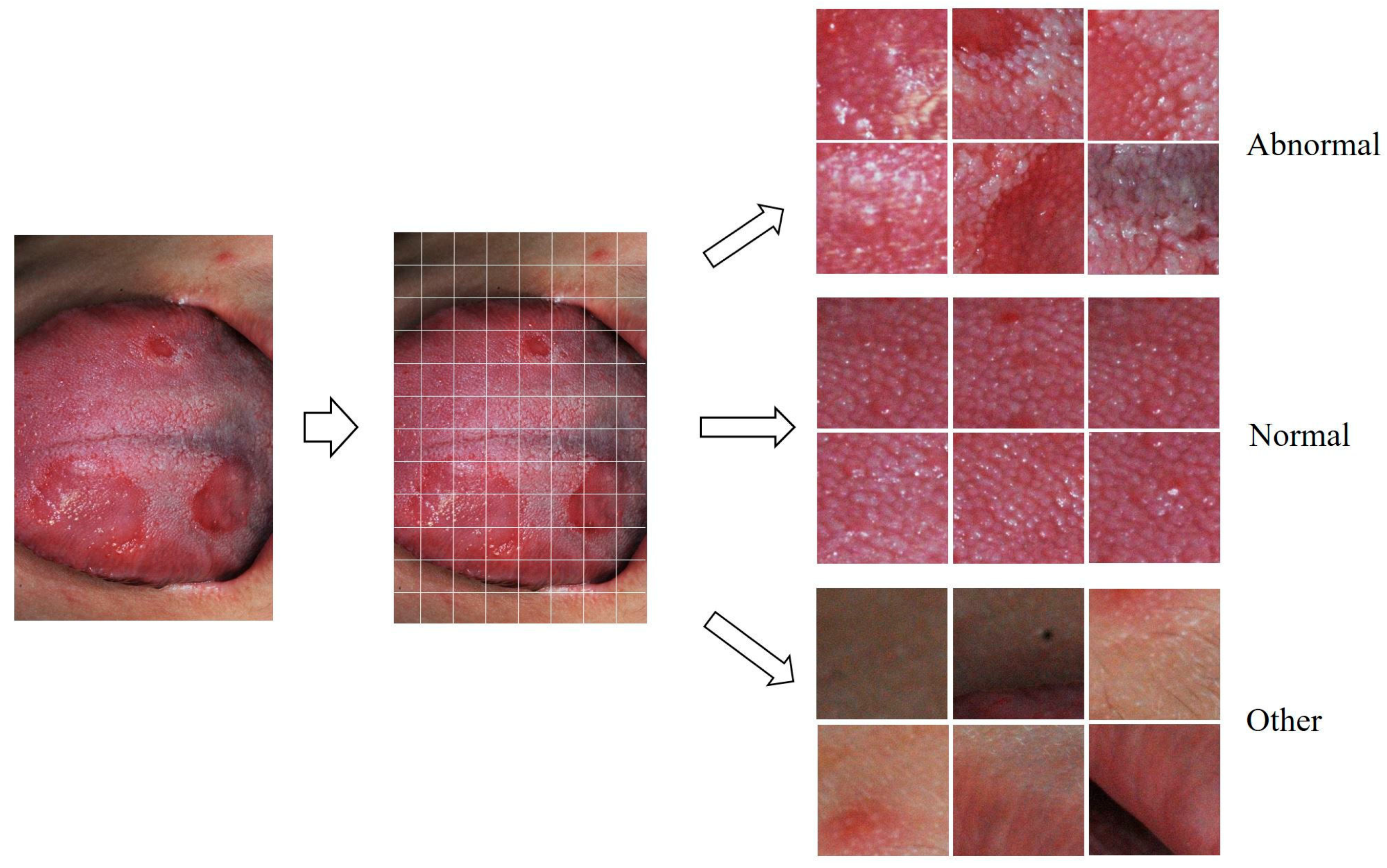

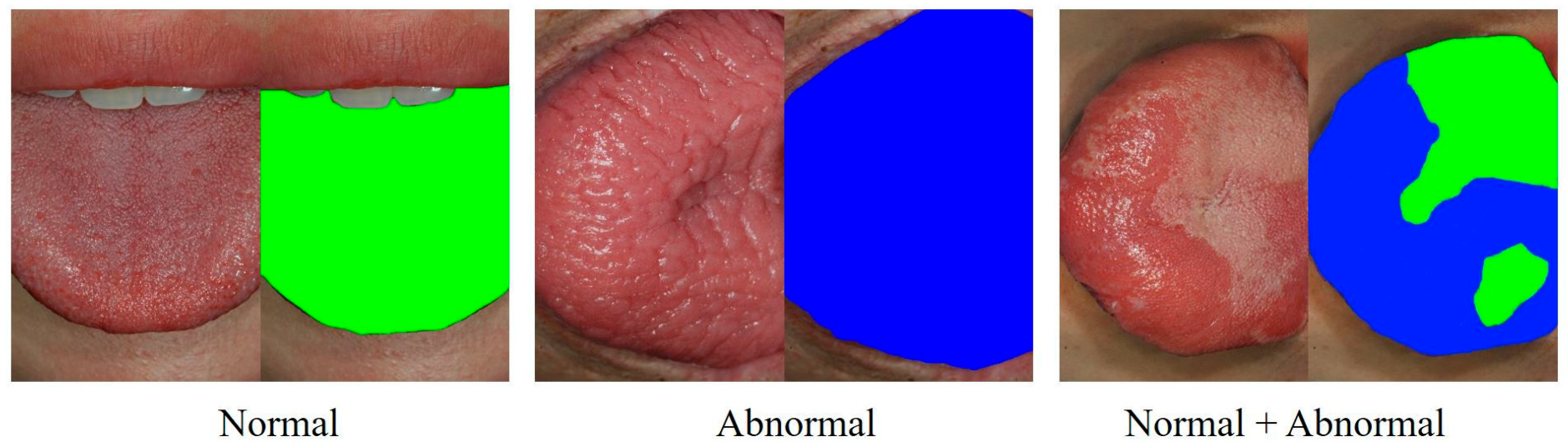

2.2. Preprocessing of Photographic Images for Deep Learning

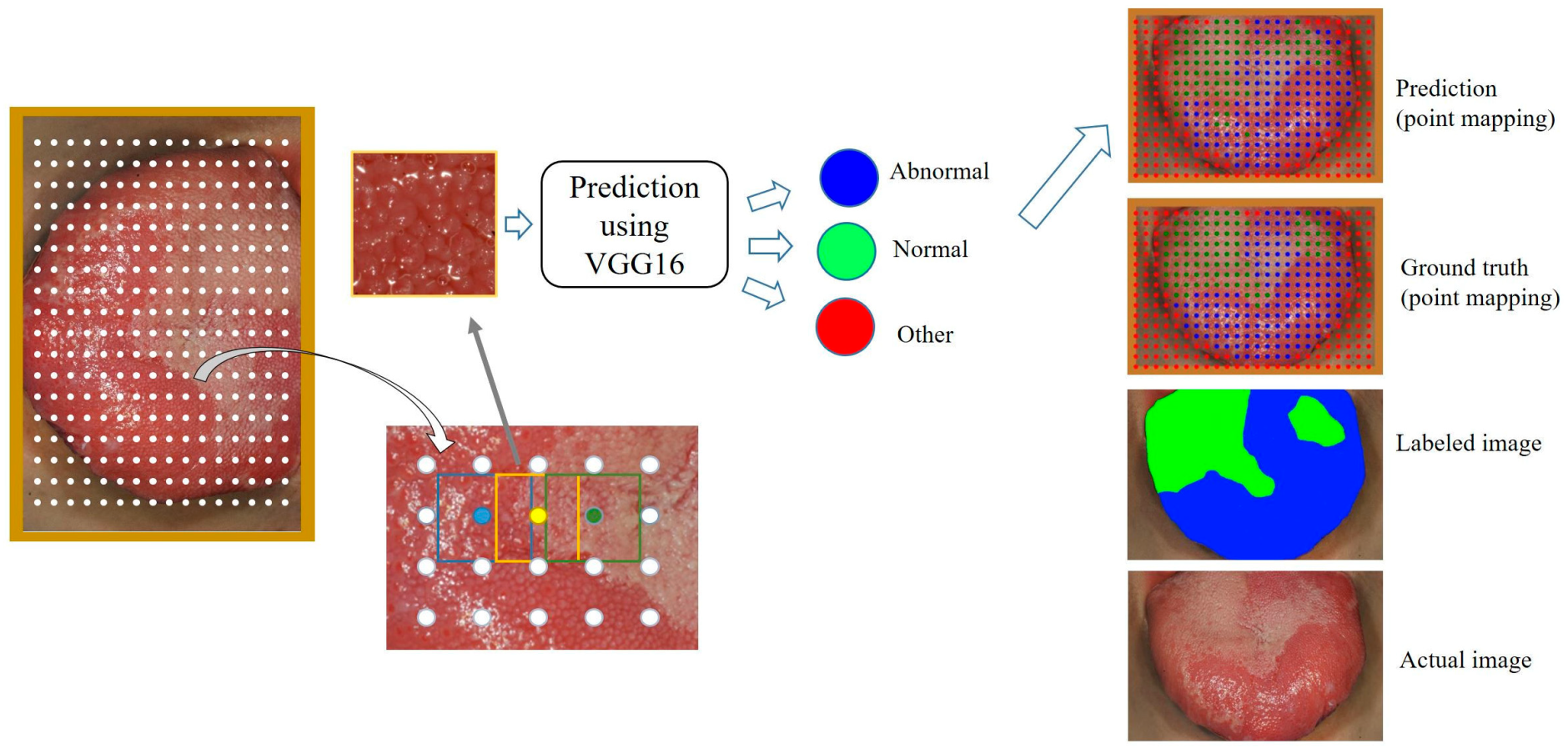

2.3. Classification of Crop Images Using the VGG16 Model

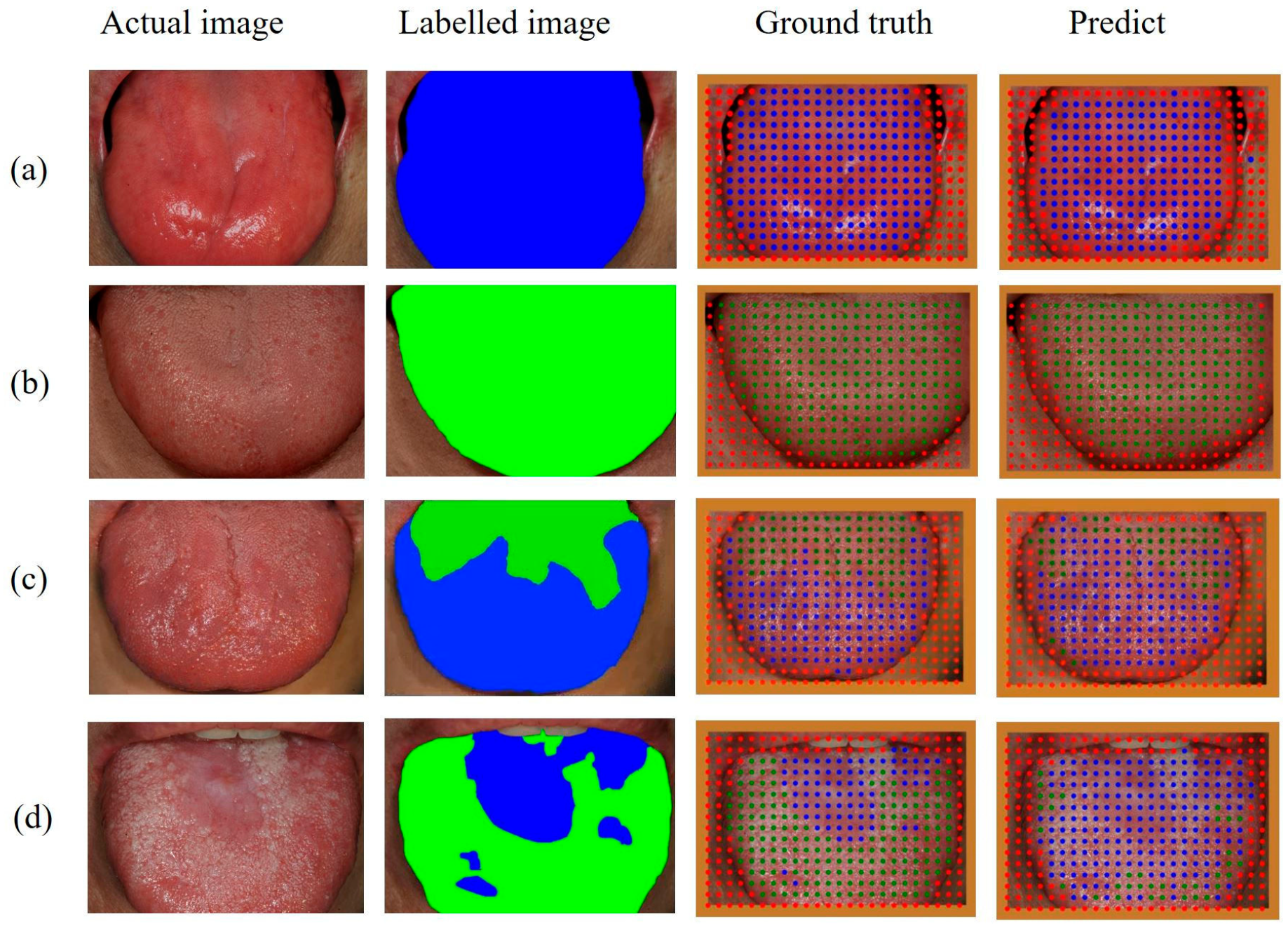

2.4. Point Mapping on the Dorsal Tongue Surface

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Type of Change | Description |
|---|---|
| Tongue coating | accumulation of foreign substances on the tongue surface |
| Hairy tongue | hair-like elongation of the filiform papillae |
| Fissures | deep furrows between tongue papillae |
| Papillary atrophy | a smooth, reddish tongue surface lacking the fine structure of the papillae |
| Erosion | a pale-whitish lesion with loss of the epithelial layer only |
| Ulcer | a concave, yellowish-white lesion with loss of the epithelial layer and part of the lamina propria |
| Lichenoid change | whitish epithelial thickening resembling the reticular form of oral lichen planus |
| Hyperkeratotic change | whitish epithelial thickening seen in lesions such as oral hyperkeratosis |
| Papillary hypertrophy | enlargement of the tongue papillae |
| Artifacts | saliva bubbles or reflections from the camera flash |

| Class | Total (100%) | Training (68.8%) | Validation (15.4%) | Testing (15.8%) |
|---|---|---|---|---|
| Abnormal | 3220 | 2254 | 483 | 483 |
| Normal | 2813 | 1881 | 449 | 483 |
| Other | 1749 | 1223 | 263 | 263 |
| Total | 7782 | 5358 | 1195 | 1229 |
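
The split percentages in the header can be checked against the image counts. The short sketch below copies the counts from the table, verifies each class total, and computes each split's share of all images; the names `SPLITS`, `REPORTED_TOTALS`, and `split_shares` are hypothetical and used only for illustration.

```python
# Image counts per class, copied from the table above: (training, validation, testing).
SPLITS = {
    "Abnormal": (2254, 483, 483),
    "Normal": (1881, 449, 483),
    "Other": (1223, 263, 263),
}
# Per-class totals as reported in the "Total" column.
REPORTED_TOTALS = {"Abnormal": 3220, "Normal": 2813, "Other": 1749}


def split_shares(splits):
    """Return per-split column totals, the grand total, and each split's share in percent."""
    columns = [sum(col) for col in zip(*splits.values())]
    grand_total = sum(columns)
    shares = [100 * c / grand_total for c in columns]
    return columns, grand_total, shares


if __name__ == "__main__":
    for name, counts in SPLITS.items():
        # Each row of split counts should add up to the reported class total.
        assert sum(counts) == REPORTED_TOTALS[name], name
    columns, total, shares = split_shares(SPLITS)
    print(columns, total, [f"{s:.2f}%" for s in shares])
```

The exact shares come out to about 68.85%, 15.36%, and 15.79%; the header's 68.8% for training appears to be rounded so that the three shares sum to 100%.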

| Model | Actual class | Predicted: Abnormal | Predicted: Normal | Predicted: Other |
|---|---|---|---|---|
| VGG16 | Abnormal | 476 | 5 | 2 |
| VGG16 | Normal | 23 | 458 | 1 |
| VGG16 | Other | 10 | 1 | 252 |
| ResNet-50 | Abnormal | 473 | 6 | 4 |
| ResNet-50 | Normal | 15 | 463 | 4 |
| ResNet-50 | Other | 7 | 2 | 254 |
| Xception | Abnormal | 462 | 13 | 8 |
| Xception | Normal | 8 | 473 | 1 |
| Xception | Other | 9 | 2 | 252 |

| Class (VGG16) | Precision | Recall | F1-Score | Accuracy | AUC |
|---|---|---|---|---|---|
| Abnormal | 0.935 | 0.986 | 0.960 | 0.967 | 0.996 |
| Normal | 0.987 | 0.950 | 0.968 | 0.976 | 0.998 |
| Other | 0.988 | 0.958 | 0.973 | 0.989 | 0.998 |
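
The per-class metrics above appear to be one-vs-rest values derived from the VGG16 confusion matrix. The dependency-free sketch below recomputes precision, recall, F1-score, and accuracy from that matrix; the function name `per_class_metrics` is hypothetical, not taken from the study. AUC cannot be recovered this way, because it needs the model's predicted probabilities rather than hard labels. Note that the Normal row of each confusion matrix sums to 482, one fewer than the 483 Normal test images listed in the dataset table; the sketch uses the matrix as printed.

```python
# One-vs-rest metrics from a multiclass confusion matrix.
# Rows = actual class, columns = predicted class.
CLASSES = ["Abnormal", "Normal", "Other"]

# VGG16 test-set confusion matrix, copied from the table above.
VGG16_CM = [
    [476, 5, 2],    # actual Abnormal
    [23, 458, 1],   # actual Normal
    [10, 1, 252],   # actual Other
]


def per_class_metrics(cm):
    """Return {class: (precision, recall, f1, accuracy)}, treating each class one-vs-rest."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = {}
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # actually k, predicted as another class
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        tn = total - tp - fn - fp
        precision = tp / (tp + fp)
        recall = tp / (tp + fn)
        f1 = 2 * precision * recall / (precision + recall)
        accuracy = (tp + tn) / total
        results[CLASSES[k]] = (precision, recall, f1, accuracy)
    return results


if __name__ == "__main__":
    for name, vals in per_class_metrics(VGG16_CM).items():
        print(name, " ".join(f"{v:.3f}" for v in vals))
```

Running it reproduces the table to three decimals; for example, Abnormal precision is 476/509 ≈ 0.935 and Abnormal recall is 476/483 ≈ 0.986.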

| Class | Precision | Recall | F1-Score | IoU | AP |
|---|---|---|---|---|---|
| Abnormal | 0.717 | 0.737 | 0.727 | 0.695 | 0.842 |
| Normal | 0.650 | 0.641 | 0.645 | 0.590 | 0.706 |
| Other | 0.851 | 0.975 | 0.909 | 0.831 | 1.000 |
| Mean | 0.739 | 0.784 | 0.760 | 0.705 | 0.849 |
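
In the table above, each F1-score is consistent with the harmonic mean of that row's precision and recall, and the mean row appears to be an unweighted (macro) average over the three classes. A minimal check, assuming only the published values; the helper names `f1` and `macro_mean` are hypothetical. IoU and AP themselves require the predicted and ground-truth regions, so only their averaging is checked here.

```python
from statistics import mean

# Per-class values copied from the table above: (precision, recall, F1, IoU, AP).
ROWS = {
    "Abnormal": (0.717, 0.737, 0.727, 0.695, 0.842),
    "Normal": (0.650, 0.641, 0.645, 0.590, 0.706),
    "Other": (0.851, 0.975, 0.909, 0.831, 1.000),
}


def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def macro_mean(rows):
    """Unweighted (macro) average of each metric column across classes."""
    return tuple(mean(col) for col in zip(*rows.values()))


if __name__ == "__main__":
    for name, (p, r, f, _iou, _ap) in ROWS.items():
        print(f"{name}: reported F1 {f:.3f}, recomputed {f1(p, r):.3f}")
    print("Mean:", " ".join(f"{v:.3f}" for v in macro_mean(ROWS)))
```

Because the mean row averages the per-class F1-scores, it is not the same quantity as the F1 of the mean precision and mean recall, although the two happen to be close here.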

| Human judgment | AI judgment: Normal | AI judgment: Abnormal |
|---|---|---|
| Normal | A normal, uniform pattern of tongue papillae | A shallow groove resembling a fissure (mostly the midline groove on the tongue dorsum); areas with few filiform but many fungiform papillae (mainly at the tongue apex and lateral borders); slightly enlarged fungiform papillae (mainly at the tongue apex and lateral borders) |
| Abnormal | Mildly hairy tongue; moderate tongue coating; areas with light-toned atrophic and hyperkeratotic filiform papillae | Deep fissures; smooth, reddish areas due to atrophy of tongue papillae; thick tongue coating; erosive and ulcerative lesions; thick whitish keratotic lesions |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Song, H.-J.; Park, Y.-J.; Jeong, H.-Y.; Kim, B.-G.; Kim, J.-H.; Im, Y.-G. Detection of Abnormal Changes on the Dorsal Tongue Surface Using Deep Learning. Medicina 2023, 59, 1293. https://doi.org/10.3390/medicina59071293
