Abstract

Evidence syntheses of randomized clinical trials (RCTs) offer the highest level of scientific evidence for informing clinical practice and policy. The value of an evidence synthesis, however, depends on the trustworthiness of the RCTs it includes. The rising number of retractions and expressions of concern about the authenticity of RCTs has raised awareness of problematic studies, sometimes called “zombie” trials. Research integrity, i.e., adherence to ethical and professional standards, is a multi-dimensional concept that is incompletely evaluated for the RCTs included in current evidence syntheses. Systematic reviewers tend to rely on the editorial and peer-review systems established by journals as custodians of the integrity of the RCTs they synthesize. It is now well established that falsified and fabricated RCTs slip through these systems. RCT integrity assessment should therefore become a routine step in systematic reviews, particularly because RCTs with data-related integrity concerns remain available for use in evidence syntheses. Systematic reviewers need validated tools that they can deploy proactively to assess integrity deviations without waiting for journals to retract RCTs or issue expressions of concern. This article analyzes the issues and challenges of conducting evidence syntheses when the literature contains RCTs with possible integrity deficits. It proposes formal RCT integrity assessment in systematic reviews as the way forward and discusses the implications of this new initiative. Future directions include emphasizing ethical and professional standards, providing tailored integrity-specific training, and creating systems to promote research integrity, as improvements in RCT integrity will benefit evidence syntheses.

1. Introduction

Evidence syntheses of randomized clinical trials (RCTs) offer the most valid evidence on effectiveness for informing clinical practice and policy [1]. Through systematic reviews and clinical practice guidelines, they make RCT results available to practitioners and allow those results to reach patients. The rising number of retractions and expressions of concern, prompted by allegations of data fabrication and falsification, questionable research practices, or faulty research methodology [2,3,4,5], has raised awareness about the authenticity of RCTs. Intentional and unintentional errors can leave problematic studies, sometimes called “zombie” trials [6], within the literature. It has recently been recognized that: “Even though the process for the detection and correction of error and fraud might be fairly well established and “standardized”, such as in COPE or ICMJE guidelines, inter-journal and inter-publisher variability, including editorial responsibilities, will continue to limit the effective correction of erroneous and fraudulent literature globally” [7]. This background has important implications for evidence syntheses, including both systematic reviews and guidelines, because they have the potential to widen the impact of faulty RCTs. This commentary describes the challenges that the integrity of included RCTs poses for evidence syntheses, with particular focus on data fabrication and falsification.

2. How Can We Trust Evidence Syntheses?

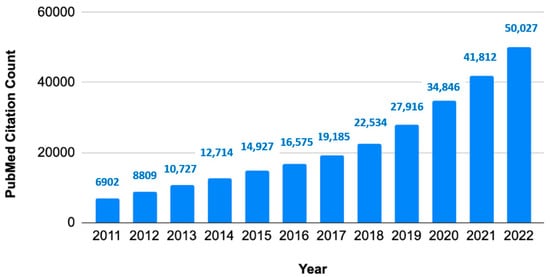

Evidence synthesis is a research method that collates all relevant studies and interprets their collective findings. Systematic review publications have grown steadily over the last decade (Figure 1).

Figure 1.

Growth of systematic reviews to synthesize evidence. Annual citation counts of article type systematic reviews in PubMed database.

The fundamental steps of evidence synthesis are as follows: defining the question(s); searching for relevant studies (screening and selecting them against defined inclusion and exclusion criteria); appraising the quality of the included studies and extracting relevant data; collating the data, undertaking meta-analyses where appropriate; and interpreting the findings [1]. Quality assessment uses an explicit and transparent methodology to minimize the risk of bias. A typical example of evidence synthesis is a systematic review of treatment effectiveness [8], which may in turn underpin a guideline that provides evidence-based statements for clinical decision-making, practice, and policy. Critical appraisal of evidence is key to deriving trustworthy practice recommendations and is the essence of evidence-based medicine [9].

The evidence used in systematic reviews may come from a range of study designs, from RCTs to observational studies and even case series. RCTs rank highest in the hierarchy of evidential validity because their design randomly assigns participants to experimental or control groups to compare outcomes. Randomization aims to minimize selection bias when generating evidence about the effectiveness of interventions. To implement and report trials robustly, researchers performing RCTs are required, amongst other things, to undertake regular good clinical practice training, and trials must be prospectively registered in registries such as ClinicalTrials.gov [10,11]. Therefore, whenever RCTs are available, systematic reviews and guidelines endeavor to include them in preference to other designs [12,13]. Several instruments exist to appraise evidence syntheses. Two widely used tools for assessing systematic reviews are AMSTAR-2 and ROBIS [14,15]; examples of tools for clinical practice guidelines are AGREE and RIGHT [16,17]. These tools include domains on how the studies included in the synthesis were identified, selected, appraised, and analyzed, among others, and they cover risk of bias assessment. However, risk of bias assessment only partially targets integrity, as the two concepts are not synonymous. None of these tools explicitly targets the assessment of study integrity within evidence syntheses, the topic on which this paper focuses.

3. Relationship between Primary Research Integrity and Evidence Syntheses

Research integrity is a term that captures compliance with ethical and professional standards in the conduct of scientific studies [18]. To clarify this concept, Table 1 provides key research integrity terms and definitions.

Focusing evidence syntheses on the best available study designs, appraising the selected studies, and basing inferences on the highest-quality subgroup of studies aims to avoid or minimize the risk of bias. Current review methodology does not explicitly target study integrity, and it is important to recognize that, conceptually, study quality assessment and integrity evaluation are not synonymous. In practice, systematic reviewers tend to rely on the editorial and peer-review systems established by journals, which act as custodians of research integrity. However, there are well-known gaps in journals’ instructions to authors, in editorial and peer-review evaluations, and in policies for investigating post-publication allegations of scientific misconduct [22]. Assessing study integrity can be time-consuming, so fabricated or falsified studies can remain usable and be included in evidence syntheses [23]. Only 5% of systematic reviews or clinical practice guidelines have corrected or retracted their results following the retraction of studies they included [24]. Without formal integrity assessment, the source studies underpinning evidence syntheses may include studies that do not comply with responsible research conduct, with their data treated as genuine.

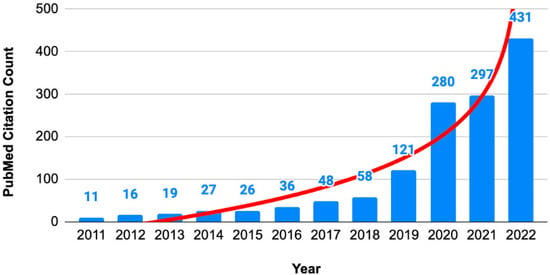

The incorporation of RCT integrity assessment in evidence syntheses is an important consideration because the number of studies with expressions of concern has been rising exponentially (Figure 2).

Figure 2.

Numbers of articles with expressions of concern. Annual counts of expressions of concern about articles in the PubMed database.

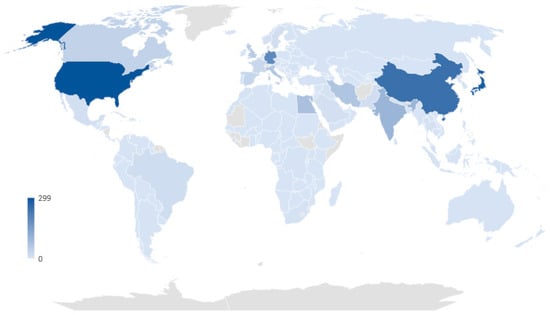

Based on data retrieved from the Retraction Watch Database on 2 February 2023, the five countries with the most retracted clinical studies are the United States, Japan, China, Germany, and India, with 299, 281, 245, 192, and 98 retractions, respectively. These data indicate that retraction is a global issue and that, in absolute numbers, developed countries account for a large share of studies lacking integrity (Figure 3).

Figure 3.

The number of retracted clinical studies per country based on Retraction Watch Database (http://retractiondatabase.org, data extracted on 2 February 2023).

These issues, in turn, raise concerns about the integrity of evidence syntheses, because including studies retracted for problems with “data/analyses/results” leads to summary estimates in systematic reviews that depart from those of studies without such problems [25].

4. Importance of Assessing the Integrity of RCTs Included in Evidence Syntheses

In this paper, we focus on evidence syntheses that use RCTs, as this high-validity study design underpins evidence-based medicine, and specifically on potential data-related integrity issues in RCTs. Behind every breakthrough in disease prevention and treatment are thousands of volunteer participants in RCTs whose data are collated in evidence syntheses. Despite the requirements to obtain ethics approval, confirm informed consent, and apply independent oversight during trial conduct, RCTs are not immune to (un)intentional integrity deviations. The sheer number of expressions of concern (Figure 2) and retractions (Figure 3) has shaken public confidence, most strikingly during the COVID-19 pandemic [26]. Not all retractions are likely to be the result of deliberate fraud, falsification, or fabrication; unintentional errors, spin, or flawed techniques have probably played their part [27,28]. However, every RCT with integrity concerns that remains in use poses a threat to patients and public health. Systematic reviewers and guideline developers therefore need to be vigilant about problematic or “zombie” RCTs [29].

Evidence syntheses affected by the inclusion of RCTs with integrity deficits are not difficult to find, and the following examples highlight the need to change attitudes towards integrity assessment within reviews. Hill et al. recently retracted a meta-analysis of RCTs of ivermectin for COVID-19: the significant benefits initially observed could not be sustained after several of the included studies were withdrawn because of fraudulent data or other problems [30,31]. Avenell et al. reviewed the impact of including a group of retracted RCTs on evidence syntheses [29]. These RCTs were published in the late nineties and were retracted for serious misconduct, including concerns about data integrity; the retractions came nearly two decades after publication [32]. Avenell et al. identified 32 published evidence syntheses that had included at least one of the retracted RCTs and judged that the conclusions of 13 of them (41%) would have changed had the retracted RCTs been excluded. Marret et al. reviewed the impact of including another group of retracted RCTs on evidence syntheses and judged that the conclusions of 2 of 14 (14%) would have changed had the retracted RCTs been excluded [33]. Habib et al., commenting on the impact of yet another group of retracted RCTs on evidence syntheses, judged the likelihood of compromise to be modest, although some systematic reviews that performed sensitivity analyses reached different conclusions after excluding the retracted data [34]. Fanelli et al. similarly concluded that the potential epistemic cost of retraction was modest, emphasizing the reason for retraction as the key issue [25].

These examples confirm that a specific methodology is required to address RCT integrity in systematic reviews head-on, and that its purpose should be the protection of patients and public health. Failures to comply with responsible research conduct have many precursors, including misconduct, recklessness, carelessness, and lack of training [35]. It is not the systematic reviewers’ role to judge original authors’ motivations; journals, employers, funders, and others have investigative and sanctioning roles. What systematic reviewers need is a proactive approach to ensuring that the evidence they synthesize does not harbor integrity deviations [27]. This will protect the trustworthiness of evidence syntheses and of evidence-based medicine.

5. How to Incorporate RCT Integrity Assessment in Evidence Syntheses

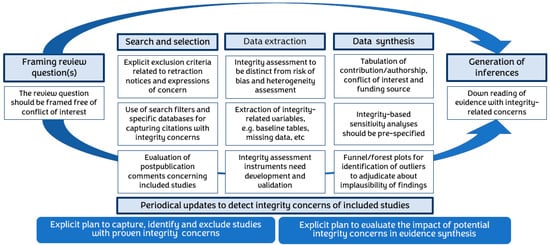

A systematic approach is required on several evidence synthesis fronts, including but not limited to identifying honestly conducted research during searching, assessing the integrity of included studies (separately from bias assessment), planning sensitivity analyses for integrity in advance, and generating inferences transparently, given the possibility that the underlying data are compromised (Figure 4).

Figure 4.

Infographic of the key steps during evidence synthesis to maximize the integrity of included research.
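The pre-planned sensitivity analysis for integrity can be illustrated with a minimal Python sketch. It assumes a fixed-effect inverse-variance meta-analysis of log risk ratios and a hypothetical yes/no `integrity_flag` per trial, standing in for the output of an integrity tool that does not yet exist; in practice a random-effects model would often be preferred. The sketch pools all trials, then pools only the unflagged trials, so reviewers can see whether the summary estimate hinges on studies with integrity concerns.

```python
import math
from dataclasses import dataclass


@dataclass
class Trial:
    name: str
    log_rr: float          # log risk ratio reported (or derived) for the trial
    se: float              # standard error of log_rr
    integrity_flag: bool   # hypothetical: True if an integrity assessment raised concerns


def pool_fixed_effect(trials):
    """Inverse-variance fixed-effect pooled log RR with a 95% confidence interval."""
    if not trials:
        raise ValueError("at least one trial is required")
    weights = [1.0 / t.se ** 2 for t in trials]
    total = sum(weights)
    pooled = sum(w * t.log_rr for w, t in zip(weights, trials)) / total
    se_pooled = math.sqrt(1.0 / total)
    return pooled, pooled - 1.96 * se_pooled, pooled + 1.96 * se_pooled


def to_rr(result):
    """Back-transform (log RR, lower, upper) to the risk ratio scale."""
    return tuple(math.exp(x) for x in result)


def integrity_sensitivity(trials):
    """Pooled RR (estimate, lower, upper) for all trials and for unflagged trials only."""
    all_trials = to_rr(pool_fixed_effect(trials))
    unflagged = [t for t in trials if not t.integrity_flag]
    # If every trial is flagged there is nothing left to pool.
    unflagged_only = to_rr(pool_fixed_effect(unflagged)) if unflagged else None
    return all_trials, unflagged_only


if __name__ == "__main__":
    # Illustrative numbers only; not data from any real review.
    trials = [
        Trial("A", math.log(0.90), 0.15, False),
        Trial("B", math.log(0.95), 0.20, False),
        Trial("C", math.log(0.40), 0.10, True),
    ]
    overall, clean = integrity_sensitivity(trials)
    print("All trials:     RR %.2f (95%% CI %.2f to %.2f)" % overall)
    print("Unflagged only: RR %.2f (95%% CI %.2f to %.2f)" % clean)
```

A marked shift between the two estimates, as the hypothetical trial C is designed to produce here, would be the kind of signal that should feed into evidence grading rather than be reported in passing.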

Tools for assessing the integrity of included RCTs should be developed with the rigorous methodology previously applied to study quality and risk of bias assessment tools [36,37]. Once developed, these tools should inform revisions of current reporting guidelines [38]. Statistical analyses would also need to address integrity; for example, funnel plots may be used to inspect small studies with implausibly large effects. In meta-analyses of published study-level data, subgroup and meta-regression analyses could routinely include a variable based on integrity assessment. In individual patient data meta-analyses, statistical techniques can be applied to detect anomalous patterns in the underlying numerical data as a check on data integrity [39,40,41,42]. These more sophisticated analyses should feed into evidence grading to generate judicious inferences.
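As one illustration of the anomalous-pattern checks that can be run on individual patient data, the Python sketch below tests terminal-digit preference. The premise is that, for a measurement recorded to a resolution finer than its natural variation, last digits should be roughly uniform, whereas invented values often are not. This is a single simplified check chosen for illustration, not the specific method of any cited reference, and the names used are hypothetical. The 5% critical value of the chi-square distribution with 9 degrees of freedom (about 16.92) is hard-coded to keep the sketch free of dependencies.

```python
from collections import Counter

# Upper 5% critical value of the chi-square distribution with 9 degrees of freedom.
CHI2_CRIT_DF9_ALPHA05 = 16.919


def terminal_digit_chi2(values):
    """Chi-square statistic comparing last-digit counts with a uniform distribution.

    Assumes values are recorded as whole numbers (e.g., mmHg, beats per minute).
    """
    digits = [abs(int(v)) % 10 for v in values]
    if not digits:
        raise ValueError("no values supplied")
    expected = len(digits) / 10
    counts = Counter(digits)  # missing digits count as 0
    return sum((counts[d] - expected) ** 2 / expected for d in range(10))


def flag_digit_preference(values, critical=CHI2_CRIT_DF9_ALPHA05):
    """Return (statistic, flagged); flagged means uniform last digits are rejected at 5%."""
    stat = terminal_digit_chi2(values)
    return stat, stat > critical


if __name__ == "__main__":
    # Hypothetical systolic blood pressure readings from one trial site.
    site_readings = [120, 130, 125, 140, 120, 135, 130, 120, 150, 140,
                     122, 118, 130, 120, 140, 130, 120, 125, 130, 140]
    stat, flagged = flag_digit_preference(site_readings)
    print(f"chi-square = {stat:.1f}, flagged = {flagged}")
```

Genuine data can also show digit preference (for example, when clinicians round blood pressure to 0 or 5), and the chi-square approximation is unreliable when expected counts per digit are small, so a flag should prompt closer scrutiny rather than be read as proof of fabrication.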

6. Implications, Issues, Challenges, and Limitations

Evidence synthesis that is mindful of research integrity will need to collate all empirical evidence that fits pre-specified eligibility criteria while excluding studies with proven integrity concerns; use explicit methods to detect and quantify such concerns; evaluate their impact in planned statistical analyses; and minimize or eliminate the contamination of inferences that may arise from including studies with possibly compromised data. Reviews should be updated periodically to detect integrity concerns that arise about included studies (Table 2).

There are many issues to consider. A controversial question is whether to include studies that are subject to expressions of concern but without proven misconduct. This controversy is not very different from the inclusion of RCTs at varying levels of risk of bias arising from faulty randomization or a lack of allocation concealment or blinding, which is now routine in effectiveness reviews. Advanced methods that can accurately detect integrity breaches in publicly available RCTs need to be developed, validated, and applied [43,44,45]. How to assess the integrity of the remaining studies after excluding those with confirmed integrity breaches requires further methodological development, as no clear procedures are established at present. We could find only one article providing a method for detecting retracted literature cited in systematic reviews and meta-analyses [46]. Unfortunately, there are no validated tools for integrity assessment yet [40]. Importantly, there is still no standard definition of the term “research integrity”, so the concepts of bias, quality, validity, and integrity can confuse readers and reviewers alike. A precise characterization of research integrity, distinct from the idea of risk of bias assessment, is needed as a starting point so that future methodological developments proceed in the right direction.

A particularly important issue for the critical appraisal of integrity is the enormous size of the literature and the growth of publication rates. In 2015, there were about 28,100 English-language and 6450 non-English-language science, technology, and medicine journals, and their number was growing at about 3% annually [47]. Defective studies should never enter circulation, but the growing volume complicates the challenge. To contain the integrity-related concerns that will grow with this expansion, automated checks will be required, just as they are already used to detect plagiarism. This is partly because the peer-review process might not be able to adopt new ways of detecting defective literature, since editors and peer reviewers would first have to upgrade their knowledge and skill sets [7]. Computer science methods are already being deployed for critical appraisal [48].
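To make these growth figures concrete, a simple compounding model can be applied under the illustrative assumption that the roughly 3% annual rate stays constant (the cited source reports only the 2015 counts and the approximate rate). Starting from the 2015 total of 28,100 + 6450 = 34,550 journals, the number of journals after $t$ years, and the corresponding doubling time, would be:

$$
N(t) = 34\,550 \times 1.03^{t}, \qquad t_{2} = \frac{\ln 2}{\ln 1.03} \approx \frac{0.6931}{0.0296} \approx 23.4 \text{ years}.
$$

On this assumption the number of journals would roughly double in about 23 years, which illustrates why manual integrity checks alone are unlikely to keep pace.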

7. Artificial Intelligence for Integrity Assessment

Efficient tools that automate integrity assessment in evidence synthesis are the next methodological advance required. Review projects usually require a team of reviewers who screen and identify literature and evaluate the quality of included studies; they will additionally need to perform integrity assessments before collating findings and generating recommendations. Currently, reviews consume considerable human effort and take too long, because the included data must be collected, evaluated, and double-checked to minimize errors. Completing an evidence synthesis is estimated to take 67.3 weeks on average (IQR 41.6), and publishing it involves 5.3 review team members on average (IQR 3) [49]. Besides being slow, human effort carries an inherent error rate; e.g., study selection suffers a 10% error rate (false inclusions and false exclusions) [50]. It has thus been concluded that: “Systematic reviews presently take much time and require large amounts of human resources. In the light of the ever-increasing volume of published studies, application of existing computing and informatics technology should be applied to decrease this time and resource burden” [49]. Automation has also been advocated for integrity assessment [27] and, although infrequently used until now, it is impactful [51].

These assessments require the development and validation of new instruments to detect and exclude questionable evidence from evidence syntheses without waiting for retractions. Automated detection of retractions is needed, specifically for data-related misconduct associated with fabrication, falsification, and other types of forgery [44]. In this way, integrity assessments in systematic reviews could streamline the literature correction process and could include alerts that prompt journals to open investigations. Once developed and validated, the tools may also be used to improve peer review, reducing the circulation of “zombie” trials. This will improve the validity of evidence syntheses going forward and will assist the pre- and post-publication review process when allegations arise.
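One building block of such automated checks, cross-referencing a review's included studies against a list of retracted articles, can be sketched in Python. This is a minimal sketch under stated assumptions: the retraction list is supplied as CSV text (for example, an export from the Retraction Watch Database used for Figure 3), and the column name `OriginalPaperDOI` is an assumption to be adjusted to the export actually used; all function names are hypothetical. Matching is on normalized DOIs only, so studies without DOIs, expressions of concern, and integrity problems that have not led to retraction are not detected.

```python
import csv
import io

# Common resolver prefixes stripped before comparing DOIs.
DOI_PREFIXES = ("https://doi.org/", "http://doi.org/",
                "https://dx.doi.org/", "http://dx.doi.org/", "doi:")


def normalize_doi(doi):
    """Lower-case a DOI and remove a leading resolver prefix, if present."""
    doi = doi.strip().lower()
    for prefix in DOI_PREFIXES:
        if doi.startswith(prefix):
            return doi[len(prefix):].strip()
    return doi


def load_retracted_dois(csv_text, doi_column="OriginalPaperDOI"):
    """Return normalized DOIs from the (assumed) DOI column of a CSV export."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {normalize_doi(row[doi_column])
            for row in reader if (row.get(doi_column) or "").strip()}


def flag_retracted(included_dois, retracted_dois):
    """List the included-study DOIs that appear among the retracted DOIs."""
    return [doi for doi in included_dois if normalize_doi(doi) in retracted_dois]


if __name__ == "__main__":
    # Hypothetical records and DOIs for illustration only.
    export = "OriginalPaperDOI,Reason\n10.1000/TRIAL.001,hypothetical reason\n"
    included = ["https://doi.org/10.1000/trial.001", "10.1000/trial.002"]
    print(flag_retracted(included, load_retracted_dois(export)))
```

Re-running such a check at each periodic review update is cheap; the SCRUTATIOm approach [46] performs a comparable check with Scopus and Zotero, and the script above is an independent sketch rather than an implementation of that method.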

8. Current Conclusions

Evidence syntheses collating RCTs influence practice and health policies, directly affecting patient care. The investigation and retraction of RCTs with integrity concerns is a slow process, so defective RCTs remain in circulation, putting patients at risk. Even after retraction, defective studies continue to be cited in systematic reviews, as they are not removed from databases and their retraction is poorly signposted. Evidence syntheses often fail to issue corrections even when retractions are identified. All this leaves patients exposed to interventions that are futile or even harmful to their health. Evidence syntheses urgently need to upgrade their methods to incorporate integrity assessments as a routine, as outlined in Figure 4.

9. Future Direction

Research integrity, a broad concept holistically incorporating both ethical and professional standards [18], needs to be considered in evidence synthesis across the whole range of issues inherent in responsible RCT conduct, and this needs to be defined explicitly through further research. The Tuskegee syphilis experiment is a case in point of how unethical research can permeate the literature without comment. In a 2022 journal article [51], Tobin wrote: “Despite 15 journal articles detailing the results, no physician published a letter criticizing the Tuskegee study. Informed consent was never sought; instead, Public Health Service researchers deceived the men into believing they were receiving expert medical care”. These articles remain formally unretracted to this day. We did not cover ethics and consent standards in this article, as we focused on data-related integrity, but they remain a key aspect demanding future research and development for their proper implementation and monitoring in RCTs. Many articles show deficits in informed consent in clinical studies [52,53,54], and this type of integrity assessment ought to feature in evidence syntheses.

There ought to be an emphasis on prevention, metaphorically nipping problematic studies in the bud [55]. This begins with clarifying integrity-related definitions, e.g., which questionable research practices raise integrity concerns. It would then be appropriate to identify modifiable factors and barriers that may affect compliance with best practice [3]. Future work would take this forward by emphasizing routine adherence to ethical and professional standards through periodic integrity-specific training, tailored to the educational environment, for all stakeholders involved in the RCT research lifecycle, including but not limited to researchers, ethics committee members, funders, editors, peer reviewers, systematic reviewers, guideline makers, drug regulators, medical journalists, and lay readers. Integrity training in clinical trials has been recommended in a recent international multi-stakeholder consensus statement [44], with emphasis on enabling research teams from low-resource settings to contribute. Solid systems, backed by valid and robust instruments and methods for instilling research integrity, are urgently needed. Future evidence syntheses will directly benefit from these improvements in the integrity of the conduct, analysis, and reporting of the primary RCTs they collate.

Author Contributions

Conceptualization, K.S.K. and A.B.-C.; methodology, K.S.K. and A.B.-C.; investigation, N.C.-I. and M.N.-N.; data curation, N.C.-I. and M.N.-N.; writing—original draft preparation, K.S.K. and M.N.-N.; writing—review and editing, K.S.K., A.B.-C., N.C.-I., J.Z. and M.N.-N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

K.S.K. is a distinguished investigator at the University of Granada funded by the Beatriz Galindo (senior modality) program of the Spanish Ministry of Education; M.N.-N. is funded by the Carlos III Health Institute (Instituto de Salud Carlos III) under the Río Hortega training program (CM20/00074).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Khan, K.S.; Zamora, J. Systematic Reviews to Support Evidence-Based Medicine, 3rd ed.; Taylor & Francis Publishing: London, UK, 2022.

- De Vrieze, J. Large survey finds questionable research practices are common. Science 2021, 373, 265.

- Gopalakrishna, G.; ter Riet, G.; Vink, G.; Stoop, I.; Wicherts, J.M.; Bouter, L.M. Prevalence of questionable research practices, research misconduct and their potential explanatory factors: A survey among academic researchers in the Netherlands. PLoS ONE 2022, 17, e0263023.

- Steen, R.G.; Casadevall, A.; Fang, F.C. Why Has the Number of Scientific Retractions Increased? PLoS ONE 2013, 8, e68397.

- Vinkers, C.H.; Lamberink, H.J.; Tijdink, J.K.; Heus, P.; Bouter, L.; Glasziou, P.; Moher, D.; Damen, J.A.; Hooft, L.; Otte, W.M. The methodological quality of 176,620 randomized controlled trials published between 1966 and 2018 reveals a positive trend but also an urgent need for improvement. PLoS Biol. 2021, 19, e3001162.

- Ioannidis, J.P.A. Hundreds of thousands of zombie randomised trials circulate among us. Anaesthesia 2021, 76, 444–447.

- Teixeira da Silva, J.A. A Synthesis of the Formats for Correcting Erroneous and Fraudulent Academic Literature, and Associated Challenges. J. Gen. Philos. Sci. 2022, 53, 583–599. Available online: https://link.springer.com/article/10.1007/s10838-022-09607-4 (accessed on 30 January 2023).

- Higgins, J.P.T.; Green, S. Cochrane Handbook for Systematic Reviews of Interventions; John Wiley & Sons: Hoboken, NJ, USA, 2011.

- Twells, L.K. Evidence-Based Decision-Making 1: Critical Appraisal. Methods Mol. Biol. 2021, 2249, 389–404.

- World Medical Association (WMA). Declaration on Guidelines for Continuous Quality Improvement in Healthcare. Available online: https://www.wma.net/policies-post/wma-declaration-on-guidelines-for-continuous-quality-improvement-in-health-care/ (accessed on 9 April 2023).

- ICH Official Web Site: ICH. Available online: https://www.ich.org/ (accessed on 17 January 2022).

- Bauchner, H.; Golub, R.M.; Fontanarosa, P.B. Reporting and Interpretation of Randomized Clinical Trials. JAMA 2019, 322, 732–735.

- Stavale, R.; Ferreira, G.I.; Galvão, J.A.M.; Zicker, F.; Novaes, M.R.C.G.; De Oliveira, C.; Guilhem, D. Research misconduct in health and life sciences research: A systematic review of retracted literature from Brazilian institutions. PLoS ONE 2019, 14, e0214272.

- Shea, B.J.; Reeves, B.C.; Wells, G.; Thuku, M.; Hamel, C.; Moran, J.; Moher, D.; Tugwell, P.; Welch, V.; Kristjansson, E.; et al. AMSTAR 2: A critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ 2017, 358, j4008.

- Whiting, P.; Savović, J.; Higgins, J.P.; Caldwell, D.M.; Reeves, B.C.; Shea, B.; Davies, P.; Kleijnen, J.; Churchill, R.; ROBIS group. ROBIS: A new tool to assess risk of bias in systematic reviews was developed. J. Clin. Epidemiol. 2016, 69, 225–234.

- Molino, C.d.G.R.C.; Ribeiro, E.; Romano-Lieber, N.S.; Stein, A.T.; de Melo, D.O. Methodological quality and transparency of clinical practice guidelines for the pharmacological treatment of non-communicable diseases using the AGREE II instrument: A systematic review protocol. Syst. Rev. 2017, 6, 220.

- Chen, Y.; Yang, K.; Marušić, A.; Qaseem, A.; Meerpohl, J.J.; Flottorp, S.; Akl, E.A.; Schünemann, H.J.; Chan, E.S.Y.; Falck-Ytter, Y.; et al. A reporting tool for practice guidelines in health care: The RIGHT statement. Ann. Intern. Med. 2017, 166, 128–132.

- Steneck, N.H. Fostering integrity in research: Definitions, current knowledge, and future directions. Sci. Eng. Ethics 2006, 12, 53–74.

- H2020 INTEGRITY—Glossary. Available online: https://h2020integrity.eu/resources/glossary/ (accessed on 27 January 2023).

- National Library of Medicine. Errata, Retractions, and Other Linked Citations in PubMed. 2015. Available online: http://wayback.archive-it.org/org-350/20180312141525/https://www.nlm.nih.gov/pubs/factsheets/errata.html (accessed on 9 February 2023).

- COPE. COPE Forum 26 February 2018: Expressions of Concern. Available online: https://publicationethics.org/resources/forum-discussions/expressions-of-concern (accessed on 11 April 2023).

- Malički, M.; Jerončić, A.; Aalbersberg, I.J.J.; Bouter, L.; ter Riet, G. Systematic review and meta-analyses of studies analysing instructions to authors from 1987 to 2017. Nat. Commun. 2021, 12, 5840.

- Schneider, J.; Ye, D.; Hill, A.M.; Whitehorn, A.S. Continued post-retraction citation of a fraudulent clinical trial report, 11 years after it was retracted for falsifying data. Scientometrics 2020, 125, 2877–2913.

- Kataoka, Y.; Banno, M.; Tsujimoto, Y.; Ariie, T.; Taito, S.; Suzuki, T.; Oide, S.; Furukawa, T.A. Retracted randomized controlled trials were cited and not corrected in systematic reviews and clinical practice guidelines. J. Clin. Epidemiol. 2022, 150, 90–97.

- Fanelli, D.; Wong, J.; Moher, D. What difference might retractions make? An estimate of the potential epistemic cost of retractions on meta-analyses. Account. Res. 2022, 29, 442–459.

- Fleming, T.R.; Labriola, D.; Wittes, J. Conducting Clinical Research During the COVID-19 Pandemic: Protecting Scientific Integrity. JAMA 2020, 324, 33–34.

- Núñez-Núñez, M.; Andrews, J.C.; Fawzy, M.; Bueno-Cavanillas, A.; Khan, K.S. Research integrity in clinical trials: Innocent errors and spin versus scientific misconduct. Curr. Opin. Obstet. Gynecol. 2022, 34, 332–339.

- Fletcher, R.H.; Black, B. “Spin” in scientific writing: Scientific mischief and legal jeopardy. Med. Law 2007, 26, 511–525.

- Avenell, A.; Stewart, F.; Grey, A.; Gamble, G.; Bolland, M. An investigation into the impact and implications of published papers from retracted research: Systematic search of affected literature. BMJ Open 2019, 9, e031909.

- Hill, A.; Mirchandani, M.; Pilkington, V. Ivermectin for COVID-19: Addressing Potential Bias and Medical Fraud. Open Forum Infect. Dis. 2022, 9, ofab645.

- Hill, A.; Garratt, A.; Levi, J.; Falconer, J.; Ellis, L.; McCann, K.; Pilkington, V.; Qavi, A.; Wang, J.; Wentzel, H. Retracted: Meta-analysis of Randomized Trials of Ivermectin to Treat SARS-CoV-2 Infection. Open Forum Infect. Dis. 2021, 8, ofab358.

- Bolland, M.J.; Avenell, A.; Gamble, G.D.; Grey, A. Systematic review and statistical analysis of the integrity of 33 randomized controlled trials. Neurology 2016, 87, 2391–2402.

- Marret, E.; Elia, N.; Dahl, J.B.; McQuay, H.J.; Møiniche, S.; Moore, R.A.; Straube, S.; Tramèr, M.R. Susceptibility to fraud in systematic reviews: Lessons from the reuben case. Anesthesiology 2009, 111, 1279–1289.

- Habib, A.S.; Gan, T.J. Scientific fraud: Impact of Fujii’s data on our current knowledge and practice for the management of postoperative nausea and vomiting. Anesth. Analg. 2013, 116, 520–522.

- Resnik, D.B.; Smith, E.M.; Chen, S.H.; Goller, C. What is Recklessness in Scientific Research? The Frank Sauer Case. Account. Res. 2017, 24, 497–502.

- Sterne, J.A.C.; Savović, J.; Page, M.J.; Elbers, R.G.; Blencowe, N.S.; Boutron, I.; Cates, C.J.; Cheng, H.Y.; Corbett, M.S.; Eldridge, S.M.; et al. RoB 2: A revised tool for assessing risk of bias in randomised trials. BMJ 2019, 366, l4898. [Google Scholar] [CrossRef]

- Jüni, P.; Witschi, A.; Bloch, R.; Egger, M. The hazards of scoring the quality of clinical trials for meta-analysis. JAMA 1999, 282, 1054–1060.

- Schulz, K.F.; Altman, D.G.; Moher, D. CONSORT 2010 statement: Updated guidelines for reporting parallel group randomized trials. Ann. Intern. Med. 2010, 152, 726–732.

- van den Bor, R.M.; Vaessen, P.W.J.; Oosterman, B.J.; Zuithoff, N.P.A.; Grobbee, D.E.; Roes, K.C.B. A computationally simple central monitoring procedure, effectively applied to empirical trial data with known fraud. J. Clin. Epidemiol. 2017, 87, 59–69.

- Pogue, J.M.; Devereaux, P.J.; Thorlund, K.; Yusuf, S. Central statistical monitoring: Detecting fraud in clinical trials. Clin. Trials 2013, 10, 225–235.

- de Viron, S.; Trotta, L.; Schumacher, H.; Lomp, H.-J.; Höppner, S.; Young, S.; Buyse, M. Detection of Fraud in a Clinical Trial Using Unsupervised Statistical Monitoring. Ther. Innov. Regul. Sci. 2022, 56, 130–136.

- O’Kelly, M. Using statistical techniques to detect fraud: A test case. Pharm. Stat. 2004, 3, 237–246.

- Núñez-Núñez, M.; Maes-Carballo, M.; Mignini, L.E.; Chien, P.F.W.; Khalaf, Y.; Fawzy, M.; Zamora, J.; Khan, K.S.; Bueno-Cavanillas, A. Research integrity in randomized clinical trials: A scoping umbrella review. Int. J. Gynaecol. Obstet. 2023, 1–17.

- Khan, K.S.; Cairo Consensus Group on Research Integrity. International multi-stakeholder consensus statement on clinical trial integrity. BJOG 2023, 1–16.

- Khan, K.S.; Fawzy, M.; Chien, P.F.W. Integrity of randomized clinical trials: Performance of integrity tests and checklists requires assessment. Int. J. Gynaecol. Obstet. 2023, in press.

- Morán, J.M.; Santillán-García, A.; Herrera-Peco, I. SCRUTATIOm: How to detect retracted literature included in systematics reviews and metaanalysis using SCOPUS© and ZOTERO©. Gac. Sanit. 2022, 36, 64–66.

- Ware, M.; Mabe, M. The STM Report: An Overview of Scientific and Scholarly Journal Publishing, 4th ed.; International Association of Scientific, Technical and Medical Publishers: Oxford, UK, 2015.

- The Systematic Review Toolbox. Available online: http://systematicreviewtools.com/software.php (accessed on 18 January 2023).

- Borah, R.; Brown, A.W.; Capers, P.L.; Kaiser, K.A. Analysis of the time and workers needed to conduct systematic reviews of medical interventions using data from the PROSPERO registry. BMJ Open 2017, 7, e012545.

- Wang, Z.; Nayfeh, T.; Tetzlaff, J.; O’Blenis, P.; Murad, M.H. Error rates of human reviewers during abstract screening in systematic reviews. PLoS ONE 2020, 15, e0227742.

- Tercero-Hidalgo, J.R.; Khan, K.S.; Bueno-Cavanillas, A.; Fernández-López, R.; Huete, J.F.; Amezcua-Prieto, C.; Zamora, J.; Fernández-Luna, J.M. Artificial intelligence in COVID-19 evidence syntheses was underutilized, but impactful: A methodological study. J. Clin. Epidemiol. 2022, 148, 124–134.

- Pietrzykowski, T.; Smilowska, K. The reality of informed consent: Empirical studies on patient comprehension—Systematic review. Trials 2021, 22, 57.

- Schellings, R.; Kessels, A.G.; ter Riet, G.; Knottnerus, J.A.; Sturmans, F. Randomized consent designs in randomized controlled trials: Systematic literature search. Contemp. Clin. Trials 2006, 27, 320–332.

- Timmermann, C.; Orzechowski, M.; Kosenko, O.; Woźniak, K.; Steger, F. Informed Consent in Clinical Studies Involving Human Participants: Ethical Insights of Medical Researchers in Germany and Poland. Front. Med. 2022, 9, 901059.

- Khan, K.S. Comment on Khan: “Flawed Use of Post Publication Data Fabrication Tests”. Research Misconduct Tests: Putting Patients’ Interests First. J. Clin. Epidemiol. 2021, 138, 227. Available online: https://pubmed.ncbi.nlm.nih.gov/34343640/ (accessed on 9 April 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).