A First Look at Financial Data Analysis Using ChatGPT-4o

Abstract

1. Introduction

2. Related Literature

3. Data

4. Zero-Shot Prompting Analysis

5. Summary Statistics

6. Plotting and Insights

7. Risk and Return Analysis

8. ARMA-GARCH Estimation

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| 1 | OpenAI’s new multimodal “GPT-4 omni” combines text, vision, and audio in a single model (https://the-decoder.com/, accessed on 13 May 2024). |

| 2 | |

| 3 | https://mba.tuck.dartmouth.edu/pages/faculty/ken.french/data_library.html (accessed on 13 May 2024). |

| 4 | https://www.cnbc.com/dow-30/ (accessed on 13 May 2024). |

| 5 | The small difference is due to the method of annualization. The code in Stata uses the actual number of trading days to compute the annual excess return and its annual standard deviation (e.g., 252 days in 2019 and 253 days in 2020), whereas the code suggested by ChatGPT-4o uses 252 days for all years. If the actual trading days are used for annualization in both cases, the results from Stata and Python would be identical. |

References

- Aldridge, I. (2023). The AI revolution: From linear regression to ChatGPT and beyond and how it connects to finance. The Journal of Portfolio Management, 49(9), 64–77. [Google Scholar] [CrossRef]

- Ali, H., & Aysan, A. F. (2023). What will ChatGPT revolutionize in the financial industry? Modern Finance, 1(1), 116–129. [Google Scholar] [CrossRef]

- Alkaissi, H., & McFarlane, S. I. (2023). Artificial hallucinations in ChatGPT: Implications in scientific writing. Cureus, 15(2), e35179. [Google Scholar] [CrossRef]

- Alshater, M. M. (2022). Exploring the role of artificial intelligence in enhancing academic performance: A case study of ChatGPT. SSRN Electronic Journal, 4312358. [Google Scholar] [CrossRef]

- Anders, B. A. (2023). Is using ChatGPT cheating, plagiarism, or both, neither, or forward thinking? Patterns, 4(3), 100694. [Google Scholar] [CrossRef]

- Bang, Y., Cahyawijaya, S., Lee, N., Dai, W., Su, D., Wilie, B., Lovenia, H., Ji, Z., Yu, T., Chung, W., & Fung, P. (2023). A multitasking, multilingual, multimodal evaluation of chatbot on reasoning, hallucination, and interactivity. arXiv, arXiv:2302.04023. [Google Scholar]

- Bhatia, G., Nagoudi, E. M., Cavusoglu, H., & Abdul-Mageed, M. (2024). FinTral: A family of GPT-4 level multimodal financial large language models. arXiv, arXiv:2402.10986. [Google Scholar]

- Borji, A. (2023). A categorical archive of ChatGPT failures. arXiv, arXiv:2302.03494. [Google Scholar]

- Cao, Y., & Zhai, J. (2023). Bridging the gap—The impact of ChatGPT on financial research. Journal of Chinese Economic and Business Studies, 21(2), 177–191. [Google Scholar] [CrossRef]

- Chen, B., Wu, Z., & Zhao, R. (2023). From fiction to fact: The growing role of generative AI in business and finance. Journal of Chinese Economic and Business Studies, 21(4), 471–496. [Google Scholar] [CrossRef]

- Dai, H., Liu, Z., Liao, W., Huang, X., Wu, Z., Zhao, L., Liu, W., Liu, N., Li, S., Zhu, D., & Li, X. (2023). ChatAug: Leveraging ChatGPT for text data augmentation. arXiv, arXiv:2302.13007. [Google Scholar]

- Dale, R. (2021). GPT-3: What’s it good for? Natural Language Engineering, 27(1), 113–118. [Google Scholar] [CrossRef]

- da Silva, J. A. T. (2023). Is ChatGPT a valid author? Nurse Education in Practice, 68, 103600. [Google Scholar] [CrossRef] [PubMed]

- Dowling, M., & Lucey, B. (2023). ChatGPT for (finance) research: The Bananarama conjecture. Finance Research Letters, 53, 103662. [Google Scholar] [CrossRef]

- Fahad, S. A., Salloum, S. A., & Shaalan, K. (2024). The role of ChatGPT in knowledge sharing and collaboration within digital workplaces: A systematic review. In A. Al-Marzouqi, S. A. Salloum, M. Al-Saidat, A. Aburayya, & B. Gupta (Eds.), Artificial intelligence in education: The power and dangers of ChatGPT in the classroom (Vol. 144). Studies in Big Data. Springer. [Google Scholar] [CrossRef]

- Fatouros, G., Soldatos, J., Kouroumali, K., Makridis, G., & Kyriazis, D. (2023). Transforming sentiment analysis in the financial domain with ChatGPT. arXiv, arXiv:2308.07935v1. [Google Scholar] [CrossRef]

- Feng, Z., Hu, G., & Li, B. (2024). Unleashing the power of ChatGPT in finance research: Opportunities and challenges. SSRN Electronic Journal, 4424979. [Google Scholar] [CrossRef]

- Frieder, S., Pinchetti, L., Griffiths, R., Salvatori, T., Lukasiewicz, T., Petersen, P., & Berner, J. (2023). Mathematical capabilities of ChatGPT. In A. Oh, T. Naumann, A. Globerson, K. Saenko, M. Hardt, & S. Levine (Eds.), Advances in neural information processing systems (Vol. 36, pp. 27699–27744). MIT Press. [Google Scholar]

- Frye, B. L. (2022). Should using an AI text generator to produce academic writing be plagiarism? Fordham intellectual property. Media & Entertainment Law Journal, 33, 946. [Google Scholar]

- Gao, C. A., Howard, F. M., Markov, N. S., Dyer, E. C., Ramesh, S., Luo, Y., & Pearson, A. T. (2022). Comparing scientific abstracts generated by ChatGPT to original abstracts using an artificial intelligence output detector, plagiarism detector, and blinded human reviewers. bioRxiv. [Google Scholar] [CrossRef]

- González-Padilla, D. A. (2022). Concerns about the potential risks of artificial intelligence in manuscript writing. Journal of Urology, 209(4), 682–683. [Google Scholar] [CrossRef]

- Hosseini, M., Rasmussen, L. M., & Resnik, D. B. (2024). Using AI to write scholarly publications. Accountability in Research, 31, 715–723. [Google Scholar] [CrossRef] [PubMed]

- Jha, M., Qian, J., Weber, M., & Yang, B. (2023). ChatGPT and corporate policies. Working paper. SSRN, 4521096. [Google Scholar] [CrossRef]

- Khalil, M., & Er, E. (2023). Will ChatGPT get you caught? Rethinking of plagiarism detection. arXiv, arXiv:2302.04335. [Google Scholar]

- Khan, M. S., & Umer, H. (2024). ChatGPT in finance: Applications, challenges, and solutions. Heliyon, 10(2), e24890. [Google Scholar] [CrossRef] [PubMed]

- Kim, A. G., Muhn, M., & Nikoleav, V. V. (2024). Financial statement analysis with large language models. Chicago booth research paper forthcoming, fama-miller working paper. Available online: https://ssrn.com/abstract=4835311 (accessed on 15 May 2024).

- Korinek, A. (2023). Language models and cognitive automation for economic research. NBER working paper series. Available online: https://www.nber.org/papers/w30957 (accessed on 15 May 2024).

- Li, X., Feng, H., Yang, H., & Huang, J. (2024). Can ChatGPT reduce human financial analysts’ optimistic biases? Economic and Political Studies, 12(1), 20–33. [Google Scholar] [CrossRef]

- Liebrenz, M., Schleifer, R., Buadze, A., Bhugra, D., & Smith, A. (2023). Generating scholarly content with ChatGPT: Ethical challenges for medical publishing. The Lancet Digital Health, 5(3), e105–e106. [Google Scholar] [CrossRef]

- Lin, Z. (2023). Why and how to embrace AI such as ChatGPT in your academic life. Royal Society Open Science, 10(8), 230658. [Google Scholar] [CrossRef] [PubMed]

- Liu, L. X., Sun, Z., Xu, K., & Chen, C. (2024). AI-driven financial analysis: Exploring ChatGPT’s capabilities and challenges. International Journal of Financial Studies, 12(3), 60. [Google Scholar] [CrossRef]

- Liu, X., Zheng, Y., Du, Z., Ding, M., Qian, Y., Yang, Z., & Tang, J. (2023). GPT understands, too. AI Open, 5, 208–215. [Google Scholar] [CrossRef]

- Lopez-Lira, A., & Tang, Y. (2024). Can ChatGPT forecast stock price movements? Return predictability and large language models. Working paper. SSRN, 4412788. [Google Scholar] [CrossRef]

- Lund, B. D., & Wang, T. (2023). Chatting about ChatGPT: How may AI and GPT impact academia and libraries? Library Hi Tech News, 40(3), 26–29. [Google Scholar] [CrossRef]

- Pelster, M., & Val, J. (2024). Can ChatGPT assist in picking stocks? Finance Research Letters, 59, 104786. [Google Scholar] [CrossRef]

- Rahman, M. M., & Watanobe, Y. (2023). ChatGPT for education and research: Opportunities, threats, and strategies. Applied Sciences, 13, 5783. [Google Scholar] [CrossRef]

- Rice, S., Crouse, S. R., Winter, S. R., & Rice, C. (2024). The advantages and limitations of using ChatGPT to enhance technological research. Technology in Society, 76, 102426. [Google Scholar] [CrossRef]

- Schlosky, M. T. T., Karadas, S., & Raskie, S. (2024). ChatGPT, help! I am in financial trouble. Journal of Risk and Financial Management, 17(6), 241. [Google Scholar] [CrossRef]

- Shen, Y., Heacock, L., Elias, J., Hentel, K. D., Reig, B., Shih, G., & Moy, L. (2023). ChatGPT and other large language models are double-edged swords. Radiology, 307(2), 230163. [Google Scholar] [CrossRef] [PubMed]

- Shue, E., Liu, L., Li, B., Feng, Z., Li, X., & Hu, G. (2023). Empowering beginners in bioinformatics with ChatGPT. Quantitative Biology, 11(2), 105–108. [Google Scholar] [CrossRef]

- Smales, L. A. (2023). Classification of RBA monetary policy announcements using ChatGPT. Finance Research Letters, 58(C), 104514. [Google Scholar] [CrossRef]

- Stokel-Walker, C. (2023). ChatGPT listed as author on research papers: Many scientists disapprove. Nature, 613, 620–621. [Google Scholar] [CrossRef] [PubMed]

- Thorp, H. H. (2023). ChatGPT is fun, but not an author. Science, 379(6630), 313. [Google Scholar] [CrossRef]

- van Dis, E. A., Bollen, J., Zuidema, W., van Rooij, R., & Bockting, C. L. (2023). ChatGPT: Five priorities for research. Nature, 614(7947), 224–226. [Google Scholar] [CrossRef] [PubMed]

- Wang, J., Ye, Q., Liu, L., Guo, N. L., & Hu, G. (2024). Scientific figures interpreted by ChatGPT: Strengths in plot recognition and limits in color perception. NPJ Precision Oncology, 8(1), 84. [Google Scholar] [CrossRef]

- Yang, Z., Li, L., Lin, K., Wang, J., Lin, C., Liu, Z., & Wang, L. (2023). The dawn of LMMs: Preliminary explorations with GPT-4V(ision). arXiv, arXiv:2309.17421. [Google Scholar]

- Yeo-Teh, N. S. L., & Tang, B. L. (2023). Letter to editor: NLP systems such as ChatGPT cannot be listed as an author because these cannot fulfill widely adopted authorship criteria. Accountability in Research, 31(7), 968–970. [Google Scholar] [CrossRef] [PubMed]

- Yue, T., Au, D., Au, C. C., & Iu, K. Y. (2023). Democratizing financial knowledge with ChatGPT by OpenAI: Unleashing the power of technology. SSRN Electronic Journal, 4346152. [Google Scholar] [CrossRef]

- Zaremba, A., & Demir, E. (2023). ChatGPT: Unlocking the future of NLP in finance. Modern Finance, 1(1), 93–98. [Google Scholar] [CrossRef]

- Zhu, G., Fan, X., Hou, C., Zhong, T., Seow, P., Shen-Hsing, A. C., Rajalingam, P., Yew, L. K., & Poh, T. L. (2023). Embrace opportunities and face challenges: Using ChatGPT in undergraduate students’ collaborative interdisciplinary learning. arXiv, arXiv:2305.18616. [Google Scholar]

| Panel A: Dow Jones Industrial Average Stocks List | ||||||||||||||

| SYMBOL | NAME | |||||||||||||

| AAPL | Apple Inc. | |||||||||||||

| AMGN | Amgen Inc. | |||||||||||||

| AMZN | Amazon.com Inc. | |||||||||||||

| AXP | American Express Co. | |||||||||||||

| BA | Boeing Co. | |||||||||||||

| CAT | Caterpillar Inc. | |||||||||||||

| CRM | Salesforce Inc. | |||||||||||||

| CSCO | Cisco Systems Inc. | |||||||||||||

| CVX | Chevron Corp | |||||||||||||

| DIS | Walt Disney Co. | |||||||||||||

| DOW | Dow Inc. | |||||||||||||

| GS | Goldman Sachs Group Inc. | |||||||||||||

| HD | Home Depot Inc. | |||||||||||||

| HON | Honeywell International Inc. | |||||||||||||

| IBM | International Business Machines Corp | |||||||||||||

| INTC | Intel Corp | |||||||||||||

| JNJ | Johnson & Johnson | |||||||||||||

| JPM | JPMorgan Chase & Co. | |||||||||||||

| KO | Coca-Cola Co. | |||||||||||||

| MCD | McDonald’s Corp | |||||||||||||

| MMM | 3M Co. | |||||||||||||

| MRK | Merck & Co. Inc. | |||||||||||||

| MSFT | Microsoft Corp | |||||||||||||

| NKE | Nike Inc. | |||||||||||||

| PG | Procter & Gamble Co. | |||||||||||||

| TRV | Travelers Companies Inc. | |||||||||||||

| UNH | Unitedhealth Group Inc. | |||||||||||||

| V | Visa Inc. | |||||||||||||

| VZ | Verizon Communications Inc. | |||||||||||||

| WMT | Walmart Inc. | |||||||||||||

| Panel B: Data Structure for the “Dow 30 Daily Returns” File | ||||||||||||||

| PERMNO | date | TICKER | COMNAM | PERMCO | CUSIP | VOL | RET | BID | ASK | SHROUT | NUMTRD | RETX | vwretd | sprtrn |

| 10107 | 2 January 2019 | MSFT | MICROSOFT CORP | 8048 | 59491810 | 35,347,045 | −0.00443 | 101.12 | 101.13 | 7,683,000 | 239,618 | −0.00443 | 0.001796 | 0.001269 |

| 10107 | 3 January 2019 | MSFT | MICROSOFT CORP | 8048 | 59491810 | 42,570,779 | −0.03679 | 97.35 | 97.38 | 7,683,000 | 302,446 | −0.03679 | −0.02104 | −0.02476 |

| 10107 | 4 January 2019 | MSFT | MICROSOFT CORP | 8048 | 59491810 | 44,032,862 | 0.046509 | 101.93 | 101.94 | 7,683,000 | 301,838 | 0.046509 | 0.03341 | 0.034336 |

| 10107 | 7 January 2019 | MSFT | MICROSOFT CORP | 8048 | 59491810 | 35,650,303 | 0.001275 | 102.04 | 102.06 | 7,683,000 | 240,581 | 0.001275 | 0.009202 | 0.00701 |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| 92655 | 26 December 2023 | UNH | UNITEDHEALTH GROUP INC | 7267 | 91324P10 | 1,390,912 | −0.000538 | 520.10999 | 520.12 | 924,925 | −0.000538 | 0.005218 | 0.004232 | |

| 92655 | 27 December 2023 | UNH | UNITEDHEALTH GROUP INC | 7267 | 91324P10 | 1,851,840 | 0.005307 | 522.95001 | 523.09003 | 924,925 | 0.005307 | 0.001995 | 0.00143 | |

| 92655 | 28 December 2023 | UNH | UNITEDHEALTH GROUP INC | 7267 | 91324P10 | 2,001,208 | 0.004036 | 525.07001 | 525.21002 | 924,925 | 0.004036 | −0.000108 | 0.00037 | |

| 92655 | 29 December 2023 | UNH | UNITEDHEALTH GROUP INC | 7267 | 91324P10 | 2,080,197 | 0.002991 | 526.53998 | 526.94 | 924,925 | 0.002991 | −0.004045 | −0.002826 | |

| Panel C: Data Structure for the “FamaFrench_3Factors” File | ||||||||||||||

| Date | MktRF | SMB | HML | RF | ||||||||||

| 2 January 2019 | 0.0023 | 0.0059 | 0.0111 | 0.0001 | ||||||||||

| 3 January 2019 | −0.0245 | 0.0036 | 0.012 | 0.0001 | ||||||||||

| 4 January 2019 | 0.0355 | 0.0041 | −0.007 | 0.0001 | ||||||||||

| 7 January 2019 | 0.0094 | 0.01 | −0.0075 | 0.0001 | ||||||||||

| … | … | … | … | … | ||||||||||

| … | … | … | … | … | ||||||||||

| … | … | … | … | … | ||||||||||

| 26 December 2023 | 0.0048 | 0.0069 | 0.0046 | 0.00021 | ||||||||||

| 27 December 2023 | 0.0016 | 0.0014 | 0.0012 | 0.00021 | ||||||||||

| 28 December 2023 | −0.0001 | −0.0036 | 0.0002 | 0.00021 | ||||||||||

| 29 December 2023 | −0.0043 | −0.0113 | −0.0037 | 0.00021 | ||||||||||

| Panel A: Results from ChatGPT-4o |

| Prompts: |

| [upload from computer the “Dow 30 Daily Returns” file] Act as a finance professor and statistician. Analyze these data for the daily returns data of Dow 30 components from 2019 to 2023, pulled from WRDS. Perform analysis that can be used in academic papers. Illustrate the meaning and implications of the results. |

| Response: |

The dataset contains daily returns data for the Dow 30 components from 2019 to 2023. Here are the key columns in the dataset:

|

Statistical Analysis

|

| Implementation Let us start with the descriptive statistics and distribution analysis. [View Analysis] |

|

| Descriptive Statistics Here are the basic descriptive statistics for the daily returns (RET):

|

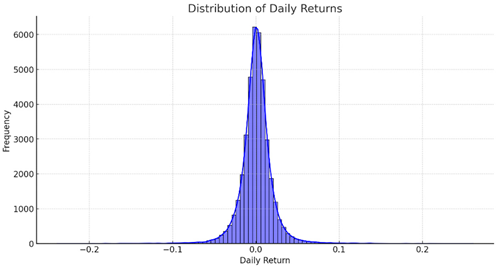

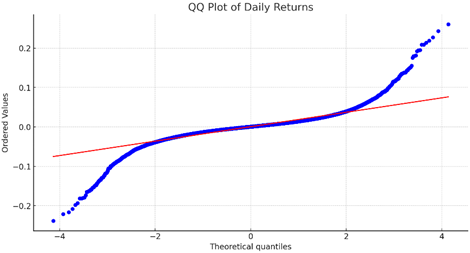

| Distribution Analysis Histogram and KDE Plot The histogram and kernel density estimate (KDE) plot show the distribution of daily returns. The distribution appears to be approximately normal but with noticeable tails, indicating the presence of extreme values or outliers. QQ Plot The QQ plot compares the distribution of daily returns to a standard normal distribution. Deviations from the line suggest departures from normality. The tails of the distribution show significant deviations, indicating the presence of outliers or heavy tails. |

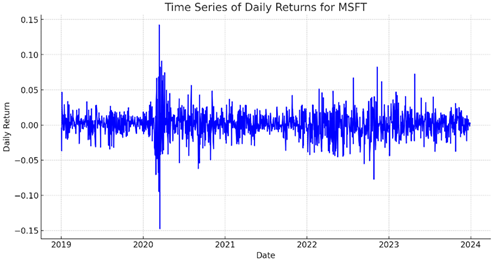

| Time Series Analysis Time Series Plot for MSFT The time series plot for Microsoft’s daily returns highlights the fluctuations over time. Notable patterns include periods of high volatility and clustering of extreme values. Implications

|

| Next, we will proceed with further analysis, including calculating annualized metrics, examining correlations, and performing additional time series analysis. [View Analysis] |

|

| Annualized Metrics Here are the key metrics for each Dow 30 component:

|

Key Observations

|

| These metrics indicate that Apple has provided the highest risk-adjusted return over this period, making it an attractive investment despite its relatively high volatility. Correlation Analysis The heatmap of the correlation matrix shows the relationships between the daily returns of the Dow 30 components. Key observations include the following:

|

Implications

The analysis of the Dow 30 components from 2019 to 2023 reveals important insights into their risk–return profiles and interrelationships. This information is crucial for academic research, investment strategies, and risk management practices. Further studies could involve more sophisticated modeling techniques to better understand market dynamics and predict future performance. [View Analysis] |

| Panel B: Assessment of the Results from ChatGPT-4o |

|

| Panel A: Results from Stata | ||||||

| Variable | Mean | Median | Standard Deviation | Minimum | Maximum | Number of Observations |

| ret | 0.000616 | 0.000671 | 0.019557 | −0.238484 | 0.260449 | 38,935 |

| vol | 11,965,600 | 6,329,270 | 16,900,200 | 416,678 | 331,573,000 | 38,936 |

| bid | 213.455 | 147.610 | 389.842 | 21.990 | 3731.410 | 38,936 |

| ask | 213.510 | 147.625 | 390.018 | 22.000 | 3733.390 | 38,936 |

| shrout | 2,326,370 | 1,274,960 | 2,756,090 | 228,399 | 17,102,500 | 38,936 |

| vwretd | 0.000605 | 0.000987 | 0.013280 | −0.118168 | 0.091556 | 38,936 |

| Panel B: Results from ChatGPT-4o | ||||||

| Prompts: | ||||||

| [upload from computer the “Dow 30 Daily Returns” file] Act as a finance professor and statistician. Analyze this data for the daily returns data of Dow 30 components from 2019 to 2023, pulled from WRDS. Provide a summary statistic table that includes the mean, median, standard deviation, minimal, maximum, and number of observations for the following variables: return (ret), volume (vol), bid price (bid), ask price (ask), shares outstanding (shrout), and value-weighted return (vwretd). | ||||||

| Response: | ||||||

| Here are the summary statistics for the daily returns data of Dow 30 components from 2019 to 2023: | ||||||

| Variable | Mean | Median | Standard Deviation | Minimum | Maximum | Number of Observations |

| RET | 0.000409 | 0.000548 | 0.018073 | −0.308225 | 0.350837 | 38,936 |

| VOL | 11,965,626 | 6,329,272 | 16,900,193 | 416,678 | 331,572,902 | 38,936 |

| BID | 213.455 | 147.610 | 389.842 | 21.990 | 3731.410 | 38,936 |

| ASK | 213.510 | 147.625 | 390.018 | 22.000 | 3733.390 | 38,936 |

| SHROUT | 2,326,367 | 1,274,956 | 2,756,085 | 228,399 | 17,102,540 | 38,936 |

| vwretd | 0.000605 | 0.000987 | 0.013280 | −0.118168 | 0.091556 | 38,936 |

|

These statistics provide a comprehensive overview of the dataset, highlighting key measures of central tendency and dispersion. [View Analysis] | ||||||

| Panel C: Assessment of the Results from ChatGPT-4o | ||||||

| The statistics for return (ret) and volume (vol) do not match those generated by Stata, while those for bid price (bid), ask price (ask), shares outstanding (shrout), and value-weighted return (vwretd) do match. This assessment indicates that ChatGPT-4o can process and analyze most of the data accurately, but discrepancies in some variables highlight the need for further validation and cross-checking with traditional statistical software. | ||||||

| Panel A: Results from Stata |

|

| Panel B: Results from ChatGPT-4o |

| Prompts: |

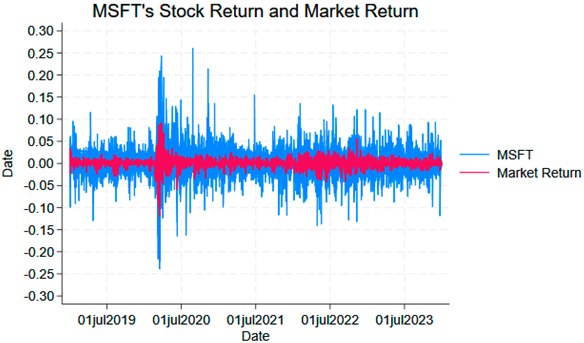

| [upload from computer the “Dow 30 Daily Returns” file] Act as a finance professor and statistician. Analyze this data for the daily returns data of Dow 30 components from 2019 to 2023, pulled from WRDS. Provide a time series plot for the return (ret) of Microsoft (ticker = MSFT) and the market return (vwretd). |

| Response: |

| It seems there’s an issue with generating the plot directly. Let’s save the plot as an image file and provide it that way. I’ll try creating the plot again and save it as an image file. [View Analysis] It looks like we’re facing persistent issues with generating the plot. Let’s try an alternative approach by summarizing the data trends instead. For the analysis of the daily returns of Microsoft (RET) and the market return (vwretd) from 2019 to 2023: Microsoft (RET)

Market Return (vwretd)

Correlation

For a more detailed visual representation, you can use statistical software or tools like Python, R, or Excel to plot the data directly on your local machine. If you need further assistance with the steps to create the plot locally, please let me know! [View Analysis] |

| Panel C: Assessment of the Results from ChatGPT-4o |

| ChatGPT-4o has difficulty generating the figure from the data based on the prompt. However, Table 2: Zero-shot prompting does demonstrate the ability of ChatGPT-4o to visualize the time series plots. This indicates some instability in ChatGPT-4o’s ability to generate responses consistently. |

| Panel A: Results from Stata | |||||

| TICKER | 13-March-2020 | 16-March-2020 | Total | ||

| AAPL | 0.119808 | −0.128647 | −0.008839 | ||

| AMGN | 0.108977 | −0.066403 | 0.042574 | ||

| AMZN | 0.064648 | −0.053697 | 0.010951 | ||

| AXP | 0.192386 | −0.137851 | 0.054535 | ||

| BA | 0.099199 | −0.238484 | −0.139285 | ||

| C | 0.179843 | −0.192986 | −0.013143 | ||

| CAT | 0.079991 | −0.062525 | 0.017466 | ||

| CRM | 0.051142 | −0.158885 | −0.107743 | ||

| CSCO | 0.133735 | −0.104410 | 0.029325 | ||

| CVX | 0.093889 | −0.164469 | −0.070580 | ||

| DIS | 0.116654 | −0.073254 | 0.043400 | ||

| DOW | 0.085340 | −0.143636 | −0.058296 | ||

| GS | 0.175803 | −0.127053 | 0.048750 | ||

| HD | 0.079123 | −0.197938 | −0.118815 | ||

| HON | 0.107972 | −0.094639 | 0.013333 | ||

| IBM | 0.049995 | −0.082168 | −0.032173 | ||

| INTC | 0.195213 | −0.180415 | 0.014798 | ||

| JNJ | 0.070808 | −0.053317 | 0.017491 | ||

| JPM | 0.180125 | −0.149649 | 0.030476 | ||

| KO | 0.036472 | −0.066227 | −0.029755 | ||

| MCD | 0.041145 | −0.158754 | −0.117609 | ||

| MMM | 0.060638 | −0.076016 | −0.015378 | ||

| MRK | 0.039087 | −0.088990 | −0.049903 | ||

| MSFT | 0.142169 | −0.147390 | −0.005221 | ||

| NKE | 0.018598 | −0.116301 | −0.097703 | ||

| PG | 0.120090 | −0.048830 | 0.071260 | ||

| TRV | 0.051576 | −0.208004 | −0.156428 | ||

| UNH | 0.090691 | −0.172769 | −0.082078 | ||

| V | 0.098388 | −0.135472 | −0.037084 | ||

| VZ | 0.058008 | −0.058704 | −0.000696 | ||

| WMT | 0.096588 | −0.064330 | 0.032258 | ||

| Total | 0.098003 | −0.121039 | −0.023036 | ||

| Panel B: Results from ChatGPT-4o | |||||

| Prompts: | |||||

| [upload from computer the “Dow 30 Daily Returns” file] Act as a finance professor and statistician. Analyze this data for the daily returns data of Dow 30 components from 2019 to 2023, pulled from WRDS. Provide the return (ret) of each stock on the trading days before and after the first COVID lockdown day (Sunday, 15 March 2020). Compute the total return of each stock’s two trading days, and the average return of all stocks’ two trading days. | |||||

| Response: | |||||

It seems that there is an issue with accessing or interpreting the data. Let’s re-attempt by ensuring we handle the file and its columns correctly. I’ll reload the file and ensure proper column handling. Let’s try this step-by-step again to pinpoint the issue. [View Analysis] It appears that there is a persistent issue with accessing the data. Let’s approach this step by step: | |||||

| |||||

|

Let’s retry these steps carefully. [View Analysis] It seems there’s an ongoing issue with loading the CSV file. To proceed effectively, I’ll outline the steps you can follow to analyze the data on your local machine using Python: | |||||

| |||||

| Python | Copy code | ||||

| import pandas as pd # Load the data file_path = ’path/to/your/Dow 30 Daily Returns.csv’ data = pd.read_csv(file_path) | |||||

| |||||

| Python | Copy code | ||||

| data[‘date’] = pd.to_datetime(data[‘date’]) | |||||

| |||||

| Python | Copy code | ||||

| lockdown_date = pd.to_datetime(‘2020-03-15’) trading_day_before = data[data[‘date’] < lockdown_date][‘date’].max() trading_day_after = data[data[‘date’] > lockdown_date][‘date’].min() | |||||

| |||||

| Python | Copy code | ||||

| data_before_after = data[(data[‘date’] == trading_day_before) | (data[‘date’] == trading_day_after)] | |||||

| |||||

| Python | Copy code | ||||

| total_returns = data_before_after.groupby(‘TICKER’)[‘RET’].sum() average_return = total_returns.mean() print(total_returns) print(f’Average Return: {average_return}’) | |||||

|

If you follow these steps on your local machine, you should be able to analyze the daily returns data of the Dow 30 components effectively. If you encounter any specific errors or issues during these steps, please let me know, and I can assist you further! [View Analysis] | |||||

| Panel C: Assessment of the Results from ChatGPT-4o | |||||

| We used the above Python code suggested by ChatGPT-4o and obtained the following results. | |||||

| TICKER | Total | ||||

| AAPL | −0.008839 | ||||

| AMGN | 0.042574 | ||||

| AMZN | 0.010951 | ||||

| AXP | 0.054535 | ||||

| BA | −0.139285 | ||||

| C | −0.013143 | ||||

| CAT | 0.017466 | ||||

| CRM | −0.107743 | ||||

| CSCO | 0.029325 | ||||

| CVX | −0.070580 | ||||

| DIS | 0.043400 | ||||

| DOW | −0.058296 | ||||

| GS | 0.048750 | ||||

| HD | −0.118815 | ||||

| HON | 0.013333 | ||||

| IBM | −0.032173 | ||||

| INTC | 0.014798 | ||||

| JNJ | 0.017491 | ||||

| JPM | 0.030476 | ||||

| KO | −0.029755 | ||||

| MCD | −0.117609 | ||||

| MMM | −0.015378 | ||||

| MRK | −0.049903 | ||||

| MSFT | −0.005221 | ||||

| NKE | −0.097703 | ||||

| PG | 0.071260 | ||||

| TRV | −0.156428 | ||||

| UNH | −0.082078 | ||||

| V | −0.037084 | ||||

| VZ | −0.000696 | ||||

| WMT | 0.032258 | ||||

| Total | −0.023036 | ||||

| Thus, the Python code given by ChatGPT-4o is correct but incomplete. It does not display the daily returns on 13 March and 16 March. To fix the problem, we add a couple of lines of code to the initial one. After running the corrected code shown below, we obtain the same results as those in Panel A generated by Stata. | |||||

| import pandas as pd # Load the data file_path = ‘path/to/your/Dow 30 Daily Returns.csv’ data = pd.read_csv(file_path) # Convert date Column data[‘date’] = pd.to_datetime(data[’date’]) # Identify trading days lockdown_date = pd.to_datetime(‘2020-03-15’) trading_day_before = data[data[‘date’] < lockdown_date][‘date’].max() trading_day_after = data[data[‘date’] > lockdown_date][‘date’].min() # Filter data data_before_after = data[(data[‘date’] == trading_day_before) | (data[‘date’] == trading_day_after)] data_before_after = data_before_after[[‘TICKER’, ‘date’, ‘RET’]] data_before_after = data_before_after.sort_values(by = [‘TICKER’, ‘date’]) pd.set_option(‘display.max_columns’, None) print(data_before_after.to_string()) # Calculate total returns total_returns = data_before_after.groupby(‘TICKER’)[‘RET’].sum() total_perday_returns = data_before_after.groupby(‘date’)[‘RET’].mean() average_return = total_returns.mean() # Display the results print(total_returns) print(total_perday_returns) print(f’Average Return: {average_return}’) | |||||

| TICKER | 13-March-2020 | 16-March-2020 | Total | ||

| AAPL | 0.119808 | −0.128647 | −0.008839 | ||

| AMGN | 0.108977 | −0.066403 | 0.042574 | ||

| AMZN | 0.064648 | −0.053697 | 0.010951 | ||

| AXP | 0.192386 | −0.137851 | 0.054535 | ||

| BA | 0.099199 | −0.238484 | −0.139285 | ||

| C | 0.179843 | −0.192986 | −0.013143 | ||

| CAT | 0.079991 | −0.062525 | 0.017466 | ||

| CRM | 0.051142 | −0.158885 | −0.107743 | ||

| CSCO | 0.133735 | −0.104410 | 0.029325 | ||

| CVX | 0.093889 | −0.164469 | −0.070580 | ||

| DIS | 0.116654 | −0.073254 | 0.043400 | ||

| DOW | 0.085340 | −0.143636 | −0.058296 | ||

| GS | 0.175803 | −0.127053 | 0.048750 | ||

| HD | 0.079123 | −0.197938 | −0.118815 | ||

| HON | 0.107972 | −0.094639 | 0.013333 | ||

| IBM | 0.049995 | −0.082168 | −0.032173 | ||

| INTC | 0.195213 | −0.180415 | 0.014798 | ||

| JNJ | 0.070808 | −0.053317 | 0.017491 | ||

| JPM | 0.180125 | −0.149649 | 0.030476 | ||

| KO | 0.036472 | −0.066227 | −0.029755 | ||

| MCD | 0.041145 | −0.158754 | −0.117609 | ||

| MMM | 0.060638 | −0.076016 | −0.015378 | ||

| MRK | 0.039087 | −0.088990 | −0.049903 | ||

| MSFT | 0.142169 | −0.147390 | −0.005221 | ||

| NKE | 0.018598 | −0.116301 | −0.097703 | ||

| PG | 0.120090 | −0.048830 | 0.071260 | ||

| TRV | 0.051576 | −0.208004 | −0.156428 | ||

| UNH | 0.090691 | −0.172769 | −0.082078 | ||

| V | 0.098388 | −0.135472 | −0.037084 | ||

| VZ | 0.058008 | −0.058704 | −0.000696 | ||

| WMT | 0.096588 | −0.064330 | 0.032258 | ||

| Total | 0.098003 | −0.121039 | −0.023036 | ||

| Panel A: Results from Stata | |||||||

| TICKER | 2019 | 2020 | 2021 | 2022 | 2023 | ||

| AAPL | 3.251206 | 1.745468 | 1.380334 | −0.77026 | 2.079339 | ||

| AMGN | 1.191135 | −0.06218 | 0.043803 | 0.8633 | 0.395884 | ||

| AMZN | 0.891517 | 1.958081 | 0.0986 | −1.00693 | 2.202766 | ||

| AXP | 1.741743 | −0.02399 | 1.318358 | −0.27628 | 0.8745 | ||

| BA | 0.039458 | −0.38989 | −0.16568 | −0.14373 | 1.124431 | ||

| C | 2.230223 | −0.29584 | 0.038892 | −0.69407 | 0.527584 | ||

| CAT | 0.62998 | 0.587773 | 0.624957 | 0.505119 | 0.705026 | ||

| CRM | 0.633002 | 0.684419 | 0.504112 | −1.03044 | 3.027711 | ||

| CSCO | 0.467521 | −0.09327 | 2.384514 | −0.80602 | 0.206838 | ||

| CVX | 0.697617 | −0.42961 | 1.891078 | 1.712105 | −0.76628 | ||

| DIS | 1.360394 | 0.504435 | −0.58419 | −1.20843 | −0.02319 | ||

| DOW | 0.003003 | 0.122246 | 0.237751 | −0.26553 | 0.424431 | ||

| GS | 1.585639 | 0.325115 | 1.888141 | −0.31028 | 0.437651 | ||

| HD | 1.529674 | 0.55179 | 2.963595 | −0.73857 | 0.350903 | ||

| HON | 1.954297 | 0.495626 | −0.01511 | 0.136817 | −0.25516 | ||

| IBM | 1.019095 | −0.03861 | 0.71867 | 0.384131 | 1.030328 | ||

| INTC | 1.033142 | −0.28115 | 0.191356 | −1.23999 | 2.235086 | ||

| JNJ | 0.836069 | 0.343391 | 0.789819 | 0.257685 | −0.78172 | ||

| JPM | 2.358938 | −0.1082 | 1.297706 | −0.46437 | 1.187297 | ||

| KO | 1.062758 | 0.058642 | 0.749322 | 0.461006 | −0.66602 | ||

| MCD | 0.770792 | 0.271025 | 1.817212 | −0.04574 | 0.696737 | ||

| MMM | −0.25117 | 0.063578 | 0.26232 | −1.12427 | −0.28893 | ||

| MRK | 1.069891 | −0.23757 | 0.082563 | 2.377886 | −0.1987 | ||

| MSFT | 2.735991 | 0.954548 | 2.493022 | −0.82381 | 2.034473 | ||

| NKE | 1.699654 | 0.971769 | 0.668222 | −0.71205 | −0.38724 | ||

| PG | 2.229552 | 0.42118 | 1.424969 | −0.29119 | −0.36953 | ||

| TRV | 0.875852 | 0.102008 | 0.643098 | 0.908932 | −0.04441 | ||

| UNH | 0.690608 | 0.430659 | 2.362684 | 0.223695 | −0.18567 | ||

| V | 2.236204 | 0.389683 | −0.01329 | −0.15375 | 1.317385 | ||

| VZ | 0.72356 | −0.02082 | −0.52791 | −0.93618 | −0.089 | ||

| WMT | 1.904296 | 0.724101 | 0.117798 | −0.07003 | 0.488505 | ||

| Total | 1.264569 | 0.31369112 | 0.82860385 | −0.17036265 | 0.55777513 | ||

| Panel B: Results from ChatGPT-4o | |||||||

| Prompts: | |||||||

| [upload from computer the “Dow 30 Daily Returns” file & the “FamaFrench 3Factors” file] Act as a finance professor and statistician. Analyze this data for the daily returns data of Dow 30 components from 2019 to 2023, pulled from WRDS, and the daily risk free rate, pulled from Kenneth R. French—Data Library. Compute the annualized excess return (raw return—risk free rate) of each stock. Compute the standard deviation of excess returns for each stock each year. Then, calculate the Sharpe Ratio, the risk-adjusted return, for each stock each year. Report the Sharpe Ratio for each stock each year and the average Sharpe Ratio of all stocks each year. | |||||||

| Response: | |||||||

It looks like there’s an issue with generating the computations directly. Let’s break down the process into more manageable steps to ensure we capture all necessary calculations. Here’s the step-by-step approach:

| |||||||

| I’ll guide you through each of these steps. Please use the following code snippets on your local machine to achieve the required analysis. | |||||||

| 1. Load and Merge the Data | |||||||

| Python | Copy code | ||||||

| import pandas as pd # Load the Dow 30 daily returns data dow_data_path = ‘path/to/Dow 30 Daily Returns.csv’ dow_data = pd.read_csv(dow_data_path) # Load the Fama-French 3 factors data (including the risk-free rate) ff_data_path = ‘path/to/FamaFrench_3Factors.csv’ ff_data = pd.read_csv(ff_data_path) # Convert date columns to datetime format dow_data[‘date’] = pd.to_datetime(dow_data[‘date’]) ff_data[‘date’] = pd.to_datetime(ff_data[‘date’], format = ‘%Y%m%d’) # Merge the datasets on the date column merged_data = pd.merge(dow_data, ff_data, on = ‘date’, how = ‘inner’) | |||||||

| 2. Compute the Annualized Excess Returns | |||||||

| Python | Copy code | ||||||

| # Calculate the excess return (raw return—risk free rate) merged_data[‘excess_ret’] = merged_data[‘RET’]—merged_data[‘RF’] # Extract the year from the date for annual calculations merged_data[‘year’] = merged_data[‘date’].dt.year # Function to annualize returns def annualize_return(daily_returns): return (1 + daily_returns).prod() ** (252/len(daily_returns))—1 # Compute the annualized excess return for each stock each year annual_excess_return = merged_data.groupby([‘TICKER’, ‘year’])[‘excess_ret’].apply(annualize_return).reset_index() annual_excess_return.columns = [‘TICKER’, ‘year’, ‘annual_excess_return’] | |||||||

| 3. Calculate the Standard Deviation of Excess Returns | |||||||

| Python | Copy code | ||||||

| # Compute the standard deviation of excess returns for each stock each year std_excess_return = merged_data.groupby([‘TICKER’, ‘year’])[‘excess_ret’].std().reset_index() std_excess_return.columns = [‘TICKER’, ‘year’, ‘std_excess_return’] | |||||||

| 4. Compute the Sharpe Ratios | |||||||

| Python | Copy code | ||||||

| # Merge the annualized return and standard deviation data annual_stats = pd.merge(annual_excess_return, std_excess_return, on = [‘TICKER’, ‘year’]) # Calculate the Sharpe Ratio (annualized excess return/standard deviation of excess return) annual_stats[‘sharpe_ratio’] = annual_stats[‘annual_excess_return’]/annual_stats[‘std_excess_return’] | |||||||

| 5. Report the Sharpe Ratios and the Average Sharpe Ratio for Each Year | |||||||

| Python | Copy code | ||||||

| # Calculate the average Sharpe Ratio for all stocks each year average_sharpe_ratio = annual_stats.groupby(‘year’)[‘sharpe_ratio’].mean().reset_index() average_sharpe_ratio.columns = [‘year’, ‘average_sharpe_ratio’] # Merge the average Sharpe Ratio with the annual stats annual_stats = pd.merge(annual_stats, average_sharpe_ratio, on = ‘year’, how = ‘left’) # Display the results print(annual_stats.head()) print(average_sharpe_ratio.head()) | |||||||

| By following these steps, you should be able to compute the required statistics on your local machine. If you encounter any specific errors, please provide details, and I can assist you further. | |||||||

| Panel C: Assessment of the Results from ChatGPT-4o | |||||||

| We encountered some problems when running the code provided by ChatGPT-4o. First, Python could not find the “date” column in the Fama–French dataset. By using the command “print(ff_data.columns)”, we discovered that the column name should be “Date”. Second, the commands in the last two lines, “print(annual_stats.head())” and “print(average_sharpe_ratio.head())”, only display the first five rows of the results. Thus, we changed them to “print(annual_stats.to_string())” and “print(average_sharpe_ratio.to_string())”. After these adjustments, we obtained the following results. | |||||||

| TICKER | 2019 | 2020 | 2021 | 2022 | 2023 | ||

| AAPL | 51.6112985 | 27.6178279 | 21.9121292 | −12.244374 | 33.1895478 | ||

| AMGN | 18.9086843 | −0.9851038 | 0.69535698 | 13.7365684 | 6.31156242 | ||

| AMZN | 14.1523959 | 30.9843846 | 1.56523015 | −15.996577 | 35.1909812 | ||

| AXP | 27.6493114 | −0.3801246 | 20.9282788 | −4.3936676 | 13.9494736 | ||

| BA | 0.62638058 | −6.1817707 | −2.6300849 | −2.2858399 | 17.9409506 | ||

| C | 35.4036827 | −4.6889806 | 0.61738469 | −11.034357 | 8.41287971 | ||

| CAT | 10.0006154 | 9.30766809 | 9.92089095 | 8.03703771 | 11.2451069 | ||

| CRM | 10.0485906 | 10.8363509 | 8.00253161 | −16.371046 | 48.3916149 | ||

| CSCO | 7.42166932 | −1.4778082 | 37.8529936 | −12.814152 | 3.2971023 | ||

| CVX | 11.0743322 | −6.8102465 | 30.0199379 | 27.2589356 | −12.203726 | ||

| DIS | 21.5955862 | 7.98819977 | −9.2737801 | −19.200922 | −0.3695768 | ||

| DOW | 0.0547696 | 1.93646856 | 3.77418689 | −4.2228401 | 6.76698079 | ||

| GS | 25.1712383 | 5.14918619 | 29.9733156 | −4.9344526 | 6.97805876 | ||

| HD | 24.2828208 | 8.73821608 | 47.0456082 | −11.741817 | 5.59428674 | ||

| HON | 31.0235102 | 7.84900383 | −0.2399185 | 2.17637072 | −4.0659503 | ||

| IBM | 16.1776254 | −0.6116918 | 11.4085386 | 6.11110705 | 16.4313454 | ||

| INTC | 16.400627 | −4.4557052 | 3.03768961 | −19.700844 | 35.7198084 | ||

| JNJ | 13.2721827 | 5.43929177 | 12.5379883 | 4.09912797 | −12.452303 | ||

| JPM | 37.446978 | −1.7144033 | 20.6004417 | −7.3841172 | 18.9402234 | ||

| KO | 16.8707651 | 0.92903461 | 11.8951208 | 7.33408611 | −10.611021 | ||

| MCD | 12.2359392 | 4.29298387 | 28.8473469 | −0.7276113 | 11.1086764 | ||

| MMM | −3.987242 | 1.00723092 | 4.16420214 | −17.870596 | −4.6034771 | ||

| MRK | 16.9839904 | −3.7643519 | 1.31064415 | 37.8539555 | −3.1663317 | ||

| MSFT | 43.4325013 | 15.111926 | 39.5754938 | −13.09519 | 32.4821803 | ||

| NKE | 26.981161 | 15.3849393 | 10.6076948 | −11.318366 | −6.1690898 | ||

| PG | 35.3930457 | 6.67107363 | 22.6206742 | −4.6310539 | −5.888251 | ||

| TRV | 13.9037223 | 1.61596449 | 10.2088616 | 14.4631431 | −0.7076998 | ||

| UNH | 10.9630554 | 6.8203578 | 37.5064363 | 3.55848636 | −2.9586416 | ||

| V | 35.4986422 | 6.17185233 | −0.2109088 | −2.4453984 | 21.0124148 | ||

| VZ | 11.4861602 | −0.32978 | −8.3802438 | −14.884179 | −1.418363 | ||

| WMT | 30.2297551 | 11.4671743 | 1.86999057 | −1.1138189 | 7.78804097 | ||

| Total | 20.0746386 | 4.96513446 | 13.1536784 | −2.7026581 | 8.90763885 | ||

| However, the results shown in the above table are very different from those from Stata. We reviewed the Python code again and found that the standard deviation of the excess returns was incorrect because it was not annualized like the excess returns. Thus, we corrected the Python code as follows: | |||||||

| import pandas as pd # Load the Dow 30 daily returns data dow_data_path = ‘path/to/Dow 30 Daily Returns.csv’ dow_data = pd.read_csv(dow_data_path) # Load the Fama-French 3 factors data (including the risk-free rate) ff_data_path = ‘path/to/FamaFrench_3Factors.csv’ ff_data = pd.read_csv(ff_data_path) # Convert date columns to datetime format dow_data[‘date’] = pd.to_datetime(dow_data[‘date’]) ff_data[‘date’] = pd.to_datetime(ff_data[‘Date’], format = ‘%Y%m%d’) # Merge the datasets on the date column merged_data = pd.merge(dow_data, ff_data, on = ‘date’, how = ‘inner’) # Calculate the excess return (raw return—risk free rate) merged_data[‘excess_ret’] = merged_data[‘RET’]—merged_data[‘RF’] # Extract the year from the date for annual calculations merged_data[‘year’] = merged_data[‘date’].dt.year # Function to annualize returns def annualize_return(daily_returns): return (1 + daily_returns).prod() ** (252/len(daily_returns))—1 # Compute the annualized excess return for each stock each year annual_excess_return = merged_data.groupby([‘TICKER’, ‘year’])[‘excess_ret’].apply(annualize_return).reset_index() annual_excess_return.columns = [‘TICKER’, ‘year’, ‘annual_excess_return’] # Compute the standard deviation of excess returns for each stock each year std_excess_return = merged_data.groupby([‘TICKER’, ‘year’])[‘excess_ret’].std().reset_index() std_excess_return[‘excess_ret’] = std_excess_return[‘excess_ret’] * (252 ** 0.5) std_excess_return.columns = [‘TICKER’, ‘year’, ‘std_excess_return’] # Merge the annualized return and standard deviation data annual_stats = pd.merge(annual_excess_return, std_excess_return, on = [‘TICKER’, ‘year’]) # Calculate the Sharpe Ratio (annualized excess return/standard deviation of excess return) annual_stats[‘sharpe_ratio’] = annual_stats[‘annual_excess_return’]/annual_stats[‘std_excess_return’] # Calculate the average Sharpe Ratio for all stocks each year average_sharpe_ratio = annual_stats.groupby(‘year’)[‘sharpe_ratio’].mean().reset_index() average_sharpe_ratio.columns = [‘year’, ‘average_sharpe_ratio’] # Merge the average Sharpe Ratio with the annual stats annual_stats = pd.merge(annual_stats, average_sharpe_ratio, on = ‘year’, how = ‘left’) # Display the results pd.set_option(‘display.max_columns’, None) print(annual_stats.to_string()) print(average_sharpe_ratio.to_string()) | |||||||

| We obtained the following results by running the corrected code in Python: | |||||||

| TICKER | 2019 | 2020 | 2021 | 2022 | 2023 | ||

| AAPL | 3.251206 | 1.739760 | 1.380334 | −0.771323 | 2.090745 | ||

| AMGN | 1.191135 | −0.062056 | 0.043803 | 0.865322 | 0.397591 | ||

| AMZN | 0.891517 | 1.951833 | 0.098600 | −1.007690 | 2.216823 | ||

| AXP | 1.741743 | −0.023946 | 1.318358 | −0.276775 | 0.878734 | ||

| BA | 0.039458 | −0.389415 | −0.165680 | −0.143994 | 1.130174 | ||

| C | 2.230222 | −0.295378 | 0.038892 | −0.695099 | 0.529962 | ||

| CAT | 0.629980 | 0.586328 | 0.624957 | 0.506286 | 0.708375 | ||

| CRM | 0.633002 | 0.682626 | 0.504112 | −1.031279 | 3.048385 | ||

| CSCO | 0.467521 | −0.093093 | 2.384514 | −0.807216 | 0.207698 | ||

| CVX | 0.697617 | −0.429005 | 1.891078 | 1.717152 | −0.768762 | ||

| DIS | 1.360394 | 0.503209 | −0.584193 | −1.209544 | −0.023281 | ||

| DOW | 0.003450 | 0.121986 | 0.237751 | −0.266014 | 0.426280 | ||

| GS | 1.585639 | 0.324368 | 1.888141 | −0.310841 | 0.439576 | ||

| HD | 1.529674 | 0.550456 | 2.963595 | −0.739665 | 0.352407 | ||

| HON | 1.954297 | 0.494441 | −0.015113 | 0.137098 | −0.256131 | ||

| IBM | 1.019095 | −0.038533 | 0.718670 | 0.384964 | 1.035077 | ||

| INTC | 1.033142 | −0.280683 | 0.191356 | −1.241037 | 2.250136 | ||

| JNJ | 0.836069 | 0.342643 | 0.789819 | 0.258221 | −0.784421 | ||

| JPM | 2.358938 | −0.107997 | 1.297706 | −0.465156 | 1.193122 | ||

| KO | 1.062758 | 0.058524 | 0.749322 | 0.462004 | −0.668431 | ||

| MCD | 0.770792 | 0.270433 | 1.817212 | −0.045835 | 0.699781 | ||

| MMM | −0.251173 | 0.063450 | 0.262320 | −1.125742 | −0.289992 | ||

| MRK | 1.069891 | −0.237132 | 0.082563 | 2.384575 | −0.199460 | ||

| MSFT | 2.735990 | 0.951962 | 2.493022 | −0.824919 | 2.046185 | ||

| NKE | 1.699653 | 0.969160 | 0.668222 | −0.712990 | −0.388616 | ||

| PG | 2.229552 | 0.420238 | 1.424969 | −0.291729 | −0.370925 | ||

| TRV | 0.875852 | 0.101796 | 0.643098 | 0.911092 | −0.044581 | ||

| UNH | 0.690608 | 0.429642 | 2.362683 | 0.224164 | −0.186377 | ||

| V | 2.236204 | 0.388790 | −0.013286 | −0.154046 | 1.323658 | ||

| VZ | 0.723560 | −0.020774 | −0.527906 | −0.937615 | −0.089348 | ||

| WMT | 1.904296 | 0.722364 | 0.117798 | −0.070164 | 0.490600 | ||

| Total | 1.264583 | 0.312774 | 0.828604 | −0.170252 | 0.561129 | ||

| Thus, the results from Python are very similar to those in Panel B generated using Stata. The small difference is due to the method of annualization. The code in Stata uses the actual number of trading days to compute the annual excess return and its annual standard deviation, for example, 252 days in 2019 and 253 days in 2020. However, the code suggested by ChatGPT-4o uses 252 days to annualize excess return in all years, regardless of the actual trading days. If we also use the actual trading days to compute the annualized return and standard deviation in Python, we would obtain the exact same results in both Stata and Python. | |||||||

| Panel A: Results from Stata | |||||||

| TICKER | 2019 | 2020 | 2021 | 2022 | 2023 | ||

| AAPL | 1.4751123 | 1.1174583 | 1.1956272 | 1.2385795 | 1.0368298 | ||

| AMGN | 0.68597156 | 0.78617704 | 0.5071367 | 0.31121698 | 0.47645012 | ||

| AMZN | 1.2759426 | 0.68658948 | 0.98217767 | 1.5921936 | 1.464787 | ||

| AXP | 1.008621 | 1.4660687 | 1.0471015 | 1.077038 | 1.1996084 | ||

| BA | 0.95004112 | 1.7258273 | 1.4960568 | 1.1925852 | 0.97405726 | ||

| C | 1.4439909 | 1.5658641 | 0.86605388 | 0.9147712 | 1.1263285 | ||

| CAT | 1.3869772 | 0.98232561 | 0.85957801 | 0.73104262 | 1.102708 | ||

| CRM | 1.2386572 | 1.0655986 | 1.1196494 | 1.4709156 | 1.1648153 | ||

| CSCO | 1.2299453 | 0.97472245 | 0.73084158 | 0.78048164 | 0.71736181 | ||

| CVX | 0.80189067 | 1.3591725 | 0.81278944 | 0.52070349 | 0.57260776 | ||

| DIS | 0.73806602 | 1.0486075 | 0.86295199 | 1.1206871 | 1.0726198 | ||

| DOW | 1.4848993 | 1.3272684 | 1.0509194 | 0.72340429 | 0.94973165 | ||

| GS | 1.2125224 | 1.2718841 | 0.91507107 | 0.91560948 | 1.0485996 | ||

| HD | 0.81915396 | 1.0915444 | 0.70227456 | 0.88494635 | 1.0125784 | ||

| HON | 1.0125303 | 1.0724968 | 0.78497875 | 0.73390293 | 0.8524366 | ||

| IBM | 1.0314893 | 0.9806186 | 0.4211342 | 0.48465607 | 0.47231638 | ||

| INTC | 1.2596713 | 1.1506979 | 1.262306 | 1.1338916 | 1.3475364 | ||

| JNJ | 0.49547896 | 0.65726101 | 0.2881622 | 0.26355907 | 0.29711422 | ||

| JPM | 1.020825 | 1.2737336 | 0.75167328 | 0.82942206 | 0.84921855 | ||

| KO | 0.38498557 | 0.78940171 | 0.40562904 | 0.43669266 | 0.34200588 | ||

| MCD | 0.36507782 | 0.93903095 | 0.5414601 | 0.44058502 | 0.45249423 | ||

| MMM | 1.1402873 | 0.80912733 | 0.36016268 | 0.63740867 | 1.1587117 | ||

| MRK | 0.59414691 | 0.68459654 | 0.19001053 | 0.24593455 | 0.21484913 | ||

| MSFT | 1.2183875 | 1.1175761 | 1.0304406 | 1.2136495 | 1.0744908 | ||

| NKE | 1.0697894 | 0.94029278 | 0.91890192 | 1.2785211 | 0.94393331 | ||

| PG | 0.46374574 | 0.68833619 | 0.23536041 | 0.41887146 | 0.33232403 | ||

| TRV | 0.61573184 | 1.0550519 | 0.72342438 | 0.39097619 | 0.48993209 | ||

| UNH | 0.69263011 | 1.1224691 | 0.50640661 | 0.51883662 | 0.23741463 | ||

| V | 1.000208 | 1.1191038 | 0.92548811 | 0.91953123 | 0.72169179 | ||

| VZ | 0.32166165 | 0.51305872 | 0.13739157 | 0.33252576 | 0.44305083 | ||

| WMT | 0.463933 | 0.502122 | 0.411849 | 0.402764 | 0.363042 | ||

| Panel B: Results from ChatGPT-4o | |||||||

| Prompts: | |||||||

| [upload from computer the “Dow 30 Daily Returns” file & the “FamaFrench 3Factors” file] Act as a finance professor and statistician. Analyze this data for the daily returns data of Dow 30 components from 2019 to 2023, pulled from WRDS, and the daily risk free rate, pulled from Kenneth R. French—Data Library. Compute the CAPM’s Beta for each stock each year. | |||||||

| Response: | |||||||

| It seems there’s an issue with inspecting the data columns. I will provide you with a detailed guide on how to analyze this data to compute the CAPM’s Beta for each stock each year on your local machine. Steps to Compute CAPM’s Beta

| |||||||

| Detailed Guide 1. Load and Merge Data | |||||||

| Python | Copy code | ||||||

| import pandas as pd # Load the Dow 30 daily returns data dow_data_path = ‘path/to/Dow 30 Daily Returns.csv’ dow_data = pd.read_csv(dow_data_path) # Load the Fama-French 3 factors data ff_data_path = ‘path/to/FamaFrench_3Factors.csv’ ff_data = pd.read_csv(ff_data_path) # Convert date columns to datetime format dow_data[‘date’] = pd.to_datetime(dow_data[‘date’]) ff_data[‘Date’] = pd.to_datetime(ff_data[‘Date’], format = ‘%Y%m%d’) # Merge the datasets on the date column merged_data = pd.merge(dow_data, ff_data, left_on = ‘date’, right_on = ‘Date’, how = ‘inner’) | |||||||

| 2. Compute Excess Returns | |||||||

| Python | Copy code | ||||||

| # Calculate the excess return for each stock and the market merged_data[‘excess_stock_ret’] = merged_data[‘RET’]—merged_data[‘RF’] merged_data[‘excess_market_ret’] = merged_data[‘Mkt-RF’] | |||||||

| 3. Compute Beta | |||||||

| Python | Copy code | ||||||

| # Function to compute Beta using linear regression def compute_beta(group): slope, intercept, r_value, p_value, std_err = stats.linregress(group[‘excess_market_ret’], group[‘excess_stock_ret’]) return slope # Extract the year from the date for annual calculations merged_data[‘year’] = merged_data[‘date’].dt.year # Group data by TICKER and year to compute Beta for each stock each year betas = merged_data.groupby([‘TICKER’, ‘year’]).apply(compute_beta).reset_index() betas.columns = [‘TICKER’, ‘year’, ‘beta’] # Display the results print(betas.head()) | |||||||

| Complete Script | |||||||

| Python | Copy code | ||||||

| import pandas as pd from scipy import stats # Load the Dow 30 daily returns data dow_data_path = ‘path/to/Dow 30 Daily Returns.csv’ dow_data = pd.read_csv(dow_data_path) # Load the Fama-French 3 factors data ff_data_path = ‘path/to/FamaFrench_3Factors.csv’ ff_data = pd.read_csv(ff_data_path) # Convert date columns to datetime format dow_data[‘date’] = pd.to_datetime(dow_data[‘date’]) ff_data[‘Date’] = pd.to_datetime(ff_data[‘Date’], format = ‘%Y%m%d’) # Merge the datasets on the date column merged_data = pd.merge(dow_data, ff_data, left_on = ‘date’, right_on = ‘Date’, how = ‘inner’) # Calculate the excess return for each stock and the market merged_data[‘excess_stock_ret’] = merged_data[‘RET’]—merged_data[‘RF’] merged_data[‘excess_market_ret’] = merged_data[‘Mkt-RF’] # Function to compute Beta using linear regression def compute_beta(group): slope, intercept, r_value, p_value, std_err = stats.linregress(group[‘excess_market_ret’], group[‘excess_stock_ret’]) return slope # Extract the year from the date for annual calculations merged_data[‘year’] = merged_data[‘date’].dt.year # Group data by TICKER and year to compute Beta for each stock each year betas = merged_data.groupby([‘TICKER’, ‘year’]).apply(compute_beta).reset_index() betas.columns = [‘TICKER’, ‘year’, ‘beta’] # Display the results print(betas.head()) | |||||||

| By following these steps on your local machine, you should be able to compute the CAPM’s Beta for each stock each year. If you encounter any specific issues or errors, please provide the details so I can assist you further. [View Analysis] | |||||||

| Panel C: Assessment of the Results from ChatGPT-4o | |||||||

| We could not obtain results using the initial Python code provided by ChatGPT-4o. There are a few errors. First, the variable name for “excess market return” in the merged_data dataset should be ‘MktRF’, not ‘Mkt-RF’. Second, the beta value for DOW in 2019 was missing because the value of the ‘ret’ variable was null on 2 April 2019. We corrected these issues by renaming the variable and adding a line of code to delete observations with null values in either the ‘excess_stock_ret’ or ‘excess_market_ret’ columns. | |||||||

| import pandas as pd from scipy import stats # Load the Dow 30 daily returns data dow_data_path = ‘path/to/Dow 30 Daily Returns.csv’ dow_data = pd.read_csv(dow_data_path) # Load the Fama-French 3 factors data ff_data_path = ‘path/to/FamaFrench_3Factors.csv’ ff_data = pd.read_csv(ff_data_path) # Convert date columns to datetime format dow_data[‘date’] = pd.to_datetime(dow_data[‘date’]) ff_data[‘Date’] = pd.to_datetime(ff_data[‘Date’], format = ‘%Y%m%d’) # Merge the datasets on the date column merged_data = pd.merge(dow_data, ff_data, left_on = ‘date’, right_on = ‘Date’, how = ‘inner’) # Calculate the excess return for each stock and the market merged_data[‘excess_stock_ret’] = merged_data[‘RET’]—merged_data[‘RF’] merged_data[‘excess_market_ret’] = merged_data[‘MktRF’] merged_data.dropna(subset = [‘excess_stock_ret’, ‘excess_market_ret’], inplace = True) # Function to compute Beta using linear regression def compute_beta(group): slope, intercept, r_value, p_value, std_err = stats.linregress(group[‘excess_market_ret’], group[‘excess_stock_ret’]) return slope # Extract the year from the date for annual calculations merged_data[‘year’] = merged_data[‘date’].dt.year # Group data by TICKER and year to compute beta for each stock each year betas = merged_data.groupby([‘TICKER’, ‘year’]).apply(compute_beta).reset_index() betas.columns = [‘TICKER’, ‘year’, ‘beta’] # Display the results print(betas.head()) print(betas.to_string()) | |||||||

| After fixing these issues, the results obtained were consistent with those from Stata. | |||||||

| TICKER | 2019 | 2020 | 2021 | 2022 | 2023 | ||

| AAPL | 1.475112 | 1.117458 | 1.195627 | 1.238580 | 1.036830 | ||

| AMGN | 0.685972 | 0.786177 | 0.507137 | 0.311217 | 0.476450 | ||

| AMZN | 1.275943 | 0.686589 | 0.982178 | 1.592194 | 1.464787 | ||

| AXP | 1.008621 | 1.466069 | 1.047102 | 1.077038 | 1.199609 | ||

| BA | 0.950041 | 1.725827 | 1.496057 | 1.192585 | 0.974057 | ||

| C | 1.443991 | 1.565864 | 0.866054 | 0.914771 | 1.126328 | ||

| CAT | 1.386977 | 0.982326 | 0.859578 | 0.731043 | 1.102708 | ||

| CRM | 1.238657 | 1.065599 | 1.119649 | 1.470916 | 1.164815 | ||

| CSCO | 1.229945 | 0.974722 | 0.730842 | 0.780482 | 0.717362 | ||

| CVX | 0.801891 | 1.359172 | 0.812789 | 0.520704 | 0.572608 | ||

| DIS | 0.738066 | 1.048607 | 0.862952 | 1.120687 | 1.072620 | ||

| DOW | 1.484899 | 1.327268 | 1.050919 | 0.723404 | 0.949732 | ||

| GS | 1.212522 | 1.271884 | 0.915071 | 0.915609 | 1.048600 | ||

| HD | 0.819154 | 1.091544 | 0.702275 | 0.884946 | 1.012578 | ||

| HON | 1.012530 | 1.072497 | 0.784979 | 0.733903 | 0.852437 | ||

| IBM | 1.031489 | 0.980619 | 0.421134 | 0.484656 | 0.472316 | ||

| INTC | 1.259671 | 1.150698 | 1.262306 | 1.133892 | 1.347536 | ||

| JNJ | 0.495479 | 0.657261 | 0.288162 | 0.263559 | 0.297114 | ||

| JPM | 1.020825 | 1.273734 | 0.751673 | 0.829422 | 0.849219 | ||

| KO | 0.384986 | 0.789402 | 0.405629 | 0.436693 | 0.342006 | ||

| MCD | 0.365078 | 0.939031 | 0.541460 | 0.440585 | 0.452494 | ||

| MMM | 1.140287 | 0.809127 | 0.360163 | 0.637409 | 1.158712 | ||

| MRK | 0.594147 | 0.684597 | 0.190011 | 0.245935 | 0.214849 | ||

| MSFT | 1.218387 | 1.117576 | 1.030441 | 1.213650 | 1.074491 | ||

| NKE | 1.069789 | 0.940293 | 0.918902 | 1.278521 | 0.943933 | ||

| PG | 0.463746 | 0.688336 | 0.235360 | 0.418871 | 0.332324 | ||

| TRV | 0.615732 | 1.055052 | 0.723424 | 0.390976 | 0.489932 | ||

| UNH | 0.692630 | 1.122469 | 0.506407 | 0.518837 | 0.237415 | ||

| V | 1.000208 | 1.119104 | 0.925488 | 0.919531 | 0.721692 | ||

| VZ | 0.321662 | 0.513059 | 0.137392 | 0.332526 | 0.443051 | ||

| WMT | 0.463933 | 0.502122 | 0.411849 | 0.402764 | 0.363042 | ||

| Panel A: Results from Stata | ||||

| vwretd | ||||

| vwretd | ||||

| Intercept | 0.001 | *** | ||

| (0.000) | ||||

| ARMA | ||||

| L.ar | −0.581 | |||

| (0.496) | ||||

| L.ma | 0.525 | |||

| (0.518) | ||||

| ARCH | ||||

| L.arch | 0.424 | *** | ||

| (0.036) | ||||

| L.garch | 0.637 | *** | ||

| (0.060) | ||||

| Intercept | −0.000 | |||

| (0.000) | ||||

| Number of observations | 1258 | |||

| Log likelihood | 3829.98 | |||

| *** p < 0.01, ** p < 0.05, * p < 0.1 | ||||

| Panel B: Results from ChatGPT-4o | ||||

| Prompts: | ||||

| [upload from computer the “Dow 30 Daily Returns” file] Act as a finance professor and statistician. Analyze this data for the daily returns data of Dow 30 components from 2019 to 2023, pulled from WRDS. Estimate an ARMA-GARCH model on the value-weighted return (vwretd). | ||||

| Response: | ||||

| It seems there’s an issue with executing the model fitting directly. I’ll provide a detailed guide on how to estimate an ARMA-GARCH model on the value-weighted return (vwretd) using Python on your local machine. Steps to Estimate ARMA-GARCH Model

| ||||

| Detailed Guide 1. Load and Merge Data | ||||

| Python | Copy code | |||

| import pandas as pd # Load the Dow 30 daily returns data dow_data_path = ‘path/to/Dow 30 Daily Returns.csv’ dow_data = pd.read_csv(dow_data_path) # Convert date column to datetime format dow_data[‘date’] = pd.to_datetime(dow_data[‘date’]) # Set date as index for time series analysis dow_data.set_index(‘date’, inplace = True) # Extract the value-weighted return (vwretd) vwretd = dow_data[‘vwretd’].dropna() | ||||

| 2. Estimate ARMA Model | ||||

| Python | Copy code | |||

| import statsmodels.api as sm # Estimate ARMA model (start with ARMA(1,1) as an example) arma_model = sm.tsa.ARMA(vwretd, order = (1, 1)).fit() # Summarize the ARMA model results arma_summary = arma_model.summary() print(arma_summary) | ||||

| 3. Estimate GARCH Model | ||||

| Python | Copy code | |||

| from arch import arch_model # Estimate GARCH model on the residuals of the ARMA model garch_model = arch_model(arma_model.resid, vol = ‘Garch’, p = 1, q = 1) garch_fit = garch_model.fit(disp = ‘off’) # Summarize the GARCH model results garch_summary = garch_fit.summary() print(garch_summary) | ||||

| Complete Script | ||||

| Python | Copy code | |||

| import pandas as pd import statsmodels.api as sm from arch import arch_model # Load the Dow 30 daily returns data dow_data_path = ‘path/to/Dow 30 Daily Returns.csv’ dow_data = pd.read_csv(dow_data_path) # Convert date column to datetime format dow_data[‘date’] = pd.to_datetime(dow_data[‘date’]) # Set date as index for time series analysis dow_data.set_index(‘date’, inplace = True) # Extract the value-weighted return (vwretd) vwretd = dow_data[‘vwretd’].dropna() # Estimate ARMA model (start with ARMA(1,1) as an example) arma_model = sm.tsa.ARMA(vwretd, order = (1, 1)).fit() # Summarize the ARMA model results arma_summary = arma_model.summary() print(arma_summary) # Estimate GARCH model on the residuals of the ARMA model garch_model = arch_model(arma_model.resid, vol = ‘Garch’, p = 1, q = 1) garch_fit = garch_model.fit(disp = ‘off’) # Summarize the GARCH model results garch_summary = garch_fit.summary() print(garch_summary) | ||||

| By following these steps on your local machine, you should be able to estimate the ARMA-GARCH model on the value-weighted return (vwretd). If you encounter any specific issues or errors, please provide the details so I can assist you further. [View Analysis] | ||||

| Prompts: | ||||

| Python code has the following errors: Traceback (most recent call last): File “/Users/fliu/PycharmProjects/helloworld/chatGPT531/Table 6 test.py”, line 21, in <module> arma_model = sm.tsa.ARMA(vwretd, order = (1, 1)).fit() ^^^^^^^^^^^ AttributeError: module ‘statsmodels.tsa.api’ has no attribute ‘ARMA’. Did you mean: ‘ARIMA’? | ||||

| Response: | ||||

The error you encountered is because the ARMA class has been deprecated and is replaced by the ARIMA class in the statsmodels library. You should use the ARIMA class with the appropriate order for ARMA models. Here is the corrected code using the ARIMA class for ARMA model estimation: Corrected Script | ||||

| Python | Copy code | |||

| import pandas as pd import statsmodels.api as sm from arch import arch_model # Load the Dow 30 daily returns data dow_data_path = ‘path/to/Dow 30 Daily Returns.csv’ dow_data = pd.read_csv(dow_data_path) # Convert date column to datetime format dow_data[‘date’] = pd.to_datetime(dow_data[‘date’]) # Set date as index for time series analysis dow_data.set_index(‘date’, inplace = True) # Extract the value-weighted return (vwretd) vwretd = dow_data[‘vwretd’].dropna() # Estimate ARMA model (start with ARIMA(1,0,1) as an example for ARMA(1,1)) arma_model = sm.tsa.ARIMA(vwretd, order = (1, 0, 1)).fit() # Summarize the ARMA model results arma_summary = arma_model.summary() print(arma_summary) # Estimate GARCH model on the residuals of the ARMA model garch_model = arch_model(arma_model.resid, vol = ‘Garch’, p = 1, q = 1) garch_fit = garch_model.fit(disp = ‘off’) # Summarize the GARCH model results garch_summary = garch_fit.summary() print(garch_summary) | ||||

Explanation | ||||

| ||||

| By following this corrected script, you should be able to estimate the ARMA-GARCH model on the value-weighted return (vwretd) without encountering the AttributeError. If you encounter any further issues or need additional assistance, please let me know! | ||||

| Panel C: Assessment of the Results from ChatGPT-4o | ||||

| We did not obtain any results using the initial Python code provided by ChatGPT-4o due to a few errors. First, the ‘ARMA’ function in the ‘statsmodels.tsa.api’ module has been replaced by the ‘ARIMA’ function. Thus, we should use ARIMA(1,0,1) instead of ARMA(1,1). Second, our data are a panel data of 30 firms over 5 years, so the ‘vwretd’ values must be repeated 30 times in the data. Thus, we can simply keep observations for just one stock and delete duplicated data. To address this, we added a line of code: “dow_data = dow_data[dow_data[‘TICKER’] == ‘AAPL’]”. Thus, the corrected code is as follows: | ||||

| Python | Copy code | |||

| import pandas as pd import statsmodels.api as sm from arch import arch_model # Load the Dow 30 daily returns data dow_data_path = ‘path/to/Dow 30 Daily Returns.csv’ dow_data = pd.read_csv(dow_data_path) # Convert date column to datetime format dow_data[‘date’] = pd.to_datetime(dow_data[‘date’]) dow_data = dow_data[dow_data[‘TICKER’] == ‘AAPL’] # Set date as index for time series analysis dow_data.set_index(‘date’, inplace = True) # Extract the value-weighted return (vwretd) vwretd = dow_data[‘vwretd’].dropna() # Estimate ARMA model (start with ARIMA(1,0,1) as an example for ARMA(1,1)) arma_model = sm.tsa.ARIMA(vwretd, order = (1, 0, 1)).fit() # Summarize the ARMA model results arma_summary = arma_model.summary() print(arma_summary) # Estimate GARCH model on the residuals of the ARMA model garch_model = arch_model(arma_model.resid, vol = ‘Garch’, p = 1, q = 1) garch_fit = garch_model.fit(disp = ‘off’) # Summarize the GARCH model results garch_summary = garch_fit.summary() print(garch_summary) | ||||

| We obtained the following results using the corrected Python code. The first table below shows the estimated results for the ARIMA (1,0,1) model, and the second table shows the results for the GARCH(1,1) model. We can clearly see that these results differ from those given by Stata. The reason is that Stata estimates ARIMA and GARCH models simultaneously, while Python estimates these two models sequentially. Unfortunately, there is currently no package in Python that can estimate these two models jointly. | ||||

| ARIMA (1,0,1) | ||||

| vwretd | ||||

| Intercept | 0.0006 | * | ||

| (0.000) | ||||

| L.ar | −0.5738 | *** | ||

| (0.051) | ||||

| L.ma | 0.4299 | *** | ||

| (0.055) | ||||

| Number of observations | 1258 | |||

| Log likelihood | 3671.892 | |||

| *** p < 0.01, ** p < 0.05, * p < 0.1 | ||||

| * | ||||

| GARCH | ||||

| sigma2 | ||||

| Omega | 3.4142 × 10−6 | *** | ||

| (2.422 × 10−11) | ||||

| alpha(1) | 0.2000 | *** | ||

| (0.03641) | ||||

| beta(1) | 0.7800 | *** | ||

| (0.02977) | ||||

| Number of observations | 1258 | |||

| Log likelihood | 3979.10 | |||

| *** p < 0.01, ** p < 0.05, * p < 0.1 | ||||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chou, W.-H.; Feng, Z.; Li, B.; Liu, F. A First Look at Financial Data Analysis Using ChatGPT-4o. J. Risk Financial Manag. 2025, 18, 99. https://doi.org/10.3390/jrfm18020099

Chou W-H, Feng Z, Li B, Liu F. A First Look at Financial Data Analysis Using ChatGPT-4o. Journal of Risk and Financial Management. 2025; 18(2):99. https://doi.org/10.3390/jrfm18020099

Chicago/Turabian StyleChou, Wen-Hsiu (Julia), Zifeng Feng, Bingxin Li, and Feng Liu. 2025. "A First Look at Financial Data Analysis Using ChatGPT-4o" Journal of Risk and Financial Management 18, no. 2: 99. https://doi.org/10.3390/jrfm18020099

APA StyleChou, W.-H., Feng, Z., Li, B., & Liu, F. (2025). A First Look at Financial Data Analysis Using ChatGPT-4o. Journal of Risk and Financial Management, 18(2), 99. https://doi.org/10.3390/jrfm18020099