DGX-A100 Face to Face DGX-2—Performance, Power and Thermal Behavior Evaluation

Abstract

1. Introduction

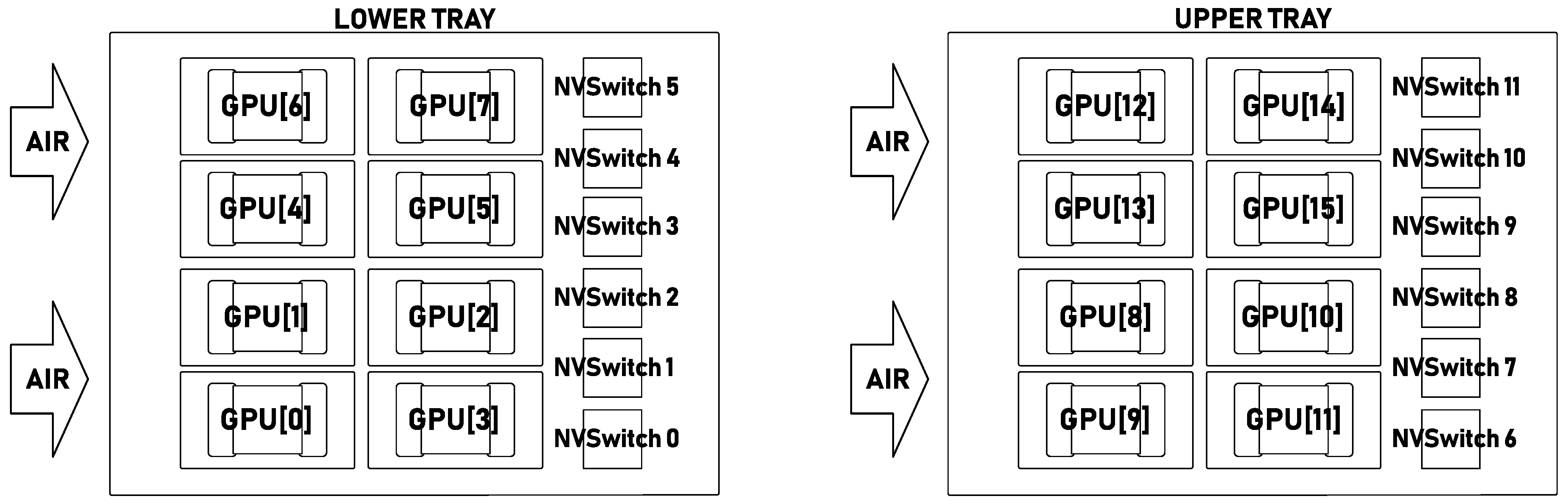

1.1. DGX-2 Platform Description

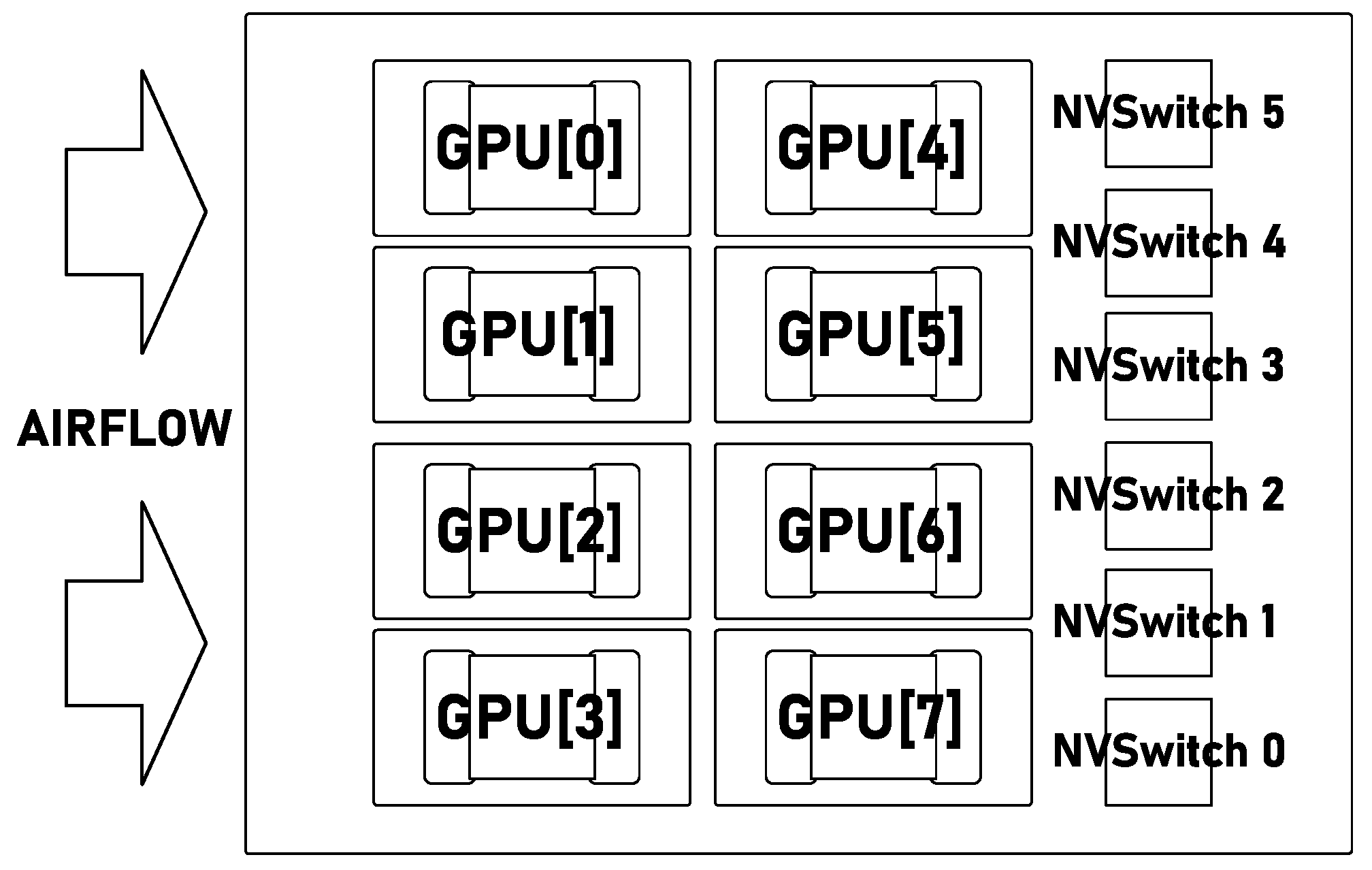

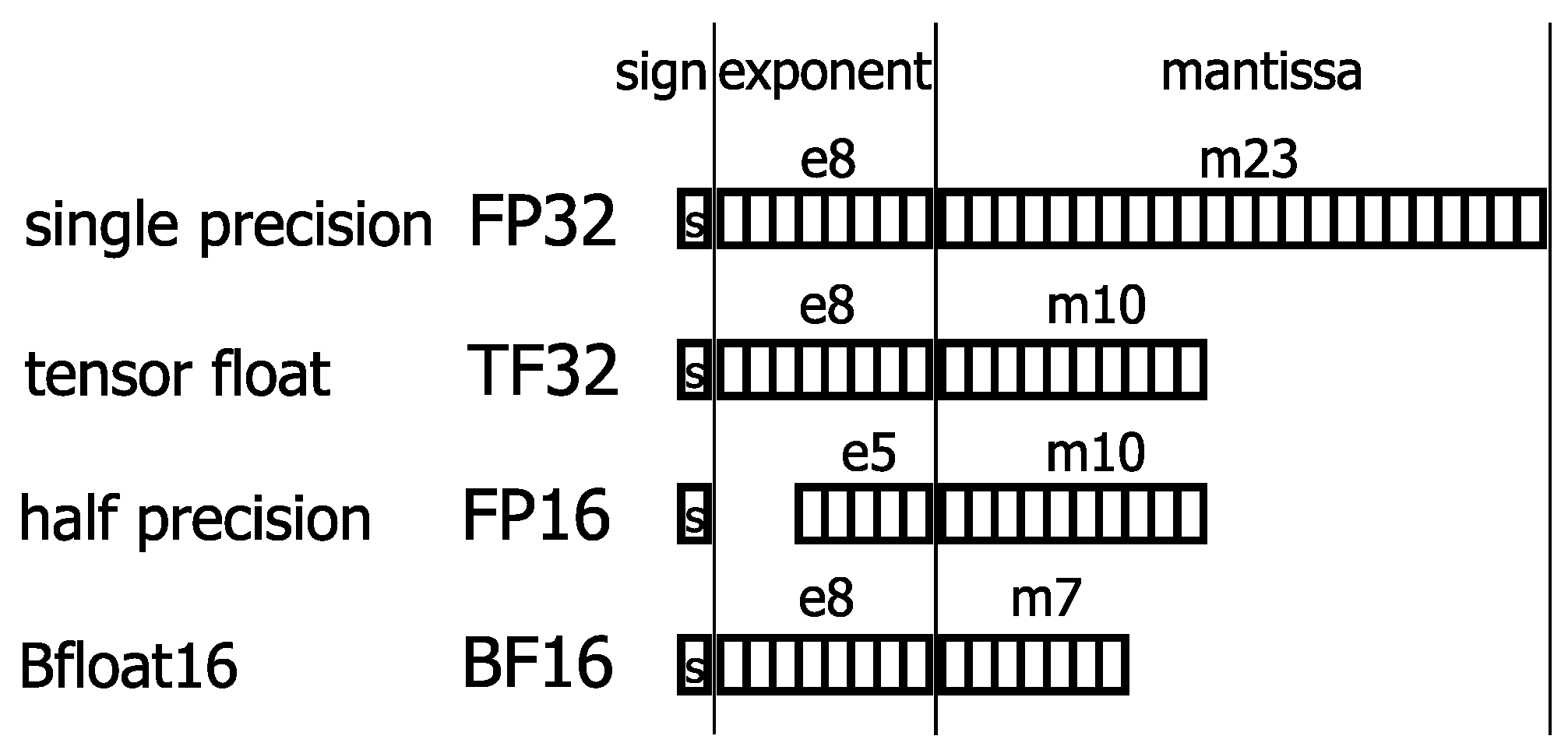

1.2. DGX-A100 Platform Description

2. Measurement Methodology Description

2.1. Benchmarks

2.2. Frequency Scaling and Energy Measurement Methodology

3. Results

3.1. Performance

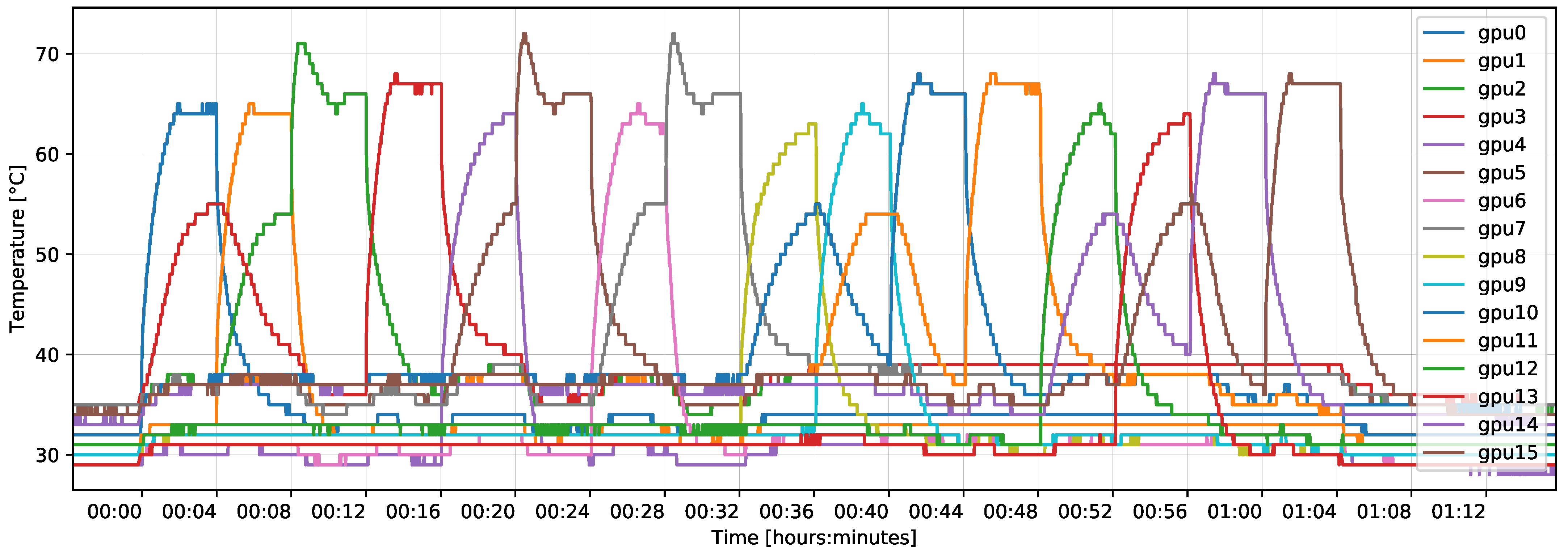

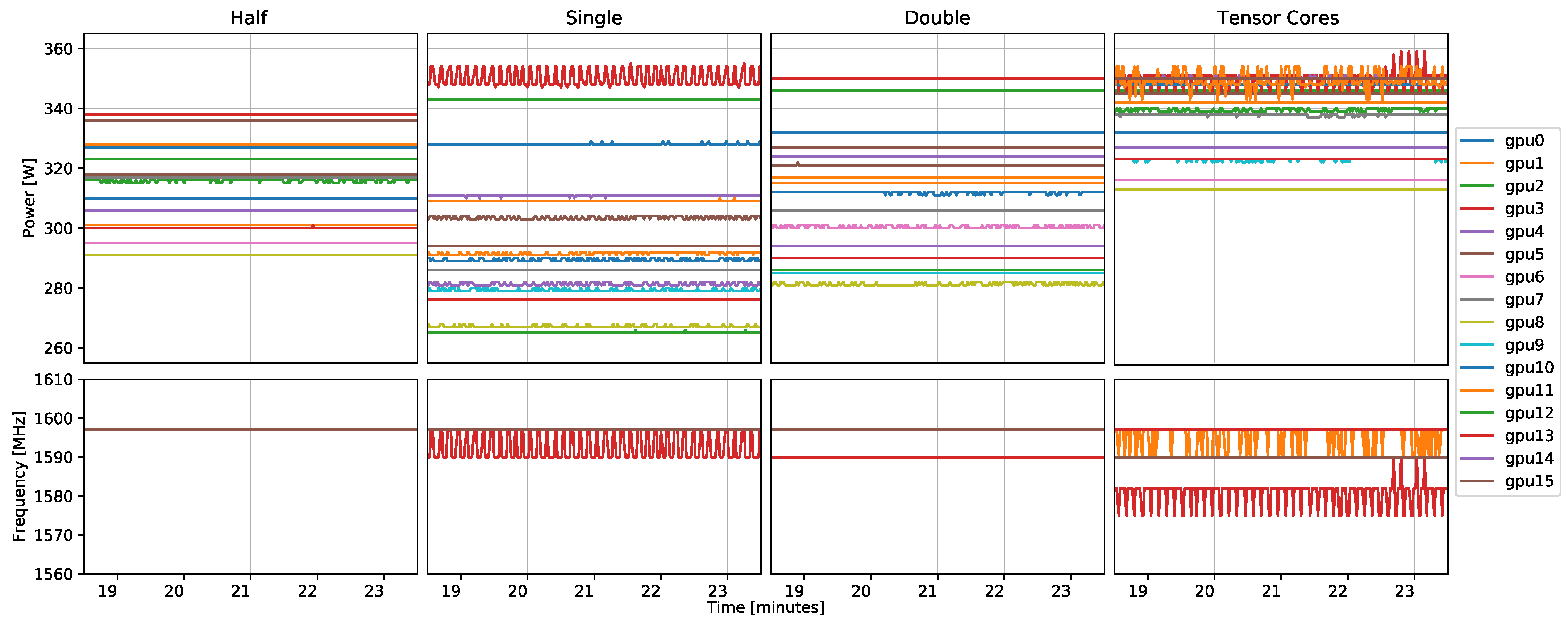

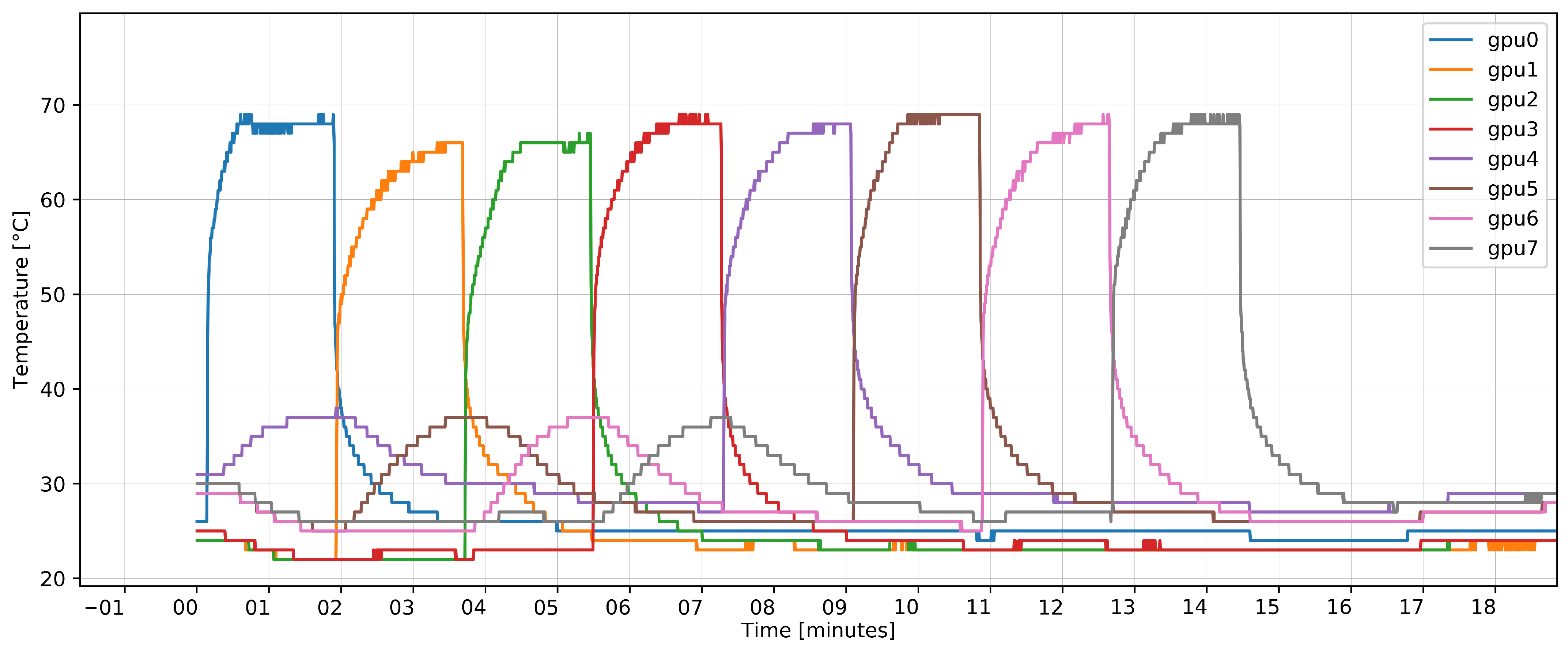

3.2. Power and Thermal Properties

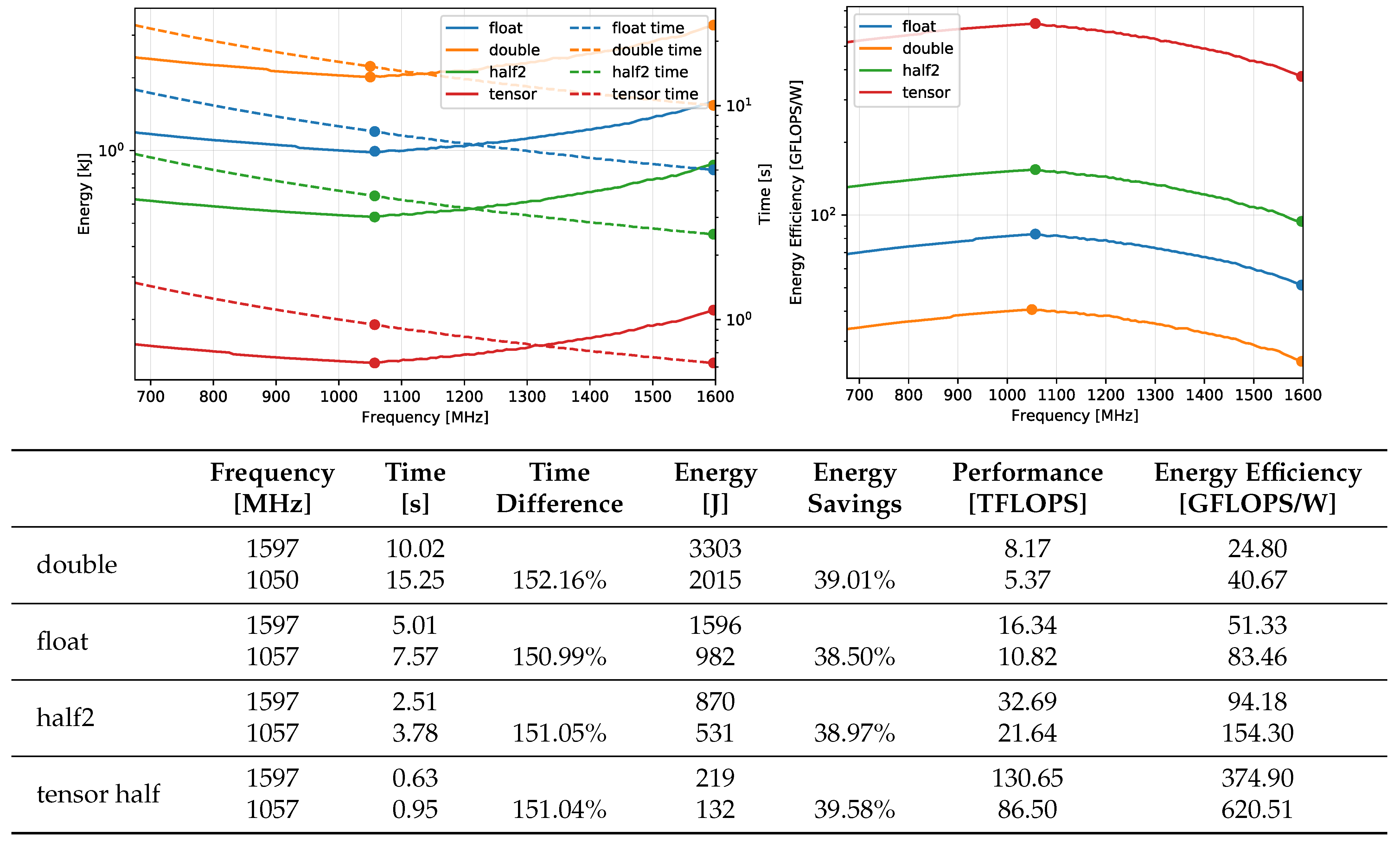

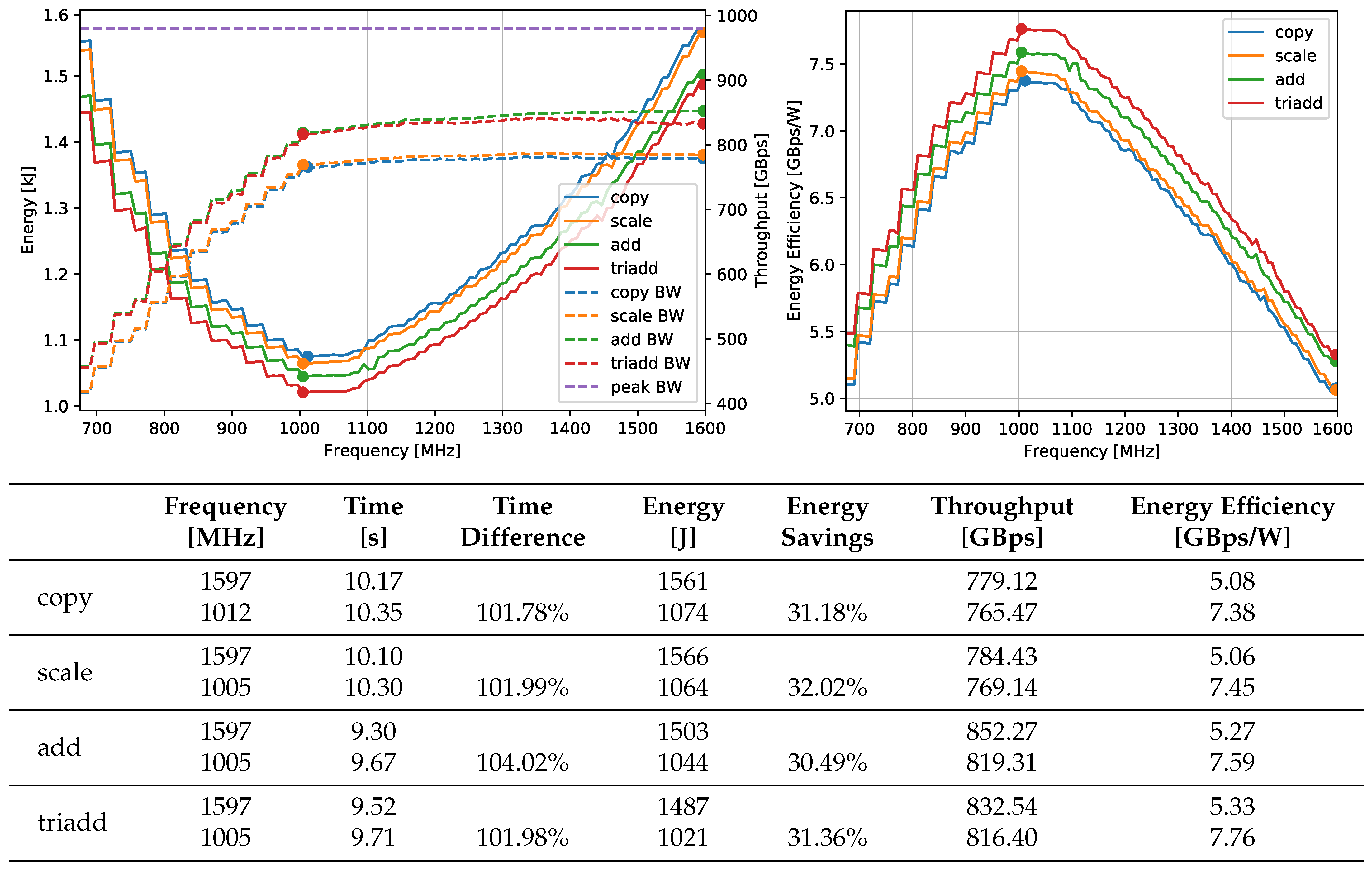

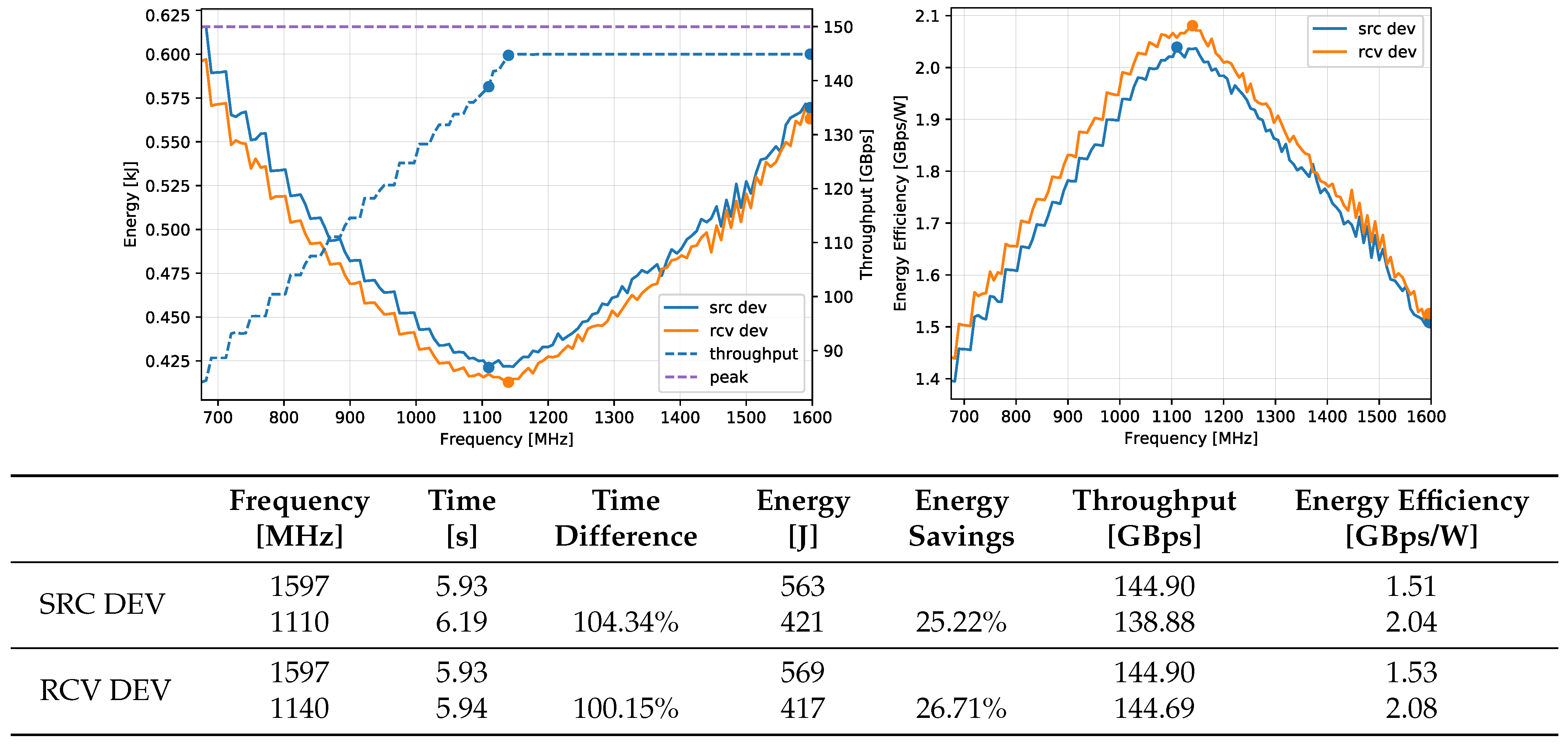

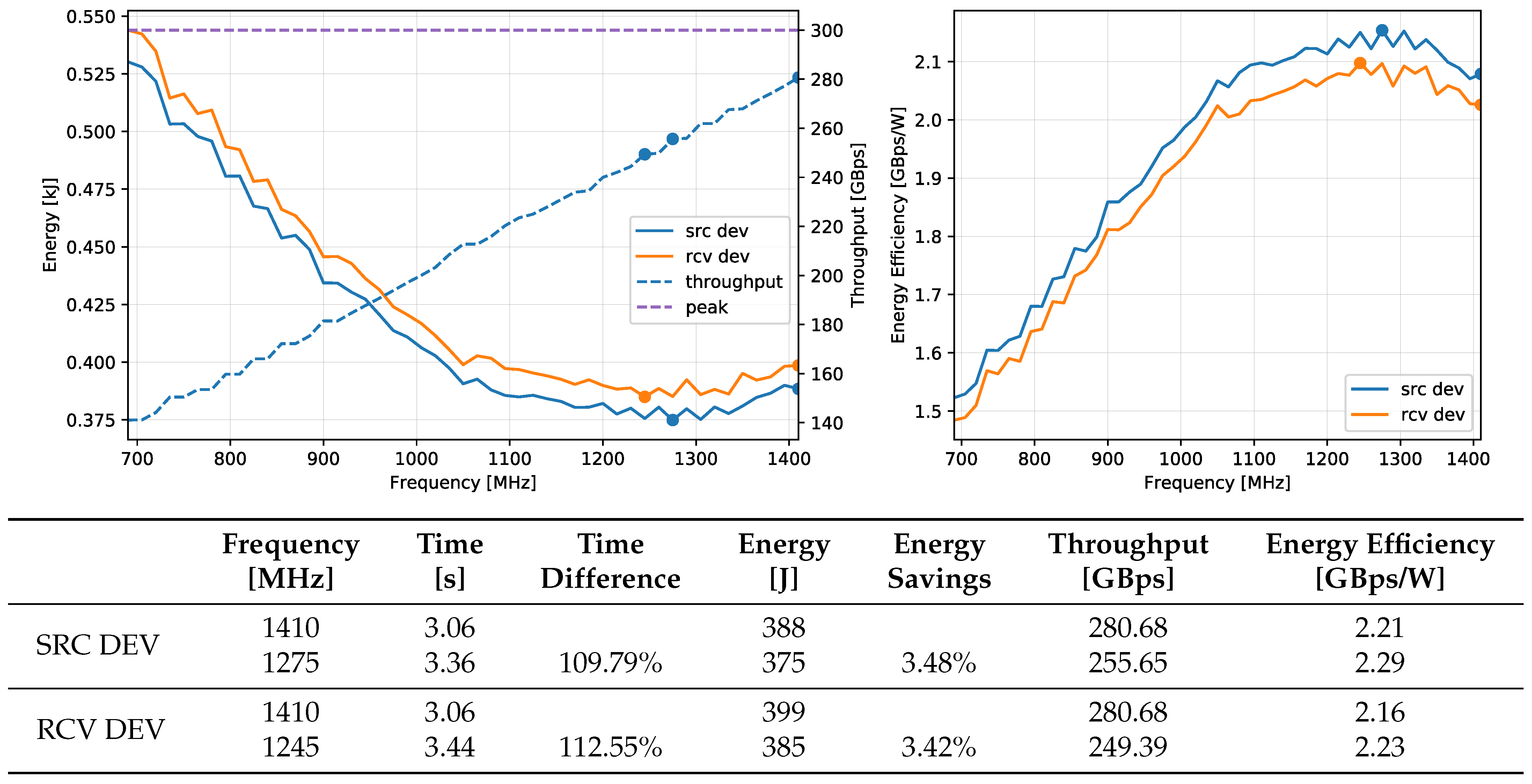

3.3. Frequency Scaling

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | DGX-2 | DGX-A100 |
|---|---|---|
| CPU model | Intel Xeon 8168 | AMD EPYC 7742 |
| PCI Express version support | 3.0 | 4.0 |
| FP64 performance (double) | 125 TFLOPS | 77 TFLOPS |
| FP32 performance (float) | 250 TFLOPS | 156 TFLOPS |
| BF16 performance (bfloat16) | – | 312 TFLOPS |
| FP16 Tensor Core performance (half) | 2 PFLOPS | 2.5 PFLOPS |
| TF32 Tensor Core performance (tensor float TC) | – | 1248 TFLOPS |
| FP64 Tensor Core performance (double TC) | – | 156 TFLOPS |
| Number of GPUs | 16 | 8 |
| GPU model | V100-SXM3 | A100-SXM4 |
| GPU SM/memory maximum frequency | 1597/958 MHz | 1410/1215 MHz |
| GPU power consumption (single GPU) | 350 W | 400 W |
| GPU memory size (single GPU) | 32 GB | 40/80 GB |
| GPU memory bandwidth (single GPU) | 980 GBps | 1555/2039 GBps |
| NVLink bandwidth (bidirectional) | 300 GBps | 600 GBps |
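
As a consistency check, the single-GPU memory bandwidth entries can be reproduced from the maximum memory frequency listed in the same table. The sketch below assumes the standard HBM2 peak formula (bus width in bytes × 2 transfers per clock × memory clock) and assumes bus widths of 4096 bits for V100 and 5120 bits for the 40 GB A100; these widths come from NVIDIA's public specifications rather than from this table. The 80 GB A100 is left out because its memory clock is not listed here.

```python
def hbm_peak_bandwidth_gbps(bus_width_bits: int, mem_clock_mhz: float) -> float:
    """Theoretical peak memory bandwidth in GBps (10^9 bytes per second).

    Assumes a double-data-rate interface: two transfers per memory clock cycle.
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = 2 * mem_clock_mhz * 1e6
    return bytes_per_transfer * transfers_per_second / 1e9


# Bus widths are assumptions (public NVIDIA specs); memory clocks come from the table.
GPUS = {
    "V100-SXM3": {"bus_width_bits": 4096, "mem_clock_mhz": 958},
    "A100-SXM4 (40 GB)": {"bus_width_bits": 5120, "mem_clock_mhz": 1215},
}

if __name__ == "__main__":
    for name, cfg in GPUS.items():
        print(f"{name}: {hbm_peak_bandwidth_gbps(**cfg):.2f} GBps")
```

Running it prints 980.99 GBps for V100 and 1555.20 GBps for A100, matching the 980 and 1555 GBps entries.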

| Mandelbrot benchmark | V100 specification [TFLOPS] | V100 measurement [TFLOPS] | A100 specification [TFLOPS] | A100 measurement [TFLOPS] |
|---|---|---|---|---|
| double | 8.177 | 8.1765 | 9.7 | 9.715 |
| float | 16.353 | 16.3530 | 19.5 | 19.375 |
| half2 | 32.707 | 32.7038 | 78 | 75.654 |
| half TC (tensor) | 130.484 | 130.7928 | 312 | 310.3 |
| Bfloat16 | – | – | 39 | 38.752 |
| double TC | – | – | 19.5 | 19.435 |
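
The specification columns follow the usual peak-throughput formula: SM count × arithmetic units per SM × FLOPs per unit per cycle × SM clock, with a fused multiply-add counted as 2 FLOPs and a packed half2 FMA as 4. The sketch assumes 80 SMs for V100 and 108 SMs for A100, each with 32 FP64 and 64 FP32 units per SM (figures from NVIDIA's architecture whitepapers, not from the table), and uses the maximum SM clocks of 1597 and 1410 MHz. Tensor Core and BF16 rates are not modeled. A measured-to-specified ratio is included as a simple efficiency metric.

```python
def peak_tflops(sms: int, units_per_sm: int, flops_per_unit_cycle: int, clock_mhz: float) -> float:
    """Theoretical peak throughput in TFLOPS (10^12 FLOP/s).

    An FMA counts as 2 FLOPs; a half2 FMA (two packed FP16 FMAs) counts as 4.
    """
    return sms * units_per_sm * flops_per_unit_cycle * clock_mhz * 1e6 / 1e12


def efficiency(measured: float, specified: float) -> float:
    """Fraction of the theoretical peak reached by a measurement."""
    return measured / specified


if __name__ == "__main__":
    # Assumed unit counts: 80 SMs (V100), 108 SMs (A100); 32 FP64 and 64 FP32 units per SM.
    v100 = [peak_tflops(80, 32, 2, 1597), peak_tflops(80, 64, 2, 1597), peak_tflops(80, 64, 4, 1597)]
    a100 = [peak_tflops(108, 32, 2, 1410), peak_tflops(108, 64, 2, 1410)]
    print("V100 double/float/half2:", ", ".join(f"{x:.3f}" for x in v100), "TFLOPS")
    print("A100 double/float:", ", ".join(f"{x:.3f}" for x in a100), "TFLOPS")
    # Measured and specified half2 values taken from the Mandelbrot table.
    print(f"A100 half2 efficiency: {efficiency(75.654, 78):.1%}")
```

The V100 results (8.177, 16.353 and 32.707 TFLOPS) match the table exactly; the A100 results (9.746 and 19.492 TFLOPS) round to the 9.7 and 19.5 TFLOPS quoted by NVIDIA. The A100 half2 measurement reaches 97.0% of its specification.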

| STREAM benchmark throughput [GBps] | V100 | A100 |
|---|---|---|
| specification | 980.99 | 1555.00 |
| copy | 825.47 | 1329.58 |
| scale | 826.52 | 1327.59 |
| add | 873.63 | 1376.84 |
| triad | 872.37 | 1377.21 |
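
The four STREAM kernels differ in how many arrays each one touches, and the reported throughput is normalized by exactly that traffic. The sketch below follows the usual STREAM convention (copy and scale count two arrays per element, add and triad count three; write-allocate traffic is not counted) and assumes 8-byte double-precision elements. It is a pure-Python reference of the kernel semantics and the bandwidth formula, not the GPU implementation used for the measurements; `run_kernel`, `run_stream` and `bandwidth_gbps` are hypothetical helper names.

```python
import time

ELEMENT_BYTES = 8  # assumption: double-precision (8-byte) array elements

# Arrays STREAM counts per element for each kernel (reads plus writes,
# excluding write-allocate traffic).
ARRAYS_TOUCHED = {"copy": 2, "scale": 2, "add": 3, "triad": 3}


def run_kernel(name, a, b, c, q):
    """Apply one STREAM kernel in place to lists a, b, c with scalar q."""
    n = len(a)
    if name == "copy":  # c[i] = a[i]
        for i in range(n):
            c[i] = a[i]
    elif name == "scale":  # b[i] = q * c[i]
        for i in range(n):
            b[i] = q * c[i]
    elif name == "add":  # c[i] = a[i] + b[i]
        for i in range(n):
            c[i] = a[i] + b[i]
    elif name == "triad":  # a[i] = b[i] + q * c[i]
        for i in range(n):
            a[i] = b[i] + q * c[i]
    else:
        raise ValueError(f"unknown kernel: {name}")


def bandwidth_gbps(name, n, seconds):
    """STREAM-style throughput in GBps (10^9 bytes per second)."""
    return ARRAYS_TOUCHED[name] * ELEMENT_BYTES * n / seconds / 1e9


def run_stream(n, q=3.0):
    """Run copy, scale, add and triad once each, in STREAM order.

    Returns the final (a, b, c) arrays and a dict of per-kernel throughput.
    """
    a, b, c = [1.0] * n, [2.0] * n, [0.0] * n
    rates = {}
    for kernel in ("copy", "scale", "add", "triad"):
        start = time.perf_counter()
        run_kernel(kernel, a, b, c, q)
        elapsed = max(time.perf_counter() - start, 1e-9)  # avoid division by zero
        rates[kernel] = bandwidth_gbps(kernel, n, elapsed)
    return (a, b, c), rates


if __name__ == "__main__":
    (a, b, c), rates = run_stream(200_000)
    # Starting from a=1, b=2, c=0 with q=3, the final values are a=15, b=3, c=4.
    assert (a[0], b[0], c[0]) == (15.0, 3.0, 4.0)
    for kernel, rate in rates.items():
        print(f"{kernel:>5}: {rate:.3f} GBps")
```

Pure-Python loops run far below hardware speed, so the printed numbers only illustrate the accounting: a triad over N doubles is credited with 24 × N bytes, a copy with 16 × N bytes. Applied to the measured values, the best kernels reach roughly 89% of the specified bandwidth on both GPUs (873.63 of 980.99 GBps on V100, 1377.21 of 1555.00 GBps on A100).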

| NVLink (GPU to GPU) | V100 | A100 |
|---|---|---|
| specification unidirectional | 150.00 GBps | 300.00 GBps |
| specification bidirectional | 300.00 GBps | 600.00 GBps |
| measured latency | 2.45 µs | 3.65 µs |
| measured unidirectional | 145.16 GBps | 281.00 GBps |
| measured bidirectional | 266.46 GBps | 531.17 GBps |
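
The NVLink specification rows follow from the number of links per GPU and the per-link rate. The sketch assumes 6 NVLink 2.0 links per V100 and 12 NVLink 3.0 links per A100, each carrying 25 GBps per direction; these link counts and rates come from NVIDIA's architecture documentation, not from the table. It also expresses the measured values as a fraction of the specification.

```python
LINK_GBPS_PER_DIRECTION = 25.0  # assumption: per-link, per-direction rate of NVLink 2.0 and 3.0
LINKS_PER_GPU = {"V100": 6, "A100": 12}  # assumption: links per GPU from NVIDIA documentation

# Measured values copied from the NVLink table (GBps).
MEASURED = {
    "V100": {"unidirectional": 145.16, "bidirectional": 266.46},
    "A100": {"unidirectional": 281.00, "bidirectional": 531.17},
}


def nvlink_spec_gbps(links: int) -> tuple[float, float]:
    """Return the (unidirectional, bidirectional) aggregate bandwidth of one GPU in GBps."""
    unidirectional = links * LINK_GBPS_PER_DIRECTION
    return unidirectional, 2 * unidirectional


if __name__ == "__main__":
    for gpu, links in LINKS_PER_GPU.items():
        uni, bi = nvlink_spec_gbps(links)
        m = MEASURED[gpu]
        print(
            f"{gpu}: spec {uni:.0f}/{bi:.0f} GBps, measured "
            f"{m['unidirectional'] / uni:.1%} (uni), {m['bidirectional'] / bi:.1%} (bi)"
        )
```

With these assumptions the script reproduces the 150/300 GBps (V100) and 300/600 GBps (A100) specification rows; the measured bidirectional bandwidth corresponds to 88.8% of the specification on V100 and 88.5% on A100.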


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Špeťko, M.; Vysocký, O.; Jansík, B.; Říha, L. DGX-A100 Face to Face DGX-2—Performance, Power and Thermal Behavior Evaluation. Energies 2021, 14, 376. https://doi.org/10.3390/en14020376
