A Multi-Time Scale Optimal Scheduling Strategy for the Electro-Hydrogen Coupling System Based on the Modified TCN-PPO

Abstract

1. Introduction

- (1) Model dependency dilemma: Conventional methods rely on rigid assumptions and idealized scenarios, and they require precise models of uncertainties and dynamic behaviors. In real-world applications, however, uncertain system parameters, fluctuating renewable generation, and varying electro-hydrogen load demand make the model more complex and harder to solve, which limits the effectiveness of conventional methods in practice [24].

- (2) Temporal feature neglect: DRL methods show significant potential for complex decision-making problems, because agents perceive the environmental state and make decisions directly. Nevertheless, current methods have limited capacity to capture and analyze multi-time scale dynamic characteristics [12], which makes it difficult to identify the temporal features of the coupling system at different scales. Consequently, agents may fail to balance long-term trends against short-term fluctuations when making decisions.

- (1) This paper proposes a multi-time scale optimal scheduling architecture for the electro-hydrogen coupling system that integrates the TCN with a modified PPO algorithm. The TCN extracts the temporal characteristics of the coupling system, which then serve as model inputs. The optimization paradigm of deep reinforcement learning is employed to address the real-time and stochastic challenges introduced by renewable energy sources and loads. The method both reduces operational costs and improves the utilization of renewable energy.

- (2) A TCN-based multi-time scale environmental perception model is developed for the electro-hydrogen coupling system. By analyzing the multi-time scale time-series data of the coupling system, which contain both local patterns and long-term dependencies, the TCN extracts multi-time scale features in depth. This enhances the model’s capacity to perceive and interpret the environmental dynamics of the coupling system.

- (3) To address the limitations of the conventional PPO algorithm, namely low training efficiency, poor stability, and weak state perception, a modified PPO algorithm is introduced. It incorporates state feature enhancement, an adaptive clipping rate (ACR), and a prioritized experience replay (PER) mechanism, and it obtains high-quality solutions to the multi-time scale optimization problem of the coupling system.

2. Problem Formulation

2.1. Multi-Time Scale Optimal Operation Model for Electro-Hydrogen Coupling System

2.1.1. Day-Ahead Optimization

1. Objective function

2. Constraints

   - (1) Electrical load balance constraints

   - (2) Hydrogen load balance constraints

   - (3) Tie-line power constraints

   - (4) Electrolyzer power constraints

   - (5) Electrical storage operational constraints

2.1.2. Real-Time Optimization

1. Objective function

2. Constraints

2.2. TCN-Based Dynamic Sensing Model for Environmental Information

2.2.1. Causal Convolution

2.2.2. Dilated Convolution

2.2.3. Residual Connection
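The three building blocks named in Sections 2.2.1 to 2.2.3 can be illustrated with a small, dependency-free sketch. It is not the network used in this paper: the function names, the single-channel setting, the zero left padding, and the one-convolution-per-block layout are all assumptions chosen to keep the example short. It shows that a causal convolution only looks backwards in time, that dilation widens the look-back without adding weights, and that the residual path adds the block input to the convolution output.

```python
from typing import List, Sequence


def causal_dilated_conv1d(x: Sequence[float], weights: Sequence[float],
                          dilation: int = 1) -> List[float]:
    """Single-channel causal convolution (illustrative helper, not from the paper).

    weights[0] multiplies the current sample x[t]; weights[k] multiplies the
    sample k * dilation steps in the past. Samples before the start of the
    sequence are treated as zero, which is equivalent to left zero padding.
    """
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:  # never index into the future or before the start
                acc += w * x[idx]
        out.append(acc)
    return out


def relu(values: Sequence[float]) -> List[float]:
    return [max(0.0, v) for v in values]


def residual_block(x: Sequence[float], weights: Sequence[float],
                   dilation: int) -> List[float]:
    """y = ReLU(x + ReLU(conv(x))): the skip path carries x unchanged."""
    h = relu(causal_dilated_conv1d(x, weights, dilation))
    return relu([a + b for a, b in zip(x, h)])


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Time steps visible to a stack with one convolution per dilation."""
    return 1 + (kernel_size - 1) * sum(dilations)


signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = causal_dilated_conv1d(signal, weights=[0.5, 0.5], dilation=2)
print(y)                              # average of x[t] and x[t-2]
print(residual_block(signal, [0.5, 0.5], 2))
print(receptive_field(2, [1, 2, 4]))  # look-back of a three-layer stack
```

With kernel size 2 and dilations 1, 2, 4, each added layer doubles the dilation, so the look-back grows exponentially with depth while the number of weights grows only linearly.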

2.3. System Operation Model Based on MDP

2.3.1. State Space

2.3.2. Action Space

2.3.3. Reward Function

3. Proposed Method Based on the Modified TCN-PPO

3.1. Fundamentals of the PPO Algorithm
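As a reminder of the mechanism that the later modifications build on, the following minimal sketch evaluates the standard clipped surrogate objective of PPO for a small batch. The function name, argument names, and the default clipping rate of 0.2 are illustrative assumptions, and the sketch omits the value loss and entropy terms that a full implementation would normally add.

```python
import math
from typing import Sequence


def ppo_clip_objective(new_logp: Sequence[float], old_logp: Sequence[float],
                       advantages: Sequence[float], clip_eps: float = 0.2) -> float:
    """Mean clipped surrogate objective (to be maximized).

    For each sample, r = exp(new_logp - old_logp) is the probability ratio
    between the updated and the data-collecting policy. The objective is
    mean(min(r * A, clip(r, 1 - eps, 1 + eps) * A)).
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum removes any incentive to push the ratio
        # outside [1 - eps, 1 + eps] in the direction that helps the objective.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Ratio 1.5 with a positive advantage is capped at 1 + eps.
print(ppo_clip_objective([math.log(1.5)], [0.0], [1.0]))
# Ratio 0.5 with a negative advantage is capped at 1 - eps.
print(ppo_clip_objective([math.log(0.5)], [0.0], [-1.0]))
```

The clipping rate `clip_eps` bounds how far a single update can move the policy, which is why its initial value affects both performance and training stability.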

3.2. Modified Mechanisms of the PPO Algorithm

3.2.1. State Feature Enhancement

3.2.2. Adaptive Clipping Rate

3.2.3. Prioritized Experience Replay
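The general idea of prioritized experience replay can be sketched with the widely used proportional scheme, in which a stored transition is drawn with probability proportional to its priority raised to a power. How the PER mechanism is combined with PPO in this paper is not reproduced here, so everything below is an assumption for illustration: the function names, the exponents `alpha` and `beta`, and the use of plain positive numbers as priorities.

```python
import random
from typing import List, Optional, Sequence, Tuple


def per_probabilities(priorities: Sequence[float], alpha: float = 0.6) -> List[float]:
    """P(i) = p_i**alpha / sum_k p_k**alpha; alpha = 0 gives uniform sampling."""
    scaled = [p ** alpha for p in priorities]
    total = sum(scaled)
    return [s / total for s in scaled]


def per_sample(priorities: Sequence[float], batch_size: int,
               alpha: float = 0.6, beta: float = 0.4,
               rng: Optional[random.Random] = None) -> Tuple[List[int], List[float]]:
    """Draw indices by priority and return importance-sampling weights.

    Priorities must be strictly positive. The weight (N * P(i))**(-beta)
    corrects the bias introduced by non-uniform sampling; weights are
    divided by the largest possible weight so that they lie in (0, 1].
    """
    rng = rng or random.Random()
    probs = per_probabilities(priorities, alpha)
    n = len(probs)
    indices = rng.choices(range(n), weights=probs, k=batch_size)
    max_weight = (n * min(probs)) ** (-beta)
    weights = [((n * probs[i]) ** (-beta)) / max_weight for i in indices]
    return indices, weights


# Hypothetical priorities, e.g. absolute TD errors plus a small constant.
priorities = [0.1, 0.5, 2.0, 0.05]
idx, w = per_sample(priorities, batch_size=3, rng=random.Random(0))
print(idx, w)
```

Transitions with large priorities are replayed more often, while the importance-sampling weights scale down their contribution to the loss so that the update is not dominated by a few samples.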

3.3. Training Process of the Proposed Modified TCN-PPO Algorithm

4. Case Studies

4.1. Case Study Setup

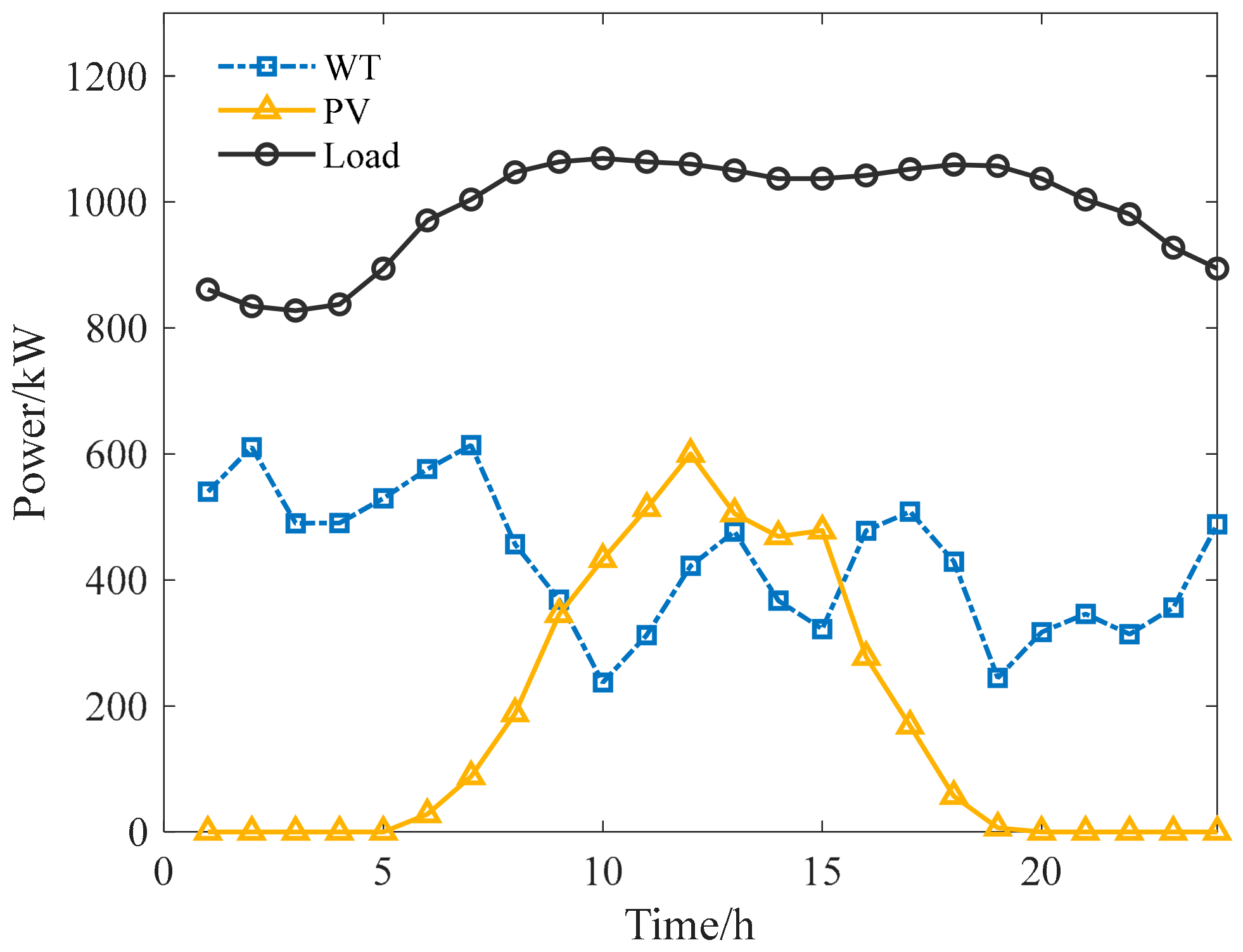

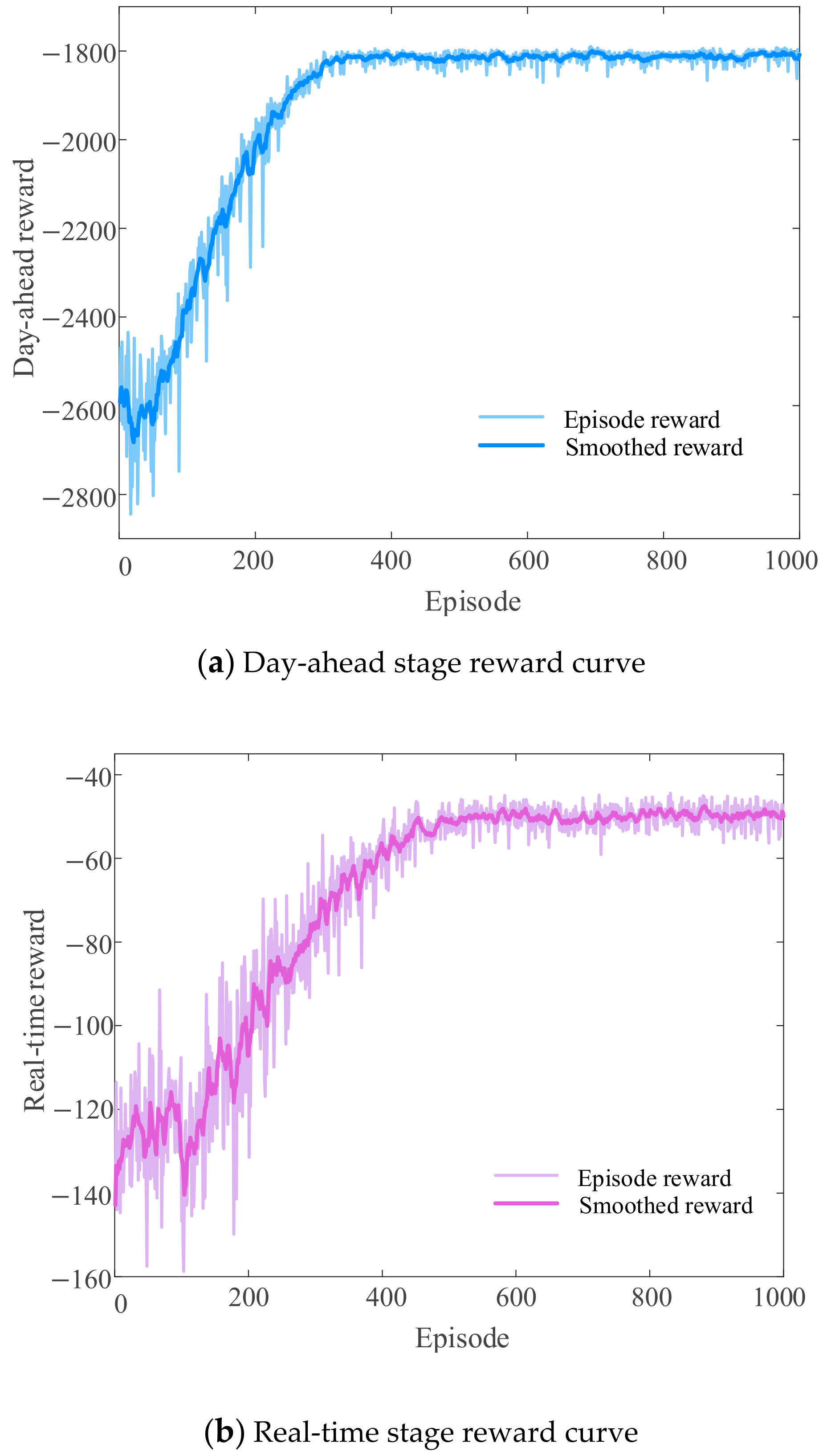

4.2. Analysis of the Training Process

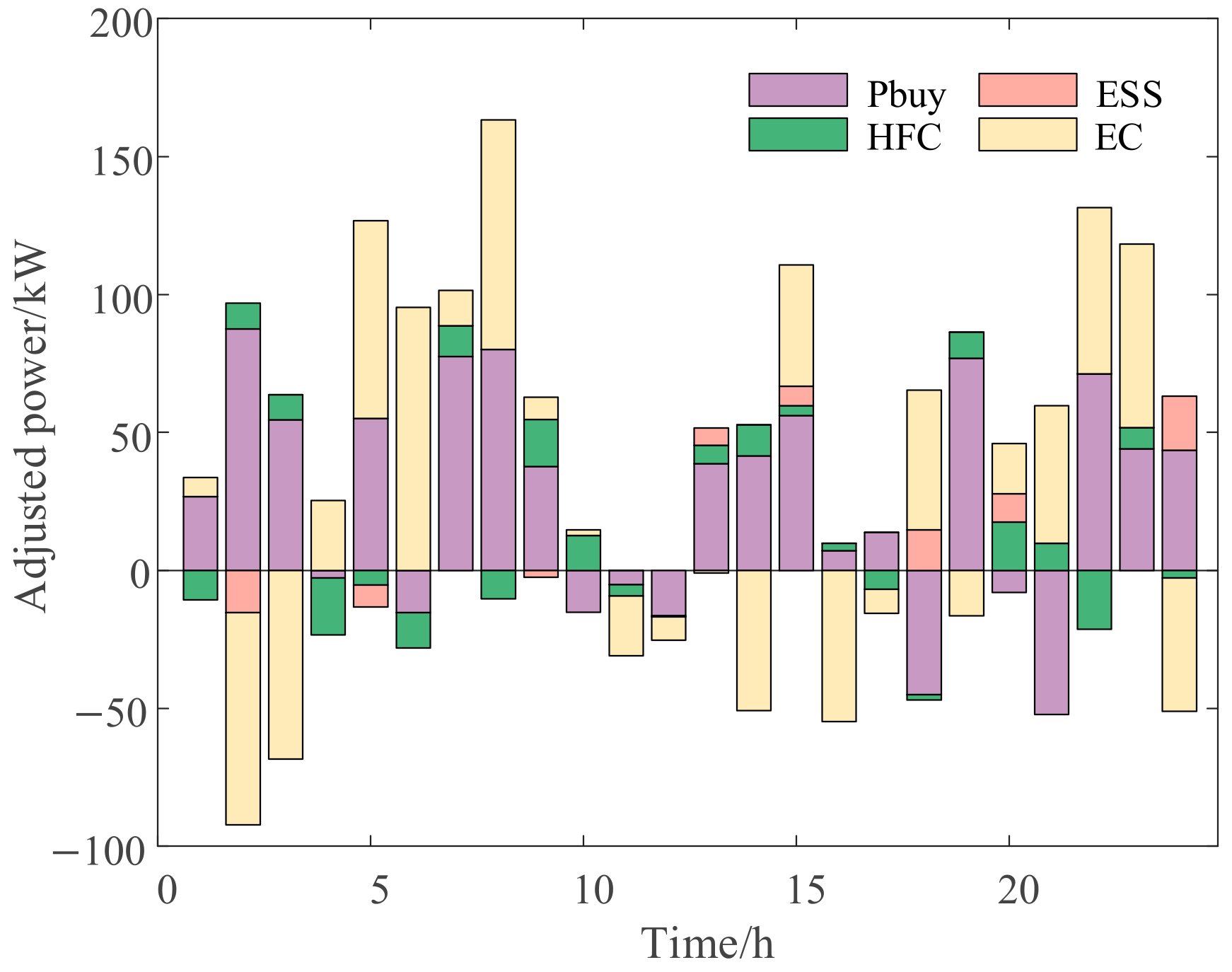

4.3. Analysis of the Testing Results

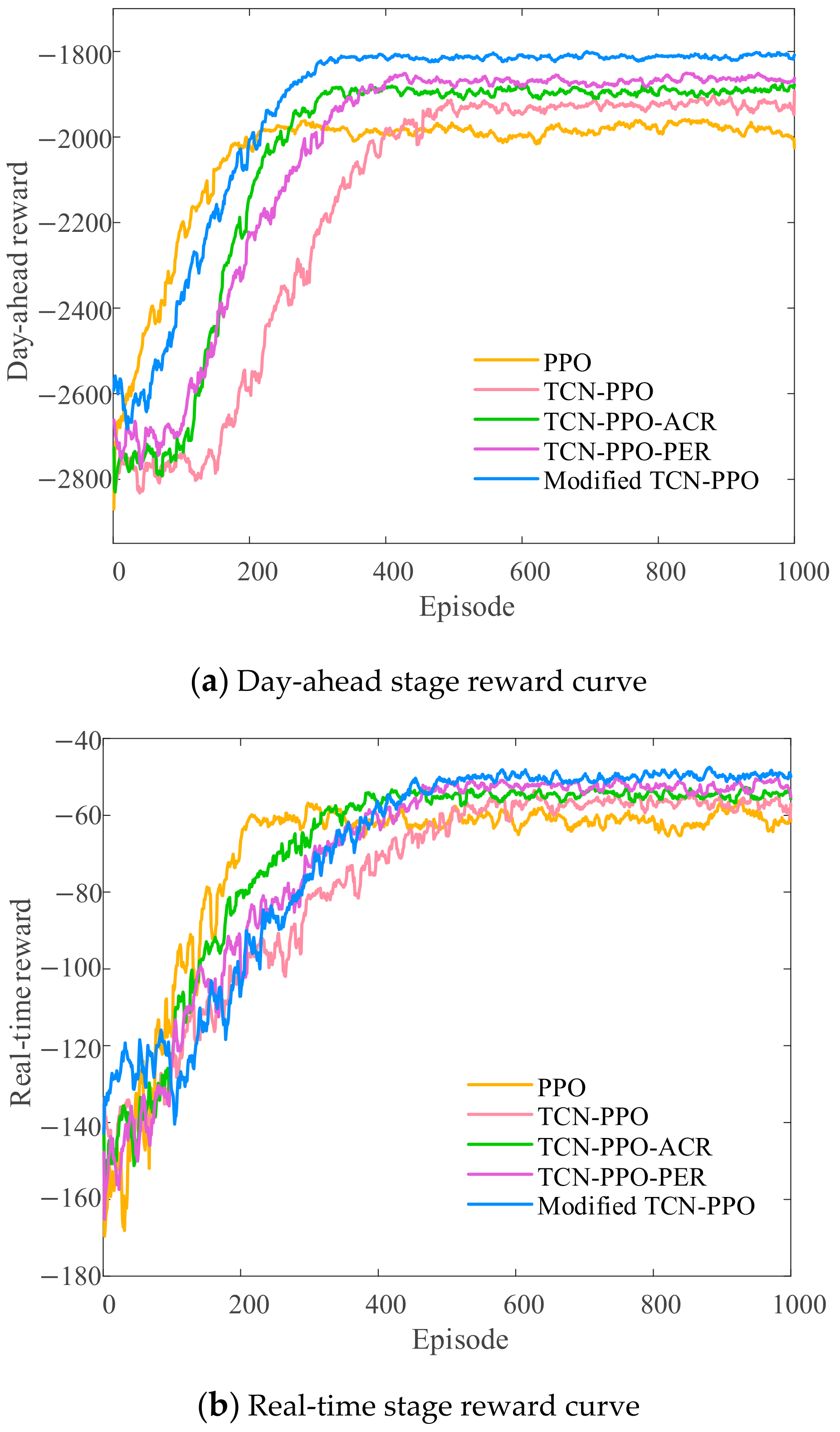

4.4. Comparison of Different Algorithms

4.5. Analysis of Ablation Experiment

4.6. Analysis of Model Sensitivity

5. Conclusions

- (1) The proposed optimal scheduling strategy for the electro-hydrogen coupling system employs a multi-time scale architecture that integrates the feature extraction capability of the TCN with the decision optimization capability of DRL. By formulating the production plan and the real-time adjustment scheme in advance, the strategy reduces the system’s real-time power adjustment cost to ¥629.80, improving both economic efficiency and low-carbon operation.

- (2) Using the TCN to capture the multi-time scale time-series characteristics of the environment enhances the DRL agents’ environmental perception and decision-making capabilities for the coupling system. Over a 30-day test cycle, the cumulative cost of the proposed modified TCN-PPO is ¥1.31 million, which is 12.67% lower than that of the PPO algorithm.

- (3)

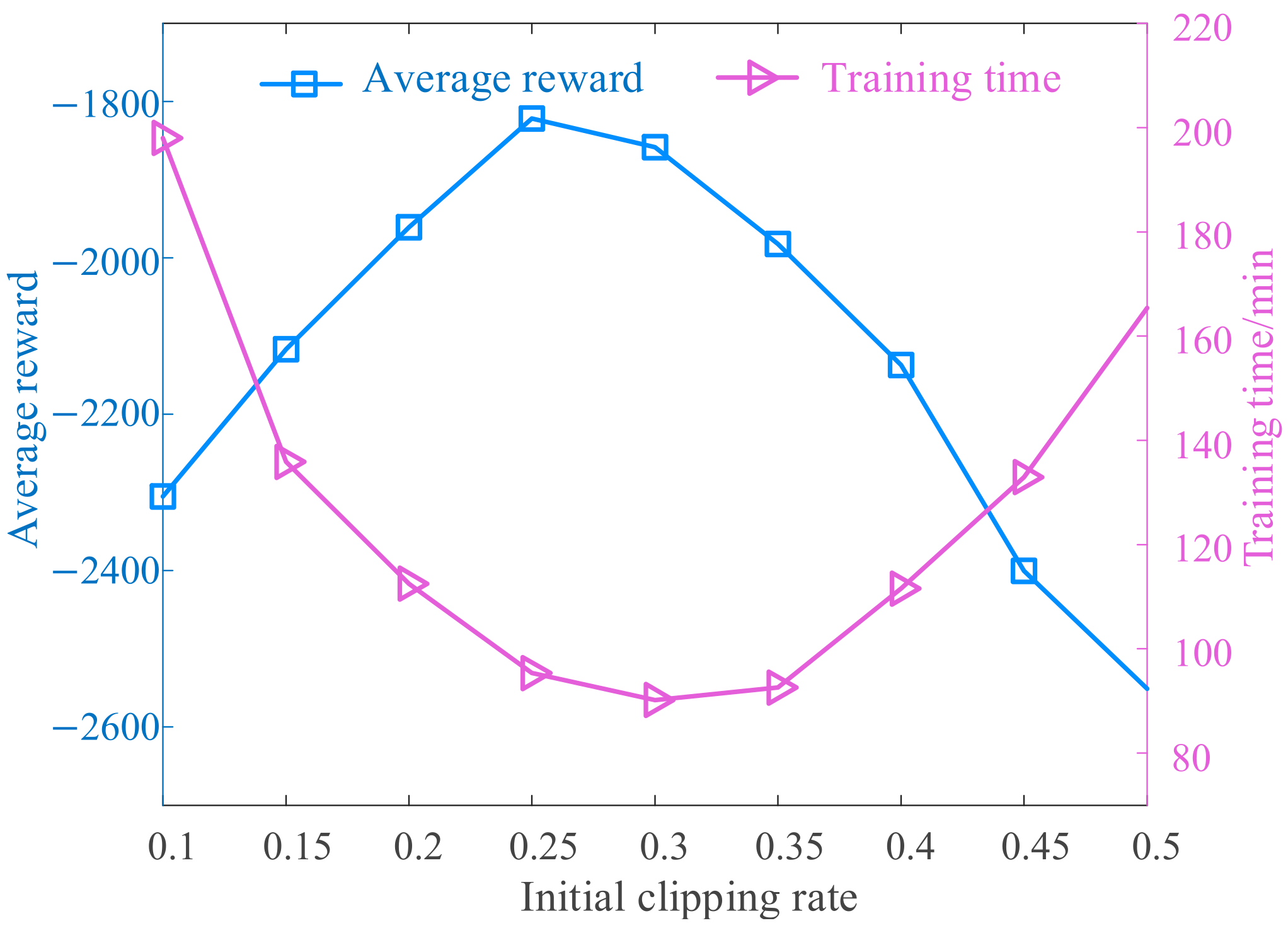

- The proposed feature enhancement, adaptive clipping rate, and PER mechanisms enable the modified TCN-PPO algorithm to address the limitations of the PPO algorithm and achieve high-quality solutions for the multi-time scale optimal problem in the coupling system. Furthermore, model sensitivity experiments demonstrate that the model achieves an optimal balance between performance and stability when the initial clipping rate falls within the interval [0.25, 0.3].

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Yuan, K.; Zhang, T.; Xie, X.; Du, S.; Xue, X.; Abdul-Manan, A.F.; Huang, Z. Exploration of low-cost green transition opportunities for China’s power system under dual carbon goals. J. Clean. Prod. 2023, 414, 137590.

2. Dong, Y.; Shan, X.; Yan, Y.; Leng, X.; Wang, Y. Architecture, key technologies and applications of load dispatching in China power grid. J. Mod. Power Syst. Clean Energy 2022, 10, 316–327.

3. Abdelghany, M.B.; Al-Durra, A.; Zeineldin, H.H.; Gao, F. A Coordinated Multi-Time Scale Model Predictive Control for Output Power Smoothing in Hybrid Microgrid Incorporating Hydrogen Energy Storage. IEEE Trans. Ind. Inform. 2024, 20, 10987–11001.

4. Fan, G.; Liu, Z.; Liu, X.; Shi, Y.; Wu, D.; Guo, J.; Zhang, S.; Yang, X.; Zhang, Y. Two-layer collaborative optimization for a renewable energy system combining electricity storage, hydrogen storage, and heat storage. Energy 2022, 259, 125047.

5. Yue, M.; Lambert, H.; Pahon, E.; Roche, R.; Jemei, S.; Hissel, D. Hydrogen energy systems: A critical review of technologies, applications, trends and challenges. Renew. Sustain. Energy Rev. 2021, 146, 111180.

6. Daneshvar, M.; Mohammadi-Ivatloo, B.; Zare, K.; Asadi, S. Transactive energy management for optimal scheduling of interconnected microgrids with hydrogen energy storage. Int. J. Hydrog. Energy 2021, 46, 16267–16278.

7. Li, Q.; Xiao, X.; Pu, Y.; Luo, S.; Liu, H.; Chen, W. Hierarchical optimal scheduling method for regional integrated energy systems considering electricity-hydrogen shared energy. Appl. Energy 2023, 349, 121670.

8. Liu, S.; Song, L.; Wang, T.; Hao, Y.; Dai, B.; Wang, Z. Negative carbon optimal scheduling of integrated energy system using a non-dominant sorting genetic algorithm. Energy Convers. Manag. 2023, 291, 117345.

9. Lu, J.; Huang, D.; Ren, H. Data-driven source-load robust optimal scheduling of integrated energy production unit including hydrogen energy coupling. Glob. Energy Interconnect. 2023, 6, 375–388.

10. Zhao, Y.; Wei, Y.; Zhang, S.; Guo, Y.; Sun, H. Multi-Objective Robust Optimization of Integrated Energy System with Hydrogen Energy Storage. Energies 2024, 17, 1132.

11. Zhang, Y.; Liu, Y.; Shu, S.; Zheng, F.; Huang, Z. A data-driven distributionally robust optimization model for multi-energy coupled system considering the temporal-spatial correlation and distribution uncertainty of renewable energy sources. Energy 2021, 216, 119171.

12. Fang, X.; Dong, W.; Wang, Y.; Yang, Q. Multiple time-scale energy management strategy for a hydrogen-based multi-energy microgrid. Appl. Energy 2022, 328, 120195.

13. Li, Q.; Zou, X.; Pu, Y.; Chen, W. A Real-time Energy Management Method for Electric-hydrogen Hybrid Energy Storage Microgrids Based on DP-MPC. CSEE J. Power Energy Syst. 2020, 10, 324–336.

14. Zheng, B.; Hou, X.; Xu, S.; Jin, T.; Liu, W.; Li, N.; Guo, D.; Pan, C. Strategic optimization operations in the integrated energy system through multitime scale comprehensive demand response. Energy Sci. Eng. 2024, 12, 2236–2257.

15. Fang, X.; Dong, W.; Wang, Y.; Yang, Q. Multi-stage and multi-timescale optimal energy management for hydrogen-based integrated energy systems. Energy 2024, 286, 129576.

16. Zhang, M.; Wang, B.; Wei, J. The Robust Optimization of Low-Carbon Economic Dispatching for Regional Integrated Energy Systems Considering Wind and Solar Uncertainty. Electronics 2024, 13, 3480.

17. Li, Q.; Qiu, Y.; Yang, H.; Xu, Y.; Chen, W.; Wang, P. Stability-constrained two-stage robust optimization for integrated hydrogen hybrid energy system. CSEE J. Power Energy Syst. 2020, 7, 162–171.

18. Li, X.; Wu, N. A two-stage distributed robust optimal control strategy for energy collaboration in multi-regional integrated energy systems based on cooperative game. Energy 2024, 305, 132221.

19. Fan, W.; Ju, L.; Tan, Z.; Li, X.; Zhang, A.; Li, X.; Wang, Y. Two-stage distributionally robust optimization model of integrated energy system group considering energy sharing and carbon transfer. Appl. Energy 2023, 331, 120426.

20. Shi, T.; Xu, C.; Dong, W.; Zhou, H.; Bokhari, A.; Klemeš, J.J.; Han, N. Research on energy management of hydrogen electric coupling system based on deep reinforcement learning. Energy 2023, 282, 128174.

21. Dong, W.; Sun, H.; Mei, C.; Li, Z.; Zhang, J.; Yang, H. Forecast-driven stochastic optimization scheduling of an energy management system for an isolated hydrogen microgrid. Energy Convers. Manag. 2023, 277, 128174.

22. Yang, Z.; Ren, Z.; Li, H.; Sun, Z.; Feng, J.; Xia, W. A multi-stage stochastic dispatching method for electricity-hydrogen integrated energy systems driven by model and data. Appl. Energy 2024, 371, 123668.

23. Li, H.; Qin, B.; Wang, S.; Ding, T.; Wang, H. Data-driven two-stage scheduling of multi-energy systems for operational flexibility enhancement. Int. J. Electr. Power Energy Syst. 2024, 162, 110230.

24. Kakodkar, R.; He, G.; Demirhan, C.; Arbabzadeh, M.; Baratsas, S.; Avraamidou, S.; Mallapragada, D.; Miller, I.; Allen, R.; Gençer, E.; et al. A review of analytical and optimization methodologies for transitions in multi-scale energy systems. Renew. Sustain. Energy Rev. 2022, 160, 112277.

25. Li, Y.; Song, L.; Zhang, S.; Kraus, L.; Adcox, T.; Willardson, R.; Komandur, A.; Lu, N. A TCN-based hybrid forecasting architecture for hours-ahead utility-scale PV forecasting. IEEE Trans. Smart Grid 2023, 14, 4073–4085.

26. Huang, B.; Wang, J. Deep-reinforcement-learning-based capacity scheduling for PV-battery storage system. IEEE Trans. Smart Grid 2021, 12, 2272–2283.

27. Yuan, T.J.; Wan, Z.; Wang, J.J.; Zhang, D.; Jiang, D. The day-ahead output plan of hydrogen production system considering the start-stop characteristics of electrolyzers. Electr. Power 2022, 55, 101–109.

| Period | Time Interval | Price/(Yuan/kWh) |
|---|---|---|
| Off-peak period | 0:00–8:00 | 0.31 |
| Mid-peak period | 12:00–17:00, 21:00–24:00 | 0.64 |
| Peak period | 8:00–12:00, 17:00–21:00 | 1.07 |
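The time-of-use tariff above can be encoded as a small lookup, which is convenient when computing the power purchase cost of a schedule. The sketch below is illustrative only: the function names are hypothetical, and treating each interval as half-open (the start hour is included, the end hour is not) is an assumption, since the table does not say which period a boundary hour belongs to.

```python
from typing import Sequence


def tou_price(hour: float) -> float:
    """Grid purchase price in yuan/kWh for an hour of day in [0, 24)."""
    if not 0 <= hour < 24:
        raise ValueError("hour must lie in [0, 24)")
    if hour < 8:
        return 0.31  # off-peak: 0:00-8:00
    if hour < 12:
        return 1.07  # peak: 8:00-12:00
    if hour < 17:
        return 0.64  # mid-peak: 12:00-17:00
    if hour < 21:
        return 1.07  # peak: 17:00-21:00
    return 0.64      # mid-peak: 21:00-24:00


def purchase_cost(power_kw: Sequence[float], step_hours: float = 1.0) -> float:
    """Cost in yuan of a purchase profile that starts at 0:00.

    power_kw[i] is the average purchased power during step i.
    """
    return sum(p * step_hours * tou_price(i * step_hours)
               for i, p in enumerate(power_kw))


# Hypothetical flat purchase of 1 kW over a full day at hourly resolution.
flat_profile = [1.0] * 24
print(round(purchase_cost(flat_profile), 2))
```

Each of the three price levels covers eight hours of the day, so a flat 1 kW purchase costs 8 × (0.31 + 0.64 + 1.07) yuan.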

| Parameters | Value |
|---|---|
| /(yuan/kWh) | 0.05 |
| /(yuan/kWh) | 0.5 |
| /(yuan/kWh) | 0.04 |
| /(yuan/time) | 234 |
| /(yuan/kWh) | 0.5 |
| /(yuan/kWh) | 0.5 |
| /(yuan/kWh) | 1 |

| Cost Item | DDPG | PPO | TCN-PPO | Modified TCN-PPO |
|---|---|---|---|---|
| Power purchase cost/yuan | 28,792.27 | 27,684.03 | 24,143.57 | 22,359.29 |
| Operation cost/yuan | 18,852.45 | 18,020.57 | 17,498.99 | 17,405.65 |
| Start–stop cost/yuan | 4500.33 | 4209.00 | 4147.67 | 3948.33 |
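To compare the algorithms on a single number, the three cost items in the table can be summed per algorithm and expressed relative to a baseline. The snippet below is only a worked-arithmetic sketch: the dictionary layout and function names are hypothetical, and the sums cover just the three rows listed here, so they are not claimed to reproduce totals or percentages reported elsewhere in the paper, which may include further cost terms.

```python
# Values in yuan, copied from the table above.
costs = {
    "DDPG": {"purchase": 28792.27, "operation": 18852.45, "start_stop": 4500.33},
    "PPO": {"purchase": 27684.03, "operation": 18020.57, "start_stop": 4209.00},
    "TCN-PPO": {"purchase": 24143.57, "operation": 17498.99, "start_stop": 4147.67},
    "Modified TCN-PPO": {"purchase": 22359.29, "operation": 17405.65, "start_stop": 3948.33},
}


def total_cost(algorithm: str) -> float:
    """Sum of the three listed cost items for one algorithm."""
    return sum(costs[algorithm].values())


def saving_percent(baseline: str, candidate: str) -> float:
    """Relative reduction of the summed cost versus the baseline, in percent."""
    base = total_cost(baseline)
    return (base - total_cost(candidate)) / base * 100.0


for name in costs:
    print(f"{name}: {total_cost(name):.2f} yuan, "
          f"{saving_percent('PPO', name):+.2f}% vs PPO")
```

In these three rows, the power purchase cost accounts for most of the difference between algorithms, while the operation cost varies comparatively little.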

| Algorithm | Day-Ahead Stage: Episodes to Convergence | Day-Ahead Stage: Variance of Reward | Day-Ahead Stage: Mean Converged Reward | Real-Time Stage: Episodes to Convergence | Real-Time Stage: Variance of Reward | Real-Time Stage: Mean Converged Reward |
|---|---|---|---|---|---|---|
| PPO | 200 | 24,162.28 | −1983.62 | 200 | 614.18 | −61.23 |
| TCN-PPO | 450 | 107,223.05 | −1926.86 | 600 | 746.24 | −57.09 |
| TCN-PPO-ACR | 300 | 85,146.11 | −1893.84 | 350 | 724.66 | −54.62 |
| TCN-PPO-PER | 400 | 83,788.75 | −1867.43 | 500 | 852.21 | −52.39 |
| Modified TCN-PPO | 350 | 57,540.32 | −1813.37 | 500 | 797.06 | −49.95 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, D.; Qian, K.; Xu, Y.; Zhou, J.; Wang, Z.; Peng, Y.; Xing, Q. A Multi-Time Scale Optimal Scheduling Strategy for the Electro-Hydrogen Coupling System Based on the Modified TCN-PPO. Energies 2025, 18, 1926. https://doi.org/10.3390/en18081926
