A Sustainable Way Forward: Systematic Review of Transformer Technology in Social-Media-Based Disaster Analytics

Abstract

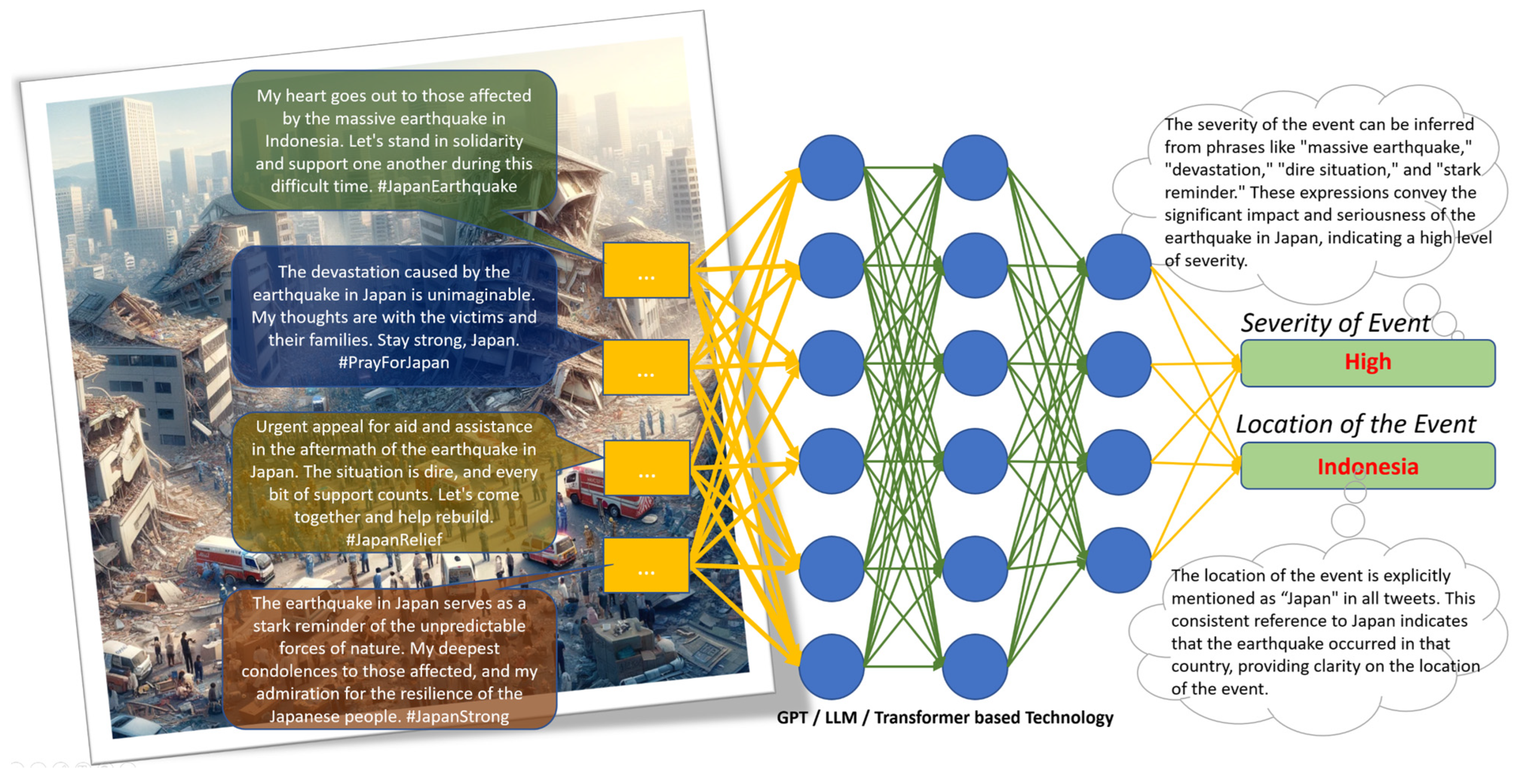

1. Introduction

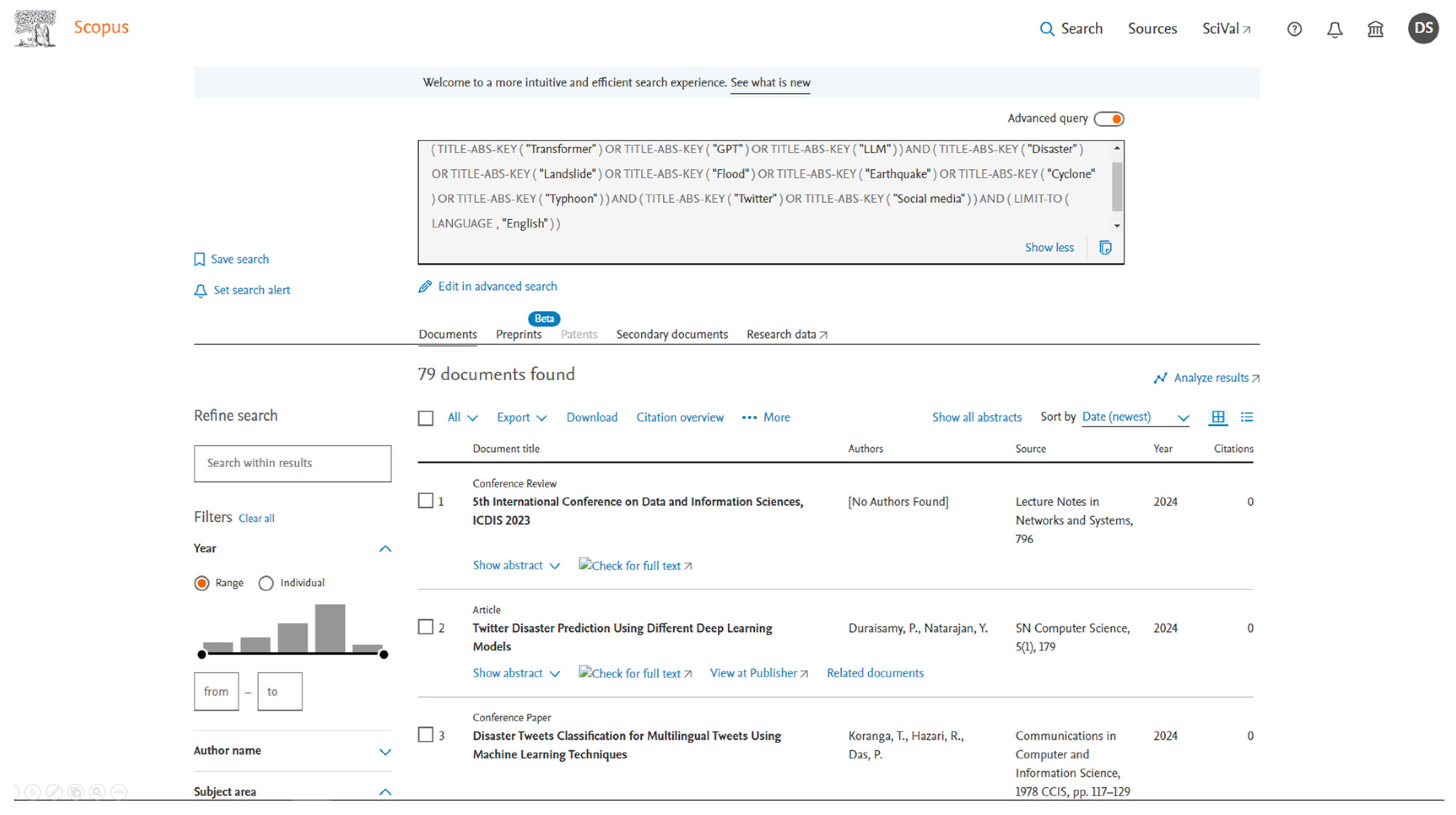

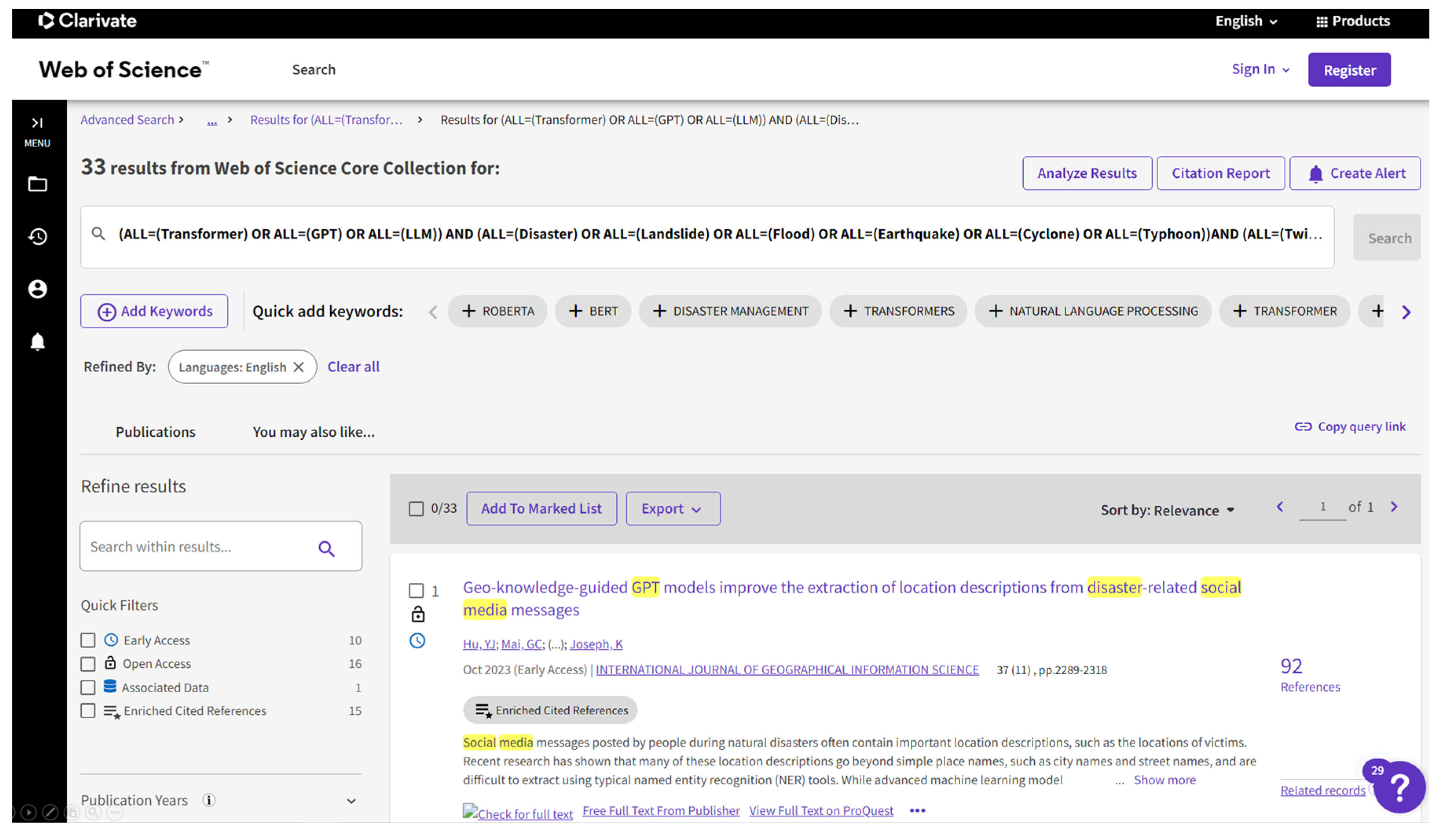

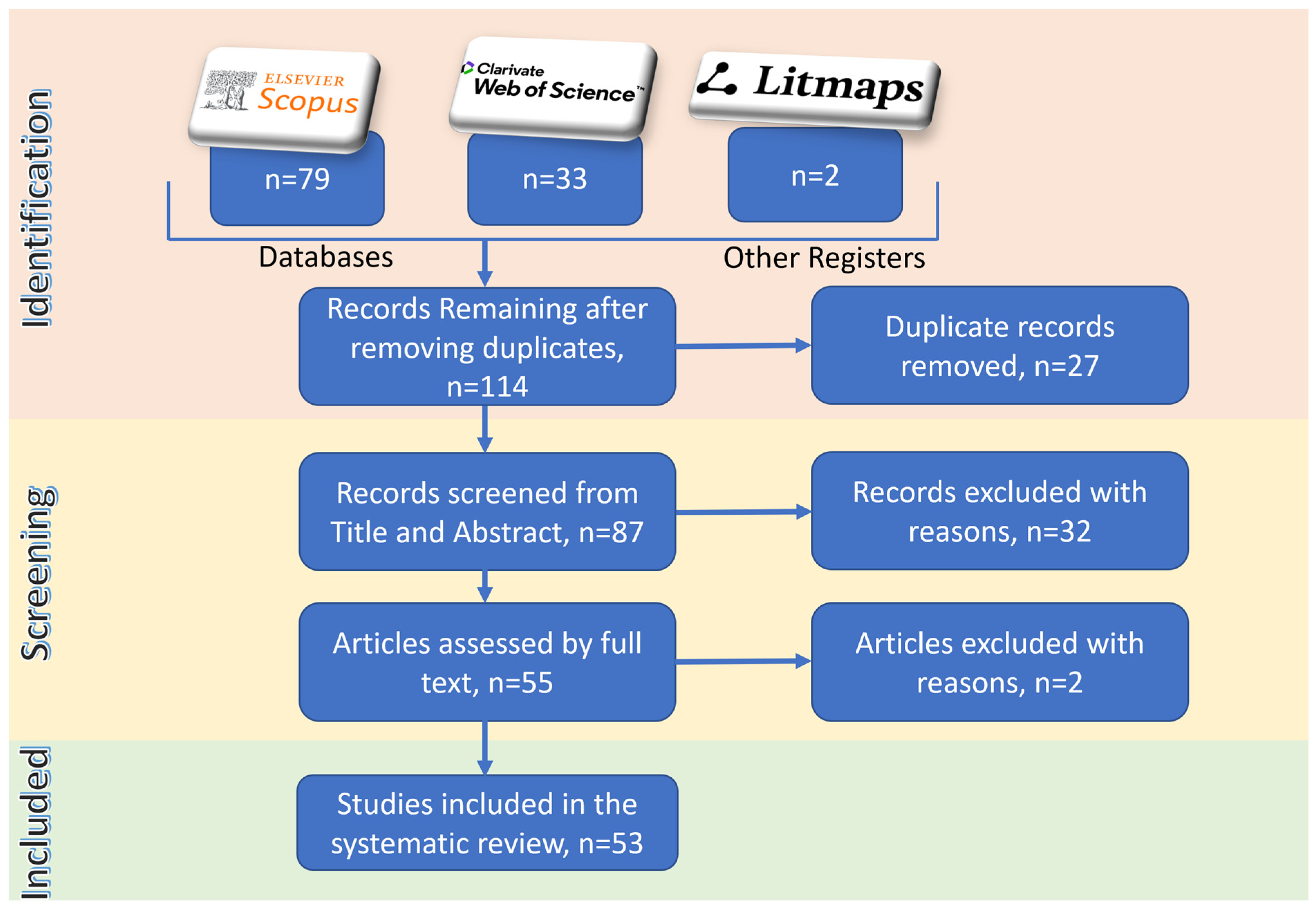

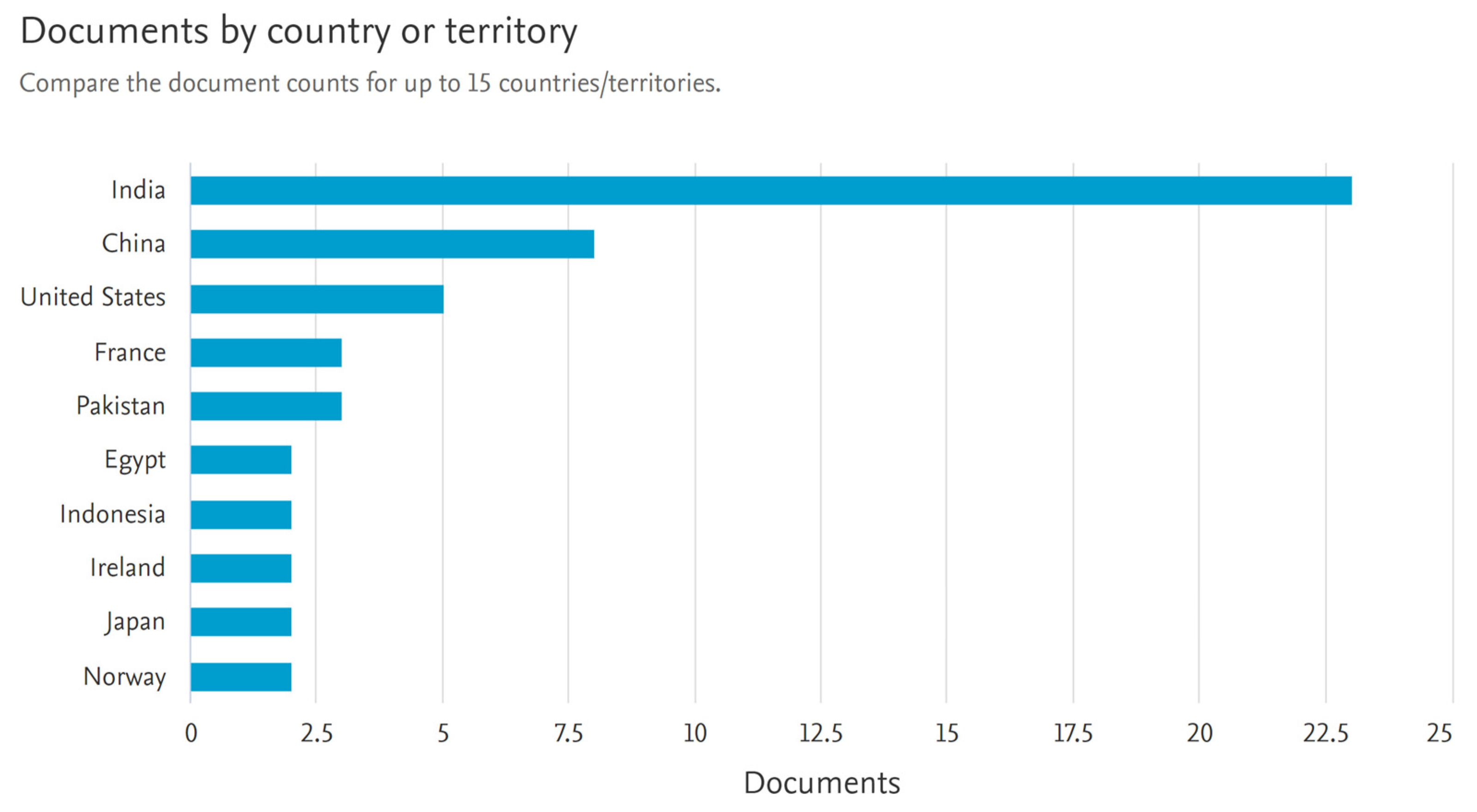

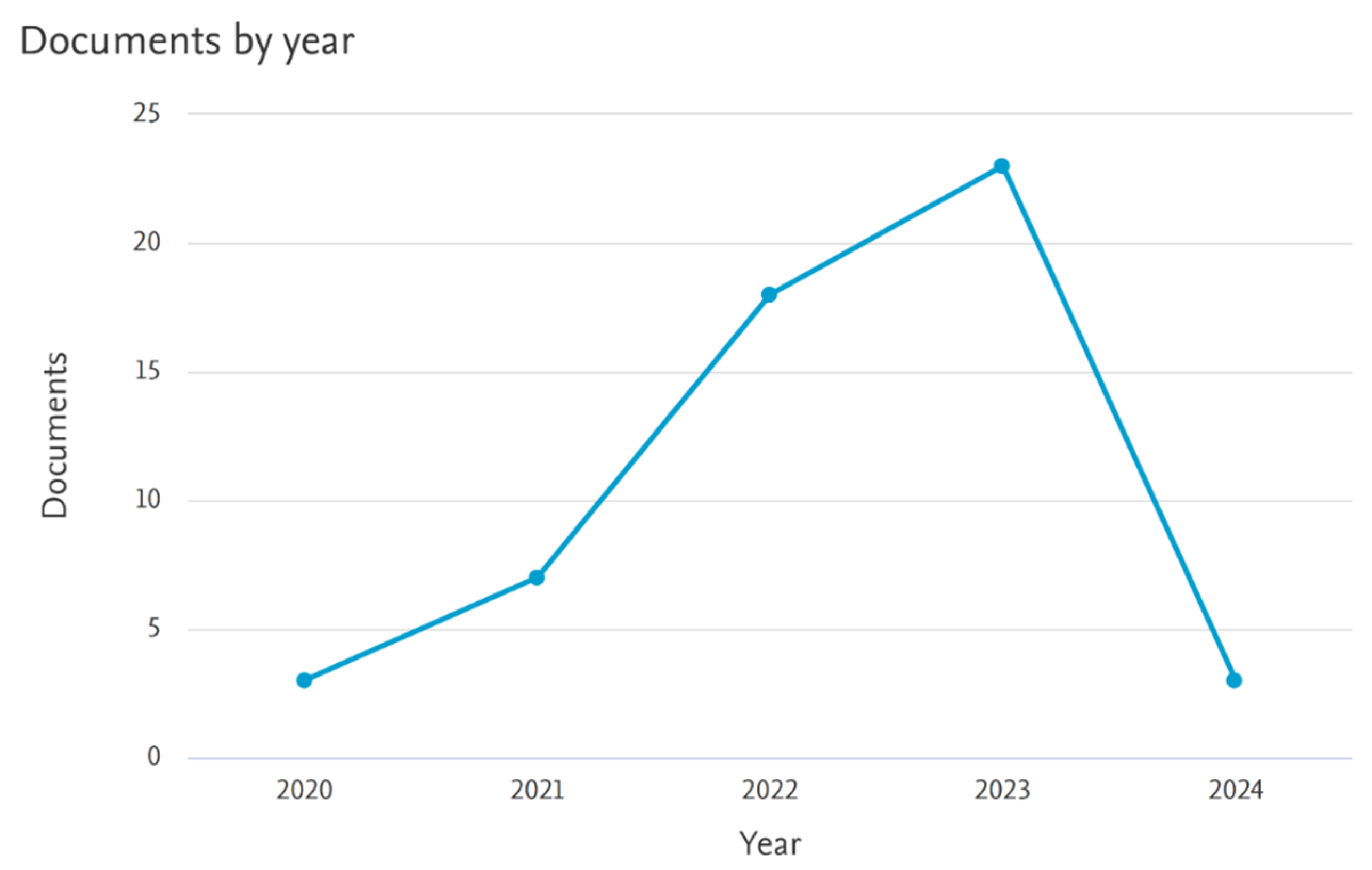

2. Materials and Methods

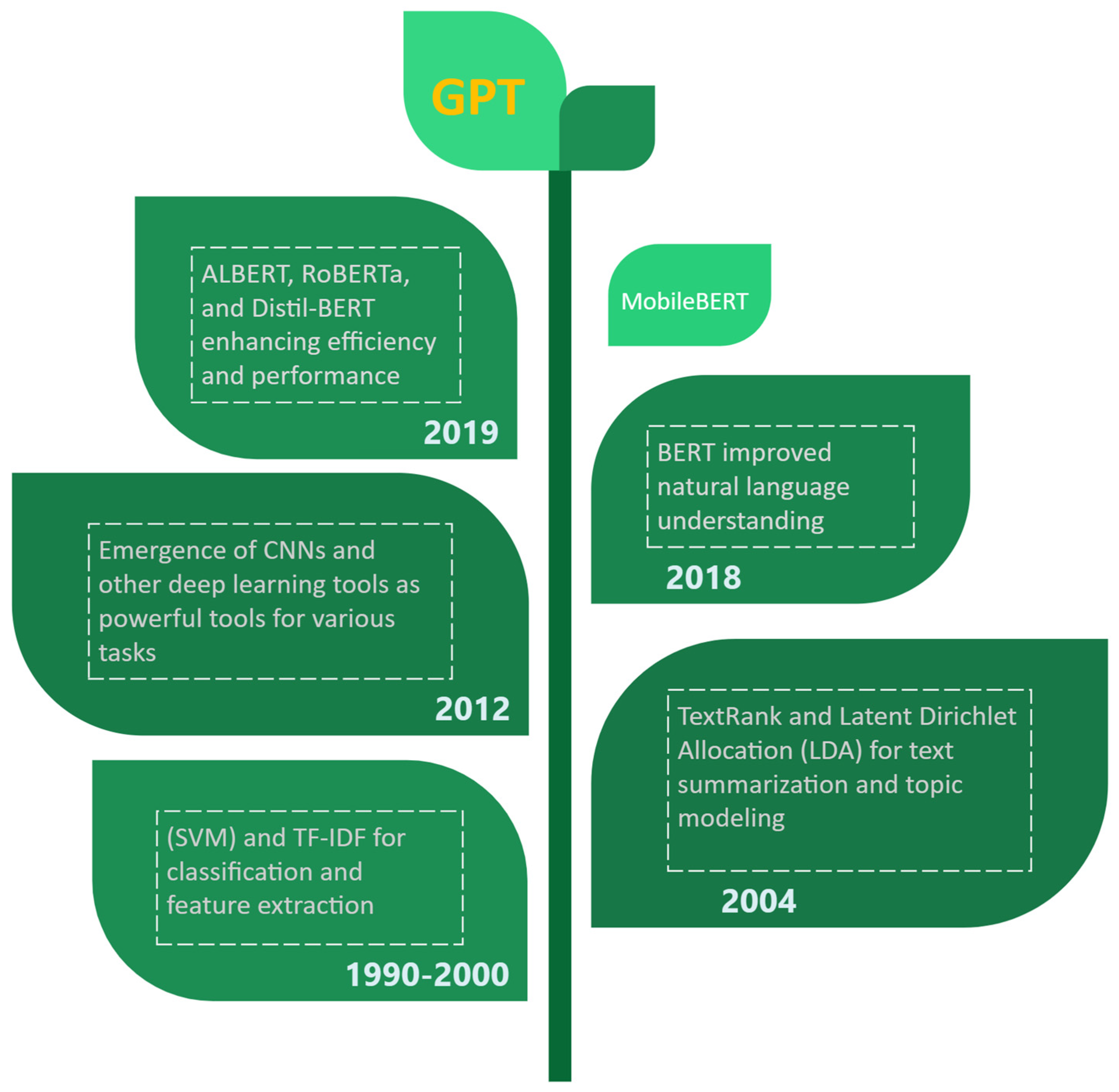

2.1. Algorithms in Social-Media-Based Disaster Analytics

2.2. Social-Media-Based Disaster Analytics

2.3. Systematic Literature Review

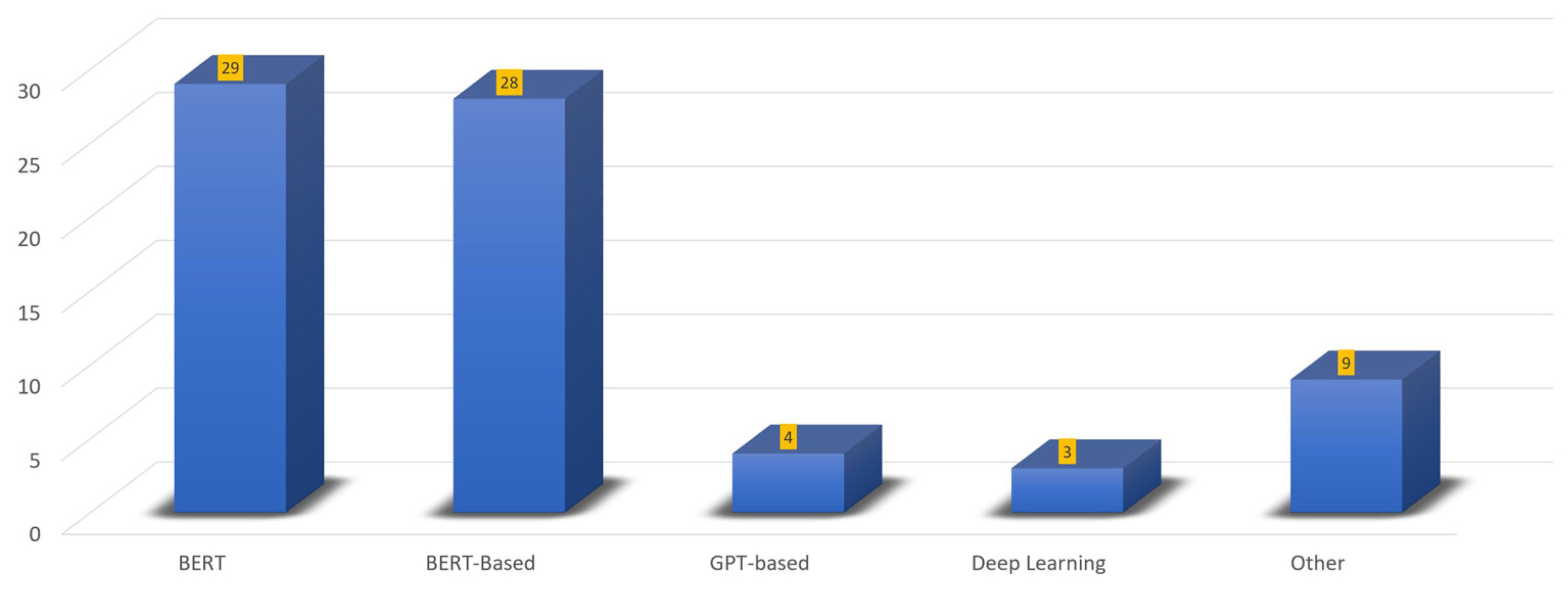

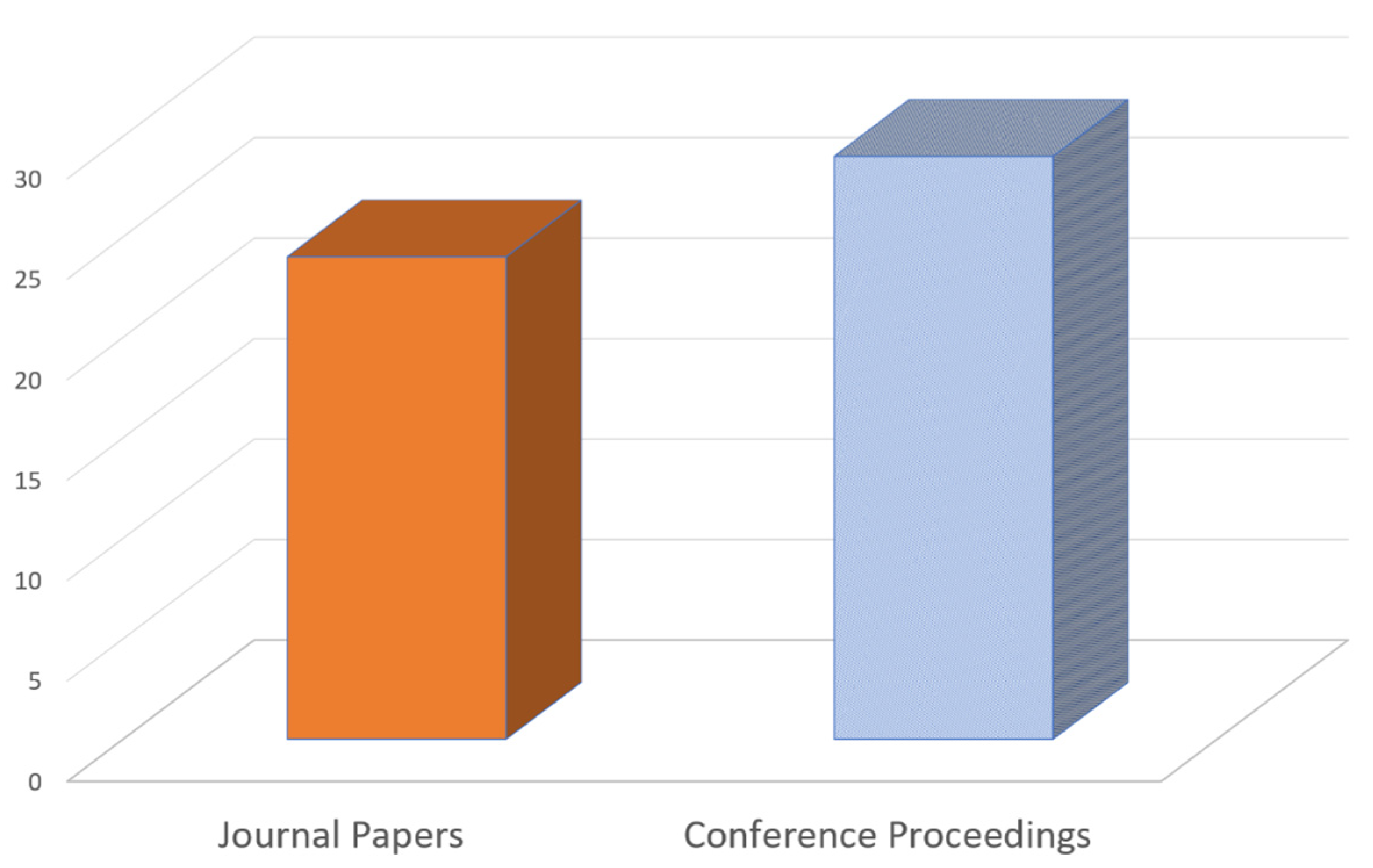

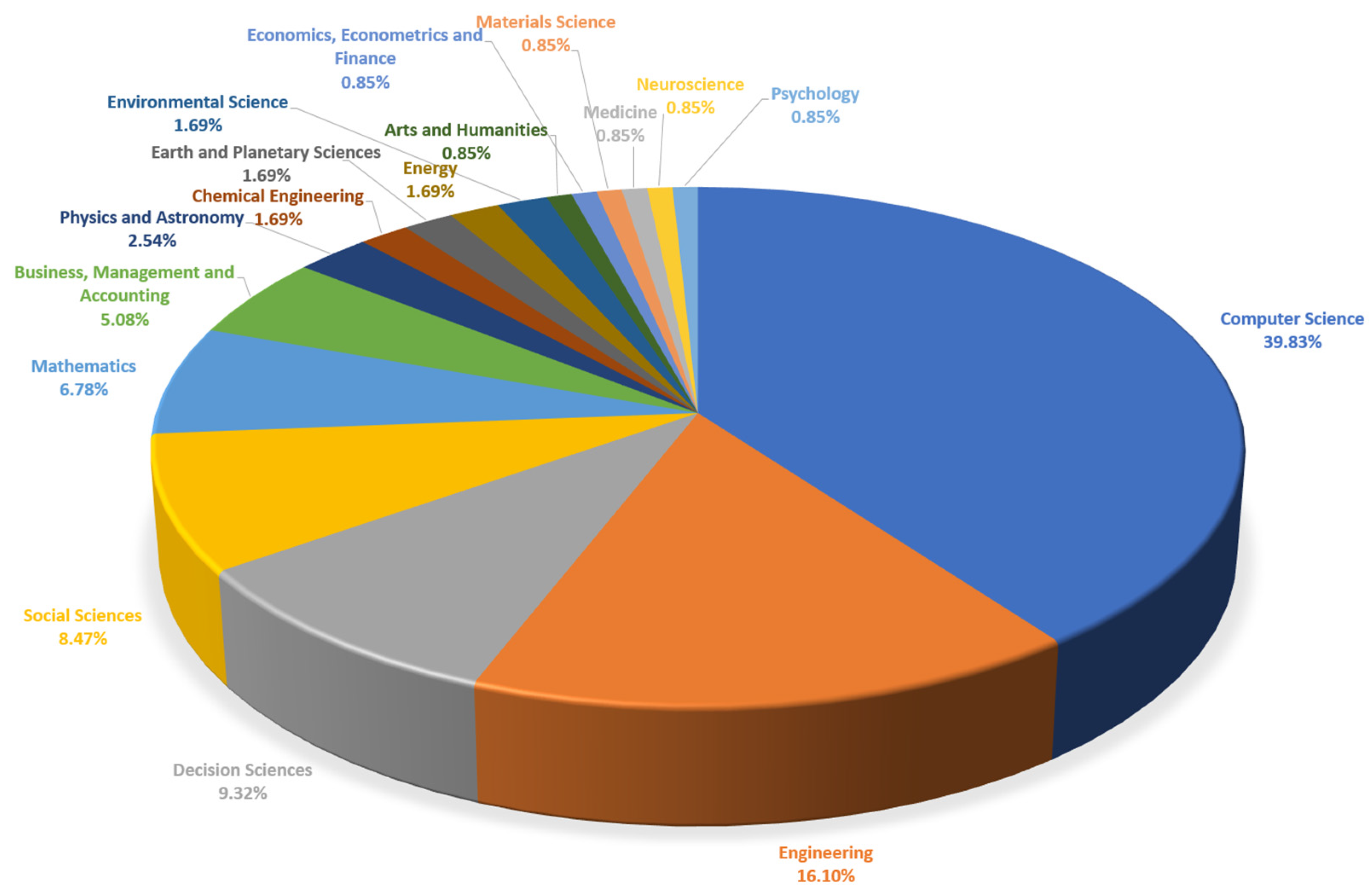

3. Results

3.1. Disaster Event Detection and Classification

| Algorithm Category | References | Generic Advantages | Generic Disadvantages |
|---|---|---|---|
| BERT | [18,20,21,22,27,28,30,31,33,34,35,36,37,40] | High accuracy in classifying tweets related to specific disasters. | Stemming may remove important features; broad search terms introduce noise; reliance on keyword position; difficulty with imbalanced data and informal social media text. |
| BERT-based (e.g., RoBERTa, DistilBERT) | [23,24,25,29,32,38,39] | Superior performance in various tasks, including textual and visual analysis. | Substantial computational and memory requirements, potential hardware limitations, complexity in integrating multimodal data, and challenges with real-time processing and scalability. |
| GPT-based | [26] | Enhanced sentiment analysis performance on large-scale datasets, including text, images, and audio. | Complexity in implementation and optimization. |
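Several of the studies above list informal social media text as a weakness of BERT pipelines. The function below is a minimal sketch of tweet normalization applied before tokenization, assuming simple regular-expression rules. It replaces user mentions and URLs with the placeholder tokens `@USER` and `HTTPURL`, which is the convention adopted by BERTweet, the model used in [28]. It also strips the `#` from hashtags so that the hashtag word itself remains. The function name and the rules are illustrative and are not taken from any reviewed study.

```python
import re

# Illustrative patterns (assumptions): @mentions, http(s) links, and #hashtags.
MENTION_RE = re.compile(r"@\w+")
URL_RE = re.compile(r"https?://\S+")
HASHTAG_RE = re.compile(r"#(\w+)")
SPACE_RE = re.compile(r"\s+")


def normalize_tweet(text: str) -> str:
    """Replace mentions/URLs with placeholder tokens and unwrap hashtags."""
    text = URL_RE.sub("HTTPURL", text)    # links first, so '@' inside a URL is not treated as a mention
    text = MENTION_RE.sub("@USER", text)  # any @handle becomes one shared token
    text = HASHTAG_RE.sub(r"\1", text)    # keep the hashtag word, drop the '#'
    return SPACE_RE.sub(" ", text).strip()
```

For example, `normalize_tweet("@cityalerts Flooding on Main St #HurricaneHarvey https://t.co/abc123")` yields `"@USER Flooding on Main St HurricaneHarvey HTTPURL"`. Emojis, elongated words, and non-standard spellings would need additional rules.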

3.2. Sentiment Analysis and Public Perception

| Algorithm Category | References | Generic Advantage | Generic Disadvantage |
|---|---|---|---|
| Deep Learning | [19,41,44] | High accuracy in detecting beliefs, opinions, and emotions. | Difficulty in collecting and labeling diverse data due to variations in human dialect and speech; limited applicability without extensive preprocessing and NLP expertise. |
| BERT | [43] | Accurate analysis of public sentiment; effective in topic modeling and sentiment analysis. | Focuses on sentiment analysis rather than direct disaster response strategies; potential challenges include processing vast datasets and identifying nuanced sentiment accurately. |
| BERT-based (e.g., RoBERTa; Word2Vec as a baseline) | [42,45] | High accuracy in classifying sentiments and emotions, improving disaster response. | Word2Vec performed worse than BERT, indicating that fixed embeddings may not capture contextual nuances effectively; limited availability of specific dataset details. |
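Several sentiment and emotion studies in this review report a macro-averaged F1-score (for example, 85.20% in [19]). Macro-F1 computes F1 separately for each class and then takes the unweighted mean, so rare emotions count as much as frequent ones. The following is a minimal standard-library sketch, assuming that gold and predicted labels are given as parallel lists.

```python
from collections import Counter
from typing import List


def macro_f1(gold: List[str], pred: List[str]) -> float:
    """Unweighted mean of per-class F1 over every label seen in gold or pred."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted class p was wrong
            fn[g] += 1  # true class g was missed
    labels = set(gold) | set(pred)
    if not labels:
        return 0.0
    scores = []
    for label in labels:
        p_den, r_den = tp[label] + fp[label], tp[label] + fn[label]
        precision = tp[label] / p_den if p_den else 0.0
        recall = tp[label] / r_den if r_den else 0.0
        total = precision + recall
        scores.append(2 * precision * recall / total if total else 0.0)
    return sum(scores) / len(scores)
```

For gold labels `["fear", "fear", "joy", "anger"]` and predictions `["fear", "joy", "joy", "anger"]`, the per-class F1 values are 2/3, 2/3, and 1, which gives a macro-F1 of 7/9.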

3.3. Information Summarization and Retrieval

| Algorithm Category | References | Generic Advantage | Generic Disadvantage |
|---|---|---|---|
| BERT | [28,33,34,48] | Effective summarization and classification of disaster-related information from social media. | Difficulty verifying the authenticity of user-generated content; potential limitations in adapting to new or unforeseen disaster types; complexity in integrating multiple algorithms and in scaling. |
| Generative (e.g., GPT-3, T5) | [46,47] | High comprehensiveness in summarizing crisis-relevant information from social media and online news; rapid deployment due to few-shot learning. | High redundancy ratio in generated summaries, complexity in handling multilingual data, and potential for reduced accuracy in cross-lingual information retrieval and summarization. |
| Other (e.g., SVM, TF-IDF, TextRank, LDA) | [33,48] | Effective summarization and topic classification in specific disaster-related contexts. | Challenges in scalability, real-time processing, and handling multilingual data; limited capability in areas with little Twitter activity and reliance on geotagged tweets. |
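TF-IDF, one of the traditional methods that [33] combines with BERT, weights a term by its frequency in a document times the logarithm of the inverse fraction of documents that contain it. The sketch below assumes whitespace tokenization and the unsmoothed variant idf(t) = log(N / df(t)). Libraries such as scikit-learn use smoothed variants by default, so their absolute weights differ.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_idf(docs: List[str]) -> List[Dict[str, float]]:
    """Return one {term: weight} dict per document: raw term count * log(N / df)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents containing each term at least once.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)
        weights.append({term: count * math.log(n_docs / df[term]) for term, count in tf.items()})
    return weights
```

Terms that appear in every document receive a weight of 0, which is why TF-IDF suppresses generic words such as "flood" when every microblog in a flood corpus contains them.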

3.4. Location Identification and Description Extraction

| Algorithm Category | References | Generic Advantage | Generic Disadvantage |
|---|---|---|---|
| BERT | [49,51] | High accuracy in location identification, crisis tweet classification, and extraction of location descriptions from social media messages. | Few examples of direct disaster management application; difficulty handling diverse data quality. |
| GPT-based | [50] | Significantly more accurate extraction of location descriptions from social media messages by leveraging GPT models. | Effectiveness depends on the availability and quality of geo-knowledge about common forms of location descriptions. |
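[50] fuses geo-knowledge with GPT models by supplying a small set of examples that encode common forms of location descriptions. The sketch below only assembles such a few-shot prompt as plain text. The instruction wording, the example posts, and the name `build_location_prompt` are hypothetical. Sending the prompt to a model is left out deliberately, because that step depends on the chosen API.

```python
from typing import Sequence, Tuple

# Hypothetical geo-knowledge examples: (post, expected location descriptions).
EXAMPLES: Sequence[Tuple[str, str]] = (
    ("Water up to our knees at 5th Ave and Pine St, need help", "5th Ave and Pine St"),
    ("Family trapped on roof near Lakeview Elementary School", "Lakeview Elementary School"),
)


def build_location_prompt(post: str, examples: Sequence[Tuple[str, str]] = EXAMPLES) -> str:
    """Combine an instruction, worked examples, and the target post into one prompt."""
    lines = [
        "Extract every location description (street, intersection, landmark, area) from the post.",
        "",
    ]
    for example_post, locations in examples:
        lines.append(f"Post: {example_post}")
        lines.append(f"Locations: {locations}")
        lines.append("")
    lines.append(f"Post: {post}")
    lines.append("Locations:")  # the model is expected to complete this line
    return "\n".join(lines)
```

The examples carry the geo-knowledge. Swapping in region-specific patterns (for example, local landmark naming conventions) changes the model's behavior without any retraining, which is also why [50] notes that effectiveness depends on the quality of that knowledge.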

3.5. Tweet Prioritization and Useful Information Extraction

| Algorithm Category | References | Generic Advantage | Generic Disadvantage |
|---|---|---|---|
| BERT-based | [29,52,53,54,55] | High accuracy and performance in various disaster-related tasks. | Model complexity, computational cost, reliance on extensive training datasets, and potential difficulty handling large volumes of social media data and informal text. |
| BERT | [29,31,56,57] | Effective in tweet classification, semantic similarity, and multi-label text classification. | May require integration of methods to assess tweet credibility; challenges with imbalanced data and informal social media text. |
| Other (e.g., Deep Learning) | [54,58] | High accuracy and precision in predicting disaster-related events and identifying informative tweets. | Complexity of model implementation, reliance on large training datasets, and computational overhead. |
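[29] classifies and prioritizes disaster-related tweets jointly with a transformer-based multi-task learning model. A common way to write such an objective is a weighted sum of per-task losses over shared encoder parameters $\theta_{\mathrm{enc}}$. This formulation is assumed here for illustration and is not taken from [29]:

```latex
\mathcal{L}(\theta_{\mathrm{enc}}, \theta_{\mathrm{type}}, \theta_{\mathrm{prio}})
  = \lambda_{\mathrm{type}}\,\mathcal{L}_{\mathrm{type}}(\theta_{\mathrm{enc}}, \theta_{\mathrm{type}})
  + \lambda_{\mathrm{prio}}\,\mathcal{L}_{\mathrm{prio}}(\theta_{\mathrm{enc}}, \theta_{\mathrm{prio}})
```

Here $\mathcal{L}_{\mathrm{type}}$ and $\mathcal{L}_{\mathrm{prio}}$ are the losses of the two task-specific heads. One option is binary cross-entropy over multi-label information types combined with cross-entropy over priority levels. The weights $\lambda_{\mathrm{type}}, \lambda_{\mathrm{prio}} \ge 0$ are hyperparameters. Both terms depend on $\theta_{\mathrm{enc}}$, so gradients from both tasks update the shared encoder, and this is how each task can inform the other.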

3.6. Multimodal Data Analysis

| Algorithm Category | References | Generic Advantage | Generic Disadvantage |
|---|---|---|---|
| BERT-based | [45,59,60,61,62,63] | High accuracy and performance in disaster-related tasks, including flood detection and disaster management. | Complexity of integrating and optimizing multimodal data inputs, potential limitations in single-modality analyses, challenges with feature extraction generalizability. |
| Other (e.g., Multimodal, LDA) | [59,60,63] | Effective fusion of textual and visual data, leading to more accurate informative tweet classification. | Complexity of the multimodal analysis process, reliance on extensive data preprocessing and manual labeling for accurate model training. |
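The multimodal studies fuse textual and visual evidence. Several of them use learned fusion, such as the multimodal bitransformer in [59,60]. Weighted late fusion is a much simpler alternative and is shown here only to illustrate the idea. Each modality's classifier outputs class probabilities, and the fused score is their weighted average. The `text_weight` parameter and the class names are assumptions.

```python
from typing import Dict


def late_fusion(text_probs: Dict[str, float], image_probs: Dict[str, float],
                text_weight: float = 0.5) -> Dict[str, float]:
    """Weighted average of per-class probabilities from a text and an image classifier."""
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must lie in [0, 1]")
    if text_probs.keys() != image_probs.keys():
        raise ValueError("both classifiers must score the same classes")
    image_weight = 1.0 - text_weight
    return {c: text_weight * text_probs[c] + image_weight * image_probs[c] for c in text_probs}
```

Usage: `late_fusion({"informative": 0.6, "not_informative": 0.4}, {"informative": 0.9, "not_informative": 0.1})` gives 0.75 for `"informative"`. A confident image can therefore reinforce a hesitant text prediction. Learned fusion layers go further and can model interactions between specific words and image regions, which a fixed weighted average cannot capture.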

3.7. Multilingual and Cross-Lingual Disaster Analysis

| Algorithm Category | References | Generic Advantage | Generic Disadvantage |
|---|---|---|---|
| BERT-based | [55,64] | Top performance in tweet prioritization and above-median performance in information type classification using pre-trained language models. | Not specified; integrating GNNs with transformer models may introduce computational overhead. |
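[64] pairs a RoBERTa-based encoder with a bag-of-words representation for priority classification. The sketch below shows only the bag-of-words step: each tweet becomes a vector of term counts over a fixed vocabulary, and word order is discarded. The vocabulary is hypothetical; in practice it would be built from training tweets. The `\w+` pattern is Unicode-aware in Python 3, so the sketch also splits words in non-English text, although languages written without spaces need a proper tokenizer.

```python
import re
from collections import Counter
from typing import List, Sequence

# Hypothetical vocabulary; in practice built from the training tweets.
VOCAB: Sequence[str] = ("trapped", "help", "injured", "water", "power", "prayers")


def bag_of_words(text: str, vocab: Sequence[str] = VOCAB) -> List[int]:
    """Count each vocabulary term in the lowercased text; word order is discarded."""
    counts = Counter(re.findall(r"\w+", text.lower()))
    return [counts[term] for term in vocab]
```

For example, `bag_of_words("Help! Help, we are trapped; water rising")` returns `[1, 2, 0, 1, 0, 0]`, a fixed-length vector that a simple priority classifier can consume alongside transformer features.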

3.8. Emotion and Sentiment Identification

| Ref. | Area of Disaster | Algorithm Used | GPT/Transformer Technology | Benefit | Disadvantage |
|---|---|---|---|---|---|
| [19] | Emotion Identification During COVID-19 | Average Voting Ensemble Deep Learning model (AVEDL) incorporating BERT, DistilBERT, and RoBERTa | BERT, DistilBERT, RoBERTa | Achieved high accuracy (86.46%) and macro-average F1-score (85.20%) in classifying emotions from COVID-19-related social media posts and emergency response calls, demonstrating effective emotion analysis under pandemic conditions | The model’s performance depends on the quality and size of the dataset, and its application is limited without extensive preprocessing and an understanding of NLP concepts for accurate emotion extraction |
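The AVEDL model in [19] combines BERT, DistilBERT, and RoBERTa through average voting. The sketch below assumes soft voting, which may differ in detail from [19]: each model's class probabilities are averaged and the most probable class is returned. Ties go to the class listed first.

```python
from typing import Dict, List


def average_vote(model_probs: List[Dict[str, float]]) -> str:
    """Average class probabilities across models and return the top class."""
    if not model_probs:
        raise ValueError("need at least one model's probabilities")
    classes = model_probs[0].keys()
    averaged = {c: sum(p[c] for p in model_probs) / len(model_probs) for c in classes}
    return max(averaged, key=averaged.get)
```

Consider three illustrative outputs: BERT `{"fear": 0.5, "joy": 0.2, "sadness": 0.3}`, DistilBERT `{"fear": 0.2, "joy": 0.1, "sadness": 0.7}`, and RoBERTa `{"fear": 0.4, "joy": 0.1, "sadness": 0.5}`. The ensemble returns `"sadness"` (mean 0.5) even though BERT alone would predict `"fear"`. Averaging in this way smooths out the errors of any single model.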

3.9. Performance Evaluation and Comparison of Models

| Algorithm Category | References | Generic Advantage | Generic Disadvantage |
|---|---|---|---|
| BERT | [30,40,68] | Improved accuracy in disaster prediction on Twitter by incorporating keyword position information into the BERT model; highest accuracy in classifying disaster-related tweets; high accuracy and low memory usage for flood prediction using Twitter data | Heavy reliance on keyword position, which may not always reflect the context or importance of a tweet; the added complexity of the BERT architecture may lead to overfitting and requires careful tuning |
| BERT-based | [32,65,66] | Precision of 0.81, recall of 0.76, and F-score of 0.78 for BERT in providing appropriate guidelines; high accuracy in multilingual tweet classification for disaster response; high accuracy and efficiency in detecting and classifying crisis-related events on social media, leveraging transformer technology and optimized feature selection | Limited test data representing diverse crisis scenarios and difficulty handling massive datasets due to token limitations; possible data sparsity and language-specific challenges (not explicitly mentioned); computational demands of processing large-scale social media data in real time and of adapting to diverse, evolving crisis scenarios |
| Other | [67] | High precision (96.19%), recall (98.33%), and accuracy (97.26%) in generating knowledge graphs from disaster tweets, using a metadata-driven approach and diverse knowledge sources for enriched auxiliary knowledge | Complexity in integrating and processing multiple data sources and algorithms, requiring extensive computational resources and expertise in machine learning and natural language processing |
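The F-score reported for [65] is the harmonic mean of precision and recall, F = 2PR / (P + R). Substituting the reported P = 0.81 and R = 0.76 reproduces the stated 0.78. The same consistency check applies to any row that reports all three metrics.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Reported values for [65]: P = 0.81, R = 0.76, F = 0.78.
print(round(f1_score(0.81, 0.76), 2))  # → 0.78
```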

3.10. Practical Applications and System Development

| Algorithm Category | References | Generic Advantage | Generic Disadvantage |
|---|---|---|---|
| BERT | [68] | High accuracy and low memory usage for flood prediction using Twitter data | Not explicitly mentioned; complexity and potential overfitting can be inferred |
| BERT-based | [45,69] | Effective in disaster detection and flood event detection, improving decision making | Limited exploration of algorithm efficiency and limited dataset availability |
| Other | [70] | Real-time support, high accuracy, and low memory usage for flood prediction using Twitter data | Data specificity and model complexity; potential overfitting (inferred) |
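Several practical systems emphasize real-time flood prediction from Twitter [68,70]. The class below is a hypothetical sketch, not the design of any cited system, showing one simple way to turn per-tweet classifier outputs into an operational signal. It counts flood-labeled tweets in a sliding time window and raises an alert when the count reaches a threshold. The class name, `window_seconds`, and `threshold` are illustrative. Timestamps are assumed to arrive in non-decreasing order.

```python
from collections import deque
from typing import Deque


class FloodAlertWindow:
    """Count flood-labeled tweets in the window (t - window_seconds, t] (hypothetical helper)."""

    def __init__(self, window_seconds: float, threshold: int) -> None:
        self.window_seconds = window_seconds
        self.threshold = threshold
        self._timestamps: Deque[float] = deque()

    def add(self, timestamp: float, is_flood: bool) -> bool:
        """Record one classified tweet; return True if the alert threshold is met."""
        if is_flood:
            self._timestamps.append(timestamp)
        # Evict flood tweets that are older than the window ending at `timestamp`.
        while self._timestamps and self._timestamps[0] <= timestamp - self.window_seconds:
            self._timestamps.popleft()
        return len(self._timestamps) >= self.threshold
```

With `FloodAlertWindow(60, 3)`, flood tweets at 0 s, 10 s, and 30 s trigger an alert at 30 s. A later flood tweet at 100 s does not, because the earlier three have left the 60-second window.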

4. Discussion

4.1. Critical Analysis and Interpretation

4.2. Limitations of Social-Media-Based Disaster Analytics Using Transformer

4.3. Addressing the Challenges and Future Research Avenues

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Ref. | Area of Disaster | Algorithm Used | GPT/Transformer Technology | Benefit | Disadvantage |
|---|---|---|---|---|---|
| [18] | Flood (DKI Jakarta, Indonesia) | BERT-MLP | BERT | High accuracy (82%) in classifying tweets related to flood events using geospatial data. | Stemming may remove important features, affecting accuracy. |
| [20] | Wildfires in the Western United States (2020) | BERTopic, NER | BERT | Real-time estimation of wildfire situations through social media analysis for decision support. | Potential noise from broad search terms, inaccuracies in user location data, and the single-topic-per-document assumption. |
| [21] | Twitter-Based Disaster Prediction | Improved BERT model, LSTM, GRU | BERT | Superior accuracy in disaster prediction on Twitter by analyzing patterns associated with various types of disasters; outperforms traditional models such as LSTM and GRU in predicting disaster-related tweets. | Not specified. |
| [22] | Epidemics, Social Unrest, and Disasters | Enhanced BERT model, GloVe for feature extraction, LSTM for classification | BERT | Superior accuracy, precision, recall, and F1-score, capturing tweet semantics to accurately identify trend-related tweets. | Not specified. |
| [23] | Detection and Classification of Natural Disasters from Social Media | FNN, CNN, BLSTM, BERT, DistilBERT, ALBERT, RoBERTa, DeBERTa | BERT and its variants | Demonstrated the effectiveness of deep learning methods in accurately detecting and classifying disaster-related information from tweets, with preprocessing and bias mitigation enhancing performance. | Model complexity and the need for extensive preprocessing and bias mitigation to handle diverse, noisy social media data. |
| [24] | Various Natural Disasters (Wildfires, Hurricanes, Earthquakes, Floods) | RoBERTa for text analysis, Vision Transformer for image understanding, Bi-LSTM for sequence processing, and an attention mechanism for context awareness | RoBERTa, Vision Transformer | Superior performance with accuracy from 94% to 98%; effective combination of textual and visual inputs through multimodal fusion. | Requires substantial computational and memory resources; potential hardware limitations. |
| [25] | Various Natural Disasters (Earthquakes, Floods, Hurricanes, Fires) | DenseNet, BERT | BERT for textual features, DenseNet for image features | Achieved 85.33% accuracy in classifying social media data into useful and non-useful categories for disaster response, outperforming state-of-the-art techniques. | Not specified; potential issues include the complexity of integrating multimodal data and the need for substantial computational resources. |
| [26] | COVID-19 Pandemic Sentiment Analysis | RETN model, BERT-GRU, BERT-biLSTM, nature-inspired optimization techniques | GPT-2, GPT-3 | Enhanced performance in sentiment analysis on large-scale datasets, including text, images, and audio. | Complexity in implementation and optimization. |
| [27] | Cyclone-Related Tweets | BERT, machine learning, and deep learning classifiers | BERT | BERT achieves better results than other ML and DL models, even on small labeled datasets. | Tweets often contain ambiguity and informal language that are hard for models to interpret. |
| [28] | Various (e.g., Earthquakes, Typhoons) | RACLC for classification, RACLTS for summarization | BERTweet (a variant of BERT for Twitter data) | High performance in disaster tweet classification and summarization; provides interpretability through rationale extraction, enhancing trust in model decisions. | Potential limitations include the need for extensive training data and the challenge of adapting to new or unforeseen disaster types. |
| [29] | Various (e.g., Shootings, Hurricanes, Floods) | Transformer-based multi-task learning (MTL) | BERT, DistilBERT, ALBERT, ELECTRA | Superior performance in classifying and prioritizing disaster-related tweets using a multi-task learning approach; handles large volumes of data during crises effectively. | Challenges include the high variability of disaster-related data and the computational demands of processing large datasets in real time. |
| [30] | Disaster Detection on Twitter | BERT (Bidirectional Encoder Representations from Transformers) with keyword position information | BERT | Improved accuracy in disaster prediction on Twitter by incorporating keyword position information into the BERT model. | Relies heavily on keyword position, which may not always reflect the context or importance of a tweet. |
| [31] | General Disaster Management | Various BERT-based models (default BERT, BERT + NL, BERT + LSTM, BERT + CNN) | BERT | Effective in classifying disaster-related tweets by using balanced datasets and preprocessing techniques. | Challenges with imbalanced data and informal social media text. |
| [32] | Various Crises and Emergencies Detected via Social Media | MobileBERT for feature extraction, SSA improved with MRFO for feature selection | MobileBERT | High accuracy and efficiency in detecting and classifying crisis-related events on social media, leveraging transformer technology and optimized feature selection. | Challenges include the computational demands of processing and analyzing large-scale social media data in real time and of adapting to diverse, evolving crisis scenarios. |
| [33] | Flood-Related Volunteered Geographic Information (VGI) | BERT with TF-IDF, TextRank, MMR, LDA | BERT | An ensemble approach combining BERT with traditional NLP methods for more accurate topic classification of flood-related microblogs. | Complexity in integrating multiple algorithms; potential challenges in scalability and real-time processing. |
| [34] | Electricity Infrastructure | BERT | BERT for text classification | Capable of sensing the temporal evolution and geographic differences of electricity infrastructure conditions through social media analysis. | Limited capability in areas with little Twitter activity; reliance on geotagged tweets, which are a small portion of all tweets. |
| [35] | Transportation Disaster Detection and Classification in Nigeria | BERT with AdamW optimizer | BERT | Improved accuracy (82%) in identifying and classifying transportation disaster tweets, outperforming existing algorithms. | Relies on named entity recognition (NER) for location identification, which may fail if users do not state their location accurately. |
| [36] | Disaster Prediction from Tweets | GloVe embeddings for word representation, BERT for classification | BERT | Achieved 87% accuracy in classifying disaster-related tweets, showing BERT’s superiority over traditional models such as LSTM, random forest, decision trees, and naive Bayes. | Requires significant preprocessing and an understanding of NLP concepts to implement effectively. |
| [37] | Natural Disaster Tweet Classification | CNN with BERT embedding | BERT | Achieved high accuracy (97.16%), precision (97.63%), recall (96.64%), and F1-score (97.13%) in classifying natural disaster tweets. | Requires complex preprocessing and may overfit after a certain number of epochs, calling for careful training and validation. |
| [38] | Analysis and Classification of Disaster Tweets from a Metaphorical Perspective | BERT, RoBERTa, DistilBERT | BERT, RoBERTa, DistilBERT | Improved performance in classifying disaster-related tweets, including those with metaphorical contexts, highlighting the models’ ability to capture metaphorical text representations effectively. | The study did not address computational efficiency or potential limitations in processing metaphorical content across diverse disaster types and languages. |
| [39] | Various (e.g., Earthquakes, Floods, Shootings) | Transformer-based model with a multi-task learning approach, including a fine-tuned RoBERTa-based encoder and transformer layers as a task adapter | RoBERTa | Significant improvements in classifying and prioritizing tweets in emergencies by leveraging entities, event descriptions, and hashtags; benefits from the adaptability of transformers to noisy social media data. | The model’s complexity requires substantial computational resources for training and fine-tuning; its effectiveness depends on the quality and representativeness of the input data, including hashtag preprocessing and augmentation with event metadata. |
| [40] | Various (e.g., Earthquakes, Floods) | BERT, GRU, LSTM | BERT | BERT achieved the highest accuracy (96.2%) in classifying disaster-related tweets, indicating its effectiveness in understanding and categorizing disaster information from social media. | The added complexity of the BERT architecture may lead to overfitting and requires careful tuning. |
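[30] improves BERT-based disaster detection by adding keyword position information. This table does not describe how [30] encodes that position. As one hypothetical illustration, the function below finds the token index of the first disaster keyword and normalizes it to the range [0, 1]. The result is a scalar feature that could be concatenated with a tweet's BERT embedding. The keyword list and the normalization are assumptions.

```python
import re
from typing import Iterable, Optional

# Hypothetical keyword list.
DISASTER_KEYWORDS = frozenset({"earthquake", "flood", "wildfire", "hurricane", "tsunami"})


def keyword_position(text: str, keywords: Iterable[str] = DISASTER_KEYWORDS) -> Optional[float]:
    """Relative position (0 = first token, 1 = last) of the first disaster keyword, or None."""
    keyword_set = set(keywords)
    tokens = re.findall(r"\w+", text.lower())
    for index, token in enumerate(tokens):
        if token in keyword_set:
            return index / (len(tokens) - 1) if len(tokens) > 1 else 0.0
    return None  # no keyword present
```

For example, `keyword_position("Massive earthquake hits the coast")` returns 0.25. The table's caveat still applies: the position of a word such as "earthquake" says little about whether the tweet reports a real event or uses the word figuratively.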

| Ref. | Area of Disaster | Algorithm Used | GPT/Transformer Technology | Benefit | Disadvantage |
|---|---|---|---|---|---|
| [41] | General Natural Disasters | Transformer Network | Transformer | Accurate analysis of public sentiment towards natural disasters | Focuses on sentiment analysis rather than direct disaster response strategies |
| [42] | COVID-19 Vaccine Sentiment Analysis and Symptom Reporting from Tweets | BERT, Word2Vec | BERT | High accuracy in classifying sentiments towards COVID-19 vaccines and reports of symptoms, leveraging contextual embeddings for nuanced understanding | Word2Vec performed worse than BERT, indicating that fixed embeddings may not capture contextual nuances effectively |
| [43] | Climate Change | LDA, BERT | BERT for sentiment analysis | Effective in topic modeling and sentiment analysis with high precision (91.35%), recall (89.65%), and accuracy (93.50%) | Not explicitly mentioned; potential challenges include processing vast datasets and identifying nuanced sentiment accurately |
| [44] | Public Opinions on Climate Change on Twitter | Convolutional Neural Network (CNN) | BERT pre-trained model | High accuracy in detecting believers (98%) and deniers (90%) of climate change, useful for smart city governance | Difficulty in collecting and labeling diverse Twitter data due to variations in human dialect and speech |
| [19] | Emotion Identification During COVID-19 | Average Voting Ensemble Deep Learning model (AVEDL) incorporating BERT, DistilBERT, and RoBERTa | BERT, DistilBERT, RoBERTa | Achieved high accuracy (86.46%) and macro-average F1-score (85.20%) in classifying emotions from COVID-19-related social media posts and emergency response calls, demonstrating effective emotion analysis under pandemic conditions | The model’s performance depends on the quality and size of the dataset, and its application is limited without extensive preprocessing and an understanding of NLP concepts for accurate emotion extraction |
| [45] | Floods | RoBERTa, VADER, LSTM, CLIP | RoBERTa (Transformer and CLIP models) | Enhanced flood event detection through social media analysis, improving disaster response | Limited by the availability of specific dataset details |

| Ref. | Area of Disaster | Algorithm Used | GPT/Transformer Technology | Benefit | Disadvantage |
|---|---|---|---|---|---|
| [46] | General Crisis Situations | Cross-lingual method for retrieving and summarizing crisis-relevant information from social media, using multilingual transformer embeddings, with T5 for summarization | T5 for summarization | Effective summarization of crisis-relevant information across multiple languages, enhancing situational awareness | Complexity in handling multilingual data and potential for reduced accuracy in cross-lingual information retrieval and summarization |
| [47] | Crisis Event Social Media Summarization | NeuralSearchX for document retrieval, GPT-3 for summarization | GPT-3 | High comprehensiveness in generated summaries of emergency events from social media and online news; rapid deployment due to few-shot learning | High redundancy ratio in the generated summaries, indicating potential information repetition |
| [48] | Various Disasters | SVM, BART | BART for summarization | Effective summarization of disaster-related tweets; differentiates between authoritative and user-generated content | Difficulty verifying the authenticity of user-generated information |
| [28] | Various (e.g., Earthquakes, Typhoons) | RACLC for classification, RACLTS for summarization | BERTweet (a variant of BERT for Twitter data) | High performance in disaster tweet classification and summarization; provides interpretability through rationale extraction, enhancing trust in model decisions | Not explicitly mentioned; potential limitations include the need for extensive training data and the challenge of adapting to new or unforeseen disaster types |
| [33] | Flood-Related Volunteered Geographic Information (VGI) | BERT with TF-IDF, TextRank, MMR, LDA | BERT | An ensemble approach combining BERT with traditional NLP methods for more accurate topic classification of flood-related microblogs | Complexity in integrating multiple algorithms; potential challenges in scalability and real-time processing |
| [34] | Electricity Infrastructure | BERT | BERT for text classification | Capable of sensing the temporal evolution and geographic differences of electricity infrastructure conditions through social media analysis | Limited capability in areas with little Twitter activity; reliance on geotagged tweets, which are a small portion of all tweets |
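[33] combines BERT with TF-IDF, TextRank, MMR, and LDA. MMR (maximal marginal relevance) selects summary sentences greedily. At each step it picks the sentence that maximizes λ·sim(s, q) − (1 − λ)·max over already-selected s′ of sim(s, s′), trading relevance to a query q against redundancy with the summary so far. The sketch below assumes bag-of-words cosine similarity for sim and λ = 0.7. A BERT-based pipeline would more likely compare sentence embeddings.

```python
import math
import re
from collections import Counter
from typing import List


def _bag(text: str) -> Counter:
    """Lowercased word counts."""
    return Counter(re.findall(r"\w+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def mmr_select(sentences: List[str], query: str, k: int, lam: float = 0.7) -> List[str]:
    """Greedily select up to k sentences, trading query relevance against redundancy."""
    vectors = [_bag(s) for s in sentences]
    query_vec = _bag(query)
    selected: List[int] = []
    candidates = list(range(len(sentences)))

    def mmr_score(i: int) -> float:
        relevance = _cosine(vectors[i], query_vec)
        # Redundancy: highest similarity to any sentence already in the summary.
        redundancy = max((_cosine(vectors[i], vectors[j]) for j in selected), default=0.0)
        return lam * relevance - (1 - lam) * redundancy

    while candidates and len(selected) < k:
        best = max(candidates, key=mmr_score)
        selected.append(best)
        candidates.remove(best)
    return [sentences[i] for i in selected]
```

Take the query "flood road shelter" and k = 2. Given a near-duplicate pair of road-closure sentences plus one shelter sentence, MMR keeps one road-closure sentence and then picks the shelter sentence instead of the duplicate. Suppressing duplicates in this way is what addresses the redundancy problem noted for generated summaries in [47].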

| Ref. | Area of Disaster | Algorithm Used | GPT/Transformer Technology | Benefit | Disadvantage |
|---|---|---|---|---|---|
| [49] | Location Identification from Textual Data | BERT-BiLSTM-CRF | BERT | High accuracy in recognizing toponyms, enhancing location identification in disaster communications | Focuses on technical aspects of toponym recognition without examples of direct disaster management application |
| [50] | Extraction of Location from Disaster-Related Social Media Posts | Geo-knowledge-guided approach fusing geo-knowledge with GPT models | GPT models such as ChatGPT and GPT-4 | Improves the accuracy of extracting location descriptions from social media messages by over 40% compared to NER approaches, requiring only a small set of examples encoding geo-knowledge | Effectiveness depends on the availability and quality of geo-knowledge about common forms of location descriptions, which may vary by region and disaster type |
| [51] | Crisis Communication | BERT, CNN, MLP, LSTM, Bi-LSTM | BERT | BERT outperforms traditional and other deep learning models in crisis tweet classification | Not explicitly mentioned; potential challenges include handling diverse data quality and the dynamic nature of social media language |
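Sequence-labeling toponym recognizers such as the BERT-BiLSTM-CRF in [49] output one tag per token. Turning those tags into location strings is a small post-processing step. The sketch assumes the common BIO scheme with a `LOC` type: `B-LOC` begins a toponym, `I-LOC` continues it, and `O` marks tokens outside any toponym. The tag set used in [49] may differ.

```python
from typing import List


def bio_to_spans(tokens: List[str], tags: List[str], entity: str = "LOC") -> List[str]:
    """Join tokens tagged B-<entity>/I-<entity> into entity strings."""
    if len(tokens) != len(tags):
        raise ValueError("tokens and tags must align one-to-one")
    spans: List[str] = []
    current: List[str] = []
    for token, tag in zip(tokens, tags):
        if tag == f"B-{entity}":
            if current:
                spans.append(" ".join(current))
            current = [token]
        elif tag == f"I-{entity}" and current:
            current.append(token)
        else:
            # O, another entity type, or an I- tag with no preceding B- closes any
            # open span; the token itself is not kept.
            if current:
                spans.append(" ".join(current))
            current = []
    if current:
        spans.append(" ".join(current))
    return spans
```

For the tokens `["Flooding", "near", "Kali", "Ciliwung", "and", "Jakarta"]` tagged `["O", "O", "B-LOC", "I-LOC", "O", "B-LOC"]`, the function returns `["Kali Ciliwung", "Jakarta"]`. These strings can then be passed to a geocoder.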

| Ref. | Area of Disaster | Algorithm Used | GPT/Transformer Technology | Benefit | Disadvantage |
|---|---|---|---|---|---|
| [52] | Earthquake Disasters | Feature-based, BLSTM-based, BERT-based, RoBERTa-based | BERT, BLSTM, RoBERTa | Provides methods to rate the usefulness of tweets containing behavioral facilitation information, with BERT achieving the best accuracy | Limited to tweets; may require integration of methods to assess tweet credibility |
| [53] | Harvesting Rescue Requests in Disaster Response from Social Media | BERT, GloVe, ELMo, RoBERTa, DistilBERT, ALBERT, XLNet, with classifiers such as CNN and LSTM | BERT and its variants | Significantly more accurate categorization of rescue-related tweets; the best model (a customized BERT-based model with a CNN classifier) achieved an F1-score of 0.919, outperforming the baseline model by 10.6% | Model complexity and computational cost, with large and diverse training datasets needed for high performance and stability |
| [54] | Flood Prediction from Twitter Data and Image Analysis | BMLP, SDAE, HHNN (hyperbolic Hopfield neural network), rule-based matching | BERT for text preprocessing | Achieved high accuracy (97%), precision (95%), recall (96%), and F1-score (96%) in predicting flood levels from Twitter data and images, addressing semantic information loss and improving classification accuracy | Complex model implementation and reliance on extensive training datasets; requires sophisticated preprocessing of text and image data |
| [55] | Disaster-Related Multilingual Text Classification | GNoM (graph neural network enhanced language models) | BERT, mBERT, XLM-RoBERTa | Outperforms state-of-the-art models in the disaster domain across monolingual, cross-lingual, and multilingual settings with improved F1-scores | Not explicitly mentioned; integrating GNNs with transformer models might introduce computational overhead |
| [56] | Uttarakhand Floods 2021 | BERT, k-means, USE (Universal Sentence Encoder) | BERT for tweet classification, USE for semantic similarity in clustering | Effective retrieval and prioritization of critical information from Twitter for emergency management, aiding timely disaster response | Not explicitly mentioned; challenges may include processing vast amounts of social media data and extracting critical information accurately |
| [57] | Flash Floods | FF-BERT: a multi-label text classification model | BERT (Bidirectional Encoder Representations from Transformers) | Enhances existing databases by classifying and categorizing information about flash flood events from web data | The primary limitation is relatively low prediction performance for minority labels, despite improvements over the baseline model |
| [29] | Various (e.g., Shootings, Hurricanes, Floods) | Transformer-based multi-task learning (MTL) | BERT, DistilBERT, ALBERT, ELECTRA | Superior performance in classifying and prioritizing disaster-related tweets using a multi-task learning approach; handles large volumes of data during crises effectively | Challenges include the high variability of disaster-related data and the computational demands of processing large datasets in real time |
| [31] | General Disaster Management | BERT, BERT + NL, BERT + LSTM, BERT + CNN | BERT | Effective in classifying disaster-related tweets by using balanced datasets and preprocessing techniques | Challenges with imbalanced data and informal social media text |
| [58] | Informative Tweet Prediction for Disasters | Deep learning architecture (transformer), semantic similarity models, logistic regression, glowworm optimization | Transformers | High precision in identifying informative tweets by integrating a disaster ontology and metadata classification | Model complexity and the need for large training datasets |
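[31] reports better results with balanced datasets, and [57] notes weak performance on minority labels. Random oversampling is one basic balancing technique, named here as an illustration rather than as the procedure of either study. It duplicates randomly chosen examples of each smaller class until every class matches the largest one. The seeded `random.Random` instance makes the sketch reproducible.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Example = Tuple[str, str]  # (text, label)


def random_oversample(examples: List[Example], seed: int = 0) -> List[Example]:
    """Duplicate examples of smaller classes until all classes are equally sized."""
    rng = random.Random(seed)
    by_label: Dict[str, List[Example]] = defaultdict(list)
    for example in examples:
        by_label[example[1]].append(example)
    target = max((len(items) for items in by_label.values()), default=0)
    balanced: List[Example] = []
    for items in by_label.values():
        balanced.extend(items)  # keep every original example
        balanced.extend(rng.choice(items) for _ in range(target - len(items)))
    rng.shuffle(balanced)
    return balanced
```

Oversampling should be applied to the training split only. Duplicated tweets in a test set would inflate the reported scores. Exact duplicates can also encourage overfitting, which is one reason to prefer class-weighted losses or data augmentation when minority classes are very small.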

| Ref. | Area of Disaster | Algorithm Used | GPT/Transformer Technology | Benefit | Disadvantage |
|---|---|---|---|---|---|
| [59] | Flood Detection via Twitter Streams | Multimodal bitransformer model for text and image, pretrained Italian BERT model for text, VGGNet16 and ResNet152 for images | Not specified | Highest micro F1-score achieved with the multimodal approach (0.859 on the development set), demonstrating the effectiveness of combining textual and visual features | Performance with a single modality (text or image alone) was lower than with the multimodal approach, indicating limitations of single-modality analyses |
| [60] | Flood Detection via Twitter | Multimodal bitransformer model for text and image, pretrained Italian BERT model for text, VGGNet and ResNet for images | Not specified | High micro F1-score (0.859) achieved with the multimodal approach, showing effectiveness in flood event detection combining textual and visual information | Complexity of integrating and optimizing multimodal inputs for real-time analysis |
| [61] | General Disaster-Related Tweets on Social Media | Visual and linguistic double transformer fusion model (VLDT) | ALBERT for text, S-CBAM-VGG for visuals | Effective fusion of textual and visual data, leading to more accurate informative tweet classification | Extracted features may not generalize to new disaster types absent from the training data |
| [62] | Disaster Management | BERT | Vision Transformer (ViT) | Improved feature extraction for image processing; attention mechanism focuses on relevant features | CNNs’ limited ability to capture feature relations; computational efficiency decreases with large kernels |
| [63] | Henan Heavy Storm (2021) | LDA, BERT, VGG-16 | BERT for text classification; VGG-16 for image classification | High accuracy (0.93) in classifying natural disaster topics from social media data, enabling real-time understanding of disaster themes for informed decision making | Complexity of the multimodal analysis process; reliance on extensive data preprocessing and manual labeling for accurate model training |
| [45] | Floods | RoBERTa, VADER, LSTM, CLIP | RoBERTa (Transformer and CLIP models) | Enhanced flood event detection through social media analysis, improving disaster response | Limited by the availability of specific dataset details |

| Ref. | Area of Disaster | Algorithm Used | GPT/Transformer Technology | Benefit | Disadvantage |
|---|---|---|---|---|---|
| [64] | Wildfires, Earthquakes, Floods, Typhoons/Hurricanes, Bombings, Shootings | Fine-tuned RoBERTa-based encoder and Transformer blocks; bag of words for priority classification | RoBERTa, Transformer | Achieved top performance in tweet prioritization and surpassed median performance for information-type classification by leveraging pre-trained language models and highlighting entities and hashtags | Not specified |
| [55] | Disaster-Related Multilingual Text Classification | GNoM (graph neural network enhanced language models) | BERT, mBERT, XLM-RoBERTa | Outperforms state-of-the-art models in the disaster domain across monolingual, cross-lingual, and multilingual settings, with improved F1 scores | Not explicitly stated; integrating a GNN with transformer models may introduce computational overhead |
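[64] pairs transformer encoders with a bag-of-words model for priority classification. A bag-of-words representation counts how often each vocabulary term occurs in a text and discards word order. The minimal sketch below assumes a regex tokenizer that lowercases text and keeps hashtags as distinct tokens; the tokenizer rule and the example tweets are illustrative assumptions, not details from [64].

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and extract word tokens; a leading '#' is kept so
    that hashtags remain distinct from plain words (an assumed rule)."""
    return re.findall(r"#?\w+", text.lower())


def build_vocabulary(docs: List[str]) -> List[str]:
    """Sorted list of every distinct token in the corpus."""
    return sorted({tok for doc in docs for tok in tokenize(doc)})


def bag_of_words(doc: str, vocab: List[str]) -> List[int]:
    """Count vector for doc over vocab; out-of-vocabulary tokens are ignored."""
    counts = Counter(tokenize(doc))
    return [counts[term] for term in vocab]


if __name__ == "__main__":
    tweets = ["Flood warning #flood", "Help needed flood"]  # hypothetical tweets
    vocab = build_vocabulary(tweets)
    print(vocab)
    print(bag_of_words("flood flood #flood", vocab))
```

The resulting count vectors can be fed to any conventional classifier; keeping hashtags separate from plain words lets such a model weight `#flood` differently from `flood`.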

| Ref. | Area of Disaster | Algorithm Used | GPT/Transformer Technology | Benefit | Disadvantage |
|---|---|---|---|---|---|
| [65] | Various Disasters | BERT, DistilBERT, T5 | Transformer-based question answering techniques | Precision of 0.81, recall of 0.76, and F-score of 0.78 for BERT in providing appropriate guidelines | Limited test data covering diverse crisis scenarios, and difficulty handling massive datasets due to token limits |
| [66] | Various Disasters | Naive Bayes, Logistic Regression, Random Forest, SVM, KNN, Gradient Boosting, Decision Tree, LSTM, BiLSTM, CNN, BERT, DistilBERT | BERT and DistilBERT for tweet classification | High accuracy in multilingual tweet classification for disaster response | Not explicitly stated; potential issues include data sparsity and language-specific challenges |
| [30] | Disaster Detection on Twitter | BERT (Bidirectional Encoder Representations from Transformers) with keyword position information | BERT | Improved accuracy in disaster prediction on Twitter by incorporating keyword position information into the BERT model | Relies heavily on keyword position, which may not accurately reflect the context or importance of a tweet |
| [32] | Various Crises and Emergencies Detected via Social Media | MobileBERT for feature extraction; SSA improved with MRFO for feature selection | MobileBERT | High accuracy and efficiency in detecting and classifying crisis-related events on social media, combining transformer-based feature extraction with optimized feature selection | Not explicitly stated; challenges could include the computational demands of analyzing large-scale social media data in real time and adapting to diverse, evolving crisis scenarios |
| [67] | Various Natural Disasters | SMDKGG framework, AdaBoost classifier, STM, LOD Cloud, NELL, DBpedia, CYC, TSS, NGD, Chemical Reaction Optimization | Transformers for metadata classification | High precision (96.19%), recall (98.33%), and accuracy (97.26%) in generating knowledge graphs from disaster tweets, using a metadata-driven approach and diverse knowledge sources to enrich auxiliary knowledge | Complex integration and processing of multiple data sources and algorithms, requiring extensive computational resources and expertise in machine learning and natural language processing |
| [40] | Various (e.g., Earthquakes, Floods) | BERT, GRU, LSTM | BERT | BERT achieved the highest accuracy (96.2%) among the compared models in classifying disaster-related tweets | The more complex BERT architecture may overfit and requires careful tuning |
| [68] | Flood Forecasting | ODLFF-BDA, BERT, GRU, MLCNN, Equilibrium Optimizer (EO) | BERT for emotive contextual embedding from tweets | High accuracy and low memory usage for flood prediction using Twitter data | Not mentioned explicitly, but complexity and potential overfitting can be inferred as disadvantages |
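Assuming the F-score reported for [65] is the balanced F1 score, it is the harmonic mean of precision and recall, F1 = 2PR / (P + R), so the three reported values can be cross-checked against each other. The helper name below is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Balanced F-score: the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # convention: F1 is 0 when both inputs are 0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Precision and recall reported for BERT in [65].
    print(f"{f1_score(0.81, 0.76):.4f}")  # 0.7842, i.e. 0.78 after rounding
```

The computed value agrees with the reported F-score of 0.78. Because the harmonic mean is pulled toward the smaller of its inputs, the F-score sits slightly below the arithmetic mean of 0.785.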

| Ref. | Area of Disaster | Algorithm Used | GPT/Transformer Technology | Benefit | Disadvantage |
|---|---|---|---|---|---|
| [70] | Disaster Support via Chatbot | Dual Intent Entity Transformer (DIET) for NLU, RASA for conversation management | Not specified directly; DIET is itself a transformer-based architecture | Provides real-time disaster support and information dissemination in Portuguese, improving situational awareness and decision making | Limited by the specificity of its training data and potentially the depth of its knowledge base, requiring ongoing updates to remain effective in diverse disaster scenarios |
| [69] | Twitter Disaster Detection | BERT Variants (ELECTRA, Talking Head, TN-BERT), CNN, NN, LSTM, Bi-LSTM | Not specified | Good performance, with F-scores between 76% and 80% and AUC between 86% and 90%, demonstrating effectiveness in disaster detection from Twitter data | Performance differed only marginally among models, so further work is needed to identify the most efficient algorithm |
| [45] | Floods | RoBERTa, VADER, LSTM, CLIP | RoBERTa (transformer and CLIP models) | Enhanced flood event detection through social media analysis, improving disaster response | Limited by the availability of specific dataset details |
| [68] | Flood Forecasting | ODLFF-BDA, BERT, GRU, MLCNN, Equilibrium Optimizer (EO) | BERT for emotive contextual embedding from tweets | High accuracy and low memory usage for flood prediction using Twitter data | Not mentioned explicitly, but complexity and potential overfitting can be inferred as disadvantages |
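[69] reports AUC values between 86% and 90%. The area under the ROC curve equals the probability that a randomly chosen positive example (here, a disaster tweet) receives a higher score than a randomly chosen negative one. The sketch below computes it directly from that pairwise definition, counting ties as half; the labels and scores in the usage example are made up, and the quadratic loop favors clarity over speed.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC via the pairwise (Mann-Whitney) formulation: the fraction of
    (positive, negative) pairs in which the positive example scores higher,
    with tied scores counted as half a win."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical model scores: 1 = disaster tweet, 0 = not a disaster tweet.
    print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))
```

For real evaluations, scikit-learn's `roc_auc_score` computes the same quantity efficiently from sorted scores.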

References

- Wang, H.; Nie, D.; Tuo, X.; Zhong, Y. Research on crack monitoring at the trailing edge of landslides based on image processing. Landslides 2020, 17, 985–1007.

- Amatya, P.; Kirschbaum, D.; Stanley, T.; Tanyas, H. Landslide mapping using object-based image analysis and open source tools. Eng. Geol. 2021, 282, 106000.

- Rabby, Y.W.; Li, Y. Landslide inventory (2001–2017) of Chittagong hilly areas, Bangladesh. Data 2020, 5, 4.

- Sufi, F.K.; Alsulami, M. Knowledge Discovery of Global Landslides Using Automated Machine Learning Algorithms. IEEE Access 2021, 9, 131400–131419.

- Tamizi, A.; Young, I.R. A dataset of global tropical cyclone wind and surface wave measurements from buoy and satellite platforms. Sci. Data 2024, 11, 106.

- Sufi, F.K.; Khalil, I. Automated Disaster Monitoring From Social Media Posts Using AI-Based Location Intelligence and Sentiment Analysis. IEEE Trans. Comput. Soc. Syst. 2022.

- Sufi, F.K. AI-SocialDisaster: An AI-based software for identifying and analyzing natural disasters from social media. Softw. Impacts 2022, 13, 100319.

- Sufi, F. A decision support system for extracting artificial intelligence-driven insights from live twitter feeds on natural disasters. Decis. Anal. J. 2022, 5, 100130.

- Sufi, F. A New Social Media Analytics Method for Identifying Factors Contributing to COVID-19 Discussion Topics. Information 2023, 14, 545.

- Sufi, F. Automatic identification and explanation of root causes on COVID-19 index anomalies. MethodsX 2023, 10, 101960.

- Poulsen, S.; Sarsa, S.; Prather, J.; Leinonen, J.; Becker, B.A.; Hellas, A.; Denny, P.; Reeves, B.N. Solving Proof Block Problems Using Large Language Models. In Proceedings of the SIGCSE 2024, Portland, OR, USA, 20–23 March 2024; Volume 7.

- Orrù, G.; Piarulli, A.; Conversano, C.; Gemignani, A. Human-like problem-solving abilities in large language models using ChatGPT. Front. Artif. Intell. 2023, 6, 1199350.

- Kieser, F.; Wulff, P.; Kuhn, J.; Küchemann, S. Educational data augmentation in physics education research using ChatGPT. Phys. Rev. Phys. Educ. Res. 2023, 19, 020150.

- Gusenbauer, M.; Haddaway, N.R. Which academic search systems are suitable for systematic reviews or meta-analyses? Evaluating retrieval qualities of Google Scholar, PubMed, and 26 other resources. Res. Synth. Methods 2020, 11, 181–217.

- Halevi, G.; Moed, H.; Bar-Ilan, J. Suitability of Google Scholar as a source of scientific information and as a source of data for scientific evaluation—Review of the Literature. J. Informetr. 2017, 11, 823–834.

- Kaur, A.; Gulati, S.; Sharma, R.; Sinhababu, A.; Chakravarty, R. Visual citation navigation of open education resources using Litmaps. Libr. Hi Tech News 2022, 39, 7–11.

- Sufi, F. Generative Pre-Trained Transformer (GPT) in Research: A Systematic Review on Data Augmentation. Information 2024, 15, 99.

- Maulana, I.; Maharani, W. Disaster Tweet Classification Based on Geospatial Data Using the BERT-MLP Method. In Proceedings of the 2021 9th International Conference on Information and Communication Technology, ICoICT 2021, Yogyakarta, Indonesia, 3–5 August 2021; pp. 76–81.

- Nimmi, K.; Janet, B.; Selvan, A.K.; Sivakumaran, N. Pre-trained ensemble model for identification of emotion during COVID-19 based on emergency response support system dataset. Appl. Soft Comput. 2022, 122, 108842.

- Ma, Z.; Li, L.; Yuan, Y.; Baecher, G.B. Appraising Situational Awareness in Social Media Data for Wildfire Response. In Proceedings of the ASCE Inspire 2023: Infrastructure Innovation and Adaptation for a Sustainable and Resilient World-Selected Papers from ASCE Inspire 2023, Arlington, VA, USA, 16–18 November 2023; pp. 289–297.

- Duraisamy, P.; Natarajan, Y. Twitter Disaster Prediction Using Different Deep Learning Models. SN Comput. Sci. 2024, 5, 179.

- Duraisamy, P.; Duraisamy, M.; Periyanayaki, M.; Natarajan, Y. Predicting Disaster Tweets using Enhanced BERT Model. In Proceedings of the 7th International Conference on Intelligent Computing and Control Systems, ICICCS 2023, Madurai, India, 17–19 May 2023; pp. 1745–1749.

- Fontalis, S.; Zamichos, A.; Tsourma, M.; Drosou, A.; Tzovaras, D. A Comparative Study of Deep Learning Methods for the Detection and Classification of Natural Disasters from Social Media. In Proceedings of the 12th International Conference on Pattern Recognition Applications and Methods, Lisbon, Portugal, 22–24 February 2023; pp. 320–327.

- JayaLakshmi, G.; Madhuri, A.; Vasudevan, D.; Thati, B.; Sirisha, U.; Praveen, S.P. Effective Disaster Management Through Transformer-Based Multimodal Tweet Classification. Rev. D’intelligence Artif. 2023, 37, 1263–1272.

- Kamoji, S.; Kalla, M.; Joshi, C. Fusion of Multimodal Textual and Visual Descriptors for Analyzing Disaster Response. In Proceedings of the 2023 5th International Conference on Smart Systems and Inventive Technology, ICSSIT 2023, Tirunelveli, India, 23–25 January 2023; pp. 1614–1619.

- Kour, H.; Gupta, M.K. AI Assisted Attention Mechanism for Hybrid Neural Model to Assess Online Attitudes About COVID-19. Neural Process. Lett. 2023, 55, 2265–2304.

- Sharma, S.; Basu, S.; Kushwaha, N.K.; Kumar, A.N.; Dalela, P.K. Categorizing disaster tweets into actionable classes for disaster managers: An empirical analysis on cyclone data. In Proceedings of the 2021 International Conference on Electrical, Computer, Communications and Mechatronics Engineering, ICECCME 2021, Mauritius, Mauritius, 7–8 October 2021.

- Nguyen, T.H.; Rudra, K. Rationale Aware Contrastive Learning Based Approach to Classify and Summarize Crisis-Related Microblogs. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–21 October 2022; pp. 1552–1562.

- Wang, C.; Lillis, D.; Nulty, P. Transformer-based Multi-task Learning for Disaster Tweet Categorisation. In Proceedings of the International ISCRAM Conference, Blacksburg, VA, USA, 23 May 2021; pp. 705–718. Available online: https://www.researchgate.net/publication/355367274 (accessed on 15 February 2024).

- Wang, Z.; Zhu, T.; Mai, S. Disaster Detector on Twitter Using Bidirectional Encoder Representation from Transformers with Keyword Position Information. In Proceedings of the 2020 IEEE 2nd International Conference on Civil Aviation Safety and Information Technology, ICCASIT 2020, Weihai, China, 14–16 October 2020; pp. 474–477.

- Naaz, S.; Abedin, Z.U.; Rizvi, D.R. Sequence Classification of Tweets with Transfer Learning via BERT in the Field of Disaster Management. EAI Endorsed Trans. Scalable Inf. Syst. 2021, 8, e8.

- Dahou, A.; Mabrouk, A.; Ewees, A.A.; Gaheen, M.A.; Abd Elaziz, M. A social media event detection framework based on transformers and swarm optimization for public notification of crises and emergency management. Technol. Forecast. Soc. Change 2023, 192, 122546.

- Du, W.; Ge, C.; Yao, S.; Chen, N.; Xu, L. Applicability Analysis and Ensemble Application of BERT with TF-IDF, TextRank, MMR, and LDA for Topic Classification Based on Flood-Related VGI. ISPRS Int. J. Geo-Inf. 2023, 12, 240.

- Chen, Y.; Umana, A.; Yang, C.; Ji, W. Condition Sensing for Electricity Infrastructure in Disasters by Mining Public Topics from Social Media. In Proceedings of the International ISCRAM Conference, Blacksburg, VA, USA, 23 May 2021; pp. 598–608.

- Prasad, R.; Udeme, A.U.; Misra, S.; Bisallah, H. Identification and classification of transportation disaster tweets using improved bidirectional encoder representations from transformers. Int. J. Inf. Manag. Data Insights 2023, 3, 100154.

- Ranade, A.; Telge, S.; Mate, Y. Predicting Disasters from Tweets Using GloVe Embeddings and BERT Layer Classification. In International Advanced Computing Conference; Communications in Computer and Information Science; Springer: Cham, Switzerland, 2022; pp. 492–503.

- Dharma, L.S.A.; Winarko, E. Classifying Natural Disaster Tweet using a Convolutional Neural Network and BERT Embedding. In Proceedings of the 2022 2nd International Conference on Information Technology and Education, ICIT and E 2022, Malang, Indonesia, 22 January 2022; pp. 23–30.

- Alcántara, T.; García-Vázquez, O.; Calvo, H.; Torres-León, J.A. Disaster Tweets: Analysis from the Metaphor Perspective and Classification Using LLM’s. In Mexican International Conference on Artificial Intelligence; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2024; pp. 106–117.

- Boros, E.; Lejeune, G.; Coustaty, M.; Doucet, A. Adapting Transformers for Detecting Emergency Events on Social Media. In Proceedings of the 14th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management, IC3K-Proceedings, Valletta, Malta, 24–26 October 2022; pp. 300–306.

- Ullah, I.; Jamil, A.; Hassan, I.U.; Kim, B.S. Unveiling the Power of Deep Learning: A Comparative Study of LSTM, BERT, and GRU for Disaster Tweet Classification. IEIE Trans. Smart Process. Comput. 2023, 12, 526–534.

- Li, S.; Sun, X. Application of public emotion feature extraction algorithm based on social media communication in public opinion analysis of natural disasters. PeerJ Comput. Sci. 2023, 9, e1417.

- Bansal, A.; Jain, R.; Bedi, J. Detecting COVID-19 Vaccine Stance and Symptom Reporting from Tweets using Contextual Embeddings. In Proceedings of the CEUR Workshop, Kolkata, India, 9–13 December 2022; pp. 361–368. Available online: http://ceur-ws.org (accessed on 15 February 2024).

- Uthirapathy, S.E.; Sandanam, D. Topic Modelling and Opinion Analysis on Climate Change Twitter Data Using LDA and BERT Model. Procedia Comput. Sci. 2022, 218, 908–917.

- Lydiri, M.; El Mourabit, Y.; El Habouz, Y.; Fakir, M. A performant deep learning model for sentiment analysis of climate change. Soc. Netw. Anal. Min. 2023, 13, 8.

- Bryan-Smith, L.; Godsall, J.; George, F.; Egode, K.; Dethlefs, N.; Parsons, D. Real-time social media sentiment analysis for rapid impact assessment of floods. Comput. Geosci. 2023, 178, 105405.

- Vitiugin, F.; Castillo, C. Cross-Lingual Query-Based Summarization of Crisis-Related Social Media: An Abstractive Approach Using Transformers. In Proceedings of the 33rd ACM Conference on Hypertext and Social Media, Barcelona, Spain, 28 June–1 July 2022; pp. 21–31.

- Pereira, J.; Fidalgo, R.; Nogueira, R. Crisis Event Social Media Summarization with GPT-3 and Neural Reranking. In Proceedings of the International ISCRAM Conference, Omaha, NE, USA, 28–31 May 2023; pp. 371–384. Available online: https://www.researchgate.net/publication/371038649 (accessed on 15 February 2024).

- Sakhapara, A.; Pawade, D.; Dodhia, B.; Jain, J.; Bhosale, O.; Chakrawar, O. Summarization of Tweets Related to Disaster. In Proceedings of the International Conference on Recent Trends in Computing: ICRTC 2021; Lecture Notes in Networks and Systems; Springer: Singapore, 2022; pp. 651–665.

- Ma, K.; Tan, Y.J.; Xie, Z.; Qiu, Q.; Chen, S. Chinese toponym recognition with variant neural structures from social media messages based on BERT methods. J. Geogr. Syst. 2022, 24, 143–169.

- Hu, Y.; Mai, G.; Cundy, C.; Choi, K.; Lao, N.; Liu, W.; Lakhanpal, G.; Zhou, R.Z.; Joseph, K. Geo-knowledge-guided GPT models improve the extraction of location descriptions from disaster-related social media messages. Int. J. Geogr. Inf. Sci. 2023, 37, 2289–2318.

- Chandrakala, S.; Raj, S.A.A. Identifying the label of crisis related tweets using deep neural networks for aiding emergency planning. In Proceedings of the 2022 International Conference on Innovative Computing, Intelligent Communication and Smart Electrical Systems, ICSES 2022, Chennai, India, 15–16 July 2022.

- Yamamoto, F.; Kumamoto, T.; Suzuki, Y.; Nadamoto, A. Methods of Calculating Usefulness Ratings of Behavioral Facilitation Tweets in Disaster Situations. In Proceedings of the 11th International Symposium on Information and Communication Technology, Hanoi, Vietnam, 1–3 December 2022; pp. 88–95.

- Zhou, B.; Zou, L.; Mostafavi, A.; Lin, B.; Yang, M.; Gharaibeh, N.; Cai, H.; Abedin, J.; Mandal, D. VictimFinder: Harvesting rescue requests in disaster response from social media with BERT. Comput. Environ. Urban Syst. 2022, 95, 101824.

- Kamoji, S.; Kalla, M. Effective Flood prediction model based on Twitter Text and Image analysis using BMLP and SDAE-HHNN. Eng. Appl. Artif. Intell. 2023, 123, 106365.

- Ghosh, S.; Maji, S.; Desarkar, M.S. GNoM: Graph Neural Network Enhanced Language Models for Disaster Related Multilingual Text Classification. In Proceedings of the 14th ACM Web Science Conference 2022, Barcelona, Spain, 26–29 June 2022; pp. 55–65.

- Varshney, A.; Kapoor, Y.; Chawla, V.; Gaur, V. A Novel Framework for Assessing the Criticality of Retrieved Information. Int. J. Comput. Digit. Syst. 2022, 11, 1229–1244.

- Wilkho, R.S.; Chang, S.; Gharaibeh, N.G. FF-BERT: A BERT-based ensemble for automated classification of web-based text on flash flood events. Adv. Eng. Inform. 2024, 59, 102293.

- Arulmozhivarman, M.; Deepak, G. TPredDis: Most Informative Tweet Prediction for Disasters Using Semantic Intelligence and Learning Hybridizations. In International Conference on Robotics, Control, Automation and Artificial Intelligence; Lecture Notes in Electrical Engineering; Springer: Singapore, 2023; pp. 993–1002.

- Alam, F.; Hassan, Z.; Ahmad, K.; Gul, A.; Reiglar, M.; Conci, N.; Al-Fuqaha, A. Flood Detection via Twitter Streams using Textual and Visual Features. arXiv 2020, arXiv:2011.14944.

- Wahid, J.A.; Shi, L.; Gao, Y.; Yang, B.; Wei, L.; Tao, Y.; Hussain, S.; Ayoub, M.; Yagoub, I. Topic2Labels: A framework to annotate and classify the social media data through LDA topics and deep learning models for crisis response. Expert Syst. Appl. 2022, 195, 116562.

- Zhou, J.; Wang, X.; Liu, N.; Liu, X.; Lv, J.; Li, X.; Zhang, H.; Cao, R. Visual and Linguistic Double Transformer Fusion Model for Multimodal Tweet Classification. In Proceedings of the 2023 International Joint Conference on Neural Networks, Gold Coast, Australia, 18–23 June 2023.

- Koshy, R.; Elango, S. Multimodal tweet classification in disaster response systems using transformer-based bidirectional attention model. Neural Comput. Appl. 2023, 35, 1607–1627.

- Zhang, M.; Huang, Q.; Liu, H. A Multimodal Data Analysis Approach to Social Media during Natural Disasters. Sustainability 2022, 14, 5536.

- Boros, E.; Nguyen, N.K.; Lejeune, G.; Coustaty, M.; Doucet, A. Transformer-based Methods with #Entities for Detecting Emergency Events on Social Media. In Proceedings of the 30th Text REtrieval Conference, TREC 2021-Proceedings, Online, 15–19 November 2021; Available online: http://trec.nist.gov (accessed on 15 February 2024).

- Karam, E.; Hussein, W.; Gharib, T.F. Detecting needs of people in a crisis using Transformer-based question answering techniques. In Proceedings of the 2021 IEEE 10th International Conference on Intelligent Computing and Information Systems, ICICIS 2021, Cairo, Egypt, 5–7 December 2021; pp. 348–354.

- Koranga, T.; Hazari, R.; Das, P. Disaster Tweets Classification for Multilingual Tweets Using Machine Learning Techniques. In International Conference on Computation Intelligence and Network Systems; Communications in Computer and Information Science; Springer: Cham, Switzerland, 2024; pp. 117–129.

- Bhaveeasheshwar, E.; Deepak, G. SMDKGG: A Socially Aware Metadata Driven Knowledge Graph Generation for Disaster Tweets. In International Conference on Applied Machine Learning and Data Analytics; Communications in Computer and Information Science; Springer: Cham, Switzerland, 2023; pp. 64–77.

- Indra, G.; Duraipandian, N. Modeling of Optimal Deep Learning Based Flood Forecasting Model Using Twitter Data. Intell. Autom. Soft Comput. 2023, 35, 1455–1470.

- Balakrishnan, V.; Shi, Z.; Law, C.L.; Lim, R.; Teh, L.L.; Fan, Y.; Periasamy, J. A Comprehensive Analysis of Transformer-Deep Neural Network Models in Twitter Disaster Detection. Mathematics 2022, 10, 4664.

- Boné, J.; Ferreira, J.C.; Ribeiro, R.; Cadete, G. Disbot: A Portuguese disaster support dynamic knowledge chatbot. Appl. Sci. 2020, 10, 9082.

- Ranaldi, L.; Pucci, G. When Large Language Models contradict humans? Large Language Models’ Sycophantic Behaviour. arXiv 2023, arXiv:2311.09410.

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of Hallucination in Natural Language Generation. ACM Comput. Surv. 2023, 55, 1–38.

- Sahoo, S.R.; Gupta, B.B. Real-time detection of fake account in twitter using machine-learning approach. In Advances in Computational Intelligence and Communication Technology: Proceedings of CICT 2019; Advances in Intelligent Systems and Computing; Springer: Singapore, 2021; pp. 149–159.

- Murayama, T.; Wakamiya, S.; Aramaki, E.; Kobayashi, R. Modeling the spread of fake news on Twitter. PLoS ONE 2021, 16, e0250419.

- Gustafson, D.L.; Woodworth, C.F. Methodological and ethical issues in research using social media: A metamethod of Human Papillomavirus vaccine studies. BMC Med. Res. Methodol. 2014, 14, 127.

| Algorithm Category | Abbreviation | Short Description |
|---|---|---|
| GPT-based | GPT | Generative Pre-trained Transformer—A model architecture used primarily for natural language understanding and generation. |
| BERT | BERT | Bidirectional Encoder Representations from Transformers—A model architecture for natural language understanding tasks. |
| BERT-based | ALBERT | A Lite BERT—An improved version of BERT with reduced parameter size and faster training. |
| | RoBERTa | Robustly optimized BERT approach—A variant of BERT with modifications to improve performance and training efficiency. |
| | DistilBERT | Distilled BERT—A smaller, faster version of BERT designed for resource-constrained environments. |
| | MobileBERT | A BERT variant optimized for mobile devices with reduced parameters and computational requirements. |
| Deep Learning | CNN | Convolutional Neural Network—A deep learning architecture commonly used for image recognition and natural language processing tasks. |
| | AVEDL | Average Voting Ensemble Deep Learning Model—A model ensemble technique combining multiple deep learning architectures for improved performance. |
| | Transformer | Transformer—A deep learning architecture known for its effectiveness in sequence-to-sequence tasks such as language translation and text summarization. |
| | T5 | T5, or Text-to-Text Transfer Transformer, is a versatile machine learning model designed to convert all natural language processing tasks into a unified text-to-text framework. |
| Other | SVM | Support Vector Machine—A supervised learning model used for classification and regression analysis. |
| | TF-IDF | Term Frequency-Inverse Document Frequency—A numerical statistic used to evaluate the importance of a word to a document within a corpus. |
| | TextRank | TextRank—A graph-based ranking algorithm used for keyword extraction and extractive text summarization. |
| | LDA | Latent Dirichlet Allocation—A generative statistical model used for topic modeling in text corpora. |
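TF-IDF, listed in the table above, weights a term by its frequency in a document and down-weights terms that appear in many documents of the corpus. Many variants exist; this minimal sketch assumes length-normalized term frequency and the unsmoothed inverse document frequency idf(t) = ln(N / df(t)), where N is the number of documents and df(t) is the number of documents containing t. The function name and example tokens are illustrative.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_idf(docs: List[List[str]]) -> List[Dict[str, float]]:
    """TF-IDF weights for pre-tokenized documents.

    TF = count of the term in the document / document length;
    IDF = ln(N / df), where df counts documents containing the term.
    """
    n_docs = len(docs)
    df: Counter = Counter()
    for doc in docs:
        df.update(set(doc))  # each document counts at most once per term
    weights = []
    for doc in docs:
        counts = Counter(doc)
        length = len(doc)
        weights.append({
            term: (count / length) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


if __name__ == "__main__":
    corpus = [["flood", "warning", "flood"], ["flood", "rescue"]]  # hypothetical
    for row in tf_idf(corpus):
        print(row)
```

A term that occurs in every document, such as "flood" here, receives a weight of zero under this variant. Library implementations differ: scikit-learn's `TfidfVectorizer`, for example, smooths the IDF by default, so its weights will not match this sketch exactly.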

| Database Name | Advanced Query | Results Returned |
|---|---|---|
| Scopus | (TITLE-ABS-KEY ("Transformer") OR TITLE-ABS-KEY ("GPT") OR TITLE-ABS-KEY ("LLM")) AND (TITLE-ABS-KEY ("Disaster") OR TITLE-ABS-KEY ("Landslide") OR TITLE-ABS-KEY ("Flood") OR TITLE-ABS-KEY ("Earthquake") OR TITLE-ABS-KEY ("Cyclone") OR TITLE-ABS-KEY ("Typhoon")) AND (TITLE-ABS-KEY ("Twitter") OR TITLE-ABS-KEY ("Social media")) AND (LIMIT-TO (LANGUAGE, "English")) | 79 |
| Web of Science | (ALL = (Transformer) OR ALL = (GPT) OR ALL = (LLM)) AND (ALL = (Disaster) OR ALL = (Landslide) OR ALL = (Flood) OR ALL = (Earthquake) OR ALL = (Cyclone) OR ALL = (Typhoon)) AND (ALL = (Twitter) OR ALL = (Social media)) | 33 |
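Both database queries share one structure: three groups of OR-ed terms (model family, disaster type, and platform) joined with AND. The hypothetical helpers below regenerate the two query strings in the table above from those term groups, which makes it easier to add or drop a term consistently across databases. The function names are illustrative; the code only builds query text and does not call any Scopus or Web of Science API.

```python
from typing import List

# Term groups taken from the search queries in the table above.
TERM_GROUPS: List[List[str]] = [
    ["Transformer", "GPT", "LLM"],
    ["Disaster", "Landslide", "Flood", "Earthquake", "Cyclone", "Typhoon"],
    ["Twitter", "Social media"],
]


def scopus_query(groups: List[List[str]], language: str = "English") -> str:
    """OR the terms inside each group, AND the groups, then add the language limit."""
    blocks = []
    for terms in groups:
        ors = " OR ".join(f'TITLE-ABS-KEY ("{t}")' for t in terms)
        blocks.append(f"({ors})")
    return " AND ".join(blocks) + f' AND (LIMIT-TO (LANGUAGE, "{language}"))'


def wos_query(groups: List[List[str]]) -> str:
    """Same Boolean structure using the Web of Science ALL field tag."""
    blocks = []
    for terms in groups:
        ors = " OR ".join(f"ALL = ({t})" for t in terms)
        blocks.append(f"({ors})")
    return " AND ".join(blocks)


if __name__ == "__main__":
    print(scopus_query(TERM_GROUPS))
    print(wos_query(TERM_GROUPS))
```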

| Category | Criteria |
|---|---|
| Inclusion | |
| Exclusion | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


MDPI and ACS Style: Sufi, F. A Sustainable Way Forward: Systematic Review of Transformer Technology in Social-Media-Based Disaster Analytics. Sustainability 2024, 16, 2742. https://doi.org/10.3390/su16072742

AMA Style: Sufi F. A Sustainable Way Forward: Systematic Review of Transformer Technology in Social-Media-Based Disaster Analytics. Sustainability. 2024; 16(7):2742. https://doi.org/10.3390/su16072742

Chicago/Turabian Style: Sufi, Fahim. 2024. "A Sustainable Way Forward: Systematic Review of Transformer Technology in Social-Media-Based Disaster Analytics" Sustainability 16, no. 7: 2742. https://doi.org/10.3390/su16072742

APA Style: Sufi, F. (2024). A Sustainable Way Forward: Systematic Review of Transformer Technology in Social-Media-Based Disaster Analytics. Sustainability, 16(7), 2742. https://doi.org/10.3390/su16072742
