Effectiveness of Artificial Intelligence Practices in the Teaching of Social Sciences: A Multi-Complementary Research Approach on Pre-School Education

Abstract

1. Introduction

1.1. Artificial Intelligence in Social Sciences

1.2. Artificial Intelligence in Pre-School Education

1.3. Purpose and Significance of the Study

- What is the overall effect size (g) of AI applications in preschool education, as derived from meta-analysis findings?

- How do participants perceive AI applications in preschool education, based on meta-thematic analysis?

- Is there a statistically significant difference between the pre-test and post-test scores of students in the experimental group?

- How do observer perspectives complement the statistical findings in AI-assisted preschool education?

- Do the meta-analysis findings and the experimental results reinforce each other?

2. Method

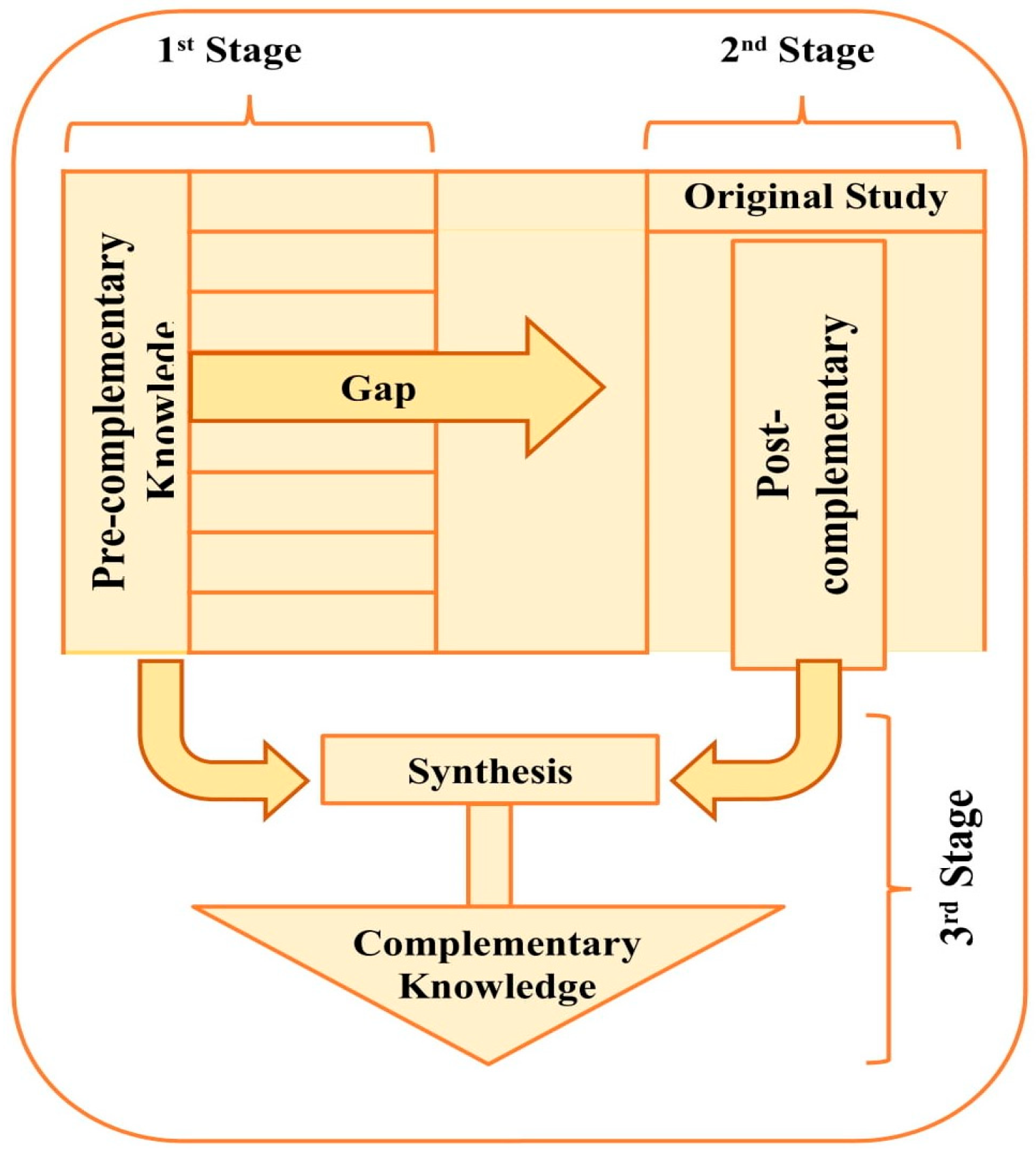

2.1. What Is the Multi-Complementary Approach?

2.2. Pre-Complementary Knowledge Phase

2.2.1. Literature Review and Inclusion Criteria

- “yapay zeka” (artificial intelligence)

- “sosyal bilimler ve yapay zeka” (social sciences and artificial intelligence)

- “artificial intelligence”

- “social sciences and artificial intelligence”

2.2.2. Meta-Analysis Inclusion Criteria

- Conducted between 2005 and 2025;

- Implemented AI applications in the experimental group;

- Contained pre-test and post-test data on AI applications;

- Focused on the impact of AI applications on students’ academic achievement;

- Included the descriptive statistics required for effect size calculations within the multi-complementary approach (McA) framework, such as sample size (n), arithmetic mean (X̄), and standard deviation (SD).

- Examined the impact of AI applications on academic success;

- Used qualitative research methods and included participants’ perspectives;

- Were conducted between 2005 and 2025 and were retrieved from the same databases used in the meta-analysis.

- Lack of empirical data (50%);

- Absence of pre-test/post-test designs (30%);

- Studies not specific to preschool education (15%);

- Insufficient statistical data for meta-analysis (5%).
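The meta-analysis criteria require n, X̄ and SD because a standardized mean difference can be computed from exactly those statistics. The following is a minimal Python sketch of that calculation. It assumes two independent groups (for example, experimental vs. control) and uses Hedges' small-sample correction J = 1 − 3/(4·df − 1) together with the usual large-sample variance approximation. The function name `hedges_g` is hypothetical, and this section does not show the exact effect-size formula the authors used.

```python
from __future__ import annotations

import math


def hedges_g(m1: float, s1: float, n1: int,
             m2: float, s2: float, n2: int) -> tuple[float, float]:
    """Hedges' g and its sampling variance for two independent groups.

    m, s and n are each group's mean, standard deviation and sample size.
    """
    df = n1 + n2 - 2
    # Pooled standard deviation across both groups
    sp = math.sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df)
    d = (m1 - m2) / sp                        # Cohen's d
    j = 1 - 3 / (4 * df - 1)                  # small-sample correction factor
    var_d = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    return j * d, j ** 2 * var_d              # g and Var(g)


if __name__ == "__main__":
    # Illustrative numbers only, not data from the included studies
    g, var_g = hedges_g(12.0, 2.0, 10, 10.0, 2.0, 10)
    print(f"g = {g:.3f}, variance = {var_g:.4f}")
```

Each study's g and Var(g) are the inputs that the pooling and heterogeneity steps work with.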

2.2.3. Effect Size and Model Selection

- If −0.15 ≤ g < 0.15, the effect size is considered negligible;

- If 0.15 ≤ g < 0.40, it is small;

- If 0.40 ≤ g < 0.75, it is moderate;

- If 0.75 ≤ g < 1.10, it is large;

- If 1.10 ≤ g < 1.45, it is very large;

- If g ≥ 1.45, it is considered excellent [84].
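The bands above translate directly into a lookup. The following is a minimal Python sketch. The function name is hypothetical. The scale [84] starts at −0.15, so this sketch flags values below that as outside the scale instead of assigning them a label.

```python
def classify_effect_size(g: float) -> str:
    """Label a Hedges' g value using the bands listed above."""
    if g < -0.15:
        # The scale does not define a band below -0.15
        return "outside scale (g < -0.15)"
    if g < 0.15:
        return "negligible"
    if g < 0.40:
        return "small"
    if g < 0.75:
        return "moderate"
    if g < 1.10:
        return "large"
    if g < 1.45:
        return "very large"
    return "excellent"


if __name__ == "__main__":
    for label, g in [("FEM", 0.55), ("REM", 0.74), ("Medium sample", 1.82)]:
        print(label, g, classify_effect_size(g))
```

The findings report pooled estimates of g = 0.55 (FEM) and g = 0.74 (REM). Both fall in the moderate band. The medium-sample subgroup (g = 1.82) falls in the excellent band.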

2.2.4. Heterogeneity Test

- The I² value ranges from 0% to 100%;

- 0% indicates no heterogeneity;

- 75% or higher indicates high heterogeneity [88].
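The thresholds above interpret I², which is derived from Cochran's Q. The following Python sketch shows how Q, I² and the fixed- and random-effects pooled estimates relate to one another. For the between-study variance τ², it uses the DerSimonian-Laird estimator. That choice is an assumption, because this section does not state which estimator or software was used. The function names are hypothetical.

```python
import math
from typing import Sequence


def i_squared(q: float, df: int) -> float:
    """I² in percent: share of total variation attributed to heterogeneity."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0


def pool(effects: Sequence[float], variances: Sequence[float]) -> dict:
    """Fixed- and random-effects pooling with Cochran's Q and I².

    Random-effects weights use the DerSimonian-Laird tau² estimator
    (an assumption; other estimators give somewhat different values).
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    w = [1.0 / v for v in variances]                  # inverse-variance weights
    sw = sum(w)
    fixed = sum(wi * gi for wi, gi in zip(w, effects)) / sw
    q = sum(wi * (gi - fixed) ** 2 for wi, gi in zip(w, effects))
    df = len(effects) - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)                     # between-study variance
    w_re = [1.0 / (v + tau2) for v in variances]      # random-effects weights
    sw_re = sum(w_re)
    random_mean = sum(wi * gi for wi, gi in zip(w_re, effects)) / sw_re
    return {
        "fixed": fixed, "fixed_se": math.sqrt(1.0 / sw),
        "random": random_mean, "random_se": math.sqrt(1.0 / sw_re),
        "q": q, "df": df, "i2": i_squared(q, df), "tau2": tau2,
    }
```

The pooled-results table reports Q = 217.75 across 13 studies, so df = 12. With those values, `i_squared(217.75, 12)` gives about 94.49%, which agrees with the reported I² of 94.48 once the rounding of Q is taken into account.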

2.2.5. Coding

- Study code, title, author(s), year of publication, academic term, course, educational level, and sample details;

- Statistical data related to these variables.

- “M” represents the article;

- “11” represents the study number;

- “s.5” refers to the page number of the quotation.
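The coding scheme above can be checked automatically when quotations are collated. The following is a minimal Python sketch with a hypothetical regular expression and helper. It assumes that codes follow the pattern of those quoted in Section 3.1 (e.g., “M5-p.17053”). It accepts both the English page marker “p.” and the Turkish “s.” (sayfa, page), with an optional hyphen.

```python
import re
from typing import NamedTuple

# Hypothetical pattern: "M" + study number, optional "-", then "p." or "s." + page
CODE_PATTERN = re.compile(r"^M(\d+)-?(?:p|s)\.(\d+)$")


class QuoteCode(NamedTuple):
    study: int  # study number within the meta-thematic set
    page: int   # page of the quotation in the source study


def parse_quote_code(code: str) -> QuoteCode:
    """Split a quotation code into study number and page number."""
    match = CODE_PATTERN.match(code.strip())
    if match is None:
        raise ValueError(f"unrecognised quotation code: {code!r}")
    return QuoteCode(study=int(match.group(1)), page=int(match.group(2)))
```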

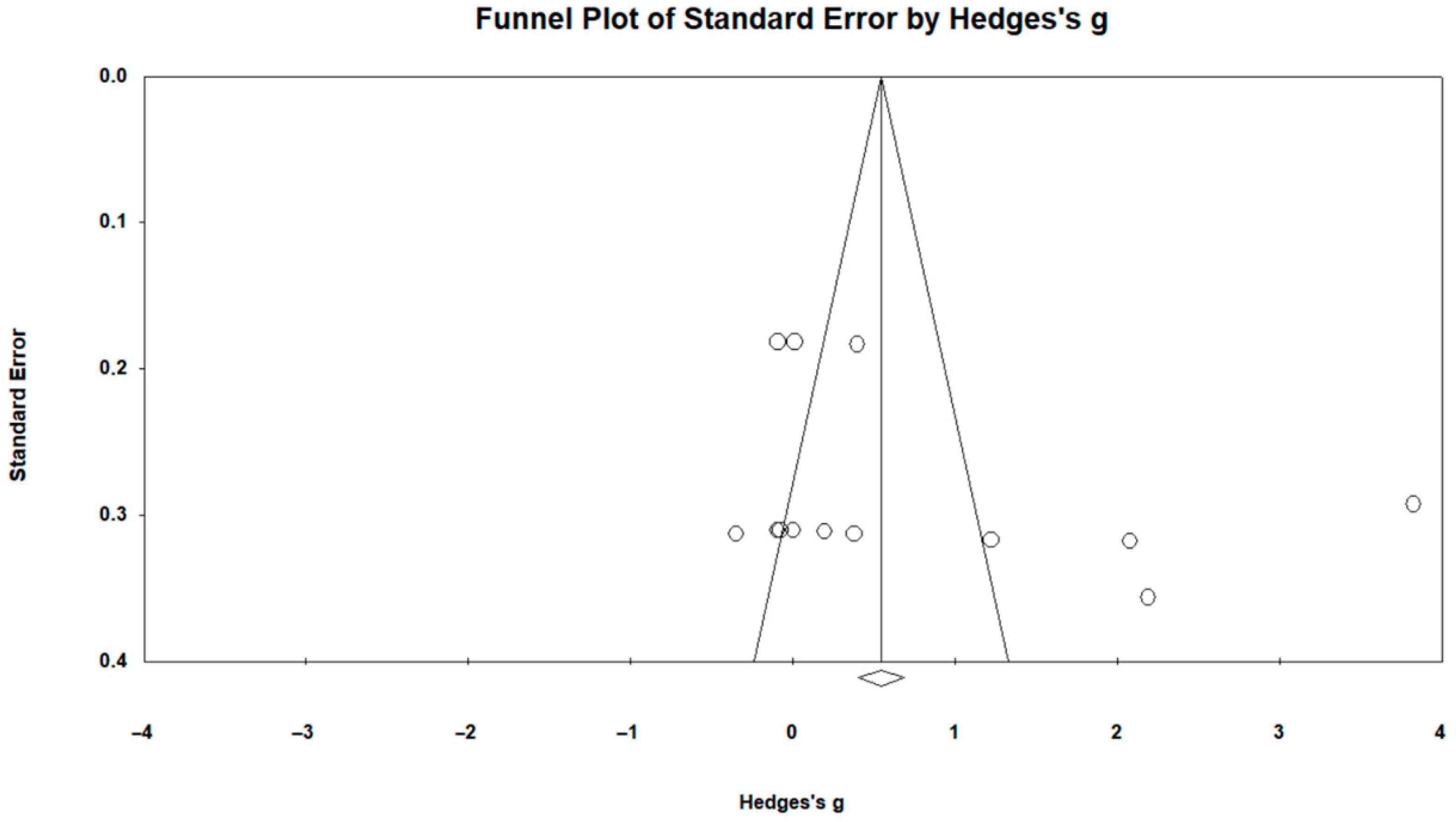

2.2.6. Publication Bias and Reliability

2.3. Post-Complementary Knowledge Phase

2.4. Design of the Experimental Process

2.5. Formation of the Experimental Group and Participant Selection

- Enrollment in an AI-integrated early education program.

- Parental consent for participation.

- No prior exposure to AI-based educational tools.

2.6. Data Collection Tool: Student Evaluation Form

2.7. Process Duration

- Week 1: Healthy Eating;

- Week 2: Learning Colors—Pink;

- Week 3: Natural Disasters;

- Week 4: Dental Health.

- Informed consent was obtained from parents and guardians;

- Children’s data privacy was safeguarded by anonymizing collected data;

- AI applications used in the study did not store sensitive personal information;

- Teachers and researchers actively supervised AI-assisted learning sessions to prevent over-reliance on AI for educational activities.

2.8. Thematic Analysis Process

2.9. Complementary Knowledge

3. Findings

- Education level: “Others” category (g = 0.80);

- Implementation duration: 9+ weeks (g = 0.33);

- Sample size: Medium sample group (g = 1.82).

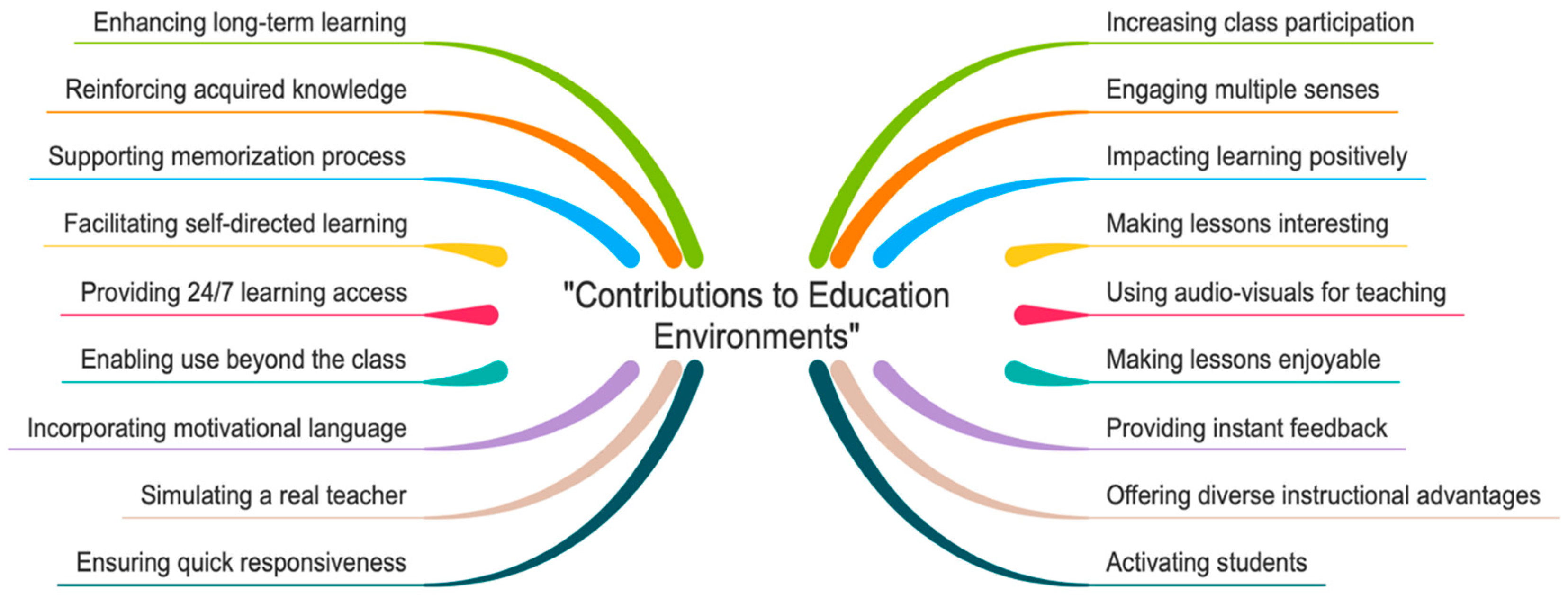

3.1. Meta-Thematic Findings on Artificial Intelligence Applications

- Contributions to Education Environments;

- Contributions to Innovation and Technological Advancement;

- Challenges and Suggested Solutions.

3.1.1. Contributions to Education Environments

- Resembling a real teacher;

- Improving readiness;

- Being reassuring;

- Using motivating expressions;

- Reinforcing learning by reteaching topics;

- Providing 24/7 learning opportunities;

- Demonstrating tolerance.

- “(M5-p.17053) In the study, it is stated: ‘When I make a mistake, it tells me not to worry, gives me hints, and helps me find the correct answer. It also summarizes topics... It provides visuals, which is something a real teacher would do.’”.

- “(M5-p.17051) It was useful for students who were unprepared for the lesson. It served as a preparatory tool, and thanks to the chatbot, even if we didn’t fully grasp the topic, we had already learned half of it by the time the teacher started explaining”.

- “(M5-p.17069) As a student, I felt good. I believe my classmates felt the same way... It says things like ‘You are amazing!’ ‘Great!’ or ‘I’m making this question easier for you!’”.

- “(M5-p.17051) It was positive... I mean, a teacher comes and teaches a topic, then another one comes and summarizes it”.

3.1.2. Contributions to Innovation and Technological Advancement

- Usage across different disciplines;

- Benefits for educational services;

- Contribution to diagnosis and treatment;

- Saving time and increasing efficiency;

- Helping users stay up to date;

- Performing automated tasks.

- “(M1-p.180) AI and robotics technology can be used in many different disciplines. In the future, it will be an essential field that professionals in all industries need to learn… I cannot provide a very technical definition due to my lack of knowledge”.

- “(M2-p.33) AI is particularly active in the diagnosis and treatment process, especially in the diagnosis phase”.

- “(M4-p.10) AI can retrieve more relevant information faster, helping you stay up to date and potentially learn new skills more quickly”.

- “(M4-p.12) In the future, repetitive, time-consuming, and automatable tasks will be handled by AI”.

- “(M3-p.77) There will be a significant gain in speed and time. It will accelerate processes greatly. Right now, we think our current pace doesn’t harm us. We still wait two weeks for molecular tests. But in 20 years, those two weeks could mean a lot. We need to be even faster”.

3.1.3. Challenges in AI Applications and Suggested Solutions

- In the study (M1-p.183), it is stated: “Right now, it expresses fear and anxiety in me. Since I do not fully grasp the situation, and I cannot predict what it might do to people in the future, it seems frightening to me due to the uncertainty”.

- In the study (M1-p.192), another statement highlights job loss concerns: “As a banker, I believe it will negatively impact my profession. Since we mainly deal with statistical calculations and more technical matters, I think the banking profession will cease to exist in the near future, after 2030”.

- Another concern (M1-p.199) is about control and accessibility: “What worries me is that it will be in the hands of certain individuals, unable to reach the public, and unable to serve the general population. A majority of people may remain in hunger and poverty, and they may survive only if ‘certain individuals’ provide help. There is such a danger”.

- Ethical concerns are also raised in (M3-p.59): “I honestly believe it will create ethical problems. After all, what will happen in terms of ethics? Robots have no legal responsibility…”

- Another concern regarding cost and inefficiency is found in (M3-p.75): “Let’s say an aspect of AI is developed, and it looks great. You invest in it, make serious financial commitments, bring in people to set it up, pay those people, buy the machines. But in the end, you get far less performance than what was promised. That is waste”.

- In (M1-p.188), a participant suggests AI should be used as an assistant rather than a replacement: “I would prefer it to be an assistant. I think it would be more useful that way. I would prefer it as an assistant to make my daily tasks easier”.

- In (M3-p.72), financial feasibility is emphasized: “Financial viability is a very important factor. The initial costs of setting up and integrating new systems can be significant…”

3.2. Comparison of Pre-Test and Post-Test Results After the Experimental Process

3.3. Thematic Findings from Observers’ Opinions After the Experimental Process

3.4. Findings Related to the Holistic Information Stage

4. Discussion and Conclusions

4.1. Results of the Pre-Complementary Knowledge Phase

4.2. Results of the Post-Complementary Knowledge Phase

4.3. Results in the Complementary Knowledge Phase

4.4. Limitations

4.5. Suggestions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A1. The Kappa Agreement Values

Meta-Thematic Analysis Part

Contributions to Education Environments (Kappa: 0.717, p: 0.000)

| K1 | K2: + | K2: − | Σ |
|---|---|---|---|
| + | 18 | 2 | 20 |
| − | 3 | 13 | 16 |
| Σ | 21 | 15 | 36 |

Contributions to Innovation and Technological Advancement (Kappa: 0.901, p: 0.000)

| K1 | K2: + | K2: − | Σ |
|---|---|---|---|
| + | 22 | 1 | 23 |
| − | 1 | 17 | 18 |
| Σ | 23 | 18 | 41 |

Problems Encountered and Suggestions for Solution (Kappa: 0.839, p: 0.000)

| K1 | K2: + | K2: − | Σ |
|---|---|---|---|
| + | 31 | 2 | 33 |
| − | 2 | 18 | 20 |
| Σ | 33 | 20 | 53 |

Experimental-Qualitative Part

Contribution to Social-Affective Dimension (Kappa: 0.917, p: 0.000)

| K1 | K2: + | K2: − | Σ |
|---|---|---|---|
| + | 16 | 0 | 16 |
| − | 1 | 9 | 10 |
| Σ | 17 | 9 | 26 |

Problems Encountered and Suggestions for Solution (Kappa: 0.816, p: 0.000)

| K1 | K2: + | K2: − | Σ |
|---|---|---|---|
| + | 16 | 1 | 17 |
| − | 1 | 7 | 8 |
| Σ | 17 | 8 | 25 |
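Each kappa value can be recomputed from its 2×2 agreement table using Cohen's formula κ = (p_o − p_e)/(1 − p_e). Here p_o is the observed agreement and p_e is the chance agreement derived from the marginal totals. The following is a minimal Python sketch. The function name is hypothetical, and the cell order (rows = coder K1, columns = coder K2) follows the tables.

```python
def cohens_kappa(a: int, b: int, c: int, d: int) -> float:
    """Cohen's kappa for a 2x2 agreement table.

    a = both coders "+", b = K1 "+" / K2 "-",
    c = K1 "-" / K2 "+", d = both coders "-".
    """
    n = a + b + c + d
    p_observed = (a + d) / n
    # Chance agreement from the row (K1) and column (K2) marginals
    p_expected = ((a + b) * (a + c) + (c + d) * (b + d)) / n ** 2
    return (p_observed - p_expected) / (1 - p_expected)


if __name__ == "__main__":
    tables = {
        "Contributions to Education Environments": (18, 2, 3, 13),
        "Contributions to Innovation and Technological Advancement": (22, 1, 1, 17),
        "Problems and Solutions (meta-thematic)": (31, 2, 2, 18),
        "Contribution to Social-Affective Dimension": (16, 0, 1, 9),
        "Problems and Solutions (experimental)": (16, 1, 1, 7),
    }
    for name, cells in tables.items():
        print(f"{name}: {cohens_kappa(*cells):.3f}")
```

Rounded to three decimals, these cell counts reproduce the reported values of 0.717, 0.901, 0.839, 0.917 and 0.816.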

Appendix A2. Key Findings of the Studies Included in the Meta-Thematic Analysis

| Study | Key Findings of Qualitative Studies |
|---|---|
| M1 | This study examines the perceptions of social actors toward artificial intelligence and robotics technologies. The most notable findings are as follows: |
| M2 | This study includes the perspectives of doctors, nurses, and patients regarding artificial intelligence and robotic nurses. The key findings are as follows: |
| M3 | This study examines the expectations, concerns, and impacts of artificial intelligence use in healthcare. The most notable findings are as follows: |
| M4 | This study examines the impact of artificial intelligence on managers’ skills. The most notable findings are as follows: |
| M5 | This study examines the impact of AI-powered chatbots on social studies education. The most notable findings are as follows: |

References

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach, 4th ed.; Pearson Education: Cranbury, NJ, USA, 2021. [Google Scholar]

- Howard, J. Artificial intelligence: Implications for the future of work. Am. J. Ind. Med. 2019, 62, 917–926. [Google Scholar] [PubMed]

- McCarthy, J. What Is Artificial Intelligence? Available online: https://www-formal.stanford.edu/jmc/whatisai.pdf (accessed on 11 January 2019).

- Chatterjee, S.; Bhattacharjee, K.K. Adoption of artificial intelligence in higher education: A quantitative analysis using structural equation modelling. Educ. Inf. Technol. 2020, 25, 3443–3463. [Google Scholar]

- Popenici, S.A.D.; Kerr, S. Exploring the impact of artificial intelligence on teaching and learning in higher education. Res. Pract. Technol. Enhanc. Learn. (RPTEL) 2017, 12, 1–13. [Google Scholar]

- Turgut, K. Yapay zekâ’nın yüksek öğretimde sosyal bilim öğretimine entegrasyonu. Ank. Uluslararası Sos. Bilim. Derg. (Yapay Zekâ ve Sos. Bilim. Öğretimi) 2024, 1–7. [Google Scholar]

- Chen, L.; Chen, P.; Lin, Z. Artificial intelligence in education: A review. IEEE Access 2020, 8, 75264–75278. [Google Scholar]

- Tambuskar, S. Challenges and benefits of 7 ways artificial intelligence in education sector. Rev. Artif. Intell. Educ. 2022, 3, e03. [Google Scholar]

- Zhou, L.; Pan, S.; Wang, J.; Vasilakos, A.V. Machine learning on big data: Opportunities and challenges. Neurocomputing 2017, 237, 350–361. [Google Scholar]

- Nan, J. Research of applications of artificial intelligence in preschool education. J. Phys. Conf. Ser. 2020, 1607, 012119. [Google Scholar]

- Sun, W. Design of auxiliary teaching system for preschool education specialty courses based on artificial intelligence. Math. Probl. Eng. 2022. [Google Scholar] [CrossRef]

- Rezaev, A.V.; Tregubova, N.D. Are sociologists ready for ‘artificial sociality’? current issues and future prospects for studying artificial intelligence in the social sciences. Monit. Public Opin. Econ. Soc. Change 2018, 5, 91–108. [Google Scholar]

- Jarek, K.; Mazurek, G. Marketing and artificial intelligence. Cent. Eur. Bus. Rev. 2019, 8, 46–55. [Google Scholar]

- Rezk, S.M.M. The role of artificial intelligence in graphic design. J. Art Des. Music 2023, 2, 1–12. [Google Scholar]

- Rebala, G.; Ravi, A.; Churiwala, S. An Introduction to Machine Learning; Springer: Berlin/Heidelberg, Germany, 2019. [Google Scholar]

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach; Pearson Education: Cranbury, NJ, USA, 2010. [Google Scholar]

- Agarwal, R.; Dhar, V. Big data, data science and analytics: The opportunity and challenge for IS research. Inf. Syst. Res. 2014, 25, 443–448. [Google Scholar]

- Chen, Y.; Wu, X.; Hu, A.; He, G.; Ju, G. Social prediction: A new research paradigm based on machine learning. J. Chin. Sociol. 2021, 8, 1–21. [Google Scholar]

- Liu, Z. Sociological perspectives on artificial intelligence: A typological reading. Sociol. Compass 2021, 15, e12851. [Google Scholar]

- Mercaldo, F.; Nardone, V.; Santone, A. Diabetes mellitus affected patients classification and diagnosis through machine learning techniques. Procedia Comput. Sci. 2017, 112, 2519–2528. [Google Scholar]

- Waljee, A.K.; Higgins, P.D.R. Machine learning in medicine: A primer for physicians. Am. J. Gastroenterol. 2010, 105, 1224–1226. [Google Scholar]

- Mullainathan, S.; Spiess, J. Machine learning: An applied econometric approach. J. Econ. Perspect. 2017, 31, 87–106. [Google Scholar]

- Cavalcante, R.C.; Brasileiro, R.C.; Souza, V.L.; Nobrega, J.P.; Oliveira, A.L. Computational intelligence and financial markets: A survey and future directions. Expert Syst. Appl. 2016, 55, 194–211. [Google Scholar]

- Aggarwal, M.; Murty, M.N. Deep Learning. In SpringerBriefs in Applied Sciences and Technology; Springer: Berlin/Heidelberg, Germany, 2021. [Google Scholar]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar]

- Zhang, J.; Feng, S. Machine learning modeling: A new way to do quantitative research in social sciences in the Era of AI. J. Web Eng. 2021, 20, 281–302. [Google Scholar]

- Apostolopoulos, I.D.; Groumpos, P.P. Fuzzy cognitive maps: Their role in explainable artificial intelligence. Appl. Sci. 2023, 13, 3412. [Google Scholar] [CrossRef]

- Lazer, D.; Pentland, A.; Adamic, L.; Aral, S.; Barabasi, A.L.; Brewer, D.; Van Alstyne, M. Computational Social Science. Science 2009, 323, 721–723. [Google Scholar] [PubMed]

- Veltri, G.A. Big data is not only about data: The two cultures of modelling. Big Data Soc. 2017, 4. [Google Scholar] [CrossRef]

- Castells, M. The Rise of the Network Society: The Information Age: Economy, Society, and Culture; Wiley-Blackwell Publishing: Hoboken, NJ, USA, 2010. [Google Scholar]

- Chen, M.; Liu, Q.; Huang, S.; Dang, C. Environmental cost control system of manufacturing enterprises using artificial intelligence based on value chain of circular Economy. Enterp. Inf. Syst. 2020, 16, 1856422. [Google Scholar]

- Probst, P.; Wright, M.N.; Boulesteix, A. Hyperparameters and tuning strategies for random forest. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2019, 9, e1301. [Google Scholar]

- Sun, Z.; Anbarasan, M.; Kumar, D.P. Design of online intelligent English teaching platform based on artificial intelligence techniques. Comput. Intell. 2020, 37, 1166–1180. [Google Scholar]

- Longo, L. Empowering qualitative research methods in education with artificial intelligence. In Proceedings of the World Conference on Qualitative Research, Barcelona, Spain, 11 October 2020. [Google Scholar]

- Kumar, R.; Mishra, B.K. (Eds.) Natural Language Processing in Artificial Intelligence, 1st ed.; Apple Academic Press: Palm Bay, FL, USA, 2020. [Google Scholar]

- Boumans, J.W.; Trilling, D. Taking Stock of the Toolkit: An Overview of Relevant Automated Content Analysis Approaches and Techniques for Digital Journalism Scholars; Routledge: London, UK, 2018; pp. 8–23. [Google Scholar]

- Abram, M.D.; Mancini, K.T.; Parker, R.D. Methods to integrate natural language processing into qualitative research. Int. J. Qual. Methods 2020, 19, 1609406920984608. [Google Scholar] [CrossRef]

- Mahmood, A.; Wang, J.; Yao, B.; Wang, D.; Huang, C. LLM-powered conversational voice assistants: Interaction patterns, opportunities, challenges, and design guidelines. arXiv 2023, arXiv:2309.13879. [Google Scholar]

- Paulus, T.M.; Marone, V. “In Minutes Instead of Weeks”: Discursive constructions of generative AI and qualitative data analysis. Qual. Inq. 2024, 10778004241250065. [Google Scholar] [CrossRef]

- Rietz, T.; Maedche, A. Cody: An AI-based system to semi-automate coding for qualitative research. In Proceedings of the CHI ’21: CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; Article 394. Association for Computing Machinery: New York, NY, USA, 2021; pp. 1–14. [Google Scholar]

- Çelik, S. Understanding Data Science. J. Curr. Res. Soc. Sci. 2019, 9, 235–256. [Google Scholar]

- Isichei, B.C.; Leung, C.K.; Nguyen, L.T.; Morrow, L.B.; Ngo, A.T.; Pham, T.D.; Cuzzocrea, A. Sports Data Management, Mining, and Visualization; Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2022; Volume 450 LNNS, pp. 141–153. [Google Scholar]

- Owan, V.; Abang, K.B.; Idika, D.O.; Etta, E.O.; Bassey, B.A. Exploring the potential of artificial intelligence tools in educational measurement and assessment. EURASIA J. Math. Sci. Technol. Educ. 2023, 19, em2307. [Google Scholar]

- Song, P.; Wang, X. A bibliometric analysis of worldwide educational artificial intelligence research development in recent twenty years. Asia Pac. Educ. Rev. 2020, 21, 473–486. [Google Scholar]

- Baker, T.; Smith, L. Educ-AI-Tion Rebooted? Exploring the Future of Artificial Intelligence in Schools and Colleges; Nesta: London, UK, 2019. [Google Scholar]

- Chassignol, M.; Khoroshavin, A.; Klimova, A. Artificial intelligence trends in education: A narrative overview. Procedia Comput. Sci. 2018, 136, 16–24. [Google Scholar]

- Mondal, K. A synergy of artificial intelligence and education in the 21st-century classrooms. In Proceedings of the 2019 International Conference on Digitization (ICD), Sharjah, United Arab Emirates, 18–19 November 2019; pp. 68–70. [Google Scholar]

- Chan, K.S.; Zary, N. Applications and challenges of implementing artificial intelligence in medical education: An integrative review. JMIR Med. Educ. 2019, 5, e13930. [Google Scholar]

- Miao, F.; Holmes, W.; Huang, R.; Zhang, H. AI and Education: Guidance for Policymakers; UNESCO Publishing: Paris, France, 2021. [Google Scholar]

- Mintz, J.; Holmes, W.; Liu, L.; Perez-Ortiz, M. Artificial intelligence and K-12 education: Possibilities, pedagogies, and risks. Technol. Pedagog. Educ. 2023, 40, 325–333. [Google Scholar]

- Smutny, P.; Schreiberova, P. Chatbots for learning: A review of educational Chatbots for the Facebook Messenger. Comput. Educ. 2020, 151, 103862. [Google Scholar]

- Nilsson, N.J. The Quest for Artificial Intelligence: A History of Ideas and Achievements; Cambridge University Press: Cambridge, UK, 2010. [Google Scholar]

- Su, J.; Ng, D.T.K.; Chu, S.K.W. Artificial intelligence (AI) literacy in early childhood education: The challenges and opportunities. Comput. Educ. Artif. Intell. 2023, 4, 100124. [Google Scholar]

- Woo, H.; LeTendre, G.K.; Pham-Shouse, T.; Xiong, Y. The Use of social robots in classrooms: A review of field-based studies. Educ. Res. Rev. 2021, 33, 100388. [Google Scholar]

- Young, M.F.; Slota, S.; Cutter, A.B.; Jalette, G.; Mullin, G.; Lai, B.; Simeoni, Z.; Tran, M.; Yukhymenko, M. Our princess is in another castle: A review of trends in serious gaming for education. Rev. Educ. Res. 2012, 82, 61–89. [Google Scholar]

- Ding, Y. Performance analysis of public management teaching practice training based on artificial intelligence technology. J. Intell. Fuzzy Syst. 2021, 40, 3787–3800. [Google Scholar] [CrossRef]

- Ma, L. An immersive context teaching method for college English based on artificial intelligence and machine learning in virtual reality technology. Mob. Inf. Syst. 2021, 2021, 1–7. [Google Scholar]

- Lin, P.; Van Brummelen, J.; Lukin, G.; Williams, R.; Breazeal, C. Zhorai: Designing a conversational agent for children to explore machine learning concepts. Proc. AAAI Conf. Artif. Intell. 2020, 34, 13381–13388. [Google Scholar]

- Tseng, T.; Murai, Y.; Freed, N.; Gelosi, D.; Ta, T.D.; Kawahara, Y. PlushPal: Storytelling with interactive plush toys and machine learning. In Proceedings of the IDC ’21: Interaction Design and Children, Athens, Greece, 24–30 June 2021; pp. 236–245. [Google Scholar]

- Vartiainen, H.; Tedre, M.; Valtonen, T. Learning machine learning with very young children: Who is teaching whom? Int. J. Child-Comput. Interact. 2020, 25, 100182. [Google Scholar]

- Amershi, S.; Cakmak, M.; Knox, W.B.; Kulesza, T. Power to the people: The role of humans in interactive machine learning. AI Mag. 2014, 35, 105–120. [Google Scholar] [CrossRef]

- Jin, L. Investigation on potential application of artificial intelligence in preschool children’s education. J. Phys. Conf. Ser. 2019, 1288, 012072. [Google Scholar]

- Yi, H.; Liu, T.; Lan, G. The key artificial intelligence technologies in early childhood education: A review. Artif. Intell. Rev. 2024, 57, 12. [Google Scholar] [CrossRef]

- Jiang, X. Design of artificial intelligence-based multimedia resource search service system for preschool education. In Proceedings of the 2022 International Conference on Information System, Computing and Educational Technology (ICISCET), Montreal, QC, Canada, 23–25 May 2022; pp. 76–78. [Google Scholar]

- Lee, J. Coding in early childhood. Contemp. Issues Early Child. 2020, 21, 266–269. [Google Scholar]

- Dongming, L.; Wanjing, L.; Shuang, C.; Shuying, Z. Intelligent robot for early childhood education. In Proceedings of the 2020 8th International Conference on Information and Education Technology, Virtual Conference, 28–30 March 2020. [Google Scholar]

- Williams, R.; Park, H.; Breazeal, C. A Is for artificial intelligence: The impact of artificial intelligence activities on young children’s perceptions of robots. In Proceedings of the CHI ’19: CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019. [Google Scholar]

- Crescenzi-Lanna, L. Literature review of the reciprocal value of artificial and human intelligence in early childhood education. J. Res. Technol. Educ. 2022, 55, 21–33. [Google Scholar]

- Fikri, Y.; Rhalma, M. Artificial intelligence (AI) in early childhood education (ECE): Do effects and interactions matter? Int. J. Relig. 2024, 5, 7536–7545. [Google Scholar]

- Bail, C. Can generative AI improve social science? Proc. Natl. Acad. Sci. USA 2024, 121, e2314021121. [Google Scholar] [CrossRef] [PubMed]

- Grossmann, I.; Feinberg, M.; Parker, D.; Christakis, N.; Tetlock, P.; Cunningham, W. AI and the transformation of social science research. Science 2023, 380, 1108–1109. [Google Scholar] [PubMed]

- Batdı, V. Metodolojik Çoğulculukta Yeni Bir Yönelim: Çoklu Bütüncül Yaklaşım. Sos. Bilim. Derg. 2016, 50, 133–147. [Google Scholar]

- Batdı, V. Eğitimde Yeni Bir Yönelim: Mega-Çoklu Bütüncül Yaklaşım ve Beyin Temelli Öğrenme Örnek Uygulaması, 2nd ed.; IKSAD Publishing House: Ankara, Türkiye, 2018. [Google Scholar]

- Glass, G.V. Primary, Secondary, and Meta-Analysis of Research. Educ. Res. 1976, 5, 3–8. [Google Scholar]

- Borenstein, M.; Hedges, L.V.; Higgins, J.P.T.; Rothstein, H.R. Introduction to Meta-Analysis, 1st ed.; John Wiley & Sons: Hoboken, NJ, USA, 2009. [Google Scholar]

- Lipsey, M.W.; Wilson, D.B. Practical Meta-Analysis; Sage Publications, Inc.: Thousand Oaks, CA, USA, 2001. [Google Scholar]

- Batdı, V. (Ed.) Meta-Tematik Analiz. Meta-Tematik Analiz: Örnek Uygulamalar; Anı Yayıncılık: Ankara, Türkiye, 2019; pp. 10–76. [Google Scholar]

- Patton, M.Q. Nitel Araştırma ve Değerlendirme Yöntemleri; Bütün, M., Demir, S.B., Trans.; PegemA Yayıncılık: Ankara, Türkiye, 2014. [Google Scholar]

- Batdı, V. (Ed.) Introduction to Meta-Thematic Analysis; Meta-Thematic Analysis in Research Process; Anı Yayıncılık: Ankara, Türkiye, 2020; pp. 1–38. [Google Scholar]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; The PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, e1000097. [Google Scholar]

- Rosenberg, M.; Adams, D.; Gurevitch, J. MetaWin: Statistical Software for Meta-Analysis, Version 2.0; Sinauer Associates Inc.: Sunderland, MA, USA, 2000. [Google Scholar]

- Cohen, L.; Manion, L.; Morrison, K. Research Methods in Education, 6th ed.; Routledge: London, UK, 2007. [Google Scholar]

- Hedges, L. Distribution theory for Glass’s estimator of effect size and related estimators. J. Educ. Stat. 1981, 6, 107–128. [Google Scholar]

- Thalheimer, W.; Cook, S. How to Calculate Effect Sizes from Published Research Articles: A Simplified Methodology; Work-Learning Research Publication: New York, NY, USA, 2002. [Google Scholar]

- Ried, K. Interpreting and understanding meta-analysis graphs: A practical guide. Aust. Fam. Physician 2006, 35, 635–638. [Google Scholar]

- Schmidt, F.L.; Oh, I.-S.; Hayes, T.L. Fixed- versus random-effects models in meta-analysis: Model properties and an empirical comparison of differences in results. Br. J. Math. Stat. Psychol. 2009, 62, 97–128. [Google Scholar]

- Higgins, J.P.; Thompson, S.G. Quantifying heterogeneity in a meta-analysis. Stat. Med. 2002, 21, 1539–1558. [Google Scholar]

- Higgins, J.P.T.; Thompson, S.G.; Deeks, J.J.; Altman, D.G. Measuring inconsistency in meta-analyses. BMJ 2003, 327, 557–560. [Google Scholar] [CrossRef]

- Rosenthal, R. The file drawer problem and tolerance for null results. Psychol. Bull. 1979, 86, 638–641. [Google Scholar]

- Wilson, D.B. Systematic Coding. In The Handbook of Research Synthesis and Meta-Analysis; Cooper, H., Hedges, L.V., Valentine, J.C., Eds.; Russell Sage Foundation: Manhattan, NY, USA, 2009; pp. 159–176. [Google Scholar]

- Bangert-Drowns, R.L.; Rudner, L.M. Meta-Analysis in Educational Research; ERIC Clearinghouse on Tests, Measurement, and Evaluation: Washington, DC, USA, 1991. [Google Scholar]

- Miles, M.B.; Huberman, A.M. Qualitative Data Analysis: An Expanded Sourcebook; Sage Publications: Thousand Oaks, CA, USA, 1994. [Google Scholar]

- Silverman, D. Doing Qualitative Research: A Practical Handbook; Sage Publications: Thousand Oaks, CA, USA, 2005. [Google Scholar]

- Duval, S.; Tweedie, R. Trim and Fill: A simple funnel-plot based method of testing and adjusting for publication bias in meta-analysis. Biometrics 2000, 56, 455–463. [Google Scholar] [CrossRef] [PubMed]

- Sterne, J.A.; Harbord, R.M. Funnel plots in meta-analysis. Stata J. Promot. Commun. Stat. Stata 2004, 4, 127–141. [Google Scholar]

- Sterne, J.A.C.; Sutton, A.J.; Ioannidis, J.P.A.; Terrin, N.; Jones, D.R.; Lau, J.; Carpenter, J.; Rücker, G.; Harbord, R.M.; Schmid, C.H.; et al. Recommendations for examining and interpreting funnel plot asymmetry in meta-analyses of randomised controlled trials. BMJ 2011, 343, d4002. [Google Scholar]

- Ivankova, N.V.; Creswell, J.W.; Stick, S.L. Using mixed-methods sequential explanatory design: From theory to practice. Field Methods 2006, 18, 3–20. [Google Scholar]

- Bailey, K.D. Methods of Social Research, 2nd ed.; The Free Press: New York, NY, USA, 1982. [Google Scholar]

- Yıldırım, A.; Şimşek, H. Sosyal Bilimlerde Nitel Araştırma Yöntemleri; Seçkin Yayıncılık: Ankara, Türkiye, 2006. [Google Scholar]

- Merriam, S.B. Qualitative Research: A Guide to Design and Implementation; John Wiley & Sons Inc.: New York, NY, USA, 2013. [Google Scholar]

- Viera, A.J.; Garrett, J.M. Understanding interobserver agreement: The Kappa statistic. Fam. Med. 2005, 37, 360–363. [Google Scholar]

- Johnson, R.B.; Onwuegbuzie, A.J. Mixed methods research: A research paradigm whose time has come. Educ. Res. 2004, 33, 14–26. [Google Scholar]

- Tashakkori, A.; Creswell, J.W. Editorial: Exploring the nature of research questions in mixed methods research. J. Mix. Methods Res. 2007, 1, 207–211. [Google Scholar]

- Cooper, H.; Hedges, L.V.; Valentine, J.C. The Handbook of Research Synthesis and Meta-Analysis; Russell Sage Publication: Washington, DC, USA, 2009. [Google Scholar]

- Robila, M.; Robila, S. Applications of artificial intelligence methodologies to behavioral and social sciences. J. Child Fam. Stud. 2019, 29, 2954–2966. [Google Scholar]

- Liao, H.; Wang, Z.; Liu, Y. Exploring the cross-disciplinary collaboration: A scientometric analysis of social science research related to artificial intelligence and big data application. IOP Conf. Ser. Mater. Sci. Eng. 2020, 806, 012019. [Google Scholar]

- Hernández-Lugo, M.d.l.C. Artificial Intelligence as a tool for analysis in Social Sciences: Methods and applications. LatIA 2024, 2, 11. [Google Scholar] [CrossRef]

- Xu, R.; Sun, Y.; Ren, M.; Guo, S.; Pan, R.; Lin, H.; Sun, L.; Han, X. AI for social science and social science of AI: A survey. Inf. Process. Manag. 2024, 61, 103665. [Google Scholar] [CrossRef]

- Hwang, G.J.; Xie, H.; Wah, B.W.; Gašević, D. Vision, challenges, roles and research issues of artificial intelligence in education. Comput. Educ. Artif. Intell. 2020, 1, 100001. [Google Scholar] [CrossRef]

- Yetişensoy, O.; Karaduman, H. The effect of AI-powered Chatbots in social studies education. Educ. Inf. Technol. 2024, 1, 1–35. [Google Scholar] [CrossRef]

- Schiff, D. Out of the laboratory and into the classroom: The future of artificial intelligence in education. AI Soc. 2020, 36, 331–348. [Google Scholar] [CrossRef]

- Sharma, B. Research paper on artificial intelligence. Int. J. Sci. Res. Eng. Manag. 2024, 8, 1–10. [Google Scholar] [CrossRef]

- Soni, P. A Study on artificial intelligence in finance sector. Int. Res. J. Mod. Eng. Technol. Sci. 2023, 9, 223–232. [Google Scholar]

- Bhattamisra, S.; Banerjee, P.; Gupta, P.; Mayuren, J.; Patra, S.; Candasamy, M. Artificial intelligence in pharmaceutical and healthcare research. Big Data Cogn. Comput. 2023, 7, 10. [Google Scholar] [CrossRef]

- Secinaro, S.; Calandra, D.; Secinaro, A.; Muthurangu, V.; Biancone, P. The role of artificial intelligence in healthcare: A structured literature review. BMC Med. Inform. Decis. Mak. 2021, 21, 125. [Google Scholar] [CrossRef]

- Doğan, M.; Dogan, T.; Bozkurt, A. The use of artificial intelligence (AI) in online learning and distance education processes: A systematic review of empirical studies. Appl. Sci. 2023, 13, 3056. [Google Scholar] [CrossRef]

- Masturoh, U.; Irayana, I.; Adriliyana, F. Digitalization of play activities and games: Artificial intelligence in early childhood education. TEMATIK J. Pemikir. Penelit. Pendidik. Anak Usia Dini 2024, 10, 1. [Google Scholar]

- Kuchkarova, G.; Kholmatov, S.; Tishabaeva, I.; Khamdamova, O.; Husaynova, M.; Ibragimov, N. AI-integrated system design for early stage learning and erudition to develop analytical deftones. In Proceedings of the 2024 4th International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), Greater Noida, India, 14–15 May 2024; pp. 795–799. [Google Scholar]

- Bozkurt, A. ChatGPT, üretken yapay zeka ve algoritmik paradigma değişikliği. Alanyazın 2023, 4, 63–72. [Google Scholar]

- Puteri, S.; Saputri, Y.; Kurniati, Y. The impact of artificial intelligence (AI) technology on students’ social relations. BICC Proc. 2024, 2, 153–158. [Google Scholar]

- Demircioğlu, E.; Yazıcı, C.; Demir, B. Yapay zekâ destekli matematik eğitimi: Bir içerik analizi. Int. J. Soc. Humanit. Sci. Res. (JSHSR) 2024, 11, 771–785. [Google Scholar]

| Group | Assignment | Pre-test | Intervention | Post-test |
|---|---|---|---|---|
| Experimental Group | R | T1 | X | T2 |

| Test Type | Model | n | g | 95% CI Lower | 95% CI Upper | Q | p | I² (%) |
|---|---|---|---|---|---|---|---|---|
| Achievement | FEM | 13 | 0.55 | 0.41 | 0.69 | 217.75 | 0.00 | 94.48 |
| | REM | 13 | 0.74 | 0.13 | 1.35 | | | |
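The two rows above differ because the fixed-effect model (FEM) weights each study by the inverse of its sampling variance, whereas the random-effects model (REM) adds a between-study variance τ² to every study's variance before weighting, which widens the interval (0.13 to 1.35 instead of 0.41 to 0.69). The sketch below illustrates both ideas under stated assumptions. `i_squared` applies Higgins' formula I² = (Q − df)/Q × 100 to the reported Q = 217.75 with k = 13 studies (df = 12), giving about 94.49%, in line with the reported 94.48 up to rounding of Q. `pool` is a hypothetical helper that assumes the DerSimonian-Laird estimator of τ², which this section does not name, and it is demonstrated on invented effect sizes rather than on the 13 included studies.

```python
from typing import List, Tuple


def i_squared(q: float, k: int) -> float:
    """Higgins' I^2 (in percent) from Cochran's Q and the number of studies k."""
    df = k - 1
    if q <= 0:
        return 0.0
    # Share of total variation attributed to between-study heterogeneity,
    # truncated at zero when Q is smaller than its degrees of freedom.
    return max(0.0, (q - df) / q) * 100.0


def pool(effects: List[float], variances: List[float]) -> Tuple[float, float, float, float]:
    """Inverse-variance fixed-effect pooling plus a DerSimonian-Laird random-effects estimate.

    Assumption: DerSimonian-Laird is used for tau^2. Returns (g_fem, g_rem, q, tau2).
    """
    if len(effects) < 2 or len(effects) != len(variances):
        raise ValueError("need at least two studies with matching variances")
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    g_fem = sum(wi * gi for wi, gi in zip(w, effects)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (gi - g_fem) ** 2 for wi, gi in zip(w, effects))
    df = len(effects) - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    # Method-of-moments between-study variance, truncated at zero
    tau2 = max(0.0, (q - df) / c)
    # Random-effects weights add tau2 to every within-study variance
    w_re = [1.0 / (v + tau2) for v in variances]
    g_rem = sum(wi * gi for wi, gi in zip(w_re, effects)) / sum(w_re)
    return g_fem, g_rem, q, tau2


if __name__ == "__main__":
    # Reported values from the overall-effect table: Q = 217.75, k = 13
    print(round(i_squared(217.75, 13), 2))  # 94.49
    # Hypothetical effect sizes and variances, for illustration only
    print(pool([0.2, 0.9, 1.6], [0.04, 0.05, 0.09]))
```

When Q does not exceed its degrees of freedom, τ² is truncated to zero and the two models coincide; the larger Q is, the more the random-effects weights flatten toward equality and the more influence smaller studies receive.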

| Item | Groups | n | g | 95% CI Lower | 95% CI Upper | Z-Value (null test) | p-Value (null test) | Q-Value (heterogeneity) | df | p-Value (heterogeneity) |
|---|---|---|---|---|---|---|---|---|---|---|
| Education Level | University | 6 | 0.65 | −0.66 | 1.96 | 0.97 | 0.33 | | | |
| | Others | 7 | 0.80 | 0.17 | 1.42 | 2.49 | 0.01 | | | |
| | Total | 13 | 0.77 | 0.20 | 1.33 | 2.67 | 0.00 | 0.04 | 1 | 0.84 |
| Application Duration | Sessions | 7 | 0.30 | −0.29 | 0.90 | 1.00 | 0.32 | | | |
| | 9–+ | 4 | 0.33 | −0.12 | 0.79 | 1.43 | 0.15 | | | |
| | Total | 11 | 0.32 | −0.04 | 0.68 | 1.74 | 0.08 | 0.00 | 1 | 0.95 |
| Sample Size | Small | 6 | 0.01 | −0.24 | 0.26 | 0.09 | 0.93 | | | |
| | Medium | 3 | 1.82 | 1.21 | 2.43 | 5.88 | 0.00 | | | |
| | Large | 4 | 1.02 | −0.36 | 2.40 | 1.44 | 0.15 | | | |
| | Total | 13 | 0.29 | 0.06 | 0.52 | 2.51 | 0.01 | 30.29 | 2 | 0.00 |
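Each moderator block above ends with a Total row and a between-groups Q-Value with df equal to the number of subgroups minus one. A minimal sketch of how such rows can be reproduced, assuming the subgroups are combined with fixed-effect inverse-variance weights and that each standard error can be back-calculated as (Upper − Lower)/(2 × 1.96). Under that assumption the Education Level block gives a combined g of about 0.77 and Q of about 0.04, and the Sample Size block gives about 0.29 and 30.06 against the reported 30.29, the gap coming from the rounded interval endpoints. `between_groups` and `se_from_ci` are illustrative names, not functions from the software the authors used.

```python
import math
from typing import List, Tuple

Z95 = 1.96  # normal critical value assumed for the reported 95% intervals


def se_from_ci(lower: float, upper: float) -> float:
    """Back-calculate a standard error from a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * Z95)


def between_groups(subgroups: List[Tuple[float, float, float]]):
    """Fixed-effect combination of subgroup estimates and the Q_between test.

    subgroups: (g, lower, upper) for each level of a moderator.
    Returns (g_total, lower, upper, z, q_between, df).
    """
    weights = [1.0 / se_from_ci(lo, hi) ** 2 for _, lo, hi in subgroups]
    total_w = sum(weights)
    g_total = sum(w * g for w, (g, _, _) in zip(weights, subgroups)) / total_w
    se_total = math.sqrt(1.0 / total_w)
    # Q_between: weighted squared deviations of subgroup means from the combined mean
    q_between = sum(w * (g - g_total) ** 2 for w, (g, _, _) in zip(weights, subgroups))
    return (g_total, g_total - Z95 * se_total, g_total + Z95 * se_total,
            g_total / se_total, q_between, len(subgroups) - 1)


if __name__ == "__main__":
    education = [(0.65, -0.66, 1.96), (0.80, 0.17, 1.42)]  # University, Others
    sample_size = [(0.01, -0.24, 0.26), (1.82, 1.21, 2.43), (1.02, -0.36, 2.40)]
    for name, rows in [("Education level", education), ("Sample size", sample_size)]:
        g, lo, hi, z, q, df = between_groups(rows)
        print(name, round(g, 2), round(lo, 2), round(hi, 2), round(z, 2), round(q, 2), df)
```

A Q-Value that is large relative to its df (as for Sample Size) indicates that the subgroup means differ more than sampling error alone would explain, while a Q near zero (as for Education Level and Application Duration) indicates no detectable moderator effect.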

| Test Type | Group | n | X̄ | SD | df | Levene's F | Levene's p | t | p |
|---|---|---|---|---|---|---|---|---|---|
| Pre-test | Experiment | 14 | 18.64 | 1.94 | 26 | 2.28 | 0.14 | −14.17 | 0.00 |
| Post-test | Experiment | 14 | 27.93 | 1.49 | | | | | |
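The reported t can be checked against the descriptive statistics in the table. The sketch below is an illustration under stated assumptions, not the authors' procedure: `pooled_t` is a hypothetical helper computing Student's t with a pooled variance, the form that applies when Levene's test does not reject equal variances (here p = 0.14). With the table's means and standard deviations it gives t ≈ −14.21 on df = 26, matching the reported −14.17 up to rounding of the descriptives.

```python
import math


def pooled_t(n1: int, m1: float, sd1: float, n2: int, m2: float, sd2: float):
    """Student's t for two means with a pooled variance (equal variances assumed)."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom
    sp2 = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(sp2 * (1.0 / n1 + 1.0 / n2))
    return (m1 - m2) / se, df


if __name__ == "__main__":
    # Pre-test vs post-test descriptives from the table above
    t, df = pooled_t(14, 18.64, 1.94, 14, 27.93, 1.49)
    print(round(t, 2), df)  # -14.21 26
```

Note that df = 26 = 14 + 14 − 2 corresponds to this independent-samples form. If the pre-test and post-test scores come from the same 14 children, a paired-samples test would instead have df = 13 and would require the standard deviation of the gain scores, which the table does not report.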

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Doğan, Y.; Batdı, V.; Topkaya, Y.; Özüpekçe, S.; Akşab, H.V. Effectiveness of Artificial Intelligence Practices in the Teaching of Social Sciences: A Multi-Complementary Research Approach on Pre-School Education. Sustainability 2025, 17, 3159. https://doi.org/10.3390/su17073159
