1. Introduction

The detection of small objects in remote sensing images is known to be a challenging problem because these objects are represented by very few pixels relative to the image size. For example, in very high resolution (VHR) Pleiades satellite images (50 cm/pixel), vehicles are contained within an area of about 40 pixels (

pixels). To improve the detection of small objects, e.g., vehicles, ships, and animals in satellite images, conventional state-of-the-art object detectors in computer vision such as Faster R-CNN (Faster Region-based Convolutional Neural Network) [1], SSD (Single Shot Multibox Detector) [2], Feature Pyramid Network [3], Mask R-CNN [4], YOLOv3 (You Only Look Once version 3) [5], EfficientDet [6], or others (see the survey of 20 years of object detection in [7]) can be specialized by reducing anchor sizes and by using multi-scale feature learning with data augmentation to target these small object sizes. We mention here some recently proposed models that tackle generic small object detection, such as the improved Faster R-CNN [8], Feature-fused SSD [9], RefineDet [10], and SCAN (Semantic context aware network) [11]. For more details about their architectures and other developed models, we refer readers to a recent review on deep learning-based small object detection in the computer vision domain [12]. Back to the remote sensing domain, some efforts have been made to tackle the small object detection task by adapting existing detectors. In [13], Deconvolutional R-CNN was proposed, which places a deconvolutional layer after the last convolutional layer in order to recover more details and better localize small targets. This simple but efficient technique increased the performance of ship and plane detection compared to the original Faster R-CNN. In [14], an IoU-adaptive deformable R-CNN was developed with the goal of adapting the IoU threshold to the object size, making it easier to deal with small objects whose loss, according to the authors, would otherwise be absorbed during the training phase. In [15], UAV-YOLO was proposed to adapt YOLOv3 to detect small objects in unmanned aerial vehicle (UAV) data. YOLOv3 was slightly modified by concatenating two residual blocks of the network backbone that have the same size. By focusing more on the training optimization of their dataset with UAV-viewed perspectives, the authors reported superior performance of UAV-YOLO compared to YOLOv3 and SSD. Another enhancement of YOLOv3 was made in [16], where the proposed YOLO-fine deals better with small and very small objects thanks to its finer detection grids. In [17], the authors exploited and adapted the YOLOv3 detector for the detection of vehicles in Pleiades images at 50 cm/pixel. For this purpose, a dataset of 88 k vehicles was manually annotated to train the network. This approach therefore has the drawback of costly manual annotation and, when applied to images from another satellite sensor, a new annotation phase would be necessary. Moreover, if the resolution of these images is further reduced (to 1 m/pixel), we may reach the limits of the detection capacity of those detectors (as shown later in our experimental study).
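To make the orders of magnitude above concrete, the following minimal sketch converts an object footprint in metres into a pixel footprint at a given ground sampling distance. The 5 m x 2 m car footprint and the helper name `footprint_pixels` are assumptions chosen for illustration; they are not values or code taken from the works cited here.

```python
def footprint_pixels(length_m, width_m, gsd_m):
    """Return (length_px, width_px, area_px) for an object seen at a given
    ground sampling distance (metres per pixel)."""
    length_px = length_m / gsd_m
    width_px = width_m / gsd_m
    return length_px, width_px, length_px * width_px


# Hypothetical car footprint of 5 m x 2 m (an assumption, not a value from the text).
for gsd in (0.5, 1.0):
    length_px, width_px, area_px = footprint_pixels(5.0, 2.0, gsd)
    print(f"{gsd:g} m/pixel: {length_px:g} x {width_px:g} px, area = {area_px:g} px")
```

Under this assumed footprint, the area is 40 pixels at 50 cm/pixel and 10 pixels at 1 m/pixel, which is consistent with the orders of magnitude quoted in this section.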

An alternative approach that has drawn the attention of researchers is to perform super-resolution (SR) to increase the spatial resolution of the images (and thus the size and details of the objects) before performing the detection task. To deal with the lack of details in low-resolution images, the latest neural network SR techniques, such as Single Image Super-resolution (SISR) [18], CNN-based SR (SR-CNN) [19,20], Very Deep Super-resolution (VDSR) [21], Multiscale Deep Super-resolution (MDSR), Enhanced Deep Residual Super-resolution (EDSR) [22], Very Deep Residual Channel Attention Networks (RCAN) [23], Second-order Attention Network SR (SAN) [24], and Super-Resolution with Cascading Residual Network (CARN) [25], aim to increase the resolution of an image with much better quality than classical bicubic interpolation. For example, while a VDSR model [21] uses a large number of convolutional layers (very deep), an EDSR network [22] stacks residual blocks to generate super-resolved images with increased spatial resolution from low-resolution (LR) ones. Readers interested in these networks are invited to read the recent review articles on deep learning-based super-resolution approaches [26,27]. Returning to the use of SR techniques to assist the detection task, some recent studies on detecting small objects exploit the above SR methods to increase image resolution so that the detector can search for larger objects (i.e., in super-resolved images). In [28], the authors placed an SR network upstream of the SSD detector for vehicle detection in satellite images, with only slight modifications to the first SSD layers. They showed that an SSD working on super-resolved images (by a factor of 2 and 4) could yield significant improvement compared to the use of LR images. In addition, the authors of [29] described the gain provided by super-resolution with EDSR for different resolutions in satellite images. They observed that these techniques could considerably improve the SR results for 30-cm images with a factor of 2 (making it possible to reach a spatial resolution of 15 cm), but not with a higher factor (of 4, 6, or 8, for example). In [30], the authors proposed an architecture with three components: an enhanced super-resolution with residual-based generative adversarial network (GAN), an edge enhancement network, and a detector network (Faster R-CNN or SSD). They performed end-to-end training in which the gradient of the detection loss is back-propagated into the generator of the GAN. They reported significant improvement in the detection of cars and gas storage within VHR images at 15-cm and 30-cm resolution with an SR factor of 4.

Indeed, the higher the required super-resolution factor (i.e., the lower the input resolution), the greater the number of residual blocks and the size of these blocks (size of the convolution layers) must be in order to reconstruct the image correctly. A simple network with an evaluation or optimization criterion such as MAE (Mean Absolute Error) or MSE (Mean Square Error) is thus particularly difficult to train due to the large number of parameters. In this article, our motivation is to develop novel solutions to improve a super-resolution framework with the final goal of detecting small objects in remote sensing images. We start in Section 2 with a brief introduction of the EDSR architecture for super-resolution, which we select as our baseline model to develop different improvement strategies, without loss of generality. We then present in Section 3 the proposed improvements based on EDSR, namely integrating the Wasserstein generative adversarial network within a cycle model and adding an auxiliary network dedicated to object detection. To confirm and validate the effectiveness of our proposed strategies, we conduct an experimental study in Section 4 to evaluate the detection performance of small objects in aerial imagery using the ISPRS data [31]. More precisely, we seek to extract vehicles from images whose spatial resolution has been artificially reduced to 1 m/pixel (by a factor of 8 from original 12.5-cm/pixel images). The objects we are looking for thus cover an area of approximately 10 pixels (for example

pixels for a car). We report several qualitative and quantitative results of super-resolution and object detection that experimentally illustrate the interest of our strategies (Section 4.1). The generalization capacity of the proposed strategies is also studied by investigating another baseline SR network as well as several object detection models (Section 4.2 and Section 4.3). Then, we investigate the advantage of the proposed techniques within a multi-resolution transfer learning context where experiments are conducted on satellite xView data at 30-cm resolution [32] (Section 4.4). Finally, Section 5 draws some conclusions and discusses future work.

2. Residual Block-Based Super-Resolution

In this section, we briefly present different deep learning-based methods for super-resolution before focusing on the EDSR (Enhanced Deep Residual Super-resolution) architecture [22], which is our baseline model for the improvements proposed in this study. We selected EDSR because it has recently been adopted in several studies in the remote sensing domain [29,30,33]. However, this choice is not mandatory: any other SR model could take the place of EDSR. We will later investigate another SR baseline network in Section 4.2. As mentioned in the Introduction, the two articles [26,27] give a relatively complete overview of super-resolution techniques based on deep learning. A neural network specialized in super-resolution receives as input a low-resolution image, with its high-resolution version as reference. The network outputs an enhanced-resolution image by minimizing the distance between this output and the high-resolution reference. The “simplest” architectures are CNNs consisting of a stack of convolutional layers followed by one or more pixel rearrangement layers. A rearrangement layer changes the dimension of a set of feature maps from $[B, C r^2, H, W]$ to $[B, C, rH, rW]$, where $B$, $C$, $H$, and $W$ represent the number of batches, the number of channels, the height, and the width of the feature maps, respectively, and $r$ is the up-sampling factor. For example, with $r = 2$, we double the dimension along the x-axis and y-axis of the output feature map. Similar and further explanations can be found in related studies such as SISR [18] and SR-CNN [19,20]. The former only processes the luminance channel of the input image. These approaches already showed a clear improvement of the output image compared to classical bicubic interpolation, while allowing fast execution due to their low complexity (i.e., only five convolutional layers in the SISR approach [18]).
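The pixel rearrangement (pixel shuffle) operation described above can be sketched in a few lines of NumPy. This is an illustrative sketch only, assuming the channel ordering commonly used by deep learning frameworks (output pixel at offset (i, j) inside each r x r cell comes from input channel c·r² + i·r + j); the function name `pixel_shuffle` is ours and is not taken from the cited papers.

```python
import numpy as np


def pixel_shuffle(x, r):
    """Rearrange a tensor of shape [B, C*r*r, H, W] into [B, C, H*r, W*r]."""
    b, c_in, h, w = x.shape
    if c_in % (r * r) != 0:
        raise ValueError("the channel count must be divisible by r*r")
    c = c_in // (r * r)
    # Split the channel axis into (C, r, r) ...
    x = x.reshape(b, c, r, r, h, w)
    # ... then interleave the two r axes with the spatial axes: [B, C, H, r, W, r].
    x = x.transpose(0, 1, 4, 2, 5, 3)
    return x.reshape(b, c, h * r, w * r)


# Four 2x2 feature maps become one 4x4 map when r = 2.
x = np.arange(16).reshape(1, 4, 2, 2)
y = pixel_shuffle(x, 2)
print(y.shape)
```

No values are computed or learned in this step: the layer only moves existing values from the channel axis to the spatial axes, which is why it is cheap compared with the convolutional layers that precede it.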

An improvement of these networks is to replace the convolutional layers with residual blocks. Part of the input information of a layer is added to the output feature map of that layer. We focus here on the EDSR approach [

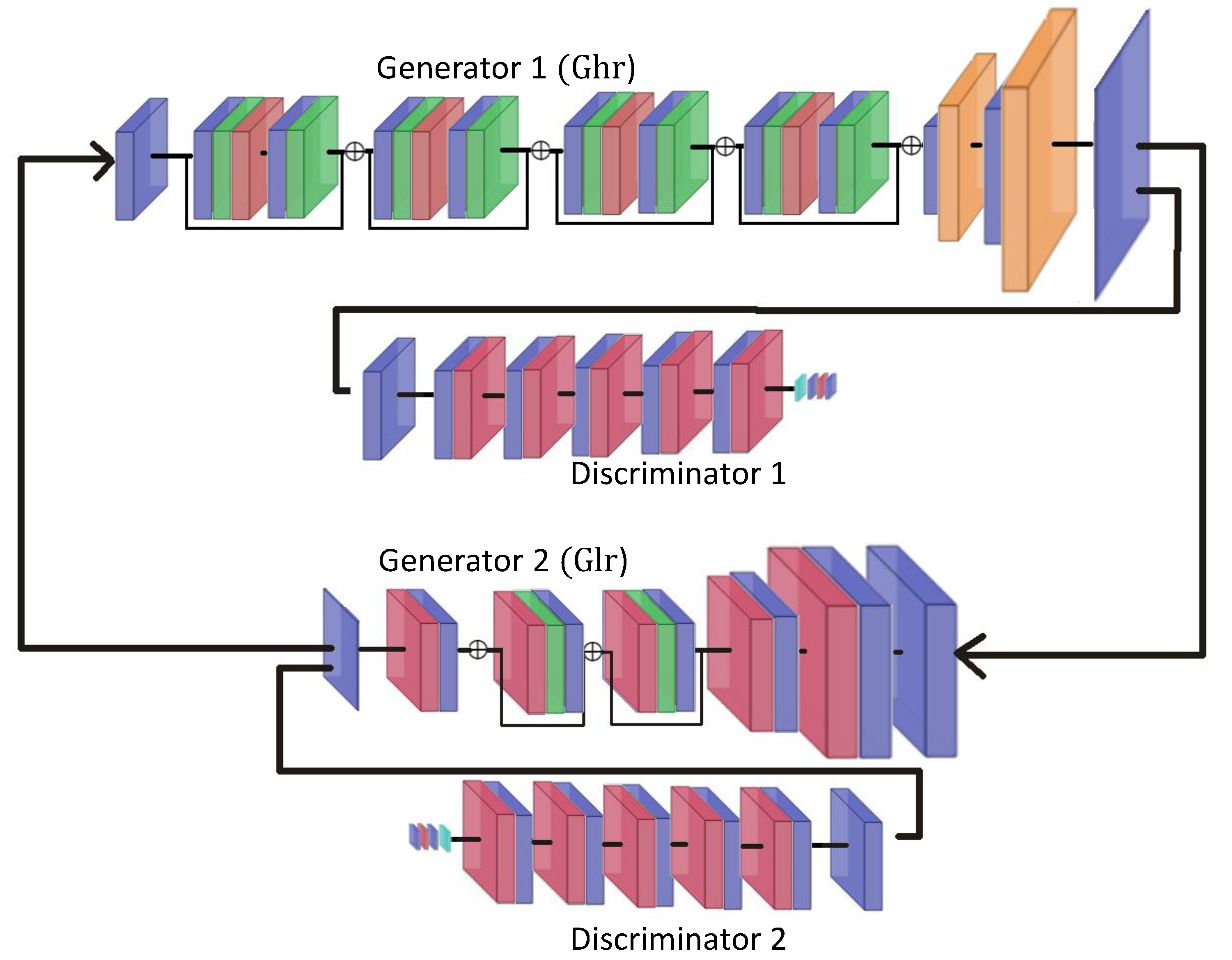

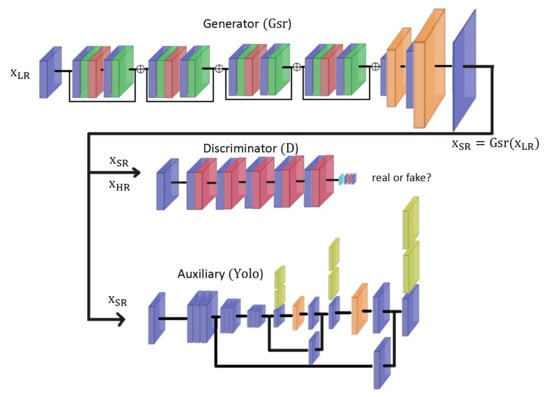

22] which proposes to exploit a set of residual blocks to replace simple convolutional blocks. A simplified illustration of this EDSR architecture is shown in

Figure 1. In this figure, the super-resolution is performed with a factor of 4, simply using four residual blocks formed by a sequence of convolutional layer (blue), normalization (green) and ReLu (red), then convolution and normalization again. The rearrangement layer with an upscaling factor of 2 (i.e., pixel shuffle operation) is shown in orange. We refer readers to the original paper [

22] for further details about this approach.
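As a rough illustration of the residual block sequence described for Figure 1 (convolution, normalization, ReLU, convolution, normalization, then addition of the block input), here is a NumPy sketch. It is deliberately simplified and rests on assumptions that are ours: the convolutions are 1x1 (pure channel mixing) instead of spatial kernels, the normalization is a plain per-channel standardization, and all function names are hypothetical.

```python
import numpy as np


def pointwise_conv(x, weight):
    """1x1 convolution (simplification): x is [C_in, H, W], weight is [C_out, C_in]."""
    return np.einsum("oc,chw->ohw", weight, x)


def channel_norm(x, eps=1e-5):
    """Standardize each channel; a stand-in for the normalization layer."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def residual_block(x, w1, w2):
    """conv -> norm -> ReLU -> conv -> norm, then add the block input."""
    y = channel_norm(pointwise_conv(x, w1))
    y = np.maximum(y, 0.0)  # ReLU
    y = channel_norm(pointwise_conv(y, w2))
    return x + y  # skip connection: the input is added to the output


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 16))
w1 = rng.standard_normal((8, 8)) * 0.1
w2 = rng.standard_normal((8, 8)) * 0.1
out = residual_block(x, w1, w2)
print(out.shape)  # same shape as the input
```

Because of the skip connection, a block whose weights are zero simply returns its input, so each block only has to learn a correction to the features it receives.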

As previously mentioned, we evaluate the behavior and performance of the approaches discussed here using the ISPRS 2D Semantic Labeling Contest dataset [31] (http://www2.isprs.org/commissions/comm3/wg4/2d-sem-label-potsdam.html). More specifically, we are interested in the aerial images acquired over the city of Potsdam, which are provided at a spatial resolution of 5 cm/pixel. This dataset was initially designed to evaluate semantic segmentation methods, considering six classes (impervious surfaces, buildings, low vegetation, trees, vehicles, and other/background). It can, however, be exploited for vehicle detection tasks, as done in [34], by retaining only the connected components of pixels belonging to the vehicle class. The generated dataset thus contains nearly 10,000 vehicles. We considered the RGB bands of these images to conduct experiments at different spatial resolutions artificially fixed at 12.5 cm/pixel, 50 cm/pixel, and 1 m/pixel, and to train the super-resolution network. The 12.5 cm/pixel resolution is our reference high-resolution (HR) version. We exploited an EDSR architecture with 16 residual blocks of size

. To optimize the results, EDSR additionally performs a normalization of the image pixels on the three bands during the inference phase, based on the average pixel values of the images used for training. For this network, the calculation of the error was performed by the cost function

and the optimizer used was Adam [35].
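The per-band normalization and the pixel-wise error criteria mentioned in this article (MAE and MSE) can be sketched as follows. This is a minimal illustration under our own assumptions: images are arrays of shape [H, W, 3], the helper names are hypothetical, and the sketch does not claim to reproduce which cost function was actually used to train the network.

```python
import numpy as np


def band_means(train_images):
    """Average pixel value per band over a list of [H, W, 3] training images."""
    total = np.zeros(3)
    count = 0
    for img in train_images:
        total += img.reshape(-1, 3).sum(axis=0)
        count += img.shape[0] * img.shape[1]
    return total / count


def normalize(img, means):
    """Subtract the training-set band means from an [H, W, 3] image."""
    return img - means


def mae(pred, target):
    """Mean Absolute Error between two arrays of the same shape."""
    return float(np.mean(np.abs(pred - target)))


def mse(pred, target):
    """Mean Square Error between two arrays of the same shape."""
    return float(np.mean((pred - target) ** 2))


# Toy data: two constant images of different sizes.
images = [np.full((2, 2, 3), 10.0), np.full((4, 4, 3), 20.0)]
means = band_means(images)
centered = normalize(images[0], means)
print(means, mae(centered, images[0]), mse(centered, images[0]))
```

Note that the means are weighted by pixel count, so larger training images contribute more to the average than smaller ones.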

Figure 2 shows the effect of super-resolution by a factor of 4, which increases the resolution from 50 cm/pixel to 12.5 cm/pixel. The gain over scaling by bicubic interpolation is clearly visible. To quantitatively evaluate the performance of super-resolution within our context of small object detection, we adopted the YOLOv3 detector [5]. YOLOv3 was trained here on HR images at 12.5 cm/pixel. The IoU (Intersection over Union) criterion was used to measure the overlap between a detected box and the corresponding ground truth box. The object is considered detected if its IoU is greater than a predefined threshold. Since the objects to be detected are small, the IoU threshold was set to

in our work. The network also provided for each detection a confidence score (between 0 and 1), which was compared to a confidence threshold value set to

. In short, only predicted boxes having an IoU greater than

and a confidence score greater than

were considered as detections. For detection evaluation, we measured the number of true positives (TP) and false positives (FP), as well as the F1-score, calculated from TP, FP, and the total number of objects. Finally, we calculated the mAP (mean Average Precision) as a precision index over different recall values.
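The evaluation rule described above (keep a predicted box only if its confidence and its IoU with a ground truth box both exceed their thresholds, then derive TP, FP, and F1) can be sketched as below. This is an illustrative sketch, not the evaluation code used in this study: the greedy one-to-one matching, the function names, and the threshold values in the usage example are our assumptions.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def evaluate(predictions, ground_truth, iou_thr, conf_thr):
    """predictions: list of (box, score). Returns (tp, fp, f1)."""
    # Discard low-confidence boxes, then process the rest by decreasing score.
    kept = sorted((p for p in predictions if p[1] > conf_thr),
                  key=lambda p: p[1], reverse=True)
    matched = set()
    tp = fp = 0
    for box, _score in kept:
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue  # each ground truth box can be matched only once
            value = iou(box, gt)
            if value > best_iou:
                best_iou, best_idx = value, idx
        if best_idx is not None and best_iou > iou_thr:
            matched.add(best_idx)
            tp += 1
        else:
            fp += 1
    fn = len(ground_truth) - tp  # missed objects, from the total number of objects
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return tp, fp, f1


# Hypothetical thresholds and boxes, for illustration only.
gt_boxes = [(0, 0, 4, 2), (10, 10, 14, 12)]
preds = [((0, 0, 4, 2), 0.9), ((11, 10, 15, 12), 0.8),
         ((30, 30, 34, 32), 0.7), ((0, 0, 4, 2), 0.1)]
print(evaluate(preds, gt_boxes, iou_thr=0.25, conf_thr=0.5))
```

For objects covering only a few pixels, a one-pixel shift of the predicted box already lowers the IoU considerably (the second prediction above, shifted by one pixel along a 4-pixel side, has an IoU of 0.6), which is the practical reason for choosing the IoU threshold with the object size in mind.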

Table 1 shows that super-resolution by EDSR provides results close to those obtained on high-resolution (HR) images (i.e., mAP of

compared to

), considerably better than those obtained by the simple bicubic interpolation (mAP =

).

We have observed that, with an SR factor of 4, the original EDSR approach is quite effective (mAP of

lower for detection compared to the use of the HR version). If the resolution of the image is lowered further to 1 m/pixel (i.e., the spatial resolution targeted in this study), as shown in Figure 3, super-resolution by EDSR with a factor of 8 (EDSR-8) does not allow a good reconstruction of the image.

Table 2 confirms the poor detection performance achieved by the YOLOv3 detector on SR images yielded by EDSR-8. Only a detection mAP of

was achieved, which was even close to the bicubic approach (

). Therefore, considerable effort is needed to improve the performance of EDSR with a factor of 8 in order to work with 1 m/pixel images in our context.

Before describing different proposals to improve the network performance in the next section, we note that one can simply enhance the quality of super-resolved images yielded by the EDSR approach by increasing the number of residual blocks as well as the size of these blocks. To illustrate this remark, we increased the number of residual blocks from 16 to 32 and the size of these blocks from

to

. As a result, the number of network parameters significantly increased from 1,665,307 to 6,399,387.

Figure 4 and Table 3 show a substantial improvement in image quality as well as in detection results provided by the enlarged network compared to the former one (i.e., mAP of

compared to

).
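To give a feel for why enlarging the network inflates the parameter count, the sketch below counts the weights of a stack of residual blocks made of two square convolutions each. It is a generic back-of-the-envelope model with assumed values (3x3 kernels, widths of 64 and 128 channels, hypothetical function names) and does not attempt to reproduce the exact totals reported above.

```python
def conv_params(kernel, c_in, c_out):
    """Weights plus biases of a kernel x kernel convolution layer."""
    return kernel * kernel * c_in * c_out + c_out


def residual_body_params(n_blocks, width, kernel=3):
    """Parameters of n_blocks residual blocks, each with two convolutions of equal width."""
    return n_blocks * 2 * conv_params(kernel, width, width)


# Assumed widths, for illustration only.
base = residual_body_params(16, 64)
more_blocks = residual_body_params(32, 64)
wider = residual_body_params(16, 128)
print(base, more_blocks / base, wider / base)

# Growth ratio between the two parameter counts reported in the text.
print(6_399_387 / 1_665_307)
```

In this simple model the count grows linearly with the number of blocks and roughly quadratically with the block width, so widening the blocks is the more expensive of the two changes.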

However, the main issue with such an approach is that training becomes heavy because of the huge number of parameters. In addition, a network evaluation criterion such as MAE (Mean Absolute Error) or MSE (Mean Square Error) with Adam optimization also remains limited. Several training runs conducted on the same set of SR images with 32 residual blocks of size

provided very different results. This instability depends in particular on the order in which the images are seen by the network during training and on the use of the random generator at different levels. We show in Table 4 some illustrative results from different training runs to support this remark. Thus, although the detection result has been enhanced by this simple technique, a detection mAP slightly higher than

cannot be considered sufficient in the context of object detection. Therefore, in the following section, we propose some improvements in network architecture that allow more stable super-resolution learning as well as better small object detection performance.