An Efficient Filtering Approach for Removing Outdoor Point Cloud Data of Manhattan-World Buildings

Abstract

1. Introduction

2. Materials and Methods

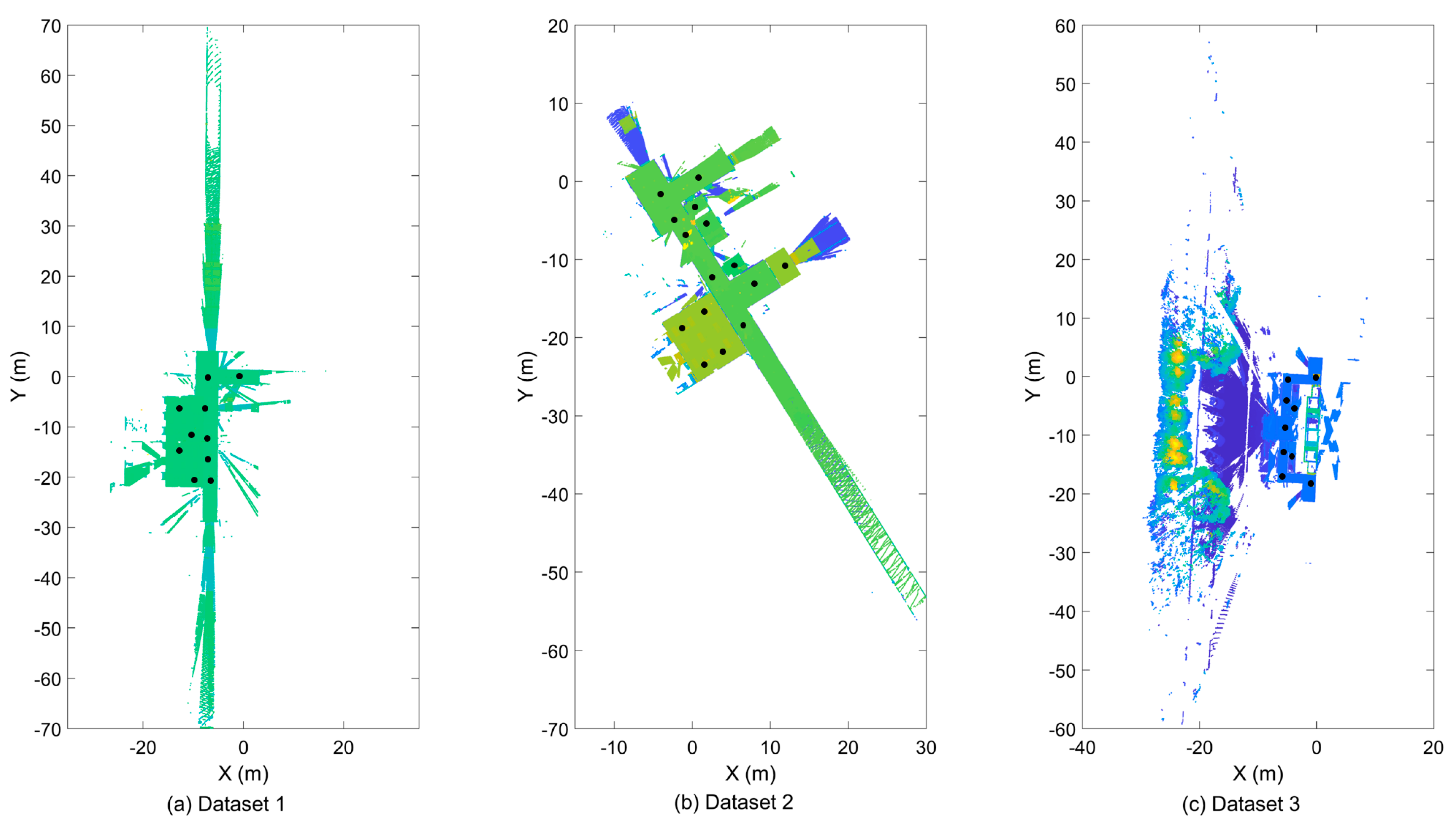

2.1. Study Sites and Data

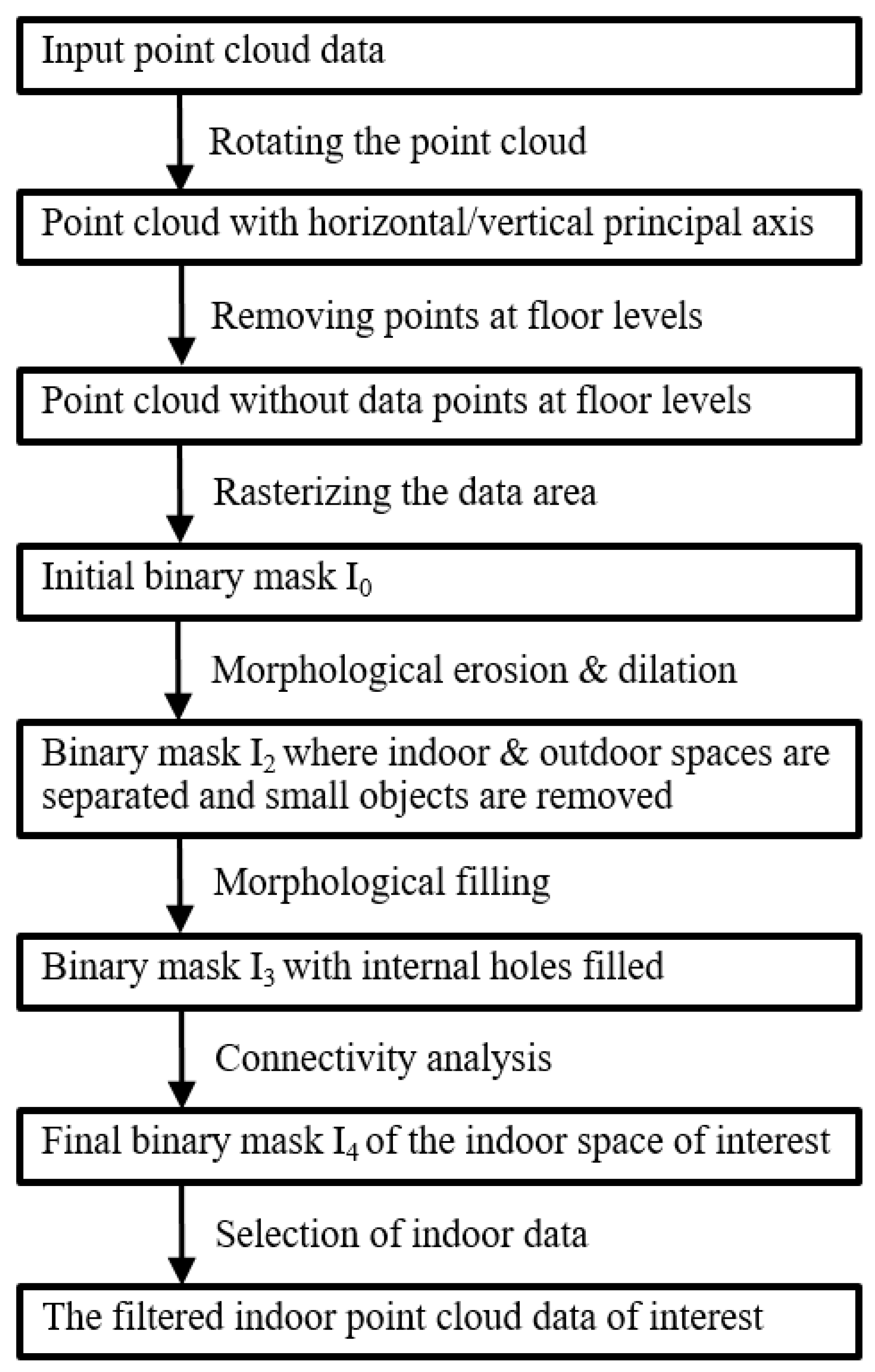

2.2. Methodology

2.2.1. Data Pre-Processing

2.2.2. Removal of Data Points at the Floor Level

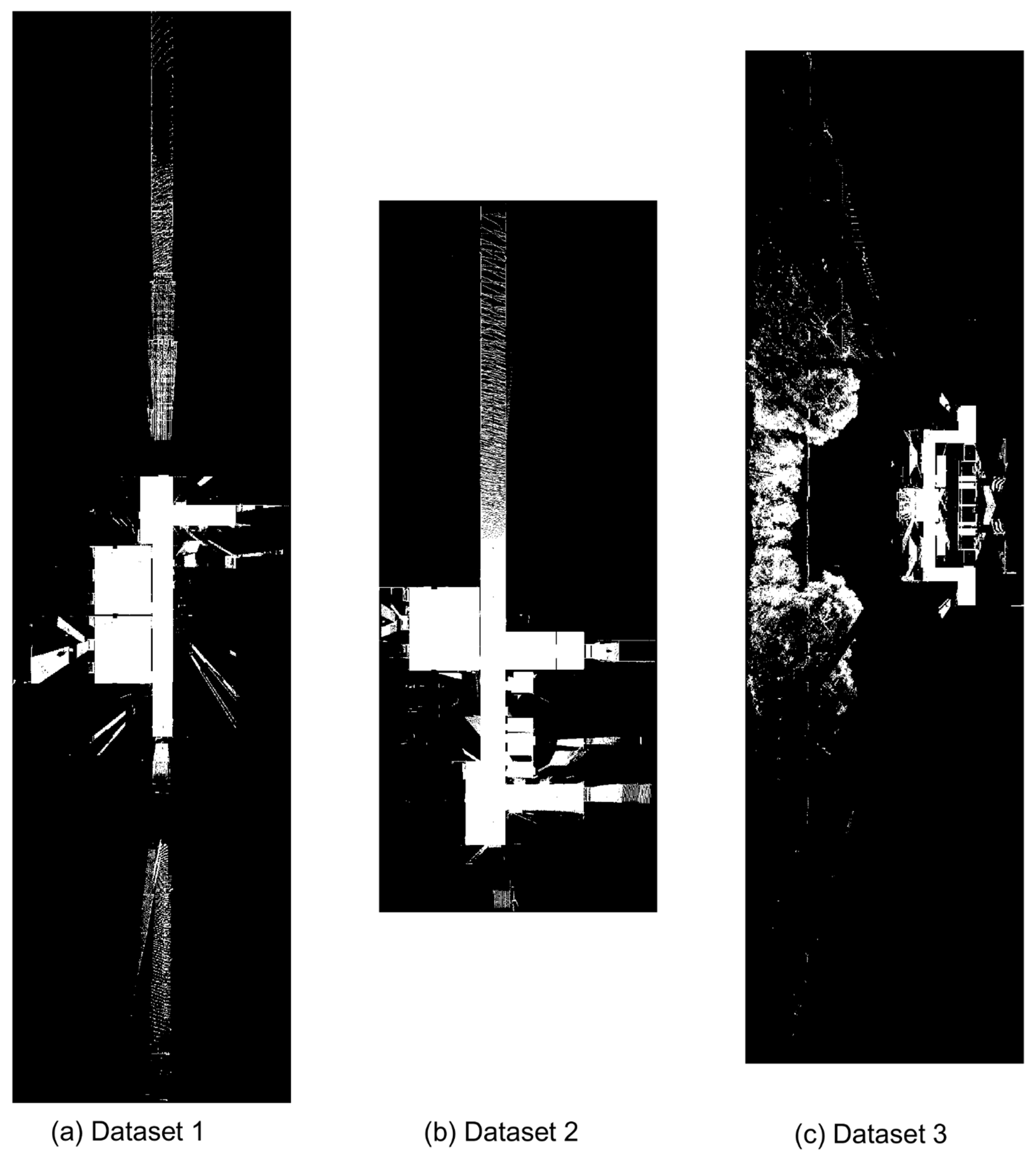

2.2.3. Pixelation of the Data Area

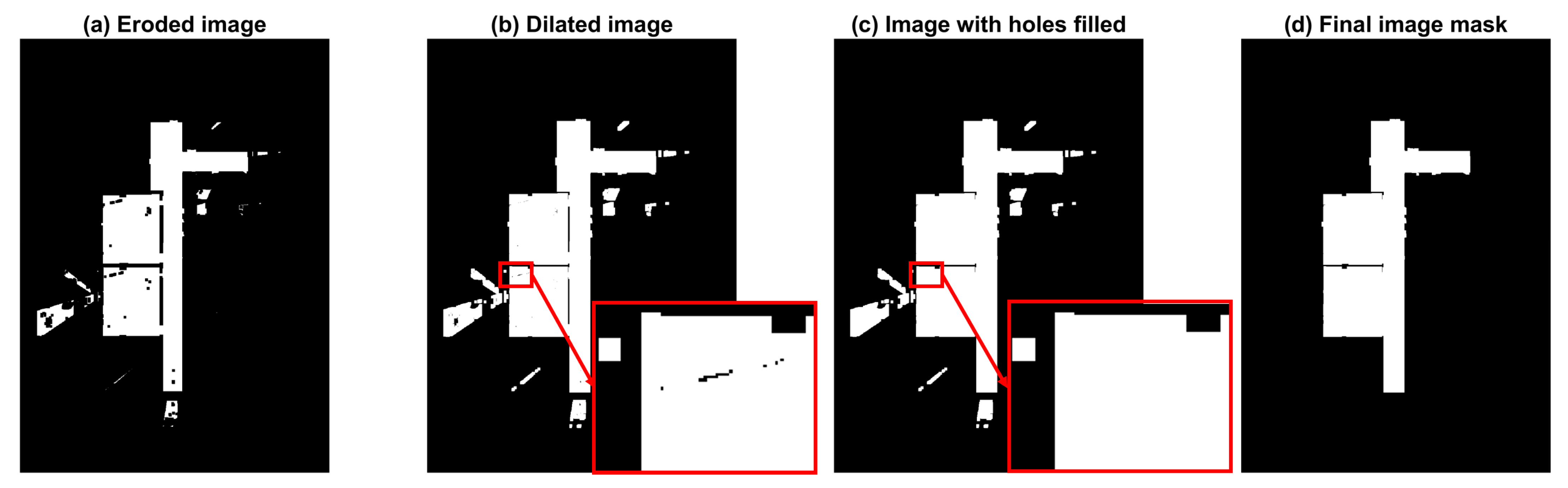

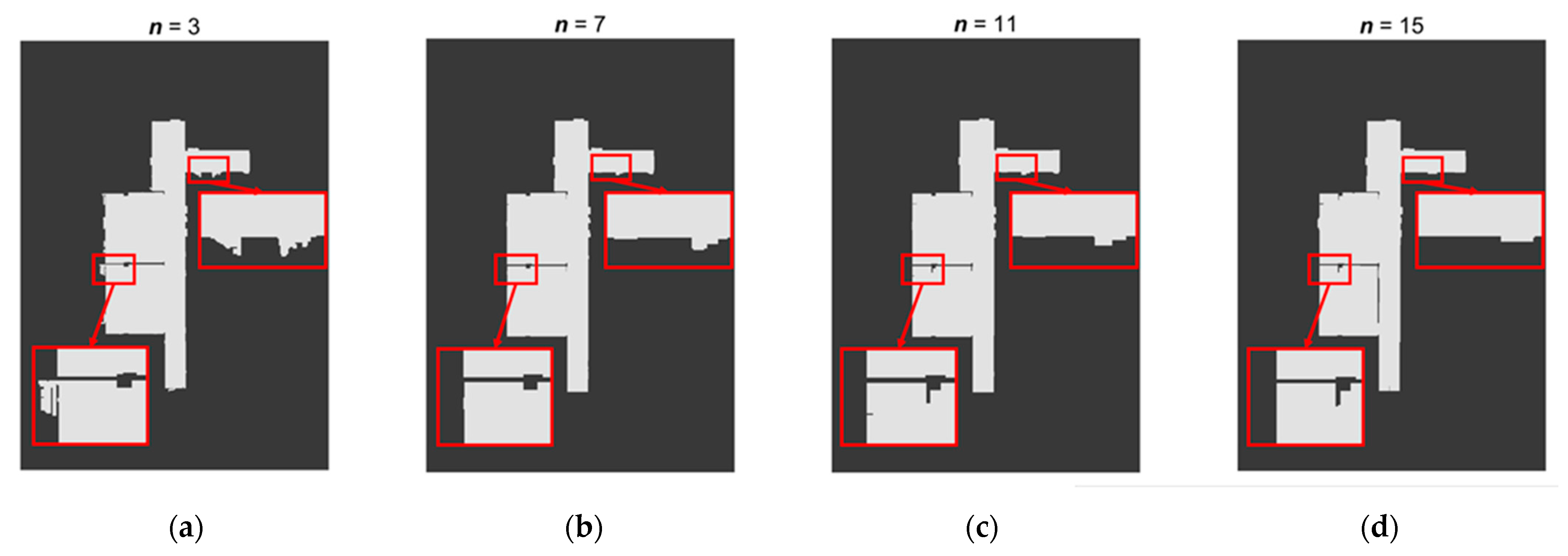

2.2.4. Morphological Erosion and Dilation

2.2.5. Hole Filling

2.2.6. Connectivity Analysis

2.2.7. Selection of Indoor Data

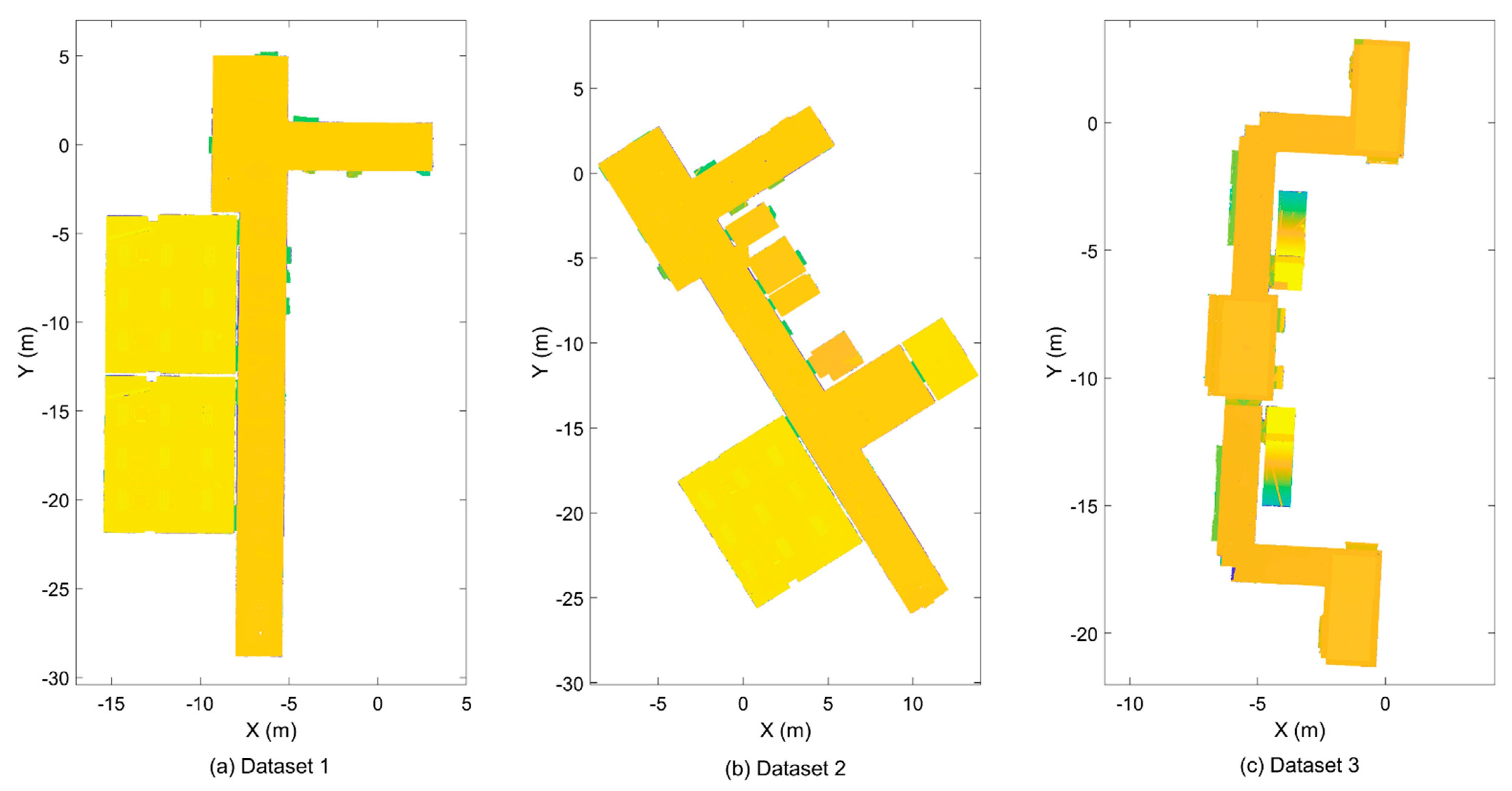

3. Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset Number | Minimum Distance for Downsampling | Pixel Size of Binary Images | Structuring Element (SE) Size for the xy Projection | Structuring Element (SE) Size for the yz Projection | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| 1 | 20 mm | 50 mm | 7 | 7 | 99.70% | 99.01% | 99.35% |
| 2 | 20 mm | 50 mm | 11 | 5 | 99.50% | 98.65% | 99.07% |
| 3 | 20 mm | 50 mm | 17 | 7 | 99.31% | 98.52% | 98.92% |
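
The F1-score column in the table can be cross-checked against the precision and recall columns. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall; the source does not state the formula, and the names `reported`, `f1_score`, and `f1_differences` are illustrative only.

```python
# Precision, recall, and reported F1 (all in percent), copied from the table.
reported = {
    1: (99.70, 99.01, 99.35),
    2: (99.50, 98.65, 99.07),
    3: (99.31, 98.52, 98.92),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2.0 * precision * recall / (precision + recall)


def f1_differences(rows):
    """Return {dataset: recomputed F1 minus reported F1} in percentage points."""
    return {k: f1_score(p, r) - f1 for k, (p, r, f1) in rows.items()}


if __name__ == "__main__":
    for dataset, (p, r, f1) in reported.items():
        print(f"dataset {dataset}: recomputed F1 = {f1_score(p, r):.4f}%, "
              f"reported F1 = {f1:.2f}%")
```

Under this assumption, the recomputed values agree with the reported ones to within 0.01 percentage points for all three datasets. The small residuals may simply reflect rounding of the tabulated precision and recall.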


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fan, L.; Cai, Y. An Efficient Filtering Approach for Removing Outdoor Point Cloud Data of Manhattan-World Buildings. Remote Sens. 2021, 13, 3796. https://doi.org/10.3390/rs13193796
