Wetland Mapping in Great Lakes Using Sentinel-1/2 Time-Series Imagery and DEM Data in Google Earth Engine

Abstract

1. Introduction

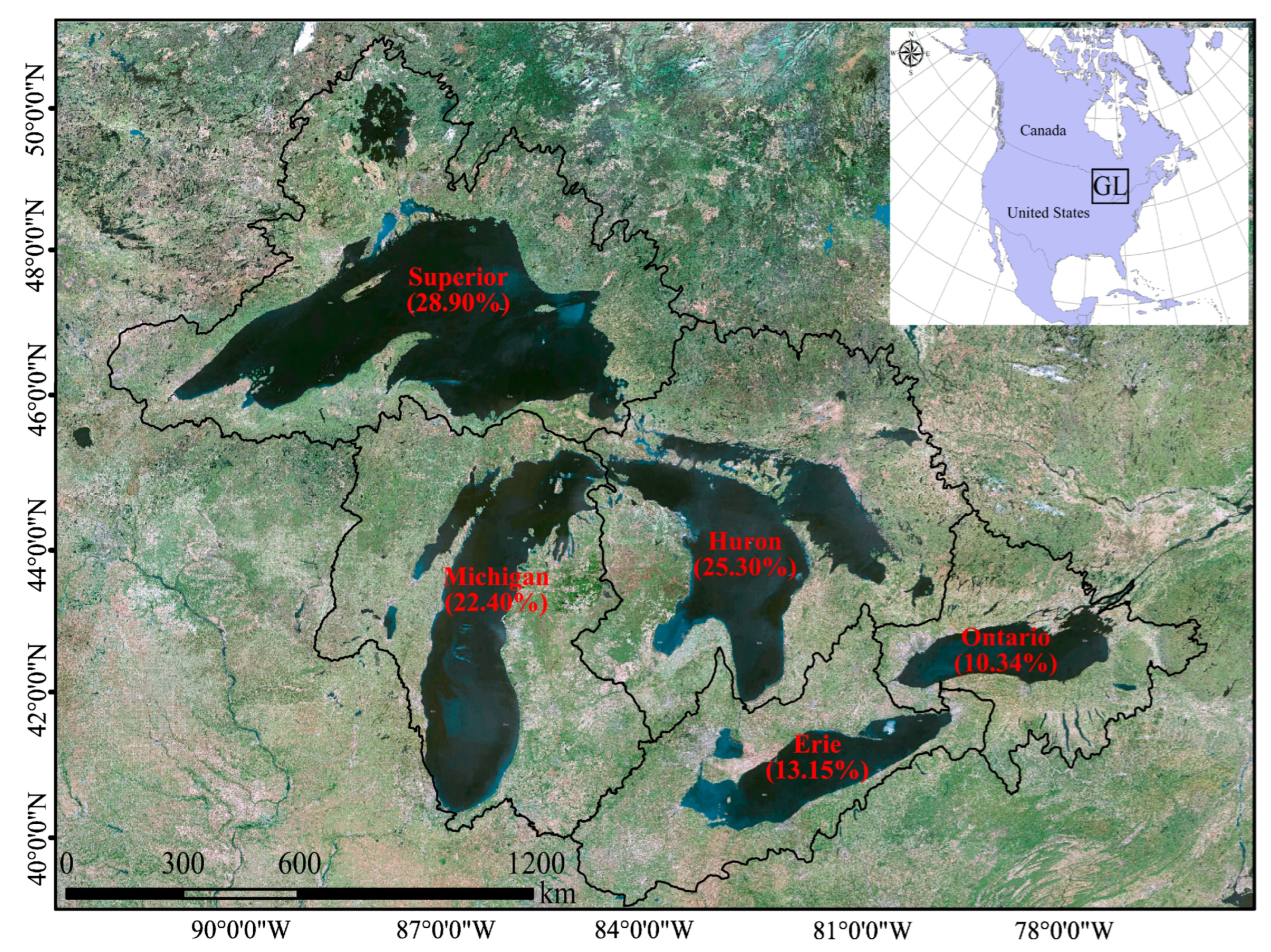

2. Study Area and Data

2.1. Study Area

2.2. Field Data

2.3. Satellite Data

3. Methodology

3.1. Data Preparation

3.1.1. Field Data Preprocessing

3.1.2. RS Data Preprocessing

3.1.3. Feature Extraction

3.2. Classification Model

3.2.1. Segmentation

3.2.2. Classification

- Initial classification

- Final classification

3.3. Accuracy Assessment

4. Results

4.1. Classified Wetland Map

4.2. Distribution of the Wetland Classes in the GL

4.3. Statistical Accuracy Assessment

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Lake Erie (rows: in situ samples; columns: map classes; PA: producer's accuracy; UA: user's accuracy)

| In situ | Barren | Bog | Cropland | Open Water | Fen | Forest | Grassland/Shrubland | Marsh | Swamp | PA |
|---|---|---|---|---|---|---|---|---|---|---|
| Barren | 5063 | 0 | 28 | 0 | 1 | 21 | 108 | 7 | 0 | 96.84% |
| Bog | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Cropland | 260 | 2 | 29,263 | 1 | 21 | 277 | 356 | 51 | 16 | 96.75% |
| Open Water | 20 | 0 | 21 | 6860 | 0 | 2 | 0 | 1 | 1 | 99.35% |
| Fen | 15 | 0 | 1 | 0 | 11 | 9 | 0 | 0 | 0 | 30.56% |
| Forest | 12 | 0 | 13 | 0 | 1 | 6047 | 1 | 3 | 43 | 98.81% |
| Grassland/Shrubland | 17 | 0 | 81 | 0 | 1 | 54 | 501 | 1 | 27 | 73.46% |
| Marsh | 139 | 1 | 406 | 35 | 21 | 137 | 41 | 4696 | 19 | 85.46% |
| Swamp | 78 | 0 | 41 | 0 | 0 | 231 | 6 | 7 | 5585 | 93.90% |
| UA | 90.35% | 0 | 98.02% | 99.48% | 19.64% | 89.22% | 49.46% | 98.53% | 98.14% | |

Lake Michigan (rows: in situ samples; columns: map classes; PA: producer's accuracy; UA: user's accuracy)

| In situ | Barren | Bog | Cropland | Open Water | Fen | Forest | Grassland/Shrubland | Marsh | Swamp | PA |
|---|---|---|---|---|---|---|---|---|---|---|
| Barren | 3154 | 0 | 66 | 0 | 9 | 20 | 68 | 18 | 7 | 94.37% |
| Bog | 0 | 703 | 131 | 1 | 6 | 84 | 0 | 15 | 1 | 74.71% |
| Cropland | 19 | 3 | 2899 | 1 | 0 | 410 | 0 | 1 | 0 | 86.98% |
| Open Water | 6 | 0 | 78 | 392 | 0 | 4 | 0 | 2 | 0 | 81.33% |
| Fen | 3 | 0 | 283 | 1 | 1863 | 45 | 0 | 10 | 0 | 84.49% |
| Forest | 4 | 0 | 6 | 0 | 2 | 1980 | 2 | 6 | 4 | 98.80% |
| Grassland/Shrubland | 0 | 0 | 0 | 0 | 0 | 0 | 69 | 0 | 0 | |
| Marsh | 47 | 0 | 339 | 123 | 9 | 39 | 0 | 1714 | 1 | 75.44% |
| Swamp | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |
| UA | 97.56% | 99.58% | 76.25% | 75.68% | 98.62% | 76.68% | 49.64% | 97.06% | 0.00% | |

Lake Superior (rows: in situ samples; columns: map classes; PA: producer's accuracy; UA: user's accuracy)

| In situ | Barren | Bog | Cropland | Open Water | Fen | Forest | Grassland/Shrubland | Marsh | Swamp | PA |
|---|---|---|---|---|---|---|---|---|---|---|
| Barren | 1953 | 2 | 180 | 4 | 55 | 19 | 26 | 439 | 0 | 72.93% |
| Bog | 0 | 872 | 0 | 1 | 22 | 76 | 0 | 3 | 2 | 89.34% |
| Cropland | 1 | 0 | 935 | 0 | 32 | 0 | 295 | 1 | 0 | 73.97% |
| Open Water | 0 | 0 | 0 | 3164 | 0 | 0 | 0 | 3 | 0 | 99.91% |
| Fen | 0 | 0 | 0 | 0 | 9486 | 115 | 0 | 10 | 1 | 98.69% |
| Forest | 2 | 2 | 1 | 5 | 18 | 9021 | 0 | 12 | 22 | 99.32% |
| Grassland/Shrubland | 0 | 0 | 0 | 0 | 0 | 11 | 53 | 0 | 0 | 82.81% |
| Marsh | 0 | 4 | 0 | 33 | 9 | 70 | 0 | 998 | 1 | 89.51% |
| Swamp | 1 | 0 | 2 | 4 | 29 | 308 | 1 | 13 | 1082 | 75.14% |
| UA | 99.80% | 99.09% | 83.63% | 98.54% | 98.29% | 93.77% | 14.13% | 67.48% | 97.65% | |

Lake Huron (rows: in situ samples; columns: map classes; PA: producer's accuracy; UA: user's accuracy)

| In situ | Barren | Bog | Cropland | Open Water | Fen | Forest | Grassland/Shrubland | Marsh | Swamp | PA |
|---|---|---|---|---|---|---|---|---|---|---|
| Barren | 6228 | 0 | 34 | 74 | 4 | 0 | 0 | 1 | 0 | 98.22% |
| Bog | 1 | 286 | 204 | 3 | 12 | 99 | 0 | 15 | 0 | 46.13% |
| Cropland | 2074 | 14 | 149,127 | 77 | 988 | 5413 | 140 | 429 | 70 | 94.19% |
| Open Water | 0 | 0 | 0 | 40,522 | 1 | 5 | 0 | 0 | 0 | 99.99% |
| Fen | 2 | 1 | 9 | 4 | 2393 | 28 | 0 | 3 | 1 | 98.03% |
| Forest | 19 | 1 | 20 | 10 | 8 | 17,275 | 2 | 11 | 39 | 99.37% |
| Grassland/Shrubland | 3 | 0 | 97 | 2 | 3 | 143 | 529 | 43 | 1 | 64.43% |
| Marsh | 65 | 0 | 702 | 1302 | 30 | 191 | 4 | 4141 | 22 | 64.13% |
| Swamp | 2 | 0 | 33 | 0 | 1 | 496 | 0 | 1 | 2675 | 83.39% |
| UA | 74.20% | 94.70% | 99.27% | 96.49% | 69.56% | 73.04% | 78.37% | 89.17% | 95.26% | |

Lake Ontario (rows: in situ samples; columns: map classes; PA: producer's accuracy; UA: user's accuracy)

| In situ | Barren | Bog | Cropland | Open Water | Fen | Forest | Grassland/Shrubland | Marsh | Swamp | PA |
|---|---|---|---|---|---|---|---|---|---|---|
| Barren | 5951 | 0 | 305 | 13 | 0 | 14 | 30 | 0 | 0 | 94.27% |
| Bog | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0.00% |
| Cropland | 262 | 3 | 32,514 | 5 | 46 | 2040 | 181 | 84 | 16 | 92.50% |
| Open Water | 0 | 0 | 0 | 5617 | 0 | 0 | 1 | 15 | 0 | 99.72% |
| Fen | 6 | 0 | 0 | 0 | 155 | 16 | 2 | 7 | 3 | 82.01% |
| Forest | 14 | 0 | 17 | 0 | 2 | 11,762 | 8 | 6 | 5 | 99.56% |
| Grassland/Shrubland | 122 | 0 | 88 | 0 | 1 | 25 | 169 | 18 | 1 | 39.86% |
| Marsh | 18 | 0 | 1923 | 46 | 1 | 39 | 20 | 4748 | 1 | 69.86% |
| Swamp | 800 | 0 | 228 | 0 | 0 | 78 | 2 | 4 | 4994 | 81.79% |
| UA | 82.96% | 0.00% | 92.70% | 98.87% | 75.61% | 84.16% | 40.92% | 97.26% | 99.48% | |


| Category | Class | MTRI 1 | NASA 2 | Dal 3 | ECCC 4 | OP 5 | NDMNRF 6 | This Study |
|---|---|---|---|---|---|---|---|---|
| Non-wetland classes | Barren | 0 | 0 | 0 | 0 | 59 (4368.7) | 24 (116.7) | 1723 (3484.03) |
| | Cropland | 12 (198.6) | 0 | 0 | 0 | 364 (2829.5) | 4 (37.0) | 3967 (51,358.59) |
| | Open Water | 155 (3775.2) | 0 | 113 (51.0) | 8 (1286.0) | 0 | 20 (226.0) | 1644 (28,893.95) |
| | Forest | 43 (1043.8) | 5 (46.2) | 0 | 35 (617.9) | 0 | 472 (13,491.6) | 4603 (29,870.02) |
| | Grassland/Shrubland | 0 | 0 | 0 | 0 | 300 (1534.2) | 2 (8.2) | 4611 (30,738.90), for all Grassland/Shrubland, Bog, Fen, Marsh, and Swamp |
| Wetland classes | Bog | 59 (1125.0) | 15 (112.1) | 0 | 0 | 16 (68.2) | 11 (122.4) | |
| | Fen | 99 (5831.6) | 17 (188.9) | 0 | 0 | 0 | 30 (425.6) | |
| | Marsh | 695 (13,314.7) | 188 (834.6) | 54 (88.7) | 27 (1885.4) | 0 | 116 (1309.2) | |
| | Swamp | 0 | 0 | 48 (782.1) | 13 (1736.3) | 0 | 211 (3835.8) | |
| Total | | 1062 (25,284.6) | 224 (1179.2) | 215 (921.8) | 83 (5525.6) | 712 (8629.0) | 890 (19,572.5) | 16,548 (144,345.49) |

Rows: reference samples; columns: predicted samples (PA: producer's accuracy; UA: user's accuracy)

| Reference Samples | Barren | Bog | Cropland | Open Water | Fen | Forest | Grassland/Shrubland | Marsh | Swamp | PA |
|---|---|---|---|---|---|---|---|---|---|---|
| Barren | 21,784 | 1 | 1193 | 90 | 69 | 67 | 213 | 455 | 5 | 91.23% |
| Bog | 1 | 1862 | 335 | 5 | 41 | 257 | 0 | 34 | 3 | 73.36% |
| Cropland | 2218 | 15 | 217,888 | 79 | 1027 | 5985 | 483 | 483 | 94 | 95.45% |
| Open Water | 23 | 0 | 140 | 56,495 | 1 | 14 | 0 | 24 | 3 | 99.64% |
| Fen | 26 | 1 | 323 | 5 | 13,878 | 212 | 2 | 29 | 4 | 95.84% |
| Forest | 46 | 3 | 587 | 17 | 29 | 45,488 | 9 | 50 | 114 | 98.16% |
| Grassland/Shrubland | 90 | 0 | 494 | 3 | 4 | 215 | 1104 | 60 | 21 | 55.45% |
| Marsh | 252 | 6 | 3855 | 1535 | 71 | 488 | 1826 | 15,827 | 44 | 66.21% |
| Swamp | 845 | 0 | 673 | 5 | 30 | 1113 | 8 | 29 | 13,999 | 83.82% |
| UA | 86.15% | 98.62% | 96.63% | 97.01% | 91.60% | 84.49% | 30.29% | 93.15% | 97.98% | |
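The PA and UA figures in the confusion matrix above can be recomputed from the raw counts: PA divides each diagonal count by its row total (reference samples), and UA divides it by its column total (predicted samples). The following is a minimal sketch in plain Python, not the authors' code; the function and variable names are illustrative, and the counts are copied from the matrix above.

```python
import math

# Class order shared by rows (reference samples) and columns (predicted samples).
CLASSES = ["Barren", "Bog", "Cropland", "Open Water", "Fen",
           "Forest", "Grassland/Shrubland", "Marsh", "Swamp"]

# Counts copied from the overall confusion matrix above.
CONFUSION = [
    [21784, 1, 1193, 90, 69, 67, 213, 455, 5],
    [1, 1862, 335, 5, 41, 257, 0, 34, 3],
    [2218, 15, 217888, 79, 1027, 5985, 483, 483, 94],
    [23, 0, 140, 56495, 1, 14, 0, 24, 3],
    [26, 1, 323, 5, 13878, 212, 2, 29, 4],
    [46, 3, 587, 17, 29, 45488, 9, 50, 114],
    [90, 0, 494, 3, 4, 215, 1104, 60, 21],
    [252, 6, 3855, 1535, 71, 488, 1826, 15827, 44],
    [845, 0, 673, 5, 30, 1113, 8, 29, 13999],
]


def producer_accuracy(matrix):
    """Diagonal count over its row total; NaN when the row is empty."""
    return [row[i] / sum(row) if sum(row) else math.nan
            for i, row in enumerate(matrix)]


def user_accuracy(matrix):
    """Diagonal count over its column total; NaN when the column is empty."""
    col_totals = [sum(col) for col in zip(*matrix)]
    return [matrix[i][i] / total if total else math.nan
            for i, total in enumerate(col_totals)]


def overall_accuracy(matrix):
    """Sum of the diagonal over the sum of all cells."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / sum(sum(row) for row in matrix)


if __name__ == "__main__":
    pa = producer_accuracy(CONFUSION)
    ua = user_accuracy(CONFUSION)
    for name, p, u in zip(CLASSES, pa, ua):
        print(f"{name:<20} PA={p:.2%}  UA={u:.2%}")
    print(f"Overall accuracy: {overall_accuracy(CONFUSION):.2%}")
```

This sketch returns NaN for a class with no reference or predicted samples, whereas the per-lake matrices in Appendix A show 0 or a blank cell in those cases (for example, Bog in Lake Erie).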


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mohseni, F.; Amani, M.; Mohammadpour, P.; Kakooei, M.; Jin, S.; Moghimi, A. Wetland Mapping in Great Lakes Using Sentinel-1/2 Time-Series Imagery and DEM Data in Google Earth Engine. Remote Sens. 2023, 15, 3495. https://doi.org/10.3390/rs15143495
