LiDAR-Based Local Path Planning Method for Reactive Navigation in Underground Mines

Abstract

1. Introduction

2. Study Materials

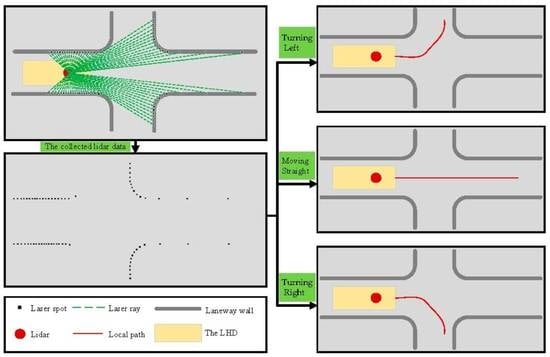

3. Methodology

3.1. Binary Map

3.2. Thinning Algorithm

| Algorithm 1 Thinning algorithm |

Input: Binary map, where 1 is background and 0 is foreground

Output: Refined map

1: while True do

2: Define an empty matrix with 2 columns

3: for

4: for

5: if

6: Count the number occurrences of two adjacent pixels in eight pixels near from clockwise around

7: if

8: Count the number

9: if

10: if

11: if

12: Append

13: end for

14: end for

15: if

16: The pixel value of

17: else

18: Break

19: end if

20: Repeat lines 3–19, changing

21: if

22: if

23: end while

24: Output the refined map
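The loop structure of Algorithm 1 (scan every pixel, collect candidates in a two-column list, delete them, then repeat with a changed condition until nothing is removed) matches the classic two-pass parallel thinning scheme of Zhang and Suen. The sketch below is a minimal Python version under that assumption. The function name `zhang_suen_thin`, the neighbour ordering, and the specific deletion conditions are taken from the standard Zhang-Suen formulation and are assumptions here, not the authors' exact listing. It keeps the listing's convention that 0 is foreground and 1 is background.

```python
import numpy as np

# Clockwise neighbour offsets P2..P9, starting from the pixel directly above.
OFFSETS = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]


def zhang_suen_thin(binary_map):
    """Thin a map where 0 = foreground and 1 = background.

    Assumed conditions: standard Zhang-Suen two-pass thinning.
    """
    # Invert so foreground is 1, and zero-pad so neighbour lookups never leave the array.
    fg = np.pad(1 - np.asarray(binary_map, dtype=np.uint8), 1)
    while True:
        changed = False
        for step in (0, 1):
            to_delete = []  # (row, col) pairs, the "matrix with 2 columns"
            for r, c in zip(*np.nonzero(fg)):
                p = [int(fg[r + dr, c + dc]) for dr, dc in OFFSETS]
                b = sum(p)  # number of foreground neighbours
                # Number of 0 -> 1 transitions going clockwise around the pixel.
                a = sum(p[i] == 0 and p[(i + 1) % 8] == 1 for i in range(8))
                if not (2 <= b <= 6 and a == 1):
                    continue
                p2, p4, p6, p8 = p[0], p[2], p[4], p[6]
                if step == 0:
                    ok = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                else:
                    ok = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                if ok:
                    to_delete.append((r, c))
            # Delete only after the full scan so the pass behaves as a parallel update.
            for r, c in to_delete:
                fg[r, c] = 0
            changed = changed or bool(to_delete)
        if not changed:
            break
    # Remove the padding and restore the 0 = foreground convention.
    return 1 - fg[1:-1, 1:-1]


if __name__ == "__main__":
    # Hypothetical test map: a 3-pixel-thick horizontal corridor.
    demo = np.ones((7, 12), dtype=np.uint8)
    demo[2:5, 1:11] = 0
    print(zhang_suen_thin(demo))
```

Collecting candidates first and deleting them after the scan is what keeps each pass order-independent; deleting in place would make the result depend on the scan direction.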

3.3. Centerline Extraction

| Algorithm 2 Search tree algorithm |

| Algorithm 3 Get all paths from the search tree |

3.4. Smoothing Method

3.5. Method Comparison and Robustness Evaluation

4. Results

4.1. Dataset 1

4.1.1. In the Case of Moving Straight

4.1.2. In the Case of Turning Left

4.1.3. In the Case of Turning Right

4.1.4. Summary

4.2. Dataset 2

5. Discussion

5.1. Sensitivity Analysis of Map Resolution

5.2. Prospect

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Figure 8 panel | Offset Error (m) | Direction Error (rad) |
|---|---|---|
| (a) | −0.1154 | −0.0102 |
| (b) | 0.1300 | 0.0771 |
| (c) | −0.2812 | −0.6213 |

| Figure 10 panel | Offset Error (m) | Direction Error (rad) |
|---|---|---|
| (a) | 0.0143 | −0.1461 |
| (b) | −0.4274 | −0.3398 |
| (c) | −0.1696 | 0.0069 |
| (d) | 0.1871 | 0.1028 |
| (e) | 0.1477 | −0.0920 |

| Method | Total Time (s) | Average Time (s) |
|---|---|---|
| Hough transform | 5295.17 | 0.366 |
| Proposed | 409.83 | 0.028 |

| Method | Total Time (s) | Average Time (s) |
|---|---|---|
| Hough transform | 4251.26 | 0.363 |
| Proposed | 730.43 | 0.062 |
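As a quick reading aid for the two timing tables above, the short Python sketch below computes the ratio of total run times (Hough transform divided by proposed method). The dictionary keys and the helper name `speedup` are illustrative labels only; the numbers are copied from the tables.

```python
# Total run times in seconds, copied from the two timing tables above.
# The keys "first table" and "second table" are illustrative labels.
timings = {
    "first table": {"Hough transform": 5295.17, "Proposed": 409.83},
    "second table": {"Hough transform": 4251.26, "Proposed": 730.43},
}


def speedup(row):
    """Return how many times faster the proposed method is in total time."""
    return row["Hough transform"] / row["Proposed"]


for name, row in timings.items():
    print(f"{name}: {speedup(row):.1f}x")
```

On these figures the proposed method is roughly 12.9 times faster in the first table and roughly 5.8 times faster in the second.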

| Resolution (m) | Total Time (s) | Average Time (s) |
|---|---|---|
| 0.1 | 3533.86 | 0.244 |
| 0.3 | 409.83 | 0.028 |
| 0.5 | 164.25 | 0.011 |
| 1 | 53.1 | 0.004 |

| Resolution (m) | Total Time (s) | Average Time (s) |
|---|---|---|
| 0.1 | 6548.62 | 0.559 |
| 0.3 | 730.43 | 0.062 |
| 0.5 | 284 | 0.024 |
| 1 | 84.18 | 0.007 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jiang, Y.; Peng, P.; Wang, L.; Wang, J.; Wu, J.; Liu, Y. LiDAR-Based Local Path Planning Method for Reactive Navigation in Underground Mines. Remote Sens. 2023, 15, 309. https://doi.org/10.3390/rs15020309
