Point Cloud Denoising in Outdoor Real-World Scenes Based on Measurable Segmentation

Abstract

1. Introduction

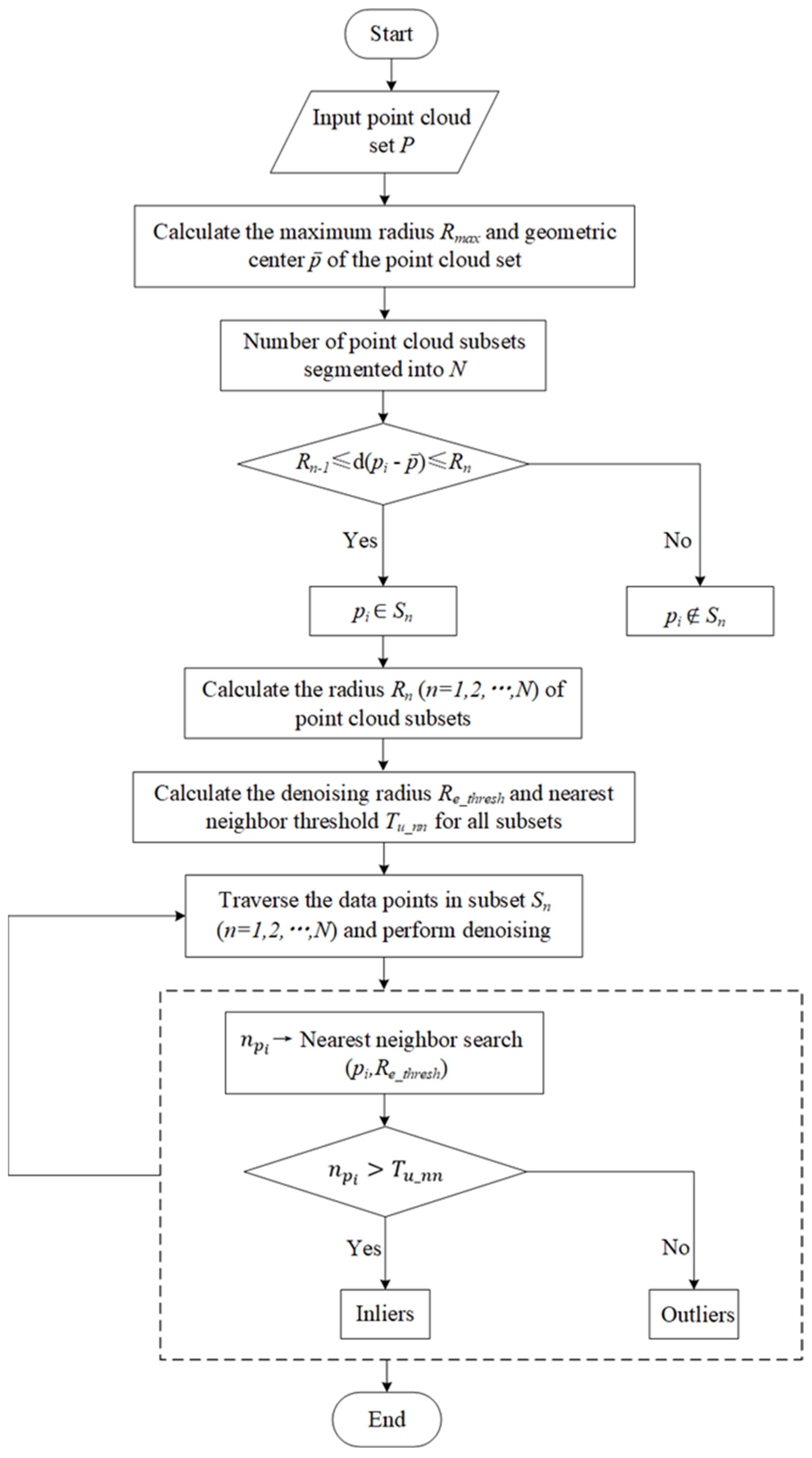

2. Methodology

2.1. Calculation of the Maximum Radius

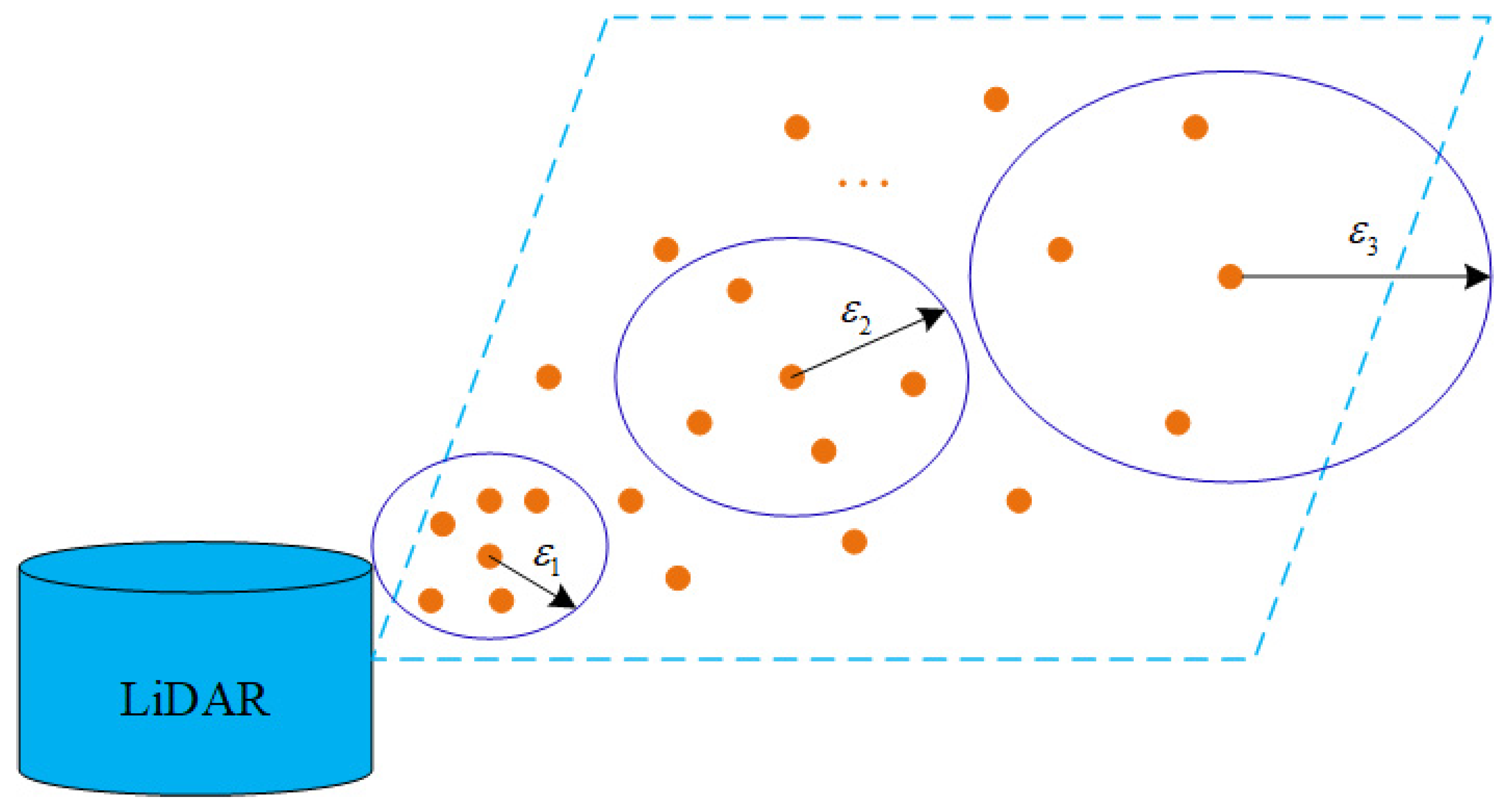

2.2. Calculation of the Number of Concentric Spheres

2.3. Finite Measurable Segmentation of Point Clouds in Spherical Space

2.3.1. Equidistant Segmentation (ES) and Proportional Segmentation (PS)

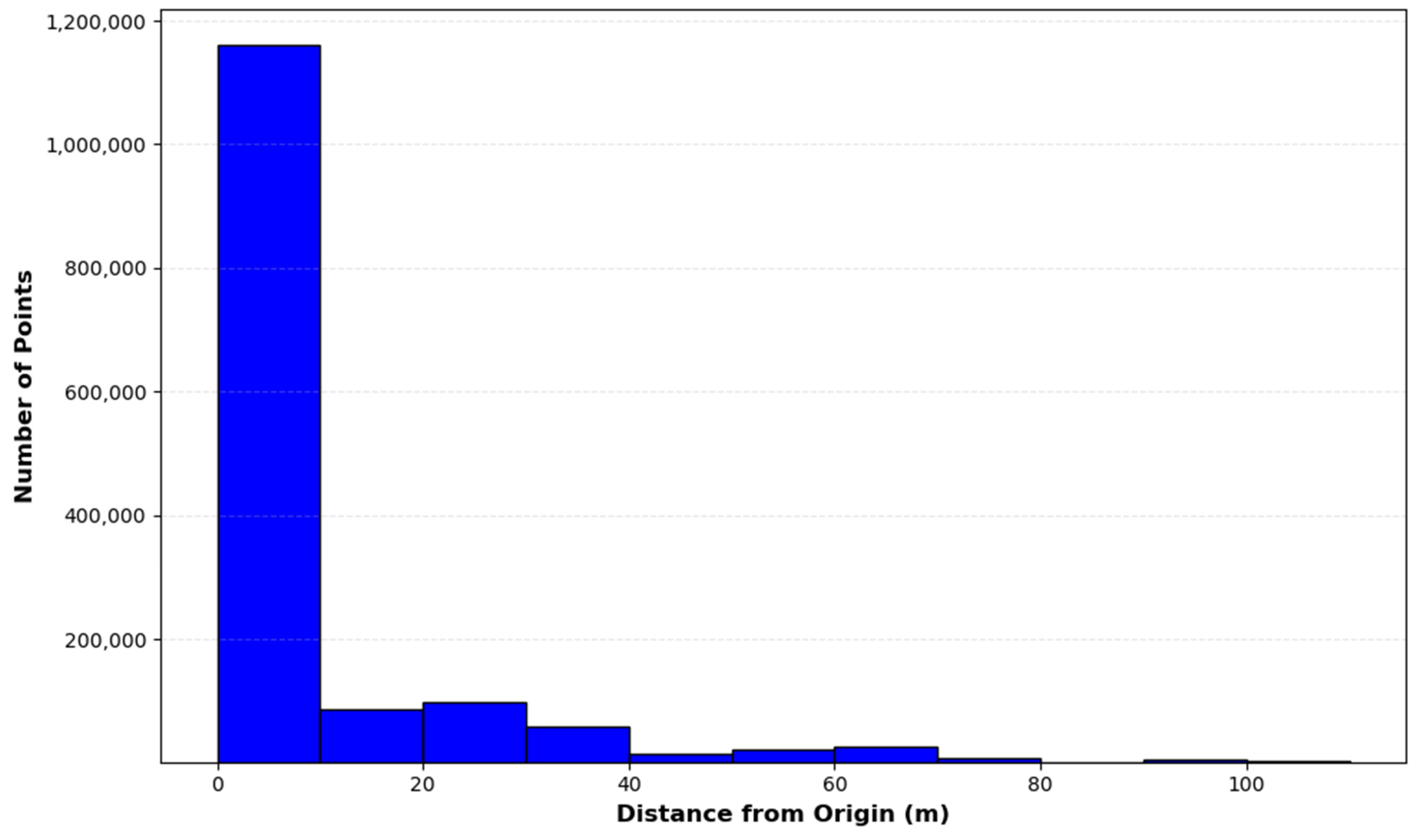

2.3.2. Distribution of Point Clouds in Different Hierarchical Spaces

2.4. Calculation of Point Cloud Hierarchical Denoising Parameters

2.4.1. Calculation of Point Cloud Hierarchical Denoising Parameters under Equidistant Segmentation

2.4.2. Calculation of Point Cloud Hierarchical Denoising Parameters under Proportional Segmentation

3. Experiment

3.1. Experimental Setup

3.1.1. Dataset Description

3.1.2. Experimental Environment and Tools

(1) Hardware environment

- CPU: Intel® Core™ i9-14900, with a clock speed of up to 5.8 GHz.

- Graphics card: NVIDIA® GeForce RTX™ 4060.

- Memory: 32 GB of Samsung DDR5.

(2) Software environment

The experiments were performed on a Windows 10 operating system. Data processing and analysis relied primarily on Python 3.8.8 and its scientific computing libraries. The tools used were the Spyder 5.4.3 integrated development environment, Pandas 1.4.3 and NumPy 1.22.0 for data processing, SciPy 1.7.3 for scientific calculations, and Open3D 0.13.0 for visualization and processing of the point cloud datasets.
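To reproduce the experiments, it can help to check a local installation against the versions listed above. The following is a minimal sketch, not part of the original study: the function name `compare_environment` is hypothetical, and the package names are assumed to be the usual PyPI distribution names, which the text does not state.

```python
from importlib import metadata

# Versions as listed in Section 3.1.2. The keys are assumed to be the usual
# PyPI distribution names; the paper itself does not name them.
REPORTED_VERSIONS = {
    "pandas": "1.4.3",
    "numpy": "1.22.0",
    "scipy": "1.7.3",
    "open3d": "0.13.0",
}


def compare_environment(reported=REPORTED_VERSIONS):
    """Return {package: (reported, installed or None, versions_match)}."""
    report = {}
    for name, wanted in reported.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed locally
        report[name] = (wanted, installed, installed == wanted)
    return report


if __name__ == "__main__":
    for name, (wanted, installed, ok) in compare_environment().items():
        status = "match" if ok else "differs"
        print(f"{name}: reported {wanted}, installed {installed} ({status})")
```

A version mismatch does not necessarily change the results; the check only flags where a local setup departs from the reported one.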

3.2. Experimental Result

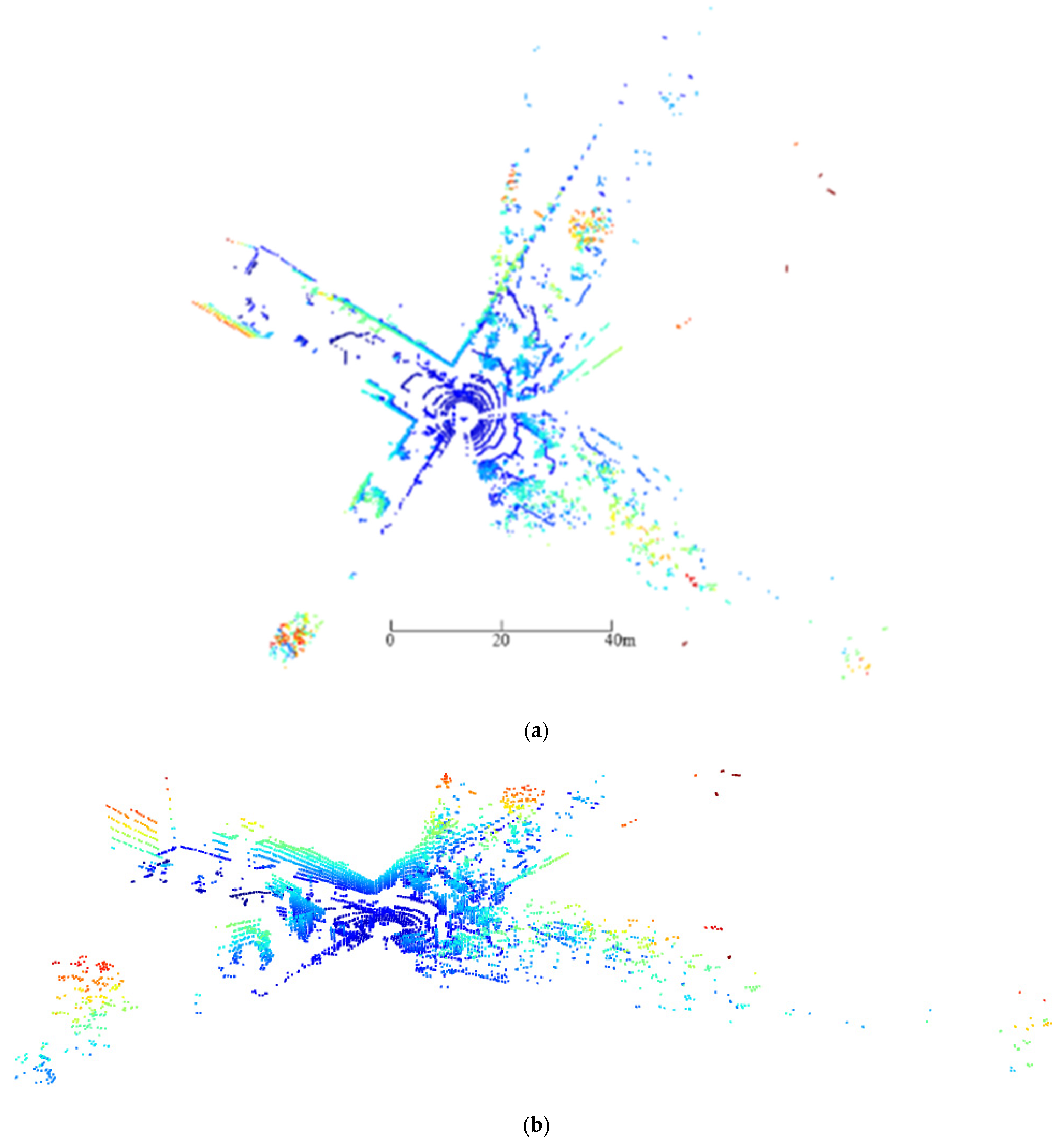

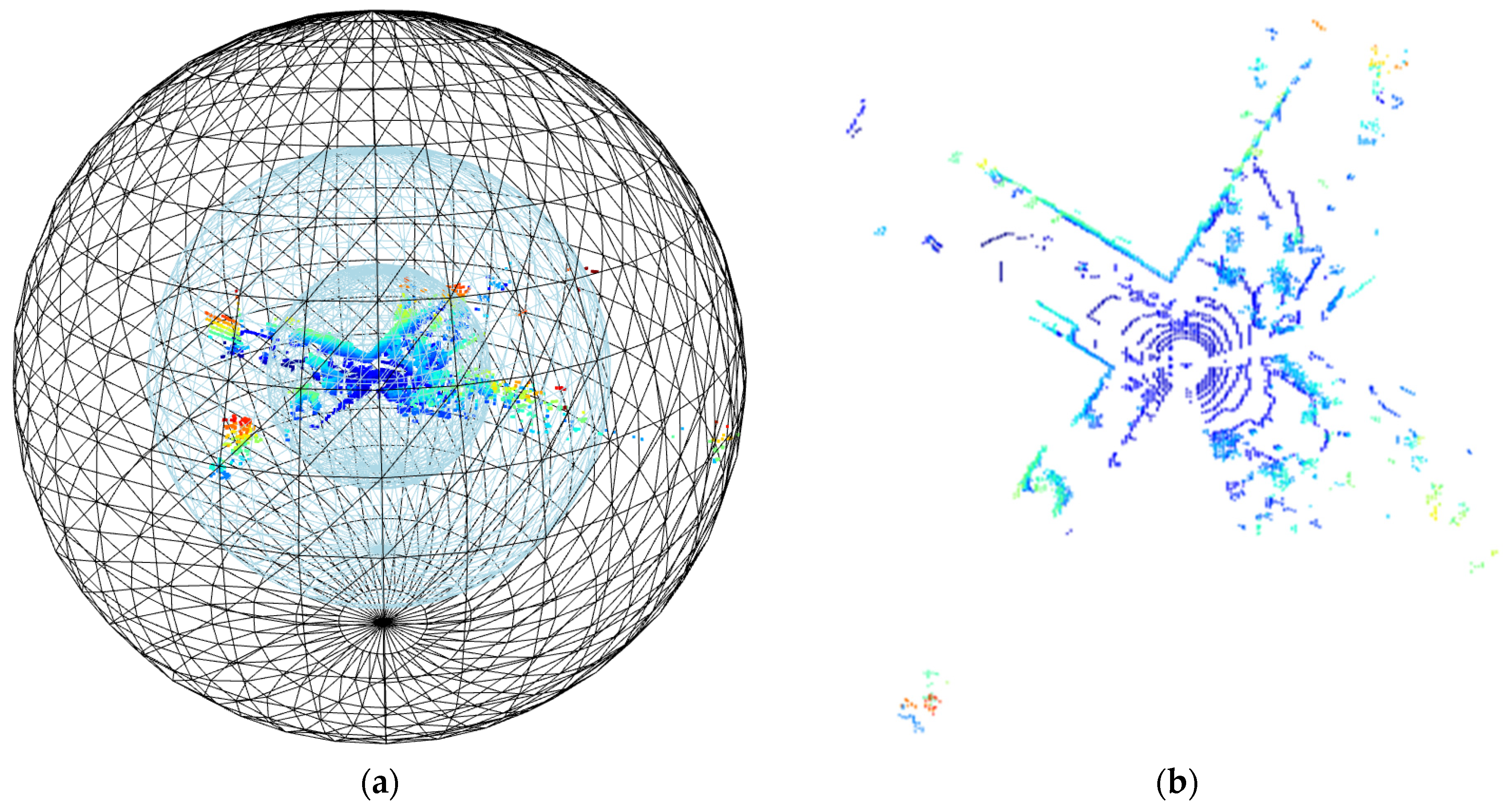

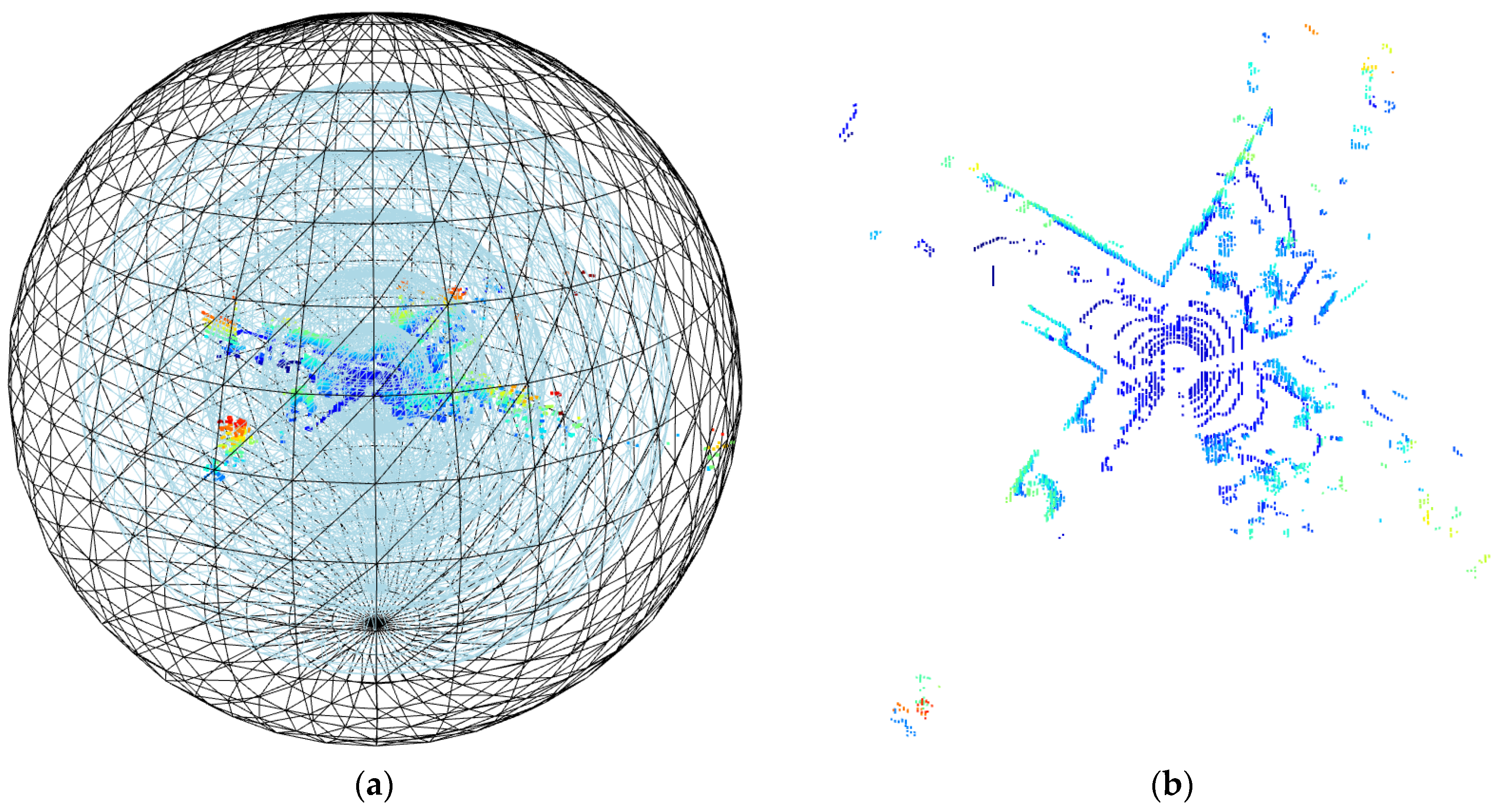

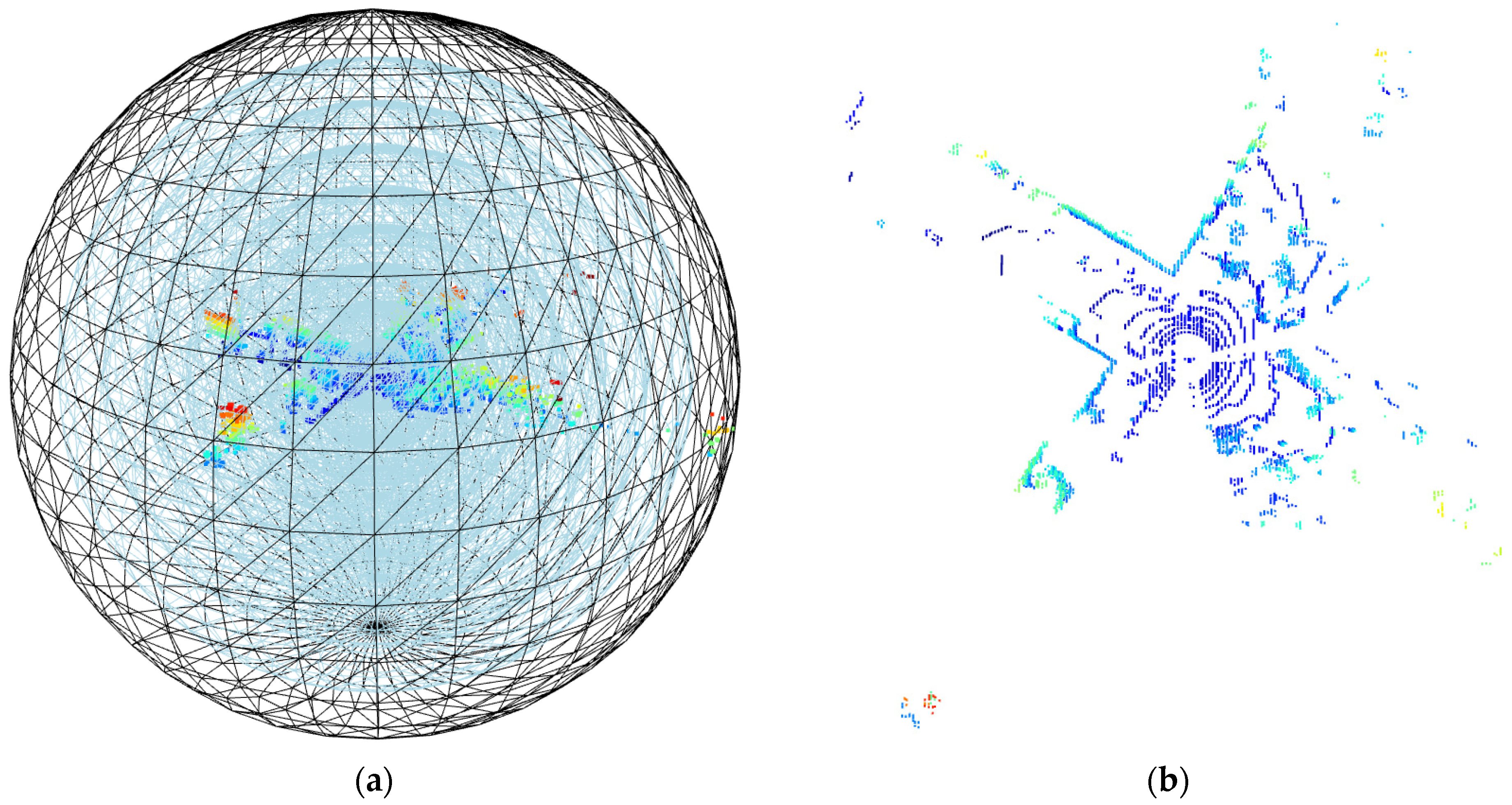

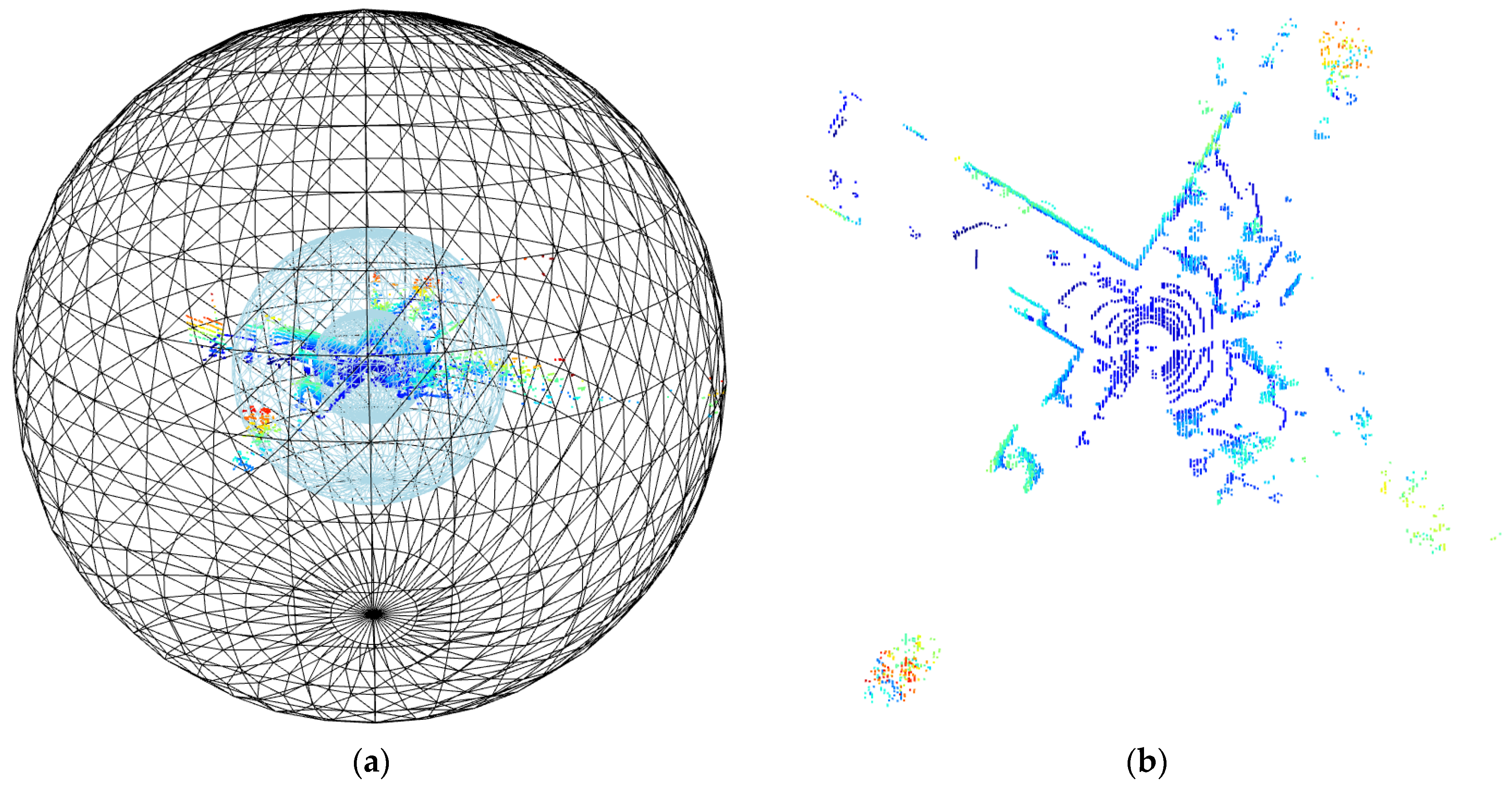

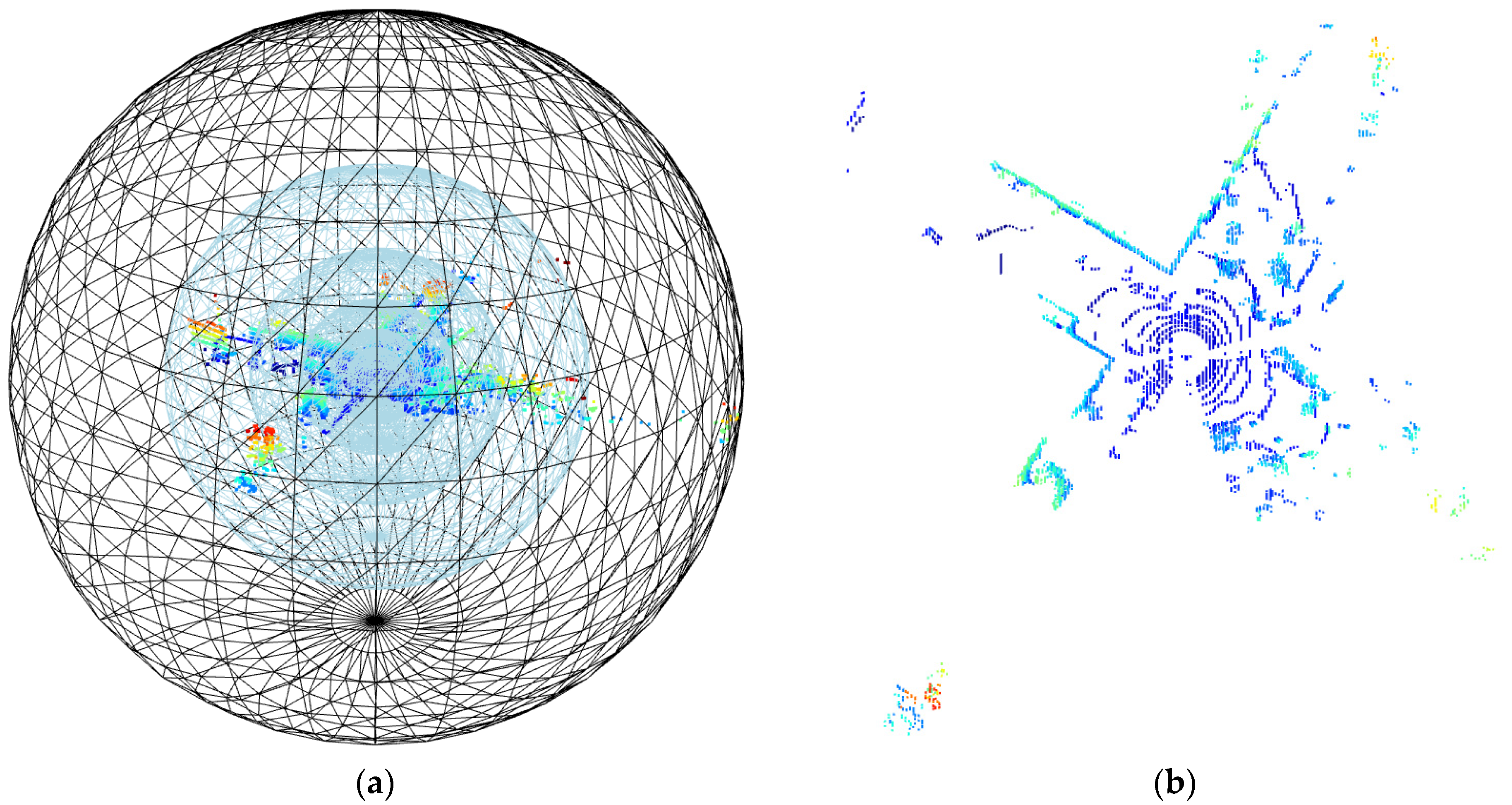

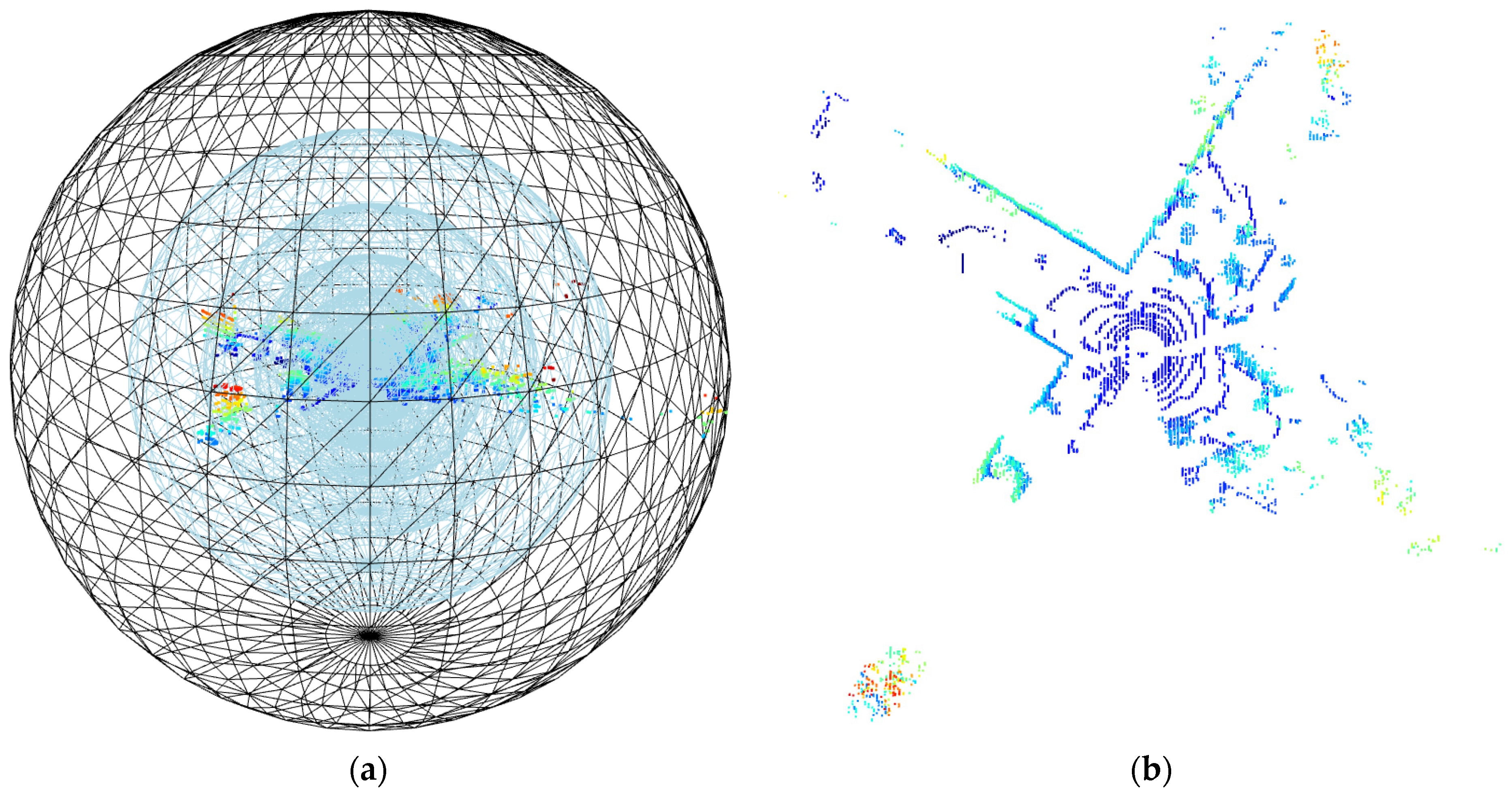

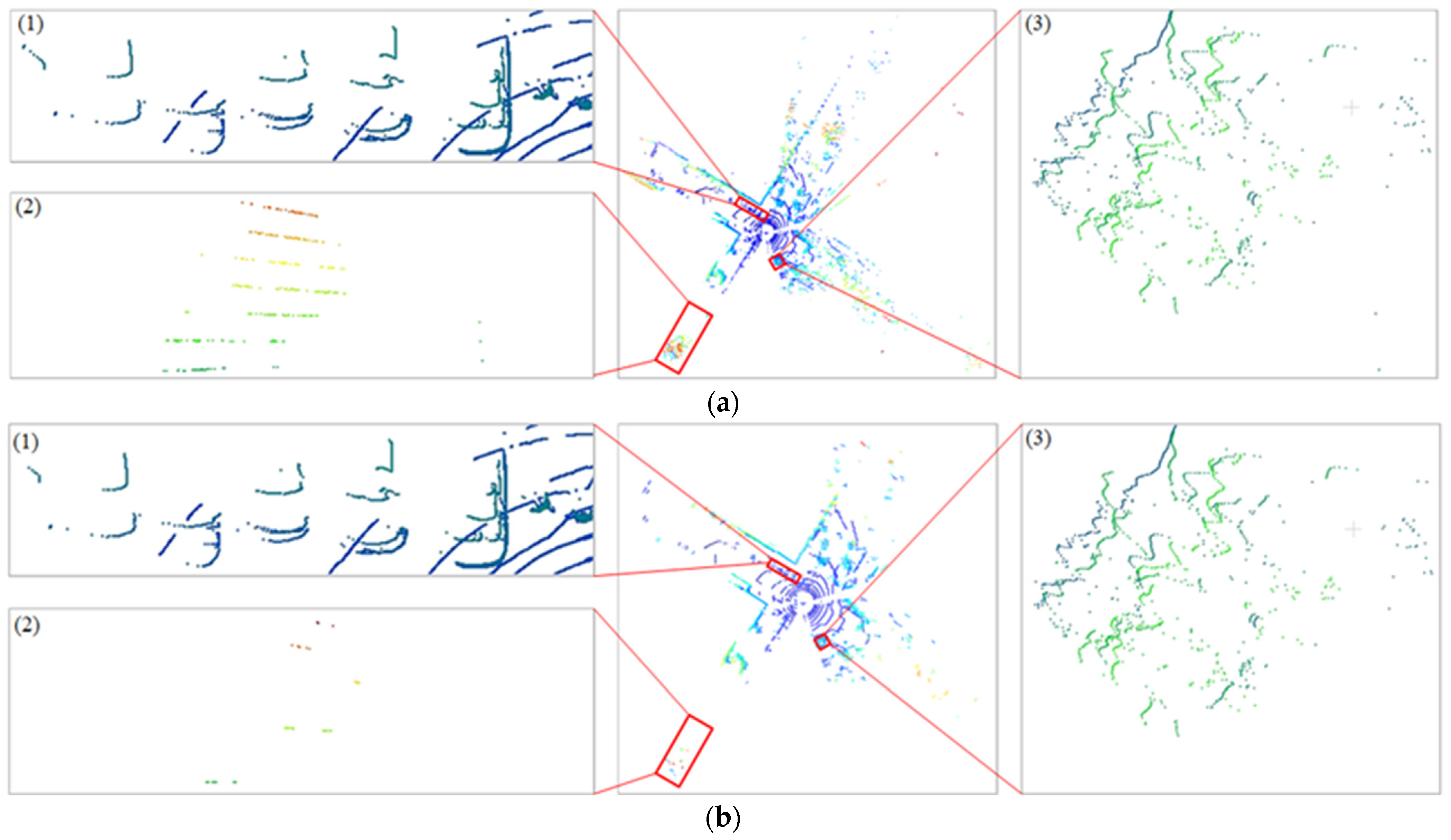

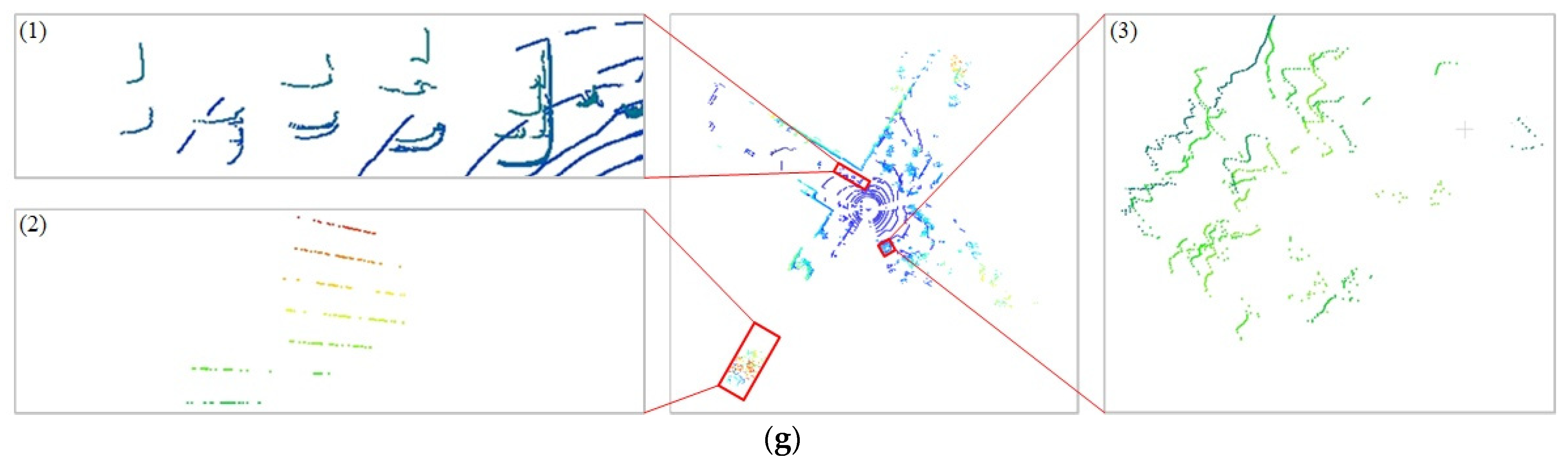

3.2.1. Visualization Comparison of Point Cloud before and after Hierarchical Denoising

- (1)

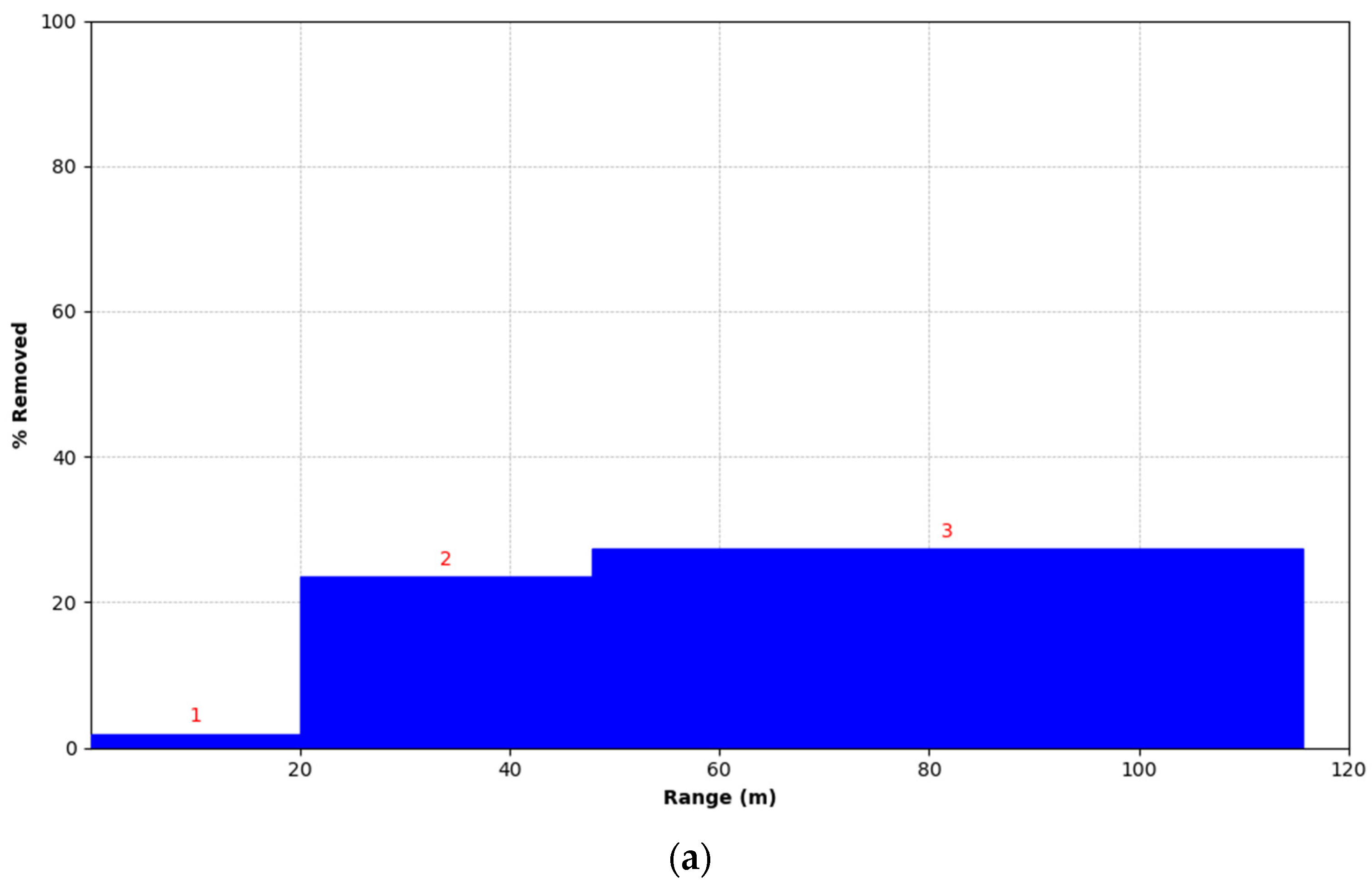

- Spatial distribution of data points in different ranges

- (2)

- Denoising of point cloud data under equidistant segmentation in spherical space

- (3)

- Denoising of point cloud data under proportional segmentation in spherical space

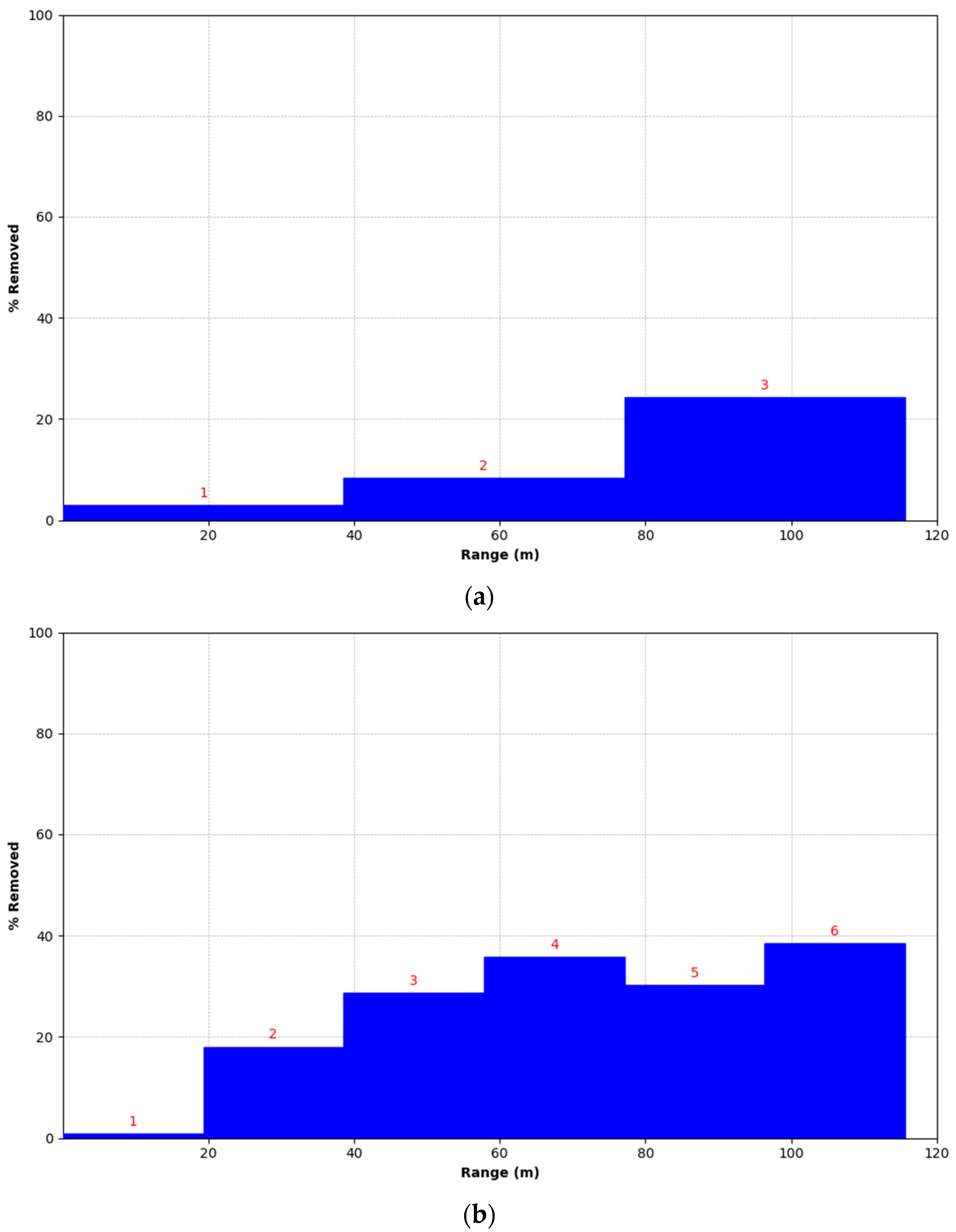

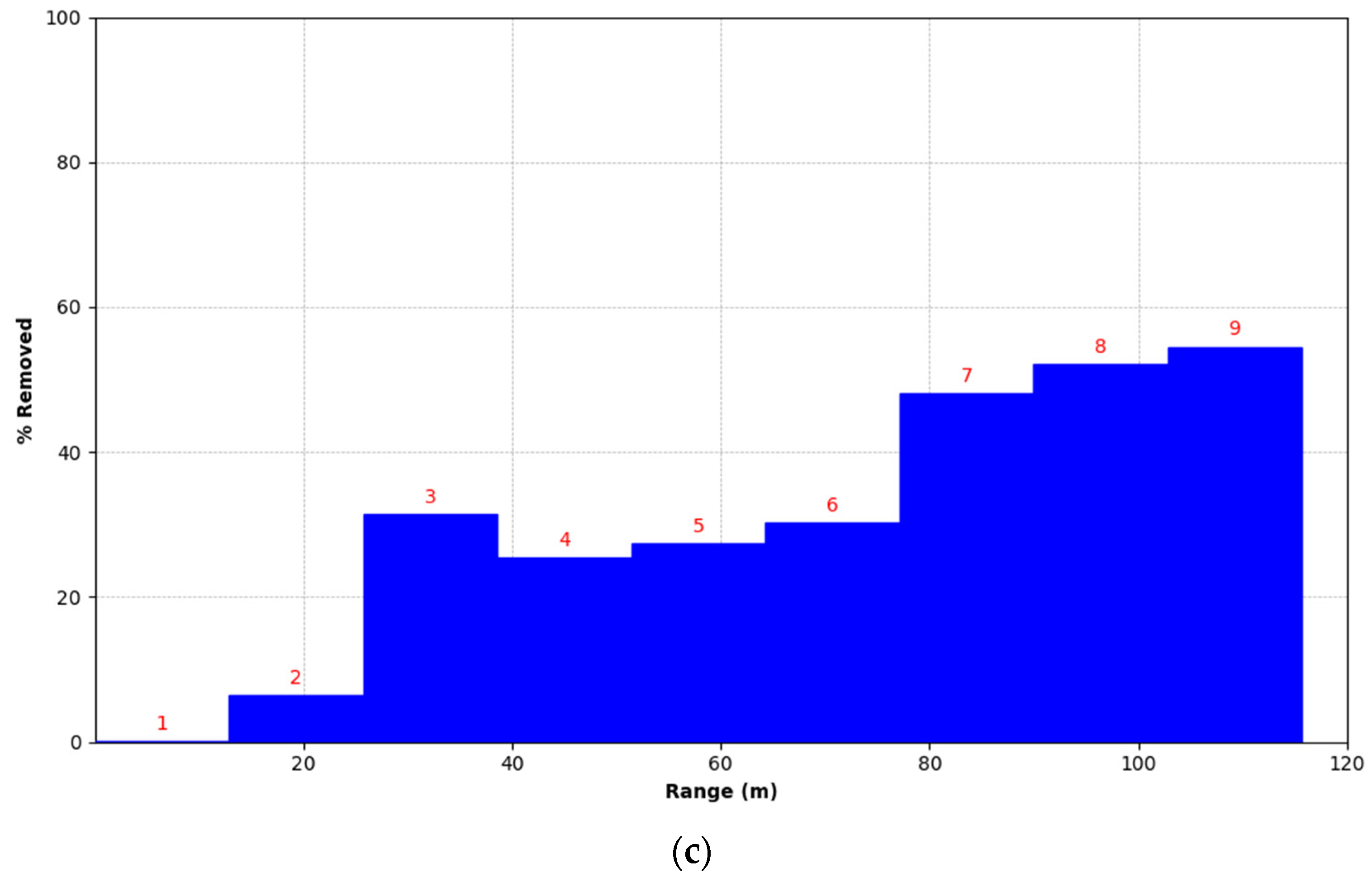

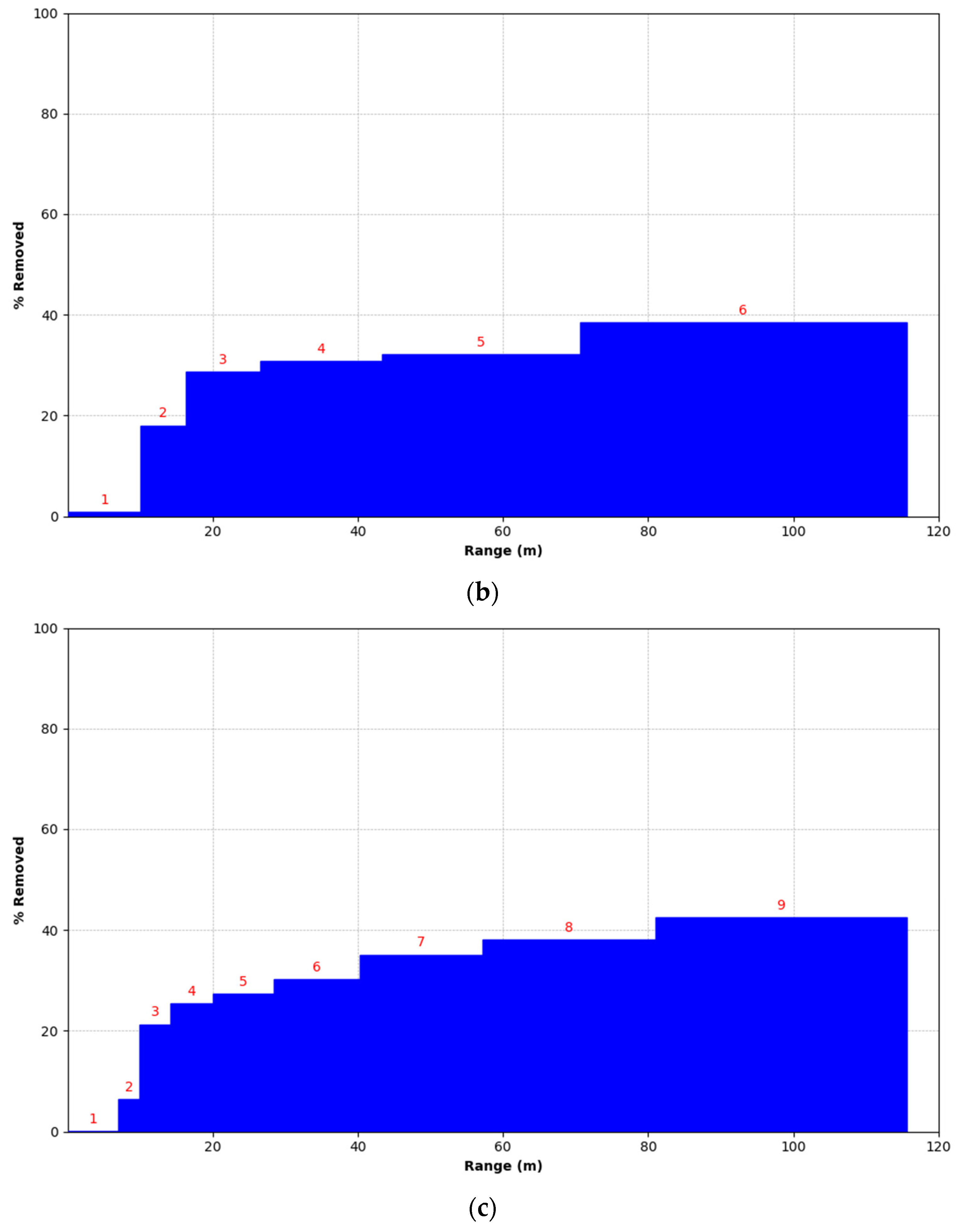

3.2.2. Quantitative Evaluation of Point Cloud Distribution under Equidistant and Proportional Segmentations

(1) The radius of point cloud subsets under equidistant and proportional segmentations

(2) The overall standard deviation of point clouds after denoising

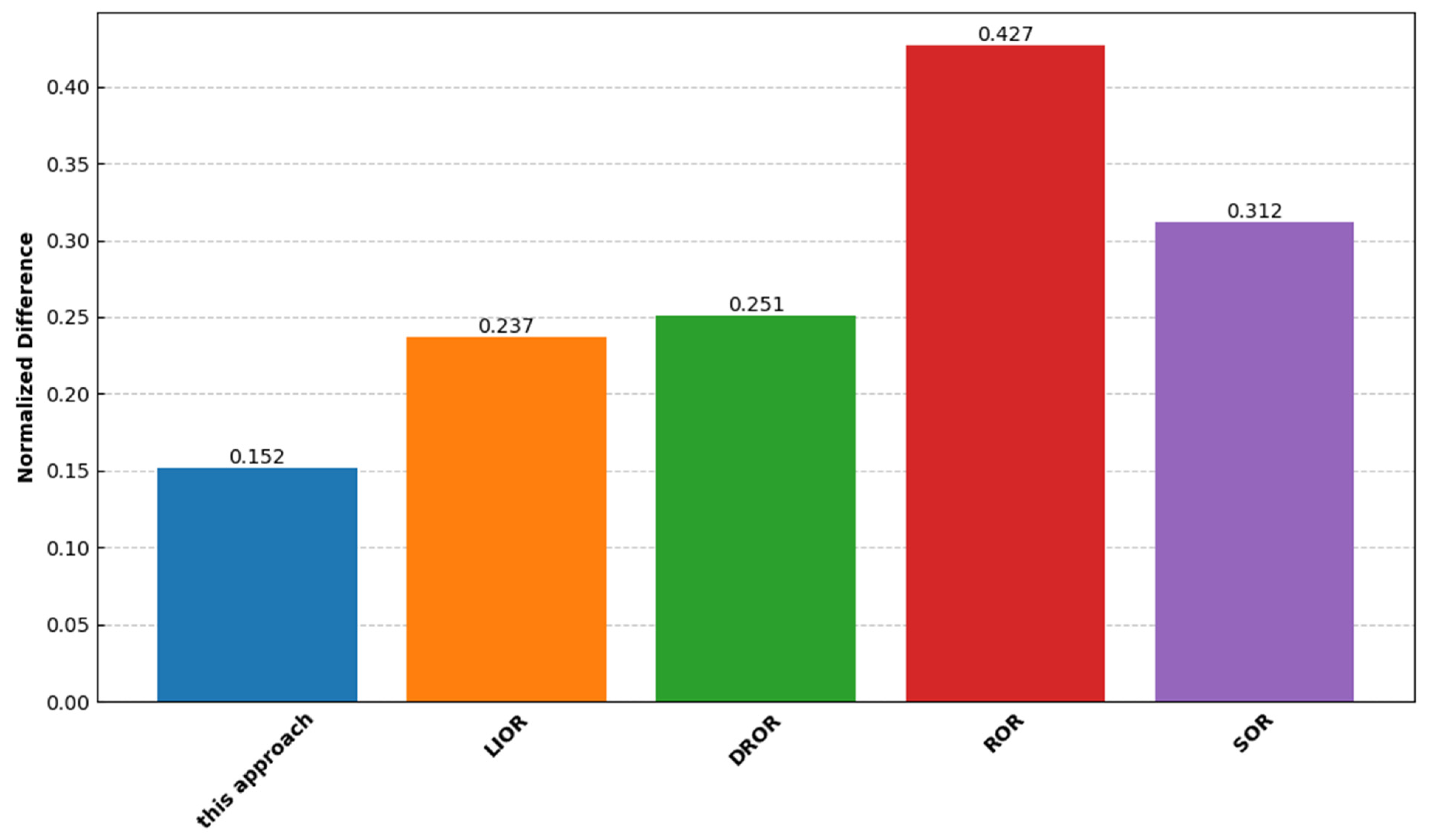

3.2.3. Point Cloud Denoising Effectiveness Assessment

(1) The denoising performance of different denoising methods in outdoor real-world scenes

(2) Comparing the efficiency of point cloud denoising methods

(3) Quantitative evaluation of different denoising methods

4. Discussion

4.1. Efficacy of Point Cloud Hierarchical Denoising with Spherical Space Measurable Segmentation

4.2. Limitations of Point Cloud Hierarchical Denoising with Spherical Space Measurable Segmentation

4.3. The Practicality Extension and Computational Efficiency Optimization of the Hierarchical Denoising Method

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Number of Subsets | Segmentation Strategy | TSD |
|---|---|---|
| 3 | ES | 1.266 |
| 3 | PS | 0.947 |
| 6 | ES | 1.177 |
| 6 | PS | 0.683 |
| 9 | ES | 0.983 |
| 9 | PS | 0.416 |
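The gap between the two strategies is easier to read as a relative reduction. The sketch below uses only the TSD values from the table above; the helper names `relative_reduction` and `tsd_reductions` are hypothetical, and the relative-reduction measure is an illustrative choice rather than a metric taken from the paper.

```python
# TSD values copied from the table above, keyed by number of subsets.
TSD = {
    3: {"ES": 1.266, "PS": 0.947},
    6: {"ES": 1.177, "PS": 0.683},
    9: {"ES": 0.983, "PS": 0.416},
}


def relative_reduction(es, ps):
    """Fraction by which the PS value is lower than the ES value."""
    return (es - ps) / es


def tsd_reductions(table=TSD):
    """Map each subset count to the relative TSD reduction of PS versus ES."""
    return {n: relative_reduction(v["ES"], v["PS"]) for n, v in table.items()}


for n, r in tsd_reductions().items():
    print(f"{n} subsets: PS lowers TSD by {r:.1%} relative to ES")
```

With these values the reduction comes to roughly 25%, 42%, and 58% for 3, 6, and 9 subsets, so the advantage of PS over ES widens as the number of subsets increases.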

| | ROR | SOR | DROR | LIOR | This Approach |
|---|---|---|---|---|---|
| Denoising time (s) | 8.154 | 0.503 | 7.731 | 3.287 | 2.793 |
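The timings can also be expressed relative to the proposed method. This is a small sketch over the values in the table above; the function name `time_ratios` is hypothetical, and the ratios describe only this single reported measurement.

```python
# Denoising times in seconds, copied from the table above.
TIMES_S = {
    "ROR": 8.154,
    "SOR": 0.503,
    "DROR": 7.731,
    "LIOR": 3.287,
    "This Approach": 2.793,
}


def time_ratios(times=TIMES_S, reference="This Approach"):
    """Return each method's time divided by the reference method's time."""
    ref = times[reference]
    return {m: t / ref for m, t in times.items() if m != reference}


for method, ratio in time_ratios().items():
    print(f"{method}: {ratio:.2f}x the time of the proposed approach")
```

A ratio above 1 means the method took longer than the proposed approach. By this measure ROR, DROR, and LIOR are slower, while SOR is the only method that runs faster.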


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, L.; Chen, Y.; Xu, H. Point Cloud Denoising in Outdoor Real-World Scenes Based on Measurable Segmentation. Remote Sens. 2024, 16, 2347. https://doi.org/10.3390/rs16132347
