A Novel Method for Cloud and Cloud Shadow Detection Based on the Maximum and Minimum Values of Sentinel-2 Time Series Images

Abstract

1. Introduction

2. Data

2.1. Sentinel-2 MSI

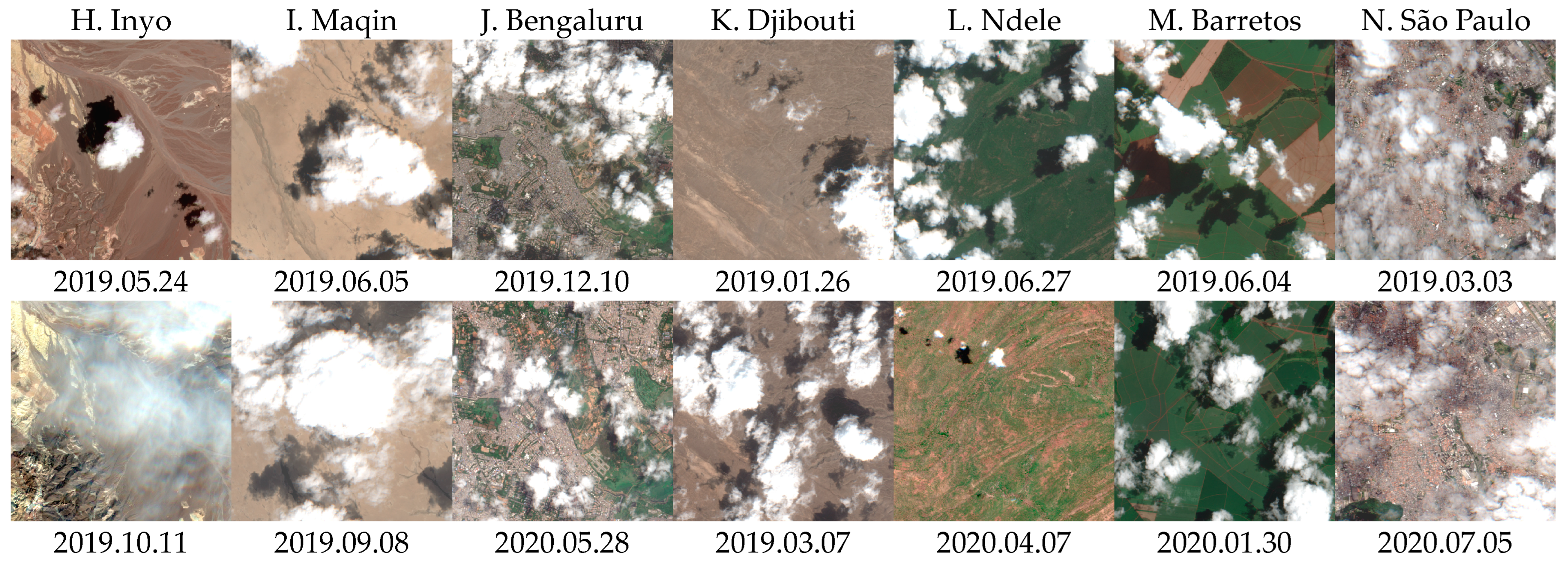

2.2. S2ccs Dataset

2.3. CloudSEN12 Dataset

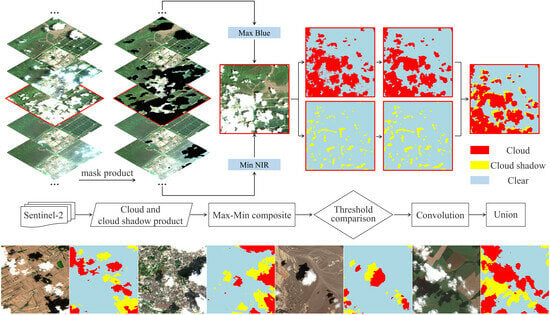

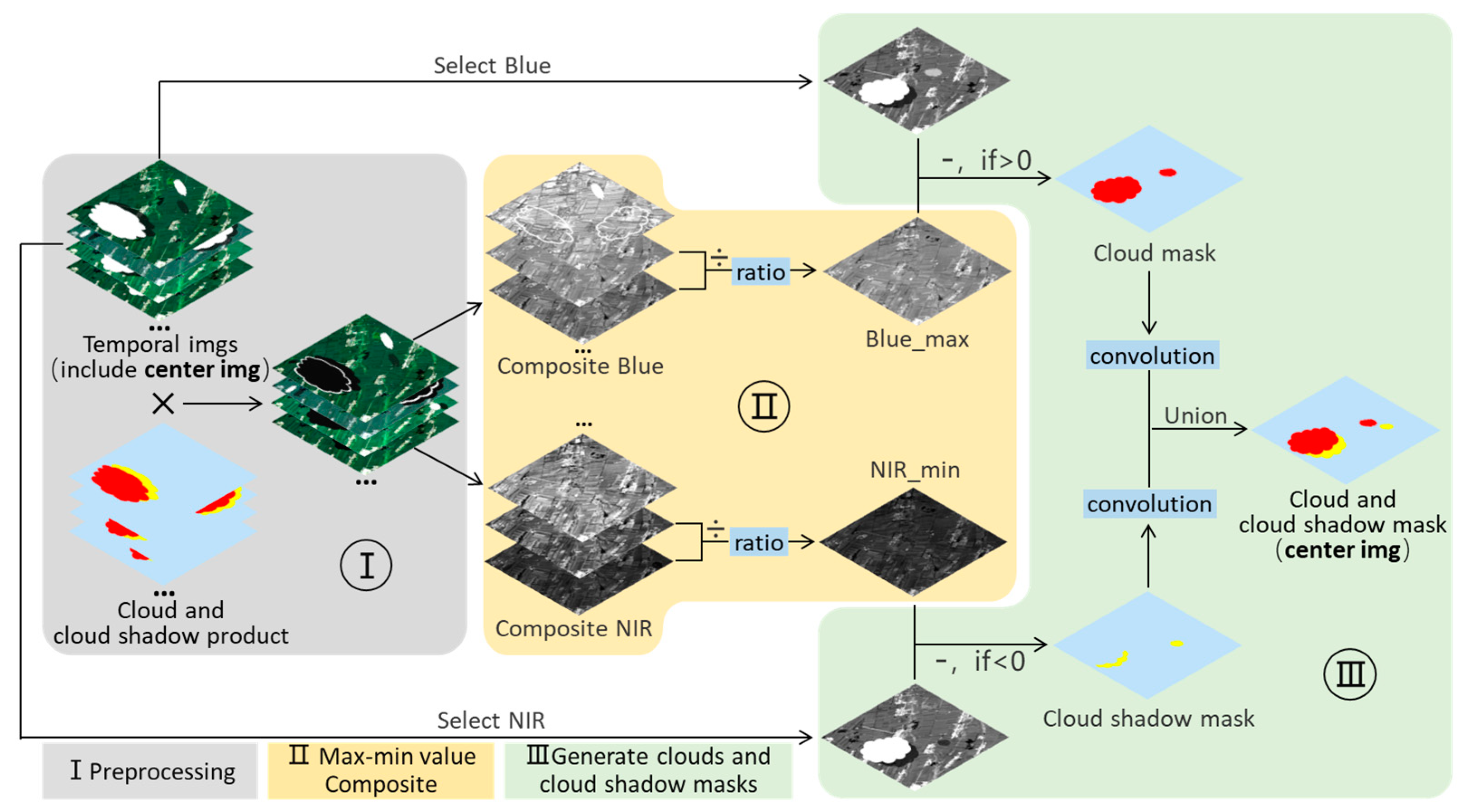

3. Method

3.1. Preprocessing

3.2. Maximum and Minimum Value Composite

3.3. Cloud and Cloud Shadow Extraction

4. Experiment

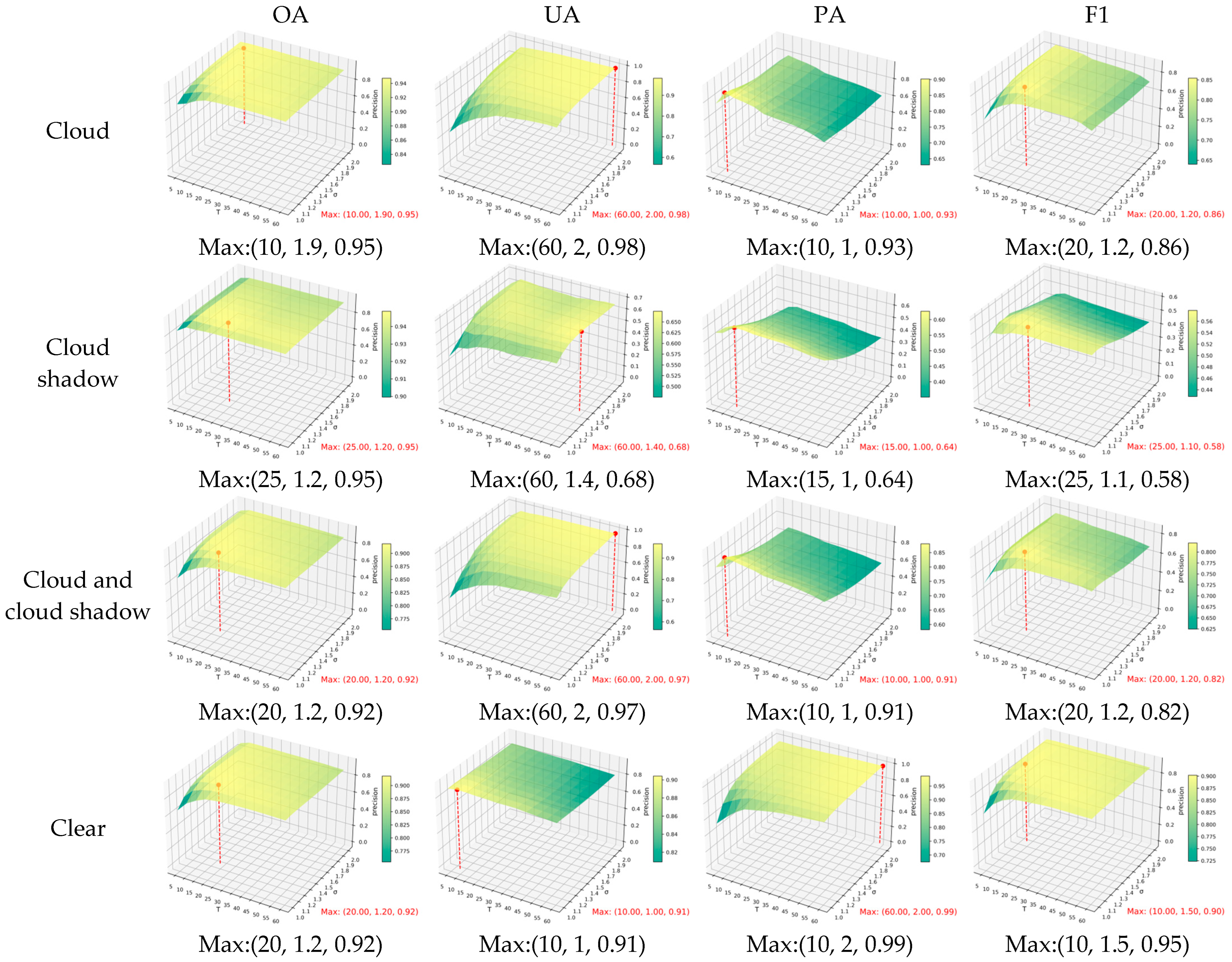

4.1. Sensitivity Experiments

4.1.1. Time Series Length and Max–Min Magnification

4.1.2. Convolution Kernel Size and Neighborhood Mean

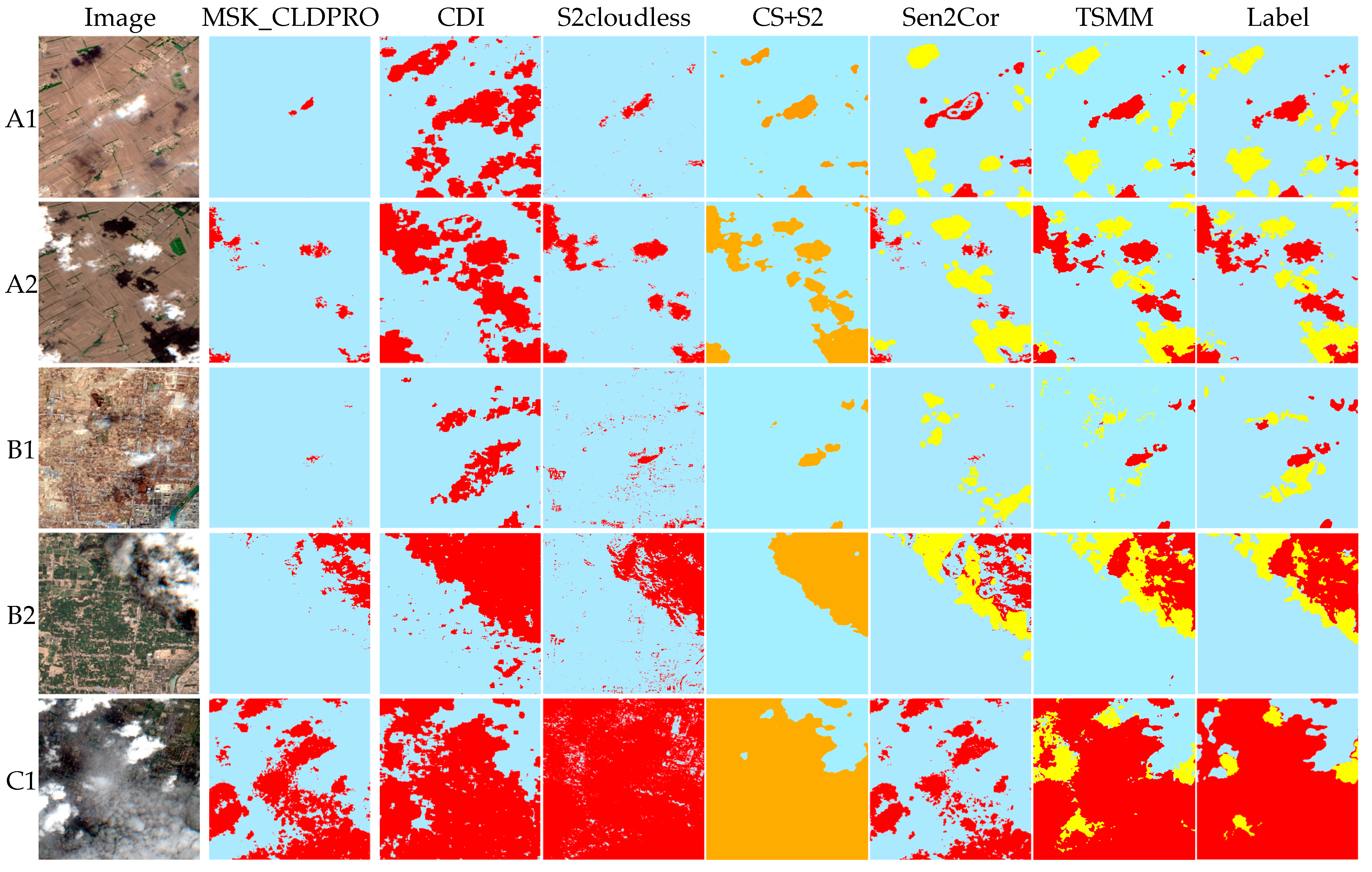

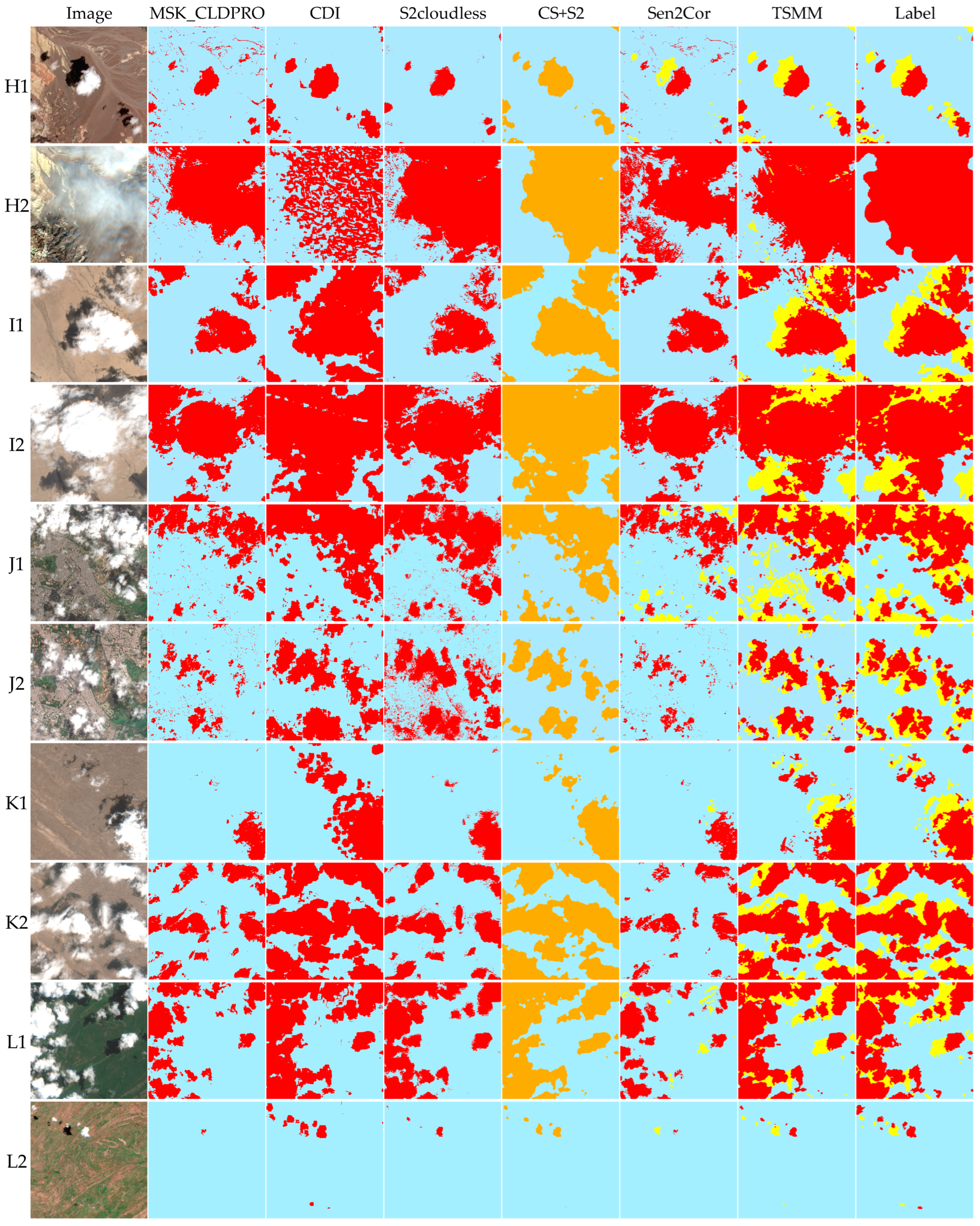

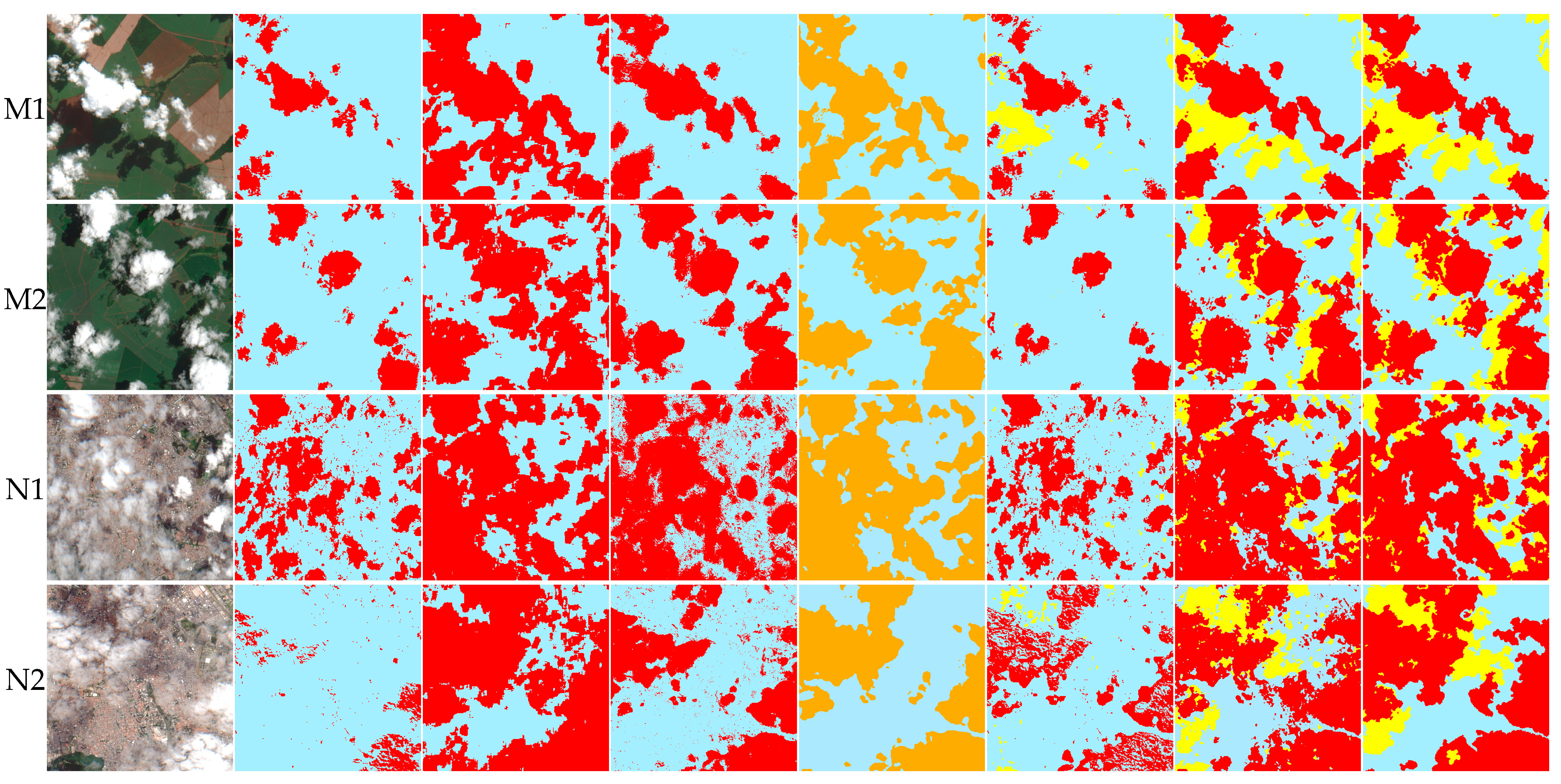

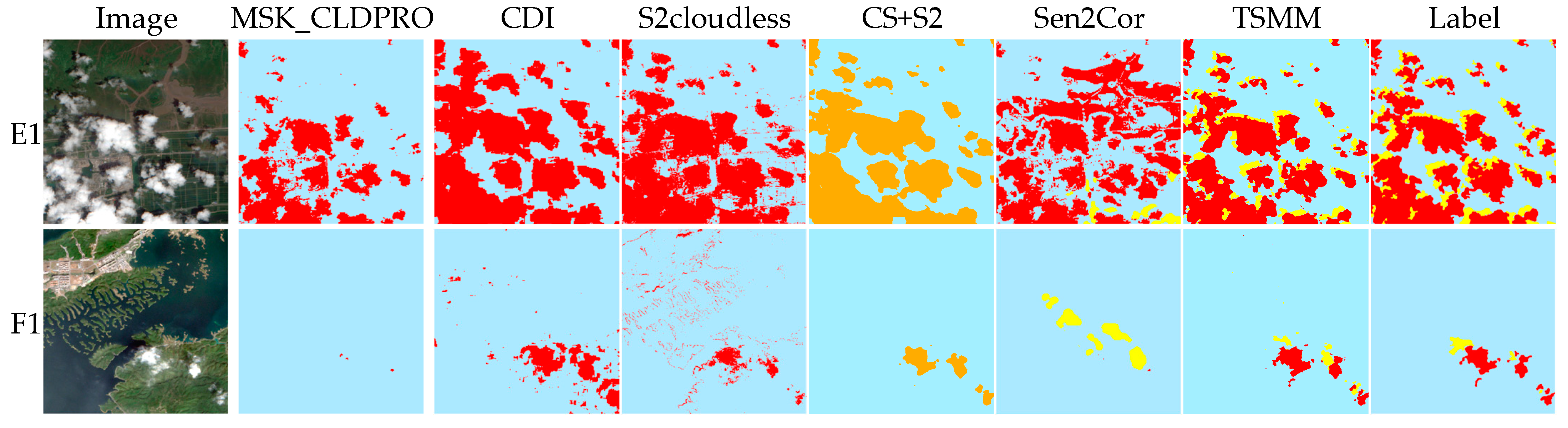

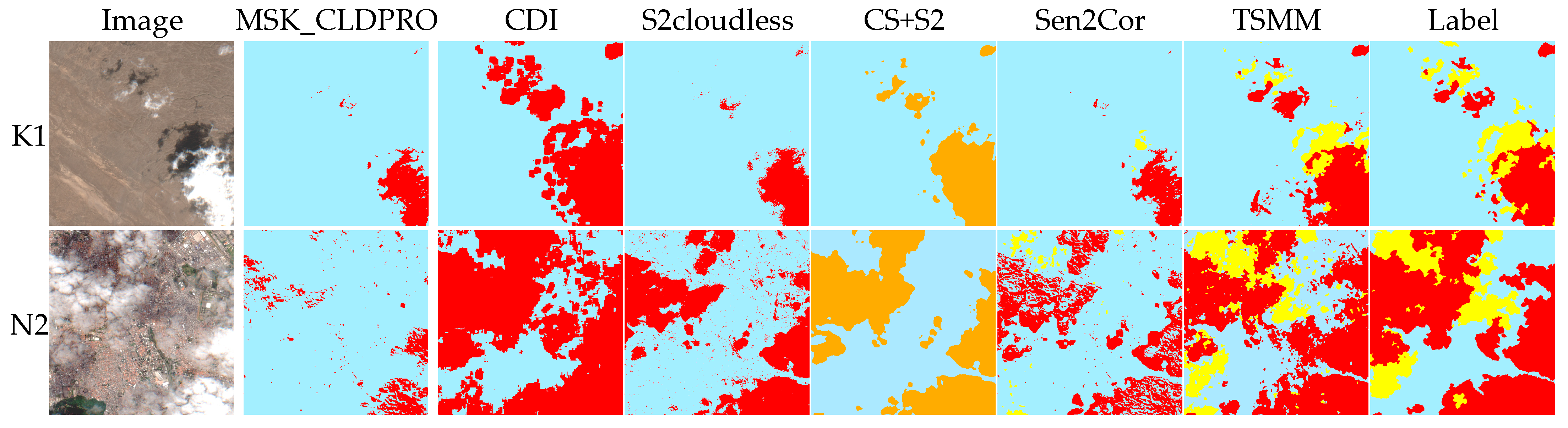

4.2. Qualitative Assessment

4.3. Quantitative Evaluation

5. Discussion

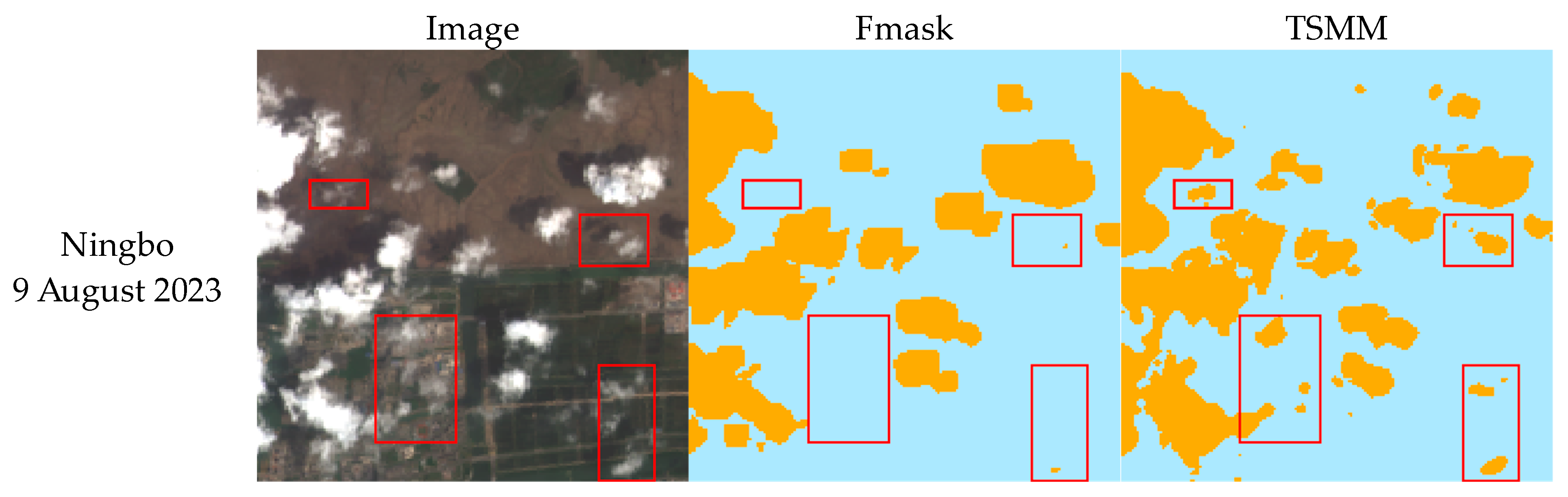

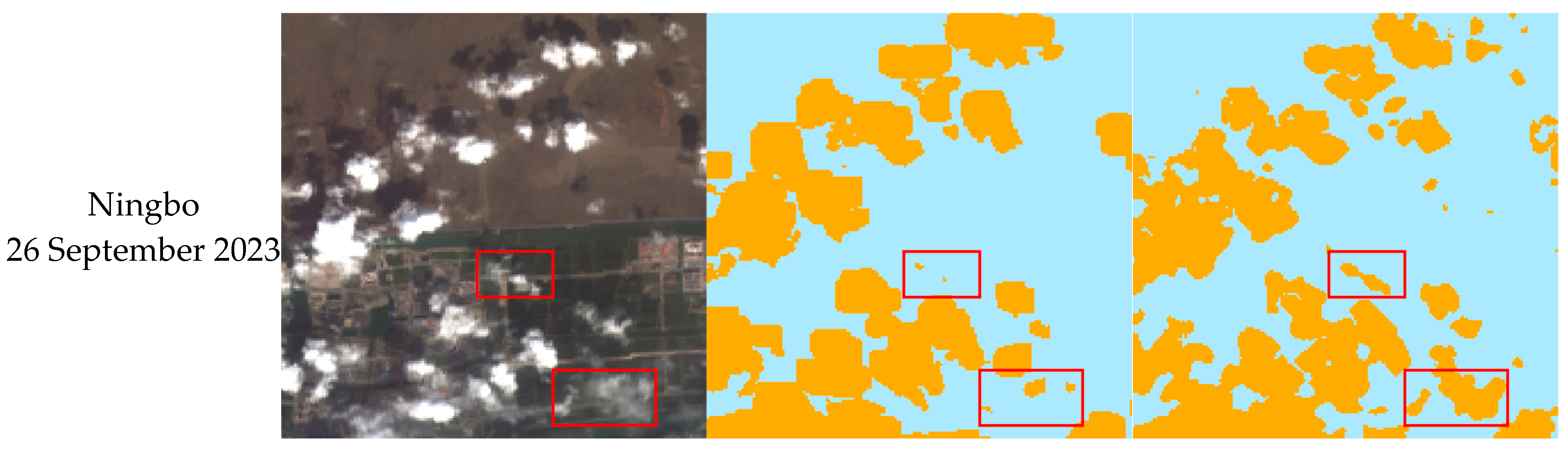

5.1. The Application Potential of Long Time Series and Large Area

5.2. The Generalization of TSMM

5.3. The Limitation of TSMM

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A


| Code | Study Area | Center Coordinates | Time Series Image Dates (2022) | Label Dates and Proportion of Cloud and Cloud Shadow (2022) | Main Type |
|---|---|---|---|---|---|
| A | Changchun | (125.39, 44.34) | 23 April–3 June; 12 May–22 June | 13 May (15%); 2 June (26%) | Farmland |
| B | Dunhuang | (99.64, 40.17) | 27 February–7 April; 13 June–23 July | 17 March (8%); 3 July (30%) | Gobi desert |
| C | Chengdu | (104.17, 30.81) | 9 April–19 May; 5 July–25 July | 29 April (85%); 25 July (15%) | Buildings |
| D | Wuhan | (114.25, 30.59) | 2 April–12 May; 11 July–21 August | 22 April (26%); 31 July (32%) | Buildings |
| E | Ningbo | (121.23, 30.37) | 9 July–19 August; 31 August–10 October | 29 July (42%); 20 September (39%) | Wetlands |
| F | Hangzhou | (119.11, 29.58) | 4 March–14 April; 15 August–25 September | 24 March (3%); 5 September (88%) | Water and vegetation |
| G | Hong Kong | (114.24, 22.25) | 4 January–14 February; 22 February–2 April | 24 January (38%); 12 March (43%) | Vegetation and ocean |

| Method | Type | OA | UA | PA | F1 |
|---|---|---|---|---|---|
| MSK_CLDPRB | Cloud | 0.84 | 0.95 | 0.34 | 0.47 |
| MSK_CLDPRB | Clear | 0.75 | 0.70 | 1.00 | 0.80 |
| CDI | Cloud | 0.83 | 0.59 | 0.78 | 0.61 |
| CDI | Clear | 0.81 | 0.82 | 0.89 | 0.83 |
| S2cloudless | Cloud | 0.87 | 0.70 | 0.77 | 0.70 |
| S2cloudless | Clear | 0.81 | 0.80 | 0.84 | 0.79 |
| CS+ | Cloud and cloud shadow | 0.90 | 0.94 | 0.68 | 0.76 |
| CS+ | Clear | 0.90 | 0.86 | 0.95 | 0.89 |
| Sen2Cor | Cloud | 0.82 | 0.90 | 0.36 | 0.47 |
| Sen2Cor | Cloud shadow | 0.92 | 0.54 | 0.47 | 0.42 |
| Sen2Cor | Cloud and cloud shadow | 0.76 | 0.70 | 0.44 | 0.52 |
| Sen2Cor | Clear | 0.76 | 0.73 | 0.92 | 0.79 |
| TSMM | Cloud | 0.95 | 0.88 | 0.89 | 0.88 |
| TSMM | Cloud shadow | 0.96 | 0.65 | 0.63 | 0.62 |
| TSMM | Cloud and cloud shadow | 0.93 | 0.86 | 0.86 | 0.85 |
| TSMM | Clear | 0.93 | 0.91 | 0.90 | 0.90 |

| Method | Type | OA | UA | PA | F1 |
|---|---|---|---|---|---|
| MSK_CLDPRB | Cloud | 0.76 | 0.69 | 0.30 | 0.36 |
| MSK_CLDPRB | Clear | 0.67 | 0.56 | 0.90 | 0.64 |
| CDI | Cloud | 0.79 | 0.60 | 0.56 | 0.52 |
| CDI | Clear | 0.78 | 0.64 | 0.81 | 0.68 |
| S2cloudless | Cloud | 0.85 | 0.71 | 0.57 | 0.58 |
| S2cloudless | Clear | 0.80 | 0.62 | 0.80 | 0.65 |
| CS+ | Cloud and cloud shadow | 0.87 | 0.78 | 0.57 | 0.62 |
| CS+ | Clear | 0.87 | 0.70 | 0.87 | 0.75 |
| Sen2Cor | Cloud | 0.76 | 0.69 | 0.36 | 0.41 |
| Sen2Cor | Cloud shadow | 0.91 | 0.32 | 0.08 | 0.11 |
| Sen2Cor | Cloud and cloud shadow | 0.69 | 0.71 | 0.33 | 0.40 |
| Sen2Cor | Clear | 0.69 | 0.55 | 0.82 | 0.61 |
| TSMM | Cloud | 0.86 | 0.68 | 0.66 | 0.63 |
| TSMM | Cloud shadow | 0.92 | 0.49 | 0.33 | 0.35 |
| TSMM | Cloud and cloud shadow | 0.87 | 0.71 | 0.67 | 0.66 |
| TSMM | Clear | 0.87 | 0.73 | 0.72 | 0.69 |
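The column headers in the accuracy tables are not defined in this section. Assuming they follow the usual remote sensing convention (OA = overall accuracy, UA = user's accuracy, i.e., precision, PA = producer's accuracy, i.e., recall, and F1 = their harmonic mean), the following minimal Python sketch shows how such scores could be computed for one class from a reference mask and a predicted mask. The function name `binary_mask_metrics` and the toy masks are hypothetical and only illustrate the definitions; this is not the authors' evaluation code, and it does not reproduce the reported values.

```python
def binary_mask_metrics(reference, predicted):
    """Return OA, UA, PA and F1 for the positive class of two flat 0/1 masks.

    Assumed definitions (standard convention, not taken from the paper):
    OA = (TP + TN) / N, UA = TP / (TP + FP), PA = TP / (TP + FN).
    """
    if len(reference) != len(predicted):
        raise ValueError("masks must have the same number of pixels")

    # Count the four confusion-matrix cells pixel by pixel.
    tp = sum(1 for r, p in zip(reference, predicted) if r == 1 and p == 1)
    fp = sum(1 for r, p in zip(reference, predicted) if r == 0 and p == 1)
    fn = sum(1 for r, p in zip(reference, predicted) if r == 1 and p == 0)
    tn = sum(1 for r, p in zip(reference, predicted) if r == 0 and p == 0)

    total = tp + fp + fn + tn
    oa = (tp + tn) / total if total else 0.0
    ua = tp / (tp + fp) if (tp + fp) else 0.0  # user's accuracy (precision)
    pa = tp / (tp + fn) if (tp + fn) else 0.0  # producer's accuracy (recall)
    f1 = 2 * ua * pa / (ua + pa) if (ua + pa) else 0.0
    return {"OA": oa, "UA": ua, "PA": pa, "F1": f1}


# Toy example: 1 = cloud, 0 = clear (hypothetical pixels, not from any dataset).
reference = [1, 1, 1, 0, 0, 0, 0, 1]
predicted = [1, 1, 0, 0, 0, 1, 0, 1]
metrics = binary_mask_metrics(reference, predicted)
print(metrics)
```

Under these definitions, a method with high UA but low PA for the cloud class rarely mislabels clear pixels as cloud yet misses many true cloud pixels.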


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liang, K.; Yang, G.; Zuo, Y.; Chen, J.; Sun, W.; Meng, X.; Chen, B. A Novel Method for Cloud and Cloud Shadow Detection Based on the Maximum and Minimum Values of Sentinel-2 Time Series Images. Remote Sens. 2024, 16, 1392. https://doi.org/10.3390/rs16081392
