High Precision Navigation and Positioning for Multisource Sensors Based on Bibliometric and Contextual Analysis

Abstract

1. Introduction

2. Research Approach

2.1. Data Collection

2.2. Bibliometric, Network, and Content Analysis

3. Results

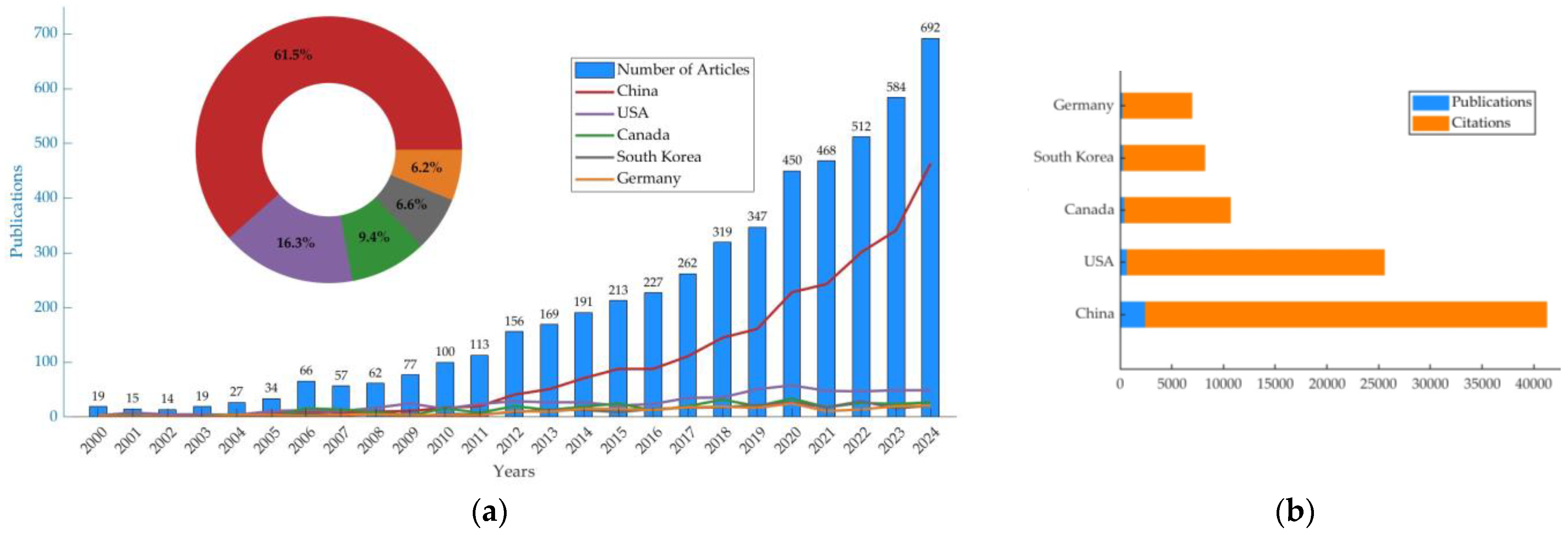

3.1. Analysis of Publication Outputs

3.2. Comprehensive Analysis of Authors, Countries, and Institutions

3.2.1. Author Contribution Analysis

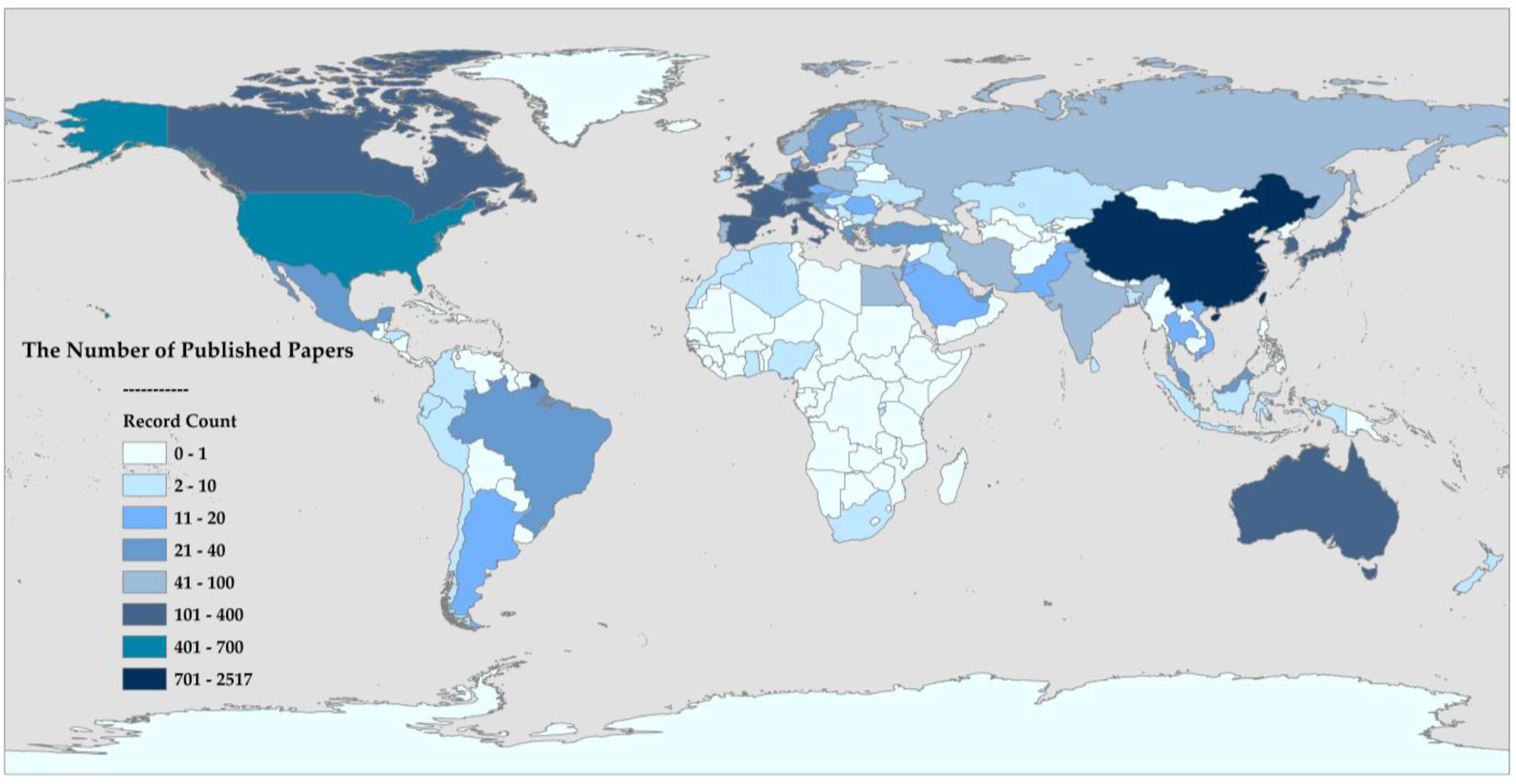

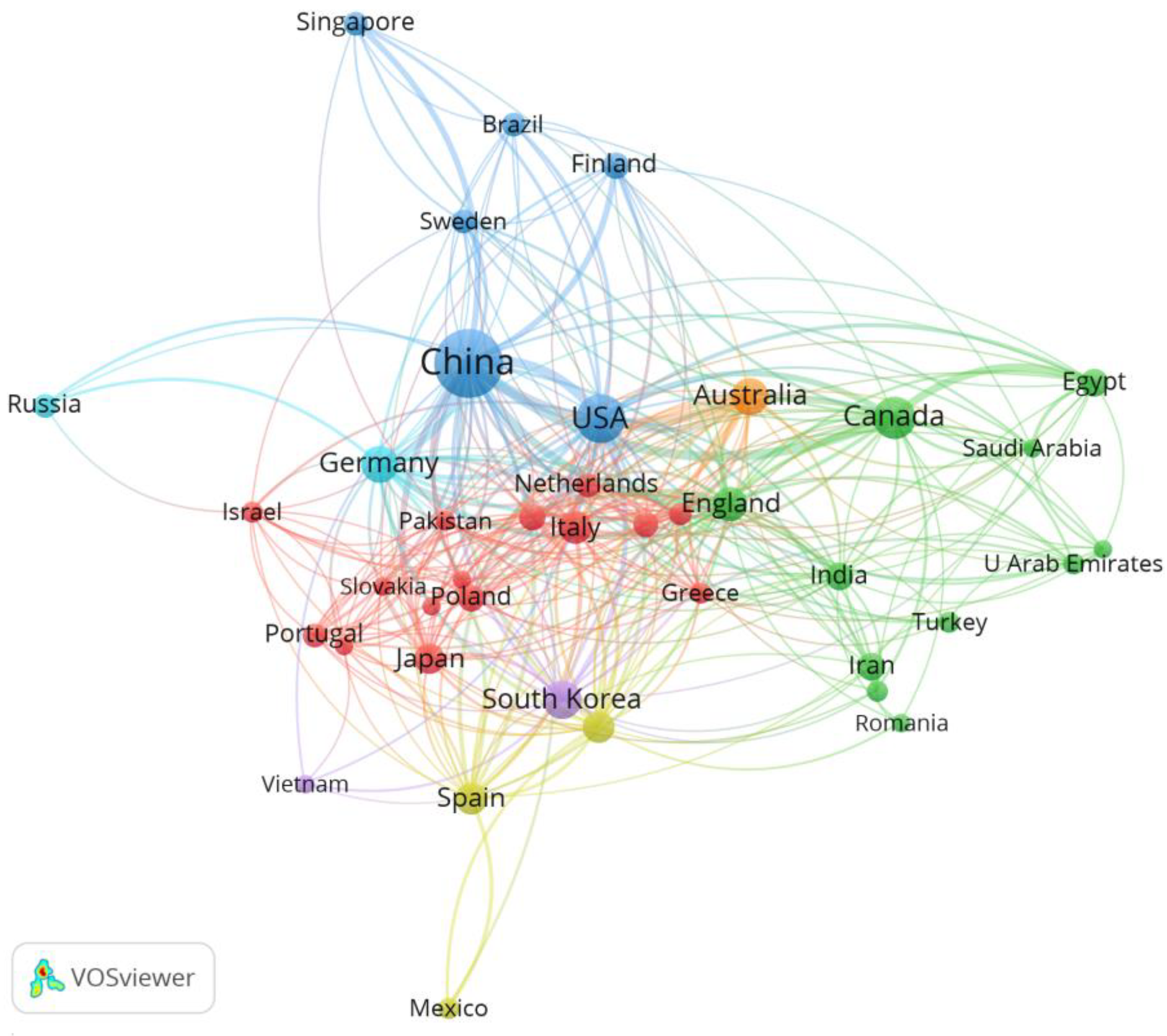

3.2.2. Geographical Distribution of Contributions

3.2.3. Institutional Collaboration Patterns

3.3. Research Hotspot Analysis

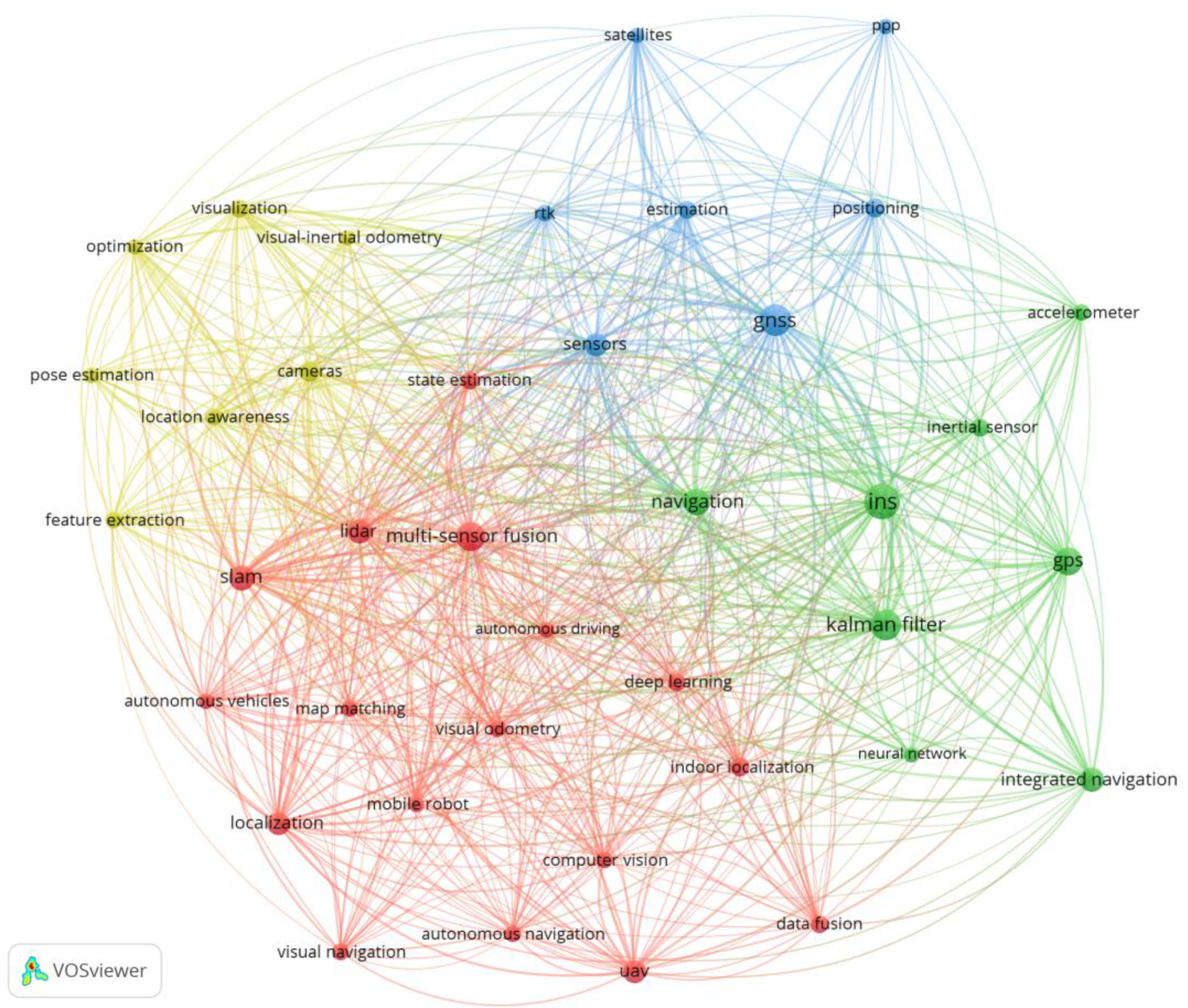

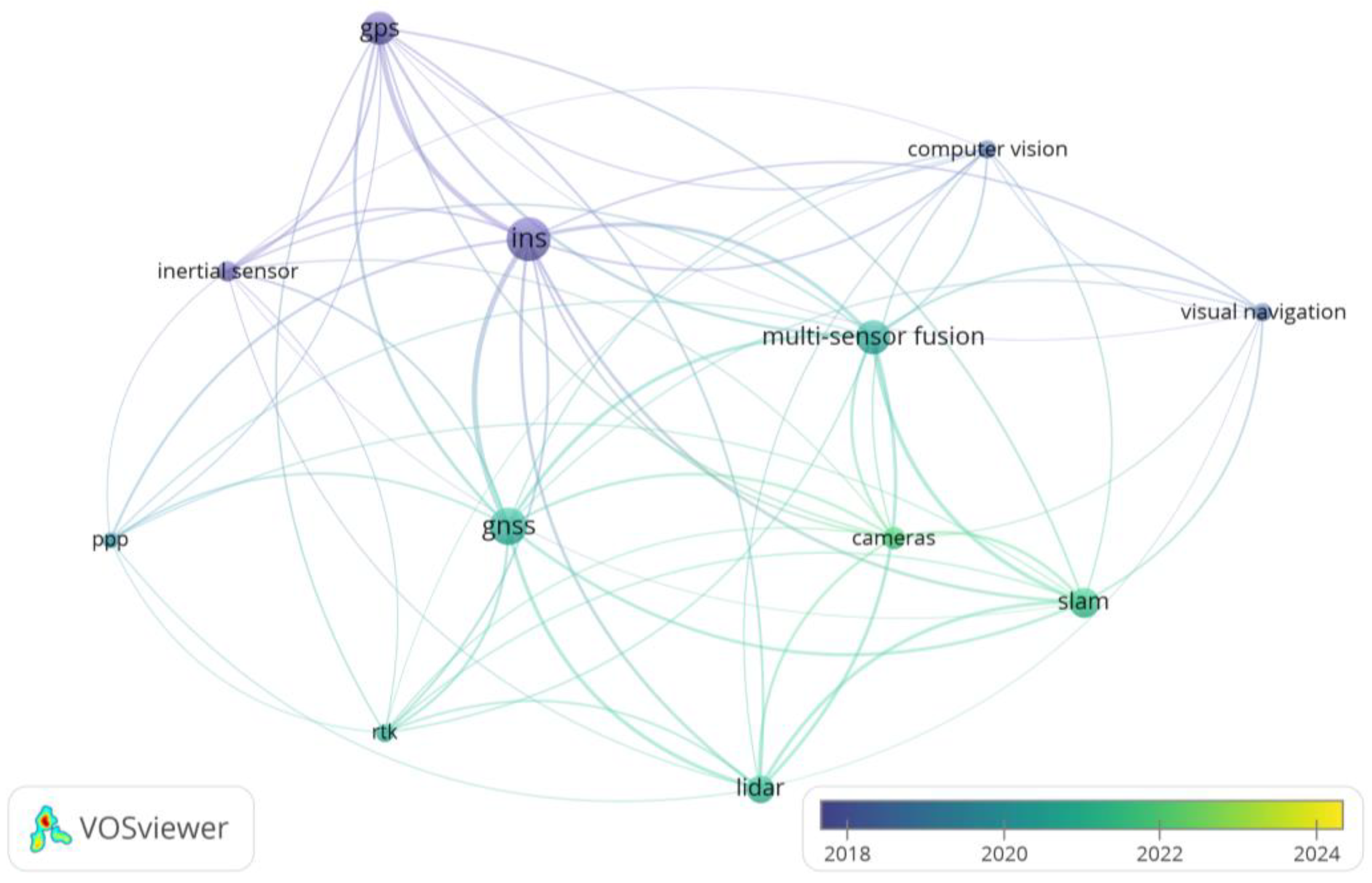

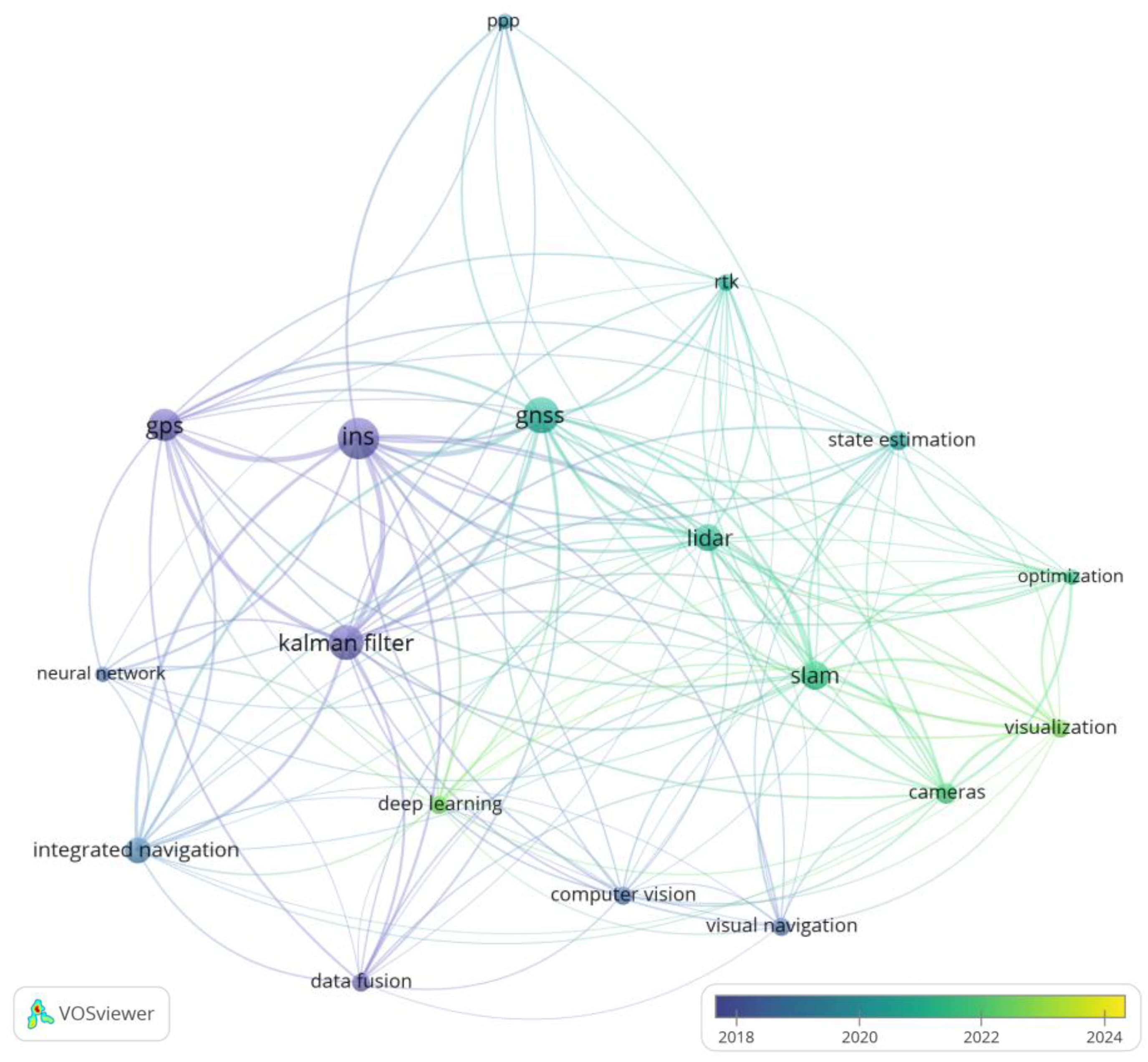

3.3.1. Keyword Analysis

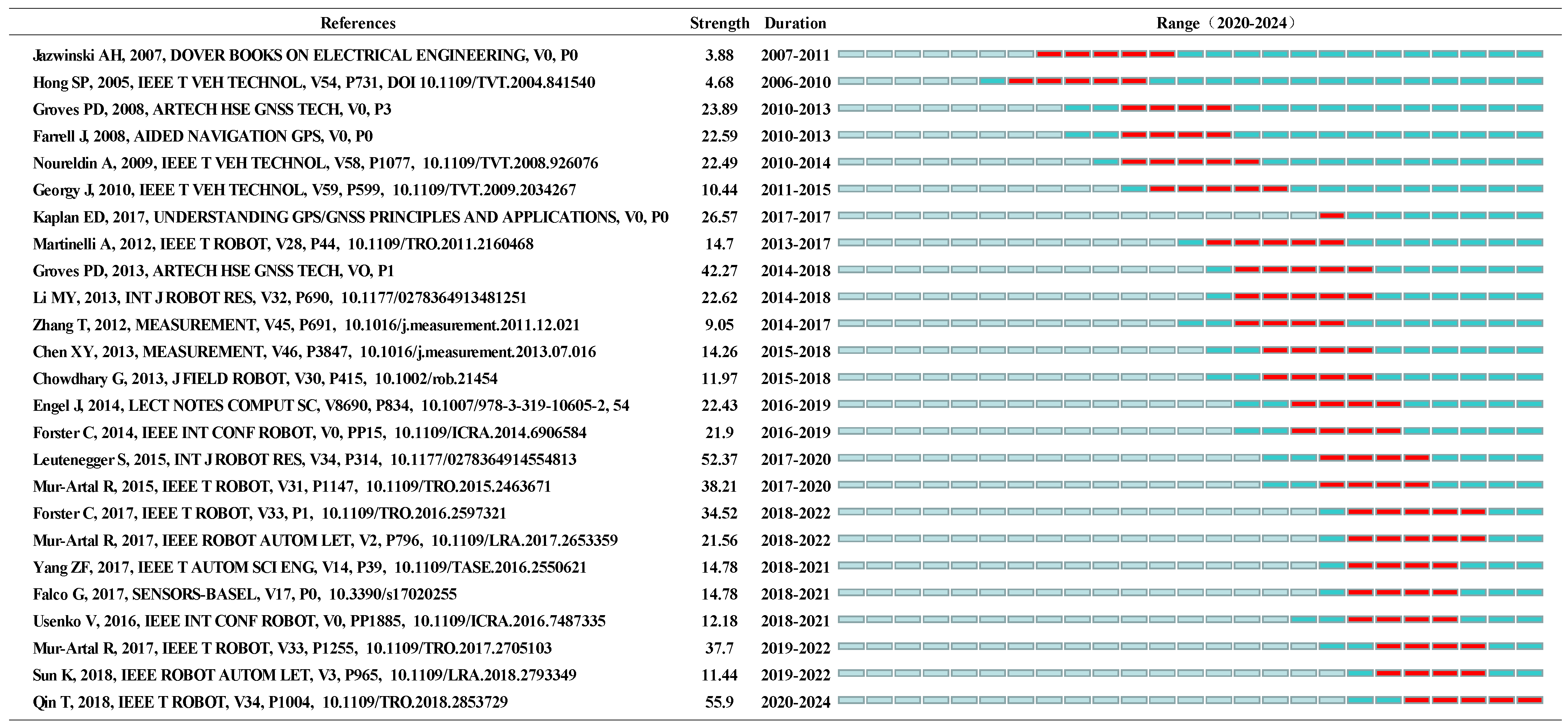

3.3.2. Citation Impact Analysis

4. Discussion

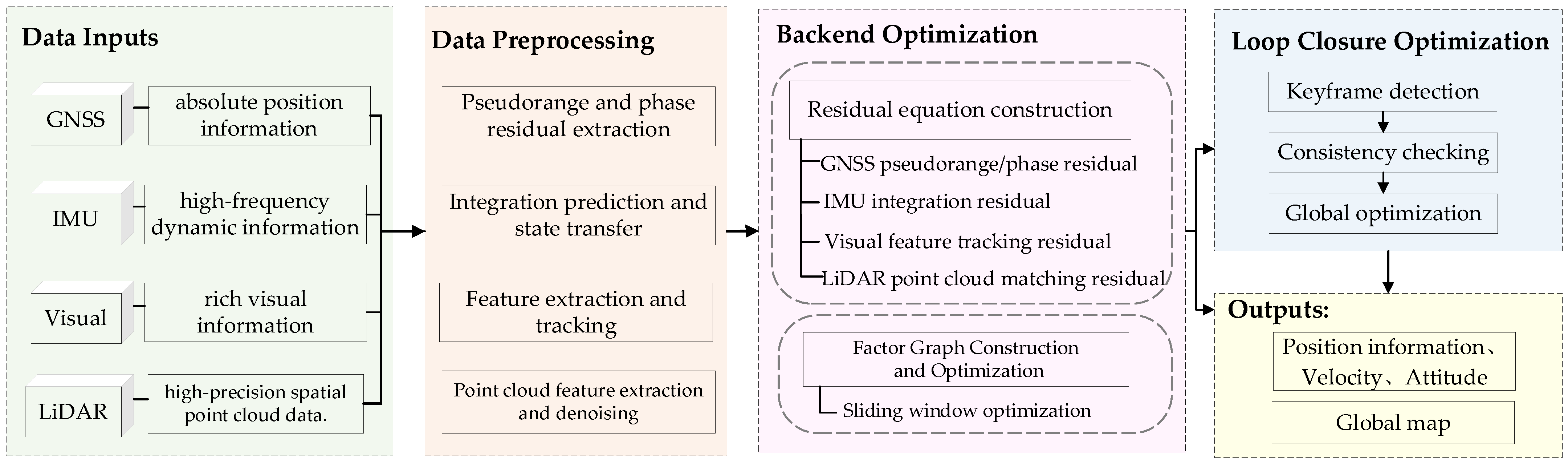

4.1. Development and Innovation in Multisource Sensor Technologies

4.2. Integration and State Estimation in Multisource Systems

4.2.1. Integration Methods of Multisource Sensors

1. Loosely coupled integration mode
2. Tightly coupled integration mode
3. Deeply coupled integration mode

4.2.2. State Estimation Methods in Integrated Navigation

1. Filtering techniques in integrated navigation
2. Graph optimization methods in integrated navigation
3. Deep learning techniques in integrated navigation
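The filtering approach named in the first item can be illustrated with a minimal sketch. It assumes a one-dimensional, loosely coupled setup in which an inertial acceleration sample propagates a position/velocity state and a GNSS position fix corrects it through a linear Kalman filter. The `State` class, the `predict` and `update` functions, and the noise parameters `q` and `r` are hypothetical names chosen for this illustration; they are not taken from the paper, and practical systems typically use a higher-dimensional error-state formulation.

```python
from dataclasses import dataclass


@dataclass
class State:
    """Hypothetical 1D navigation state with its 2x2 covariance (illustrative only)."""
    pos: float  # position estimate (m)
    vel: float  # velocity estimate (m/s)
    p00: float  # position variance
    p01: float  # position/velocity covariance
    p11: float  # velocity variance


def predict(s: State, accel: float, dt: float, q: float) -> State:
    """Propagate the state with one inertial acceleration sample.

    q is the assumed variance of the acceleration noise.
    """
    pos = s.pos + s.vel * dt + 0.5 * accel * dt * dt
    vel = s.vel + accel * dt
    # P = F P F^T + Q, with F = [[1, dt], [0, 1]] and
    # Q = q * [[dt^4/4, dt^3/2], [dt^3/2, dt^2]]
    p00 = s.p00 + 2.0 * dt * s.p01 + dt * dt * s.p11 + q * dt**4 / 4.0
    p01 = s.p01 + dt * s.p11 + q * dt**3 / 2.0
    p11 = s.p11 + q * dt**2
    return State(pos, vel, p00, p01, p11)


def update(s: State, z: float, r: float) -> State:
    """Correct the state with a GNSS position fix z of variance r (H = [1, 0])."""
    innovation = z - s.pos
    innovation_var = s.p00 + r
    k0 = s.p00 / innovation_var  # Kalman gain for position
    k1 = s.p01 / innovation_var  # Kalman gain for velocity
    return State(
        pos=s.pos + k0 * innovation,
        vel=s.vel + k1 * innovation,
        p00=(1.0 - k0) * s.p00,
        p01=(1.0 - k0) * s.p01,
        p11=s.p11 - k1 * s.p01,
    )


if __name__ == "__main__":
    state = State(pos=0.0, vel=0.0, p00=1.0, p01=0.0, p11=1.0)
    state = predict(state, accel=0.2, dt=1.0, q=0.1)  # inertial propagation
    state = update(state, z=0.3, r=1.0)               # GNSS position correction
    print(state)
```

In this sketch the GNSS receiver contributes only a finished position solution, which is what makes the example loosely coupled; a tightly coupled variant would instead feed raw observations such as pseudoranges into the update step.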

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Adeel, M.; Gong, Z.; Liu, P.L.; Wang, Y.Z.; Chen, X.; Inst, N. Research and Performance Analysis of Tightly Coupled Vision, INS and GNSS System for Land Vehicle Applications. In Proceedings of the 30th International Technical Meeting of The Satellite-Division-of-the-Institute-of-Navigation (ION GNSS+), Portland, OR, USA, 25–29 September 2017; pp. 3321–3330. [Google Scholar]

- Chiang, K.W.; Chang, H.W.; Li, Y.H.; Tsai, G.J.; Tseng, C.L.; Tien, Y.C.; Hsu, P.C. Assessment for INS/GNSS/Odometer/Barometer Integration in Loosely-Coupled and Tightly-Coupled Scheme in a GNSS-Degraded Environment. IEEE Sens. J. 2020, 20, 3057–3069. [Google Scholar]

- Geng, X.S.; Guo, Y.; Tang, K.H.; Wu, W.Q.; Ren, Y.C. Research on Covert Directional Spoofing Method for INS/GNSS Loosely Integrated Navigation. IEEE Trans. Veh. Technol. 2023, 72, 5654–5663. [Google Scholar]

- Cong, L.; Yue, S.; Qin, H.L.; Li, B.; Yao, J.T. Implementation of a MEMS-Based GNSS/INS Integrated Scheme Using Supported Vector Machine for Land Vehicle Navigation. IEEE Sens. J. 2020, 20, 14423–14435. [Google Scholar]

- Shang, X.Y.; Sun, F.P.; Liu, B.D.; Zhang, L.D.; Cui, J.Y. GNSS Spoofing Mitigation With a Multicorrelator Estimator in the Tightly Coupled INS/GNSS Integration. IEEE Trans. Instrum. Meas. 2023, 72, 12. [Google Scholar]

- Wang, J.; Wang, S.; Zou, D.; Chen, H.; Zhong, R.; Li, H.; Zhou, W.; Yan, K. Social Network and Bibliometric Analysis of Unmanned Aerial Vehicle Remote Sensing Applications from 2010 to 2021. Remote Sens. 2021, 13, 2912. [Google Scholar] [CrossRef]

- Huang, Z.; Wu, X.; Wang, H.; Hwang, C.; He, X. Monitoring Inland Water Quantity Variations: A Comprehensive Analysis of Multi-Source Satellite Observation Technology Applications. Remote Sens. 2023, 15, 3945. [Google Scholar] [CrossRef]

- Van Eck, N.; Waltman, L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 2010, 84, 523–538. [Google Scholar] [PubMed]

- Wei, J.; Wang, F.; Lindell, M.K. The evolution of stakeholders’ perceptions of disaster: A model of information flow. J. Assoc. Inf. Sci. Technol. 2016, 67, 441–453. [Google Scholar]

- Chen, C.; Dubin, R.; Kim, M.C. Emerging trends and new developments in regenerative medicine: A scientometric update (2000–2014). Expert Opin. Biol. Ther. 2014, 14, 1295–1317. [Google Scholar]

- Schmoch, U. Mean values of skewed distributions in the bibliometric assessment of research units. Scientometrics 2020, 125, 925–935. [Google Scholar]

- Chen, C.M. Eugene Garfield’s scholarly impact: A scientometric review. Scientometrics 2018, 114, 489–516. [Google Scholar] [CrossRef]

- Yang, L.; Li, Y.; Wu, Y.L.; Rizos, C. An enhanced MEMS-INS/GNSS integrated system with fault detection and exclusion capability for land vehicle navigation in urban areas. GPS Solut. 2014, 18, 593–603. [Google Scholar] [CrossRef]

- Bhatti, U.I.; Ochieng, W.Y.; Feng, S.J. Integrity of an integrated GPS/INS system in the presence of slowly growing errors. Part I: A critical review. GPS Solut. 2007, 11, 173–181. [Google Scholar] [CrossRef]

- Luo, Q.; Cao, Y.R.; Liu, J.J.; Benslimane, A. Localization and Navigation in Autonomous Driving: Threats and Countermeasures. IEEE Wirel. Commun. 2019, 26, 38–45. [Google Scholar] [CrossRef]

- Hollar, S.; Brain, M.; Nayak, A.A.; Stevens, A.; Patil, N.; Mittal, H.; Smith, W.J. A New Low Cost, Efficient, Self-Driving Personal Rapid Transit System. In Proceedings of the 28th IEEE Intelligent Vehicles Symposium (IV), Redondo Beach, CA, USA, 11–14 June 2017; pp. 412–417. [Google Scholar]

- Li, S.; Cui, P.Y.; Cui, H.T. Autonomous navigation and guidance for landing on asteroids. Aerosp. Sci. Technol. 2006, 10, 239–247. [Google Scholar] [CrossRef]

- Sabatini, R.; Moore, T.; Ramasamy, S. Global navigation satellite systems performance analysis and augmentation strategies in aviation. Prog. Aeosp. Sci. 2017, 95, 45–98. [Google Scholar] [CrossRef]

- Walter, T.; Enge, P.; Blanch, J.; Pervan, B. Worldwide Vertical Guidance of Aircraft Based on Modernized GPS and New Integrity Augmentations. Proc. IEEE 2008, 96, 1918–1935. [Google Scholar] [CrossRef]

- Chang, L.; Niu, X.J.; Liu, T.Y.; Tang, J.; Qian, C. GNSS/INS/LiDAR-SLAM Integrated Navigation System Based on Graph Optimization. Remote Sens. 2019, 11, 1009. [Google Scholar] [CrossRef]

- Yang, Z.; Yu, X.; Dedman, S.; Rosso, M.; Zhu, J.; Yang, J.; Xia, Y.; Tian, Y.; Zhang, G.; Wang, J. UAV remote sensing applications in marine monitoring: Knowledge visualization and review. Sci. Total Environ. 2022, 838, 155939. [Google Scholar]

- Noureldin, A.; Karamat, T.B.; Eberts, M.D.; El-Shafie, A. Performance Enhancement of MEMS-Based INS/GPS Integration for Low-Cost Navigation Applications. IEEE Trans. Veh. Technol. 2009, 58, 1077–1096. [Google Scholar] [CrossRef]

- Skog, I.; Handel, P. In-Car Positioning and Navigation Technologies—A Survey. IEEE Trans. Intell. Transp. Syst. 2009, 10, 4–21. [Google Scholar] [CrossRef]

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator. IEEE Trans. Robot. 2018, 34, 1004–1020. [Google Scholar] [CrossRef]

- Forster, C.; Carlone, L.; Dellaert, F.; Scaramuzza, D. On-manifold preintegration for real-time visual--inertial odometry. IEEE Trans. Robot. 2017, 33, 1–21. [Google Scholar]

- Xu, W.; Cai, Y.X.; He, D.J.; Lin, J.R.; Zhang, F. FAST-LIO2: Fast Direct LiDAD-Inertial Odometry. IEEE Trans. Robot. 2022, 38, 2053–2073. [Google Scholar] [CrossRef]

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237. [Google Scholar] [CrossRef]

- Campos, C.; Elvira, R.; Rodriguez, J.J.G.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890. [Google Scholar] [CrossRef]

- Paull, L.; Saeedi, S.; Seto, M.; Li, H. AUV Navigation and Localization: A Review. IEEE J. Ocean. Eng. 2014, 39, 131–149. [Google Scholar] [CrossRef]

- Li, M.; Mourikis, A.I. High-precision, consistent EKF-based visual-inertial odometry. Int. J. Robot. Res. 2013, 32, 690–711. [Google Scholar]

- Mur-Artal, R.; Tardós, J.D. Visual-Inertial Monocular SLAM With Map Reuse. IEEE Robot. Autom. Lett. 2017, 2, 796–803. [Google Scholar] [CrossRef]

- Chen, C. CiteSpace II: Detecting and visualizing emerging trends and transient patterns in scientific literature. J. Am. Soc. Inf. Sci. Technol. 2006, 57, 359–377. [Google Scholar]

- Groves, P.D. Principles of GNSS, Inertial, and Multisensor Integrated Navigation Systems, 2nd ed.; Artech: Boston, MA, USA, 2013; pp. 1–776. [Google Scholar]

- Leutenegger, S.; Lynen, S.; Bosse, M.; Siegwart, R.; Furgale, P. Keyframe-based visual–inertial odometry using nonlinear optimization. Int. J. Robot. Res. 2015, 34, 314–334. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262. [Google Scholar] [CrossRef]

- Jazwinski, A.H. Stochastic Processes and Filtering Theory; Courier Corporation: Chelmsford, MA, USA, 2007. [Google Scholar]

- Sinpyo, H.; Man Hyung, L.; Ho-Hwan, C.; Sun-Hong, K.; Speyer, J.L. Observability of error States in GPS/INS integration. IEEE Trans. Veh. Technol. 2005, 54, 731–743. [Google Scholar] [CrossRef]

- Groves, P.D. Principles of GNSS, Inertial, and Multisensor Integrated Navigation Systems; Artech: Boston, MA, USA, 2008; pp. 1–518. [Google Scholar]

- Farrell, J. Aided Navigation: GPS with High Rate Sensors; McGraw-Hill, Inc.: New York, NY, USA, 2008. [Google Scholar]

- Georgy, J.; Noureldin, A.; Korenberg, M.J.; Bayoumi, M.M. Low-Cost Three-Dimensional Navigation Solution for RISS/GPS Integration Using Mixture Particle Filter. IEEE Trans. Veh. Technol. 2010, 59, 599–615. [Google Scholar] [CrossRef]

- Kaplan, E.D.; Hegarty, C. Understanding GPS/GNSS: Principles and Applications; Artech house: Boston, MA, USA, 2017. [Google Scholar]

- Martinelli, A. Vision and IMU Data Fusion: Closed-Form Solutions for Attitude, Speed, Absolute Scale, and Bias Determination. IEEE Trans. Robot. 2012, 28, 44–60. [Google Scholar] [CrossRef]

- Zhang, T.; Xu, X. A new method of seamless land navigation for GPS/INS integrated system. Measurement 2012, 45, 691–701. [Google Scholar] [CrossRef]

- Chen, X.; Shen, C.; Zhang, W.-b.; Tomizuka, M.; Xu, Y.; Chiu, K. Novel hybrid of strong tracking Kalman filter and wavelet neural network for GPS/INS during GPS outages. Measurement 2013, 46, 3847–3854. [Google Scholar] [CrossRef]

- Chowdhary, G.; Johnson, E.N.; Magree, D.; Wu, A.; Shein, A. GPS-denied indoor and outdoor monocular vision aided navigation and control of unmanned aircraft. J. Field Robot. 2013, 30, 415–438. [Google Scholar] [CrossRef]

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-Scale Direct Monocular SLAM. In Proceedings of the Computer Vision—ECCV 2014, Cham, Switzerland, 6–12 September 2014; pp. 834–849. [Google Scholar]

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22. [Google Scholar]

- Yang, Z.; Shen, S. Monocular Visual–Inertial State Estimation With Online Initialization and Camera–IMU Extrinsic Calibration. IEEE Trans. Autom. Sci. Eng. 2017, 14, 39–51. [Google Scholar] [CrossRef]

- Falco, G.; Pini, M.; Marucco, G. Loose and Tight GNSS/INS Integrations: Comparison of Performance Assessed in Real Urban Scenarios. Sensors 2017, 17, 255. [Google Scholar] [CrossRef] [PubMed]

- Usenko, V.; Engel, J.; Stückler, J.; Cremers, D. Direct visual-inertial odometry with stereo cameras. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1885–1892. [Google Scholar]

- Sun, K.; Mohta, K.; Pfrommer, B.; Watterson, M.; Liu, S.; Mulgaonkar, Y.; Taylor, C.J.; Kumar, V. Robust Stereo Visual Inertial Odometry for Fast Autonomous Flight. IEEE Robot. Autom. Lett. 2018, 3, 965–972. [Google Scholar] [CrossRef]

- Ning, J.; Yao, Y.; Zhang, X. Review of the Development of Global Satellite Navigation System. J. Navig. Position. 2013, 1, 3–8. [Google Scholar] [CrossRef]

- Liu, Z.; Zhou, Q.; Qin, Y.; El-Sheimy, N. Vision-Aided Inertial Navigation System with Point and Vertical Line Observations for Land Vehicle Applications. In China Satellite Navigation Conference (CSNC) 2017 Proceedings: Volume II; Lecture Notes in Electrical Engineering; Springer: Berlin/Heidelberg, Germany, 2017; pp. 445–457. [Google Scholar]

- Boguspayev, N.; Akhmedov, D.; Raskaliyev, A.; Kim, A.; Sukhenko, A. A comprehensive review of GNSS/INS integration techniques for land and air vehicle applications. Appl. Sci. 2023, 13, 4819. [Google Scholar] [CrossRef]

- El-Sheimy, N.; Youssef, A. Inertial sensors technologies for navigation applications: State of the art and future trends. Satell. Navig. 2020, 1, 1–21. [Google Scholar] [CrossRef]

- Bird, J.; Arden, D. Indoor navigation with foot-mounted strapdown inertial navigation and magnetic sensors [emerging opportunities for localization and tracking]. IEEE Wirel. Commun. 2011, 18, 28–35. [Google Scholar] [CrossRef]

- Vaduvescu, V.A.; Negrea, P. Inertial Measurement Unit—A Short Overview of the Evolving Trend for Miniaturization and Hardware Structures. In Proceedings of the 2021 International Conference on Applied and Theoretical Electricity (ICATE), Craiova, Romania, 27–29 May 2021. [Google Scholar]

- Dutta, I.; Savoie, D.; Fang, B.; Venon, B.; Alzar, C.L.G.; Geiger, R.; Landragin, A. Continuous Cold-Atom Inertial Sensor with 1 nrad/sec Rotation Stability. Phys. Rev. Lett. 2016, 116, 183003. [Google Scholar] [CrossRef]

- Guessoum, M.; Gautier, R.; Bouton, Q.; Sidorenkov, L.; Landragin, A.; Geiger, R. High Stability Two Axis Cold-Atom Gyroscope. In Proceedings of the 2022 9th IEEE International Symposium on Inertial Sensors and Systems (IEEE INERTIAL 2022), Avignon, France, 8–11 May 2022. [Google Scholar]

- Zhang, L.; Gao, W.; Li, Q.; Li, R.B.; Yao, Z.W.; Lu, S.B. A Novel Monitoring Navigation Method for Cold Atom Interference Gyroscope. Sensors 2019, 19, 222. [Google Scholar] [CrossRef]

- Palacios-Laloy, A.; Rutkowski, J.; Troadec, Y.; Léger, J.-M. On the Critical Impact of HF Power Drifts for Miniature Helium-Based NMR Gyroscopes. IEEE Sens. J. 2016, 17, 657–659. [Google Scholar] [CrossRef]

- Tan, S.X.; Stoker, J.; Greenlee, S. Detection of foliage-obscured vehicle using a multiwavelength polarimetric lidar. In Proceedings of the IGARSS: 2007 IEEE International Geoscience and Remote Sensing Symposium, VOLS 1–12: Sensing and Understanding Our Planet, Barcelona, Spain, 23–28 July 2007; pp. 2503–2506. [Google Scholar]

- Zhao, H.; Hua, D.X.; Mao, J.D.; Zhou, C.Y. Correction to near-range multiwavelength lidar optical parameter based on the measurements of particle size distribution. Acta Phys. Sin. 2015, 64, 124208. [Google Scholar] [CrossRef]

- Xu, Z.; Yan, Z.; Li, X.; Shen, Z.; Zhou, Y.; Wu, Z.; Li, X. Review of high- precision multi-sensor integrated positioning towards intelligent driving. Position Navig. Timing 2023, 10, 1–20. [Google Scholar] [CrossRef]

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-Time Single Camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067. [Google Scholar] [CrossRef] [PubMed]

- Klein, G.; Murray, D. Parallel Tracking and Mapping for Small AR Workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234. [Google Scholar]

- Ng, Z.Y. Indoor-Positioning for Warehouse Mobile Robots Using Computer Vision; UTAR: Kampar, Malaysia, 2021. [Google Scholar]

- Kim, T.-L.; Arshad, S.; Park, T.-H. Adaptive Feature Attention Module for Robust Visual–LiDAR Fusion-Based Object Detection in Adverse Weather Conditions. Remote Sens. 2023, 15, 3992. [Google Scholar] [CrossRef]

- Gallego, G.; Delbrück, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.J.; Conradt, J.; Daniilidis, K.; et al. Event-Based Vision: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 154–180. [Google Scholar] [CrossRef]

- Wang, X.; Yang, D.; Wang, Z.; Kwan, H.; Chen, J.; Wu, W.; Li, H.; Liao, Y.; Liu, S. Towards realistic uav vision-language navigation: Platform, benchmark, and methodology. arXiv 2024, arXiv:2410.07087. [Google Scholar]

- Liu, S.; Zhang, H.; Qi, Y.; Wang, P.; Zhang, Y.; Wu, Q. Aerialvln: Vision-and-language navigation for uavs. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 15384–15394. [Google Scholar]

- Lee, J.; Miyanishi, T.; Kurita, S.; Sakamoto, K.; Azuma, D.; Matsuo, Y.; Inoue, N. CityNav: Language-Goal Aerial Navigation Dataset with Geographic Information. arXiv 2024, arXiv:2406.14240. [Google Scholar]

- Li, T.; Zhang, H.P.; Gao, Z.Z.; Niu, X.J.; El-sheimy, N. Tight Fusion of a Monocular Camera, MEMS-IMU, and Single-Frequency Multi-GNSS RTK for Precise Navigation in GNSS-Challenged Environments. Remote Sens. 2019, 11, 24. [Google Scholar] [CrossRef]

- Carlson, N.A. Federated Square Root Filter for Decentralized Parallel Processes. IEEE Trans. Aerosp. Electron. Syst. 1990, 26, 517–525. [Google Scholar] [CrossRef]

- Schütz, A.; Sánchez-Morales, D.E.; Pany, T. Precise Positioning Through a Loosely-Coupled Sensor Fusion of Gnss-Rtk, INS and LiDAR for Autonomous Driving. In Proceedings of the 2020 IEEE/ION Position, Location and Navigation Symposium (PLANS), Portland, OR, USA, 20–23 April 2020; pp. 219–225. [Google Scholar]

- Li, T.; Pei, L.; Xiang, Y.; Wu, Q.; Xia, S.; Tao, L.; Guan, X.; Yu, W. P 3-LOAM: PPP/LiDAR loosely coupled SLAM with accurate covariance estimation and robust RAIM in urban canyon environment. IEEE Sens. J. 2020, 21, 6660–6671. [Google Scholar]

- Gao, Z.; Zhang, H.; Ge, M.; Niu, X.; Shen, W.; Wickert, J.; Schuh, H. Tightly coupled integration of multi-GNSS PPP and MEMS inertial measurement unit data. GPS Solut. 2016, 21, 377–391. [Google Scholar] [CrossRef]

- Wang, D.; Dong, Y.; Li, Z.; Li, Q.; Wu, J. Constrained MEMS-Based GNSS/INS Tightly Coupled System With Robust Kalman Filter for Accurate Land Vehicular Navigation. IEEE Trans. Instrum. Meas. 2020, 69, 5138–5148. [Google Scholar] [CrossRef]

- Chen, K.; Chang, G.B.; Chen, C. GINav: A MATLAB-based software for the data processing and analysis of a GNSS/INS integrated navigation system. GPS Solut. 2021, 25, 7. [Google Scholar] [CrossRef]

- Luo, N.; Hu, Z.; Ding, Y.; Li, J.; Zhao, H.; Liu, G.; Wang, Q. DFF-VIO: A General Dynamic Feature Fused Monocular Visual-Inertial Odometry. IEEE Trans. Circuits Syst. Video Technol. 2024, 35, 1758–1773. [Google Scholar] [CrossRef]

- Liu, C.; Jiang, C.; Wang, H. InGVIO: A Consistent Invariant Filter for Fast and High-Accuracy GNSS-Visual-Inertial Odometry. IEEE Robot. Autom. Lett. 2023, 8, 1850–1857. [Google Scholar] [CrossRef]

- Li, X.X.; Li, S.Y.; Zhou, Y.X.; Shen, Z.H.; Wang, X.B.; Li, X.; Wen, W.S. Continuous and Precise Positioning in Urban Environments by Tightly Coupled Integration of GNSS, INS and Vision. IEEE Robot. Autom. Lett. 2022, 7, 11458–11465. [Google Scholar] [CrossRef]

- Jiang, H.; Yan, D.; Wang, J.; Yin, J. Innovation-based Kalman filter fault detection and exclusion method against all-source faults for tightly coupled GNSS/INS/Vision integration. GPS Solut. 2024, 28, 108. [Google Scholar] [CrossRef]

- Li, S.Y.; Li, X.X.; Wang, H.D.; Zhou, Y.X.; Shen, Z.H. Multi-GNSS PPP/INS/Vision/LiDAR tightly integrated system for precise navigation in urban environments. Inf. Fusion 2023, 90, 218–232. [Google Scholar] [CrossRef]

- Li, X.X.; Wang, X.B.; Liao, J.C.; Li, X.; Li, S.Y.; Lyu, H.B. Semi-tightly coupled integration of multi-GNSS PPP and S-VINS for precise positioning in GNSS-challenged environments. Satell. Navig. 2021, 2, 14. [Google Scholar] [CrossRef]

- Liu, C.L.; Wang, C.; Wang, J. A Bandwidth Adaptive Pseudo-Code Tracking Loop Design for BD/INS Integrated Navigation. In Proceedings of the 2nd International Conference on Control Science and Systems Engineering (ICCSSE), Singapore, 27–29 July 2016; pp. 46–49. [Google Scholar]

- Liu, H.R.; Zhang, T.S.; Zhang, P.H.; Qi, F.R.; Li, Z. Accuracy Analysis of GNSS/INS Deeply-Coupled Receiver for Strong Earthquake Motion. In Proceedings of the 8th China Satellite Navigation Conference (CSNC), Shanghai, China, 23–25 May 2017; pp. 339–349. [Google Scholar]

- Wu, M.Y.; Ding, J.C.; Zhao, L.; Kang, Y.Y.; Luo, Z.B. An adaptive deep-coupled GNSS/INS navigation system with hybrid pre-filter processing. Meas. Sci. Technol. 2018, 29, 14. [Google Scholar] [CrossRef]

- Ruotsalainen, L.; Kirkko-Jaakkola, M.; Bhuiyan, M.; Söderholm, S.; Thombre, S.; Kuusniemi, H. Deeply coupled GNSS, INS and visual sensor integration for interference mitigation. In Proceedings of the 27th International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS+ 2014), Tampa, FL, USA, 8–12 September 2014; pp. 2243–2249. [Google Scholar]

- Zuo, Z.; Yang, B.; Li, Z.; Zhang, T. A GNSS/IMU/Vision Ultra-Tightly Integrated Navigation System for Low Altitude Aircraft. IEEE Sens. J. 2022, 22, 11857–11864. [Google Scholar] [CrossRef]

- Indelman, V.; Gurfil, P.; Rivlin, E.; Rotstein, H. Real-time vision-aided localization and navigation based on three-view geometry. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 2239–2259. [Google Scholar]

- Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304. [Google Scholar]

- Castellanos, J.A.; Martinez-Cantin, R.; Tardós, J.D.; Neira, J. Robocentric map joining: Improving the consistency of EKF-SLAM. Robot. Auton. Syst. 2007, 55, 21–29. [Google Scholar]

- Mourikis, A.I.; Roumeliotis, S.I. A multi-state constraint Kalman filter for vision-aided inertial navigation. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 3565–3572. [Google Scholar]

- Heo, S.; Jung, J.H.; Park, C.G. Consistent EKF-Based Visual-Inertial Navigation Using Points and Lines. IEEE Sens. J. 2018, 18, 7638–7649. [Google Scholar] [CrossRef]

- Li, X.H.; Jiang, H.D.; Chen, X.Y.; Kong, H.; Wu, J.F. Closed-Form Error Propagation on SEn(3) Group for Invariant EKF With Applications to VINS. IEEE Robot. Autom. Lett. 2022, 7, 10705–10712. [Google Scholar] [CrossRef]

- Weiss, S.; Scaramuzza, D.; Siegwart, R. Monocular-SLAM–based navigation for autonomous micro helicopters in GPS-denied environments. J. Field Robot. 2011, 28, 854–874. [Google Scholar] [CrossRef]

- Novara, C.; Ruiz, F.; Milanese, M. Direct Filtering: A New Approach to Optimal Filter Design for Nonlinear Systems. IEEE Trans. Autom. Control 2013, 58, 86–99. [Google Scholar] [CrossRef]

- Xin, S.; Wang, X.; Zhang, J.; Zhou, K.; Chen, Y. A Comparative Study of Factor Graph Optimization-Based and Extended Kalman Filter-Based PPP-B2b/INS Integrated Navigation. Remote Sens. 2023, 15, 5144. [Google Scholar] [CrossRef]

- Li, X.; Zhang, X.; Niu, X.; Wang, J.; Pei, L.; Yu, F.; Zhang, H.; Yang, C.; Gao, Z.; Zhang, Q.; et al. Progress and Achievements of Multi-sensor Fusion Navigation in China during 2019--2023. J. Geod. Geoinf. Sci. 2023, 6, 102–114. [Google Scholar]

- Zhu, F.; Xu, Z.; Zhang, X.; Zhang, Y.; Chen, W.; Zhang, X. On State Estimation in Multi-Sensor Fusion Navigation: Optimization and Filtering. arXiv 2024, arXiv:2401.05836. [Google Scholar]

- Kim, J.; Sukkarieh, S. 6DoF SLAM aided GNSS/INS navigation in GNSS denied and unknown environments. J. Glob. Position. Syst. 2005, 4, 120–128. [Google Scholar]

- Chu, T.; Guo, N.; Backén, S.; Akos, D. Monocular camera/IMU/GNSS integration for ground vehicle navigation in challenging GNSS environments. Sensors 2012, 12, 3162–3185. [Google Scholar] [CrossRef] [PubMed]

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems Trans. ASME. Ser. D 1960, 82, 35–45. [Google Scholar] [CrossRef]

- Lv, W.F.; Publishing, I.O.P. Kalman Filtering Algorithm for Integrated Navigation System in Unmanned Aerial Vehicle. In Proceedings of the 5th Annual International Conference on Information System and Artificial Intelligence (ISAI), Zhejiang, China, 22–23 May 2020. [Google Scholar]

- Zhao, L.; Wang, X.; Ding, J.; Cao, W. Overview of nonlinear filter methods applied in integrated navigation system. J. Chin. Inert. Technol. 2009, 17, 46–52. [Google Scholar]

- Zhang, W.; Sun, R. Research on performance comparison of EKF and UKF and their application. J. Nanjing Univ. Sci. Technol. 2015, 39, 614–618. [Google Scholar]

- Shen, Z.; Yu, W.; Fang, J. Nonlinear algorithm based on UKF for lowcost SINS /GPS integrated navigation system. Syst. Eng. Electron. 2007, 29, 408–411. [Google Scholar]

- Hu, G.; Wang, W.; Zhong, Y.; Gao, B.; Gu, C. A new direct filtering approach to INS/GNSS integration. Aerosp. Sci. Technol. 2018, 77, 755–764. [Google Scholar] [CrossRef]

- Xu, C.; Chen, S.; Hou, Z. A hybrid information fusion method for SINS/GNSS integrated navigation system utilizing GRU-aided AKF during GNSS outages. Meas. Sci. Technol. 2024, 35, 106311. [Google Scholar] [CrossRef]

- Zhang, T.; Yuan, M.; Wang, L.; Tang, H.; Niu, X. A Robust and Efficient IMU Array/GNSS Data Fusion Algorithm. IEEE Sens. J. 2024, 24, 26278–26289. [Google Scholar] [CrossRef]

- Zhang, L.L.; Qu, H.; Mao, J.; Hu, X.P. A Survey of Intelligence Science and Technology Integrated Navigation Technology. Navig. Position. Timing 2020, 7, 50–63. [Google Scholar] [CrossRef]

- Fornasier, A.; van Goor, P.; Allak, E.; Mahony, R.; Weiss, S. MSCEqF: A Multi State Constraint Equivariant Filter for Vision-Aided Inertial Navigation. IEEE Robot. Autom. Lett. 2024, 9, 731–738. [Google Scholar] [CrossRef]

- Hesch, J.A.; Kottas, D.G.; Bowman, S.L.; Roumeliotis, S.I. Camera-IMU-based localization: Observability analysis and consistency improvement. Int. J. Robot. Res. 2013, 33, 182–201. [Google Scholar] [CrossRef]

- Huai, Z.; Huang, G. Robocentric visual–inertial odometry. Int. J. Robot. Res. 2019, 41, 667–689. [Google Scholar] [CrossRef]

- Eckenhoff, K.; Geneva, P.; Huang, G. MIMC-VINS: A Versatile and Resilient Multi-IMU Multi-Camera Visual-Inertial Navigation System. IEEE Trans. Robot. 2021, 37, 1360–1380. [Google Scholar] [CrossRef]

- Lynen, S.; Achtelik, M.W.; Weiss, S.; Chli, M.; Siegwart, R. A Robust and Modular Multi-Sensor Fusion Approach Applied to MAV Navigation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, 3–8 November 2013; pp. 3923–3929. [Google Scholar]

- Yue, Z.; Lian, B.; Tang, C.; Tong, K. A novel adaptive federated filter for GNSS/INS/VO integrated navigation system. Meas. Sci. Technol. 2020, 31, 85102. [Google Scholar] [CrossRef]

- Xia, C.; Li, X.; Li, S.; Zhou, Y. Invariant-EKF-Based GNSS/INS/Vision Integration with High Convergence and Accuracy. IEEE/ASME Trans. Mechatron. 2024, 29, 1–12. [Google Scholar] [CrossRef]

- Liao, J.; Li, X.; Wang, X.; Li, S.; Wang, H. Enhancing navigation performance through visual-inertial odometry in GNSS-degraded environment. GPS Solut. 2021, 25, 50. [Google Scholar] [CrossRef]

- Gu, S.; Dai, C.; Mao, F.; Fang, W. Integration of Multi-GNSS PPP-RTK/INS/Vision with a Cascading Kalman Filter for Vehicle Navigation in Urban Areas. Remote Sens. 2022, 14, 4337. [Google Scholar] [CrossRef]

- Li, K.; Li, J.; Wang, A. Discussion on development of GNSS/INS/Visual integrated navigation technology and data fusion. J. Navig. Position. 2023, 11, 9–15. [Google Scholar] [CrossRef]

- Hu, J.-S.; Chen, M.-Y. A sliding-window visual-IMU odometer based on tri-focal tensor geometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 3963–3968. [Google Scholar]

- Xian, Z.; Hu, X.; Lian, J. Fusing stereo camera and low-cost inertial measurement unit for autonomous navigation in a tightly-coupled approach. J. Navig. 2015, 68, 434–452. [Google Scholar] [CrossRef]

- Kong, X.; Wu, W.; Zhang, L.; Wang, Y. Tightly-coupled stereo visual-inertial navigation using point and line features. Sensors 2015, 15, 12816–12833. [Google Scholar] [CrossRef]

- Huang, G.P.; Mourikis, A.I.; Roumeliotis, S.I. A quadratic-complexity observability-constrained unscented Kalman filter for SLAM. IEEE Trans. Robot. 2013, 29, 1226–1243. [Google Scholar]

- Xu, J.; Yang, G.; Sun, Y.; Picek, S. A Multi-Sensor Information Fusion Method Based on Factor Graph for Integrated Navigation System. IEEE Access 2021, 9, 12044–12054. [Google Scholar] [CrossRef]

- Taghizadeh, S.; Nezhadshahbodaghi, M.; Safabakhsh, R.; Mosavi, M.R. A low-cost integrated navigation system based on factor graph nonlinear optimization for autonomous flight. GPS Solut. 2022, 26, 78. [Google Scholar]

- Wen, W.; Pfeifer, T.; Bai, X.; Hsu, L.-T. Factor graph optimization for GNSS/INS integration: A comparison with the extended Kalman filter. Navig. J. Inst. Navig. 2021, 68, 315–331. [Google Scholar]

- Chiu, H.-P.; Zhou, X.S.; Carlone, L.; Dellaert, F.; Samarasekera, S.; Kumar, R. Constrained optimal selection for multi-sensor robot navigation using plug-and-play factor graphs. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 663–670. [Google Scholar]

- Indelman, V.; Williams, S.; Kaess, M.; Dellaert, F. Information fusion in navigation systems via factor graph based incremental smoothing. Robot. Auton. Syst. 2013, 61, 721–738. [Google Scholar]

- Li, W.; Cui, X.W.; Lu, M.Q. A Robust Graph Optimization Realization of Tightly Coupled GNSS/INS Integrated Navigation System for Urban Vehicles. Tsinghua Sci. Technol. 2018, 23, 724–732. [Google Scholar] [CrossRef]

- Lin, F.; Wang, S.; Chen, Y.; Zou, M.; Peng, H.; Liu, Y. Vehicle integrated navigation IMU mounting angles estimation method based on nonlinear optimization. Meas. Sci. Technol. 2023, 35, 036304. [Google Scholar] [CrossRef]

- Zhang, L.; Wen, W.; Hsu, L.-T.; Zhang, T. An improved inertial preintegration model in factor graph optimization for high accuracy positioning of intelligent vehicles. IEEE Trans. Intell. Veh. 2023, 9, 1641–1653. [Google Scholar]

- Li, T.; Zhang, H.; Han, B.; Xia, M.; Shi, C. Relative Accuracy of GNSS/INS Integration Based on Factor Graph Optimization. IEEE Sens. J. 2024, 24, 33182–33194. [Google Scholar] [CrossRef]

- Walcott-Bryant, A.; Kaess, M.; Johannsson, H.; Leonard, J.J. Dynamic Pose Graph SLAM: Long-term Mapping in Low Dynamic Environments. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 1871–1878. [Google Scholar]

- Li, J.; Pan, X.; Huang, G.; Zhang, Z.; Wang, N.; Bao, H.; Zhang, G. RD-VIO: Robust Visual-Inertial Odometry for Mobile Augmented Reality in Dynamic Environments. IEEE Trans. Vis. Comput. Graph. 2024, 30, 6941–6955. [Google Scholar] [CrossRef]

- Zheng, F.; Lin, W.; Sun, L. Dyna VIO: Real-Time Visual-Inertial Odometry with Instance Segmentation in Dynamic Environments. In Proceedings of the 2024 4th International Conference on Computer, Control and Robotics (ICCCR), Shanghai, China, 19–21 April 2024; pp. 21–25. [Google Scholar]

- Mascaro, R.; Teixeira, L.; Hinzmann, T.; Siegwart, R.; Chli, M. GOMSF: Graph-Optimization Based Multi-Sensor Fusion for robust UAV Pose estimation. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 1421–1428. [Google Scholar]

- Niu, X.; Tang, H.; Zhang, T.; Fan, J.; Liu, J. IC-GVINS: A Robust, Real-Time, INS-Centric GNSS-Visual-Inertial Navigation System. IEEE Robot. Autom. Lett. 2023, 8, 216–223. [Google Scholar] [CrossRef]

- Chi, C.; Zhang, X.; Liu, J.; Sun, Y.; Zhang, Z.; Zhan, X. GICI-LIB: A GNSS/INS/Camera Integrated Navigation Library. IEEE Robot. Autom. Lett. 2023, 8, 7977. [Google Scholar] [CrossRef]

- Yin, J.; Li, T.; Yin, H.; Yu, W.X.; Zou, D.P. Sky-GVINS: A sky-segmentation aided GNSS-Visual-Inertial system for robust navigation in urban canyons. Geo-Spat. Inf. Sci. 2023, 11, 2257–2267. [Google Scholar] [CrossRef]

- Ravi, R.; Lin, Y.-J.; Elbahnasawy, M.; Shamseldin, T.; Habib, A. Simultaneous System Calibration of a Multi-LiDAR Multicamera Mobile Mapping Platform. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1694–1714. [Google Scholar] [CrossRef]

- Zhou, T.; Hasheminasab, S.M.; Habib, A. Tightly-coupled camera/LiDAR integration for point cloud generation from GNSS/INS-assisted UAV mapping systems. ISPRS J. Photogramm. Remote Sens. 2021, 180, 336–356. [Google Scholar] [CrossRef]

- Liao, J.; Li, X.; Feng, S. GVIL: Tightly-Coupled GNSS PPP/Visual/INS/LiDAR SLAM Based on Graph Optimization. Geomat. Inf. Sci. Wuhan Univ. 2023, 48, 1204–1215. [Google Scholar] [CrossRef]

- Liu, F.; Zhao, H.; Chen, W. A Hybrid Algorithm of LSTM and Factor Graph for Improving Combined GNSS/INS Positioning Accuracy during GNSS Interruptions. Sensors 2024, 24, 5605. [Google Scholar] [CrossRef]

- Wu, F.; Luo, H.; Zhao, F.; Wei, L.; Zhou, B. Optimizing GNSS/INS Integrated Navigation: A Deep Learning Approach for Error Compensation. IEEE Signal Process. Lett. 2024, 31, 3104–3108. [Google Scholar] [CrossRef]

- Meng, X.; Tan, H.; Yan, P.; Zheng, Q.; Chen, G.; Jiang, J. A GNSS/INS Integrated Navigation Compensation Method Based on CNN–GRU + IRAKF Hybrid Model During GNSS Outages. IEEE Trans. Instrum. Meas. 2024, 73, 1–15. [Google Scholar] [CrossRef]

- Liu, J.; Guo, G. Vehicle Localization During GPS Outages with Extended Kalman Filter and Deep Learning. IEEE Trans. Instrum. Meas. 2021, 70, 1–10. [Google Scholar] [CrossRef]

- Clark, R.; Wang, S.; Wen, H.; Markham, A.; Trigoni, N. VINet: Visual-Inertial Odometry as a Sequence-to-Sequence Learning Problem. In Proceedings of the 31st AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 3995–4001. [Google Scholar]

- Chen, C.H.; Lu, C.X.; Wang, B.; Trigoni, N.; Markham, A. DynaNet: Neural Kalman Dynamical Model for Motion Estimation and Prediction. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 5479–5491. [Google Scholar] [CrossRef]

- Han, L.; Lin, Y.; Du, G.; Lian, S. DeepVIO: Self-supervised Deep Learning of Monocular Visual Inertial Odometry using 3D Geometric Constraints. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 6906–6913. [Google Scholar]

- Kurt, Y.B.; Akman, A.; Alatan, A.A. Causal Transformer for Fusion and Pose Estimation in Deep Visual Inertial Odometry. arXiv 2024, arXiv:2409.08769. [Google Scholar]

- Chen, C.H.; Rosa, S.; Miao, Y.S.; Lu, C.X.X.; Wu, W.; Markham, A.; Trigoni, N. Selective Sensor Fusion for Neural Visual-Inertial Odometry. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 10534–10543. [Google Scholar]

- Ragab, H.; Abdelaziz, S.K.; Elhabiby, M.; Givigi, S.; Noureldin, A. Machine Learning-based Visual Odometry Uncertainty Estimation for Low-cost Integrated Land Vehicle Navigation. In Proceedings of the 33rd International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS), Online, 21–25 September 2020; pp. 2569–2578. [Google Scholar]

- Ma, C.; Lin, J.; Zhao, Y.; Shi, Q.; Xia, G.; Qiu, A.; Huang, J. Low Noise Temperature Compensation Strategy for North-finding MEMS Gyroscope. In Proceedings of the 2024 IEEE SENSORS, Kobe, Japan, 20–23 October 2024; pp. 1–4. [Google Scholar]

- Li, K.; Cui, R.; Cai, Q.; Wei, W.; Shen, C.; Tang, J.; Shi, Y.; Cao, H.; Liu, J. A Fusion Algorithm for Real-Time Temperature Compensation and Noise Suppression With a Double U-Beam Vibration Ring Gyroscope. IEEE Sens. J. 2024, 24, 7614–7624. [Google Scholar] [CrossRef]

- Li, A.; Cui, K.; An, D.; Wang, X.; Cao, H. Multi-Frame Vibration MEMS Gyroscope Temperature Compensation Based on Combined GWO-VMD-TCN-LSTM Algorithm. Micromachines 2024, 15, 1379. [Google Scholar] [CrossRef]

- Rodriguez Mendoza, L.; O’Keefe, K. Wearable Multi-Sensor Positioning Prototype for Rowing Technique Evaluation. Sensors 2024, 24, 5280. [Google Scholar] [CrossRef] [PubMed]

- Yan, P.; Jiang, J.; Tan, H.; Zheng, Q.; Liu, J. High Precision Time Synchronization Strategy for Low-Cost Embedded GNSS/MEMS-IMU Integrated Navigation Module. IEEE Trans. Intell. Transp. Syst. 2024, 25, 14087–14099. [Google Scholar] [CrossRef]

| Rank | Journal | Publications |
|---|---|---|
| 1 | Sensors | 493 |
| 2 | IEEE Sensors Journal | 226 |
| 3 | Remote Sensing | 209 |
| 4 | IEEE Access | 161 |
| 5 | IEEE Transactions on Instrumentation and Measurement | 138 |
| 6 | GPS Solutions | 114 |
| 7 | IEEE Transactions on Intelligent Transportation Systems | 104 |
| 8 | Journal of Navigation | 100 |
| 9 | IEEE Robotics and Automation Letters | 99 |
| 10 | Measurement Science and Technology | 86 |
| 11 | IEEE Transactions on Vehicular Technology | 76 |
| 12 | Measurement | 70 |
| 13 | Applied Sciences Basel | 68 |
| 14 | IEEE Transactions on Aerospace and Electronic Systems | 65 |
| 15 | Journal of Field Robotics | 55 |

| Rank | Author | Total Publications |
|---|---|---|
| 1 | Xiaoji Niu | 84 |
| 2 | Aboelmagd Noureldin | 65 |
| 3 | Naser El-Sheimy | 49 |
| 4 | Li-Ta Hsu | 48 |
| 5 | Xingxing Li | 37 |
| 6 | Jingnan Liu | 35 |
| 7 | Kai-Wei Chiang | 35 |
| 8 | Jian Wang | 33 |
| 9 | Quan Zhang | 33 |
| 10 | Shengyu Li | 31 |
| 11 | Tisheng Zhang | 30 |
| 12 | Yuxuan Zhou | 29 |
| 13 | Weisong Wen | 29 |
| 14 | Zhouzheng Gao | 28 |
| 15 | Hongping Zhang | 28 |

| Rank | Author | Citations |
|---|---|---|
| 1 | Shaojie Shen | 3314 |
| 2 | Xiaoji Niu | 2163 |
| 3 | Aboelmagd Noureldin | 2095 |
| 4 | Davide Scaramuzza | 1621 |
| 5 | Naser El-Sheimy | 1418 |
| 6 | Yongmin Zhong | 1264 |
| 7 | Li-Ta Hsu | 1239 |
| 8 | Stergios I. Roumeliotis | 1218 |
| 9 | Wei Xu | 1104 |
| 10 | Fu Zhang | 1104 |
| 11 | Anastasios I. Mourikis | 1088 |
| 12 | Gaoge Hu | 1067 |
| 13 | Guoquan Huang | 910 |
| 14 | Kai-Wei Chiang | 863 |
| 15 | Jinling Wang | 860 |

| Rank | Affiliation | Articles |
|---|---|---|
| 1 | Wuhan University | 262 |
| 2 | Beihang University | 199 |
| 3 | University of Calgary | 157 |
| 4 | National University of Defense Technology | 126 |
| 5 | Southeast University | 116 |
| 6 | Chinese Academy of Sciences | 106 |
| 7 | Northwestern Polytechnical University | 103 |
| 8 | Harbin Engineering University | 98 |
| 9 | Nanjing University of Aeronautics and Astronautics | 92 |
| 10 | The Hong Kong Polytechnic University | 78 |
| 11 | Tsinghua University | 77 |
| 12 | Beijing Institute of Technology | 72 |
| 13 | China University of Mining and Technology | 71 |
| 14 | Shanghai Jiao Tong University | 71 |
| 15 | Royal Military College of Canada | 63 |

| Rank | Title | First Author | Year |
|---|---|---|---|
| 1 | Vision Meets Robotics: The KITTI Dataset | Andreas Geiger | 2013 |
| 2 | VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator | Tong Qin | 2018 |
| 3 | ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual-Inertial, and Multimap SLAM | Carlos Campos | 2021 |
| 4 | AUV Navigation and Localization: A Review | Liam Paull | 2014 |
| 5 | On-Manifold Pre-integration for Real-Time Visual–Inertial Odometry | Christian Forster | 2017 |
| 6 | High-Precision, Consistent EKF-Based Visual-Inertial Odometry | Mingyang Li | 2013 |
| 7 | Visual-Inertial Monocular SLAM With Map Reuse | R. Mur-Artal | 2017 |
| 8 | FAST-LIO2: Fast Direct LiDAR-Inertial Odometry | Wei Xu | 2022 |
| 9 | In-Car Positioning and Navigation Technologies-A Survey | Isaac Skog | 2009 |
| 10 | Performance Enhancement of MEMS-Based INS/GPS Integration for Low-Cost Navigation Applications | Aboelmagd Noureldin | 2009 |

| Sensor Type | Algorithm Name | Fusion Type | Algorithm Type | Features |
|---|---|---|---|---|
| GNSS/INS | KF-GINS | LC | EKF | Adopts optimized Bayesian methods with high robustness; effectively processes noise, outliers, and uncertainties. |
| GNSS/INS | OB_GINS | LC | Graph Optimization | Optimized Bayesian approach; enhances robustness against noise, outliers, and uncertainties. |
| GNSS/INS | GINav | LC/TC | EKF | Supports real-time and postprocessing applications; flexible sensor configurations and an extensible framework for advanced filtering and other sensor integrations. |
| GNSS/INS | Ignav | LC/TC | Filtering | Modular design, adaptable to various application scenarios; high flexibility. |
| INS/visual | ROVIO | LC | IEKF | High real-time performance and robustness; suitable for fast-moving platforms. |
| INS/visual | MSCKF | TC | KF | Suitable for embedded platforms; lightweight real-time navigation; suppresses the effects of motion blur and lighting changes. |
| INS/visual | MSCEqF | TC | Equivariant Filtering | Integrates multiple state variables with self-calibration capabilities; applies group theory for equivariant design, ensuring improved linearization and internal consistency. |
| INS/visual | R-VIO | TC | EKF | Uses a moving local coordinate frame for state estimation; operates from any initial posture without requiring alignment with the global gravity direction. |
| INS/visual | MIMC-VINS | TC | Multistate Constrained Kalman Filter | Fuses data from multiple uncalibrated cameras and IMUs; ensures seamless 3D motion tracking; incorporates on-manifold state interpolation and online calibration for spatiotemporal and intrinsic parameters. |
| INS/visual | VINS-Mono | TC | Graph Optimization | Monocular vision and inertial fusion; preintegration and partial marginalization techniques for large-scale graph optimization. |
| INS/visual | OKVIS | TC | Graph Optimization | Keyframe-based global pose estimation; handles scale uncertainty; suitable for complex dynamic environments. |
| INS/visual | StructVIO | TC | Graph Optimization | Structured line features with iterative graph optimization; improves accuracy in weak and repetitive texture environments. |
| INS/visual | ORB-SLAM3 | TC | Graph Optimization | Multisensor support (monocular, stereo, RGB-D); tightly coupled visual and IMU fusion; multimap system; excellent positioning accuracy and robustness. |
| INS/visual | RD-VIO | TC | Graph Optimization | Utilizes IMU-PARSAC for robust keypoint detection and matching; employs deferred triangulation to handle pure rotation; enhances performance in dynamic environments. |
| INS/visual | DFF-VIO | TC | Graph Optimization | Utilizes dynamic feature matching; handles dynamic environments; improves tracking accuracy and robustness, especially for moving objects. |
| INS/visual | DynaVIO | TC | Graph Optimization | Identifies and rejects dynamic features; uses dynamic probability propagation for robust pose estimation. |
| INS/visual | DeepVIO | TC | Deep Learning (LSTM + CNN + Fusion Network) | Combines 2D optical flow and IMU data; self-supervised learning for trajectory estimation; robust to calibration errors and missing data. |
| INS/visual | VIFT | TC | Deep Learning (Transformer-Based) | Uses attention mechanisms for pose estimation; refines latent feature vectors temporally; addresses data imbalance and rotation learning in SE(3); suitable for monocular VIO systems in autonomous driving and robotics. |
| GNSS/INS/visual | IC-GVINS | GNSS LC | Factor Graph Optimization | Adapted to complex environments and sensor failures. |
| GNSS/INS/visual | InGVIO | GNSS TC | IEKF | High precision and efficient computation with keyframe marginalization; robust performance. |
| GNSS/INS/visual | GICI-LIB | GNSS LC/TC | Factor Graph Optimization | The first open-source integrated navigation library; multifrequency processing and optimized RTK and PPP algorithms for high-precision fusion navigation. |
| GNSS/INS/visual | GVINS | GNSS TC | Graph Optimization | Accurate and robust 3D navigation and positioning; suitable for high-precision and high-robustness requirements. |
| GNSS/INS/visual | Sky-GVINS | GNSS TC | Graph Optimization | Optimized for UAVs; high-precision, low-latency 3D positioning and attitude estimation. |
| GNSS/INS/visual/LiDAR | GVIL | TC | Graph Optimization | Integrates multisensor information for high-precision, comprehensive 3D perception and positioning; suitable for complex environments and autonomous driving. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wei, J.; Song, M.; Yuan, Y. High Precision Navigation and Positioning for Multisource Sensors Based on Bibliometric and Contextual Analysis. Remote Sens. 2025, 17, 1136. https://doi.org/10.3390/rs17071136
