Spatiotemporal Fusion of Multi-Temporal MODIS and Landsat-8/9 Imagery for Enhanced Daily 30 m NDVI Reconstruction: A Case Study of the Shiyang River Basin Cropland (2022)

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Regions

2.2. Input Remote Sensing Data

2.2.1. Land Use/Land Cover Data

2.2.2. Surface Reflectance Data

2.3. Data Preprocessing

2.3.1. MODIS Products

2.3.2. Landsat Products

2.4. Spatial and Temporal Fusion

2.5. Calculation of Vegetation Indexes

2.6. Validation

3. Results

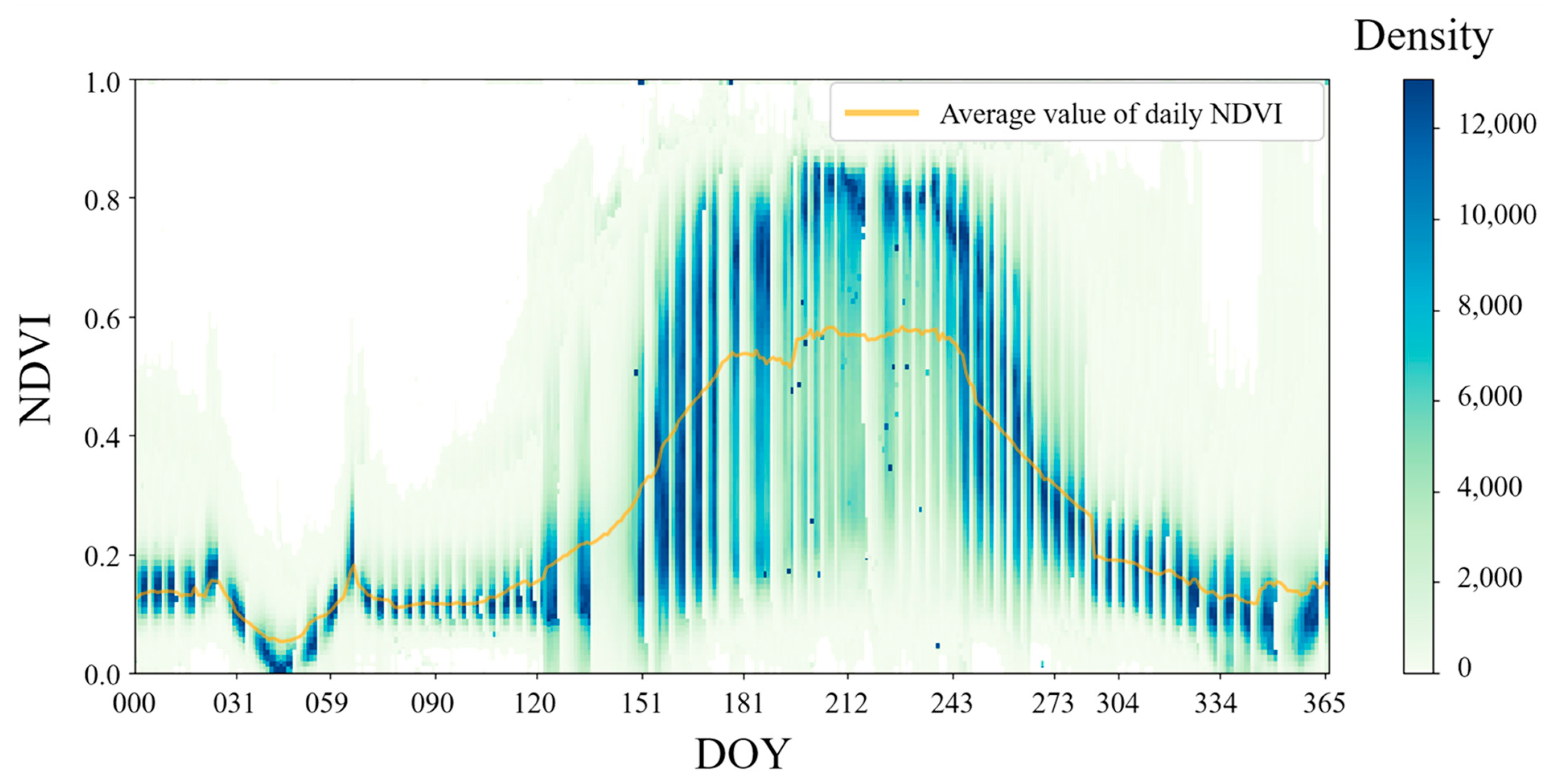

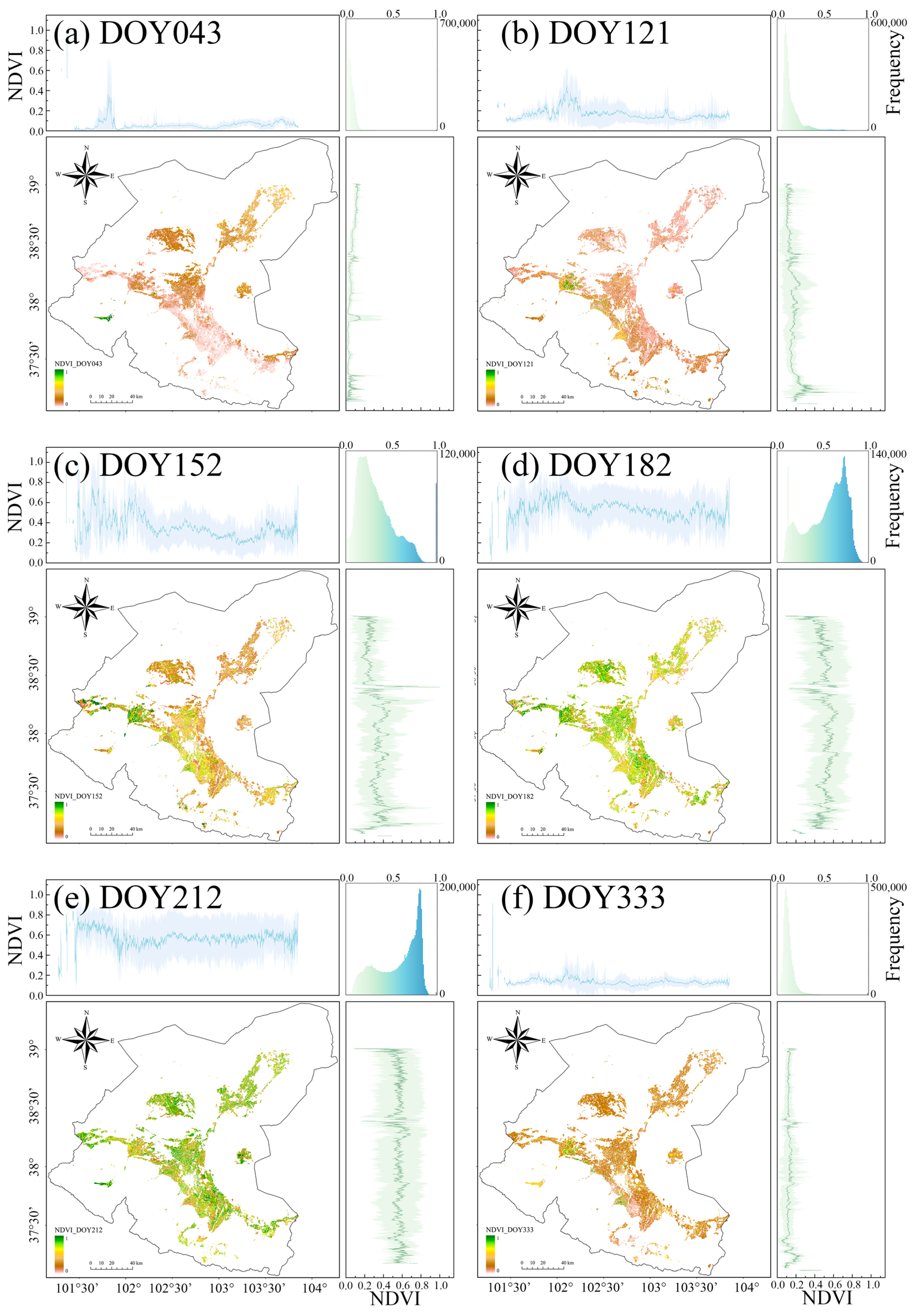

3.1. Regional Reconstruction NDVI

3.2. Quantitative Evaluation of Reconstructed NDVI

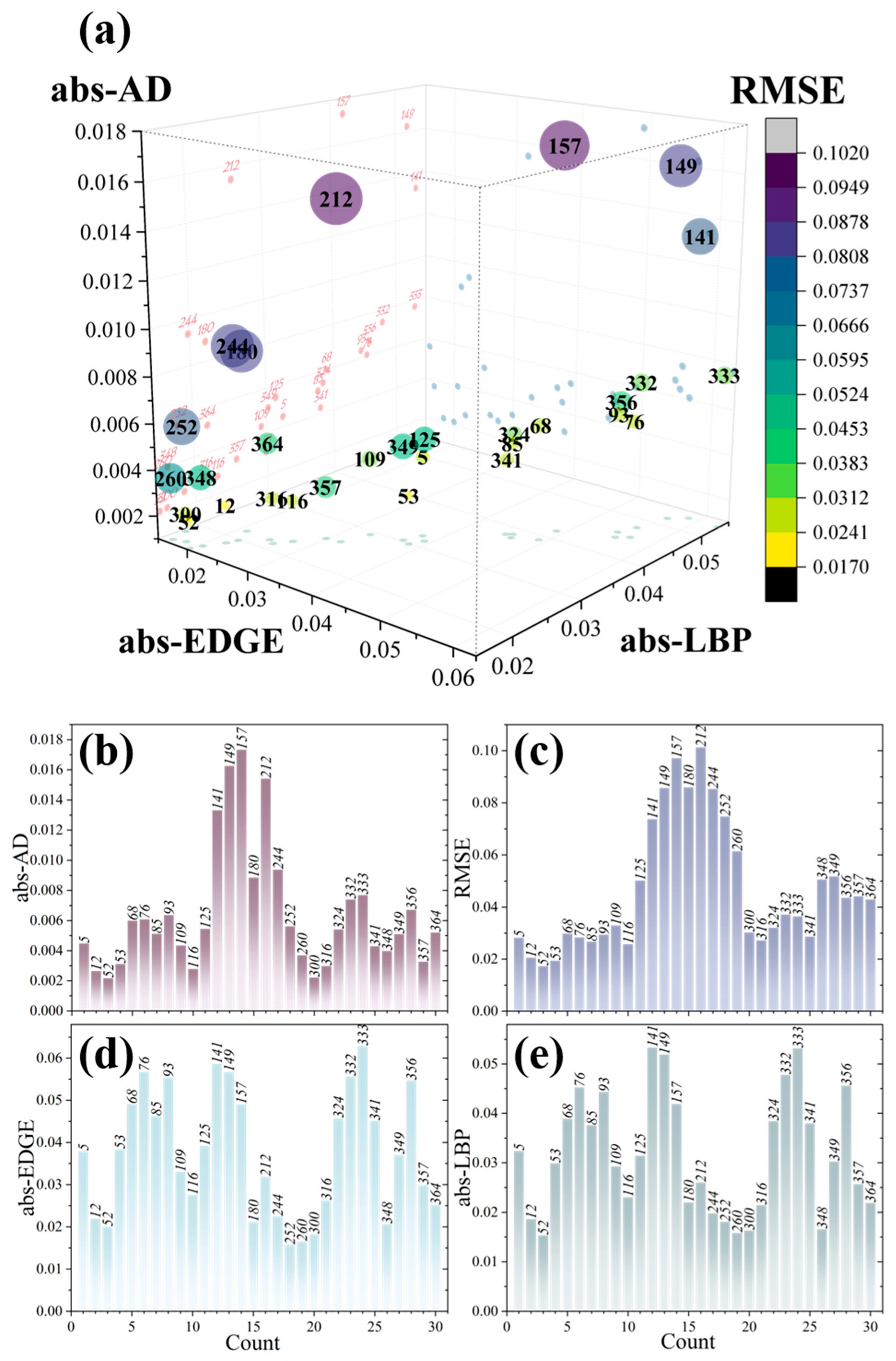

3.2.1. Evaluation Based on 3D-APA Diagram

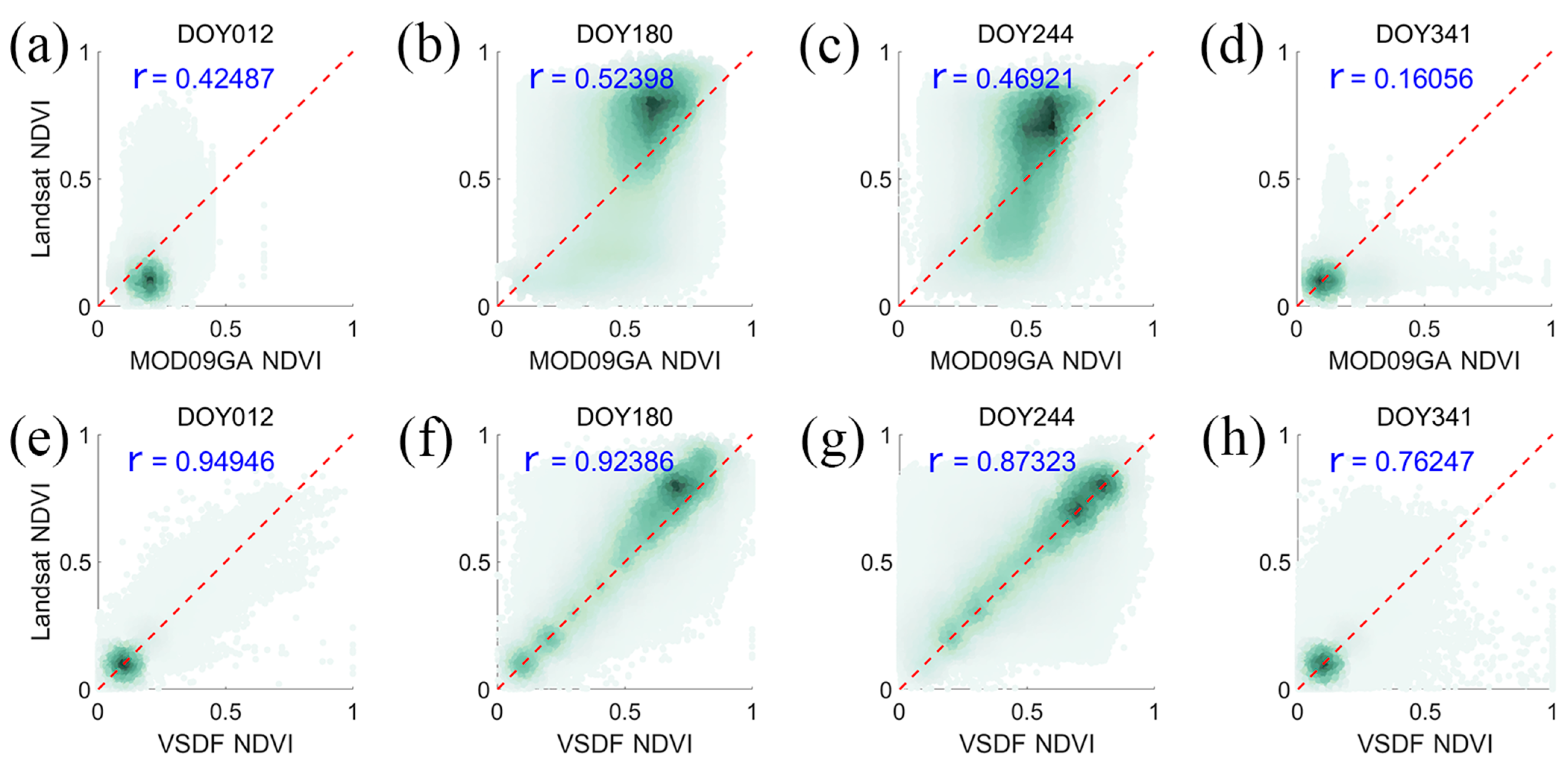

3.2.2. Comparison with Original MOD09GA NDVI

3.3. Daily Temporal–Spatial Distribution

4. Discussion

4.1. Optimized Image Selection Strategy for NDVI Fusion

4.2. Enhanced Accuracy Assessment Through 3D-APA Analysis

4.3. Spatiotemporal Visualization of Crop Growth Dynamics

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Crop Type | Sowing (DOY) | Growth (DOY) | Maturity (DOY) | Harvest (DOY) |
|---|---|---|---|---|
| Corn | 121 | 166 | 237 | 273–304 |
| Wheat | 79 | 121 | 166 | 196–212 |
| Vegetables and potatoes | 121 | 140 | 166–273 | |
| Oil crops | 121 | 182 | 243 | 273–304 |
| Forage grass | 91/243 | 121 | 140–304 | |
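Read as day-of-year (DOY) values for 2022, the crop-calendar entries fall on round calendar dates. For example, DOY 121 is 1 May and DOY 273 is 30 September. The short Python sketch below assumes that DOY interpretation and that the calendar refers to 2022, a non-leap year. It converts the values with the standard library only. The helper name `doy_to_date` is hypothetical.

```python
from datetime import date, timedelta


def doy_to_date(doy: int, year: int = 2022) -> date:
    """Convert a day-of-year number to a calendar date.

    Assumption: crop-calendar values are DOY numbers, with DOY 1 = 1 January.
    """
    return date(year, 1, 1) + timedelta(days=doy - 1)


if __name__ == "__main__":
    # A few entries from the crop-calendar table, under the DOY assumption
    for label, doy in [("wheat sowing", 79), ("corn sowing", 121), ("corn maturity", 237)]:
        print(label, doy_to_date(doy).isoformat())
```

For the harvest ranges, convert both endpoints. Under this assumption, 273–304 spans 30 September to 31 October 2022.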

| Datasets | Bands | Wavelength (nm) | Spatial Resolution (m) | Revisit Period (days) |
|---|---|---|---|---|
| MODIS/061/MOD09GA | Red (1) | 620–670 | 500 | 1 |
| | NIR (2) | 841–876 | | |
| LANDSAT/LC08/C02/T1_L2, LANDSAT/LC09/C02/T1_L2 | Red (4) | 630–680 | 30 | 16 |
| | NIR (5) | 845–885 | | |
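NDVI is obtained from the Red and NIR bands listed above as NDVI = (NIR − Red)/(NIR + Red). The Python sketch below illustrates that calculation for both sensors. It makes two assumptions that are not taken from the paper. First, the inputs are raw integer values from the Earth Engine collections named in the table. Second, those values are converted to reflectance with the publicly documented product scale factors: 0.0001 for MOD09GA, and 0.0000275 with a −0.2 offset for Landsat Collection 2 Level-2. The helper names and the sample pixel values are hypothetical. This is not the authors' code.

```python
def modis_reflectance(dn: float) -> float:
    # Assumed MOD09GA surface-reflectance scale factor (no offset)
    return dn * 0.0001


def landsat_c2l2_reflectance(dn: float) -> float:
    # Assumed Landsat Collection 2 Level-2 surface-reflectance scale and offset
    return dn * 0.0000275 - 0.2


def ndvi(red: float, nir: float) -> float:
    """NDVI = (NIR - Red) / (NIR + Red); returns NaN if the denominator is zero."""
    denom = nir + red
    if denom == 0:
        return float("nan")
    return (nir - red) / denom


if __name__ == "__main__":
    # Hypothetical raw values for a vegetated cropland pixel
    print(round(ndvi(modis_reflectance(500), modis_reflectance(3500)), 3))  # 0.75
    print(round(ndvi(landsat_c2l2_reflectance(9000), landsat_c2l2_reflectance(20000)), 3))
```

The conversion step matters more for Landsat than for MODIS. A pure multiplicative scale factor cancels in the NDVI ratio, so MOD09GA NDVI computed from raw values is identical. The −0.2 Landsat offset does not cancel, so Landsat values must be converted to reflectance before the ratio is taken.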

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mu, P.; Tian, F. Spatiotemporal Fusion of Multi-Temporal MODIS and Landsat-8/9 Imagery for Enhanced Daily 30 m NDVI Reconstruction: A Case Study of the Shiyang River Basin Cropland (2022). Remote Sens. 2025, 17, 1510. https://doi.org/10.3390/rs17091510
