The Role of Radiomics in the Prediction of Clinically Significant Prostate Cancer in the PI-RADS v2 and v2.1 Era: A Systematic Review

Abstract

Simple Summary


1. Introduction

2. Evidence Acquisition

2.1. Literature Search

2.2. Eligibility Criteria

2.3. Quality Assessment

2.4. Artificial Intelligence Quality Assessment

2.5. Data Collection

3. Evidence Synthesis

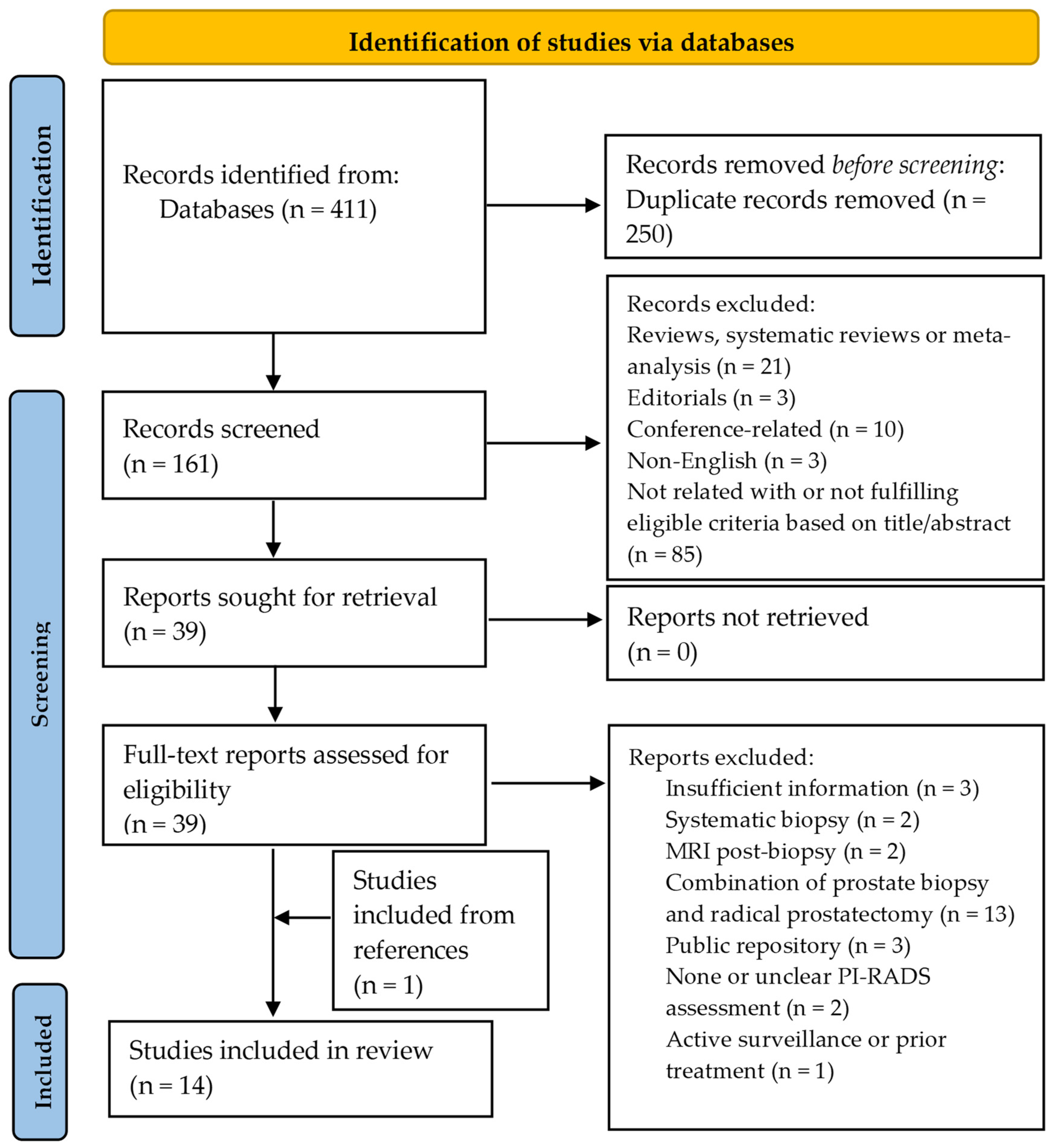

3.1. Study Selection

3.2. QUADAS-2 Risk of Bias Assessment

3.3. Quality Assessment Based on RQS and CLAIM

3.4. Study Characteristics

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J. Clin. 2021, 71, 209–249.

2. Epstein, J.I.; Egevad, L.; Amin, M.B.; Delahunt, B.; Srigley, J.R.; Humphrey, P.A.; Grading Committee. The 2014 International Society of Urological Pathology (ISUP) Consensus Conference on Gleason Grading of Prostatic Carcinoma: Definition of Grading Patterns and Proposal for a New Grading System. Am. J. Surg. Pathol. 2016, 40, 244–252.

3. Van Poppel, H.; Roobol, M.J.; Chandran, A. Early Detection of Prostate Cancer in the European Union: Combining Forces with PRAISE-U. Eur. Urol. 2023, 84, 519–522.

4. Van Poppel, H.; Hogenhout, R.; Albers, P.; van den Bergh, R.C.N.; Barentsz, J.O.; Roobol, M.J. A European Model for an Organised Risk-stratified Early Detection Programme for Prostate Cancer. Eur. Urol. Oncol. 2021, 4, 731–739.

5. Van Poppel, H.; Albreht, T.; Basu, P.; Hogenhout, R.; Collen, S.; Roobol, M. Serum PSA-based Early Detection of Prostate Cancer in Europe and Globally: Past, Present and Future. Nat. Rev. Urol. 2022, 19, 562–572.

6. Turkbey, B.; Rosenkrantz, A.B.; Haider, M.A.; Padhani, A.R.; Villeirs, G.; Macura, K.J.; Tempany, C.M.; Choyke, P.L.; Cornud, F.; Margolis, D.J.; et al. Prostate Imaging Reporting and Data System Version 2.1: 2019 Update of Prostate Imaging Reporting and Data System Version 2. Eur. Urol. 2019, 76, 340–351.

7. Sathianathen, N.J.; Omer, A.; Harriss, E.; Davies, L.; Kasivisvanathan, V.; Punwani, S.; Moore, C.M.; Kastner, C.; Barrett, T.; Van Den Bergh, R.C.; et al. Negative Predictive Value of Multiparametric Magnetic Resonance Imaging in the Detection of Clinically Significant Prostate Cancer in the Prostate Imaging Reporting and Data System Era: A Systematic Review and Meta-analysis. Eur. Urol. 2020, 78, 402–414.

8. Oerther, B.; Engel, H.; Bamberg, F.; Sigle, A.; Gratzke, C.; Benndorf, M. Cancer Detection Rates of the PI-RADS v2.1 Assessment Categories: Systematic Review and Meta-analysis on Lesion Level and Patient Level. Prostate Cancer Prostatic Dis. 2022, 25, 256–263.

9. Drost, F.H.; Osses, D.; Nieboer, D.; Bangma, C.H.; Steyerberg, E.W.; Roobol, M.J.; Schoots, I.G. Prostate Magnetic Resonance Imaging, with or Without Magnetic Resonance Imaging-targeted Biopsy, and Systematic Biopsy for Detecting Prostate Cancer: A Cochrane Systematic Review and Meta-analysis. Eur. Urol. 2020, 77, 78–94.

10. Mottet, N.; van den Bergh, R.C.N.; Briers, E.; Van den Broeck, T.; Cumberbatch, M.G.; De Santis, M.; Fanti, S.; Fossati, N.; Gandaglia, G.; Gillessen, S.; et al. EAU-EANM-ESTRO-ESUR-SIOG Guidelines on Prostate Cancer-2020 Update. Part 1: Screening, Diagnosis, and Local Treatment with Curative Intent. Eur. Urol. 2021, 79, 243–262.

11. Purysko, A.S.; Baroni, R.H.; Giganti, F.; Costa, D.; Renard-Penna, R.; Kim, C.K.; Raman, S.S. PI-RADS Version 2.1: A Critical Review, From the AJR Special Series on Radiology Reporting and Data Systems. AJR Am. J. Roentgenol. 2021, 216, 20–32.

12. Bhayana, R.; O’Shea, A.; Anderson, M.A.; Bradley, W.R.; Gottumukkala, R.V.; Mojtahed, A.; Pierce, T.T.; Harisinghani, M. PI-RADS Versions 2 and 2.1: Interobserver Agreement and Diagnostic Performance in Peripheral and Transition Zone Lesions Among Six Radiologists. AJR Am. J. Roentgenol. 2021, 217, 141–151.

13. Smith, C.P.; Harmon, S.A.; Barrett, T.; Bittencourt, L.K.; Law, Y.M.; Shebel, H.; An, J.Y.; Czarniecki, M.; Mehralivand, S.; Coskun, M.; et al. Intra- and Interreader Reproducibility of PI-RADSv2: A Multireader Study. J. Magn. Reson. Imaging 2019, 49, 1694–1703.

14. Osses, D.F.; Roobol, M.J.; Schoots, I.G. Prediction Medicine: Biomarkers, Risk Calculators and Magnetic Resonance Imaging as Risk Stratification Tools in Prostate Cancer Diagnosis. Int. J. Mol. Sci. 2019, 20, 1637.

15. Lambin, P.; Leijenaar, R.T.H.; Deist, T.M.; Peerlings, J.; de Jong, E.E.C.; van Timmeren, J.; Sanduleanu, S.; Larue, R.T.H.M.; Even, A.J.G.; Jochems, A.; et al. Radiomics: The Bridge Between Medical Imaging and Personalized Medicine. Nat. Rev. Clin. Oncol. 2017, 14, 749–762.

16. Scapicchio, C.; Gabelloni, M.; Barucci, A.; Cioni, D.; Saba, L.; Neri, E. A Deep Look Into Radiomics. Radiol. Med. 2021, 126, 1296–1311.

17. Ferro, M.; de Cobelli, O.; Musi, G.; Del Giudice, F.; Carrieri, G.; Busetto, G.M.; Falagario, U.G.; Sciarra, A.; Maggi, M.; Crocetto, F.; et al. Radiomics in Prostate Cancer: An Up-to-Date Review. Ther. Adv. Urol. 2022, 14, 17562872221109020.

18. Cutaia, G.; La Tona, G.; Comelli, A.; Vernuccio, F.; Agnello, F.; Gagliardo, C.; Salvaggio, L.; Quartuccio, N.; Sturiale, L.; Stefano, A.; et al. Radiomics and Prostate MRI: Current Role and Future Applications. J. Imaging 2021, 7, 34.

19. Penzkofer, T.; Padhani, A.R.; Turkbey, B.; Haider, M.A.; Huisman, H.; Walz, J.; Salomon, G.; Schoots, I.G.; Richenberg, J.; Villeirs, G.; et al. ESUR/ESUI Position Paper: Developing Artificial Intelligence for Precision Diagnosis of Prostate Cancer Using Magnetic Resonance Imaging. Eur. Radiol. 2021, 31, 9567–9578.

20. Page, M.J.; Moher, D.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. PRISMA 2020 Explanation and Elaboration: Updated Guidance and Exemplars for Reporting Systematic Reviews. BMJ 2021, 372, n160.

21. Schardt, C.; Adams, M.B.; Owens, T.; Keitz, S.; Fontelo, P. Utilization of the PICO Framework to Improve Searching PubMed for Clinical Questions. BMC Med. Inform. Decis. Mak. 2007, 7, 16.

22. QUADAS-2 | Bristol Medical School: Population Health Sciences | University of Bristol. Available online: https://www.bristol.ac.uk/population-health-sciences/projects/quadas/quadas-2/ (accessed on 1 June 2024).

23. Mongan, J.; Moy, L.; Kahn, C.E., Jr. Checklist for Artificial Intelligence in Medical Imaging (CLAIM): A Guide for Authors and Reviewers. Radiol. Artif. Intell. 2020, 2, e200029.

24. Dominguez, I.; Rios-Ibacache, O.; Caprile, P.; Gonzalez, J.; San Francisco, I.F.; Besa, C. MRI-Based Surrogate Imaging Markers of Aggressiveness in Prostate Cancer: Development of a Machine Learning Model Based on Radiomic Features. Diagnostics 2023, 13, 2779.

25. Prata, F.; Anceschi, U.; Cordelli, E.; Faiella, E.; Civitella, A.; Tuzzolo, P.; Iannuzzi, A.; Ragusa, A.; Esperto, F.; Prata, S.M.; et al. Radiomic Machine-Learning Analysis of Multiparametric Magnetic Resonance Imaging in the Diagnosis of Clinically Significant Prostate Cancer: New Combination of Textural and Clinical Features. Curr. Oncol. 2023, 30, 2021–2031.

26. Jin, P.; Shen, J.; Yang, L.; Zhang, J.; Shen, A.; Bao, J.; Wang, X. Machine Learning-Based Radiomics Model to Predict Benign and Malignant PI-RADS v2.1 Category 3 Lesions: A Retrospective Multi-Center Study. BMC Med. Imaging 2023, 23, 47.

27. Hamm, C.A.; Baumgärtner, G.L.; Biessmann, F.; Beetz, N.L.; Hartenstein, A.; Savic, L.J.; Froböse, K.; Dräger, F.; Schallenberg, S.; Rudolph, M.; et al. Interactive Explainable Deep Learning Model Informs Prostate Cancer Diagnosis at MRI. Radiology 2023, 307, e222276.

28. Hong, S.; Kim, S.H.; Yoo, B.; Kim, J.Y. Deep Learning Algorithm for Tumor Segmentation and Discrimination of Clinically Significant Cancer in Patients with Prostate Cancer. Curr. Oncol. 2023, 30, 7275–7285.

29. Jing, G.; Xing, P.; Li, Z.; Ma, X.; Lu, H.; Shao, C.; Lu, Y.; Lu, J.; Shen, F. Prediction of Clinically Significant Prostate Cancer with a Multimodal MRI-Based Radiomics Nomogram. Front. Oncol. 2022, 12, 918830.

30. Zhu, L.; Gao, G.; Zhu, Y.; Han, C.; Liu, X.; Li, D.; Liu, W.; Wang, X.; Zhang, J.; Zhang, X.; et al. Fully Automated Detection and Localization of Clinically Significant Prostate Cancer on MR Images Using a Cascaded Convolutional Neural Network. Front. Oncol. 2022, 12, 958065.

31. Jiang, K.W.; Song, Y.; Hou, Y.; Zhi, R.; Zhang, J.; Bao, M.L.; Li, H.; Yan, X.; Xi, W.; Zhang, C.X.; et al. Performance of Artificial Intelligence-Aided Diagnosis System for Clinically Significant Prostate Cancer with MRI: A Diagnostic Comparison Study. J. Magn. Reson. Imaging 2023, 57, 1352–1364.

32. Liu, Y.; Zheng, H.; Liang, Z.; Miao, Q.; Brisbane, W.G.; Marks, L.S.; Raman, S.S.; Reiter, R.E.; Yang, G.; Sung, K. Textured-Based Deep Learning in Prostate Cancer Classification with 3T Multiparametric MRI: Comparison with PI-RADS-Based Classification. Diagnostics 2021, 11, 1785.

33. Lim, C.S.; Abreu-Gomez, J.; Thornhill, R.; James, N.; Al Kindi, A.; Lim, A.S.; Schieda, N. Utility of Machine Learning of Apparent Diffusion Coefficient (ADC) and T2-Weighted (T2W) Radiomic Features in PI-RADS Version 2.1 Category 3 Lesions to Predict Prostate Cancer Diagnosis. Abdom. Radiol. 2021, 46, 5647–5658.

34. Hectors, S.J.; Chen, C.; Chen, J.; Wang, J.; Gordon, S.; Yu, M.; Al Hussein Al Awamlh, B.; Sabuncu, M.R.; Margolis, D.J.A.; Hu, J.C. Magnetic Resonance Imaging Radiomics-Based Machine Learning Prediction of Clinically Significant Prostate Cancer in Equivocal PI-RADS 3 Lesions. J. Magn. Reson. Imaging 2021, 54, 1466–1473.

35. Castillo, T.J.M.; Starmans, M.P.A.; Arif, M.; Niessen, W.J.; Klein, S.; Bangma, C.H.; Schoots, I.G.; Veenland, J.F. A Multi-Center, Multi-Vendor Study to Evaluate the Generalizability of a Radiomics Model for Classifying Prostate Cancer: High Grade vs. Low Grade. Diagnostics 2021, 11, 369.

36. Li, M.; Chen, T.; Zhao, W.; Wei, C.; Li, X.; Duan, S.; Ji, L.; Lu, Z.; Shen, J. Radiomics Prediction Model for the Improved Diagnosis of Clinically Significant Prostate Cancer on Biparametric MRI. Quant. Imaging Med. Surg. 2020, 10, 368–379.

37. Zhong, X.; Cao, R.; Shakeri, S.; Scalzo, F.; Lee, Y.; Enzmann, D.R.; Wu, H.H.; Raman, S.S.; Sung, K. Deep Transfer Learning-Based Prostate Cancer Classification Using 3 Tesla Multi-Parametric MRI. Abdom. Radiol. 2019, 44, 2030–2039.

38. Litjens, G.; Debats, O.; Barentsz, J.; Karssemeijer, N.; Huisman, H. SPIE-AAPM PROSTATEx Challenge Data (Version 2) [dataset]. Cancer Imaging Arch. 2017.

39. Castillo, T.J.M.; Arif, M.; Niessen, W.J.; Schoots, I.G.; Veenland, J.F. Automated Classification of Significant Prostate Cancer on MRI: A Systematic Review on the Performance of Machine Learning Applications. Cancers 2020, 12, 1606.

40. Cuocolo, R.; Cipullo, M.B.; Stanzione, A.; Romeo, V.; Green, R.; Cantoni, V.; Ponsiglione, A.; Ugga, L.; Imbriaco, M. Machine Learning for the Identification of Clinically Significant Prostate Cancer on MRI: A Meta-Analysis. Eur. Radiol. 2020, 30, 6877–6887.

41. Hosseinzadeh, M.; Saha, A.; Brand, P.; Slootweg, I.; de Rooij, M.; Huisman, H. Deep Learning-Assisted Prostate Cancer Detection on Bi-Parametric MRI: Minimum Training Data Size Requirements and Effect of Prior Knowledge. Eur. Radiol. 2022, 32, 2224–2234.

42. Sushentsev, N.; Moreira Da Silva, N.; Yeung, M.; Barrett, T.; Sala, E.; Roberts, M.; Rundo, L. Comparative Performance of Fully-Automated and Semi-Automated Artificial Intelligence Methods for the Detection of Clinically Significant Prostate Cancer on MRI: A Systematic Review. Insights Imaging 2022, 13, 59.

43. Syer, T.; Mehta, P.; Antonelli, M.; Mallelet, S.; Atkinson, D.; Ourselin, S.; Punwani, S. Artificial Intelligence Compared to Radiologists for the Initial Diagnosis of Prostate Cancer on Magnetic Resonance Imaging: A Systematic Review and Recommendations for Future Studies. Cancers 2021, 13, 3318.

44. Twilt, J.J.; van Leeuwen, K.G.; Huisman, H.J.; Fütterer, J.J.; de Rooij, M. Artificial Intelligence Based Algorithms for Prostate Cancer Classification and Detection on Magnetic Resonance Imaging: A Narrative Review. Diagnostics 2021, 11, 959.

45. Rosenkrantz, A.B.; Taneja, S.S. Radiologist, Be Aware: Ten Pitfalls That Confound the Interpretation of Multiparametric Prostate MRI. AJR Am. J. Roentgenol. 2014, 202, 109–120.

46. Tewes, S.; Mokov, N.; Hartung, D.; Schick, V.; Peters, I.; Schedl, P.; Pertschy, S.; Wacker, F.; Voshage, G.; Hueper, K. Standardized Reporting of Prostate MRI: Comparison of the Prostate Imaging Reporting and Data System (PI-RADS) Version 1 and Version 2. PLoS ONE 2016, 11, e0162879.

47. Rudolph, M.M.; Baur, A.D.J.; Cash, H.; Haas, M.; Mahjoub, S.; Hartenstein, A.; Hamm, C.A.; Beetz, N.L.; Konietschke, F.; Hamm, B.; et al. Diagnostic Performance of PI-RADS Version 2.1 Compared to Version 2.0 for Detection of Peripheral and Transition Zone Prostate Cancer. Sci. Rep. 2020, 10, 15982.

48. Kasivisvanathan, V.; Rannikko, A.S.; Borghi, M.; Panebianco, V.; Mynderse, L.A.; Vaarala, M.H.; Briganti, A.; Budäus, L.; Hellawell, G.; PRECISION Study Group Collaborators; et al. MRI-Targeted or Standard Biopsy for Prostate-Cancer Diagnosis. N. Engl. J. Med. 2018, 378, 1767–1777.

49. Goel, S.; Shoag, J.E.; Gross, M.D.; Al Hussein Al Awamlh, B.; Robinson, B.; Khani, F.; Baltich Nelson, B.; Margolis, D.J.; Hu, J.C. Concordance Between Biopsy and Radical Prostatectomy Pathology in the Era of Targeted Biopsy: A Systematic Review and Meta-Analysis. Eur. Urol. Oncol. 2020, 3, 10–20.

50. Sun, Z.; Wu, P.; Cui, Y.; Liu, X.; Wang, K.; Gao, G.; Wang, H.; Zhang, X.; Wang, X. Deep-Learning Models for Detection and Localization of Visible Clinically Significant Prostate Cancer on Multi-Parametric MRI. J. Magn. Reson. Imaging 2023, 58, 1067–1081.

51. Bonmatí, L.M.; Miguel, A.; Suárez, A.; Aznar, M.; Beregi, J.P.; Fournier, L.; Neri, E.; Laghi, A.; França, M.; Sardanelli, F.; et al. CHAIMELEON Project: Creation of a Pan-European Repository of Health Imaging Data for the Development of AI-Powered Cancer Management Tools. Front. Oncol. 2022, 12, 742701.

52. Leevy, J.L.; Khoshgoftaar, T.M.; Bauder, R.A.; Seliya, N. A Survey on Addressing High-Class Imbalance in Big Data. J. Big Data 2018, 5, 42.

53. Varoquaux, G.; Cheplygina, V. Machine Learning for Medical Imaging: Methodological Failures and Recommendations for the Future. NPJ Digit. Med. 2022, 5, 48.

54. Peng, Y.; Jiang, Y.; Antic, T.; Giger, M.L.; Eggener, S.E.; Oto, A. Validation of Quantitative Analysis of Multiparametric Prostate MR Images for Prostate Cancer Detection and Aggressiveness Assessment: A Cross-Imager Study. Radiology 2014, 271, 461–471.

55. Transin, S.; Souchon, R.; Gonindard-Melodelima, C.; de Rozario, R.; Walker, P.; Funes de la Vega, M.; Loffroy, R.; Cormier, L.; Rouvière, O. Computer-Aided Diagnosis System for Characterizing ISUP Grade ≥2 Prostate Cancers at Multiparametric MRI: A Cross-Vendor Evaluation. Diagn. Interv. Imaging 2019, 100, 801–811.

56. Ozkan, T.A.; Eruyar, A.T.; Cebeci, O.O.; Memik, O.; Ozcan, L.; Kuskonmaz, I. Interobserver Variability in Gleason Histological Grading of Prostate Cancer. Scand. J. Urol. 2016, 50, 420–424.

57. Schwier, M.; van Griethuysen, J.; Vangel, M.G.; Pieper, S.; Peled, S.; Tempany, C.; Aerts, H.J.W.L.; Kikinis, R.; Fennessy, F.M.; Fedorov, A. Repeatability of Multiparametric Prostate MRI Radiomics Features. Sci. Rep. 2019, 9, 9441.

58. Lee, J.; Steinmann, A.; Ding, Y.; Lee, H.; Owens, C.; Wang, J.; Yang, J.; Followill, D.; Ger, R.; MacKin, D.; et al. Radiomics Feature Robustness as Measured Using an MRI Phantom. Sci. Rep. 2021, 11, 3973.

59. Rosenkrantz, A.B.; Hindman, N.; Lim, R.P.; Das, K.; Babb, J.S.; Mussi, T.C.; Taneja, S.S. Diffusion-Weighted Imaging of the Prostate: Comparison of b1000 and b2000 Image Sets for Index Lesion Detection. J. Magn. Reson. Imaging 2013, 38, 694–700.

60. Peerlings, J.; Woodruff, H.C.; Winfield, J.M.; Ibrahim, A.; Van Beers, B.E.; Heerschap, A.; Jackson, A.; Wildberger, J.E.; Mottaghy, F.M.; DeSouza, N.M.; et al. Stability of Radiomics Features in Apparent Diffusion Coefficient Maps from a Multi-Centre Test-Retest Trial. Sci. Rep. 2019, 9, 4800.

61. Bluemke, D.A.; Moy, L.; Bredella, M.A.; Ertl-Wagner, B.B.; Fowler, K.J.; Goh, V.J.; Halpern, E.F.; Hess, C.P.; Schiebler, M.L.; Weiss, C.R. Assessing Radiology Research on Artificial Intelligence: A Brief Guide for Authors, Reviewers, and Readers-From the Radiology Editorial Board. Radiology 2020, 294, 487–489.

62. Park, S.H.; Han, K. Methodologic Guide for Evaluating Clinical Performance and Effect of Artificial Intelligence Technology for Medical Diagnosis and Prediction. Radiology 2018, 286, 800–809.

63. Lin, Y.; Yilmaz, E.C.; Belue, M.J.; Harmon, S.A.; Tetreault, J.; Phelps, T.E.; Merriman, K.M.; Hazen, L.; Garcia, C.; Yang, D.; et al. Evaluation of a Cascaded Deep Learning-Based Algorithm for Prostate Lesion Detection at Biparametric MRI. Radiology 2024, 311, e230750.

64. Jaouen, T.; Souchon, R.; Moldovan, P.C.; Bratan, F.; Duran, A.; Hoang-Dinh, A.; Di Franco, F.; Debeer, S.; Dubreuil-Chambardel, M.; Arfi, N.; et al. Characterization of High-Grade Prostate Cancer at Multiparametric MRI Using a Radiomic-Based Computer-Aided Diagnosis System as Standalone and Second Reader. Diagn. Interv. Imaging 2023, 104, 465–476.

65. Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A Comparison of Deep Learning Performance Against Health-Care Professionals in Detecting Diseases from Medical Imaging: A Systematic Review and Meta-Analysis. Lancet Digit. Health 2019, 1, e271–e297.

| Reference, Year | Data Source, n, Country | Dataset Year(s) | csPCa, n (proportion) | PZ, n (proportion) | MRI Vendor, Tesla | PI-RADS Lesions | Reference Standard, MRI to Procedure | Biopsy Technique |
|---|---|---|---|---|---|---|---|---|
| Dominguez et al. 2023 [24] | Single-center, 86, Chile | 2017–2021 | 66 (0.77) | 81 (0.94) | Philips, 3 | ≥3 | PB, NR | MRI/US fusion, TRUS |
| Prata et al. 2023 [25] | Single-center, 91, Italy | 2019–2020 | 39 (0.43) | NR | Siemens, 1.5 | ≥3 | PB, within 4 weeks | MRI/US fusion, TRUS |
| Jin et al. 2023 [26] | Multicenter, 463, China | 2018–2019 | 100 (0.22) | 216 (0.47) | Siemens/Philips, 3 | 3 | PB, within 4 weeks | MRI/US fusion, TRUS |
| Hamm et al. 2023 a [27] | Single-center, 1224, Germany | 2012–2017 | 595 (0.49) | 1935 (0.59) b | Siemens, 3 | ≥1 | PB, within 6 months | NR |
| Hong et al. 2023 a [28] | Single-center, 171, Korea | 2018–2022 | 40 (0.37) | 81 (0.47) | Multivendor, 3 | ≥3 | RP, NR | NA |
| Jing et al. 2022 [29] | Multicenter, 389, China | 2016–2021 | 270 (0.69) | 190 (0.49) | GE/UI, 3 | ≥2 | RP, within 12 weeks | NA |
| Zhu et al. 2022 [30] | Single-center, 347, China | 2017–2020 | 235 (0.68) | 212 (0.68) c | GE, 3 | NR | PB, within 12 weeks | CT, TRUS |
| Jiang et al. 2022 a [31] | Single-center, 1230, China | 2012–2019 | 856 (0.63) b | 853 (0.63) b | Siemens/UI, 3 | ≥1 | PB, within 4 weeks | MRI/US fusion, TRUS |
| Liu et al. 2021 [32] | Single-center, 402, USA | 2010–2018 | 303 (0.75) b | 364 (0.78) b | NR, 3 | ≥3 | RP, NR | NA |
| Lim et al. 2021 [33] | Multicenter, 158, Canada | 2015–2018 | 29 (0.18) b | 79 (0.49) b | Siemens/GE, 3 | 3 | PB, NR | CT, TRUS |
| Hectors et al. 2021 [34] | Single-center, 240, USA | 2015–2020 | 28 (0.12) | NR | Siemens/GE, 3 | 3 | PB, within 12 months | MRI/US fusion, TRUS |
| Castillo et al. 2021 [35] | Multicenter, 107, Netherlands | 2011–2014 | 112 (0.55) b | 137 (0.67) b | GE/Siemens/Philips, 3/1.5 | ≥1 | RP, NR | NA |
| Li et al. 2020 [36] | Single-center, 381, China | 2014–2017 | 142 (0.37) | NR | Philips, 3 | NR | PB, NR | TRUS |
| Zhong et al. 2019 [37] | Single-center, 140, USA | 2010–2016 | 105 (0.49) b | NR | Siemens, 3 | NR | RP, NR | NA |
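
The csPCa column of the study-characteristics table is a prevalence, meaning the number of csPCa cases divided by cohort size. The minimal Python sketch below recomputes it for three rows without footnote markers (Dominguez, Jin, Hectors), assuming their denominator is the reported number of patients. It also reports the majority-to-minority class ratio, which is what the data-imbalance techniques listed in the methods table (e.g. SMOTE) try to offset. The helper names are illustrative and are not taken from any included study.

```python
# Sketch: recompute csPCa prevalence from counts in the study-characteristics table.
# Assumption: for rows without a footnote marker, the denominator is the patient count.
COHORTS = {
    "Dominguez et al. 2023": (66, 86),
    "Jin et al. 2023": (100, 463),
    "Hectors et al. 2021": (28, 240),
}


def prevalence(positives: int, total: int) -> float:
    """Fraction of the cohort with csPCa."""
    return positives / total


def imbalance_ratio(positives: int, total: int) -> float:
    """Majority-to-minority class ratio (always >= 1)."""
    negatives = total - positives
    return max(positives, negatives) / min(positives, negatives)


if __name__ == "__main__":
    for name, (pos, n) in COHORTS.items():
        print(f"{name}: prevalence {prevalence(pos, n):.2f}, "
              f"imbalance {imbalance_ratio(pos, n):.1f}:1")
```

Running it reproduces the 0.77, 0.22 and 0.12 shown in the table. It also shows that the Hectors cohort has roughly 7.6 non-csPCa cases per csPCa case, against about 3.3:1 in the Dominguez cohort.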

| Reference, Year | Sequences | Segmentation | Feature Extraction | Image Preprocessing | Data Imbalance Techniques, Data Augmentation | Feature Selection | Train/Test (%) b | Algorithm |
|---|---|---|---|---|---|---|---|---|
| Dominguez et al. 2023 [24] | T2, ADC | Lesion | Shape, FO, HTF | Not performed | NR | RFE | 80 (CV)/20 | LR |
| Prata et al. 2023 [25] | T2, ADC | Lesion | FO, HTF, BLP | NR | NR | Wrapper (RF) | CV | RF |
| Jin et al. 2023 [26] | T2, ADC, DWI (b2000) | Lesion | FO, HTF, wavelet features | IN, grey-level quantization, resampling, IR | SMOTE, NR | ANOVA | 70/30 | SVM |
| Hamm et al. 2023 [27] | T2, ADC, DWI (high-b value) | Lesion, prostate, PZ, TZ | Deep radiomics | IN, resampling, lesion cropping | NR, Yes | NA | 80 (CV)/20 | Visual Geometry Group Net-based CNN |
| Hong et al. 2023 [28] | ADC | Lesion, prostate | Deep radiomics | IN, resizing, prostate cropping, cut-off filtering | Image allocation, NR | NA | 80/20 | DenseNet 201 |
| Jing et al. 2022 [29] | T2, DWI (b1500) | Lesion, prostate | Shape, FO, HTF, higher-order features | IN, resampling | NR | Variance threshold algorithm, Select K-best, LASSO | 70/30 | LR |
| Zhu et al. 2022 [30] | T2, ADC | Lesion | Deep radiomics | IN, resampling, prostate cropping, IR | NR, Yes | NA | 60/40 | Res-UNet |
| Jiang et al. 2022 [31] | T2, DWI (b1500), ADC | Lesion, prostate | Deep radiomics | IN, resampling, prostate cropping, IR | NR, Yes | NA | 66.6/33.3 | Attention-Gated TrumpetNet |
| Liu et al. 2021 [32] | T2, ADC | Lesion | Deep radiomics | IN, lesion cropping, IR | NR | NA | 70/30 | 3D GLCM extractor + CNN |
| Lim et al. 2021 [33] | T2, ADC | Lesion | Shape, FO, HTF | NR | NR | Mann–Whitney U-test | CV | XGBoost |
| Hectors et al. 2021 [34] | T2 | Lesion | Shape, FO, HTF | IN, grey-level quantization, resampling | SMOTE, NR | RF | 80 (CV)/20 | RF, LR |
| Castillo et al. 2021 [35] | T2, DWI (highest-b value), ADC | Lesion | Shape, FO, HTF, higher-order features | Resampling | WORC Workflow a | WORC Workflow a | 80 (CV)/20 | WORC Workflow a |
| Li et al. 2020 [36] | T2, ADC | Lesion | FO, HTF | IN, grey-level quantization, resampling | NR | mRMR, LASSO | 60/40 | LR |
| Zhong et al. 2019 [37] | T2, ADC | Lesion | Deep radiomics | IN, resizing, lesion cropping | Not necessary, Yes | NA | 80/20 | ResNet with TL |
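
Two of the handcrafted-radiomics studies (Jin et al. and Hectors et al.) list SMOTE as their data-imbalance technique. The NumPy sketch below illustrates the basic idea. It is not the implementation those authors used. Each synthetic minority-class feature vector is placed at a random point on the segment between a minority sample and one of its k nearest minority-class neighbours. The function name `smote`, the default `k=5` and the toy feature values are assumptions for illustration.

```python
import numpy as np


def smote(X_min, n_new, k=5, seed=None):
    """Basic SMOTE sketch: return n_new synthetic minority-class samples.

    Each synthetic vector lies on the line segment between a randomly chosen
    minority sample and one of its k nearest minority-class neighbours.
    """
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = X_min.shape[0]
    if n < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    k = min(k, n - 1)

    # Pairwise Euclidean distances inside the minority class only.
    dist = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)  # a sample is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]

    base = rng.integers(0, n, size=n_new)                       # seed samples
    partner = neighbours[base, rng.integers(0, k, size=n_new)]  # random neighbour
    gap = rng.random((n_new, 1))                                 # factor in [0, 1)
    return X_min[base] + gap * (X_min[partner] - X_min[base])


if __name__ == "__main__":
    # Hypothetical minority class with two radiomic features per lesion.
    minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
    synthetic = smote(minority, n_new=4, k=2, seed=0)
    print(synthetic.shape)  # (4, 2)
```

In a real pipeline, oversampling should be fitted on the training split only, after the train/test partition shown in the table. Otherwise, synthetic points derived from test cases can leak into evaluation and inflate performance.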

| Reference, Year | Analysis | Validation | Sequence for the Best Model | Radiomic Model AUC [95% CI] a | Radiomic Model Sensitivity | Radiomic Model Specificity | PI-RADS Cut-Off | PI-RADS AUC [95% CI] a | PI-RADS Sensitivity | PI-RADS Specificity |
|---|---|---|---|---|---|---|---|---|---|---|
| Dominguez et al. 2023 [24] | Index | CV//Hold-out set | ADC | 0.81 [0.56–0.94]//0.71 | NR | NR | NR | 0.66 [0.57–0.74]//NR | NR | NR |
| Prata et al. 2023 [25] | Index | CV | ADC | 0.77 | NR | NR | NR | 0.68 | NR | NR |
| Jin et al. 2023 [26] | Index | Hold-out set//External (1 set) | T2 + ADC + DWI (b2000) | 0.80//0.80 | 0.80//0.73 | 0.65//0.92 | NA | NA | NA | NA |
| Jing et al. 2022 [29] | Index | Hold-out set//External (2 sets) | T2 (prostate) + DWI b1500 (lesion) | 0.96 [0.90, 1.00]//0.95 [0.87, 1.00]//0.94 [0.90, 0.99] b | 0.95//0.98//0.86 b | 0.94//0.86//0.91 b | NR | 0.84 [0.74, 0.95]//0.82 [0.72, 0.93]//0.80 [0.71, 0.88] | 0.98//0.98//0.50 | 0.56//0.52//0.94 |
| Lim et al. 2021 [33] | All | CV | ADC | 0.68 [0.65–0.72] | NR | NR | NA | NA | NR | NR |
| Hectors et al. 2021 [34] | Index | Hold-out set | T2 | 0.76 [0.60–0.92] | 0.75 | 0.8 | NA | NA | NA | NA |
| Castillo et al. 2021 [35] | Index | CV//External | T2 + ADC + DWI (highest-b value) | 0.72 [0.64, 0.79]//0.75 | 0.76 [0.66, 0.89]//0.88 | 0.55 [0.44, 0.66]//0.63 | ≥3 | 0.50//0.44 (2 radiologists, External Validation) | 0.76//0.88 | 0.25//0 |
| Li et al. 2020 [36] | Index | Hold-out set | T2 + ADC | 0.98 [0.97–1.00] | 0.95 | 0.87 | NA | NA | NA | NA |
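
The performance tables compare radiomic models and PI-RADS by AUC and by sensitivity and specificity at a threshold. For PI-RADS, that threshold is the cut-off column (≥3 or ≥4). The minimal pure-Python sketch below computes these metrics. AUC is taken as the empirical Mann–Whitney probability that a randomly chosen csPCa case scores higher than a randomly chosen non-csPCa case, with ties counted as one half. The six PI-RADS scores in the usage example are invented for illustration and do not come from any included study.

```python
from typing import Sequence, Tuple


def sens_spec(labels: Sequence[int], scores: Sequence[float],
              cutoff: float) -> Tuple[float, float]:
    """Sensitivity and specificity when 'score >= cutoff' is called positive."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= cutoff)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < cutoff)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < cutoff)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= cutoff)
    return tp / (tp + fn), tn / (tn + fp)


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Empirical AUC: P(score of a positive > score of a negative), ties = 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical lesions: 1 = csPCa on the reference standard, 0 = not.
    y = [1, 1, 1, 0, 0, 0]
    pirads = [5, 4, 3, 3, 2, 4]
    for cut in (3, 4):
        se, sp = sens_spec(y, pirads, cut)
        print(f"PI-RADS >= {cut}: sensitivity {se:.2f}, specificity {sp:.2f}")
    print(f"AUC {auc(y, pirads):.2f}")
```

In this toy example, moving the cut-off from ≥3 (sensitivity 1.00, specificity 0.33) to ≥4 (0.67, 0.67) trades sensitivity for specificity. The AUC (0.78) summarizes ranking performance across all cut-offs at once.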

| Reference, Year | Analysis | Validation | Sequence for the Best Model | Radiomic Model AUC [95% CI] a | Radiomic Model Sensitivity | Radiomic Model Specificity | PI-RADS Cut-Off | PI-RADS AUC [95% CI] a | PI-RADS Sensitivity | PI-RADS Specificity |
|---|---|---|---|---|---|---|---|---|---|---|
| Hamm et al. 2023 [27] | All | Hold-out set//External (PROSTATEx) | T2 + ADC + DWI (high-b value) | 0.89 [0.85, 0.93]//0.87 [0.81, 0.93] | 0.77 [0.69, 0.85]//0.90 [0.83, 0.97] | 0.89 [0.84, 0.95]//0.85 [0.80, 0.90] | NA | NA | NA | NA |
| | Index | | | 0.78//NR | 0.98 [0.95, 1.00] b//NR | NR | | | | |
| Hong et al. 2023 [28] | Index | Hold-out set//External (1 set) | ADC | NR//0.63 | 0.72//0.84 | 0.74//0.48 | NA | NA | NA | NA |
| Zhu et al. 2022 [30] | All | Hold-out set | T2 + ADC | NR | 0.96 [0.89, 0.99] | NR | ≥3 | NR | 0.94 [0.87, 0.98] | NR |
| | Sextant | | | NR | 0.96 [0.90, 0.99] | 0.92 [0.89, 0.93] | | NR | 0.93 [0.87, 0.97] | 0.92 [0.90, 0.94] |
| | Index | | | NR | 0.99 [0.92, 0.99] | 0.65 [0.53, 0.76] | | NR | 0.99 [0.92, 0.99] | 0.66 [0.54, 0.77] |
| Jiang et al. 2022 [31] | All | Hold-out set//External (PROSTATEx) | T2 + ADC + DWI (b1500) | 0.85 [0.81, 0.88]//0.86 [0.81, 0.91] | 0.93//0.87 | 0.5//0.66 | ≥3 | 0.92 [0.89, 0.95]//0.86 [0.80, 0.90] | 0.94//0.77 | 0.79//0.87 |
| Liu et al. 2021 [32] | All | Hold-out set | T2 + ADC | 0.85 [0.79, 0.91] | 0.90 [0.83, 0.96] | 0.70 [0.59, 0.82] | ≥4 | 0.73 [0.65, 0.80] | 0.83 [0.75, 0.92] | 0.47 [0.35, 0.59] |
| | Index | | | 0.73 [0.59, 0.88] | 0.90 [0.83, 0.96] | 0.47 [0.21, 0.72] | | 0.65 [0.52, 0.78] | 0.83 [0.75, 0.91] | 0.27 [0.04, 0.72] |
| Zhong et al. 2019 [37] | All | Hold-out set | T2 + ADC | 0.73 [0.58, 0.88] | 0.64 | 0.8 | ≥4 | 0.71 [0.58, 0.87] | 0.86 | 0.48 |
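
Most entries in the performance tables carry a 95% confidence interval, but the tables do not state how each interval was computed. DeLong's method and bootstrapping are both common choices. The sketch below shows only one generic option, a percentile bootstrap. It resamples cases with replacement, recomputes the AUC each time, and reads the 2.5th and 97.5th percentiles. The function names, the 2,000 resamples and the toy scores are assumptions for illustration, not settings reported by the included studies.

```python
import random
from typing import List, Sequence, Tuple


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Empirical (Mann-Whitney) AUC, ties counted as 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_auc_ci(labels: Sequence[int], scores: Sequence[float],
                     n_boot: int = 2000, alpha: float = 0.05,
                     seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap CI for the AUC, resampling cases with replacement."""
    if not 0 < sum(labels) < len(labels):
        raise ValueError("both classes must be present")
    rng = random.Random(seed)
    n = len(labels)
    stats: List[float] = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if 0 < sum(ys) < n:  # skip resamples containing a single class
            stats.append(auc(ys, [scores[i] for i in idx]))
    stats.sort()
    lo_idx = int(round(alpha / 2 * n_boot))
    hi_idx = min(n_boot - 1, int(round((1 - alpha / 2) * n_boot)) - 1)
    return stats[lo_idx], stats[hi_idx]


if __name__ == "__main__":
    # Hypothetical model scores for 10 lesions (1 = csPCa).
    y = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
    score = [0.9, 0.8, 0.55, 0.4, 0.6, 0.3, 0.35, 0.2, 0.1, 0.45]
    lo, hi = bootstrap_auc_ci(y, score)
    print(f"AUC {auc(y, score):.3f}, 95% CI [{lo:.2f}, {hi:.2f}]")
```

With cohorts as small as some in the characteristics table, such intervals are wide. That is one reason point AUCs from single hold-out sets should be compared with caution.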

| Reference, Year | Analysis | Validation | PSA-D AUC [95% CI] a | PSA-D Sensitivity | PSA-D Specificity | Clinical Model AUC [95% CI] a | Clinical Model Sensitivity | Clinical Model Specificity | Combined Model AUC [95% CI] a | Combined Model Sensitivity | Combined Model Specificity |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Dominguez et al. 2023 [24] | Index | CV//Hold-out set | 0.77 [0.66–0.87]//NR | NR | NR | 0.76 [0.62–0.87]//0.80 (PV-MR, PSA, PSA-D) | NR | NR | 0.91 [0.76–0.99]//0.80 (Clinical Model and Radiomic Model) | NR | NR |
| Prata et al. 2023 [25] | Index | Hold-out set | NA | NA | NA | 0.69 (DRE, PI-RADS) | NR | NR | 0.80 (DRE, PI-RADS and Radiomic Model) | 0.915 | 0.844 |
| Jin et al. 2023 [26] | Index | Hold-out set//External (1 set) | 0.71//0.69 | 0.84//0.77 | 0.60//0.62 | NA | NA | NA | NA | NA | NA |
| Jing et al. 2022 [29] | Index | Hold-out set//External (2 sets) | NA | NA | NA | NA | NA | NA | 0.96 [0.90, 1.00]//0.95 [0.87, 1.00]//0.94 [0.90, 0.99] (Radiomic Model + PI-RADS) | 0.952//0.978//0.861 | 0.944//0.857//0.907 |
| Hectors et al. 2021 [34] | Index | Hold-out set | 0.61 [0.41, 0.80] | 0.72 | 0.52 | NA | NA | NA | NA | NA | NA |
| Li et al. 2020 [36] | Index | Hold-out set | NA | NA | NA | 0.79 [0.70–0.88] (Age, PSA, PSA-D) | 0.76 | 0.74 | 0.98 [0.97–1.00] (Age, PSA, PSA-D and Radiomic Model) | 0.82 | 0.97 |
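
The last table contrasts PSA density (PSA-D), clinical models and combined clinical-plus-radiomic models. PSA-D is serum PSA divided by prostate volume, here assumed to be measured on MRI (PV-MR). A combined model is often a logistic regression, sometimes presented as a nomogram, whose inputs include both clinical variables and a radiomic score. The NumPy sketch below illustrates that structure with plain batch gradient-descent logistic regression. The patient values, the choice of PSA-D plus a single radiomic score as inputs, the learning rate and the epoch count are all hypothetical and are not taken from any included study.

```python
import numpy as np


def psa_density(psa_ng_ml, prostate_volume_ml):
    """PSA density: serum PSA (ng/mL) divided by prostate volume (mL)."""
    return (np.asarray(psa_ng_ml, dtype=float)
            / np.asarray(prostate_volume_ml, dtype=float))


def _sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic(X, y, lr=0.5, epochs=5000):
    """Logistic regression with intercept, fitted by batch gradient descent
    on the mean log-loss."""
    X1 = np.column_stack([np.ones(len(X)), X])
    w = np.zeros(X1.shape[1])
    for _ in range(epochs):
        w -= lr * X1.T @ (_sigmoid(X1 @ w) - y) / len(y)
    return w


def predict_proba(w, X):
    """Predicted csPCa probability for each row of X."""
    X1 = np.column_stack([np.ones(len(X)), X])
    return _sigmoid(X1 @ w)


if __name__ == "__main__":
    # Hypothetical patients: PSA (ng/mL), MRI volume (mL), radiomic score, label.
    psa = [4.0, 6.5, 5.0, 9.0, 7.5, 12.0]
    volume = [60, 55, 45, 40, 30, 35]
    radiomic_score = [0.20, 0.35, 0.30, 0.70, 0.60, 0.85]
    y = np.array([0, 0, 0, 1, 1, 1], dtype=float)

    # "Combined model": clinical variable (PSA-D) plus radiomic score.
    X = np.column_stack([psa_density(psa, volume), radiomic_score])
    w = fit_logistic(X, y)
    print(np.round(predict_proba(w, X), 2))
```

The combined-model AUCs in the table would then be computed on the predicted probabilities of held-out cases, not on the training cases used here.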

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Antolin, A.; Roson, N.; Mast, R.; Arce, J.; Almodovar, R.; Cortada, R.; Maceda, A.; Escobar, M.; Trilla, E.; Morote, J. The Role of Radiomics in the Prediction of Clinically Significant Prostate Cancer in the PI-RADS v2 and v2.1 Era: A Systematic Review. Cancers 2024, 16, 2951. https://doi.org/10.3390/cancers16172951