Artificial Intelligence for Neuroimaging in Pediatric Cancer

Simple Summary

Abstract

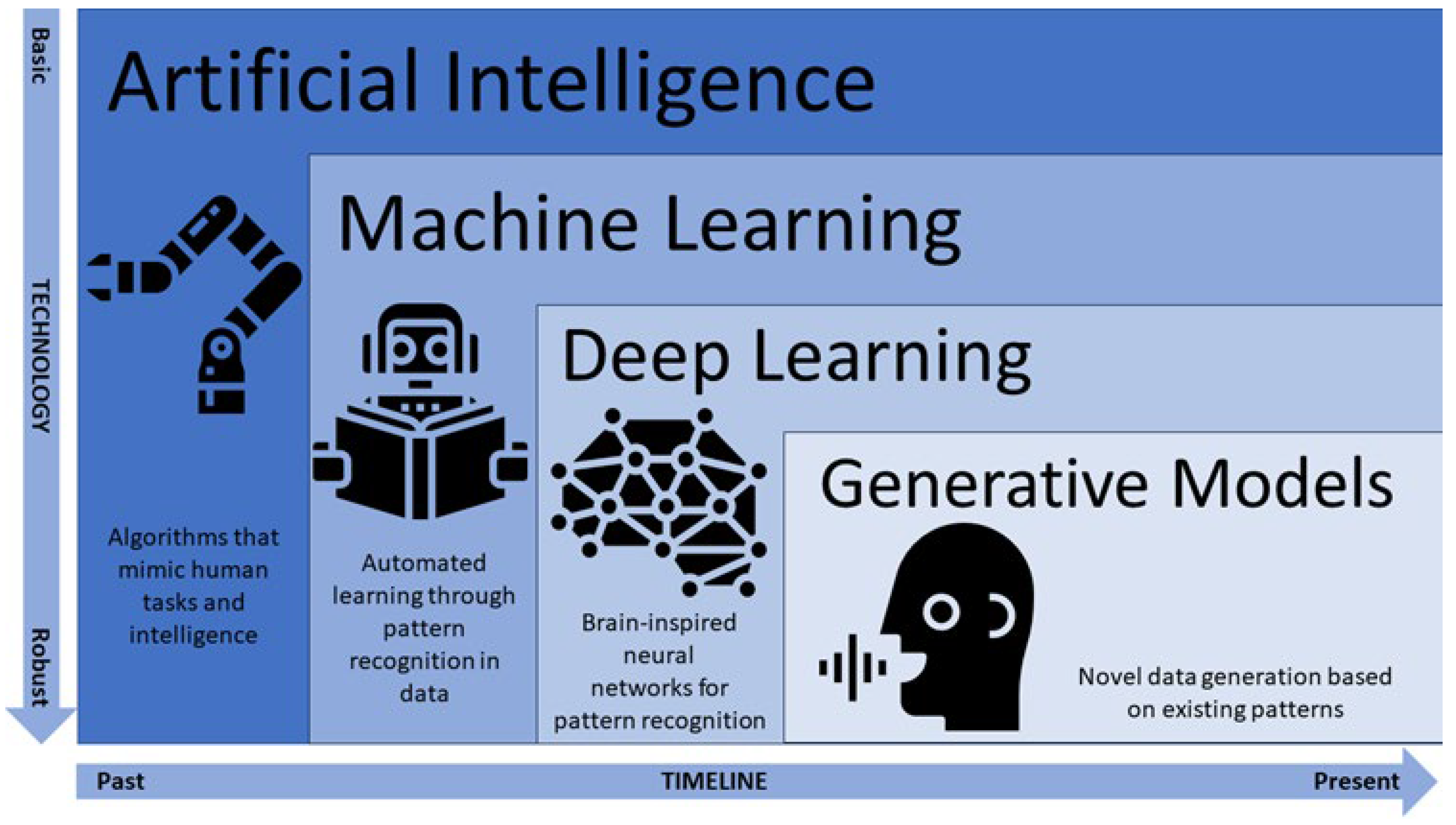

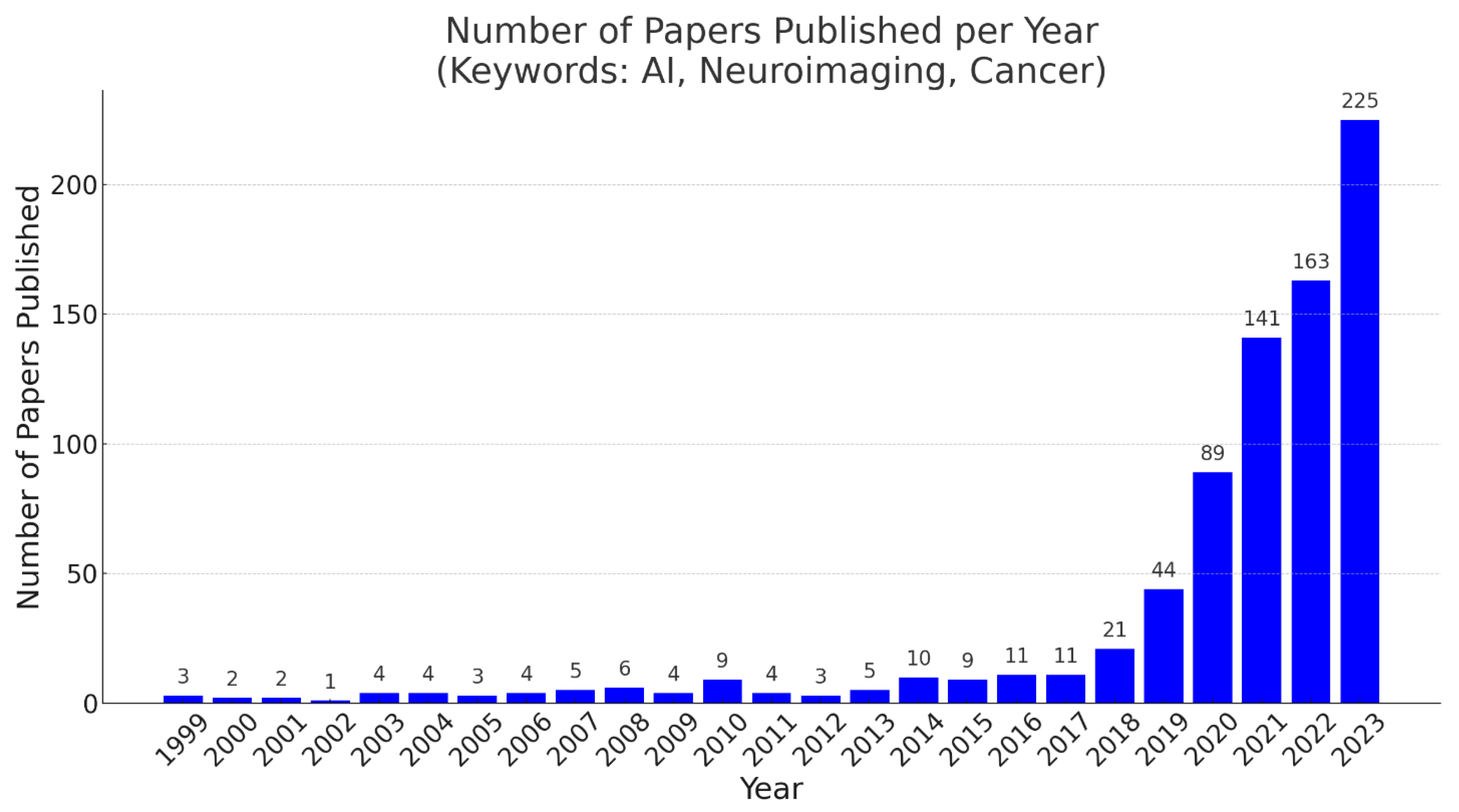

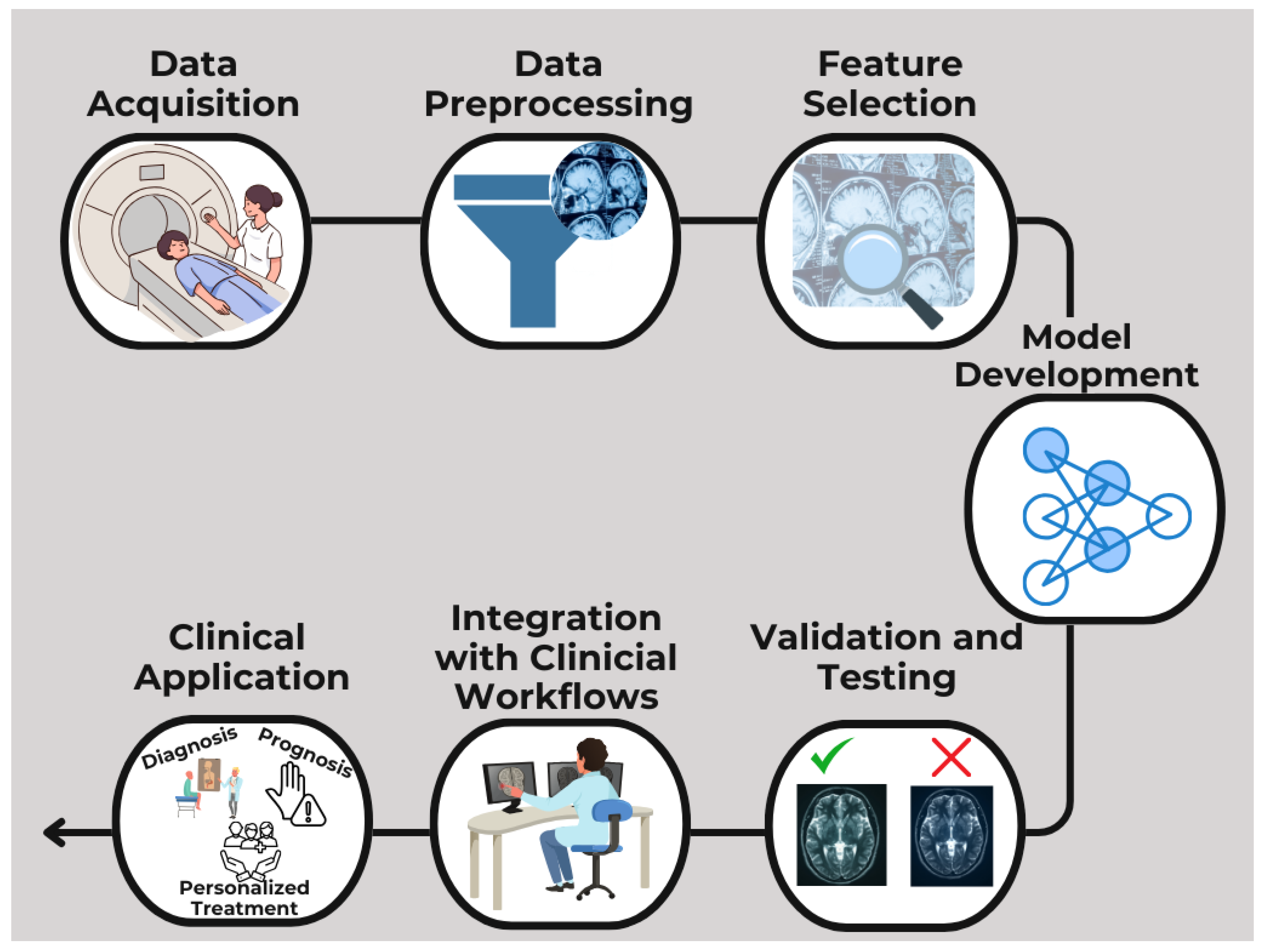

1. Introduction

2. Challenges in Pediatric Neuroimaging

3. AI for Improving Image Acquisition and Preprocessing

3.1. Accelerating Image Acquisition

3.2. Reducing Radiation Exposure or Contrast Doses

3.3. Removing Artifacts

4. AI for Tumor Detection and Classification

4.1. AI in Tumor Segmentation

4.2. AI in Tumor Margin Detection

4.3. AI in Tumor Characterization

4.4. Radiomics and Radiogenomics for Specific Pediatric Brain Tumors

4.4.1. Posterior Fossa Tumors

4.4.2. Craniopharyngiomas

4.4.3. Low-Grade Gliomas

4.4.4. High-Grade Gliomas

4.4.5. Ependymomas

4.5. Hybrid Models

5. AI for Functional Imaging and Neuromodulation

6. AI in Monitoring Treatment Response

7. AI in Predicting Survival for Patients with Pediatric Brain Tumors

8. AI for Transparent Explanations in Cancer Neuroimaging

9. Discussion

9.1. Data-Related Issues

9.2. Technical Limitations

9.3. Ethical Considerations

9.4. Future Directions

10. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Martin, D.; Tong, E.; Kelly, B.; Yeom, K.; Yedavalli, V. Current perspectives of artificial intelligence in pediatric neuroradiology: An overview. Front. Radiol. 2021, 1, 713681. [Google Scholar] [CrossRef] [PubMed]

- Singh, S.B.; Sarrami, A.H.; Gatidis, S.; Varniab, Z.S.; Chaudhari, A.; Daldrup-Link, H.E. Applications of Artificial Intelligence for Pediatric Cancer Imaging. Am. J. Roentgenol. 2024, 223, e2431076. [Google Scholar] [CrossRef] [PubMed]

- Pringle, C.; Kilday, J.P.; Kamaly-Asl, I.; Stivaros, S.M. The role of artificial intelligence in paediatric neuroradiology. Pediatr. Radiol. 2022, 52, 2159–2172. [Google Scholar] [CrossRef]

- Wang, J.; Wang, J.; Wang, S.; Zhang, Y. Deep learning in pediatric neuroimaging. Displays 2023, 80, 102583. [Google Scholar] [CrossRef]

- Bhatia, A.; Khalvati, F.; Ertl-Wagner, B.B. Artificial Intelligence in the Future Landscape of Pediatric Neuroradiology: Opportunities and Challenges. Am. J. Neuroradiol. 2024, 45, 549–553. [Google Scholar] [CrossRef]

- Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach, 3rd ed.; Pearson: London, UK, 2016. [Google Scholar]

- Helm, J.M.; Swiergosz, A.M.; Harberle, H.S.; Karnuta, J.M.; Schaffer, J.L.; Krebs, V.E.; Spitzer, A.I.; Ramkumar, P.N. Machine learning and artificial intelligence: Definitions, applications, and future directions. Curr. Rev. Musculoskelet. Med. 2020, 13, 69–76. [Google Scholar] [CrossRef]

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning; Springer: Berlin/Heidelberg, Germany, 2009; ISBN 978-0387848570. [Google Scholar]

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006; ISBN 978-0387310732. [Google Scholar]

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 2nd ed.; MIT Press: Cambridge, MA, USA, 2018; ISBN 978-0262039246. [Google Scholar]

- McCall, J. Genetic algorithms for modelling and optimisation. J. Comput. Appl. Math. 2005, 184, 205–222. [Google Scholar] [CrossRef]

- Valueva, M.V.; Nagornov, N.N.; Lyakhov, P.A.; Valuev, G.V.; Chervyakov, N.I. Application of the residue number system to reduce hardware costs of the convolutional neural network implementation. Math. Comput. Simul. 2020, 177, 232–243. [Google Scholar] [CrossRef]

- Mzoughi, H.; Njeh, I.; Wali, A.; Ben Slima, M.; BenHamida, A.; Mhiri, C.; Ben Mahfoudhe, K. Deep multi-scale 3D convolutional neural network (CNN) for MRI gliomas brain tumor classification. J. Digit. Imaging 2020, 33, 903–915. [Google Scholar] [CrossRef]

- Lu, D. AlexNet-based digital recognition system for handwritten brushes. In Proceedings of the 7th International Conference on Cyber Security and Information Engineering (ICCSIE’22), Brisbane, Australia, 23–25 September 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 509–514. [Google Scholar] [CrossRef]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A.; Liu, W.; et al. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar] [CrossRef]

- Wen, L.; Dong, Y.; Gao, L. A new ensemble residual convolutional neural network for remaining useful life estimation. Math. Biosci. Eng. 2019, 16, 862–880. [Google Scholar] [CrossRef]

- Jonnalagedda, P.; Weinberg, B.; Allen, J.; Min, T.; Bhanu, S.; Bhanu, B. SAGE: Sequential attribute generator for analyzing glioblastomas using limited dataset. arXiv 2020, arXiv:2005.07225. [Google Scholar] [CrossRef]

- Namdar, K.; Wagner, M.W.; Kudus, K.; Hawkins, C.; Tabori, U.; Ertl-Wagner, B.B.; Khalvati, F. Improving Deep Learning Models for Pediatric Low-Grade Glioma Tumours Molecular Subtype Identification Using MRI-based 3D Probability Distributions of Tumour Location. Can. Assoc. Radiol. J. 2024, 2024, 8465371241296834. [Google Scholar] [CrossRef] [PubMed]

- Ranschaert, E.; Topff, L.; Pianykh, O. Optimization of radiology workflow with artificial intelligence. Radiol. Clin. N. Am. 2021, 59, 955–966. [Google Scholar] [CrossRef] [PubMed]

- Ravi, K.S.; Nandakumar, G.; Thomas, N.; Lim, M.; Qian, E.; Jimeno, M.M.; Poojar, P.; Jin, Z.; Quarterman, P.; Srinivasan, G.; et al. Accelerated MRI using intelligent protocolling and subject-specific denoising applied to Alzheimer’s disease imaging. Front. Neuroimaging 2023, 2, 1072759. [Google Scholar] [CrossRef]

- Bash, S.; Wang, L.; Airriess, C.; Zaharchuk, G.; Gong, E.; Shankaranarayanan, A.; Tanenbaum, L.N. Deep learning enables 60% accelerated volumetric brain MRI while preserving quantitative performance: A prospective, multicenter, multireader trial. Am. J. Neuroradiol. 2021, 42, 2130–2137. [Google Scholar] [CrossRef]

- Chen, Y.; Schönlieb, C.B.; Liò, P.; Leiner, T.; Dragotto, P.L.; Wang, G.; Rueckert, D.; Firmin, D.; Yang, G. AI-based reconstruction for fast MRI—A systematic review and meta-analysis. Proc. IEEE 2022, 110, 224–245. [Google Scholar] [CrossRef]

- MacDougall, R.D.; Zhang, Y.; Callahan, M.J.; Perez-Rosselli, J.; Breen, M.A.; Johnston, P.R.; Yu, H. Improving low-dose pediatric abdominal CT by using convolutional neural networks. Radiol. Artif. Intell. 2019, 1, e180087. [Google Scholar] [CrossRef]

- Gokyar, S.; Robb, F.; Kainz, W.; Chaudhari, A.; Winkler, S. MRSaiFE: An AI-based approach towards the real-time prediction of specific absorption rate. IEEE Access 2021, 9, 140824–140834. [Google Scholar] [CrossRef]

- Dyer, T.; Chawda, S.; Alkilani, R.; Morgan, T.N.; Hughes, M.; Rasalingham, S. Validation of an artificial intelligence solution for acute triage and rule-out normal of non-contrast CT head scans. Neuroradiology 2022, 64, 735–743. [Google Scholar] [CrossRef]

- Tian, Q.; Yan, L.; Zhang, X.; Hu, Y.; Han, Y.; Liu, Z.; Nan, H.; Sun, Q.; Sun, Y.; Yang, Y.; et al. Radiomics strategy for glioma grading using texture features from multiparametric MRI. J. Magn. Reson. Imaging 2018, 48, 1518–1528. [Google Scholar] [CrossRef]

- Zhang, M.; Wong, S.W.; Wright, J.N.; Wagner, M.W.; Toescu, S.; Han, M.; Tam, L.T.; Zhou, Q.; Ahmadian, S.S.; Shpanskaya, K.; et al. MRI radiogenomics of pediatric medulloblastoma: A multicenter study. Radiology 2022, 304, 406–416. [Google Scholar] [CrossRef] [PubMed]

- Chiu, F.Y.; Yen, Y. Efficient radiomics-based classification of multi-parametric MR images to identify volumetric habitats and signatures in glioblastoma: A machine learning approach. Cancers 2022, 14, 1475. [Google Scholar] [CrossRef] [PubMed]

- Khalili, N.; Kazerooni, A.F.; Familiar, A.; Haldar, D.; Kraya, A.; Foster, J.; Koptyra, M.; Storm, P.B.; Resnick, A.C.; Nabavizadeh, A. Radiomics for characterization of the glioma immune microenvironment. NPJ Precis. Oncol. 2023, 7, 59. [Google Scholar] [CrossRef]

- Park, J.E.; Kim, H.S.; Goh, M.J.; Kim, N.; Park, S.Y.; Kim, Y.H.; Kim, J.H. Pseudoprogression in patients with glioblastoma: Assessment by using volume-weighted voxel-based multiparametric clustering of MR imaging data in an independent test set. Radiology 2015, 275, 792–802. [Google Scholar] [CrossRef]

- Yang, Y.; Zhao, Y.; Liu, X.; Huang, J. Artificial intelligence for prediction of response to cancer immunotherapy. Semin. Cancer Biol. 2022, 87, 137–147. [Google Scholar] [CrossRef]

- Patel, U.K.; Anwar, A.; Saleem, S.; Malik, P.; Rasul, B.; Patel, K.; Yao, R.; Seshadri, A.; Yousufuddin, M.; Arumaithurai, K. Artificial intelligence as an emerging technology in the current care of neurological disorders. J. Neurol. 2021, 268, 1623–1642. [Google Scholar] [CrossRef]

- Fathi Kazerooni, A.; Saxena, S.; Toorens, E.; Tu, D.; Bashyam, V.; Akbari, H.; Mamourian, E.; Sako, C.; Koumenis, C.; Verginadis, I.; et al. Clinical measures, radiomics, and genomics offer synergistic value in AI-based prediction of overall survival in patients with glioblastoma. Sci. Rep. 2022, 12, 8784. [Google Scholar] [CrossRef]

- Yeh, F.C.; Tseng, W.Y. Advances in connectomics and tractography using diffusion MRI data. NeuroImage 2021, 244, 118720. [Google Scholar] [CrossRef]

- McCarthy, M.L.; Ding, R.; Hsu, H.; Dausey, D.J. Developmental and behavioral comorbidities of asthma in children. J. Dev. Behav. Pediatr. 2018, 39, 293–304. [Google Scholar]

- Zhang, L.-Y.; Du, H.-Z.; Lu, T.-T.; Song, S.-H.; Xu, R.; Jiang, Y.; Pan, H. Long-term outcome of childhood and adolescent patients with craniopharyngiomas: A single center retrospective experience. BMC Cancer 2024, 24, 1555. [Google Scholar] [CrossRef]

- Fangusaro, J.; Jones, D.T.; Packer, R.J.; Gutmann, D.H.; Milde, T.; Witt, O.; Mueller, S.; Fisher, M.J.; Hansford, J.R.; Tabori, U.; et al. Pediatric low-grade glioma: State-of-the-art and ongoing challenges. Neuro-Oncology 2024, 26, 25–37. [Google Scholar] [CrossRef] [PubMed]

- Gajjar, A.; Mahajan, A.; Abdelbaki, M.; Anderson, C.; Antony, R.; Bale, T.; Bindra, R.; Bowers, D.C.; Cohen, K.; Cole, B.; et al. Pediatric Central Nervous System Cancers, Version 2.2023. J. Natl. Compr. Cancer Netw. 2022, 20, 1339–1362. [Google Scholar]

- Müller, H.L. Craniopharyngioma. Endocr. Rev. 2014, 35, 513–543. [Google Scholar] [CrossRef] [PubMed]

- Fjalldal, S.; Holmer, H.; Rylander, L.; Elfving, M.; Ekman, B.; Osterberg, K.; Erfurth, E.M. Hypothalamic involvement predicts cognitive performance and psychosocial health in long-term survivors of childhood craniopharyngioma. J. Clin. Endocrinol. Metab. 2013, 98, 3253–3262. [Google Scholar] [CrossRef]

- Jale Ozyurt, M.S.; Thiel, C.M.; Lorenzen, A.; Gebhardt, U.; Calaminus, G.; Warmuth-Metz, M.; Muller, H.L. Neuropsychological outcome in patients with childhood craniopharyngioma and hypothalamic involvement. J. Pediatr. 2014, 164, 876–881.e4. [Google Scholar] [CrossRef]

- DeAngelis, L.M. Brain tumours. N. Engl. J. Med. 2001, 344, 114–122. [Google Scholar] [CrossRef]

- Alksas, A.; Shehata, M.; Atef, H.; Sherif, F.; Alghamdi, N.S.; Ghazal, M.; Abdel Fattah, S.; El-Serougy, L.G.; El-Baz, A. A novel system for precise grading of glioma. Bioengineering 2022, 9, 532. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Weyer-Jamora, C.; Brie, M.S.; Luks, T.L.; Smith, E.M.; Braunstein, S.E.; Villanueva-Meyer, J.E.; Bracci, P.M.; Chang, S.; Hervey-Jumper, S.L.; Taylor, J.W. Cognitive impact of lower-grade gliomas and strategies for rehabilitation. Neurooncol. Pract. 2021, 8, 117–128. [Google Scholar] [CrossRef]

- Louis, D.N.; Ohgaki, H.; Wiestler, O.D.; Cavenee, W.K.; Burger, P.C.; Jouvet, A.; Scheithauer, B.W.; Kleihues, P. The 2007 WHO classification of tumours of the central nervous system. Acta Neuropathol. 2017, 114, 97–109. [Google Scholar] [CrossRef]

- Speville, E.D.; Kieffer, V.; Dufour, C.; Grill, J.; Noulhiane, M.; Hertz-Pannier, L.; Chevignard, M. Neuropsychological consequences of childhood medulloblastoma and possible interventions. Neurochirurgie 2021, 67, 90–98. [Google Scholar] [CrossRef]

- Power, J.D.; Barnes, K.A.; Snyder, A.Z.; Schlaggar, B.L.; Petersen, S.E. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. NeuroImage 2015, 59, 2142–2154. [Google Scholar] [CrossRef] [PubMed]

- Beauferris, Y.; Teuwen, J.; Karkalousos, D.; Moriakov, N.; Caan, M.; Yiasemis, G.; Rodrigues, L.; Lopes, A.; Pedrini, H.; Rittner, L.; et al. Multi-coil MRI reconstruction challenge—Assessing brain MRI reconstruction models and their generalizability to varying coil configurations. Front. Neurosci. 2022, 16, 919186. [Google Scholar] [CrossRef] [PubMed]

- Lustig, M.; Donoho, D.L.; Santos, J.M.; Pauly, J.M. Compressed sensing MRI. IEEE Signal Process. Mag. 2008, 25, 72–82. [Google Scholar] [CrossRef]

- Singh, N.M.; Harrod, J.B.; Subramanian, S.; Robinson, M.; Chang, K.; Cetin-Karayumak, S.; Dalca, A.V.; Eickhoff, S.; Fox, M.; Franke, L.; et al. How machine learning is powering neuroimaging to improve brain health. Neuroinformatics 2022, 20, 943–964. [Google Scholar] [CrossRef]

- Evans, A.C.; Brain Development Cooperative Group. The NIH MRI study of normal brain development. NeuroImage 2006, 30, 184–202. [Google Scholar] [CrossRef]

- Molfese, P.J.; Glen, D.; Mesite, L.; Cox, R.W.; Hoeft, F.; Frost, S.J.; Mencl, W.E.; Pugh, K.R.; Bandettini, P.A. The Haskins pediatric atlas: A magnetic-resonance-imaging-based pediatric template and atlas. Pediatr. Radiol. 2021, 51, 628–639. [Google Scholar] [CrossRef]

- Wang, L.; Gao, Y.; Shi, F.; Li, G.; Gilmore, J.H.; Lin, W.; Shen, D. LINKS: Learning-based multi-source integration framework for segmentation of infant brain images. Neuroimage 2015, 108, 160–172. [Google Scholar] [CrossRef]

- Barkovich, A.J.; Raybaud, C. Pediatric Neuroimaging, 5th ed.; Lippincott Williams & Wilkins, Wolters Kluwer Health: Philadelphia, PA, USA, 2012. [Google Scholar]

- Slater, D.A.; Melie-Garcia, L.; Preisig, M.; Kherif, F.; Lutti, A.; Draganski, B. Evolution of white matter tract microstructure across the lifespan. Hum. Brain Mapp. 2019, 40, 2252–2268. [Google Scholar] [CrossRef]

- Pfefferbaum, A.; Mathalon, D.H.; Sullivan, E.V.; Rawles, J.M.; Zipursky, R.B.; Lim, K.O. A quantitative magnetic resonance imaging study of changes in brain morphology from infancy to late adulthood. Arch. Neurol. 1994, 51, 874–887. [Google Scholar] [CrossRef]

- Blockmans, L.; Golestani, N.; Dalboni da Rocha, J.L.; Wouters, J.; Ghesquière, P.; Vandermosten, M. Role of family risk and of pre-reading auditory and neurostructural measures in predicting reading outcome. Neurobiol. Lang. 2023, 4, 474–500. [Google Scholar] [CrossRef]

- Kim, S.H.; Lyu, I.; Fonov, V.S.; Vachet, C.; Hazlett, H.C.; Smith, R.G.; Piven, J.; Dager, S.R.; McKinstry, R.C.; Pruett, J.R., Jr.; et al. Development of cortical shape in the human brain from 6 to 24 months of age via a novel measure of shape complexity. NeuroImage 2016, 135, 163–176. [Google Scholar] [CrossRef] [PubMed]

- da Rocha, J.L.D.; Schneider, P.; Benner, J.; Santoro, R.; Atanasova, T.; Van De Ville, D.; Golestani, N. TASH: Toolbox for the Automated Segmentation of Heschl’s gyrus. Sci. Rep. 2020, 10, 3887. [Google Scholar] [CrossRef]

- Maghsadhagh, S.; Dalboni da Rocha, J.L.; Benner, J.; Schneider, P.; Golestani, N.; Behjat, H. A discriminative characterization of Heschl’s gyrus morphology using spectral graph features. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Virtual, 1–5 November 2021; pp. 3577–3581. [Google Scholar] [CrossRef]

- Fonov, V.; Evans, A.C.; Botteron, K.; Almli, C.R.; McKinstry, R.C.; Collins, D.L.; the Brain Development Cooperative Group. Unbiased average age-appropriate atlases for pediatric studies. NeuroImage 2011, 54, 313–327. [Google Scholar] [CrossRef] [PubMed]

- Wilke, M.; Holland, S.K.; Altaye, M.; Gaser, C. Template-O-Matic: A toolbox for creating customized pediatric templates. Neuroimage 2008, 41, 903–913. [Google Scholar] [CrossRef]

- Kazemi, K.; Moghaddam, H.A.; Grebe, R.; Gondry-Jouet, C.; Wallois, F. A neonatal atlas template for spatial normalization of whole-brain magnetic resonance images of newborns: Preliminary results. NeuroImage 2007, 37, 463–473. [Google Scholar] [CrossRef]

- Shi, F.; Yap, P.-T.; Wu, G.; Jia, H.; Gilmore, J.H.; Lin, W.; Shen, D. Infant brain atlases from neonates to 1- and 2-year-olds. PLoS ONE 2011, 6, e18746. [Google Scholar] [CrossRef]

- Giedd, J.N. Structural magnetic resonance imaging of the adolescent brain. Ann. N.Y. Acad. Sci. 2004, 1021, 77–85. [Google Scholar] [CrossRef]

- Mohsen, F.; Ali, H.; El Hajj, N.; Shah, Z. Artificial intelligence-based methods for fusion of electronic health records and imaging data. Sci. Rep. 2022, 12, 17981. [Google Scholar] [CrossRef]

- Nichols, T.E.; Das, S.; Eickhoff, S.B.; Evans, A.C.; Glatard, T.; Hanke, M.; Yeo, B.T. Best practices in data analysis and sharing in neuroimaging using MRI. Nat. Neurosci. 2017, 20, 299–303. [Google Scholar] [CrossRef]

- Calhoun, V.D.; Miller, R.; Pearlson, G.; Adalı, T. The chronnectome: Time-varying connectivity networks as the next frontier in fMRI data discovery. Neuron 2012, 84, 262–274. [Google Scholar] [CrossRef]

- Pati, S.; Baid, U.; Edwards, B.; Sheller, M.H.; Wang, S.H.; Reina, G.A.; Foley, P.; Gruzdev, A.; Karkada, D.; Davatzikos, C.; et al. Federated learning enables big data for rare cancer boundary detection. Nat. Commun. 2022, 13, 7346. [Google Scholar] [CrossRef] [PubMed]

- Karkazis, K.; Cho, M.K.; Radhakrishnan, R. Human subjects protections and research on the developing brain: Drawing lines and shifting boundaries. Neuron 2017, 95, 221–228. [Google Scholar]

- Huang, Y.; Ahmad, S.; Fan, J.; Shen, D.; Yap, P. Difficulty-aware hierarchical convolutional neural networks for deformable registration of brain MR images. Med. Image Anal. 2020, 67, 101817. [Google Scholar] [CrossRef]

- Zhao, C.; Shao, M.; Carass, A.; Li, H.; Dewey, B.E.; Ellingsen, L.M.; Woo, J.; Guttman, M.A.; Blitz, A.M.; Stone, M.; et al. Applications of a deep learning method for anti-aliasing and super-resolution in MRI. Magn. Reson. Imaging 2019, 64, 132–141. [Google Scholar] [CrossRef]

- Fair, M.J.; Gatehouse, P.D.; DiBella, E.V.R.; Firmin, D.N. A review of 3D first-pass, whole-heart, myocardial perfusion cardiovascular magnetic resonance. J. Cardiovasc. Magn. Reson. 2015, 17, 68. [Google Scholar] [CrossRef]

- Monsour, R.; Dutta, M.; Mohamed, A.Z.; Borkowski, A.; Viswanadhan, N.A. Neuroimaging in the era of artificial intelligence: Current applications. Fed. Pract. 2022, 39 (Suppl. S1), S14–S20. [Google Scholar] [CrossRef]

- Mardani, M.; Gong, E.; Cheng, J.Y.; Vasanawala, S.; Zaharchuk, G.; Alley, M.; Thakur, N.; Han, S.; Dally, W.; Pauly, J.M.; et al. Deep generative adversarial networks for compressed sensing automates MRI. arXiv 2017, arXiv:1706.00051. [Google Scholar]

- Ng, C.K.C. Generative Adversarial Network (Generative Artificial Intelligence) in Pediatric Radiology: A Systematic Review. Children 2023, 10, 1372. [Google Scholar] [CrossRef]

- Familiar, A.M.; Kazerooni, A.F.; Kraya, A.; Rathi, K.; Khalili, N.; Gandhi, D.; Anderson, H.; Mahtabfar, A.; Ware, J.B.; Vossough, A.; et al. IMG-13. Prediction of pediatric medulloblastoma subgroups using clinico-radiomic analysis. Neuro-Oncology 2024, 26 (Suppl. S4), 103–104. [Google Scholar] [CrossRef]

- Hall, E.J. Lessons we have learned from our children: Cancer risks from diagnostic radiology. Pediatr. Radiol. 2002, 32, 700–706. [Google Scholar] [CrossRef] [PubMed]

- Gong, E.; Pauly, J.M.; Wintermark, M.; Zaharchuk, G. Deep learning enables reduced gadolinium dose for contrast-enhanced brain MRI. J. Magn. Reson. Imaging 2018, 48, 330–340. [Google Scholar] [CrossRef] [PubMed]

- Chen, H.; Zhang, Y.; Zhang, W.; Liao, P.; Li, K.; Zhou, J.; Wang, G. Low-dose CT via convolutional neural network. Biomed. Opt. Express 2017, 8, 679–694. [Google Scholar] [CrossRef] [PubMed]

- Kang, J.; Rancati, T.; Lee, S.; Oh, J.H.; Kerns, S.L.; Scott, J.G.; Schwartz, R.; Kim, S.; Rosenstein, B.S. Machine learning and radiogenomics: Lessons learned and future directions. Front. Oncol. 2018, 8, 228. [Google Scholar] [CrossRef] [PubMed]

- Nishio, M.; Nagashima, C.; Hirabayashi, S.; Ohnishi, A.; Sasaki, K.; Sagawa, T.; Hamada, M.; Yamashita, T. Convolutional auto-encoder for image denoising of ultra-low-dose CT. Heliyon 2017, 3, e00393. [Google Scholar] [CrossRef] [PubMed]

- Zaharchuk, G. Next-generation research applications for hybrid PET/MR and PET/CT imaging using deep learning. Eur. J. Nucl. Med. Mol. Imaging 2019, 46, 2700–2707. [Google Scholar] [CrossRef]

- Lee, D.; Yoo, J.; Tak, S.; Ye, J.C. Deep residual learning for accelerated MRI using magnitude and phase networks. IEEE Trans. Biomed. Eng. 2018, 65, 1985–1995. [Google Scholar] [CrossRef]

- Anand, C.S.; Sahambi, J.S. Wavelet domain non-linear filtering for MRI denoising. Magn. Reson. Imaging 2010, 28, 842–861. [Google Scholar] [CrossRef]

- Chun, S.Y.; Reese, T.G.; Ouyang, J.; Guerin, B.; Catana, C.; Zhu, X.; Alpert, N.M.; El Fakhri, G. MRI-based nonrigid motion correction in simultaneous PET/MRI. J. Nucl. Med. 2012, 53, 1284–1291. [Google Scholar] [CrossRef]

- Haskell, M.W.; Cauley, S.F.; Wald, L.L. Targeted motion estimation and reduction (TAMER): Data consistency based motion mitigation for MRI using a reduced model joint optimization. IEEE Trans. Med. Imaging 2018, 37, 1253–1265. [Google Scholar] [CrossRef]

- Singh, N.M.; Iglesias, J.E.; Adalsteinsson, E.; Dalca, A.V.; Golland, P. Joint frequency and image space learning for Fourier imaging. arXiv 2020, arXiv:200701441. [Google Scholar]

- Gjesteby, L.; Yang, Q.; Xi, Y.; Shan, H.; Claus, B.; Jin, Y.; Man, B.D.; Wang, G. Deep learning methods for CT image-domain metal artifact reduction. In Developments in X-Ray Tomography XI; International Society for Optics and Photonics: Bellingham, WC, USA, 2017; p. 103910W. [Google Scholar] [CrossRef]

- Hu, Z.; Jiang, C.; Sun, F.; Zhang, Q.; Ge, Y.; Yang, Y.; Liu, X.; Zheng, H.; Liang, D. Artifact correction in low-dose dental CT imaging using Wasserstein generative adversarial networks. Med. Phys. 2019, 46, 1686–1696. [Google Scholar] [CrossRef] [PubMed]

- Zhu, D.; Zhang, T.; Jiang, X.; Hu, X.; Chen, H. Opportunities and challenges of artificial intelligence in pediatric neuroimaging. Front. Neurosci. 2020, 14, 204. [Google Scholar]

- Schooler, G.R.; Davis, J.T.; Daldrup-Link, H.E.; Frush, D.P. Current utilization and procedural practices in pediatric whole-body MRI. Pediatr. Radiol. 2018, 48, 1101–1107. [Google Scholar] [CrossRef] [PubMed]

- Daldrup-Link, H. Artificial intelligence applications for pediatric oncology imaging. Pediatr. Radiol. 2019, 49, 1384–1390. [Google Scholar] [CrossRef]

- Soltaninejad, M.; Yang, G.; Lambrou, T.; Allinson, N.; Jones, T.L.; Barrick, T.R.; Howe, F.A.; Ye, X. Automated brain tumor detection and segmentation using super-pixel-based extremely randomized trees in FLAIR MRI. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 183–203. [Google Scholar] [CrossRef]

- Irmak, E. Multi-classification of brain tumor MRI images using deep convolutional neural network with fully optimized framework. Iran. J. Sci. Technol. Trans. Electr. Eng. 2021, 45, 1015–1036. [Google Scholar] [CrossRef]

- Fetit, A.E.; Novak, J.; Rodriguez, D.; Auer, D.P.; Clark, C.A.; Grundy, R.G.; Peet, A.C.; Arvanitis, T.N. Radiomics in paediatric neuro-oncology: A multicentre study on MRI texture analysis. NMR Biomed. 2018, 31, e3781. [Google Scholar] [CrossRef]

- Kickingereder, P.; Bonekamp, D.; Nowosielski, M.; Kratz, A.; Sill, M.; Burth, S.; Wick, A.; Eidel, O.; Schlemmer, H.P.; Radbruch, A.; et al. Radiogenomics of glioblastoma: Machine learning-based classification of molecular characteristics by using multiparametric and multiregional MR imaging features. Radiology 2016, 281, 907–918. [Google Scholar] [CrossRef]

- Zarinabad, N.; Wilson, M.; Gill, S.K.; Manias, K.A.; Davies, N.P.; Peet, A.C. Multiclass imbalance learning: Improving classification of pediatric brain tumors from magnetic resonance spectroscopy. Magn. Reson. Med. 2017, 77, 2114–2124. [Google Scholar] [CrossRef]

- Styner, M.; Oguz, I.; Xu, S.; Brechbuehler, C.; Pantazis, D.; Levitt, J.J.; Shenton, M.E.; Gerig, G. Framework for the statistical shape analysis of brain structures using SPHARM-PDM. Insight J. 2006, 1071, 242–250. [Google Scholar] [CrossRef]

- Dalboni da Rocha, J.L.; Kepinska, O.; Schneider, P.; Benner, J.; Degano, G.; Schneider, L.; Golestani, N. Multivariate Concavity Amplitude Index (MCAI) for characterizing Heschl’s gyrus shape. NeuroImage 2023, 272, 120052. [Google Scholar] [CrossRef] [PubMed]

- Van Griethuysen, J.J.; Fedorov, A.; Parmar, C.; Hosny, A.; Aucoin, N.; Narayan, V.; Beets-Tan, R.G.; Fillion-Robin, J.C.; Pieper, S.; Aerts, H.J. Computational radiomics system to decode the radiographic phenotype. Cancer Res. 2017, 77, e104–e107. [Google Scholar] [CrossRef] [PubMed]

- Boyd, A.; Ye, Z.; Prabhu, S.; Tjong, M.C.; Zha, Y.; Zapaishchykova, A.; Vajapeyam, S.; Hayat, H.; Chopra, R.; Liu, K.X.; et al. Expert-level pediatric brain tumor segmentation in a limited data scenario with stepwise transfer learning. medRxiv 2023. [Google Scholar] [CrossRef]

- Mulvany, T.; Griffiths-King, D.; Novak, J.; Rose, H. Segmentation of pediatric brain tumors using a radiologically informed, deep learning cascade. arXiv 2024, arXiv:2410.14020. [Google Scholar] [CrossRef]

- Bengtsson, M.; Keles, E.; Durak, G.; Anwar, S.; Velichko, Y.S.; Linguraru, M.G.; Waanders, A.J.; Bagci, U. A new logic for pediatric brain tumor segmentation. arXiv 2024, arXiv:2411.01390. [Google Scholar] [CrossRef]

- Vossough, A.; Khalili, N.; Familiar, A.M.; Gandhi, D.; Viswanathan, K.; Tu, W.; Haldar, D.; Bagheri, S.; Anderson, H.; Haldar, S.; et al. Training and Comparison of nnU-Net and DeepMedic Methods for Autosegmentation of Pediatric Brain Tumors. Am. J. Neuroradiol. 2024, 45, 1081–1089. [Google Scholar] [CrossRef]

- Gu, X.; Shen, Z.; Xue, J.; Fan, Y.; Ni, T. Brain tumor MR image classification using convolutional dictionary learning with local constraint. Front. Neurosci. 2021, 15, 679847. [Google Scholar] [CrossRef]

- Chen, R.J.; Lu, M.Y.; Wang, J.; Williamson, D.F.K.; Rodig, S.J.; Lindeman, N.I.; Mahmood, F. Pathomic Fusion: An integrated framework for fusing histopathology and genomic features for cancer diagnosis and prognosis. IEEE Trans. Med. Imaging 2022, 41, 757–770. [Google Scholar] [CrossRef]

- Isensee, F.; Jaeger, P.F.; Kohl, S.A.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 2021, 18, 203–211. [Google Scholar] [CrossRef]

- Bangalore Yogananda, C.G.; Shah, B.R.; Vejdani-Jahromi, M.; Nalawade, S.S.; Murugesan, G.K.; Yu, F.F.; Pinho, M.C.; Wagner, B.C.; Emblem, K.E.; Bjørnerud, A.; et al. A fully automated deep learning network for brain tumor segmentation. Tomography 2020, 6, 186–193. [Google Scholar] [CrossRef]

- Florez, E.; Nichols, T.; Parker, E.E.; Lirette, S.T.; Howard, C.M.; Fatemi, A. Multiparametric magnetic resonance imaging in the assessment of primary brain tumors through radiomic features: A metric for guided radiation treatment planning. Cureus 2018, 10, e3426. [Google Scholar] [CrossRef]

- Obuchowicz, R.; Lasek, J.; Wodziński, M.; Piórkowski, A.; Strzelecki, M.; Nurzynska, K. Artificial Intelligence-Empowered Radiology—Current Status and Critical Review. Diagnostics 2025, 15, 282. [Google Scholar] [CrossRef]

- Gillies, R.J.; Kinahan, P.E.; Hricak, H. Radiomics: Images are more than pictures, they are data. Radiology 2016, 278, 563–577. [Google Scholar] [CrossRef]

- Casamitjana, A.; Puch, S.; Aduriz, A.; Vilaplana, V. 3D convolutional neural networks for brain tumor segmentation: A comparison of multi-resolution architectures. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries; Crimi, A., Menze, B., Maier, O., Reyes, M., Winzeck, S., Handels, H., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2016; Volume 10154. [Google Scholar] [CrossRef]

- Urban, G.; Bendszus, M.; Hamprecht, F.A.; Kleesiek, J. Multi-Modal Brain Tumor Segmentation Using Deep Convolutional Neural Networks; Springer: Berlin/Heidelberg, Germany, 2014; pp. 31–35. [Google Scholar]

- Sajjad, M.; Khan, S.; Muhammad, K.; Wu, W.; Ullah, A.; Baik, S.W. Multi-grade brain tumor classification using deep CNN with extensive data augmentation. J. Comput. Sci. 2019, 30, 174–182. [Google Scholar] [CrossRef]

- Richter, V.; Ernemann, U.; Bender, B. Novel Imaging Approaches for Glioma Classification in the Era of the World Health Organization 2021 Update: A Scoping Review. Cancers 2024, 16, 1792. [Google Scholar] [CrossRef]

- Moassefi, M.; Faghani, S.; Erickson, B. Artificial Intelligence in Neuro-Oncology: Predicting molecular markers and response to therapy. Med. Res. Arch. 2024, 12, 80. [Google Scholar] [CrossRef]

- Gorden, D.V. Machine learning tool identifies molecular subtypes of brain cancer. Oncol. Times 2023, 45, 6. [Google Scholar] [CrossRef]

- Yearley, A.G.; Blitz, S.E.; Patel, R.V.; Chan, A.; Baird, L.C.; Friedman, G.K.; Arnaout, O.; Smith, T.R.; Bernstock, J.D. Machine learning in the classification of pediatric posterior fossa tumors: A systematic review. Cancers 2022, 14, 5608. [Google Scholar] [CrossRef]

- Quon, J.L.; Bala, W.; Chen, L.C.; Wright, J.; Kim, L.H.; Han, M.; Shpanskaya, K.; Lee, E.H.; Tong, E.; Iv, M.; et al. Deep learning for pediatric posterior fossa tumor detection and classification: A multi-institutional study. Am. J. Neuroradiol. 2020, 41, 1718–1725. [Google Scholar] [CrossRef]

- Iv, M.; Zhou, M.; Shpanskaya, K.; Perreault, S.; Wang, Z.; Tranvinh, E.; Lanzman, B.; Vajapeyam, S.; Vitanza, N.; Fisher, P.; et al. MR imaging–based radiomic signatures of distinct molecular subgroups of medulloblastoma. Am. J. Neuroradiol. 2019, 40, 154–161. [Google Scholar] [CrossRef]

- Qin, C.; Hu, W.; Wang, X.; Ma, X. Application of artificial intelligence in diagnosis of craniopharyngioma. Front. Neurol. 2022, 12, 752119. [Google Scholar] [CrossRef]

- Prince, E.W.; Whelan, R.; Mirsky, D.M.; Stence, N.; Staulcup, S.; Klimo, P.; Anderson, R.C.E.; Niazi, T.N.; Grant, G.; Souweidane, M.; et al. Robust deep learning classification of adamantinomatous craniopharyngioma from limited preoperative radiographic images. Sci. Rep. 2020, 10, 16885. [Google Scholar] [CrossRef]

- Chen, X.; Tong, Y.; Shi, Z.; Chen, H.; Yang, Z.; Wang, Y.; Chen, L.; Yu, J. Noninvasive molecular diagnosis of craniopharyngioma with MRI-based radiomics approach. BMC Neurol. 2019, 19, 6. [Google Scholar] [CrossRef]

- Ma, G.; Kang, J.; Qiao, N.; Zhang, B.; Chen, X.; Li, G.; Gao, Z.; Gui, S. Non-invasive radiomics approach predicts invasiveness of adamantinomatous craniopharyngioma before surgery. Front. Oncol. 2021, 10, 599888. [Google Scholar] [CrossRef]

- Ertosun, M.G.; Rubin, D.L. Automated grading of gliomas using deep learning in digital pathology images: A modular approach with ensemble of convolutional neural networks. AMIA Annu. Symp. Proc. 2015, 2015, 1899–1908. [Google Scholar] [PubMed] [PubMed Central]

- Sultan, H.H.; Salem, N.M.; Al-Atabany, W. Multi-classification of brain tumor images using deep neural network. IEEE Access 2019, 7, 69215–69225. [Google Scholar] [CrossRef]

- Pisapia, J.M.; Akbari, H.; Rozycki, M.; Thawani, J.P.; Storm, P.B.; Avery, R.A.; Vossough, A.; Fisher, M.J.; Heuer, G.G.; Davatzikos, C. Predicting pediatric optic pathway glioma progression using advanced magnetic resonance image analysis and machine learning. Neurooncol. Adv. 2020, 2, vdaa090. [Google Scholar] [CrossRef]

- Sturm, D.; Pfister, S.M.; Jones, D.T. Pediatric Gliomas: Current Concepts on Diagnosis, Biology, and Clinical Management. J. Clin. Oncol. Off. J. Am. Soc. Clin. Oncol. 35 2017, 21, 2370–2377. [Google Scholar] [CrossRef]

- Guo, W.; She, D.; Xing, Z.; Lin, X.; Wang, F.; Song, Y.; Cao, D. Multiparametric MRI-based radiomics model for predicting H3 K27M mutant status in diffuse midline glioma: A comparative study across different sequences and machine learning techniques. Front. Oncol. 2022, 12, 796583.

- Huang, B.; Zhang, Y.; Mao, Q.; Ju, Y.; Liu, Y.; Su, Z.; Lei, Y.; Ren, Y. Deep learning-based prediction of H3K27M alteration in diffuse midline gliomas based on whole-brain MRI. Cancer Med. 2023, 12, 17139–17148.

- Su, X.; Chen, N.; Sun, H.; Liu, Y.; Yang, X.; Wang, W.; Zhang, S.; Tan, Q.; Gong, Q.; Yue, Q. Automated machine learning based on radiomics features predicts H3 K27M mutation in midline gliomas of the brain. Neuro Oncol. 2020, 22, 393–401.

- Kandemirli, S.G.; Kocak, B.; Naganawa, S.; Ozturk, K.; Yip, S.S.F.; Chopra, S.; Rivetti, L.; Aldine, A.S.; Jones, K.; Cayci, Z.; et al. Machine learning-based multiparametric magnetic resonance imaging radiomics for prediction of H3K27M mutation in midline gliomas. World Neurosurg. 2021, 151, e78–e85.

- Wagner, M.W.; Namdar, K.; Napoleone, M.; Hainc, N.; Amirabadi, A.; Fonseca, A.; Laughlin, S.; Shroff, M.M.; Bouffet, E.; Hawkins, C.; et al. Radiomic features based on MRI predict progression-free survival in pediatric diffuse midline glioma/diffuse intrinsic pontine glioma. Can. Assoc. Radiol. J. 2023, 74, 119–126.

- Cheng, D.; Zhuo, Z.; Du, J.; Weng, J.; Zhang, C.; Duan, Y.; Sun, T.; Wu, M.; Guo, M.; Hua, T.; et al. A fully automated deep-learning model for predicting the molecular subtypes of posterior fossa ependymomas using T2-weighted images. Clin. Cancer Res. 2024, 30, 150–158.

- Safai, A.; Shinde, S.; Jadhav, M.; Chougule, T.; Indoria, A.; Kumar, M.; Santosh, V.; Jabeen, S.; Beniwal, M.; Konar, S.; et al. Developing a radiomics signature for supratentorial extra-ventricular ependymoma using multimodal MR imaging. Front. Neurol. 2021, 12, 648092.

- Lai, J.; Zou, P.; Dalboni da Rocha, J.L.; Heitzer, A.M.; Patni, T.; Li, Y.; Scoggins, M.A.; Sharma, A.; Wang, W.C.; Helton, K.J.; et al. Hydroxyurea maintains working memory function in pediatric sickle cell disease. PLoS ONE 2024, 19, e0296196.

- Abdel-Jaber, H.; Devassy, D.; Al Salam, A.; Hidaytallah, L.; EL-Amir, M. A review of deep learning algorithms and their applications in healthcare. Algorithms 2022, 15, 71.

- Deepak, S.; Ameer, P.M. Brain tumor classification using deep CNN features via transfer learning. Comput. Biol. Med. 2019, 111, 103345.

- Ning, Z.; Luo, J.; Xiao, Q.; Cai, L.; Chen, Y.; Yu, X.; Wang, J.; Zhang, Y. Multi-modal magnetic resonance imaging-based grading analysis for gliomas by integrating radiomics and deep features. Ann. Transl. Med. 2021, 9, 298.

- Raza, A.; Ayub, H.; Khan, J.A.; Ahmad, I.; Salama, S.; Daradkeh, Y.I.; Javeed, D.; Rehman, A.U.; Hamam, H. A hybrid deep learning-based approach for brain tumor classification. Electronics 2022, 11, 1146.

- Zou, P.; Conklin, H.M.; Scoggins, M.A.; Li, Y.; Li, X.; Jones, M.M.; Palmer, S.L.; Gajjar, A.; Ogg, R.J. Functional MRI in medulloblastoma survivors supports prophylactic reading intervention during tumor treatment. Brain Imaging Behav. 2016, 10, 258–271.

- Dalboni da Rocha, J.L.; Zou Stinnett, P.; Scoggins, M.A.; McAfee, S.S.; Conklin, H.M.; Gajjar, A.; Sitaram, R. Functional MRI assessment of brain activity patterns associated with reading in medulloblastoma survivors. Brain Sci. 2024, 14, 904.

- Svärd, D.; Follin, C.; Fjalldal, S.; Hellerstedt, R.; Mannfolk, P.; Mårtensson, J.; Sundgren, P.; Erfurth, E.M. Cognitive interference processing in adults with childhood craniopharyngioma using functional magnetic resonance imaging. Endocrine 2021, 74, 714–722.

- Shibu, C.J.; Sreedharan, S.; Arun, K.M.; Kesavadas, C.; Sitaram, R. Explainable artificial intelligence model to predict brain states from fNIRS signals. Front. Hum. Neurosci. 2023, 16, 1029784.

- Budnick, H.C.; Baygani, S.; Easwaran, T.; Vortmeyer, A.; Jea, A.; Desai, V.; Raskin, J. Predictors of seizure freedom in pediatric low-grade gliomas. Cureus 2022, 14, e31915.

- Nune, G.; DeGiorgio, C.; Heck, C. Neuromodulation in the treatment of epilepsy. Curr. Treat. Options Neurol. 2015, 17, 43.

- Pineau, J.; Guex, A.; Vincent, R.; Panuccio, G.; Avoli, M. Treating epilepsy via adaptive neurostimulation: A reinforcement learning approach. Int. J. Neural Syst. 2009, 19, 227–240.

- Azriel, R.; Hahn, C.D.; Cooman, T.D.; Van Huffel, S.; Payne, E.T.; McBain, K.L.; Eytan, D.; Behar, J.A. Machine learning to support triage of children at risk for epileptic seizures in the pediatric intensive care unit. Physiol. Meas. 2022, 43, 095003.

- Chen, H.; Wang, Y.; Ji, T.; Jiang, Y.; Zhou, X.H. Brain functional connectivity-based prediction of vagus nerve stimulation efficacy in pediatric pharmacoresistant epilepsy. CNS Neurosci. Ther. 2023, 29, 3259–3268.

- Rana, M.; Gupta, N.; Dalboni da Rocha, J.L.; Lee, S.; Sitaram, R. A toolbox for real-time subject-independent and subject-dependent classification of brain states from fMRI signals. Front. Neurosci. 2013, 7, 170.

- Kim, S.; Dalboni da Rocha, J.L.; Birbaumer, N.; Sitaram, R. Self-regulation of the posterior–frontal brain activity with real-time fMRI neurofeedback to influence perceptual discrimination. Brain Sci. 2024, 14, 713.

- Ponce, H.; Martínez-Villaseñor, L.; Chen, Y. Editorial: Artificial intelligence in brain-computer interfaces and neuroimaging for neuromodulation and neurofeedback. Front. Neurosci. 2022, 16, 974269.

- Jang, B.S.; Jeon, S.H.; Kim, I.H.; Kim, I.A. Prediction of pseudoprogression versus progression using machine learning algorithm in glioblastoma. Sci. Rep. 2018, 8, 12516.

- Kim, J.Y.; Park, J.E.; Jo, Y.; Shim, W.H.; Nam, S.J.; Kim, J.H.; Yoo, R.E.; Choi, S.H.; Kim, H.S. Incorporating diffusion- and perfusion-weighted MRI into a radiomics model improves diagnostic performance for pseudoprogression in glioblastoma patients. Neuro Oncol. 2019, 21, 404–414.

- Liu, Z.M.; Zhang, H.; Ge, M.; Hao, X.L.; An, X.; Tian, Y.J. Radiomics signature for the prediction of progression-free survival and radiotherapeutic benefits in pediatric medulloblastoma. Childs Nerv. Syst. 2022, 38, 1085–1094.

- Luo, Y.; Zhuang, Y.; Xiao, Q.; Cai, L.; Chen, Y.; Yu, X.; Wang, J.; Zhang, Y. Multiparametric MRI-based radiomics signature with machine learning for preoperative prediction of prognosis stratification in pediatric medulloblastoma. Acad. Radiol. 2023, 31, 1629–1642.

- Ashry, E.R.; Maghraby, F.A.; El-Latif, Y.M.A.; Agag, M. Pediatric Posterior Fossa Tumors Classification and Explanation-Driven with Explainable Artificial Intelligence Models. Int. J. Comput. Intell. Syst. 2024, 17, 166.

- Kumarakulasinghe, N.B.; Blomberg, T.; Liu, J.; Leao, A.S.; Papapetrou, P. Evaluating Local Interpretable Model-Agnostic Explanations on Clinical Machine Learning Classification Models. In Proceedings of the 2020 IEEE 33rd International Symposium on Computer-Based Medical Systems (CBMS), Rochester, MN, USA, 28–30 July 2020; pp. 7–12.

- Mosca, E.; Szigeti, F.; Tragianni, S.; Gallagher, D.; Groh, G.L. SHAP-Based Explanation Methods: A Review for NLP Interpretability. In Proceedings of the International Conference on Computational Linguistics 2022, Gyeongju, Republic of Korea, 12–17 October 2022.

- Esmaeili, M.; Vettukattil, R.; Banitalebi, H.; Krogh, N.R.; Geitung, J.T. Explainable artificial intelligence for human-machine interaction in brain tumor localization. J. Pers. Med. 2021, 11, 1213.

- Zeineldin, R.A.; Karar, M.E.; Elshaer, Z.; Coburger, J.; Wirtz, C.R.; Burgert, O.; Mathis-Ullrich, F. Explainability of deep neural networks for MRI analysis of brain tumors. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1673–1683.

- Alkhalaf, S.; Alturise, F.; Bahaddad, A.A.; Elnaim, B.M.E.; Shabana, S.; Abdel-Khalek, S.; Mansour, R.F. Adaptive Aquila Optimizer with explainable artificial intelligence-enabled cancer diagnosis on medical imaging. Cancers 2023, 15, 1492.

- Friston, K.J. Statistical Parametric Mapping. In Neuroscience Databases; Kötter, R., Ed.; Springer: Boston, MA, USA, 2003.

- Smith, S.M.; Jenkinson, M.; Woolrich, M.W.; Beckmann, C.F.; Behrens, T.E.; Johansen-Berg, H.; Bannister, P.R.; De Luca, M.; Drobnjak, I.; Flitney, D.E.; et al. Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage 2004, 23 (Suppl. S1), S208–S219.

- Fischl, B. FreeSurfer. NeuroImage 2012, 62, 774–781.

- Cheng, A.S.; Guan, Q.; Su, Y.; Zhou, P.; Zeng, Y. Integration of machine learning and blockchain technology in the healthcare field: A literature review and implications for cancer care. Asia-Pac. J. Oncol. Nurs. 2021, 8, 720–724.

- Dalboni da Rocha, J.L.; Coutinho, G.; Bramati, I.; Moll, F.T.; Sitaram, R. Multilevel diffusion tensor imaging classification technique for characterizing neurobehavioral disorders. Brain Imaging Behav. 2020, 14, 641–652.

- Zurita, M.; Montalba, C.; Labbé, T.; Cruz, J.P.; Dalboni da Rocha, J.; Tejos, C.; Ciampi, E.; Cárcamo, C.; Sitaram, R.; Uribe, S. Characterization of relapsing-remitting multiple sclerosis patients using support vector machine classifications of functional and diffusion MRI data. Neuroimage Clin. 2018, 20, 724–732.

| Study | Dataset Size | Performance |
|---|---|---|
| [102] | Training: 184 samples; testing: 100 samples | Dice coefficient: 0.877 |
| [103] | Training: 261 samples; testing: 112 samples | Dice coefficient: 0.657–0.967 |
| [104] | Training: 261 samples; testing: 30 samples | Dice coefficient: 0.642 |
| [105] | Training: 364 samples; testing: 125 samples | Dice coefficient: 0.98 |
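The Dice coefficient reported in the table above measures voxel overlap between a predicted segmentation and a reference mask, Dice = 2|P ∩ T| / (|P| + |T|), where P and T are the voxels labeled as tumor in each mask; it ranges from 0 (no overlap) to 1 (perfect agreement). The following is a minimal Python sketch, assuming binary masks that have already been flattened to equal-length 1D sequences; the function name and the convention of returning 1.0 when both masks are empty are illustrative assumptions, not the evaluation code of the cited studies.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice similarity between two flattened binary masks (1 = tumor voxel).

    Dice = 2 * |P intersect T| / (|P| + |T|).
    """
    if len(pred) != len(truth):
        raise ValueError("masks must contain the same number of voxels")
    # Voxels labeled tumor in both the prediction and the reference.
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    # Total tumor voxels across both masks.
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:
        # Assumed convention: two empty masks are treated as perfect agreement.
        return 1.0
    return 2.0 * intersection / total
```

For example, `dice_coefficient([0, 1, 1, 1, 0, 0], [0, 1, 1, 0, 1, 0])` shares 2 tumor voxels out of 3 + 3, giving 4/6 ≈ 0.667. In practice, 3D masks would be flattened (or compared with an array library) before applying the same formula.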

| Key Issue | Description | Machine Learning Solutions |
|---|---|---|
| Limited pediatric data | Scarcity of large, high-quality datasets impedes AI model training. | Transfer learning, federated learning, GANs for data augmentation |
| Motion artifacts in young patients | Children struggle to stay still during scans, leading to degraded image quality. | AI-based motion correction, CNNs for image denoising |
| Prolonged scan times | Long MRI scans increase discomfort and often require sedation. | Compressed sensing, AI-accelerated MRI reconstruction (e.g., deep CNNs) |
| Radiation and contrast agent exposure | Pediatric patients are more sensitive to radiation and contrast agents. | Low-dose imaging with deep learning, contrast-free MRI enhancement with CNNs |
| Tumor detection and segmentation | Early and precise tumor localization is essential for treatment planning. | CNNs (3D-CNN, ResNet, U-Net, nnU-Net), GANs for data augmentation |
| Tumor classification and molecular subtyping | Differentiating between tumor types and predicting molecular markers. | Radiomics, radiogenomics, CNNs, SVMs, deep learning classifiers |
| Functional neuroimaging and cognitive deficits | Pediatric brain tumors can affect cognitive function, which is assessed with fMRI and other functional imaging methods. | AI-based functional MRI analysis, machine learning for cognitive pattern recognition (e.g., SVM, CNNs) |
| Explainability and interpretability of AI | Clinicians need interpretable AI models for trust and adoption. | Explainable AI (XAI), SHAP, LIME, Grad-CAM |
| Personalized treatment planning | Individualized therapy selection based on imaging and genetic markers. | Multi-modal deep learning (integrating MRI, PET, clinical data) |
| Predicting treatment outcomes and survival | AI models need to distinguish true tumor progression from pseudoprogression. | Radiomic feature analysis, deep learning prognostic models, survival prediction frameworks |
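Federated learning, listed above as one response to limited pediatric data, lets several institutions train a shared model without pooling patient images. The following is a minimal sketch of the server-side aggregation step of federated averaging (FedAvg), in which each site's locally trained parameters are averaged with weights proportional to that site's sample count. Representing parameters as plain Python lists keyed by name, and the function name `federated_average`, are hypothetical simplifications; real frameworks operate on tensors and add communication, security, and local-training steps not shown here.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def federated_average(client_params: List[Params], client_sizes: List[int]) -> Params:
    """One FedAvg aggregation round: sample-size-weighted mean of client parameters."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    averaged: Params = {}
    for name, first in client_params[0].items():
        acc = [0.0] * len(first)
        for params, n in zip(client_params, client_sizes):
            values = params[name]
            if len(values) != len(first):
                raise ValueError(f"shape mismatch for parameter {name!r}")
            # Each site contributes in proportion to its share of the samples.
            for i, v in enumerate(values):
                acc[i] += v * n / total
        averaged[name] = acc
    return averaged
```

For example, two sites with 100 and 300 cases holding `{"w": [1.0, 2.0]}` and `{"w": [3.0, 4.0]}` yield `{"w": [2.5, 3.5]}`. Weighting by sample count keeps a small site from pulling the shared model as strongly as a large one, while only model parameters, never images, leave each institution.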

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dalboni da Rocha, J.L.; Lai, J.; Pandey, P.; Myat, P.S.M.; Loschinskey, Z.; Bag, A.K.; Sitaram, R. Artificial Intelligence for Neuroimaging in Pediatric Cancer. Cancers 2025, 17, 622. https://doi.org/10.3390/cancers17040622

Dalboni da Rocha JL, Lai J, Pandey P, Myat PSM, Loschinskey Z, Bag AK, Sitaram R. Artificial Intelligence for Neuroimaging in Pediatric Cancer. Cancers. 2025; 17(4):622. https://doi.org/10.3390/cancers17040622

Chicago/Turabian Style: Dalboni da Rocha, Josue Luiz, Jesyin Lai, Pankaj Pandey, Phyu Sin M. Myat, Zachary Loschinskey, Asim K. Bag, and Ranganatha Sitaram. 2025. "Artificial Intelligence for Neuroimaging in Pediatric Cancer" Cancers 17, no. 4: 622. https://doi.org/10.3390/cancers17040622

APA Style: Dalboni da Rocha, J. L., Lai, J., Pandey, P., Myat, P. S. M., Loschinskey, Z., Bag, A. K., & Sitaram, R. (2025). Artificial Intelligence for Neuroimaging in Pediatric Cancer. Cancers, 17(4), 622. https://doi.org/10.3390/cancers17040622