Artificial Intelligence in the Diagnosis of Oral Diseases: Applications and Pitfalls

Abstract

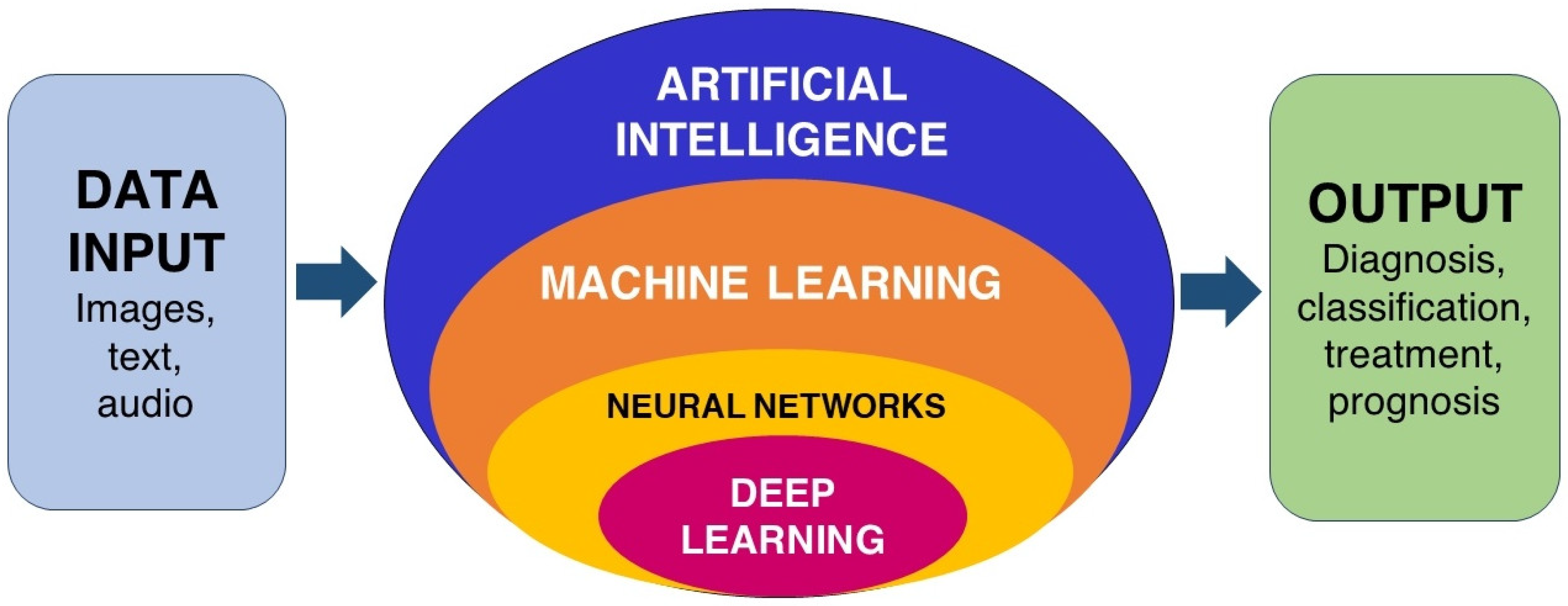

1. Introduction

2. Search Strategy

3. Dental Caries

4. Tooth Fracture

5. Periodontal Diseases

6. Maxillary Sinus Diseases

7. Salivary Gland Diseases

8. Temporomandibular Joint Disorders

9. Osteoporosis

10. Oral Cancer and Cervical Lymph Node Metastasis

11. Prospects and Challenges

12. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

1. Ensmenger, N.; Nilsson, N.J. The Quest for Artificial Intelligence: A History of Ideas and Achievements. Xv + 562 pp., Index; Cambridge University Press: Cambridge, MA, USA; New York, NY, USA, 2010; Volume 102, pp. 588–589.

2. Turing, A.M. On Computable Numbers, with an Application to the Entscheidungsproblem; London Mathematical Society: London, UK, 1937; Volume s2-42, pp. 230–265.

3. Newell, A.; Simon, H.A. Computer Science as Empirical Inquiry. Commun. ACM 1976, 19, 113–126.

4. Khanagar, S.B.; Al-Ehaideb, A.; Maganur, P.C.; Vishwanathaiah, S.; Patil, S.; Baeshen, H.A.; Sarode, S.C.; Bhandi, S. Developments, Application, and Performance of Artificial Intelligence in Dentistry—A Systematic Review. J. Dent. Sci. 2021, 16, 508–522.

5. Bowling, M.; Fürnkranz, J.; Graepel, T.; Musick, R. Machine Learning and Games. Mach. Learn. 2006, 63, 211–215.

6. Park, W.J.; Park, J.B. History and Application of Artificial Neural Networks in Dentistry. Eur. J. Dent. 2018, 12, 594–601.

7. Hinton, G.E.; Osindero, S.; Teh, Y.W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006, 18, 1527–1554.

8. Sarker, I.H. Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications, and Research Directions. SN Comput. Sci. 2021, 2, 420.

9. Jung, S.K.; Kim, T.W. New Approach for the Diagnosis of Extractions with Neural Network Machine Learning. Am. J. Orthod. Dentofac. Orthop. 2016, 149, 127–133.

10. Niño-Sandoval, T.C.; Perez, S.V.G.; González, F.A.; Jaque, R.A.; Infante-Contreras, C. An Automatic Method for Skeletal Patterns Classification Using Craniomaxillary Variables on a Colombian Population. Forensic Sci. Int. 2016, 261, 159.e1–159.e6.

11. Niño-Sandoval, T.C.; Pérez, S.V.G.; González, F.A.; Jaque, R.A.; Infante-Contreras, C. Use of Automated Learning Techniques for Predicting Mandibular Morphology in Skeletal Class I, II and III. Forensic Sci. Int. 2017, 281, 187.e1–187.e7.

12. Saghiri, M.A.; Garcia-Godoy, F.; Gutmann, J.L.; Lotfi, M.; Asgar, K. The Reliability of Artificial Neural Network in Locating Minor Apical Foramen: A Cadaver Study. J. Endod. 2012, 38, 1130–1134.

13. Saghiri, M.A.; Asgar, K.; Boukani, K.K.; Lotfi, M.; Aghili, H.; Delvarani, A.; Karamifar, K.; Saghiri, A.M.; Mehrvarzfar, P.; Garcia-Godoy, F. A New Approach for Locating the Minor Apical Foramen Using an Artificial Neural Network. Int. Endod. J. 2012, 45, 257–265.

14. Chen, Q.; Wu, J.; Li, S.; Lyu, P.; Wang, Y.; Li, M. An Ontology-Driven, Case-Based Clinical Decision Support Model for Removable Partial Denture Design. Sci. Rep. 2016, 6, 27855.

15. Li, H.; Lai, L.; Chen, L.; Lu, C.; Cai, Q. The Prediction in Computer Color Matching of Dentistry Based on GA+BP Neural Network. Comput. Math. Methods Med. 2015, 2015, 816719.

16. Aliaga, I.J.; Vera, V.; de Paz, J.F.; García, A.E.; Mohamad, M.S. Modelling the Longevity of Dental Restorations by Means of a CBR System. BioMed Res. Int. 2015, 2015, 540306.

17. Thanathornwong, B.; Suebnukarn, S.; Ouivirach, K. Decision Support System for Predicting Color Change after Tooth Whitening. Comput. Methods Programs Biomed. 2016, 125, 88–93.

18. Ozden, F.O.; Özgönenel, O.; Özden, B.; Aydogdu, A. Diagnosis of Periodontal Diseases Using Different Classification Algorithms: A Preliminary Study. Niger. J. Clin. Pract. 2015, 18, 416.

19. Nakano, Y.; Suzuki, N.; Kuwata, F. Predicting Oral Malodour Based on the Microbiota in Saliva Samples Using a Deep Learning Approach. BMC Oral Health 2018, 18, 128.

20. Dar-Odeh, N.S.; Alsmadi, O.M.; Bakri, F.; Abu-Hammour, Z.; Shehabi, A.A.; Al-Omiri, M.K.; Abu-Hammad, S.M.K.; Al-Mashni, H.; Saeed, M.B.; Muqbil, W.; et al. Predicting Recurrent Aphthous Ulceration Using Genetic Algorithms-Optimized Neural Networks. Adv. Appl. Bioinform. Chem. 2010, 3, 7.

21. Kositbowornchai, S.; Plermkamon, S.; Tangkosol, T. Performance of an Artificial Neural Network for Vertical Root Fracture Detection: An Ex Vivo Study. Dent. Traumatol. 2013, 29, 151–155.

22. de Bruijn, M.; ten Bosch, L.; Kuik, D.J.; Langendijk, J.A.; Leemans, C.R.; de Leeuw, I.V. Artificial Neural Network Analysis to Assess Hypernasality in Patients Treated for Oral or Oropharyngeal Cancer. Logop. Phoniatr. Vocol. 2011, 36, 168–174.

23. Chang, S.W.; Abdul-Kareem, S.; Merican, A.F.; Zain, R.B. Oral Cancer Prognosis Based on Clinicopathologic and Genomic Markers Using a Hybrid of Feature Selection and Machine Learning Methods. BMC Bioinform. 2013, 14, 170.

24. Pethani, F. Promises and Perils of Artificial Intelligence in Dentistry. Aust. Dent. J. 2021, 66, 124–135.

25. Shan, T.; Tay, F.R.; Gu, L. Application of Artificial Intelligence in Dentistry. J. Dent. Res. 2021, 100, 232–244.

26. Devito, K.L.; de Souza Barbosa, F.; Filho, W.N.F. An Artificial Multilayer Perceptron Neural Network for Diagnosis of Proximal Dental Caries. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endodontol. 2008, 106, 879–884.

27. Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Detection and Diagnosis of Dental Caries Using a Deep Learning-Based Convolutional Neural Network Algorithm. J. Dent. 2018, 77, 106–111.

28. Hung, M.; Voss, M.W.; Rosales, M.N.; Li, W.; Su, W.; Xu, J.; Bounsanga, J.; Ruiz-Negrón, B.; Lauren, E.; Licari, F.W. Application of Machine Learning for Diagnostic Prediction of Root Caries. Gerodontology 2019, 36, 395–404.

29. Casalegno, F.; Newton, T.; Daher, R.; Abdelaziz, M.; Lodi-Rizzini, A.; Schürmann, F.; Krejci, I.; Markram, H. Caries Detection with Near-Infrared Transillumination Using Deep Learning. J. Dent. Res. 2019, 98, 1227–1233.

30. Cantu, A.G.; Gehrung, S.; Krois, J.; Chaurasia, A.; Rossi, J.G.; Gaudin, R.; Elhennawy, K.; Schwendicke, F. Detecting Caries Lesions of Different Radiographic Extension on Bitewings Using Deep Learning. J. Dent. 2020, 100, 103425.

31. Park, Y.H.; Kim, S.H.; Choi, Y.Y. Prediction Models of Early Childhood Caries Based on Machine Learning Algorithms. Int. J. Environ. Res. Public Health 2021, 18, 8613.

32. Paniagua, B.; Shah, H.; Hernandez-Cerdan, P.; Budin, F.; Chittajallu, D.; Walter, R.; Mol, A.; Khan, A.; Vimort, J.-B. Automatic Quantification Framework to Detect Cracks in Teeth. Proc. SPIE Int. Soc. Opt. Eng. 2018, 10578, 105781K.

33. Fukuda, M.; Inamoto, K.; Shibata, N.; Ariji, Y.; Yanashita, Y.; Kutsuna, S.; Nakata, K.; Katsumata, A.; Fujita, H.; Ariji, E. Evaluation of an Artificial Intelligence System for Detecting Vertical Root Fracture on Panoramic Radiography. Oral Radiol. 2019, 36, 337–343.

34. Danks, R.P.; Bano, S.; Orishko, A.; Tan, H.J.; Moreno Sancho, F.; D’Aiuto, F.; Stoyanov, D. Automating Periodontal Bone Loss Measurement via Dental Landmark Localisation. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 1189–1199.

35. Tonetti, M.S.; Greenwell, H.; Kornman, K.S. Staging and Grading of Periodontitis: Framework and Proposal of a New Classification and Case Definition. J. Periodontol. 2018, 89 (Suppl. 1), S159–S172.

36. Chang, H.J.; Lee, S.J.; Yong, T.H.; Shin, N.Y.; Jang, B.G.; Kim, J.E.; Huh, K.H.; Lee, S.S.; Heo, M.S.; Choi, S.C.; et al. Deep Learning Hybrid Method to Automatically Diagnose Periodontal Bone Loss and Stage Periodontitis. Sci. Rep. 2020, 10, 7531.

37. Murata, M.; Ariji, Y.; Ohashi, Y.; Kawai, T.; Fukuda, M.; Funakoshi, T.; Kise, Y.; Nozawa, M.; Katsumata, A.; Fujita, H.; et al. Deep-Learning Classification Using Convolutional Neural Network for Evaluation of Maxillary Sinusitis on Panoramic Radiography. Oral Radiol. 2018, 35, 301–307.

38. Kim, Y.; Lee, K.J.; Sunwoo, L.; Choi, D.; Nam, C.M.; Cho, J.; Kim, J.; Bae, Y.J.; Yoo, R.E.; Choi, B.S.; et al. Deep Learning in Diagnosis of Maxillary Sinusitis Using Conventional Radiography. Investig. Radiol. 2019, 54, 7–15.

39. Kuwana, R.; Ariji, Y.; Fukuda, M.; Kise, Y.; Nozawa, M.; Kuwada, C.; Muramatsu, C.; Katsumata, A.; Fujita, H.; Ariji, E. Performance of Deep Learning Object Detection Technology in the Detection and Diagnosis of Maxillary Sinus Lesions on Panoramic Radiographs. Dentomaxillofacial Radiol. 2021, 50, 20200171.

40. Hung, K.F.; Ai, Q.Y.H.; King, A.D.; Bornstein, M.M.; Wong, L.M.; Leung, Y.Y. Automatic Detection and Segmentation of Morphological Changes of the Maxillary Sinus Mucosa on Cone-Beam Computed Tomography Images Using a Three-Dimensional Convolutional Neural Network. Clin. Oral Investig. 2022, online ahead of print.

41. Kise, Y.; Ikeda, H.; Fujii, T.; Fukuda, M.; Ariji, Y.; Fujita, H.; Katsumata, A.; Ariji, E. Preliminary Study on the Application of Deep Learning System to Diagnosis of Sjögren’s Syndrome on CT Images. Dentomaxillofacial Radiol. 2019, 48, 48.

42. López-Janeiro, Á.; Cabañuz, C.; Blasco-Santana, L.; Ruiz-Bravo, E. A Tree-Based Machine Learning Model to Approach Morphologic Assessment of Malignant Salivary Gland Tumors. Ann. Diagn. Pathol. 2022, 56, 151869.

43. De Felice, F.; Valentini, V.; de Vincentiis, M.; di Gioia, C.R.T.; Musio, D.; Tummulo, A.A.; Ricci, L.I.; Converti, V.; Mezi, S.; Messineo, D.; et al. Prediction of Recurrence by Machine Learning in Salivary Gland Cancer Patients After Adjuvant (Chemo)Radiotherapy. In Vivo 2021, 35, 3355–3360.

44. Chiesa-Estomba, C.M.; Echaniz, O.; Sistiaga Suarez, J.A.; González-García, J.A.; Larruscain, E.; Altuna, X.; Medela, A.; Graña, M. Machine Learning Models for Predicting Facial Nerve Palsy in Parotid Gland Surgery for Benign Tumors. J. Surg. Res. 2021, 262, 57–64.

45. Radke, J.C.; Ketcham, R.; Glassman, B.; Kull, R.S. Artificial Neural Network Learns to Differentiate Normal TMJs and Nonreducing Displaced Disks after Training on Incisor-Point Chewing Movements. Cranio J. Craniomandib. Pract. 2003, 21, 259–264.

46. Bas, B.; Ozgonenel, O.; Ozden, B.; Bekcioglu, B.; Bulut, E.; Kurt, M. Use of Artificial Neural Network in Differentiation of Subgroups of Temporomandibular Internal Derangements: A Preliminary Study. J. Oral Maxillofac. Surg. 2012, 70, 51–59.

47. Iwasaki, H. Bayesian Belief Network Analysis Applied to Determine the Progression of Temporomandibular Disorders Using MRI. Dentomaxillofacial Radiol. 2015, 44, 20140279.

48. Choi, E.; Kim, D.; Lee, J.Y.; Park, H.K. Artificial Intelligence in Detecting Temporomandibular Joint Osteoarthritis on Orthopantomogram. Sci. Rep. 2021, 11, 10246.

49. Orhan, K.; Driesen, L.; Shujaat, S.; Jacobs, R.; Chai, X. Development and Validation of a Magnetic Resonance Imaging-Based Machine Learning Model for TMJ Pathologies. BioMed Res. Int. 2021, 2021, 6656773.

50. de Lima, E.D.; Paulino, J.A.S.; de Farias Freitas, A.P.L.; Ferreira, J.E.V.; da Silva Barbosa, J.; Silva, D.F.B.; Bento, P.M.; Araújo Maia Amorim, A.M.; Melo, D.P. Artificial Intelligence and Infrared Thermography as Auxiliary Tools in the Diagnosis of Temporomandibular Disorder. Dentomaxillofacial Radiol. 2022, 51, 20210318.

51. Taguchi, A.; Ohtsuka, M.; Nakamoto, T.; Naito, K.; Tsuda, M.; Kudo, Y.; Motoyama, E.; Suei, Y.; Tanimoto, K. Identification of Post-Menopausal Women at Risk of Osteoporosis by Trained General Dental Practitioners Using Panoramic Radiographs. Dentomaxillofacial Radiol. 2014, 36, 149–154.

52. Okabe, S.; Morimoto, Y.; Ansai, T.; Yoshioka, I.; Tanaka, T.; Taguchi, A.; Kito, S.; Wakasugi-Sato, N.; Oda, M.; Kuroiwa, H.; et al. Assessment of the Relationship between the Mandibular Cortex on Panoramic Radiographs and the Risk of Bone Fracture and Vascular Disease in 80-Year-Olds. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endod. 2008, 106, 433–442.

53. Klemetti, E.; Kolmakov, S.; Kröger, H. Pantomography in Assessment of the Osteoporosis Risk Group. Eur. J. Oral Sci. 1994, 102, 68–72.

54. Taguchi, A.; Suei, Y.; Ohtsuka, M.; Otani, K.; Tanimoto, K.; Ohtaki, M. Usefulness of Panoramic Radiography in the Diagnosis of Postmenopausal Osteoporosis in Women. Width and Morphology of Inferior Cortex of the Mandible. Dentomaxillofacial Radiol. 2014, 25, 263–267.

55. Lee, J.-S.; Adhikari, S.; Liu, L.; Jeong, H.-G.; Kim, H.; Yoon, S.-J. Osteoporosis Detection in Panoramic Radiographs Using a Deep Convolutional Neural Network-Based Computer-Assisted Diagnosis System: A Preliminary Study. Dentomaxillofacial Radiol. 2019, 48, 20170344.

56. Lee, K.S.; Jung, S.K.; Ryu, J.J.; Shin, S.W.; Choi, J. Evaluation of Transfer Learning with Deep Convolutional Neural Networks for Screening Osteoporosis in Dental Panoramic Radiographs. J. Clin. Med. 2020, 9, 392.

57. Cancer. Available online: https://www.who.int/news-room/fact-sheets/detail/cancer (accessed on 31 January 2022).

58. Nayak, G.S.; Kamath, S.; Pai, K.M.; Sarkar, A.; Ray, S.; Kurien, J.; D’Almeida, L.; Krishnanand, B.R.; Santhosh, C.; Kartha, V.B.; et al. Principal Component Analysis and Artificial Neural Network Analysis of Oral Tissue Fluorescence Spectra: Classification of Normal Premalignant and Malignant Pathological Conditions. Biopolymers 2006, 82, 152–166.

59. Uthoff, R.D.; Song, B.; Sunny, S.; Patrick, S.; Suresh, A.; Kolur, T.; Keerthi, G.; Spires, O.; Anbarani, A.; Wilder-Smith, P.; et al. Point-of-Care, Smartphone-Based, Dual-Modality, Dual-View, Oral Cancer Screening Device with Neural Network Classification for Low-Resource Communities. PLoS ONE 2018, 13, e0207493.

60. Aubreville, M.; Knipfer, C.; Oetter, N.; Jaremenko, C.; Rodner, E.; Denzler, J.; Bohr, C.; Neumann, H.; Stelzle, F.; Maier, A. Automatic Classification of Cancerous Tissue in Laserendomicroscopy Images of the Oral Cavity Using Deep Learning. Sci. Rep. 2017, 7, 11979.

61. Shams, W.K.; Htike, Z.Z. Oral Cancer Prediction Using Gene Expression Profiling and Machine Learning. Int. J. Appl. Eng. Res. 2017, 12, 4893–4898.

62. Jeyaraj, P.R.; Samuel Nadar, E.R. Computer-Assisted Medical Image Classification for Early Diagnosis of Oral Cancer Employing Deep Learning Algorithm. J. Cancer Res. Clin. Oncol. 2019, 145, 829–837.

63. Kim, D.W.; Lee, S.; Kwon, S.; Nam, W.; Cha, I.H.; Kim, H.J. Deep Learning-Based Survival Prediction of Oral Cancer Patients. Sci. Rep. 2019, 9, 6994.

64. Alabi, R.O.; Elmusrati, M.; Sawazaki-Calone, I.; Kowalski, L.P.; Haglund, C.; Coletta, R.D.; Mäkitie, A.A.; Salo, T.; Almangush, A.; Leivo, I. Comparison of Supervised Machine Learning Classification Techniques in Prediction of Locoregional Recurrences in Early Oral Tongue Cancer. Int. J. Med. Inform. 2020, 136, 104068.

65. Alhazmi, A.; Alhazmi, Y.; Makrami, A.; Masmali, A.; Salawi, N.; Masmali, K.; Patil, S. Application of Artificial Intelligence and Machine Learning for Prediction of Oral Cancer Risk. J. Oral Pathol. Med. 2021, 50, 444–450.

66. Chu, C.S.; Lee, N.P.; Adeoye, J.; Thomson, P.; Choi, S.W. Machine Learning and Treatment Outcome Prediction for Oral Cancer. J. Oral Pathol. Med. 2020, 49, 977–985.

67. Kirubabai, M.P.; Arumugam, G. Deep Learning Classification Method to Detect and Diagnose the Cancer Regions in Oral MRI Images. Med. Leg. Update 2021, 21, 462–468.

68. Ariji, Y.; Fukuda, M.; Kise, Y.; Nozawa, M.; Yanashita, Y.; Fujita, H.; Katsumata, A.; Ariji, E. Contrast-Enhanced Computed Tomography Image Assessment of Cervical Lymph Node Metastasis in Patients with Oral Cancer by Using a Deep Learning System of Artificial Intelligence. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2019, 127, 458–463.

69. Ariji, Y.; Sugita, Y.; Nagao, T.; Nakayama, A.; Fukuda, M.; Kise, Y.; Nozawa, M.; Nishiyama, M.; Katumata, A.; Ariji, E. CT Evaluation of Extranodal Extension of Cervical Lymph Node Metastases in Patients with Oral Squamous Cell Carcinoma Using Deep Learning Classification. Oral Radiol. 2019, 36, 148–155.

70. General Data Protection Regulation (GDPR)—Official Legal Text. Available online: https://gdpr-info.eu/ (accessed on 28 March 2022).

71. Hulsen, T. Sharing Is Caring—Data Sharing Initiatives in Healthcare. Int. J. Environ. Res. Public Health 2020, 17, 3046.

72. Sun, C.; Ippel, L.; van Soest, J.; Wouters, B.; Malic, A.; Adekunle, O.; van den Berg, B.; Mussmann, O.; Koster, A.; van der Kallen, C.; et al. A Privacy-Preserving Infrastructure for Analyzing Personal Health Data in a Vertically Partitioned Scenario. Stud. Health Technol. Inform. 2019, 264, 373–377.

73. Gaye, A.; Marcon, Y.; Isaeva, J.; Laflamme, P.; Turner, A.; Jones, E.M.; Minion, J.; Boyd, A.W.; Newby, C.J.; Nuotio, M.L.; et al. DataSHIELD: Taking the Analysis to the Data, Not the Data to the Analysis. Int. J. Epidemiol. 2014, 43, 1929–1944.

74. Schwendicke, F.; Samek, W.; Krois, J. Artificial Intelligence in Dentistry: Chances and Challenges. J. Dent. Res. 2020, 99, 769–774.

75. Rodrigues, J.A.; Krois, J.; Schwendicke, F. Demystifying Artificial Intelligence and Deep Learning in Dentistry. Braz. Oral Res. 2021, 35, 1–7.

76. MacHoy, M.E.; Szyszka-Sommerfeld, L.; Vegh, A.; Gedrange, T.; Woźniak, K. The Ways of Using Machine Learning in Dentistry. Adv. Clin. Exp. Med. 2020, 29, 375–384.

| Study | Algorithm Used | Study Factor | Modality | Number of Input Data | Performance | Comparison | Outcome |
|---|---|---|---|---|---|---|---|
| Lee J et al. (2018) [27] | CNN | Dental caries | Periapical radiographs | 600 | Mean AUC 0.890 | 4 dentists | The deep CNN performed considerably well in detecting dental caries on periapical radiographs. |
| Casalegno et al. (2019) [29] | CNN | Dental caries | Near-infrared transillumination imaging | 217 | ROC AUC of 83.6% for occlusal caries; ROC AUC of 84.6% for proximal caries | Dentists with clinical experience | The CNN showed increased speed and accuracy in detecting dental caries. |
| Cantu et al. (2020) [30] | CNN | Dental caries | Bitewing radiographs | 141 | Accuracy 0.80; sensitivity 0.75; specificity 0.83 | 4 experienced dentists | The AI model was more accurate than the dentists. |
| Radke et al. (2003) [45] | ANN | Disk displacement | Frontal-plane jaw recordings during chewing | 68 | Accuracy 86.8%; specificity 100%; sensitivity 91.8% | None | The proposed model has an acceptable level of error and an excellent cost/benefit ratio. |
| Park YH et al. (2021) [31] | ML | Early childhood caries | Demographic details, oral hygiene management details, maternal details | 4195 | AUROC between 0.774 and 0.785 | Traditional regression model | Both the ML-based and the traditional regression models performed favorably and can be used as supporting tools. |
| Kuwana et al. (2021) [39] | CNN | Maxillary sinus lesions | Panoramic radiographs | 1174 | Diagnostic accuracy, sensitivity, and specificity were 90–91%, 81–85%, and 91–96% for maxillary sinusitis and 97–100%, 80–100%, and 100% for maxillary sinus cysts | None | The proposed deep learning model can reliably detect the maxillary sinuses and identify lesions within them. |
| Murata et al. (2018) [37] | CNN | Maxillary sinusitis | Panoramic radiographs | 120 | Accuracy 87.5%; sensitivity 86.7%; specificity 88.3% | 2 experienced radiologists, 2 dental residents | The AI model can be a supporting tool for inexperienced dentists. |
| Kim et al. (2019) [38] | CNN | Maxillary sinusitis | Waters' view radiographs | 200 | AUC of 0.93 on the temporal external test set; AUC of 0.88 on the geographic external test set | 5 radiologists | The AI-based model showed statistically higher performance than the radiologists. |
| Hung KF et al. (2022) [40] | CNN | Maxillary sinusitis | Cone-beam computed tomography (CBCT) | 890 | AUC for detecting mucosal thickening and mucous retention cysts was 0.91 and 0.84 on low-dose scans and 0.89 and 0.93 on high-dose scans | None | The proposed model can accurately detect mucosal thickening and mucous retention cysts on both low- and high-dose CBCT scans. |
| Danks et al. (2021) [34] | DNN (symmetric hourglass architecture) | Periodontal bone loss | Periapical radiographs | 340 | Percentage of Correct Keypoints 83.3% across all root morphologies | Asymmetric hourglass architecture, ResNet | The proposed system showed promising capability in localizing landmarks and measuring periodontal bone loss, performing 1.7% better than the next best architecture. |
| Chang et al. (2020) [36] | CNN | Periodontal bone loss | Panoramic radiographs | 340 | Pixel accuracy 0.93; Jaccard index 0.92; Dice coefficient 0.88 for localization of periodontal bone | None | The proposed model showed high accuracy and excellent reliability in detecting periodontal bone loss and classifying periodontitis. |
| Ozden et al. (2015) [18] | ANN | Periodontal disease | Risk factors, periodontal data, and radiographic bone loss | 150 | Performance of SVM and DT was 98%; ANN was 46% | SVM, DT | SVM and DT performed well in classifying periodontal disease, whereas the ANN performed worst. |
| Devito et al. (2008) [26] | ANN | Proximal caries | Bitewing radiographs | 160 | Area under the ROC curve 0.884 | 25 examiners | The ANN could improve the diagnosis of proximal caries. |
| Dar-Odeh et al. (2010) [20] | ANN | Recurrent aphthous ulceration (RAU) | Predisposing factors and RAU status | 96 | Prediction accuracy 90% for networks 3 and 8; 80% for networks 4, 6, and 9; 70% for networks 1 and 7; 60% for networks 2 and 5 | None | The ANN model appeared to use gender, hematologic and mycologic data, tooth brushing, and fruit and vegetable consumption to predict RAU. |
| Hung M et al. (2019) [28] | CNN | Root caries | Data set | 5135 | Accuracy 97.1%; precision 95.1%; sensitivity 99.6%; specificity 94.3% | Trained medical personnel | The model performed well and can be implemented clinically. |
| Iwasaki et al. (2015) [47] | BBN | Temporomandibular disorders (TMDs) | Magnetic resonance imaging | 590 | Of the 11 BBN algorithms used, path condition achieved an accuracy of >99% with both resubstitution validation and 10-fold cross-validation | Necessary path condition, path condition, greedy search-and-score with Bayesian information criterion, Chow-Liu tree, Rebane-Pearl poly tree, tree augmented naïve Bayes model, maximum log-likelihood, Akaike information criterion, minimum description length, K2 and C4.5 | The proposed model can be used to predict the prognosis of TMDs. |
| Orhan et al. (2021) [49] | ML | Temporomandibular disorders | Magnetic resonance imaging | 214 | Accuracy 0.77 for condylar changes and 0.74 for disk displacement | Logistic regression (LR), random forest (RF), decision tree (DT), k-nearest neighbors (KNN), XGBoost, and support vector machine (SVM) | The KNN and RF models were found to be optimal for predicting TMJ pathologies. |
| Diniz de Lima et al. (2021) [50] | ML | Temporomandibular disorders | Infrared thermography | 74 | Semantic and radiomic-semantic ML feature extraction methods with an MLP classifier showed statistically good performance in detecting TMDs | KNN, SVM, MLP | ML models combined with infrared thermography can be used to detect TMJ pathologies. |
| Bas B et al. (2012) [46] | ANN | TMJ internal derangements | Clinical symptoms and diagnoses | 219 | Sensitivity and specificity were 80% and 95% for unilateral anterior disk displacement with reduction and 69% and 91% without reduction; for bilateral anterior disk displacement they were 37% and 100% with reduction and 100% and 89% without reduction | Experienced surgeon | The developed model can be used as a supportive tool for diagnosing subtypes of TMJ internal derangements. |
| Choi et al. (2021) [48] | CNN | TMJ osteoarthritis | Panoramic radiographs | 1189 | Accuracy 0.78; sensitivity 0.73; specificity 0.82 | Oral and maxillofacial radiologist (OMFR) | The developed model performed on par with experts and can be used in general practices where OMFR experts or CT is not available. |
| Fukuda et al. (2019) [33] | CNN | Vertical root fracture | Panoramic radiographs | 60 | Precision 0.93; recall 0.75 | 2 radiologists and 1 endodontist | The CNN model was a promising supportive tool for detecting vertical root fractures. |

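Many rows in the table above report discrimination as the area under the ROC curve (AUC or AUROC). The sketch below is a minimal illustration, not the evaluation code of any cited study: it uses the rank-based (Mann-Whitney) reading of AUC as the probability that a randomly chosen diseased case receives a higher model score than a randomly chosen healthy case, with ties counted as one half. Unlike accuracy, sensitivity, and specificity, this value does not depend on a single decision threshold. The function name `roc_auc` and the example scores are hypothetical.

```python
from itertools import product


def roc_auc(scores_pos, scores_neg):
    """Rank-based AUC: fraction of (diseased, healthy) pairs in which the
    diseased case scores higher; tied pairs count as 0.5.

    Compares every pair, so it is O(n_pos * n_neg): fine for illustration,
    too slow for large evaluation sets.
    """
    if not scores_pos or not scores_neg:
        raise ValueError("need at least one diseased and one healthy case")
    wins = 0.0
    for p, n in product(scores_pos, scores_neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical model outputs (e.g., predicted probability of caries);
# illustration only, not data from any study in the table.
carious = [0.9, 0.8, 0.65, 0.4]
sound = [0.7, 0.3, 0.2, 0.1]
print(roc_auc(carious, sound))  # 0.875 (14 of 16 pairs ranked correctly)
```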
| Study | Algorithm Used | Study Factor | Modality | Number of Input Data | Performance | Comparison | Outcome |
|---|---|---|---|---|---|---|---|
| Ariji et al. (2019) [69] | CNN | Extranodal extension of cervical lymph node metastases | CT images | 703 | Accuracy 84% | 4 radiologists | The diagnostic performance of the DL model was significantly higher than that of the radiologists. |
| López-Janeiro et al. (2022) [42] | ML | Malignant salivary gland tumors | Primary tumor resection specimens | 115 | 84–89% of the samples were diagnosed correctly | None | The developed model can guide the morphological approach to diagnosing malignant salivary gland tumors. |
| De Felice et al. (2021) [43] | Decision tree | Malignant salivary gland tumors | Age at diagnosis, gender, salivary gland type, histologic type, surgical margin, tumor stage, node stage, lymphovascular/perineural invasion, type of adjuvant treatment | 54 | 5-year disease-free survival was 62.1%. Pathological tumor and node stage were important predictors of recurrence. Based on these variables, patients were partitioned into 3 groups (pN0, pT1-2 pN+, and pT3-4 pN+) with recurrence rates of 26%, 38%, and 75% and disease-free survival rates of 73.7%, 57.1%, and 34.3%, respectively | None | The proposed model can be used to classify patients with salivary gland malignancy and predict the recurrence rate. |
| Ariji et al. (2019) [68] | CNN | Metastasis of cervical lymph nodes | CT images | 441 | Accuracy 78.2%; sensitivity 75.4%; specificity 81.1% | Not clear | The diagnostic performance of the CNN model was similar to that of radiologists. |
| Nayak et al. (2006) [58] | ANN | Normal, premalignant, and malignant conditions | Pulsed laser-induced autofluorescence spectroscopy | Not clear | Specificity 100%; sensitivity 96.5% | Principal component analysis (PCA) | The ANN outperformed PCA in classifying normal, premalignant, and malignant conditions. |
| Shams et al. (2017) [61] | DNN | Oral cancer | Gene expression profiling | 86 | Accuracy 96% | Support vector machine (SVM), regularized least squares (RLS), multilayer perceptron (MLP) with backpropagation | The proposed system showed significantly higher performance and can be easily implemented. |
| Jeyaraj et al. (2019) [62] | CNN | Oral cancer | Hyperspectral images | 600 | Accuracy 91.4% for benign tissue and 94.5% for normal tissue | Support vector machine and deep belief network | The proposed method can be deployed for the automatic classification of |
| Aubreville et al. (2017) [60] | CNN | Oral squamous cell carcinoma | Confocal laser endomicroscopy (CLE) images | 7894 | AUC 0.96; Mean accuracy sensitivity 86.6%; specificity 90% | Not clear | This method appeared to outperform the state-of-the-art CLE recognition system. |
| Uthoff et al. (2018) [59] | CNN | Precancerous and cancerous lesions | Autofluorescence and white-light imaging | 170 | Sensitivity, specificity, positive predictive value, and negative predictive value ranged from 81.25% to 94.94% | None | The proposed model is a low-cost, portable, and easy-to-use system. |

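Most threshold-based figures in both tables (accuracy, sensitivity or recall, specificity, precision or positive predictive value, negative predictive value) are derived from the same four confusion-matrix counts. The minimal sketch below assumes a binary diseased/healthy task; the `ConfusionMatrix` class and the counts are hypothetical and are not taken from any cited study. Two properties help when reading the tables: accuracy is a prevalence-weighted average of sensitivity and specificity, so it always lies between the two, and predictive values depend on disease prevalence in the test set, so they do not transfer directly between populations.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # diseased cases flagged as diseased
    fp: int  # healthy cases flagged as diseased
    tn: int  # healthy cases flagged as healthy
    fn: int  # diseased cases missed by the model

    def metrics(self) -> dict:
        # Assumes every denominator is non-zero (a hypothetical, well-populated test set).
        total = self.tp + self.fp + self.tn + self.fn
        return {
            "accuracy": (self.tp + self.tn) / total,
            "sensitivity": self.tp / (self.tp + self.fn),  # also called recall
            "specificity": self.tn / (self.tn + self.fp),
            "ppv": self.tp / (self.tp + self.fp),  # also called precision
            "npv": self.tn / (self.tn + self.fn),
        }


# Hypothetical counts for illustration only.
cm = ConfusionMatrix(tp=40, fp=10, tn=45, fn=5)
for name, value in cm.metrics().items():
    print(f"{name}: {value:.3f}")
# accuracy: 0.850, sensitivity: 0.889, specificity: 0.818, ppv: 0.800, npv: 0.900
```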
Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Patil, S.; Albogami, S.; Hosmani, J.; Mujoo, S.; Kamil, M.A.; Mansour, M.A.; Abdul, H.N.; Bhandi, S.; Ahmed, S.S.S.J. Artificial Intelligence in the Diagnosis of Oral Diseases: Applications and Pitfalls. Diagnostics 2022, 12, 1029. https://doi.org/10.3390/diagnostics12051029

Patil S, Albogami S, Hosmani J, Mujoo S, Kamil MA, Mansour MA, Abdul HN, Bhandi S, Ahmed SSSJ. Artificial Intelligence in the Diagnosis of Oral Diseases: Applications and Pitfalls. Diagnostics. 2022; 12(5):1029. https://doi.org/10.3390/diagnostics12051029

Chicago/Turabian Style: Patil, Shankargouda, Sarah Albogami, Jagadish Hosmani, Sheetal Mujoo, Mona Awad Kamil, Manawar Ahmad Mansour, Hina Naim Abdul, Shilpa Bhandi, and Shiek S. S. J. Ahmed. 2022. "Artificial Intelligence in the Diagnosis of Oral Diseases: Applications and Pitfalls" Diagnostics 12, no. 5: 1029. https://doi.org/10.3390/diagnostics12051029

APA Style: Patil, S., Albogami, S., Hosmani, J., Mujoo, S., Kamil, M. A., Mansour, M. A., Abdul, H. N., Bhandi, S., & Ahmed, S. S. S. J. (2022). Artificial Intelligence in the Diagnosis of Oral Diseases: Applications and Pitfalls. Diagnostics, 12(5), 1029. https://doi.org/10.3390/diagnostics12051029