Abstract

We developed a deep learning framework to detect and grade knee RA in digital X-radiation images using a consensus-based decision (CBD) grading system. The study aimed to evaluate how effectively a deep learning approach based on artificial intelligence (AI) can find knee RA and determine its severity in digital X-radiation images. The study comprised people over 50 years of age with RA symptoms, such as knee joint pain, stiffness, crepitus, and functional impairments. The digitized X-radiation images were obtained from the BioGPS database repository. We used 3172 digital X-radiation images of the knee joint from an anterior–posterior perspective. The trained Faster-CRNN architecture was used to identify the knee joint space narrowing (JSN) area in digital X-radiation images and extract the features using ResNet-101 with domain adaptation. In addition, we employed another well-trained model (VGG16 with domain adaptation) for knee RA severity classification. Medical experts graded the X-radiation images of the knee joint using a consensus-based decision score. We trained the enhanced-region proposal network (ERPN) using this manually extracted knee area as the test dataset image. An X-radiation image was fed into the final model, and a consensus decision was used to grade the outcome. The presented model correctly identified the marginal knee JSN region with an accuracy of 98.97% and achieved a total knee RA intensity classification accuracy of 99.10%, a sensitivity of 97.3%, a specificity of 98.2%, a precision of 98.1%, and a Dice score of 90.1%, compared with other conventional models.

1. Introduction

Cancer starts when healthy cells in the breast change and grow out of control, forming a mass or sheet of cells called a tumor. A tumor may be benign or cancerous. Malignant means a cancerous tumor can grow and spread to other body parts. If a tumor is benign, it can enlarge but not spread. Radiologists must use a computer-aided detection (CAD) system to differentiate between normal and cancerous cell growth [1]. Arthritis is a form of joint inflammation that causes pain, stiffness, swelling, and limited movement. In India, 15% of the adult population had arthritis in 2015, compared with 22.7% in the United States [2]. According to medical research, there are around 100 different types of arthritis, with rheumatoid arthritis (RA) and osteoarthritis (OA) being the most frequent [3]. Medical imaging is a growing field and has several tools and methods for getting information from medical images. Magnetic resonance imaging (MRI) is one of the most extensively employed imaging techniques. MRI image samples are obtained using Brainix and neuroimaging data sources [4].

Up to 17% of people worldwide have progressive RA, an inflammatory condition. Most of the time, the condition causes inflammation in the joints, which makes them hurt for life, shortens people’s lives, and makes life less enjoyable. Some of the first signs of arthritis are narrowing joint space, breaking cartilage, less synovial fluid, and a torn meniscus. In both men and women, although more typically in middle-aged women, it can also affect organs and systems such as the skin, heart, and lungs [5]. RA is a long-lasting inflammatory disease that painfully destroys joints. Still, measuring how well a therapy works greatly depends on how the patient and the clinician feel about it. Aggressive disease-modifying therapy can alleviate symptoms and avoid irreversible joint deterioration [6]. Patients with RA who are getting good medical care may still develop musculoskeletal problems, such as damage to the knee joints that cannot be fixed. Total knee arthroplasty (TKA) surgeries on RA patients may become more common and have more complications as patients age. Pathogenesis, prognosis, and medical treatment for RA of the knee differ from those for OA of the knee, so the expected results of TKA are also different. The advanced knee damage RA causes is not a local problem, but a sign of a systemic problem. These variables influence the outcomes of TKA [7]. Research into the ways that artificial intelligence (AI), especially machine learning (ML) and deep learning (DL) techniques, could be used in medicine and healthcare is growing quickly. When there is not a good treatment for people with chronic rheumatological disorders such as RA, the second most common autoimmunity, these strategies can be very important for giving good care [8]. RA, an autoimmune disorder, affects between 0.5 and 1 percent of adults globally. The condition is most prevalent between the ages of 50 and 60, and women are twice as likely as men to develop it. 
Although the cause of rheumatoid arthritis is not known, the consequences of this condition can be quite severe [9].

In 2010, the European League Against Rheumatism (EULAR) made its first suggestions about how to treat RA with biological and synthetic disease-modifying antirheumatic drugs (DMARDs). They gave rheumatologists, patients, payers, and other interested parties a summary of what is known now and what European specialists think based on evidence. Targeted synthetic and biologic DMARDs have transformed the treatment of this disorder. However, there are concerns about applying these novel ideas to clinical practice [10]. People with RA can now stop irreversible joint damage and, in some cases, heal damaged joints with the help of new drugs, biologics, and intensive treatment plans. Radiographs may reveal subchondral bone sclerosis and osteophyte formation as injured joints heal. The “evaluation of RA by scoring big joint destruction and healing in radiographic imaging” (ARASHI score) says that these changes, called secondary osteoarthritis (OA), are signs of rheumatoid arthritis. Radiographs include them in the definition of structural remodeling of large joints and recommend them as a way to make large joints more stable [11]. Scientists have used the term “human activity recognition” (HAR) to describe how machines automatically analyze what people do. Healthcare professionals rely heavily on this to promote health and wellness. For example, aged monitoring, exercise supervision, and rehabilitation monitoring all use it. Human activity recognition (HAR) can be used to track and analyze the activity levels of the elderly so that we can detect health issues prematurely [12]. This semi-supervised hierarchical convolutional neural network (SS-HCNN) aims to get around how hard it is to put images into categories and how the annotations limit what can be done. 
The concept is to use unsupervised clustering of the low-level characteristics to divide images into a tree-like structure and then train a tree-like network of convolutional neural networks (CNNs) at the root and parent nodes using the generated cluster labels [13]. This study proposes a probability-based real-parameter encoding operator. The approach also reduces the chromosomal length, saving computation space. The proposed revised GA algorithm passed the two-step validation process. First, a typical DFJSP demonstrated the algorithm’s utility. Second, the algorithm solved a real-world problem. A Taiwanese fastener factory applied historical data to 100 and 200 work orders. The proposed GA method change solved GA encoding problems. They completed the 100 sets of work orders in 102 days and 39 min using the proposed GA, saving 3878 min (150,797 min – 146,919 min = 3878 min) [14]. The author used the 3D articular bone shapes of the hand joints of people with RA and psoriatic arthritis and healthy controls to train a new neural network.

High-resolution peripheral computed tomography (HR-pQCT) data from the head of the second metacarpal bone were used to make the bone. Using GradCAM, it generated heat maps of problem areas. After training, we gave the neural network patterns of arthritis that could not be categorized as RA, PsA, or HC to figure out what kind of arthritis they were. In 932 HR-pQCT images of 617 patients, hand bone shapes were obtained. The network discriminated HC, RA, and PsA with area-under-receiver-operator curves of 82%, 75%, and 68%, respectively. Heat maps showed bare spots and places where ligaments connect that are likely to erode and form bone spurs. Based on joint form, the neural network identified 86% of UA data as “RA,” 11% as “PsA,” and 3% as “HC” [15]. The author came up with a new, simple, and computer-generated method for diagnosing knee RA that is based on deep CNNs and automatically measures the severity of RA in knee joints. This network was trained using around 1650 digital X-radiation images of the knee joint from the Optical Knee X-radiation Images Mendeley Collection. We performed the validation procedure for 20% of the data [16].

One proposed method was based on spatial analysis: the edges of the skin were separated using an intensity-based method, followed by a thresholding algorithm for segmenting bone regions, the hit-or-miss transformation for segmenting bone lines, and a distance measure for detecting the joint region after localization. The synovial region was then identified using the active contour technique. In arthritic situations, synovitis also develops. The authors divided this condition into four types based on how much fluid builds up in the synovial area. The various grades were defined and analyzed using deep learning. A deep learning system used a convolutional neural network to determine the exact grade of synovitis and the type of arthritis. The validation produced an average true-positive percentage of 88.52%, ranging from 78.12% to 98.95%. False positives fell by 1.41 percent. These findings demonstrate that the network efficiently differentiates synovial grades [17]. In a big data set, the machine-learning-based ensemble analytical approach (MLEAA) has two parts: learning and predicting. During the learning phase, Hadoop’s map-reduce technology processes the data, while the highlighted attributes move the prediction stage forward. Three algorithms (AdaBoost, SVM, and ANN) were used in the prediction phase of the proposed MLEAA approach, and the final predictive value was computed by a voting system [18]. Traditional statistical modeling methods exist, but they are limited in how much data they can effectively analyze. It is necessary to create thorough, patient-specific prediction models. Techniques such as data mining and machine learning should be used to help make these kinds of models. Although it will be difficult, current technology should allow for the sub-grouping of patients with OA, which could improve clinical judgment and advance precision medicine [19]. Early knee osteoarthritis detection is presented.
Deep-learning-based feature descriptors computed on X-radiation images are used for this purpose. Training and testing use the Mendeley Dataset VI. A CNN with LBP and HOG used joint space width to obtain the proposed model features from the region of interest. KOA was classified using KL, SVM, RF, and KNN. Images were five-fold validated and cross-validated. The method achieved 97.14% cross-validation and 98% five-fold validation accuracy. In the future, it will be possible to combine the proposed method with other models to find diseases above and below the knee in a more elaborate way. Feature fusion can detect and classify different diseases [20]. Another model contained both a joint-detection step and a joint-evaluation step. There were 216 radiographs taken from 108 RA patients, with 186 assigned to the training/validation dataset and 30 to the test dataset. In the training/validation dataset, images of the PIP joints, the thumb’s IP joint, and the MCP joints were manually cropped, evaluated by clinicians for joint space narrowing (JSN), and then augmented. To train and test a deep convolutional neural network for joint evaluation, 11,160 images were used. The joint detection machine learning system was trained using 3720 carefully selected images. Combining these two steps produced a model for assessing the severity of radiographic finger joint damage. With a sensitivity of 95.3%, the model estimated JSN and erosion for the PIP, thumb IP, and MCP joints. The accuracy range was 49.6–65.4 percent for JSN and 70–74.1 percent for erosion. The correlation between model and clinician scores per image was 0.72 to 0.88 for JSN and 0.54 to 0.56 for erosion [21].

The proposed system architecture was made up of a CNN layer and a multilayer-based metadata learning layer, chosen so that the information was reliable. Sparse coding estimates and metadata-based vector encoding were used for the additional dimension. To keep the geometric format of supervised data [22], a well-structured k-neighbored network was used to build nearby limitation atoms. Another study proposes SVM-based detection of finger joints and mTS score estimation. Using X-radiation images of 45 RA patients, the suggested approach recognized finger joints with 81.4% accuracy and evaluated erosion and the JSN score with 50.9% and 64.4% accuracy, respectively [23]. The proposed model scored JSN and erosion for PIP, thumb IP, and MCP joints with 95.3% sensitivity. The accuracy range was 49.6–65.4% for JSN and 70–74.1% for erosion. The model and clinician scores per image were correlated at 0.72–0.88 for JSN and 0.54–0.75 for erosion [24]. The accuracy of the modified pre-trained GoogleNet model was 89%, whereas that of the proposed custom model was 95%. GoogleNet had a sensitivity of 84% and a specificity of 90%. Model number three was 95% sensitive and 94% specific. When features extracted from customized models (SIFT + CNN) were compared with those from ML classifiers, the custom3 model performed better [25]. The suggested method was compared with existing fuzzy clustering methods to show how well it works. The support vector machine (SVM), Decision Tree (DT), rough set data analysis (RSDA), and fuzzy-SVM classification algorithms were compared to find the best way to group things [26]. The authors aim to create an AI-based computer-aided diagnosis tool that can classify abnormalities by reading chest X-radiation images and help doctors make an accurate diagnosis quickly. A Google-created convolutional neural network (CNN) called XceptionNet was used to find those pathologies in the ChestX-ray14 data.
Additionally, the same data are being used to run other CNN-ResNet algorithms [27]. At the 100th training iteration, the mean square error and the false recognition rate dropped below 1.1%, suggesting that the LPRNN was trained correctly. Edge preservation index values were above the experimental threshold of 0.48, signal-to-noise ratios (SNRs) were greater than 65 dB, peak SNR ratios were greater than 70 dB, and destruction times were faster [28]. Principal Component Analysis improved characteristics, while the Co-Active Adaptive Neuro-Fuzzy Expert System sorted images of the brain into glioma or non-glioma groups. The PCA and CANFES classification techniques had a sensitivity (Se) of 97.6%, a specificity of 98.56%, an accuracy of 98.73%, a precision of 98.85%, a false positive rate of 98.11%, and a false negative rate of 98.185 [29]. Table 1 illustrates the various state-of-the-art methods for knee RA classification.

Table 1.

Knee RA Grade Classification from different state-of-the-art methods.

1.1. Contribution

- The proposed system predicts the minimal joint space narrowing region and the knee RA severity grade.

- The proposed system was evaluated experimentally using several RA severity classification criteria: sensitivity, specificity, accuracy, precision, and Dice score.

- The classification outcomes of the proposed system are better than those of traditional techniques.

1.2. Organization of Work

Section 1: this paper also discusses the techniques for dataset validation and inflammatory mediator ground truth production and discusses the different state-of-the-art method performances. Section 2: following the data collection step, pre-processing and segmentation of the thermograms take place. Section 3: in the last step, the algorithm differentiates between abnormal and normal knee thermograms and then divides aberrant knee thermograms into three distinct categories. Section 4: provides the RA classification results from various parameters and compares the results of various existing techniques.

2. Materials and Methods

The primary goal of this study is to examine whether a deep learning strategy is effective for RA categorization. Our system combines two approaches: (i) feature extraction for ROI localization using a deep learning model (active F-CRNN + Hybrid ResNet101 with domain adaptation); and (ii) feature selection via a supervised learning technique (marginal joint space narrowing region). To classify the severity of RA in the knee, AlexNet was used. The following procedures were carried out on our system.

2.1. Materials

The study encompassed patients older than 55 with RA symptoms (knee pain, rigidity, palpitation, and impaired functioning). The BioGPS database repository, a publicly accessible dataset, provided the digitized X-radiation images of the patients (805 men and 1207 women, 2012 patients in total). We discarded 181 radiographic images out of a total of 3353 records for reasons such as postoperative assessment, injury, and infection, leaving 3172 X-radiation images for analysis. Knee X-radiation digital images start in the DICOM format, but are easily converted to the universal JPG format for further use [30]. The digital X-radiation images of the knee joint had a resolution of around 3000 by 1500 pixels. Before conducting the analysis, the image brightness was standardized. Three medical domain specialists (from the Dindigul scan center, Dindigul) reviewed each digital X-radiation set. They manually examined each digital X-radiation image to obtain two ground truth data points (minimum joint space narrowing area and RA classification using CBD grading criteria). Table 2 displays the consensus-based decision grades used in analyzing rheumatoid arthritis.

Table 2.

Consensus-based decision gradings.

Table 3 depicts the total number of digitized knee X-radiation images and CBD grading evaluations by three clinical professionals. We used 80% of the data for training, and we further split the training data into training (70%) and validation (10%). The remaining data (20%) were used for testing. The split-up of the dataset is displayed in Table 4.
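The 70/10/20 split described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' procedure (the study itself was carried out in MATLAB): the function name `split_indices`, the fixed random seed, and the rounding rule are assumptions, and only the total of 3172 images and the three proportions come from the text.

```python
import random


def split_indices(n_images, train_frac=0.70, val_frac=0.10, seed=0):
    """Shuffle image indices and split them into train/validation/test lists.

    The test set receives whatever remains after the training and validation
    portions are taken, so the three parts cover every image exactly once.
    """
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)  # fixed seed is an assumption
    n_train = int(n_images * train_frac)  # fractions are rounded down
    n_val = int(n_images * val_frac)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


train_idx, val_idx, test_idx = split_indices(3172)
print(len(train_idx), len(val_idx), len(test_idx))
```

The text does not state whether the split was made per image or per patient. A per-patient split would keep both knees of one patient in the same subset.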

Table 3.

Consensus-based decision from three experienced clinicians.

Table 4.

Training and testing phase analysis of a dataset.

2.2. Methods

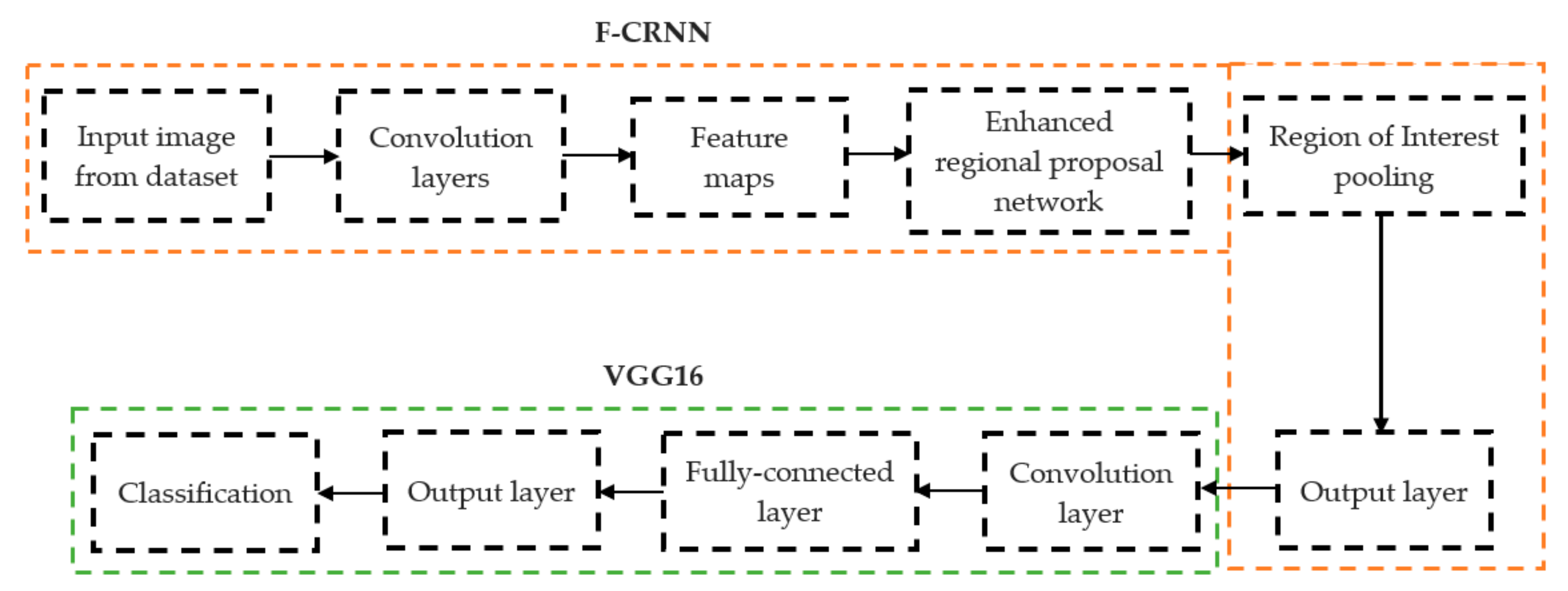

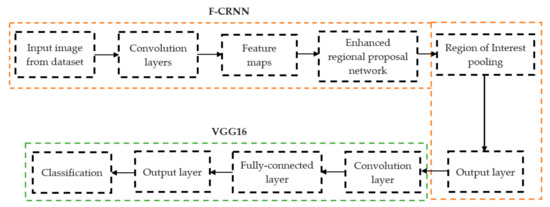

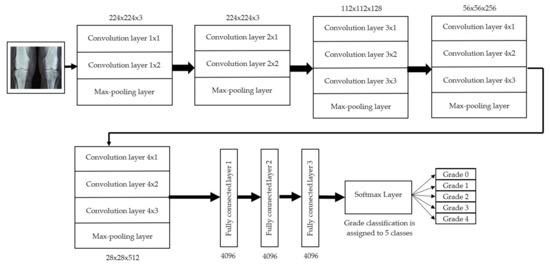

The initial step involved resizing each image to 227 × 227 × 3. In the second phase, we used two convolutional neural networks (Hybrid ResNet101 and VGG16) for RA detection and classification. The image features must be sufficient for accurate CBD grade determination and effective RA classification. We used these convolutional neural networks to extract informative visual features, identify marginal joint space narrowing, and categorize RA. First, we used ResNet101 with a domain adaptation strategy to identify marginal joint space narrowing. Second, we used VGG16, which was trained using a domain adaptation technique, to classify RA. Finally, we evaluated the method’s effectiveness and compared our findings with those of similar techniques already in use. Figure 1 shows a flowchart of the recommended process.

Figure 1.

Presented association of F-CRNN and VGG16 layered architecture.

The above Equations (1) and (2) represent the overall loss value ($L$) of an enhanced region proposal network, which combines the classification loss ($L_{cls}$) with the localization loss ($L_{loc}$). Here, $L_{loc}$ is a robust loss, $v$ is the tuple of ground-truth regression targets, and $t^{u}$ is a predicted tuple. The class probability $p$ is computed by a softmax.
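As an illustration of such a loss, the sketch below assumes the standard Fast R-CNN multi-task form: a log loss on the softmax class probability plus a weighted smooth L1 (robust) loss on the box regression targets, with the box term switched off for background regions. The function names, the weight `lam`, and the convention that class 0 is background are assumptions made for this example. The text does not confirm that the authors' Equations (1) and (2) have exactly this form.

```python
import math


def smooth_l1(x):
    """Robust L1 loss: quadratic for |x| < 1, linear otherwise."""
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5


def softmax(logits):
    """Numerically stable softmax over a list of class scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def multitask_loss(logits, true_class, pred_box, target_box, lam=1.0):
    """Classification log loss plus box regression loss for one region.

    Class 0 is treated as background (an assumption), so the regression
    term is only added when true_class >= 1.
    """
    p = softmax(logits)
    cls_loss = -math.log(p[true_class])
    loc_loss = sum(smooth_l1(t - v) for t, v in zip(pred_box, target_box))
    return cls_loss + (lam * loc_loss if true_class >= 1 else 0.0)
```

The smooth L1 term grows only linearly for large errors, which makes the box regression less sensitive to outliers than a squared loss.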

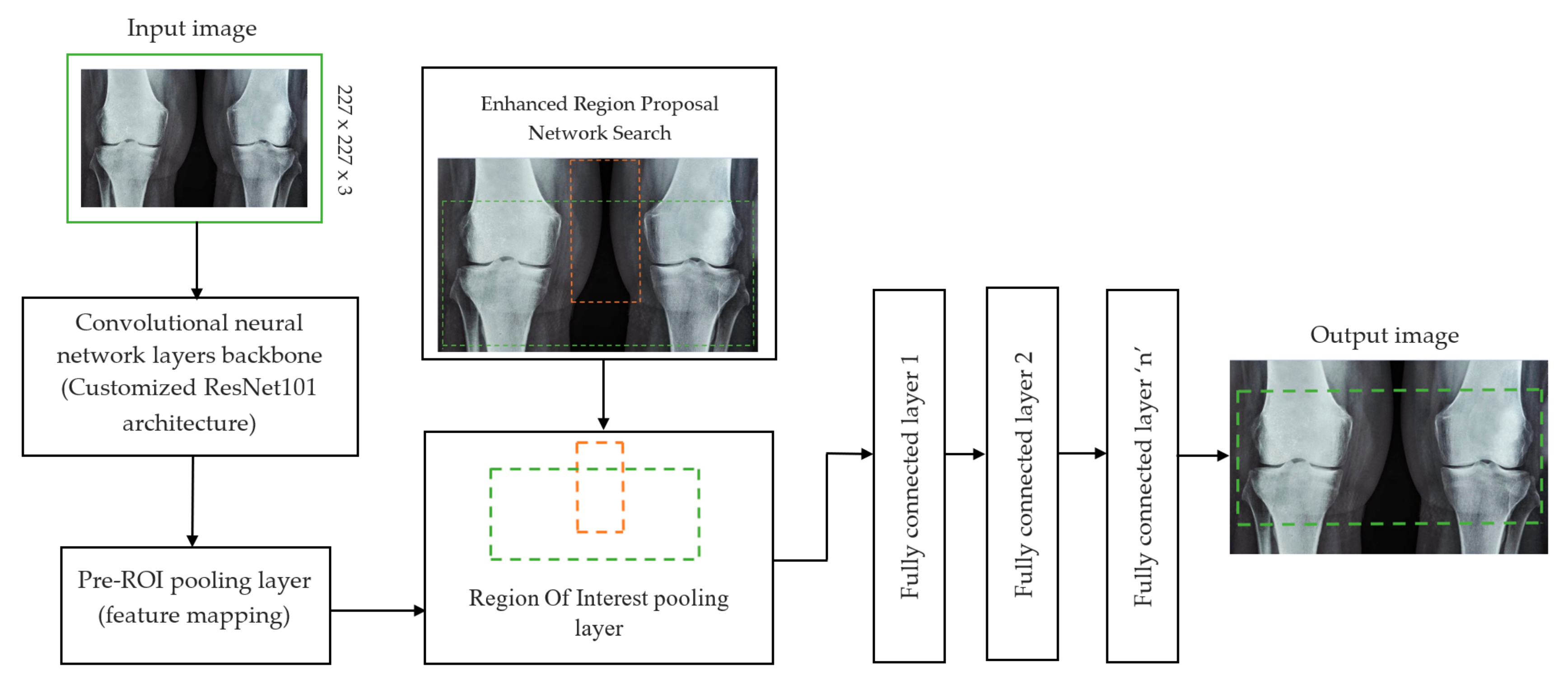

2.2.1. Determination of Joint Space Narrowing

The F-CRNN is one of several deep learning methods for object detection. The F-CRNN architecture is now a standard object identification method because of its ability to predict and score single or multiple objects in an image. The enhanced-region proposal network (ERPN) and the Fast R-CNN detector are the two integral parts of the F-CRNN network. The ERPN generates region proposals so that the Fast R-CNN module receives only the best candidates. We trained the ERPN to identify areas of interest in digital X-radiation images. The ERPN is an end-to-end convolutional network that can predict the boundaries and scores of objects of interest at any coordinate. The ResNet101 network was used for F-CRNN feature extraction.

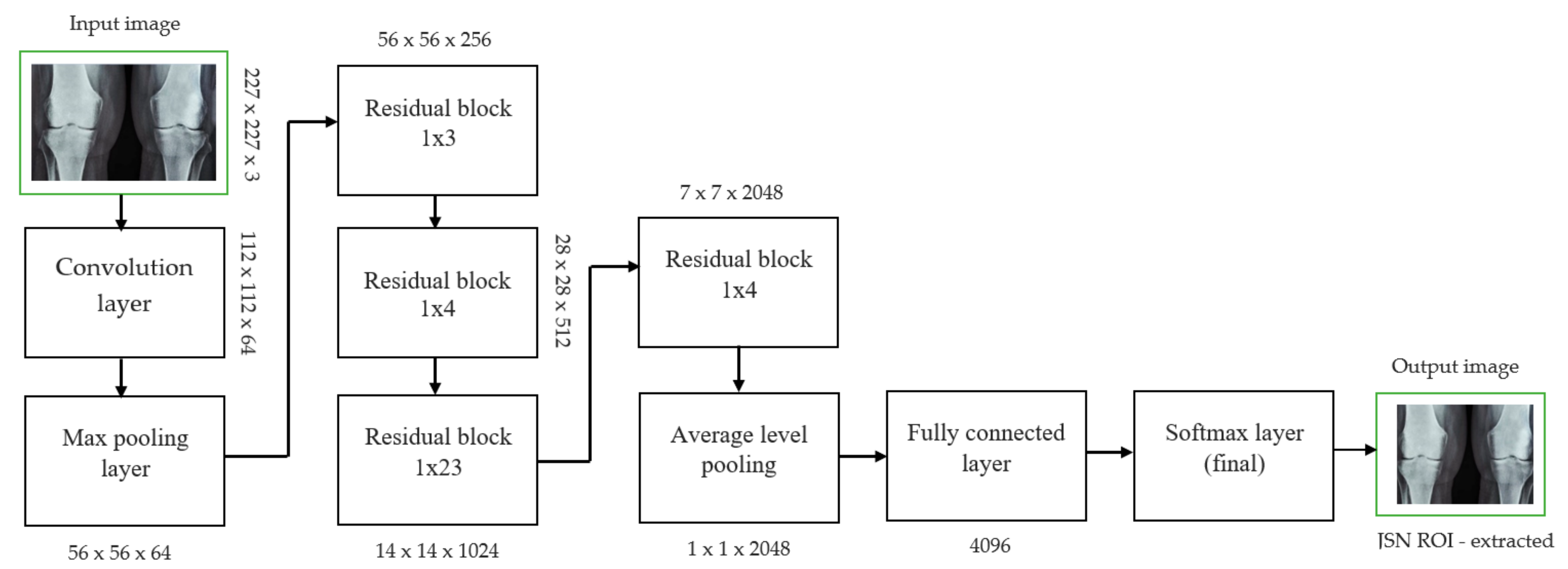

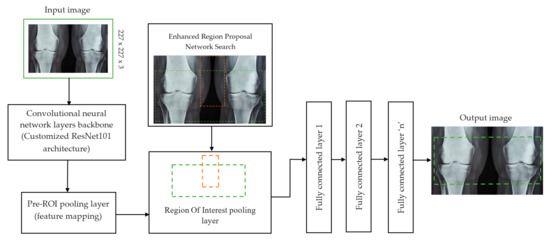

Each convolutional layer in ResNet101 was followed by a batch normalization layer and an activation layer (ReLU). By adding shortcut connections in parallel with the regular layers, this architecture facilitated more efficient training of deep neural networks. Features were extracted, and convolutional feature maps were generated using a combination of convolutional and max-pooling layers. Image features were fed into the ERPN, which generated region proposals as outputs. The ROI pooling layer extracted feature vectors from the feature maps, and each feature vector was passed on to the following layer. We trained the ROI detection model separately for the medial and lateral compartments of the AP view. When the algorithm produced several ROI detections, we chose the ROI with the highest prediction confidence for each knee joint. To evaluate the proposed model, we counted the marginal joint space narrowing regions that achieved IoU ≥ 0.70. We saved the predicted bounding boxes as the detection result. We used weights that had already been trained on ResNet-101, and then used the domain adaptation method to fine-tune them. Figure 2 shows how the modified ResNet-101 finds regions with a narrow joint space in the knee. The most important part of the Faster R-CNN architecture is the ERPN, which predicts the scores of objects and their locations. The algorithm compares the narrow areas of the knee joint space in the medial and lateral compartments to find the narrow area in the middle. The main advantage of this method is that it can find even the smallest changes in knee joint space.
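The selection of a single ROI per compartment can be sketched as follows. This is a hypothetical illustration: the data layout (a list of `(score, box)` pairs per compartment) and the function names are assumptions, since the text does not describe how detections were stored.

```python
def best_detection(detections):
    """Return the (score, box) pair with the highest score, or None if empty.

    Each box is a tuple (x1, y1, x2, y2) in pixel coordinates.
    """
    if not detections:
        return None
    return max(detections, key=lambda d: d[0])


def best_per_compartment(detections_by_compartment):
    """Keep only the top-scoring detection for each compartment."""
    return {
        name: best_detection(dets)
        for name, dets in detections_by_compartment.items()
    }


# Hypothetical detections for one knee, for illustration only.
example = {
    "medial": [(0.62, (10, 20, 110, 60)), (0.91, (12, 22, 108, 58))],
    "lateral": [(0.88, (130, 20, 230, 60))],
}
selected = best_per_compartment(example)
```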

Figure 2.

Association features of F-CRNN and customized ResNet101 architecture.

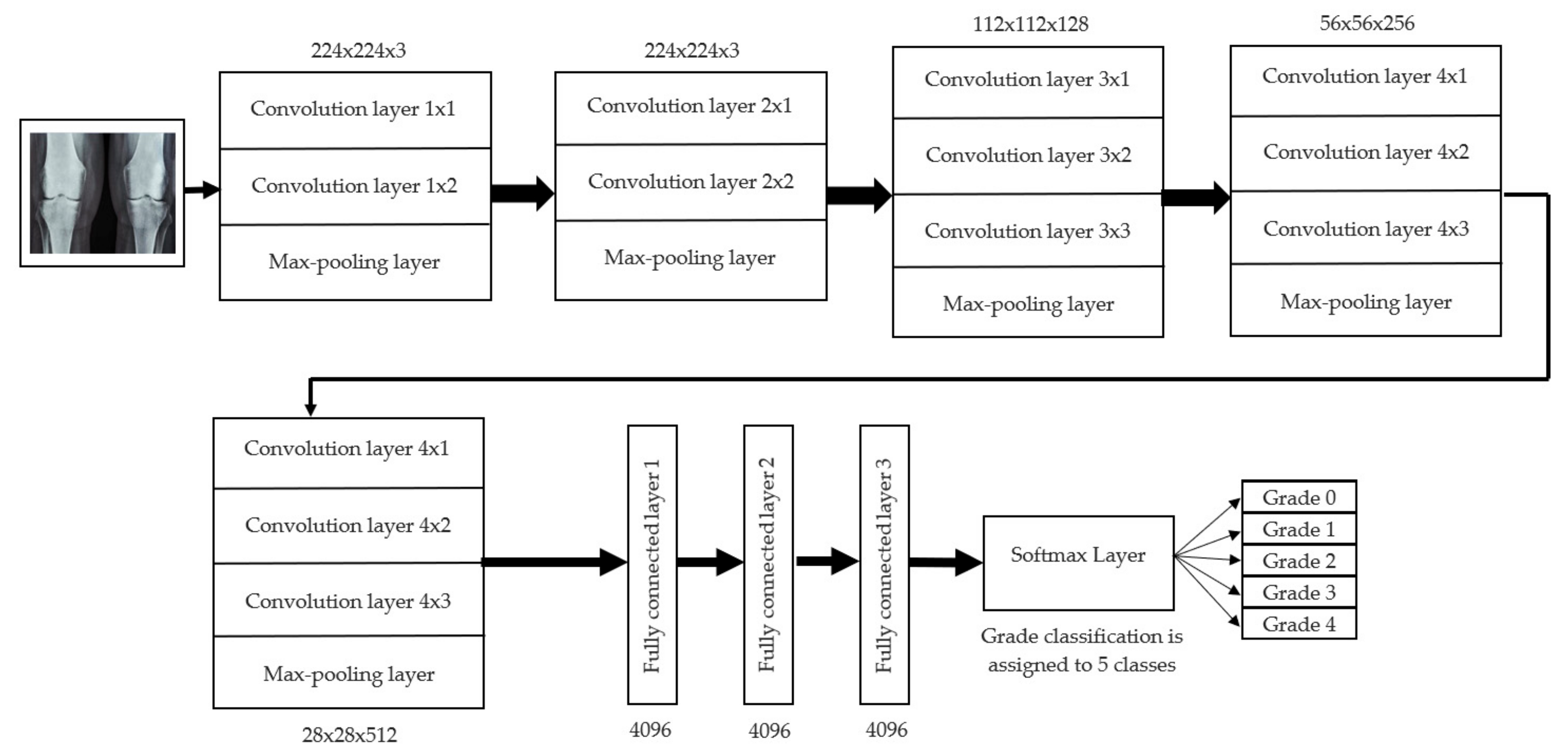

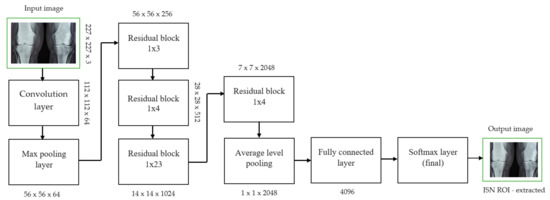

2.2.2. RA Classification

For knee RA severity classification, we used a modified version of the VGG16 architecture and a domain adaptation technique, as shown in Figure 3. The VGG16 model was made up of five convolutional layers, three max-pooling layers, and three fully connected layers. All the digital X-radiation images needed to be resized to 227 × 227 × 3. In our implementation, eighty percent of the X-radiation images were used for training and twenty percent for evaluation. Although there are sixteen layers in VGG16, only a subset of those layers is required for feature extraction. To shorten the training time and establish more control over the fitting process, we assigned a dropout ratio of 0.5 to the fully connected layers fcl6 and fcl7. The features were taken from these two fully connected layers. To categorize the retrieved features into 1000 categories, VGG16’s architecture used a fully connected layer (fcl8). We then fine-tuned the pre-trained VGG16 model for RA classification by changing the model’s last three layers, which were replaced with a fully connected layer, a softmax layer, and a classification layer. The new fully connected layer was set to output the five RA grades in the dataset: Grade 0, Grade 1, Grade 2, Grade 3, and Grade 4. We trained the proposed network on digital knee X-radiation images using mini-batches, stochastic gradient descent, and a maximum number of epochs, and we compared its performance to previous efforts. The accuracy of the proposed work was calculated as the proportion of knee X-radiation images from the test set for which the network correctly predicted the RA grade. The proposed approach achieved an overall accuracy of 99.10% in classifying knee RA cases. Table 5 illustrates the Visual Geometry Group (VGG16) CNN operation for RA grade classification. Figure 4 depicts the RA classification using the VGG16 architecture.
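The accuracy computation described above can be illustrated with a short sketch. It assumes the network emits five class scores per image, one per grade, and that the predicted grade is the index of the largest score (the softmax is monotonic, so this equals the most probable class). The function names and the sample values are hypothetical.

```python
def predict_grade(scores):
    """Index (0 to 4) of the largest class score for one image."""
    return max(range(len(scores)), key=lambda k: scores[k])


def accuracy(predicted, actual):
    """Fraction of images whose predicted grade matches the expert grade."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(1 for p, a in zip(predicted, actual) if p == a)
    return correct / len(actual)


# Hypothetical class scores for four test images, for illustration only.
scores_per_image = [
    [2.1, 0.3, -0.5, -1.0, -2.0],
    [0.1, 1.7, 1.2, -0.4, -1.1],
    [-0.9, 1.4, 1.1, 0.2, -0.3],
    [-2.0, -1.2, 0.0, 0.8, 2.5],
]
expert_grades = [0, 1, 2, 4]
predicted_grades = [predict_grade(s) for s in scores_per_image]
test_accuracy = accuracy(predicted_grades, expert_grades)
```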

Figure 3.

JSN ROI extracted for knee joint narrow space from ResNet101 architecture.

Table 5.

VGG16 architecture for RA grade classification.

Figure 4.

RA classification using VGG16 architecture.

3. Experimental Results and Discussion

3.1. Experimental Parameter Settings

For our investigation, we utilized a machine with 8 GB of RAM, a 256 GB SSD, an Intel Core i3 CPU, and Radeon R2 graphics. For image processing, we selected MATLAB 2020a. Each stochastic gradient descent iteration used a batch size of 256 for both the F-CRNN and the enhanced-region proposal networks, with a learning rate of 4e-3. Model training with the presented approach took around 4 h, and the maximum number of iterations was 0.6 k.

3.2. Detection of Marginal Knee Joint Space Narrowing

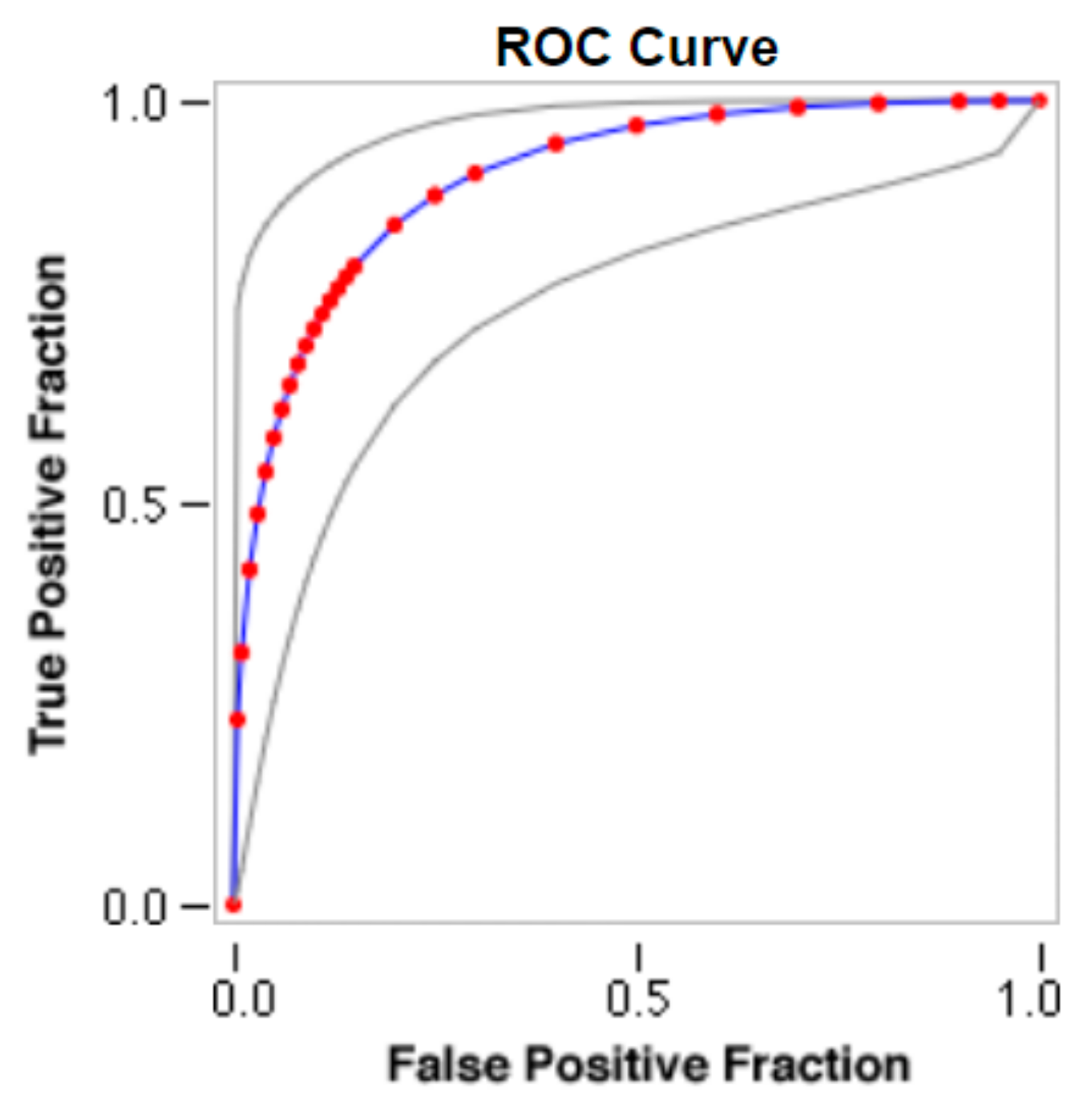

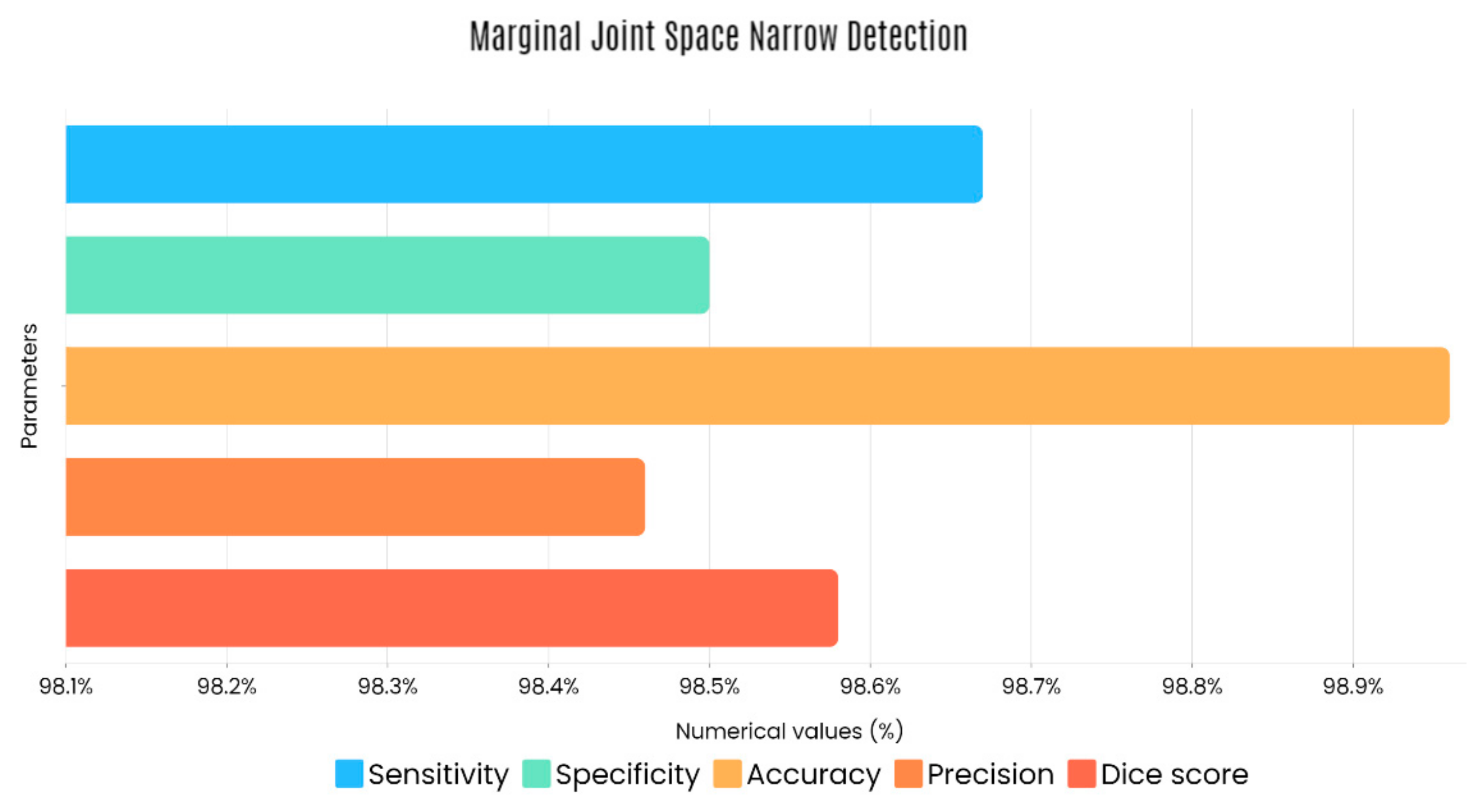

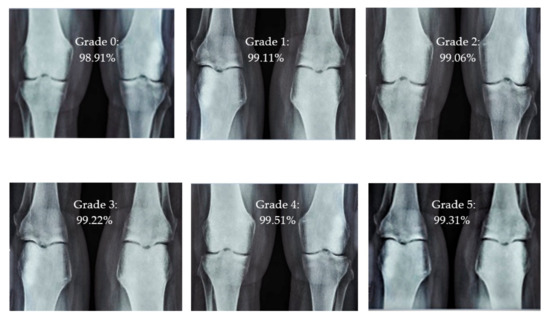

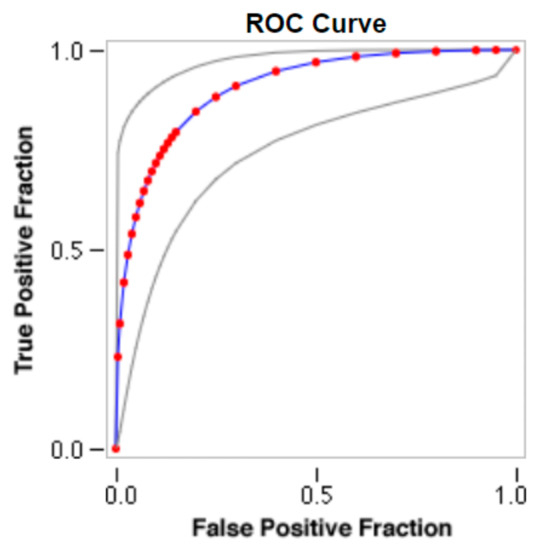

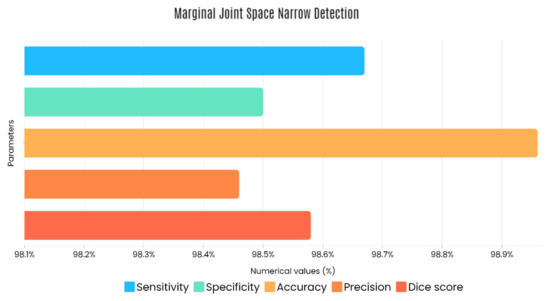

Sample images of the marginal joint space narrowing region of interest can be seen in Figure 5. The IoU (Intersection over Union) metric was used to evaluate our region of interest detection system. This metric is the area of the intersection between the actual bounding box and the predicted bounding box divided by the area of their union. At an IoU threshold of 0.70, our presented model found the narrow marginal joint space in 99.72% of the knee joints. Figure 6 depicts the ROC curve for marginal joint space narrowing detection. The presented marginal joint space narrowing detection model obtained a sensitivity of 98.67%, a Dice score of 98.58%, a precision of 98.46%, a specificity of 98.50%, a false positive rate of 0.0100, a false negative rate of 0.0197, and an overall accuracy of 98.97%, as shown in Table 6 and illustrated graphically in Figure 7. Table 7 presents the performance metrics of the proposed ResNet101 and VGG16 models for classifying RA. As Table 7 shows, VGG16 outperforms the pre-trained ResNet101 model in classifying RA.
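A minimal sketch of the IoU computation and the thresholded detection rate is given below. Boxes are assumed to be axis-aligned tuples `(x1, y1, x2, y2)`, and the function names are illustrative rather than taken from the study. Note that the union is the sum of the two box areas minus their overlap.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def detection_rate(pairs, threshold=0.70):
    """Fraction of (predicted, ground-truth) box pairs with IoU >= threshold."""
    hits = sum(1 for pred, truth in pairs if iou(pred, truth) >= threshold)
    return hits / len(pairs)
```

For example, two 10 × 10 boxes offset horizontally by 5 pixels overlap in an area of 50 and have a union of 150, giving an IoU of 1/3, which would not count as a detection at the 0.70 threshold.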

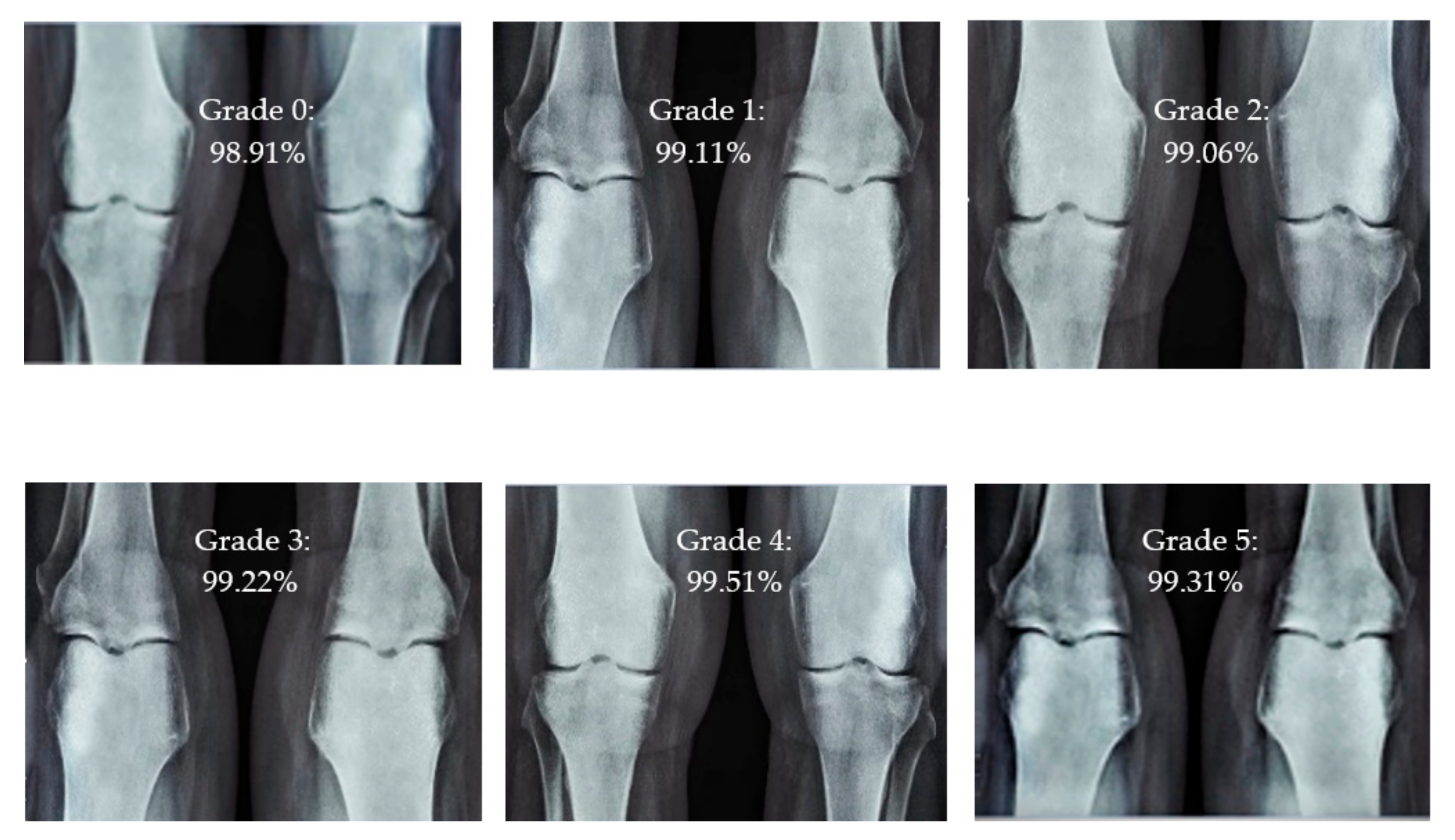

Figure 5.

Different CBD grade levels of knee rheumatoid arthritis.

Figure 6.

ROC curve for marginal joint space narrow detection.

Table 6.

Marginal joint space narrow detection parameter outcomes.

Figure 7.

Graphical illustration of obtained marginal JSN detection parameters.

Table 7.

Outcome comparison of ResNet101 and VGG16 models.

3.3. Parameter Metrics for Performance Computation

In our presented system, classification performance was assessed with five metrics: sensitivity, specificity, precision, accuracy, and Dice score.

Here, β represents the true positive values, ø represents the true negative values, µ represents the false negative values, and γ represents the false positive values.
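The five metrics can be written in terms of these four counts. The sketch below assumes the standard definitions of sensitivity, specificity, precision, accuracy, and Dice score. The function and argument names are illustrative, and the counts in the usage line are made up for the example.

```python
def classification_metrics(tp, tn, fn, fp):
    """Standard metrics from true/false positive and negative counts.

    tp, tn, fn, and fp correspond to β, ø, µ, and γ in the text.
    """
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "precision": tp / (tp + fp),
        "accuracy": (tp + tn) / (tp + tn + fn + fp),
        "dice": 2 * tp / (2 * tp + fp + fn),
    }


# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=90, tn=80, fn=10, fp=20)
```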

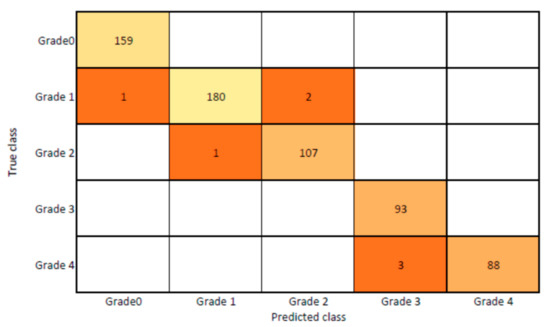

3.4. Intensity Classification of Rheumatoid Arthritis

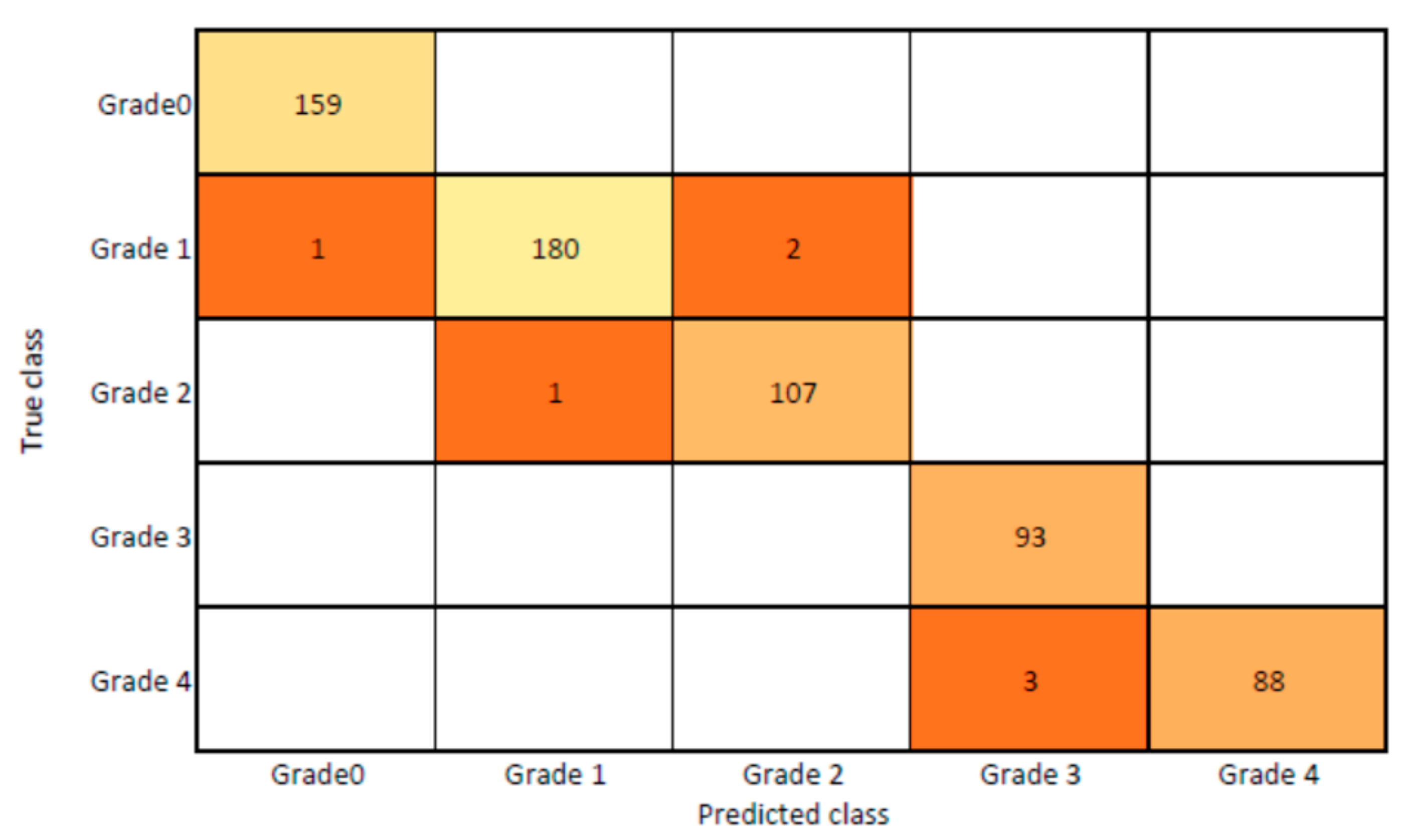

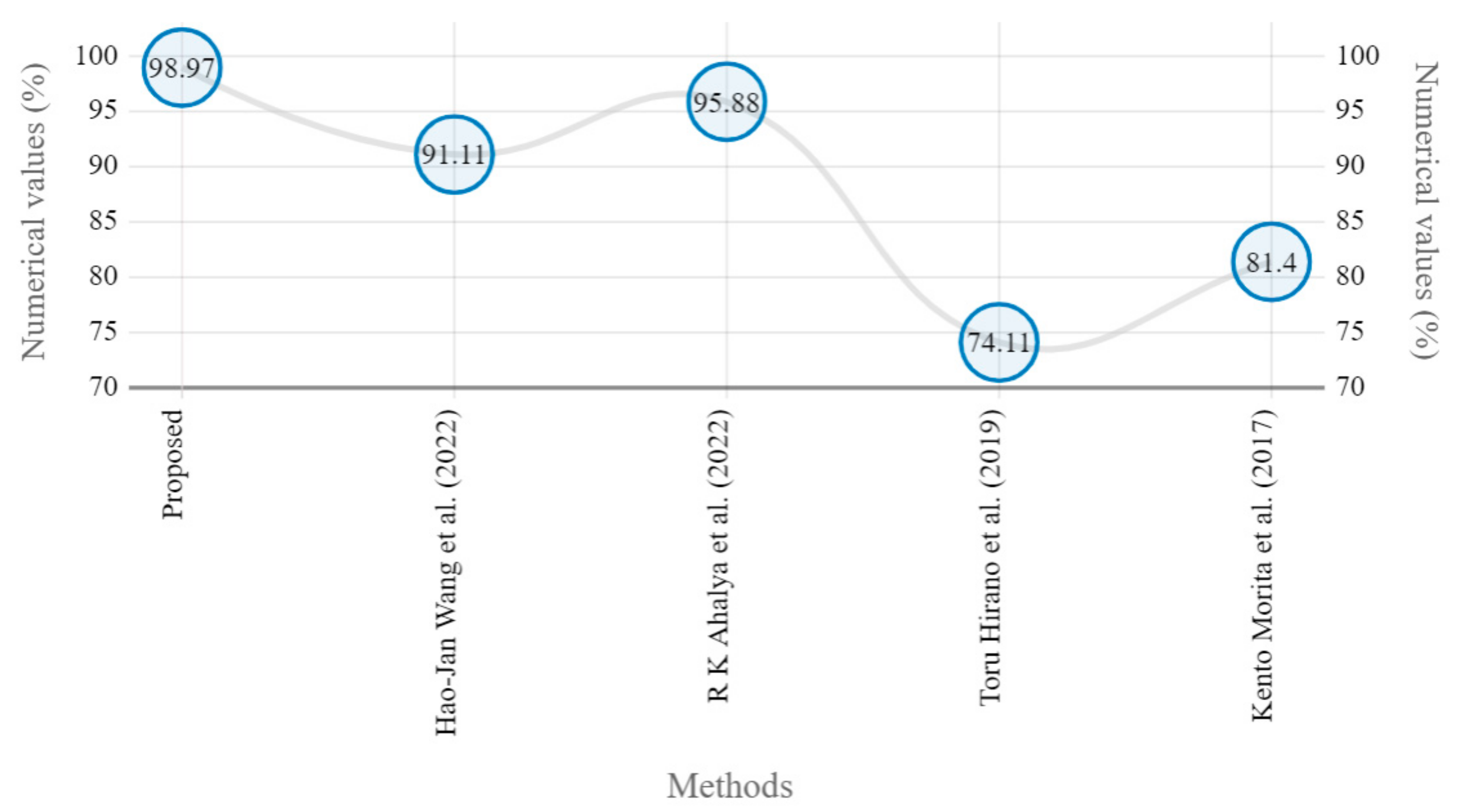

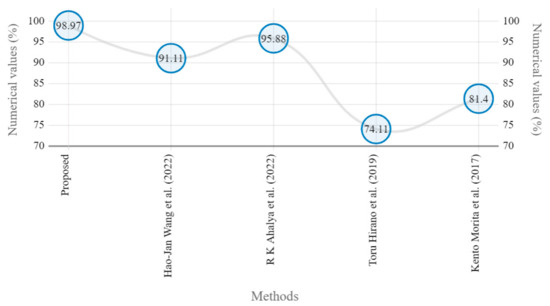

The presented model achieved 99.10% accuracy on the whole test set. The confusion matrix of the presented method is shown in Figure 8, and its performance is compared in detail to that of current methods in Table 5. In Figure 8, we examine the training and learning procedure as a whole to assess the success of the approach. Table 6 shows that the highest accuracy is obtained for knee joints of CBD Grades zero, three, and four, whereas knee joints with a CBD grade of one or two are the hardest to categorize. As can be seen in Figure 8, there is very little error when classifying knee joints as CBD Grades zero, three, or four, and a small number of misclassifications for CBD Grades one and two. In several cases, the proposed approach incorrectly estimated CBD Grade two as Grade one and vice versa. Joint space narrowing and bony spur development are markedly different in CBD Grade four knee joints. However, CBD Grade one knee joints show little change in JSN or osteophyte growth compared with the other classes. Types of knee RA and their intensity levels are shown in Figure 5. Table 8 and Figure 9 compare the JSN accuracy of the proposed method with that of other state-of-the-art methods.
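The way a confusion matrix exposes the Grade one and Grade two confusions can be illustrated with a small sketch. The matrix below is hypothetical and does not reproduce Figure 8. Rows are assumed to hold the expert grade and columns the predicted grade, and the function names are illustrative.

```python
def per_grade_recall(matrix):
    """Recall for each true grade: diagonal count divided by the row total."""
    recalls = []
    for i, row in enumerate(matrix):
        total = sum(row)
        recalls.append(row[i] / total if total else 0.0)
    return recalls


def overall_accuracy(matrix):
    """Sum of the diagonal divided by the sum of all entries."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


# Hypothetical counts (rows = expert grade 0-4, columns = predicted grade 0-4).
toy_matrix = [
    [50, 0, 0, 0, 0],
    [1, 45, 4, 0, 0],
    [0, 5, 44, 1, 0],
    [0, 0, 0, 50, 0],
    [0, 0, 0, 0, 50],
]
recalls = per_grade_recall(toy_matrix)
acc = overall_accuracy(toy_matrix)
```

In this toy matrix the off-diagonal counts are concentrated in the Grade one and Grade two rows, which lowers their recall relative to the other grades.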

Figure 8.

Proposed system confusion matrix (manual vs. automatic) for grade classification.

Table 8.

JSN outcomes of proposed and state-of-the-art methods.

Figure 9.

Marginal JSN accuracy comparison of proposed and state-of-the-art methods [5,22,24,25].

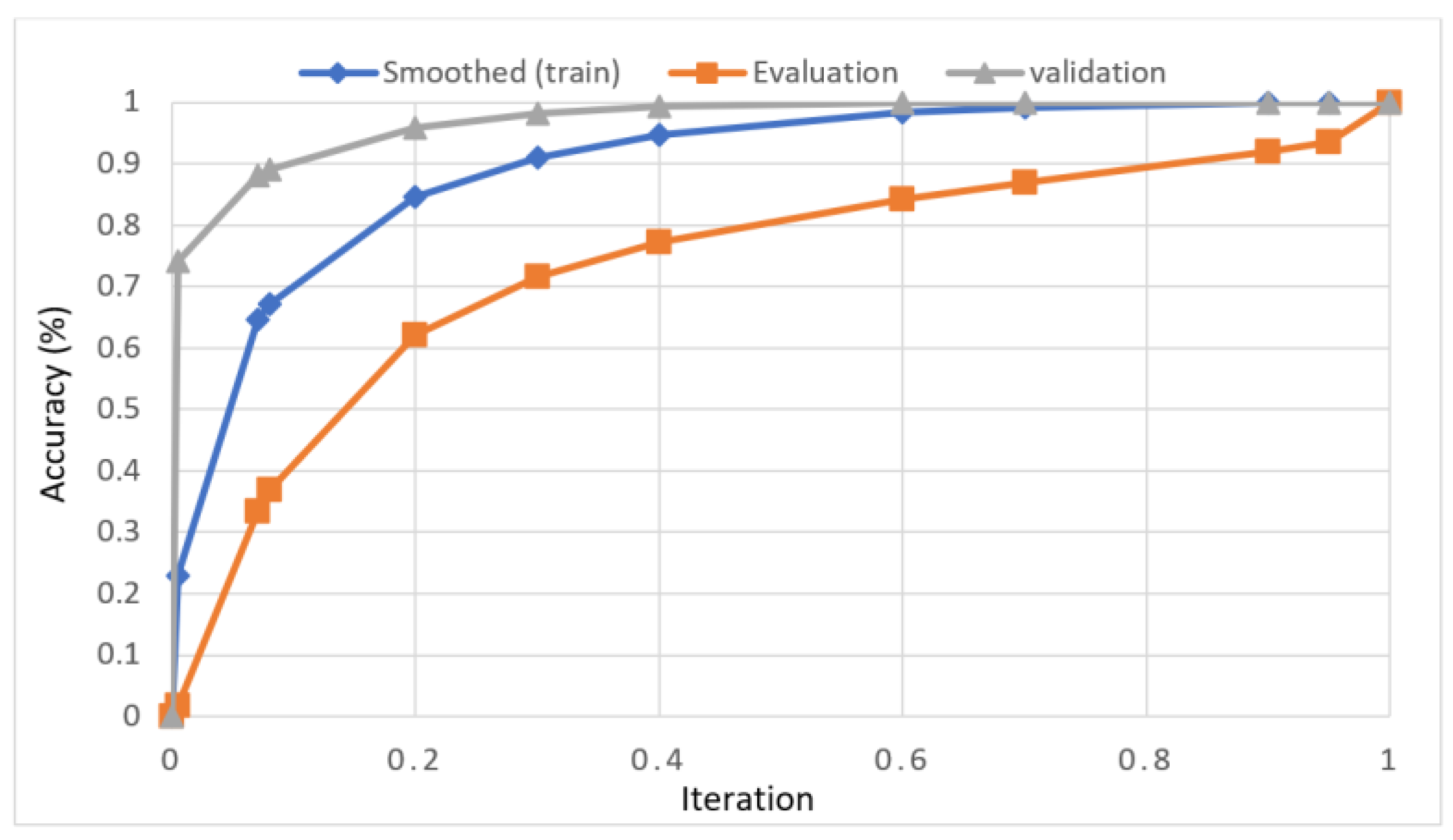

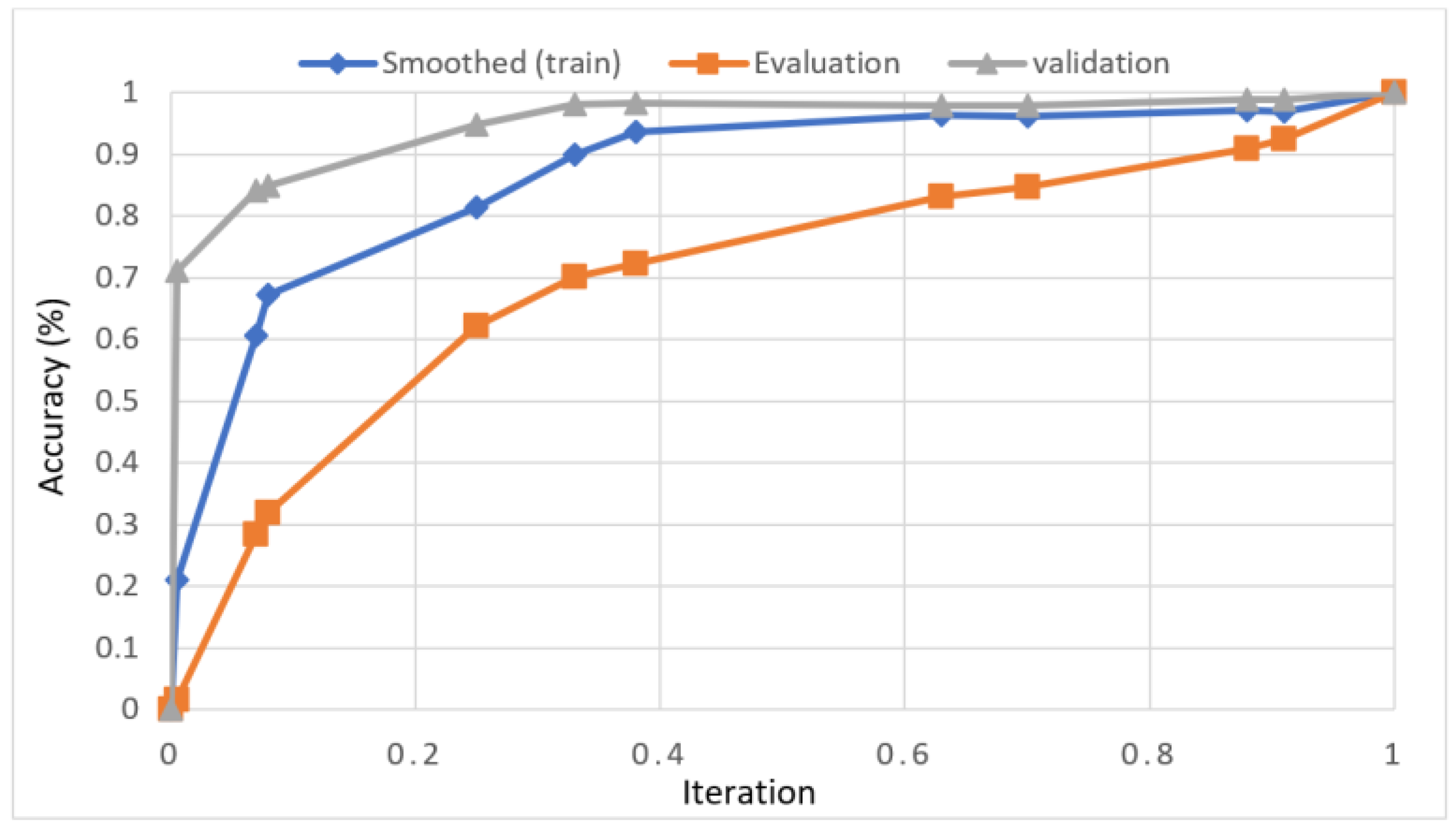

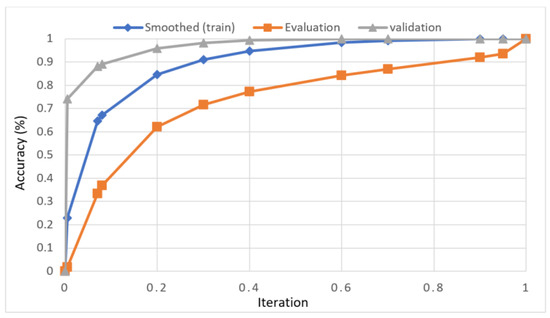

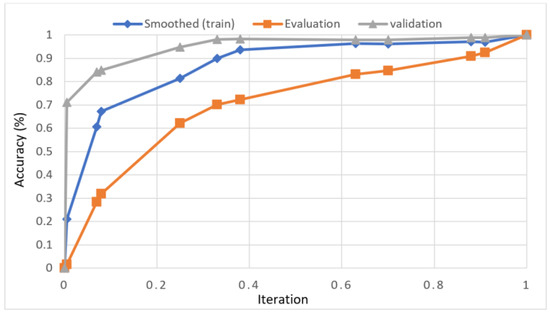

In this study, we developed a deep learning model to automatically grade the severity of knee RA using a consensus-based approach. We compared the proposed work to prior strategies and found that it outperformed them. For the early grades, notably Grade one and Grade two, we found that our method’s grading differed from that of the medical professionals. Compared with previously existing models, the presented work (knee joint space narrowing detection and grade classification) fares very well. It takes about 7 h of training to reach 0.6 k iterations. The outcomes of the presented methodology are shown in Table 9, which includes the outcomes of each CBD grade individually. Multiple metrics were employed to estimate the model’s performance, as indicated in Table 10. Figure 10 and Figure 11 depict the ROC curves for RA severity classification for both knees.

Table 9.

Presented and conventional methods performance metric comparison.

Table 10.

Consensus-based decision grade outcomes.

Figure 10.

ROC curve for RA severity classification for knee-1.

Figure 11.

ROC curve for RA severity classification for knee-2.

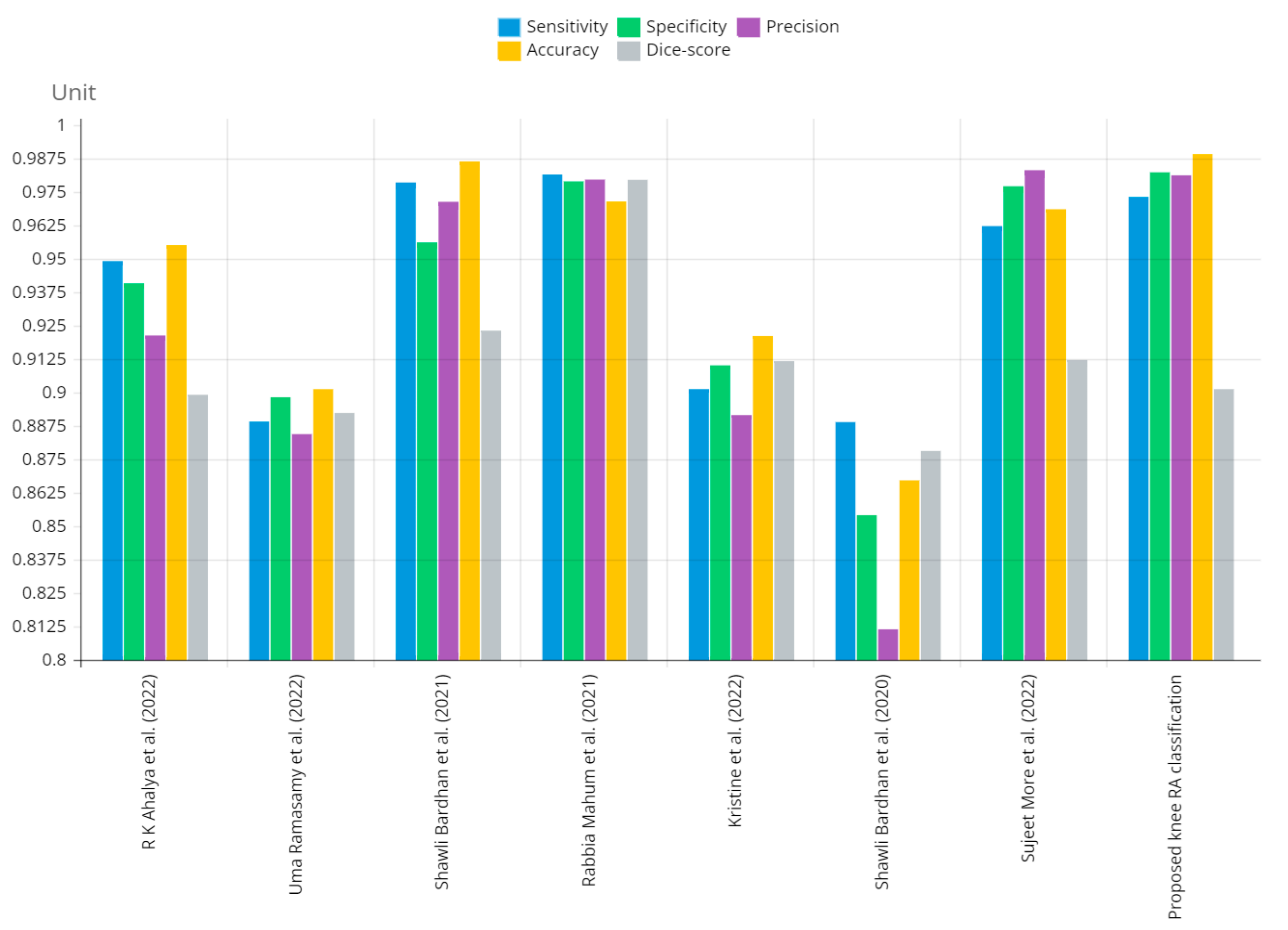

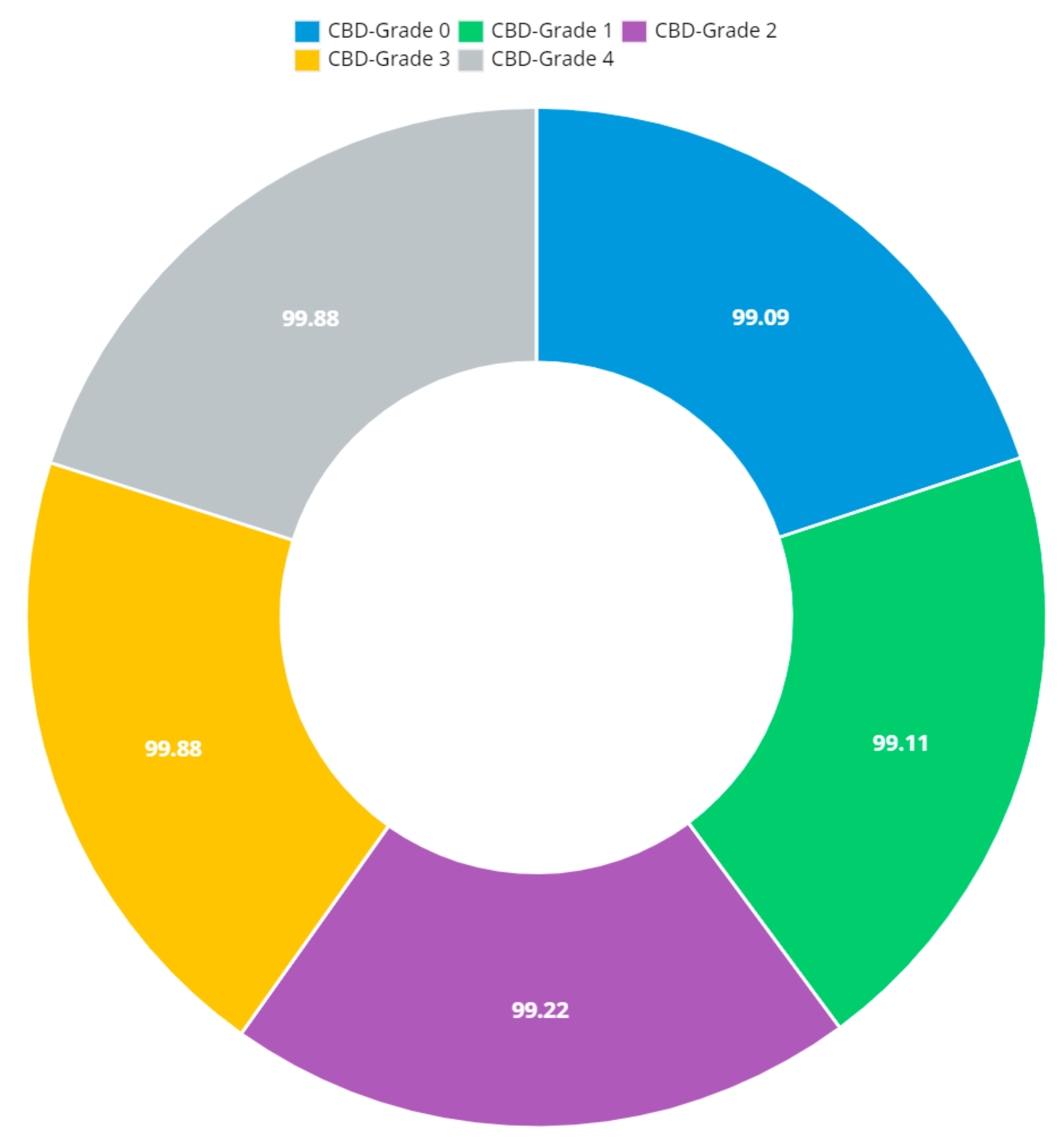

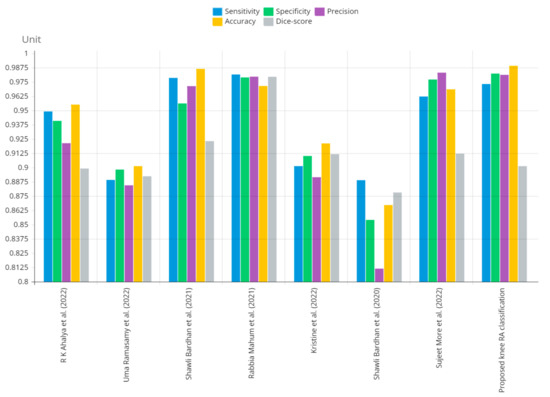

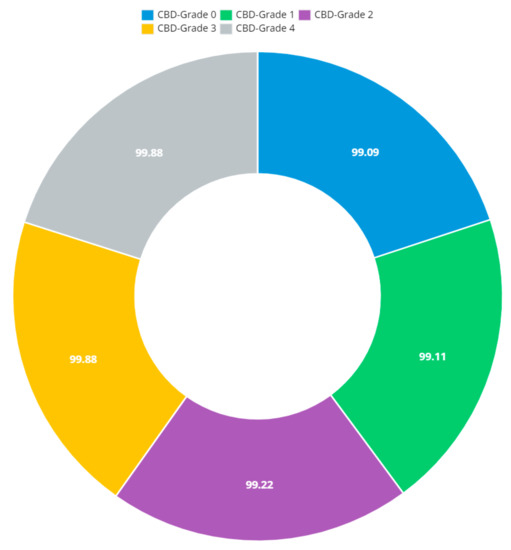

Figure 12 shows that the presented system outperformed other methods in terms of sensitivity (Se), specificity (Sp), precision (Pr), accuracy (Acc), and dice score (Ds), demonstrating deep learning’s capability. Figure 13 depicts the CBD grade outcomes as a doughnut chart. In this research, the presented model increased overall ROI detection accuracy by up to 0.5 percent and improved classification accuracy by up to 1.18 percent. The proposed model is more dependable as a result of the detailed knee JSN characteristics. The improvement was satisfactory, and we agree with the observation that the AP view contains a significant portion of the information necessary to assess the severity of knee RA with the CBD grading system. The CBD score is often examined using the AP view alone. Table 6 compares the output of the proposed methodology with that of other methods currently in use. R K Ahalya et al. (2022) obtained Se of 0.9491, Sp of 0.9408, Pr of 0.9213, Acc of 0.9551, and Ds of 0.8991; Uma Ramasamy et al. (2022) achieved Se of 0.8891, Sp of 0.8982, Pr of 0.8844, Acc of 0.9012, and Ds of 0.8923; Shawli Bardhan et al. (2021) obtained Se of 0.9785, Sp of 0.9561, Pr of 0.9713, Acc of 0.9864, and Ds of 0.9231; Rabbia Mahum et al. (2021) achieved Se of 0.9815, Sp of 0.9789, Pr of 0.9896, Acc of 0.9714, and Ds of 0.9795; Kristine et al. (2022) obtained Se of 0.9012, Sp of 0.9101, Pr of 0.8915, Acc of 0.9211, and Ds of 0.9117; Shawli Bardhan et al. (2020) achieved Se of 0.8889, Sp of 0.8541, Pr of 0.8114, Acc of 0.8671, and Ds of 0.8781; and Sujeet More et al. (2022) obtained Se of 0.9622, Sp of 0.9771, Pr of 0.9831, Acc of 0.9685, and Ds of 0.9121. Using the presented methodology, our active deep CNN model achieved a knee joint identification accuracy of 98.97% and a knee RA severity classification accuracy of 99.10%. The model also outperforms approaches based on handcrafted features.
The presented active deep CNN model and the pre-trained domain adaptation models employed in our system improve prediction accuracy for the five experimentally determined classes of knee RA.
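The five metrics compared above can all be derived from confusion-matrix counts. The following is a minimal sketch of the standard definitions, not the evaluation code used in this study; the class and function names and the example counts are hypothetical. The dice score is written here in its count-based form, which is an assumption, since it may instead be computed as a region overlap on the detected knee area.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BinaryCounts:
    """Confusion-matrix counts for one class (hypothetical container)."""
    tp: int  # true positives
    fp: int  # false positives
    tn: int  # true negatives
    fn: int  # false negatives


def _ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def sensitivity(c: BinaryCounts) -> float:
    """Se = TP / (TP + FN)."""
    return _ratio(c.tp, c.tp + c.fn)


def specificity(c: BinaryCounts) -> float:
    """Sp = TN / (TN + FP)."""
    return _ratio(c.tn, c.tn + c.fp)


def precision(c: BinaryCounts) -> float:
    """Pr = TP / (TP + FP)."""
    return _ratio(c.tp, c.tp + c.fp)


def accuracy(c: BinaryCounts) -> float:
    """Acc = (TP + TN) / (TP + TN + FP + FN)."""
    return _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn)


def dice_score(c: BinaryCounts) -> float:
    """Ds = 2 TP / (2 TP + FP + FN), the count-based form (assumed here)."""
    return _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn)


# Hypothetical counts for illustration only; these are not study data.
example = BinaryCounts(tp=90, fp=5, tn=95, fn=10)
for name, metric in [("Se", sensitivity), ("Sp", specificity),
                     ("Pr", precision), ("Acc", accuracy), ("Ds", dice_score)]:
    print(f"{name}: {metric(example):.4f}")
```

For a five-class grading task, these quantities would typically be computed per class in a one-vs-rest fashion and then averaged; whether the values in Table 6 were aggregated that way is not stated here.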

Figure 12.

Graphical comparison of performance metrics for the presented and conventional methods.

Figure 13.

Doughnut chart of CBD grade outcomes.

4. Conclusions and Future Work

In this study, we propose a deep convolutional neural network (CNN) method for the automated detection and classification of rheumatoid arthritis in the knee. We apply a domain adaptation strategy to reuse pre-trained models such as ResNet101 and VGG16, and we evaluate the results against standard methods. Our experiments show that the proposed method diagnoses rheumatoid knee arthritis more accurately than the conventional methods it was compared with. The presented approach achieved a knee joint identification accuracy of 98.97% and a knee RA severity classification accuracy of 99.10%. We used the active deep CNN to predict and grade knee RA and then compared our results with earlier work performed in MATLAB 2020a. The presented methodology analyzes digital X-radiation images of the knee to identify the ROI (the area of minimum knee joint space, i.e., the JSN region) and determine the severity of rheumatoid arthritis. In the near future, we plan to use this method to grade MRI scans of knees affected by rheumatoid arthritis.

A potential direction for future research is the development of a system that assists medical professionals in identifying the location and cause of knee inflammation using thermogram images as a secondary diagnostic tool. The dataset will also be enlarged so that temperature-flow patterns specific to arthritis can be derived for better classification. Additionally, the presented method can be combined with other models, in a hybrid and flexible way, to detect diseases other than knee disorders. It may also be combined with feature fusion techniques for diagnosing and categorizing a wide range of additional disorders.

Author Contributions

Conceptualization, S.S. and S.G.; methodology, S.K.M. and M.A.K.; validation, P.J.; resources, M.A.K.; data curation, A.A. and M.A.K.; writing—original draft preparation, S.S. and S.G.; writing—review and editing, S.K.M., M.A.K., M.M. and P.J.; visualization, M.M. and A.A.; supervision, P.J., M.A.K., A.A., M.M. and A.M.; project administration, P.J., M.A.K., A.A., M.M. and A.M. All authors have read and agreed to the published version of the manuscript.

Funding

The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work through the Small Groups Project under grant number R.G.P.1/257/43.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Safdar, S.; Rizwan, M.; Gadekallu, T.R.; Javed, A.R.; Rahmani, M.K.I.; Jawad, K.; Bhatia, S. Bio-Imaging-Based Machine Learning Algorithm for Breast Cancer Detection. Diagnostics 2022, 12, 1134.

- Jafarzadeh, S.R.; Felson, D.T. Updated estimates suggest a much higher prevalence of arthritis in United States adults than previous ones. Arthritis Rheumatol. 2018, 70, 185–192.

- Bardhan, S.; Nath, S.; Debnath, T.; Bhattacharjee, D.; Bhowmik, M.K. Designing of an inflammatory knee joint thermogram dataset for arthritis classification using deep convolution neural network. Quant. Infrared Thermogr. J. 2020, 19, 145–171.

- Bhatia, M.; Bhatia, S.; Hooda, M.; Namasudra, S.; Taniar, D. Analyzing and classifying MRI images using robust mathematical modeling. Multimed. Tools Appl. 2022, 81, 37519–37540.

- Ahalya, R.K.; Umapathy, S.; Krishnan, P.T.; Joseph, A.N. Automated evaluation of RA from hand radiographs using ML and DL. Proc. Inst. Mech. Eng. 2022, 236, 1238–1249.

- More, S.; Singla, J. A generalized deep learning framework for automatic RA severity grading. J. Intell. Fuzzy Syst. 2021, 41, 7603–7614.

- Richardson, K.L.; Teague, C.N.; Mabrouk, S.; Nevius, B.N.; Ozmen, G.C.; Graham, R.S.; Zachs, D.P.; Tuma, A.; Peterson, E.J.; Lim, H.H.; et al. Quantifying Rheumatoid Arthritis Disease Activity Using a Multimodal Sensing Knee Brace. IEEE Trans. Biomed. Eng. 2022, 69, 3772–3783.

- Baek, J.H.; Lee, S.C.; Kim, J.W.; Ahn, H.S.; Nam, C.H. Inferior outcomes of primary total knee arthroplasty in patients with RA compared to patients with osteoarthritis. Knee Surg. Sport. Traumatol. Arthrosc. 2021, 30, 2786–2792.

- Mahum, R.; Rehman, S.U.; Meraj, T.; Rauf, H.T.; Irtaza, A.; El-Sherbeeny, A.M.; El-Meligy, M.A. A Novel Hybrid Approach Based on Deep CNN Features to Detect Knee Osteoarthritis. Sensors 2021, 21, 6189.

- Smolen, J.S.; Landewé, R.; Breedveld, F.C.; Dougados, M.; Emery, P.; Gaujoux-Viala, C.; Gorter, S.; Knevel, R.; Nam, J.; Schoels, M.; et al. EULAR recommendations for the management of RA with synthetic and biological disease-modifying antirheumatic drugs. Ann. Rheum. Dis. 2020, 79, 685–699.

- Yamanaka, H.; Tanaka, E.; Nakajima, A.; Furuya, T.; Ikari, K.; Taniguchi, A.; Inoue, E.; Harigai, M. A large observational cohort study of rheumatoid arthritis, IORRA: Providing context for today’s treatment options. Mod. Rheumatol. 2020, 30, 1–6.

- Asai, N.; Asai, S.; Takahashi, N.; Ishiguro, N.; Kojima, T. Factors associated with osteophyte formation in patients with RA undergoing total knee arthroplasty. Mod. Rheumatol. 2020, 30, 937–939.

- Huang, J.; Lin, S.; Wang, N.; Dai, G.; Xie, Y.; Zhou, J. TSE-CNN: A Two-Stage End-to-End CNN for Human Activity Recognition. IEEE J. Biomed. Health Inform. 2020, 24, 292–299.

- Chen, T.; Lu, S.; Fan, J. SS-HCNN: Semi-Supervised Hierarchical Convolutional Neural Network for Image Classification. IEEE Trans. Image Process. 2019, 289, 2389–2398.

- Sharon, H.; Elamvazuthi, C. Classification of RA using Machine Learning Algorithms. In Proceedings of the IEEE 4th International Symposium in Robotics and Manufacturing Automation, Virtual, 10–12 December 2018.

- Folle, L.; Simon, D.; Tascilar, K.; Krönke, G.; Liphardt, A.M.; Maier, A.; Schett, G.; Kleyer, A. Deep Learning-Based Classification of Inflammatory Arthritis by Identification of Joint Shape Patterns—How Neural Networks Can Tell Us Where to “Deep Dive” Clinically. Front. Med. 2022, 9, 607.

- Apoorva, P.; Rahul, R. Early Detection of RA in Knee using Deep Learning. In Proceedings of the International Conference on Data Science, Machine Learning and Artificial Intelligence, Windhoek, Namibia, 9–12 August 2021; pp. 231–236.

- Hemalatha, R.J.; Vijaybaskar, V.; Thamizhvani, T.R. Automatic localization of anatomical regions in medical ultrasound images of RA using deep learning. Proc. Inst. Mech. Eng. J. Eng. Med. 2019, 233, 657–667.

- Shanmugam, S.; Preethi, J. Design of RA Predictor Model Using Machine Learning Algorithms. Cogn. Sci. Artif. Intell. 2018, 94, 106500.

- Jamshidi, A.; Pelletier, J.P.; Martel-Pelletier, J. Machine-learning-based patient-specific prediction models for knee osteoarthritis. Nat. Rev. Rheumatol. 2019, 15, 49–60.

- Yang, T.; Zhu, H.; Gao, X.; Zhang, Y.; Hui, Y.; Wang, F. Grading of Metacarpophalangeal RA on Ultrasound Images Using Machine Learning Algorithms. IEEE Access 2020, 8, 67137–67146.

- Hirano, T.; Nishide, M.; Nonaka, N.; Seita, J.; Ebina, K.; Sakurada, K.; Kumanogoh, A. Development and validation of a deep-learning model for scoring of radiographic finger joint destruction in rheumatoid arthritis. Rheumatol. Adv. Pract. 2019, 3, rkz047.

- Saravanan, S.; Vinoth Kumar, V.; Velliangiri, S.; Alagiri, I.; Elangovan, D.; Allayear, S. Glioma Brain Tumor Detection and Classification Using Convolutional Neural Network. Comput. Math. Methods Med. 2022, 2022, 4380901.

- Morita, K.; Tashita, A.; Nii, M.; Kobashi, S. Computer-aided diagnosis system for RA using machine learning. In Proceedings of the International Conference on Machine Learning and Cybernetics, Ningbo, China, 9–12 July 2017; pp. 1–7.

- Wang, H.; Su, C.; Lai, C.; Chen, W.; Chen, C.; Ho, L.; Chu, W.; Lien, C. Deep Learning-Based Computer-Aided Diagnosis of RA with Hand X-radiation Images Conforming to Modified Total Sharp/van der Heijde Score. Biomedicines 2022, 10, 1–17.

- Khan, Y.F.; Kaushik, B.; Chowdhary, C.L.; Srivastava, G. Ensemble Model for Diagnostic Classification of Alzheimer’s Disease Based on Brain Anatomical Magnetic Resonance Imaging. Diagnostics 2022, 12, 3193.

- Chowdhary, C.L.; Mittal, M.; Pattanaik, P.A.; Marszalek, Z. An efficient segmentation and classification system in medical images using intuitionist possibilistic fuzzy C-mean clustering and fuzzy SVM algorithm. Sensors 2020, 20, 3903.

- Das, T.K.; Chowdhary, C.L.; Gao, X.Z. Chest X-radiation investigation: A convolutional neural network approach. J. Biomim. Biomater. Biomed. Eng. 2020, 45, 57–70.

- Vimala, B.B.; Srinivasan, S.; Mathivanan, S.K.; Muthukumaran, V.; Babu, J.C.; Herencsar, N.; Vilcekova, L. Image Noise Removal in Ultrasound Breast Images Based on Hybrid Deep Learning Technique. Sensors 2023, 23, 1167.

- Saravanan, S.; Thirumurugan, P. Performance Analysis of Glioma Brain Tumor Segmentation Using Ridgelet Transform and Co-Active Adaptive Neuro Fuzzy Expert System Methodology. J. Med. Imaging Health Inform. 2020, 10, 2642–2648.

- Ramasamy, U.; Sundar, S. An Illustration of Rheumatoid Arthritis Disease Using Decision Tree Algorithm. Informatica 2022, 46, 107–119.

- Bardhan, S.; Bhowmik, M.K. 2-Stage classification of knee joint thermograms for rheumatoid arthritis prediction in subclinical inflammation. Australas. Phys. Eng. Sci. Med. 2019, 42, 259–277.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).