Enhancing Accuracy in Breast Density Assessment Using Deep Learning: A Multicentric, Multi-Reader Study

Abstract

1. Introduction

2. Background

3. Materials and Methods

3.1. Software

3.2. Train Data

3.3. Model Architecture

3.4. Test Data

3.5. Ground Truth

3.6. Reader Study

3.7. Statistical Analysis

4. Results

5. Discussion

Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| DLAD | deep learning-based automatic detection algorithm |

| CAD | computer-aided diagnosis |

| CNN | convolutional neural network |

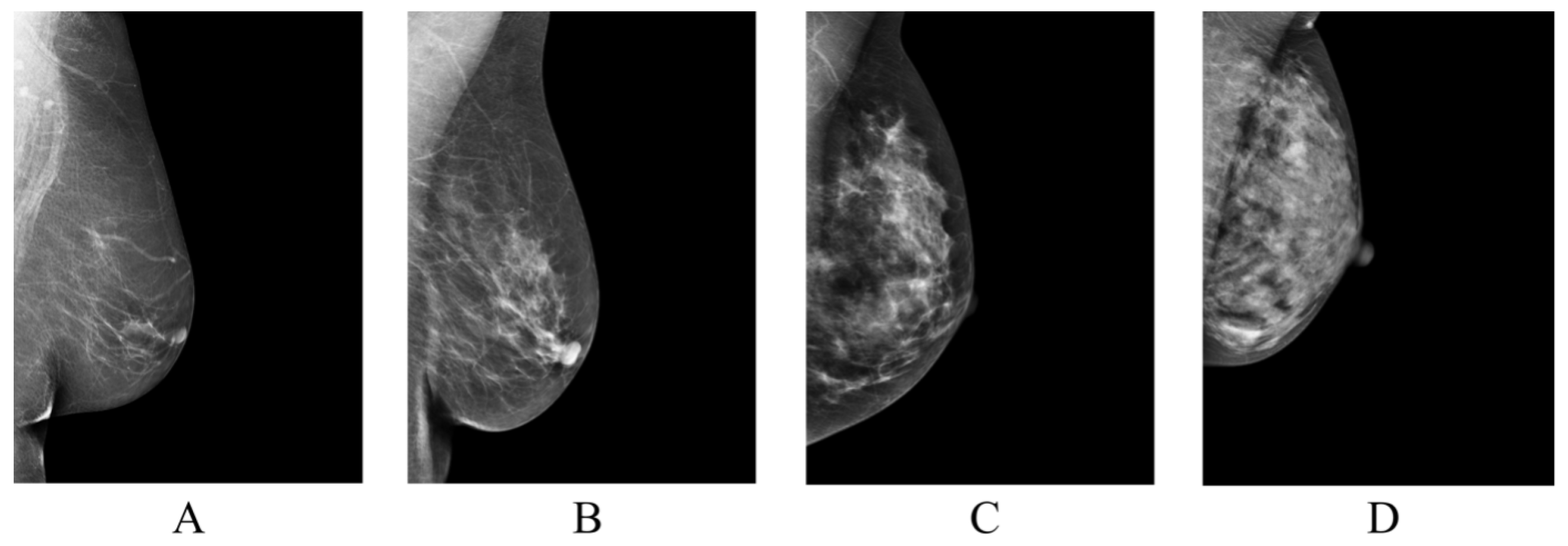

| BI-RADS | Breast Imaging-Reporting and Data System |

| CI | confidence interval |

Appendix A

| No. | Authors | Key Contribution | Datasets | Methodology | Performance Metrics |
|---|---|---|---|---|---|
| [13] | Kallenberg et al. | Unsupervised deep learning for breast density segmentation and mammographic risk scoring. | Three clinical datasets; details not fully specified. | Convolutional Sparse Autoencoders (CSAE); unsupervised feature learning. | Pearson correlation for PMD: 0.85. |
| [14] | Mohamed et al. | Classifying mammographic breast density categories between “scattered density” and “heterogeneously dense”. | 22,000 digital mammogram images from 1427 women. | CNN with transfer learning and training from scratch. | AUC: 0.9421 when trained from scratch; AUC increased to 0.9882 after refining the dataset. |
| [15] | Becker et al. | Evaluating the diagnostic accuracy of a multipurpose image analysis software. | Dual-center retrospective study of 3228 mammograms; external validation with a publicly available dataset. | Artificial neural network trained on retrospective and prospective cohort data. | AUC: 0.82; comparable to experienced radiologists (AUC: 0.77–0.87). |
| [16] | Li et al. | Multi-view mammographic density classification using dilated and attention-guided residual learning. | Two datasets: a clinical dataset and the INBreast dataset. | Dilated and attention-guided residual learning. | Accuracy: 88.7% on the clinical dataset, 70.0% on the INBreast dataset. |
| [17] | Deng et al. | SE-Attention neural networks for breast density classification. | 18,157 images from 4982 patients from Shanxi Medical University. | SE-Attention integrated into a CNN framework. | Enhanced performance over traditional models, with accuracies reaching up to 92.17% for Inception-V4-SE. |
| [18] | Wu et al. | Deep learning for automated breast density classification in over 200,000 screening mammograms. | 201,179 exams, containing 19,939 class 0, 85,665 class 1, 83,852 class 2, and 11,723 class 3 exams. | Multi-column deep convolutional neural networks (CNNs). | Top-1 accuracy: 76.7%, Top-2 accuracy: 98.2%, macAUC: 0.916. |

References

1. Broeders, M.; Moss, S.; Nyström, L.; Njor, S.; Jonsson, H.; Paap, E.; Massat, N.; Duffy, S.; Lynge, E.; Paci, E. The impact of mammographic screening on breast cancer mortality in Europe: A review of observational studies. J. Med. Screen. 2012, 19, 14–25.

2. De Gelder, R.; Fracheboud, J.; Heijnsdijk, E.; Heeten, G.; Verbeek, A.; Broeders, M.; Draisma, G.; De Koning, H. Digital mammography screening: Weighing reduced mortality against increased overdiagnosis. Prev. Med. 2011, 53, 134–140.

3. Boyd, N.; Guo, H.; Martin, L.; Sun, L.; Stone, J.; Fishell, E.; Jong, R.; Hislop, G.; Chiarelli, A.; Minkin, S.; et al. Mammographic density and the risk and detection of breast cancer. N. Engl. J. Med. 2007, 356, 227–236.

4. Ellenbogen, P. BI-RADS: Revised and replicated. J. Am. Coll. Radiol. 2014, 11, 2.

5. Gweon, H.; Youk, J.; Kim, J.; Son, E. Radiologist assessment of breast density by BI-RADS categories versus fully automated volumetric assessment. AJR Am. J. Roentgenol. 2013, 201, 692–697.

6. Bernardi, D.; Pellegrini, M.; Michele, S.; Tuttobene, P.; Fantò, C.; Valentini, M.; Gentilini, M.; Ciatto, S. Interobserver agreement in breast radiological density attribution according to BI-RADS quantitative classification. Radiol. Med. 2012, 117, 519–528.

7. Portnow, L.; Choridah, L.; Kardinah, K.; Handarini, T.; Pijnappel, R.; Bluekens, A.; Duijm, L.; Schoub, P.; Smilg, P.; Malek, L.; et al. International Interobserver Variability of Breast Density Assessment. J. Am. Coll. Radiol. 2023, 20, 671–684.

8. Koch, H.; Larsen, M.; Bartsch, H.; Kurz, K.; Hofvind, S. Artificial intelligence in BreastScreen Norway: A retrospective analysis of a cancer-enriched sample including 1254 breast cancer cases. Eur. Radiol. 2023, 33, 3735–3743.

9. Zhu, X.; Wolfgruber, T.; Leong, L.; Jensen, M.; Scott, C.; Winham, S.; Sadowski, P.; Vachon, C.; Kerlikowske, K.; Shepherd, J. Deep learning predicts interval and screening-detected cancer from screening mammograms: A case-case-control study in 6369 women. Radiology 2021, 301, 550–558.

10. Gastounioti, A.; Eriksson, M.; Cohen, E.; Mankowski, W.; Pantalone, L.; Ehsan, S.; McCarthy, A.; Kontos, D.; Hall, P.; Conant, E. External Validation of a Mammography-Derived AI-Based Risk Model in a US Breast Cancer Screening Cohort of White and Black Women. Cancers 2022, 14, 4803.

11. Leeuwen, K.; Rooij, M.; Schalekamp, S.; Ginneken, B.; Rutten, M. How does artificial intelligence in radiology improve efficiency and health outcomes? Pediatr. Radiol. 2021, 52, 2087–2093.

12. Redondo, A.; Comas, M.; Macia, F.; Ferrer, F.; Murta-Nascimento, C.; Maristany, M.; Molins, E.; Sala, M.; Castells, X. Inter- and intraradiologist variability in the BI-RADS assessment and breast density categories for screening mammograms. Br. J. Radiol. 2012, 85, 1465–1470.

13. Kallenberg, M.; Petersen, K.; Nielsen, M.; Ng, A.; Diao, P.; Igel, C.; Vachon, C.; Holland, K.; Winkel, R.; Karssemeijer, N.; et al. Unsupervised deep learning applied to breast density segmentation and mammographic risk scoring. IEEE Trans. Med. Imaging 2016, 35, 1322–1331.

14. Mohamed, A.; Berg, W.; Peng, H.; Luo, Y.; Jankowitz, R.; Wu, S. A deep learning method for classifying mammographic breast density categories. Med. Phys. 2018, 45, 314–321.

15. Becker, A.; Marcon, M.; Ghafoor, S.; Wurnig, M.; Frauenfelder, T.; Boss, A. Deep learning in mammography: Diagnostic accuracy of a multipurpose image analysis software in the detection of breast cancer. Investig. Radiol. 2017, 52, 434–440.

16. Li, C.; Xu, J.; Liu, Q.; Zhou, Y.; Mou, L.; Pu, Z.; Xia, Y.; Zheng, H.; Wang, S. Multi-view mammographic density classification by dilated and attention-guided residual learning. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020, 18, 1003–1013.

17. Deng, J.; Ma, Y.; Li, D.; Zhao, J.; Liu, Y.; Zhang, H. Classification of breast density categories based on SE-Attention neural networks. Comput. Methods Programs Biomed. 2020, 193, 105489.

18. Wu, N.; Geras, K.; Shen, Y.; Su, J.; Kim, S.; Kim, E.; Wolfson, S.; Moy, L.; Cho, K. Breast density classification with deep convolutional neural networks. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 6682–6686.

19. Sergeant, J.; Walshaw, L.; Wilson, M.; Seed, S.; Barr, N.; Beetles, U.; Boggis, C.; Bundred, S.; Gadde, S.; Lim, Y.; et al. Same task, same observers, different values: The problem with visual assessment of breast density. In Proceedings of the Medical Imaging 2013: Image Perception, Observer Performance, and Technology Assessment, Lake Buena Vista, FL, USA, 9–14 February 2013; Volume 8673, pp. 197–204.

20. Alomaim, W.; O’Leary, D.; Ryan, J.; Rainford, L.; Evanoff, M.; Foley, S. Variability of breast density classification between US and UK radiologists. J. Med. Imaging Radiat. Sci. 2019, 50, 53–61.

21. Alomaim, W.; O’Leary, D.; Ryan, J.; Rainford, L.; Evanoff, M.; Foley, S. Subjective versus quantitative methods of assessing breast density. Diagnostics 2020, 10, 331.

22. Wortsman, M.; Ilharco, G.; Gadre, S.; Roelofs, R.; Gontijo-Lopes, R.; Morcos, A.; Namkoong, H.; Farhadi, A.; Carmon, Y.; Kornblith, S.; et al. Model soups: Averaging weights of multiple fine-tuned models improves accuracy without increasing inference time. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 23965–23998.

23. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.

24. Dansereau, C.; Sobral, M.; Bhogal, M.; Zalai, M. Model soups to increase inference without increasing compute time. arXiv 2023, arXiv:2301.10092.

25. McHugh, M. Interrater reliability: The kappa statistic. Biochem. Medica 2012, 22, 276–282.

26. Sprague, B.; Gangnon, R.; Burt, V.; Trentham-Dietz, A.; Hampton, J.; Wellman, R.; Kerlikowske, K.; Miglioretti, D. Prevalence of mammographically dense breasts in the United States. J. Natl. Cancer Inst. 2014, 106, dju255.

27. Advani, S.; Zhu, W.; Demb, J.; Sprague, B.; Onega, T.; Henderson, L.; Buist, D.; Zhang, D.; Schousboe, J.; Walter, L.; et al. Association of breast density with breast cancer risk among women aged 65 years or older by age group and body mass index. JAMA Netw. Open 2021, 4, e2122810.

| BI-RADS Category | / |
|---|---|
| Class A | 879/3516 |
| Class B | 3212/12,848 |
| Class C | 928/3712 |
| Class D | 111/444 |
| Total | 5130/20,520 |

| Institution | Mammography X-ray Machine | / |
|---|---|---|
| Institution 1 | GE Senographe Essential VERSION ADS 54.20 | 60/240 |
| Institution 2 | GE Senographe Essential VERSION ADS 55.31.10 | 28/112 |
| Institution 3 | Hologic Selenia Dimensions | 27/108 |
| | Siemens Healthineers MAMMOMAT Revelation | 7/28 |
| Total | | 122/488 |

| ID | Experience |
|---|---|
| GT 1 | Head of the radiology department of a screening center, 27 years of experience, board-certified |
| GT 2 | Head of the radiology department of an oncology hospital, 13 years of experience, board-certified |

| Institution | Mammography X-ray Machine | / |
|---|---|---|
| Institution 1 | GE Senographe Essential VERSION ADS 54.20 | 33/132 |
| Institution 2 | GE Senographe Essential VERSION ADS 55.31.10 | 15/60 |
| Institution 3 | Hologic Selenia Dimensions | 20/80 |
| | Siemens Healthineers MAMMOMAT Revelation | 4/16 |
| Total | | 72/288 |

| BI-RADS Category | / |
|---|---|
| Class A | 18/72 |
| Class B | 43/172 |
| Class C | 7/28 |
| Class D | 4/16 |
| Total | 72/288 |

| ID | Experience |
|---|---|
| RAD 1 | 2 years of experience, without board certification |
| RAD 2 | 2 years of experience, without board certification |
| RAD 3 | 4 years of experience, without board certification |
| RAD 4 | 7 years of experience, board-certified |
| RAD 5 | 8 years of experience, board-certified |

| ID | Accuracy (95% CI) | p-Value | κ (95% CI) | Agreement [25] | Difference |
|---|---|---|---|---|---|
| DLAD | 0.819 (0.736–0.903) | - | 0.708 (0.562–0.841) | Substantial | - |
| RAD 1 | 0.694 (0.583–0.806) | 0.052 | 0.506 (0.331–0.661) | Moderate | 0.203 |
| RAD 2 | 0.778 (0.681–0.861) | 0.606 | 0.658 (0.511–0.798) | Substantial | 0.053 |
| RAD 3 | 0.875 (0.805–0.944) | 0.423 | 0.800 (0.680–0.912) | Substantial | −0.094 |
| RAD 4 | 0.792 (0.694–0.889) | 0.823 | 0.684 (0.553–0.818) | Substantial | 0.026 |
| RAD 5 | 0.736 (0.639–0.833) | 0.327 | 0.610 (0.464–0.749) | Substantial | 0.099 |
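As a minimal sketch of how the accuracy and agreement values in the table above could be computed against the ground truth, the following assumes unweighted Cohen's kappa (the kappa statistic of [25]) and a percentile bootstrap for the 95% CI. Neither choice is confirmed by the text available here. The function names, the toy labels, and the agreement bands are hypothetical; the bands follow the commonly used Landis and Koch cut-offs, chosen only because they reproduce the "Moderate" and "Substantial" labels in the table.

```python
import random
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of cases where the reader matches the ground truth."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def cohen_kappa(y_true, y_pred):
    """Unweighted Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(y_true)
    p_o = accuracy(y_true, y_pred)
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    # Chance agreement from the two marginal label distributions.
    p_e = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    if p_e == 1.0:
        return 1.0  # convention for the degenerate single-category case
    return (p_o - p_e) / (1.0 - p_e)


def bootstrap_ci(metric, y_true, y_pred, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI (an assumed method, resampling cases)."""
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        stats.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


def agreement_label(kappa):
    """Assumed Landis-and-Koch-style bands, not necessarily those of [25]."""
    if kappa <= 0.0:
        return "Poor"
    for upper, label in [(0.20, "Slight"), (0.40, "Fair"),
                         (0.60, "Moderate"), (0.80, "Substantial")]:
        if kappa <= upper:
            return label
    return "Almost perfect"


# Toy BI-RADS density labels (invented for illustration, not study data).
y_true = ["A", "A", "B", "B", "B", "B", "C", "C", "D", "D"]
y_pred = ["A", "B", "B", "B", "B", "C", "C", "C", "D", "C"]

kappa = cohen_kappa(y_true, y_pred)
print("accuracy:", accuracy(y_true, y_pred))
print("kappa:", round(kappa, 3), agreement_label(kappa))
print("kappa 95% CI:", bootstrap_ci(cohen_kappa, y_true, y_pred))
```

Under these assumptions, the "Difference" column would correspond to the DLAD kappa minus each reader's kappa, which agrees with the tabulated values up to rounding.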

| ID | F1 Score (95% CI) | Precision (95% CI) | Recall (95% CI) |
|---|---|---|---|
| DLAD | 0.798 (0.594–0.905) | 0.806 (0.596–0.896) | 0.830 (0.650–0.946) |
| RAD 1 | 0.641 (0.412–0.789) | 0.729 (0.425–0.854) | 0.639 (0.458–0.807) |
| RAD 2 | 0.782 (0.600–0.879) | 0.795 (0.633–0.873) | 0.850 (0.697–0.938) |
| RAD 3 | 0.877 (0.782–0.941) | 0.849 (0.764–0.923) | 0.948 (0.915–0.976) |
| RAD 4 | 0.754 (0.549–0.861) | 0.762 (0.571–0.865) | 0.826 (0.630–0.938) |
| RAD 5 | 0.781 (0.700–0.855) | 0.805 (0.750–0.858) | 0.873 (0.816–0.920) |
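The F1, precision, and recall values above differ from the corresponding accuracies, which rules out micro-averaging (for single-label multiclass data, micro-averaged precision, recall, and F1 all equal accuracy). The sketch below therefore assumes macro-averaging over the four BI-RADS density classes, with macro F1 taken as the mean of per-class F1 scores. This averaging scheme is an assumption, and the function names and toy labels are hypothetical.

```python
def per_class_prf(y_true, y_pred, label):
    """Precision, recall, and F1 for one class treated as 'positive'."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def macro_prf(y_true, y_pred):
    """Unweighted mean of per-class precision, recall, and F1."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = [per_class_prf(y_true, y_pred, label) for label in labels]
    k = len(labels)
    return (sum(s[0] for s in scores) / k,
            sum(s[1] for s in scores) / k,
            sum(s[2] for s in scores) / k)


# Toy BI-RADS density labels (invented for illustration, not study data).
toy_true = ["A", "A", "B", "B", "B", "B", "C", "C", "D", "D"]
toy_pred = ["A", "B", "B", "B", "B", "C", "C", "C", "D", "C"]

macro_p, macro_r, macro_f1 = macro_prf(toy_true, toy_pred)
print(f"precision={macro_p:.4f} recall={macro_r:.4f} f1={macro_f1:.4f}")
```

Macro-averaging gives each density class equal weight, so the rare classes (C and D in the test set) influence the score as much as the common class B.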

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Biroš, M.; Kvak, D.; Dandár, J.; Hrubý, R.; Janů, E.; Atakhanova, A.; Al-antari, M.A. Enhancing Accuracy in Breast Density Assessment Using Deep Learning: A Multicentric, Multi-Reader Study. Diagnostics 2024, 14, 1117. https://doi.org/10.3390/diagnostics14111117
